Multispectral Food Classification and Caloric Estimation Using Convolutional Neural Networks

Abstract

1. Introduction

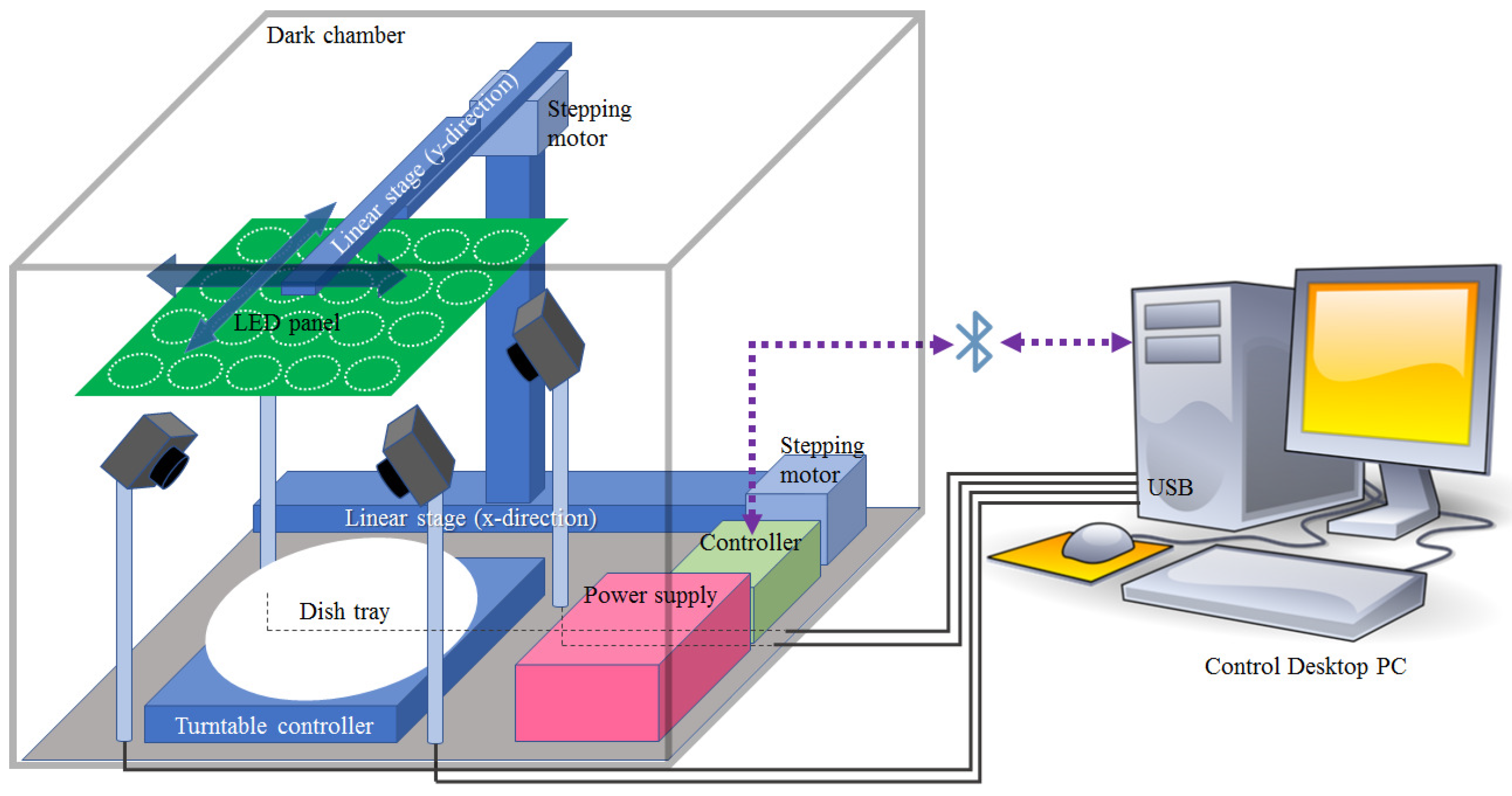

2. Data Acquisition

3. Food Analysis

3.1. Preliminary Feasibility Tests for UV/NIR Images

3.2. Preprocessing

3.3. Food Analysis Using a Convolutional Neural Network

3.4. Selection of the Wavelengths

4. Experimental Results

4.1. Accuracy for Food Item Classification

4.2. Accuracy for Caloric Estimation

4.3. Analysis of the Selected Wavelengths

5. Conclusions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Food Item | Weight (g) | Calorie (kcal) | Food Item | Weight (g) | Calorie (kcal) |
|---|---|---|---|---|---|
| apple juice | 180.5 | N/A | pork (steamed) | 119.3 | 441.41 |
| almond milk | 175.5 | 41.57 | potato chips | 23.5 | 130.82 |
| banana | 143.6 | 127.80 | potato chips (onion flavor) | 23.5 | 133.95 |
| banana milk | 174.6 | 110.27 | sports drink (blue) | 170 | 17.00 |
| chocolate bar (high protein) | 35 | 167.00 | chocolate bar (with fruits) | 40 | 170.00 |
| beef steak | 68.1 | 319.39 | milk pudding | 140 | 189.41 |
| beef steak with sauce | 79 | 330.29 | ramen (Korean-style noodles) | 308 | 280.00 |
| black noodles | 127.4 | 170.00 | rice (steamed) | 172.3 | 258.45 |
| black noodles with oil | 132.4 | N/A | rice cake | 119.3 | 262.46 |
| black tea | 168 | 52.68 | rice cake and honey | 127.9 | 288.60 |
| bread | 47.8 | 129.54 | rice juice | 173.8 | 106.21 |
| bread and butter | 54.8 | 182.04 | rice (steamed, low-calorie) | 164.6 | 171.18 |
| castella | 89.9 | 287.68 | multi-grain rice | 175.3 | 258.08 |
| cherryade | 168 | 79.06 | rice noodles | 278 | 140.00 |
| chicken breast | 100.6 | 109.00 | cracker | 41.5 | 217.88 |
| chicken noodles | 70 | 255.00 | salad1 (lettuce and cucumber) | 96.8 | 24.20 |
| black chocolate | 40.37 | 222.04 | salad1 with olive oil | 106 | 37.69 |
| milk chocolate | 41 | 228.43 | salad2 (cabbage and carrot) | 69.1 | 17.28 |
| chocolate milk | 180.1 | 122.62 | salad2 with fruit dressing | 79 | 28.04 |
| cider | 166 | 70.55 | almond cereal (served with milk) | 191.7 | 217.36 |
| clam chowder | 160 | 90.00 | corn cereal (served with milk) | 192 | 205.19 |
| coffee | 167 | 18.56 | soybean milk | 171.9 | 85.95 |
| coffee with sugar (10%) | 167 | 55.74 | spaghetti | 250 | 373.73 |
| coffee with sugar (20%) | 167 | 92.92 | kiwi soda (sugar-free) | 166 | 2.34 |
| coffee with sugar (30%) | 167 | 130.11 | tofu | 138.6 | 62.37 |
| coke | 166 | 76.36 | cherry tomato | 200 | 36.00 |
| corn milk | 166.6 | 97.18 | tomato juice | 176.8 | 59.80 |
| corn soup | 160 | 85.00 | cherry tomato and syrup | 210 | 61.90 |
| cup noodle | 262.5 | 120.00 | fruit soda | 169 | 27.04 |
| rice with tuna and pepper | 305 | 418.15 | vinegar | 168 | 20.16 |
| diet coke | 166 | 0.00 | pure water | 166 | 0.00 |
| chocolate bar | 50 | 249.00 | watermelon juice | 177.7 | 79.97 |
| roasted duck | 117.2 | 360.98 | grape soda | 170.9 | 92.43 |
| orange soda | 173.6 | 33.33 | grape soda (sugar-free) | 170.9 | 0.00 |
| orange soda (sugar-free) | 166.2 | 2.77 | fried potato | 110.5 | 331.50 |
| fried potato and powder | 120 | 364.92 | yogurt | 179 | 114.56 |
| sports drink | 177.1 | 47.23 | yogurt and sugar | 144.6 | 106.04 |
| ginger tea | 178.3 | 96.79 | milk soda | 167 | 86.84 |
| honey tea | 183.9 | 126.69 | salt crackers | 41.3 | 218.89 |
| caffelatte | 171.6 | 79.13 | onion soup | 160 | 83.00 |
| caffelatte with sugar (10%) | 171.6 | 115.66 | orange juice | 182.6 | 82.17 |
| caffelatte with sugar (20%) | 171.6 | 152.19 | peach (cut) | 142 | 55.38 |
| caffelatte with sugar (30%) | 171.6 | 188.72 | pear juice | 181.5 | 90.02 |
| mango candy | 36.4 | 91.00 | peach and syrup | 192 | 124.80 |
| mango jelly | 58.6 | 212.43 | peanuts | 37.1 | 217.96 |
| milk | 171 | 94.50 | peanuts and salt | 37.3 | 218.21 |
| sweet milk | 171 | N/A | milk tea | 167 | 63.46 |
| green soda | 174.5 | 84.55 | pizza (beef) | 85.5 | 212.08 |
| pizza (seafood) | 60 | 148.83 | pizza (potato) | 72.3 | 179.34 |
| pizza (combination) | 70.9 | 175.87 | plain yogurt | 143.7 | 109.89 |
| sports drink (white) | 175.8 | 43.95 | | | |
| mean | 141.17 | 139.27 | | | |
| standard deviation | 60.69 | 101.36 | | | |
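
The weight and calorie columns above can be combined into an energy density (kcal per gram), which makes items of different serving sizes easier to compare. The following is a minimal sketch, not part of the original study: the helper name `energy_density` and the choice of four sample rows are illustrative assumptions, and only values copied from the table are used.

```python
# Rows copied from the food table above: (item, weight in g, calories in kcal).
# The selection of these four items is arbitrary and only for illustration.
rows = [
    ("banana", 143.6, 127.80),
    ("milk", 171.0, 94.50),
    ("peanuts", 37.1, 217.96),
    ("pure water", 166.0, 0.00),
]


def energy_density(weight_g: float, kcal: float) -> float:
    """Return the energy density in kcal per gram (hypothetical helper)."""
    if weight_g <= 0:
        raise ValueError("weight must be positive")
    return kcal / weight_g


# Energy density per item, rounded to two decimals for display.
densities = {name: round(energy_density(w, k), 2) for name, w, k in rows}

for name, value in densities.items():
    print(f"{name}: {value:.2f} kcal/g")
```

Under this view, peanuts are roughly ten times as energy-dense as milk even though their listed serving is much lighter.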

| Food Items | RGB Image | RGB Image + Image at 890 nm |
|---|---|---|
| black noodles with oil | 58.33 | 100 |
| coffee with sugar (10%) | 16.67 | 100 |
| orange soda | 47.22 | 100 |
| caffelatte with sugar (30%) | 25.00 | 97.22 |
| peach (cut) | 55.56 | 97.22 |
| milk pudding | 44.44 | 100 |
| rice cake | 44.44 | 86.11 |
| rice cake and honey | 52.78 | 100 |
| salad1 with olive oil | 47.22 | 94.44 |
| salad2 (cabbage and carrot) | 47.22 | 94.44 |
| grape soda | 0.00 | 44.44 |

| Food Items | RGB Image | RGB Image + Image at 970 nm |
|---|---|---|
| salad1 (lettuce and cucumber) | 94.46 | 20.85 |
| salad2 (cabbage and carrot) | 85.2 | 35.37 |
| rice with tuna and pepper | 51.68 | 6.27 |
| orange soda (sugar-free) | 63.06 | 18.19 |
| salad2 with fruit dressing | 62.11 | 15.41 |
| cherry tomato | 58.87 | 21.38 |
| fried potato and powder | 55.06 | 25.41 |
| green soda | 52.99 | 17.53 |
| salad1 with olive oil | 62.65 | 28.58 |
| rice cake and honey | 62.1 | 29.52 |
| rice cake | 61.94 | 30.09 |

| No. of images | Selected wavelengths (nm) |
|---|---|
| 1 | 870 |
| 2 | 660, 950 |
| 3 | 660, 950, 970 |
| 4 | 590, 660, 950, 970 |
| 5 | 490, 660, 890, 950, 970 |
| 6 | 405, 490, 660, 890, 950, 970 |
| 7 | 385, 405, 490, 560, 660, 890, 970 |
| 8 | 385, 490, 560, 660, 870, 890, 970, 1020 |
| 9 | 385, 405, 490, 560, 850, 870, 890, 970, 1020 |
| 10 | 385, 490, 560, 645, 810, 850, 870, 890, 970, 1020 |
| 11 | 385, 490, 510, 560, 625, 645, 810, 850, 870, 890, 970 |
| 12 | 385, 430, 490, 510, 560, 625, 810, 850, 870, 890, 910, 970 |
| 13 | 385, 405, 430, 490, 510, 560, 625, 850, 870, 890, 910, 950, 970 |
| 14 | 385, 405, 430, 470, 490, 510, 560, 625, 850, 870, 890, 910, 950, 970 |
| 15 | 385, 405, 430, 470, 490, 510, 560, 625, 645, 850, 870, 890, 910, 950, 970 |
| 16 | 385, 405, 430, 470, 490, 510, 625, 645, 660, 850, 870, 890, 910, 950, 970, 1020 |
| 17 | 385, 405, 430, 470, 490, 510, 625, 645, 660, 810, 850, 870, 890, 910, 950, 970, 1020 |
| 18 | 385, 405, 430, 470, 490, 510, 590, 625, 645, 660, 810, 850, 870, 890, 910, 950, 970, 1020 |
| 19 | 385, 405, 430, 470, 490, 510, 560, 590, 625, 645, 660, 810, 850, 870, 890, 910, 950, 970, 1020 |
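
One way to read the table above is to ask whether each wavelength set simply extends the previous one. The following minimal sketch checks this for the first five rows only. The helper name `dropped_when_growing` is an illustrative assumption, and the code makes no claim about how the sets were actually selected in the study.

```python
# First five rows of the wavelength table above: number of images -> wavelengths (nm).
subsets = {
    1: {870},
    2: {660, 950},
    3: {660, 950, 970},
    4: {590, 660, 950, 970},
    5: {490, 660, 890, 950, 970},
}


def dropped_when_growing(sets_by_size):
    """For each consecutive pair of sizes, list the wavelengths that leave the set.

    An empty list means the larger set contains the smaller one (nested growth).
    Hypothetical helper, written only for this illustration.
    """
    sizes = sorted(sets_by_size)
    result = {}
    for small, large in zip(sizes, sizes[1:]):
        result[(small, large)] = sorted(sets_by_size[small] - sets_by_size[large])
    return result


dropped = dropped_when_growing(subsets)
for (small, large), gone in dropped.items():
    status = "nested" if not gone else f"drops {gone}"
    print(f"{small} -> {large} images: {status}")
```

For these rows, the steps from 2 to 3 and from 3 to 4 images are nested, while the steps from 1 to 2 and from 4 to 5 each drop a wavelength (870 nm and 590 nm, respectively), so the listed sets are not a purely incremental sequence.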

| No. of images | Selected wavelengths (nm) |
|---|---|
| 1 | RGB |
| 2 | RGB, 890 |
| 3 | RGB, 870, 970 |
| 4 | RGB, 385, 870, 970 |
| 5 | RGB, 385, 870, 890, 970 |
| 6 | RGB, 385, 625, 870, 890, 970 |
| 7 | RGB, 385, 625, 810, 870, 890, 970 |
| 8 | RGB, 385, 625, 810, 870, 890, 910, 970 |
| 9 | RGB, 385, 430, 625, 870, 890, 910, 950, 970 |
| 10 | RGB, 385, 430, 490, 625, 870, 890, 910, 950, 970 |
| 11 | RGB, 385, 430, 490, 625, 645, 870, 890, 910, 950, 970 |
| 12 | RGB, 385, 405, 430, 625, 645, 870, 890, 910, 950, 970, 1020 |
| 13 | RGB, 385, 405, 430, 510, 625, 645, 870, 890, 910, 950, 970, 1020 |
| 14 | RGB, 385, 405, 430, 510, 560, 625, 645, 870, 890, 910, 950, 970, 1020 |
| 15 | RGB, 385, 405, 430, 490, 510, 560, 625, 645, 870, 890, 910, 950, 970, 1020 |
| 16 | RGB, 385, 405, 430, 470, 490, 510, 560, 625, 645, 870, 890, 910, 950, 970, 1020 |
| 17 | RGB, 385, 405, 430, 470, 490, 510, 560, 625, 645, 810, 870, 890, 910, 950, 970, 1020 |
| 18 | RGB, 385, 405, 430, 470, 490, 510, 560, 625, 645, 810, 850, 870, 890, 910, 950, 970, 1020 |
| 19 | RGB, 385, 405, 430, 470, 490, 510, 560, 590, 625, 660, 810, 850, 870, 890, 910, 950, 970, 1020 |
| 20 | RGB, 385, 405, 430, 470, 490, 510, 560, 590, 625, 645, 660, 810, 850, 870, 890, 910, 950, 970, 1020 |

| No. of images | Selected wavelengths (nm) |
|---|---|
| 1 | 430 |
| 2 | 385, 970 |
| 3 | 385, 560, 970 |
| 4 | 385, 430, 560, 970 |
| 5 | 385, 405, 430, 560, 970 |
| 6 | 385, 405, 430, 560, 660, 970 |
| 7 | 385, 405, 430, 510, 560, 660, 970 |
| 8 | 385, 405, 430, 510, 560, 625, 660, 970 |
| 9 | 385, 405, 430, 510, 560, 625, 660, 910, 970 |
| 10 | 385, 405, 430, 470, 510, 560, 660, 850, 910, 970 |
| 11 | 385, 405, 430, 470, 510, 560, 645, 660, 850, 910, 970 |
| 12 | 385, 405, 430, 470, 510, 560, 625, 645, 660, 850, 910, 970 |
| 13 | 385, 405, 430, 470, 510, 560, 590, 625, 660, 850, 890, 910, 970 |
| 14 | 385, 405, 430, 470, 490, 510, 560, 625, 660, 810, 850, 890, 910, 970 |
| 15 | 385, 405, 470, 490, 510, 560, 625, 645, 660, 810, 850, 890, 910, 950, 970 |
| 16 | 385, 405, 430, 470, 490, 510, 560, 625, 645, 660, 810, 850, 890, 910, 950, 970 |
| 17 | 385, 405, 430, 470, 490, 510, 560, 590, 625, 645, 660, 810, 850, 890, 910, 950, 970 |
| 18 | 385, 405, 430, 470, 490, 510, 560, 590, 625, 645, 660, 810, 850, 870, 890, 910, 950, 970 |
| 19 | 385, 405, 430, 470, 490, 510, 560, 590, 625, 645, 660, 810, 850, 870, 890, 910, 950, 970, 1020 |

| No. of images | Selected wavelengths (nm) |
|---|---|
| 1 | RGB |
| 2 | RGB, 970 |
| 3 | RGB, 405, 1020 |
| 4 | RGB, 405, 510, 1020 |
| 5 | RGB, 405, 510, 950, 1020 |
| 6 | RGB, 405, 430, 510, 950, 1020 |
| 7 | RGB, 405, 430, 510, 660, 950, 1020 |
| 8 | RGB, 405, 430, 470, 510, 660, 950, 1020 |
| 9 | RGB, 430, 470, 510, 590, 660, 950, 970, 1020 |
| 10 | RGB, 385, 430, 470, 590, 660, 810, 950, 970, 1020 |
| 11 | RGB, 385, 405, 430, 470, 590, 660, 870, 950, 970, 1020 |
| 12 | RGB, 385, 405, 430, 470, 560, 590, 660, 870, 950, 970, 1020 |
| 13 | RGB, 385, 405, 430, 470, 560, 590, 660, 810, 870, 950, 970, 1020 |
| 14 | RGB, 385, 405, 430, 470, 560, 590, 625, 810, 850, 870, 950, 970, 1020 |
| 15 | RGB, 385, 405, 430, 470, 490, 560, 590, 625, 810, 850, 870, 950, 970, 1020 |
| 16 | RGB, 385, 405, 430, 470, 490, 560, 625, 660, 810, 850, 870, 910, 950, 970, 1020 |
| 17 | RGB, 385, 405, 430, 470, 490, 560, 590, 625, 660, 810, 850, 870, 910, 950, 970, 1020 |
| 18 | RGB, 385, 405, 430, 470, 490, 510, 560, 590, 625, 810, 850, 870, 890, 910, 950, 970, 1020 |
| 19 | RGB, 385, 405, 430, 470, 490, 510, 560, 590, 625, 660, 810, 850, 870, 890, 910, 950, 970, 1020 |
| 20 | RGB, 385, 405, 430, 470, 490, 510, 560, 590, 625, 645, 660, 810, 850, 870, 890, 910, 950, 970, 1020 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lee, K.-S. Multispectral Food Classification and Caloric Estimation Using Convolutional Neural Networks. Foods 2023, 12, 3212. https://doi.org/10.3390/foods12173212
