Evaluation of Ensemble Inflow Forecasts for Reservoir Management in Flood Situations

Abstract

1. Introduction

2. Materials and Methods

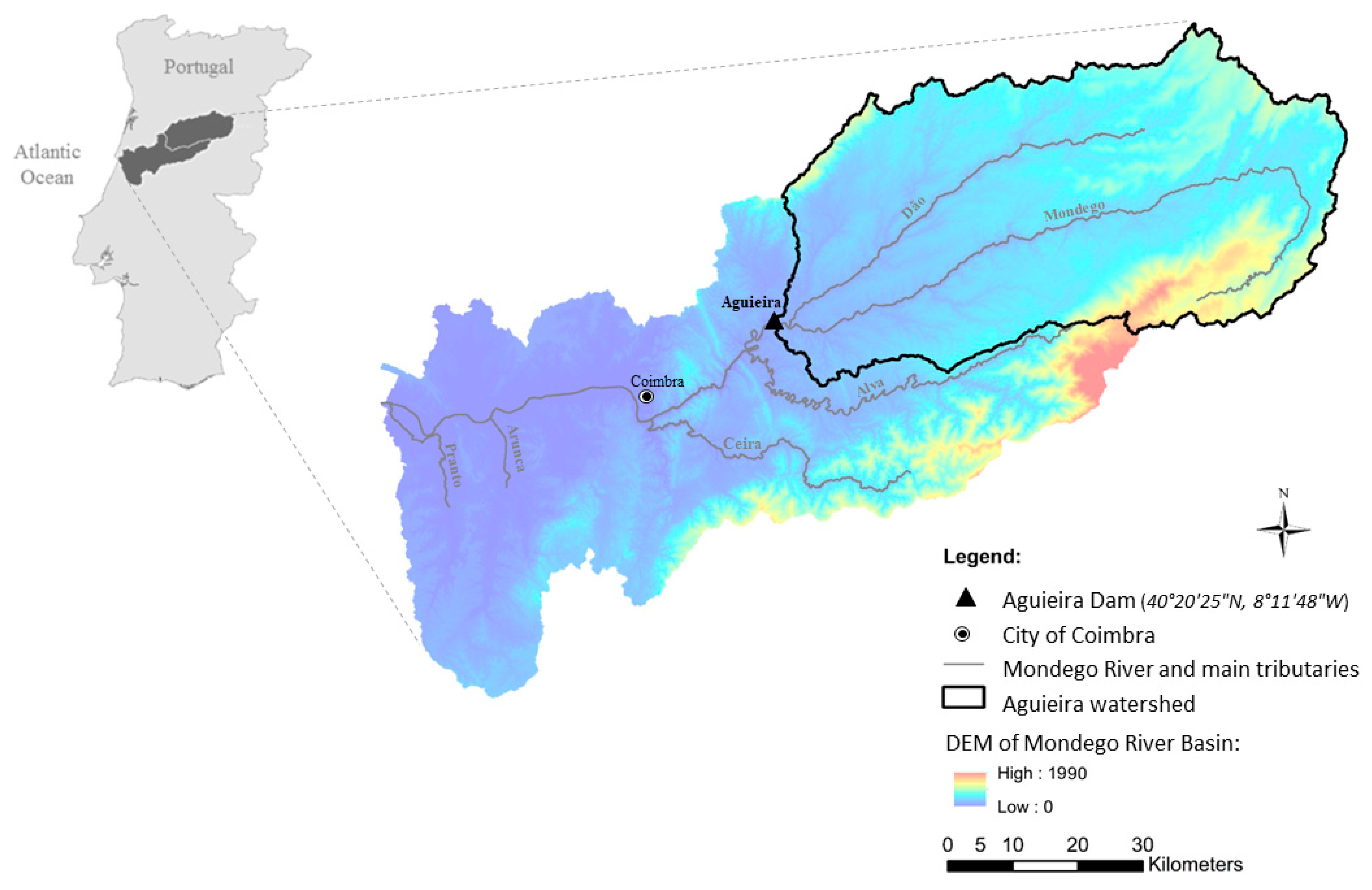

2.1. Case Study

2.2. Methodology

- (a) Threshold 1: 100 m³/s, the mean reservoir inflow during the wet period (December to March) over the 4-year record under analysis;

- (b) Threshold 2: 500 m³/s, the maximum flow capacity of the dam's hydraulic circuit, whose discharge is the first to be used in case of flooding.
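The two thresholds turn each inflow value into a binary flood event, which is the form needed by event-based scores such as the Brier Score. The sketch below is a minimal illustration, assuming that an event means the inflow strictly exceeds the threshold and that the forecast probability of the event is the fraction of ensemble members above it; the function names are hypothetical and are not the authors' code.

```python
from typing import Sequence

# Flood thresholds defined in Section 2.2 (m3/s).
THRESHOLD_1 = 100.0  # mean wet-period inflow (December to March)
THRESHOLD_2 = 500.0  # maximum flow capacity of the dam's hydraulic circuit


def observed_event(flow: float, threshold: float) -> int:
    """Return 1 if the observed inflow exceeds the threshold, else 0."""
    return 1 if flow > threshold else 0


def exceedance_probability(members: Sequence[float], threshold: float) -> float:
    """Fraction of ensemble members whose inflow exceeds the threshold."""
    if not members:
        raise ValueError("the ensemble must contain at least one member")
    return sum(m > threshold for m in members) / len(members)
```

For an illustrative five-member ensemble `[80, 120, 450, 520, 610]` m³/s, the probability of exceeding Threshold 1 is 0.8 and that of exceeding Threshold 2 is 0.4. Whether the study counts a value equal to the threshold as an exceedance is not stated here, so the strict `>` is an assumption.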

3. Results and Discussion

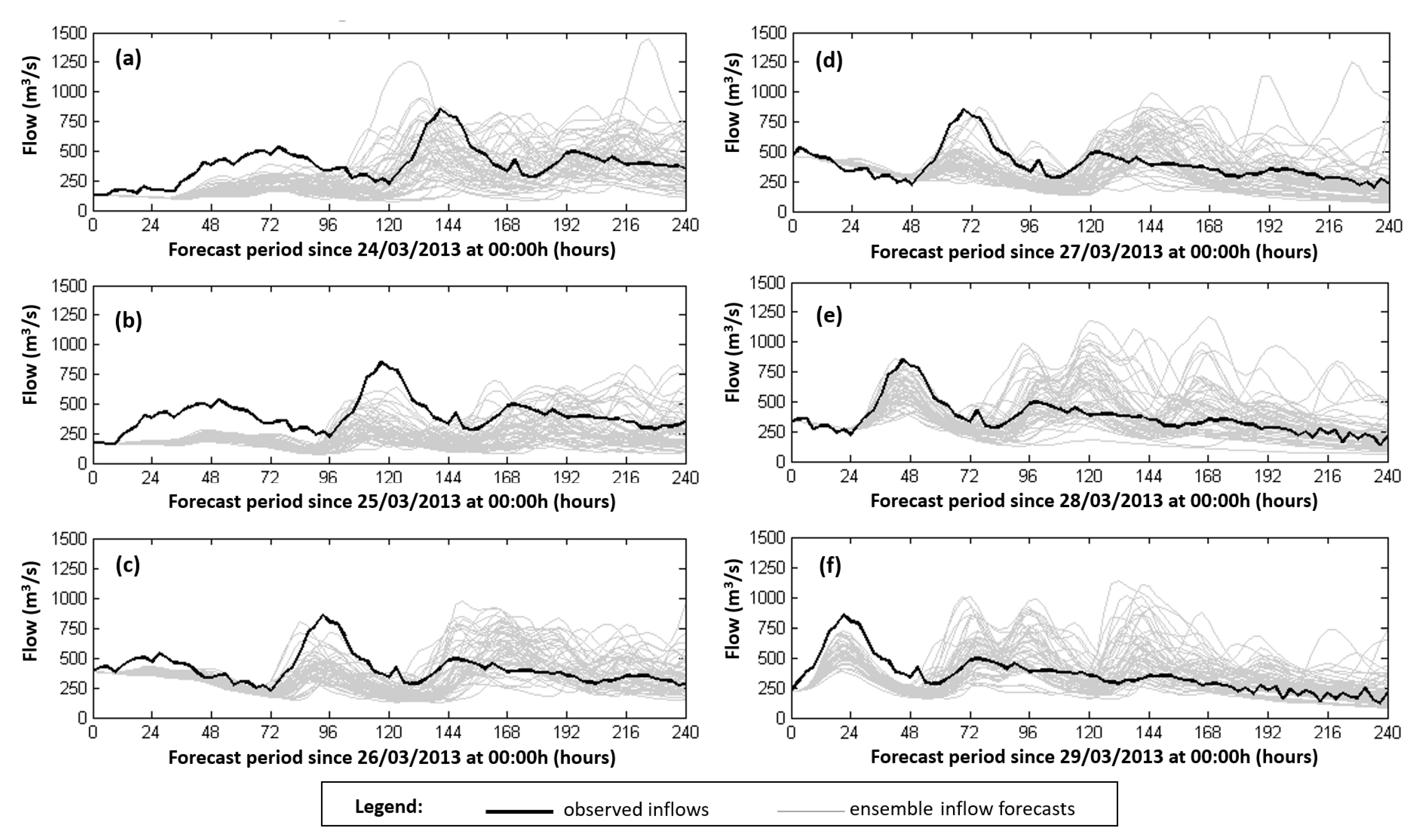

3.1. Graphical Analysis

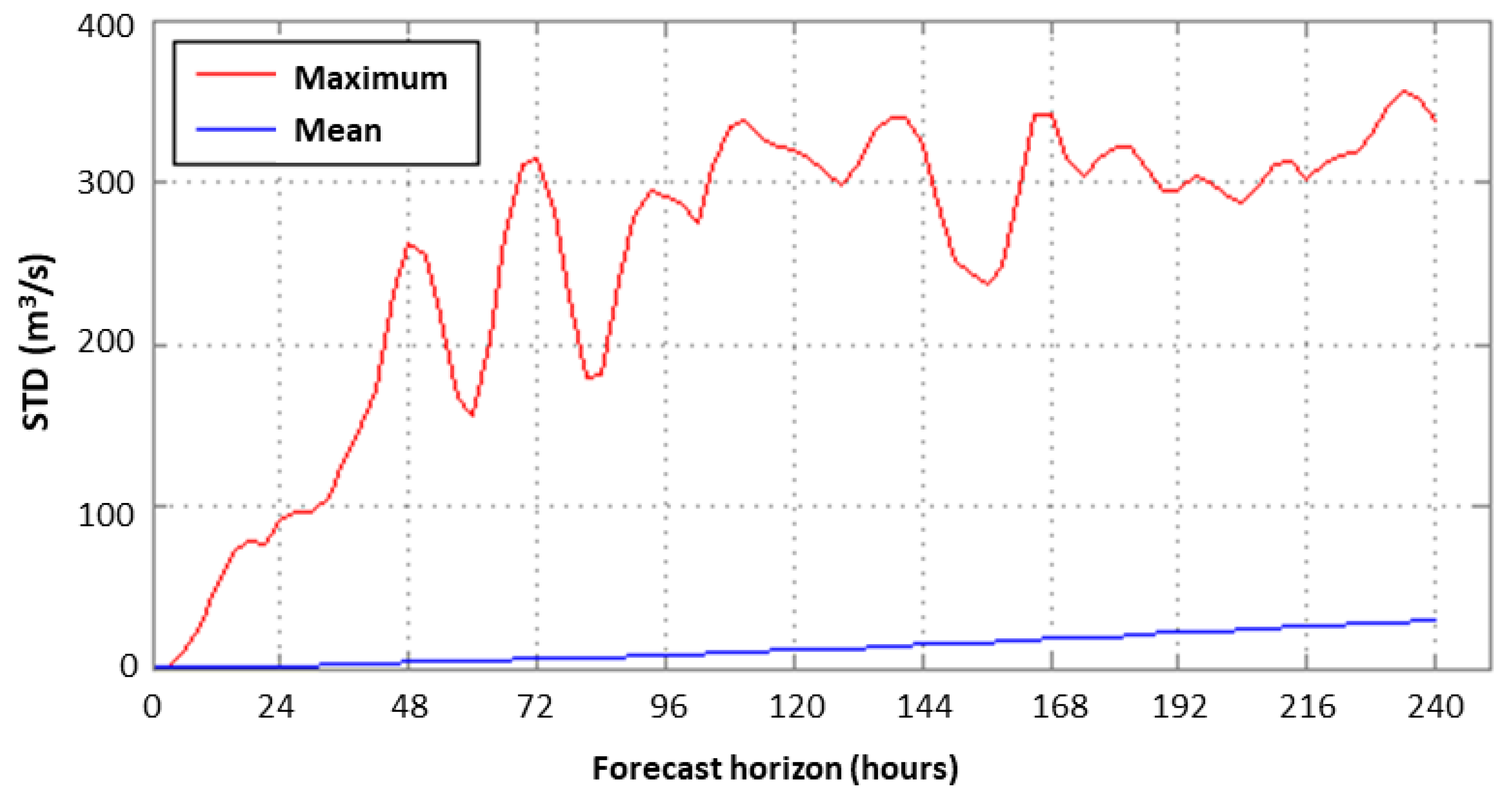

3.2. Statistical Analysis of the Consistency

3.3. Statistical Analysis of the Quality

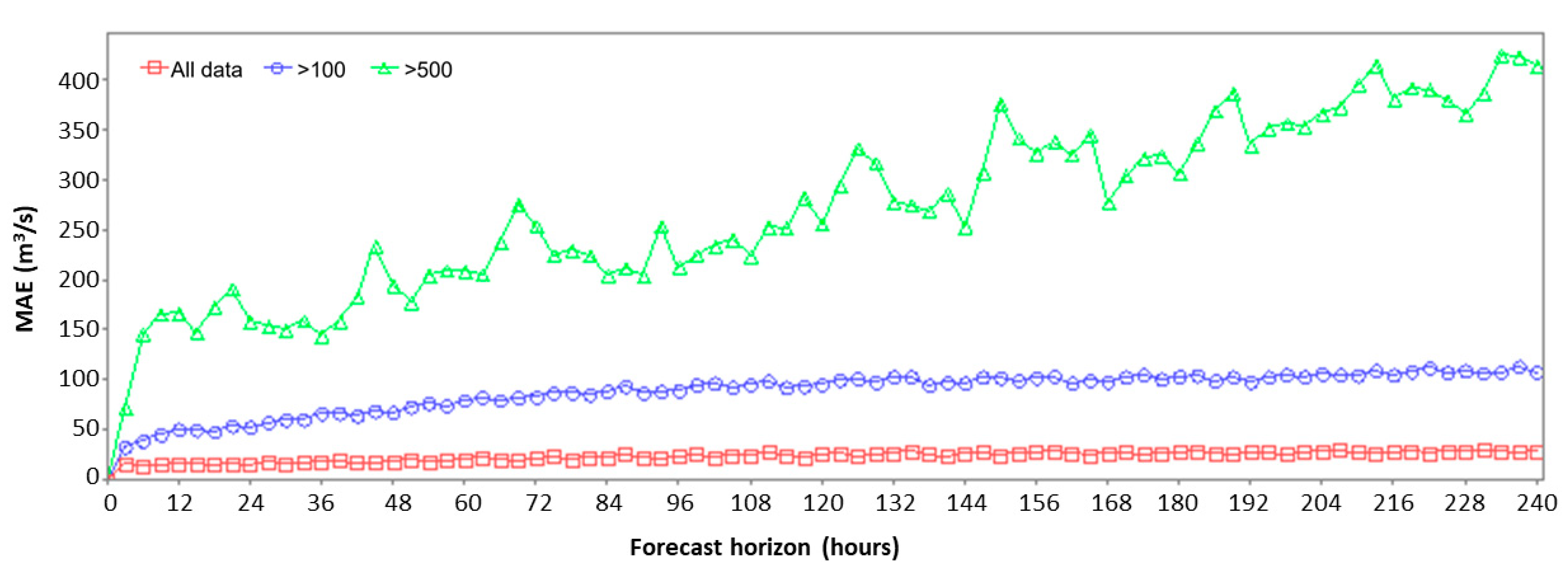

3.3.1. Mean Absolute Error (MAE)

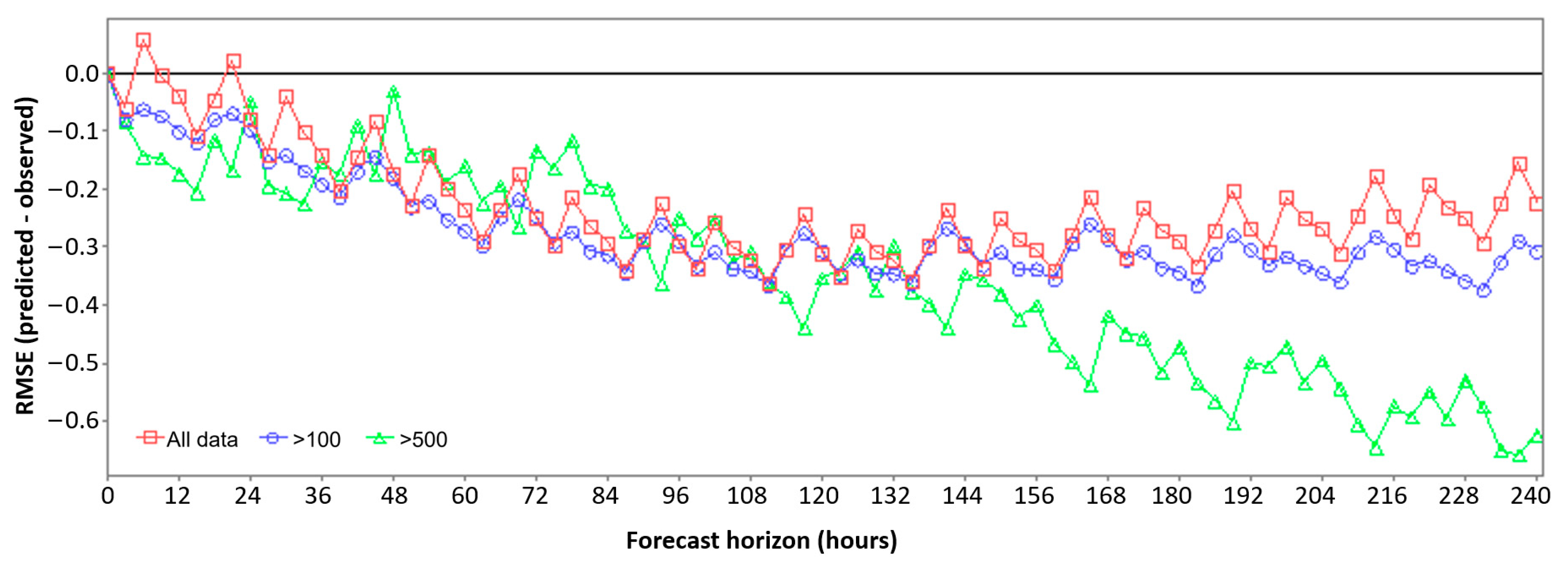
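The MAE is the average magnitude of the difference between a single-valued forecast and the observation, MAE = (1/n) Σ |fᵢ − oᵢ|; the summary table of evaluation methods lists it as a deterministic measure of total error with an optimal value of 0. A minimal sketch, assuming the deterministic forecast fᵢ is a value derived from the ensemble (for example its mean, a percentile or its maximum); the function name is illustrative:

```python
from typing import Sequence


def mean_absolute_error(forecasts: Sequence[float], observations: Sequence[float]) -> float:
    """MAE = (1/n) * sum(|f_i - o_i|); 0 is a perfect score."""
    if not forecasts or len(forecasts) != len(observations):
        raise ValueError("need two non-empty sequences of equal length")
    return sum(abs(f - o) for f, o in zip(forecasts, observations)) / len(forecasts)
```

For forecasts `[110, 90, 300]` and observations `[100, 100, 250]` m³/s, the absolute errors are 10, 10 and 50, so the MAE is 70/3 ≈ 23.3 m³/s. Note that the MAE hides the sign of the errors, which is why a separate bias measure is needed.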

3.3.2. Relative Mean Error (RME)

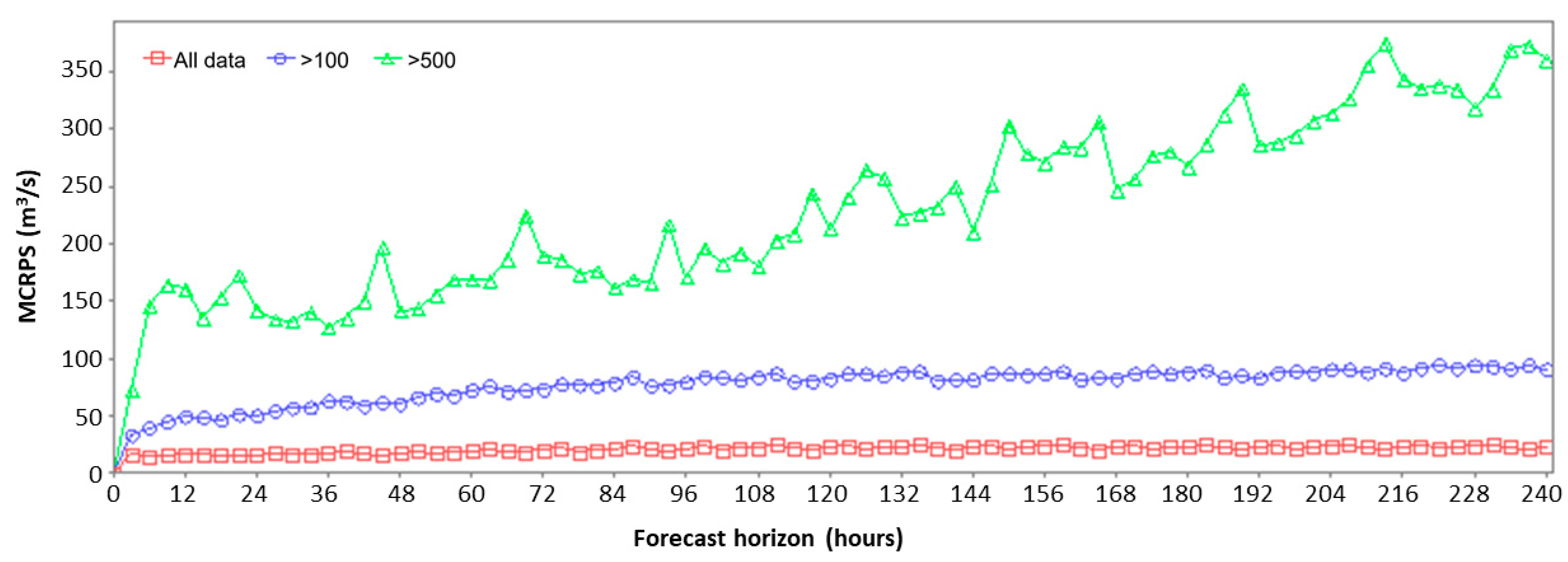
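The RME is used here as a measure of bias, i.e. of systematic over- or underestimation. The exact normalization used in the study is not given in this text; the sketch below assumes the common form RME = Σ(fᵢ − oᵢ) / Σ oᵢ, in which positive values indicate overestimation, negative values underestimation and 0 no overall bias. The function name is hypothetical:

```python
from typing import Sequence


def relative_mean_error(forecasts: Sequence[float], observations: Sequence[float]) -> float:
    """Assumed definition: RME = sum(f_i - o_i) / sum(o_i).

    > 0: overestimation, < 0: underestimation, 0: no overall bias.
    """
    if not forecasts or len(forecasts) != len(observations):
        raise ValueError("need two non-empty sequences of equal length")
    total_obs = sum(observations)
    if total_obs == 0:
        raise ValueError("the sum of observations must be non-zero")
    return sum(f - o for f, o in zip(forecasts, observations)) / total_obs
```

With the same illustrative data as for the MAE (`[110, 90, 300]` against `[100, 100, 250]`), the signed errors partly cancel: RME = 50/450 ≈ 0.11, a net overestimation of about 11%.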

3.3.3. Mean Continuous Ranked Probability Score (MCRPS)

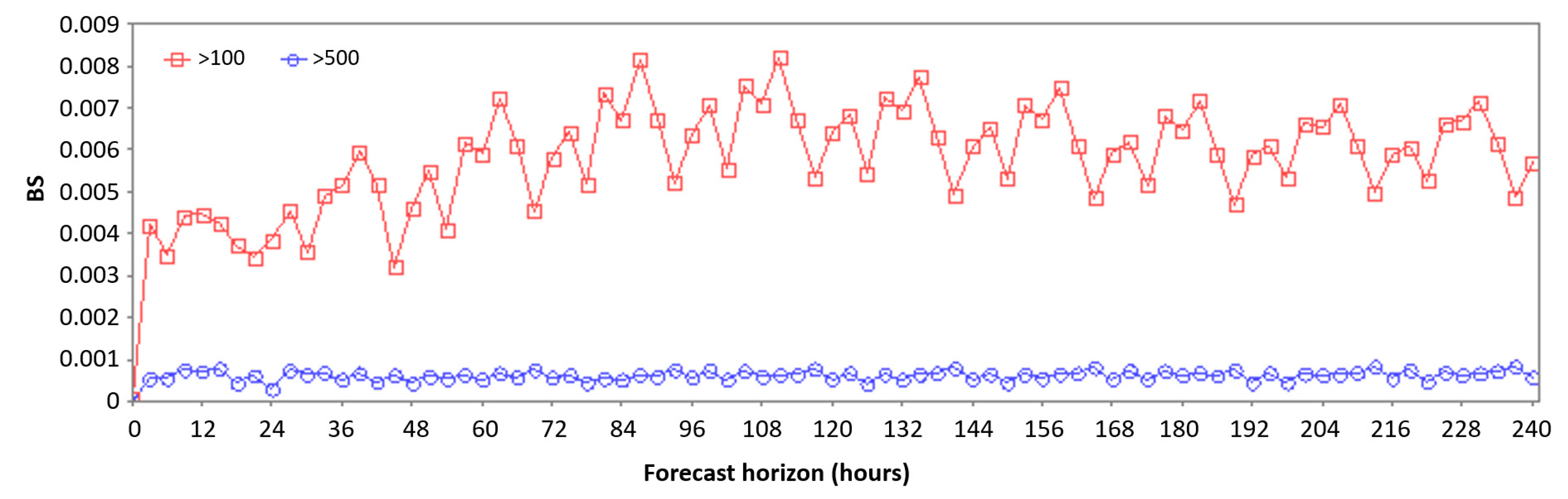
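The CRPS compares the whole forecast distribution with the observation and reduces to the absolute error when the ensemble has a single member, so it generalizes the MAE to probabilistic forecasts. A minimal sketch, assuming the ensemble is treated as an empirical CDF, for which CRPS = mean|xⱼ − y| − ½ mean|xⱼ − xₖ|; the MCRPS is then taken as the plain average over all forecast/observation pairs. Function names are illustrative:

```python
from typing import Sequence


def crps_ensemble(members: Sequence[float], obs: float) -> float:
    """CRPS of one ensemble forecast against one observation.

    Empirical-CDF identity:
    CRPS = mean|x_j - y| - 0.5 * mean over all member pairs of |x_j - x_k|.
    """
    m = len(members)
    if m == 0:
        raise ValueError("the ensemble must contain at least one member")
    distance_to_obs = sum(abs(x - obs) for x in members) / m
    ensemble_spread = sum(abs(a - b) for a in members for b in members) / (2 * m * m)
    return distance_to_obs - ensemble_spread


def mean_crps(ensembles: Sequence[Sequence[float]], observations: Sequence[float]) -> float:
    """MCRPS: CRPS averaged over all forecast/observation pairs; 0 is optimal."""
    if not ensembles or len(ensembles) != len(observations):
        raise ValueError("need non-empty inputs of equal length")
    return sum(crps_ensemble(e, o) for e, o in zip(ensembles, observations)) / len(observations)
```

For a one-member ensemble `[5]` and observation 3 the CRPS is 2 (the absolute error); for `[1, 3]` and observation 2 it is 0.5, since the spread term rewards an ensemble that brackets the observation.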

3.3.4. Brier Score (BS)

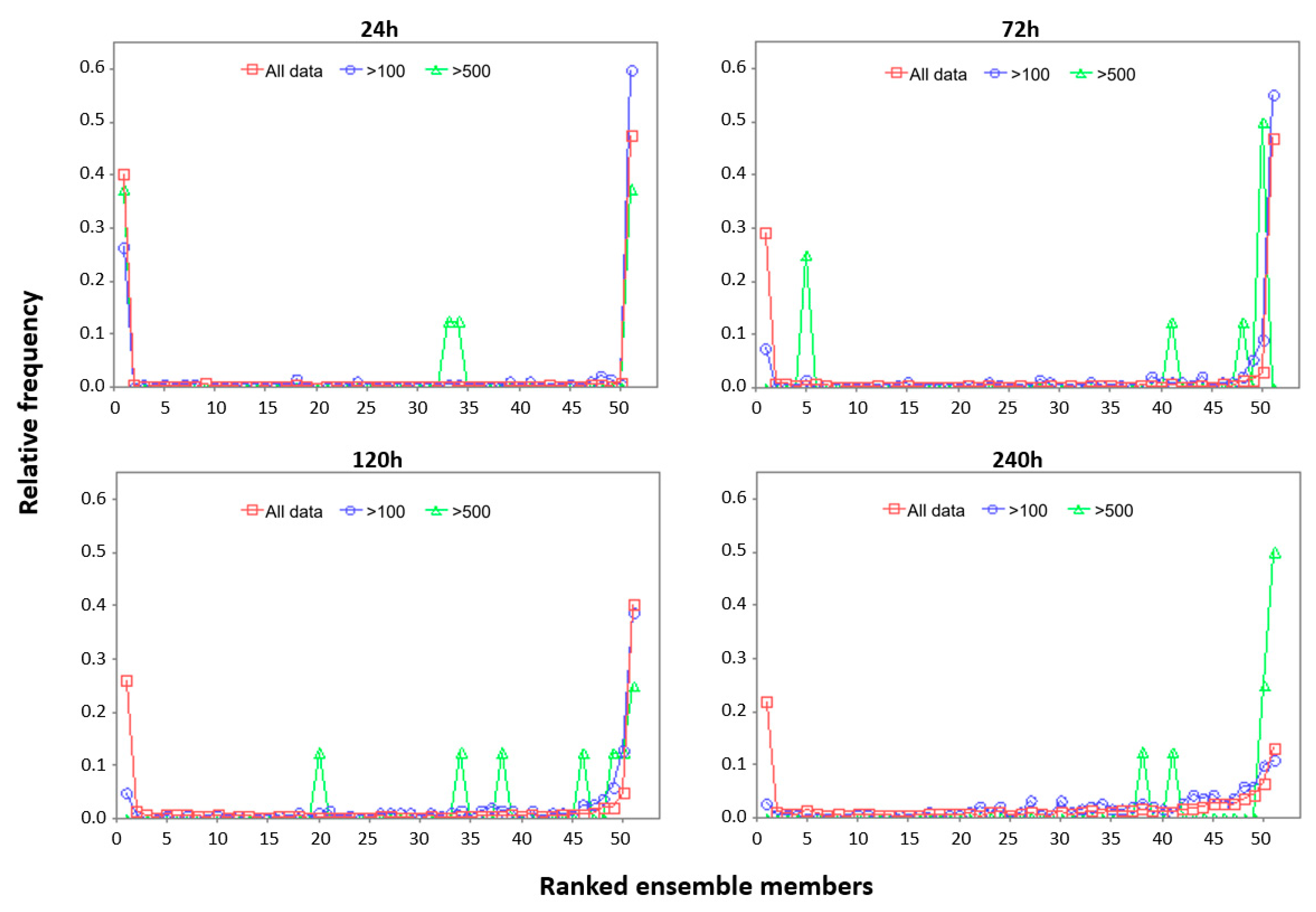
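The Brier Score evaluates probability forecasts of a binary event, here the exceedance of a flow threshold: BS = (1/n) Σ (pᵢ − oᵢ)², where pᵢ is the forecast probability and oᵢ is 1 if the event occurred and 0 otherwise; 0 is the optimal value. A minimal sketch, assuming pᵢ is the fraction of members above the threshold and that an event is a strict exceedance; the function name is hypothetical:

```python
from typing import Sequence


def brier_score(
    ensembles: Sequence[Sequence[float]],
    observations: Sequence[float],
    threshold: float,
) -> float:
    """BS = (1/n) * sum((p_i - o_i)^2) for the event 'inflow > threshold'."""
    if not ensembles or len(ensembles) != len(observations):
        raise ValueError("need non-empty inputs of equal length")
    total = 0.0
    for members, obs in zip(ensembles, observations):
        p = sum(m > threshold for m in members) / len(members)  # forecast probability
        o = 1.0 if obs > threshold else 0.0  # observed event (0 or 1)
        total += (p - o) ** 2
    return total / len(observations)
```

Computing the score separately for 100 m³/s and 500 m³/s shows how forecast quality changes with event severity: with two illustrative forecasts, `[[90, 120, 130, 80], [600, 450, 520, 510]]` against observations `[110, 400]`, the BS is 0.125 for 100 m³/s and 0.28125 for 500 m³/s.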

3.3.5. Rank Histogram (RH)
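The rank histogram checks reliability: for each forecast, the observation is ranked among the m ensemble members, and the counts of the m + 1 possible ranks are accumulated. A horizontally uniform histogram is optimal; a histogram piled up at the highest rank means observations often lie above all members (underestimation). A minimal sketch, assuming ties between the observation and a member are resolved by counting only members strictly below the observation (randomized tie-breaking is a common alternative); the function name is illustrative:

```python
from typing import List, Sequence


def rank_histogram(ensembles: Sequence[Sequence[float]], observations: Sequence[float]) -> List[int]:
    """Counts of the observation's rank (0..m) among m ensemble members."""
    if not ensembles or len(ensembles) != len(observations):
        raise ValueError("need non-empty inputs of equal length")
    m = len(ensembles[0])
    counts = [0] * (m + 1)
    for members, obs in zip(ensembles, observations):
        if len(members) != m:
            raise ValueError("all ensembles must have the same number of members")
        rank = sum(x < obs for x in members)  # members strictly below the observation
        counts[rank] += 1
    return counts
```

For a three-member ensemble `[1, 2, 3]` and observations 0.5, 1.5, 2.5 and 3.5 the result is `[1, 1, 1, 1]` (uniform), whereas observations that always exceed every member put all counts in the last bin.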

3.3.6. Relative Operating Characteristic Diagram (ROCD)
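The ROC diagram measures discrimination by plotting the probability of detection, POD = hits / (hits + misses), against the probability of false detection, POFD = false alarms / (false alarms + correct negatives); the ideal point is the upper-left corner (POD = 1, POFD = 0). For ensemble forecasts, one point is obtained per probability decision level, a warning being issued when the forecast probability reaches that level; a deterministic forecast gives a single point. A minimal sketch with a hypothetical function name:

```python
import math
from typing import List, Sequence, Tuple


def roc_points(
    probabilities: Sequence[float],
    events: Sequence[int],
    levels: Sequence[float],
) -> List[Tuple[float, float]]:
    """(POFD, POD) pairs, one per probability decision level."""
    if len(probabilities) != len(events):
        raise ValueError("need one observed event per forecast probability")
    points = []
    for q in levels:
        hits = misses = false_alarms = correct_negatives = 0
        for p, o in zip(probabilities, events):
            warned = p >= q  # warning issued at this decision level
            if o:
                if warned:
                    hits += 1
                else:
                    misses += 1
            elif warned:
                false_alarms += 1
            else:
                correct_negatives += 1
        n_events = hits + misses
        n_non_events = false_alarms + correct_negatives
        pod = hits / n_events if n_events else math.nan
        pofd = false_alarms / n_non_events if n_non_events else math.nan
        points.append((pofd, pod))
    return points
```

Lowering the decision level moves the point up and to the right: more true alarms are detected, at the cost of more false alarms. This is the same trade-off that drives the choice of the reference forecast in the conclusions.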

4. Conclusions

- (i) The maximum ensemble value for the first 72 h, which is the most conservative option and has the lowest associated error compared with the use of a lower percentile;

- (ii) The 75th percentile of the ensemble forecasts for the following lead times (72 to 240 h). Compared with the ensemble mean, it is a more conservative operational guideline and has a lower relative mean error; compared with the ensemble maximum, it has a lower probability of false detection (false alarms). Because forecasts beyond 72 h show greater dispersion, the 75th percentile (whose values lie between the ensemble mean and the ensemble maximum) was selected as the reference: it minimizes the triggering of unnecessary flood-control measures caused by false alarms while preserving good performance in the detection of true alarms. In addition, deviations in the reference forecast beyond the 72-hour lead time will not have a significant impact on the operational management of the reservoir, since the errors can be minimized with the next day's forecast.
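The recommended reference forecast can be sketched as a simple rule over lead times. This is a minimal illustration, not the authors' implementation: it assumes that a lead time of exactly 72 h still uses the ensemble maximum, that percentiles are computed by linear interpolation between order statistics (the same convention as NumPy's default), and that lead times beyond the 240 h horizon are rejected. Function and parameter names are hypothetical.

```python
import math
from typing import List, Sequence


def percentile(values: Sequence[float], q: float) -> float:
    """q-th percentile (0-100) with linear interpolation between order statistics."""
    if not values or not 0 <= q <= 100:
        raise ValueError("need a non-empty sample and 0 <= q <= 100")
    s = sorted(values)
    pos = (len(s) - 1) * q / 100.0
    lo, hi = math.floor(pos), math.ceil(pos)
    return s[lo] + (s[hi] - s[lo]) * (pos - lo)


def reference_forecast(
    members_by_lead: Sequence[Sequence[float]],
    lead_hours: Sequence[int],
    switch_h: int = 72,
    horizon_h: int = 240,
    q: float = 75.0,
) -> List[float]:
    """Ensemble maximum up to switch_h, q-th percentile from there to horizon_h."""
    if len(members_by_lead) != len(lead_hours):
        raise ValueError("one ensemble is needed per lead time")
    out = []
    for lead, members in zip(lead_hours, members_by_lead):
        if lead > horizon_h:
            raise ValueError(f"lead time {lead} h is beyond the {horizon_h} h horizon")
        # Conservative maximum for short lead times, 75th percentile afterwards.
        out.append(max(members) if lead <= switch_h else percentile(members, q))
    return out
```

For an illustrative ensemble `[100, 200, 300, 400, 500]` m³/s, the rule returns 500 m³/s at a 24 h lead time and 400 m³/s at 96 h, a value between the ensemble mean (300 m³/s) and the maximum, as described in (ii).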

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Attribute | Evaluation Methods | Type of Forecast | | Optimal Result |
|---|---|---|---|---|
| Total Error | MAE | Deterministic | (N) | Values equal to 0 |
| | MCRPS | Ensemble | (N) | |
| | BS | Ensemble | (Y) | |
| Bias | RME | Deterministic | (N) | |
| Reliability | RH | Ensemble | (Y) | Horizontally uniform histogram |
| Discrimination | ROCD | Both | (Y) | Points located in the upper-left corner of the diagram (POD = 1 and POFD = 0) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mendes, J.; Maia, R. Evaluation of Ensemble Inflow Forecasts for Reservoir Management in Flood Situations. Hydrology 2023, 10, 28. https://doi.org/10.3390/hydrology10020028
