Hand Gesture Signatures Acquisition and Processing by Means of a Novel Ultrasound System

Abstract

1. Introduction

2. System Description

2.1. Proposed Methodology

2.2. Hardware and Software Details

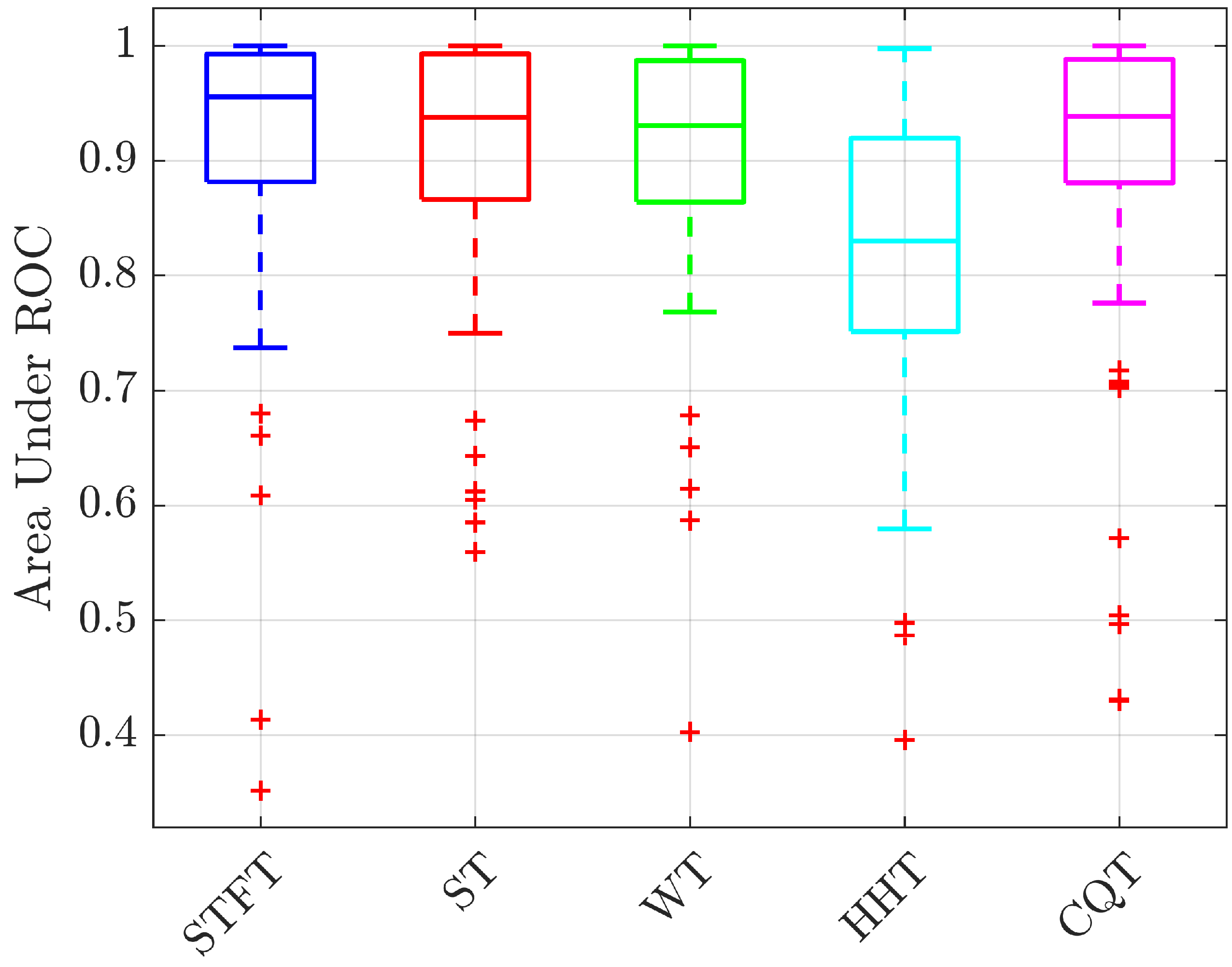

- Short-Time Fourier Transform (STFT), a sequence of Fourier transforms of a windowed signal. The result is a two-dimensional (2D) signal carrying both time and frequency information; its absolute value is called the spectrogram.

- Wavelet Transform (WT), which decomposes the signal into a linear combination of orthogonal functions (“wavelets”). Unlike the STFT, the wavelet transform provides high frequency resolution at low frequencies and high time resolution at high frequencies. The matrix of wavelet coefficients can be viewed as a time/frequency representation called the scalogram (or scaleogram).

- Stockwell Transform (ST), also known as the “S-transform”, which extends the wavelet transform by using a specific wavelet whose width is inversely proportional to the frequency of the signal.

- Hilbert–Huang Transform (HHT), which decomposes the signal into oscillatory waves named “Intrinsic Mode Functions” (IMFs), each characterized by a time-varying amplitude and frequency. The instantaneous frequency of each IMF is then computed, yielding the so-called Hilbert spectrum.

- Constant Q Transform (CQT), which follows a procedure similar to the STFT, the main difference being the non-uniform frequency resolution of the resulting time-frequency representation.
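To make the difference between the uniform frequency grid of the STFT and the non-uniform grid of the CQT concrete, the following minimal sketch computes a spectrogram with NumPy and lists geometrically spaced constant-Q centre frequencies. It is an illustration only and not the processing chain used in this work: the function names, the Hann window, the window and hop lengths, the sampling rate, and the synthetic chirp are all assumptions introduced here.

```python
import numpy as np

def stft_spectrogram(x, fs, win_len=256, hop=64):
    """Return (freqs, times, magnitude) of a Hann-windowed STFT.

    win_len and hop are illustrative defaults, not values from the paper.
    """
    window = np.hanning(win_len)
    n_frames = 1 + (len(x) - win_len) // hop
    # Slice the signal into overlapping frames and apply the window to each
    frames = np.stack([x[i * hop : i * hop + win_len] * window
                       for i in range(n_frames)])
    spectrum = np.fft.rfft(frames, axis=1)           # one FFT per frame
    freqs = np.fft.rfftfreq(win_len, d=1.0 / fs)     # uniform frequency grid
    times = (np.arange(n_frames) * hop + win_len / 2) / fs
    return freqs, times, np.abs(spectrum).T          # shape: (n_freqs, n_frames)

def constant_q_frequencies(f_min, bins_per_octave, n_bins):
    """Geometrically spaced centre frequencies, as used by a constant-Q transform."""
    return f_min * 2.0 ** (np.arange(n_bins) / bins_per_octave)

# Synthetic test signal (an assumption, not data from the paper):
# a linear chirp whose instantaneous frequency goes from 500 Hz to 1500 Hz.
fs = 8000.0
t = np.arange(int(fs)) / fs
x = np.sin(2 * np.pi * (500.0 * t + 0.5 * 1000.0 * t ** 2))

freqs, times, spec = stft_spectrogram(x, fs)
ridge = freqs[np.argmax(spec, axis=0)]   # dominant frequency in each frame
cq = constant_q_frequencies(f_min=100.0, bins_per_octave=12, n_bins=25)
```

In this sketch the STFT bins are spaced uniformly (`fs / win_len`, here 31.25 Hz), whereas consecutive constant-Q centre frequencies keep a fixed ratio, so their spacing grows with frequency.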

3. Prototype Performance Evaluation

3.1. Acquisition Protocol

3.2. Results

3.3. Comparison with the State of the Art

3.4. Computational Burden

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| ECG | ElectroCardioGraphic |
| FMCW | Frequency-Modulated Continuous Wave |
| US | UltraSound |
| CNN | Convolutional Neural Network |
| STFT | Short-Time Fourier Transform |
| WT | Wavelet Transform |
| ST | Stockwell Transform |
| HHT | Hilbert–Huang Transform |
| IMF | Intrinsic Mode Functions |
| CQT | Constant Q Transform |
| TPR | True Positive Rate |
| FPR | False Positive Rate |
| FNR | False Negative Rate |
| TNR | True Negative Rate |
| ROC | Receiver Operating Characteristic |
| AUR | Area under ROC |
| EER | Equal Error Rate |


| | TPR for FPR = 0.005 | TPR for FPR = 0.01 | TPR for FPR = 0.05 | TPR for FPR = 0.1 | AUR | EER |
|---|---|---|---|---|---|---|
| Gesture 1 | 0.51 | 0.61 | 0.8 | 0.85 | 0.92 | 0.13 |
| Gesture 2 | 0.32 | 0.41 | 0.68 | 0.78 | 0.89 | 0.15 |
| Gesture 3 | 0.21 | 0.28 | 0.54 | 0.66 | 0.85 | 0.20 |

| | TPR for FPR = 0.005 | TPR for FPR = 0.01 | TPR for FPR = 0.05 | TPR for FPR = 0.1 | AUR | EER |
|---|---|---|---|---|---|---|
| Short-Time Delay | 0.29 | 0.4 | 1 | 1 | 0.99 | 0.04 |
| Long-Time Delay | 0.12 | 0.15 | 0.88 | 0.93 | 0.97 | 0.08 |
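The figures of merit reported in the tables above (TPR at a fixed FPR, AUR, and EER) can all be derived from a set of matching scores. The following is a minimal sketch of one common way to compute them, not the evaluation code used in this work: the function names, the acceptance rule (score greater than or equal to the threshold), and the example scores are hypothetical.

```python
import numpy as np

def roc_curve(genuine, impostor):
    """ROC points (FPR, TPR) from sweeping a threshold over all observed scores.

    Assumption: a sample is accepted when its score is >= threshold.
    """
    genuine = np.asarray(genuine, dtype=float)
    impostor = np.asarray(impostor, dtype=float)
    # Thresholds from strictest (highest score) to loosest (lowest score)
    thresholds = np.unique(np.concatenate([genuine, impostor]))[::-1]
    tpr = np.array([(genuine >= th).mean() for th in thresholds])
    fpr = np.array([(impostor >= th).mean() for th in thresholds])
    # Prepend the (0, 0) point, i.e. a threshold above every score
    return np.concatenate([[0.0], fpr]), np.concatenate([[0.0], tpr])

def tpr_at_fpr(fpr, tpr, target):
    """Highest TPR reachable without exceeding the target FPR."""
    return float(tpr[fpr <= target].max())

def area_under_roc(fpr, tpr):
    """Area under the ROC curve (AUR) by the trapezoidal rule."""
    return float(np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2.0))

def equal_error_rate(fpr, tpr):
    """Error rate at the ROC point where FPR and FNR = 1 - TPR are closest."""
    fnr = 1.0 - tpr
    i = int(np.argmin(np.abs(fpr - fnr)))
    return float((fpr[i] + fnr[i]) / 2.0)

# Hypothetical matching scores, for illustration only (not data from the paper)
genuine = [0.9, 0.8, 0.75, 0.6, 0.4]    # attempts by the enrolled user
impostor = [0.7, 0.5, 0.3, 0.2, 0.1]    # attempts by other users

fpr, tpr = roc_curve(genuine, impostor)
print("TPR for FPR = 0.1:", tpr_at_fpr(fpr, tpr, 0.1))
print("AUR:", area_under_roc(fpr, tpr))
print("EER:", equal_error_rate(fpr, tpr))
```

With so few scores the ROC is a coarse staircase; with a realistic number of genuine and impostor attempts the same functions give smoother estimates, and the EER point can be refined by interpolating between adjacent thresholds.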

| | Training Time | Testing Time |
|---|---|---|
| Short-Time Fourier Transform | 6 min | 3 ms |
| Stockwell Transform | 35 min | 27 ms |
| Wavelet Transform | 30 min | 25 ms |
| Hilbert–Huang Transform | 70 min | 13 ms |
| Constant Q Transform | 8 min | 8 ms |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Franceschini, S.; Ambrosanio, M.; Pascazio, V.; Baselice, F. Hand Gesture Signatures Acquisition and Processing by Means of a Novel Ultrasound System. Bioengineering 2023, 10, 36. https://doi.org/10.3390/bioengineering10010036
