The Comfort and Measurement Precision-Based Multi-Objective Optimization Method for Gesture Interaction

Abstract

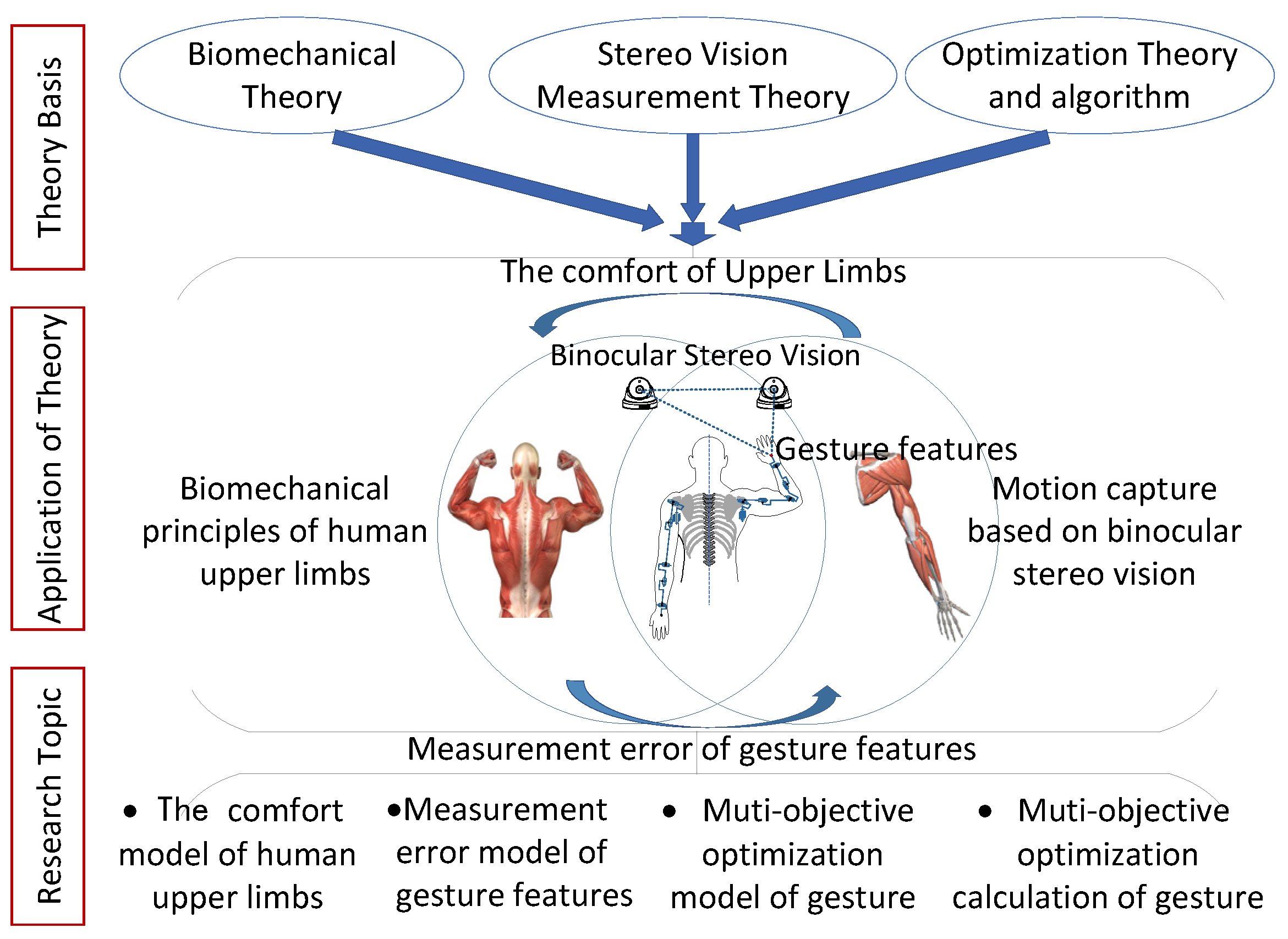

1. Introduction

2. Gesture Comfort Modeling

2.1. Muscle Mechanical Energy Expenditure of Gesture

2.2. Comfort Model of Gesture

3. Measurement Precision Modeling

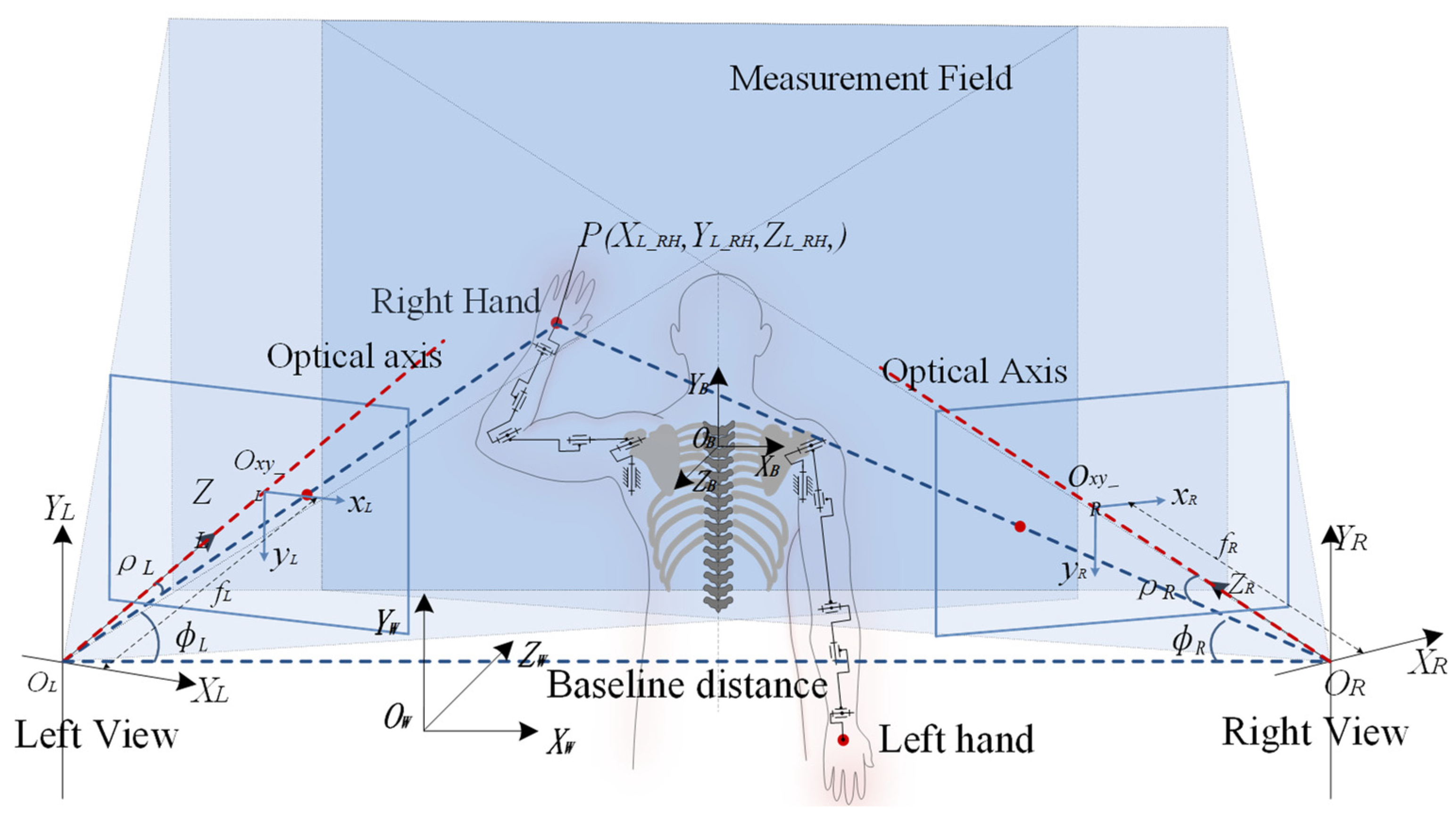

3.1. Depth Stereo Measurement Model

3.2. Measurement Precision Model

4. Multi-Objective Optimization Method for Gestures

4.1. Multi-Objective Optimization Model

4.2. Multi-Objective Optimization Calculation

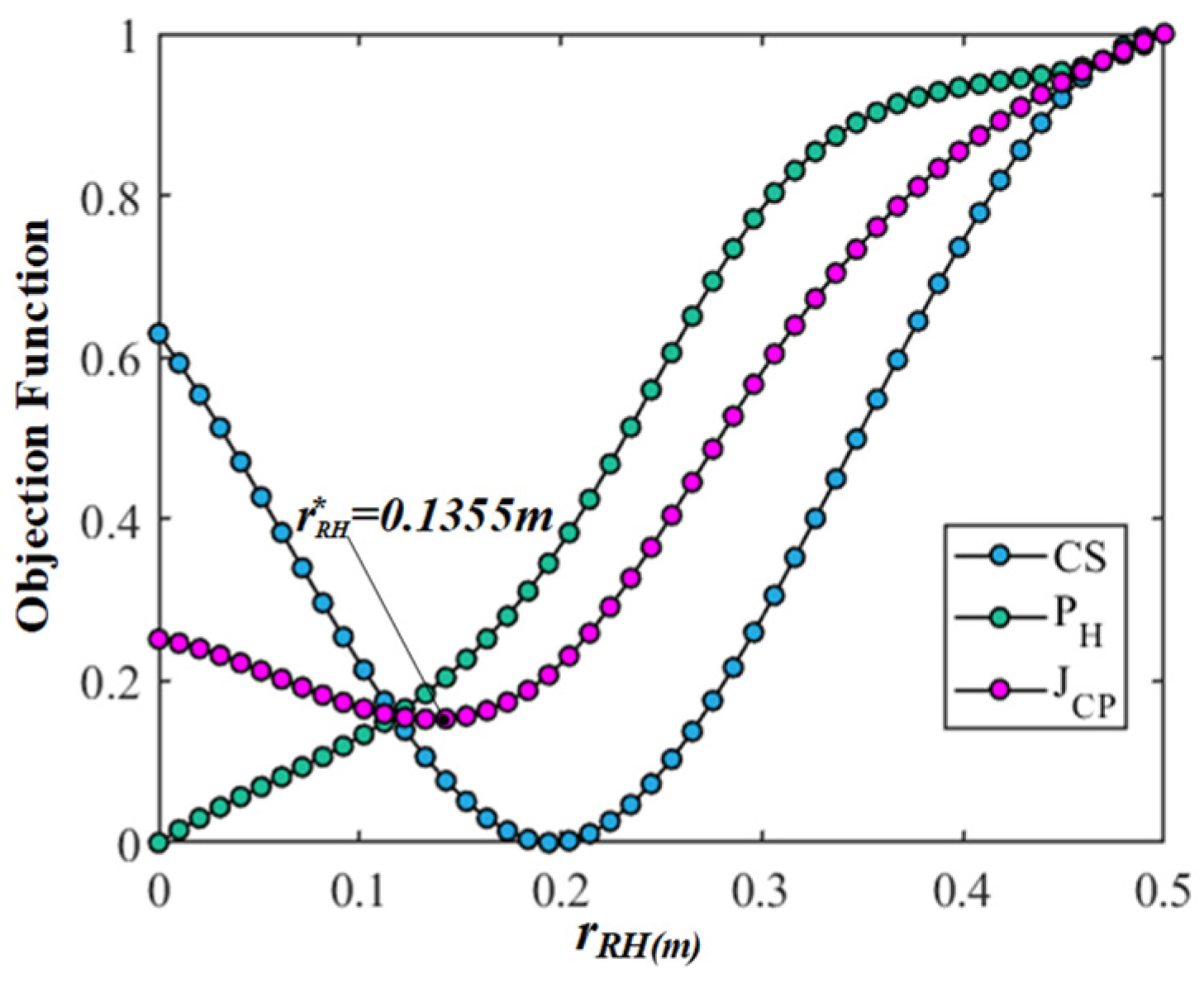

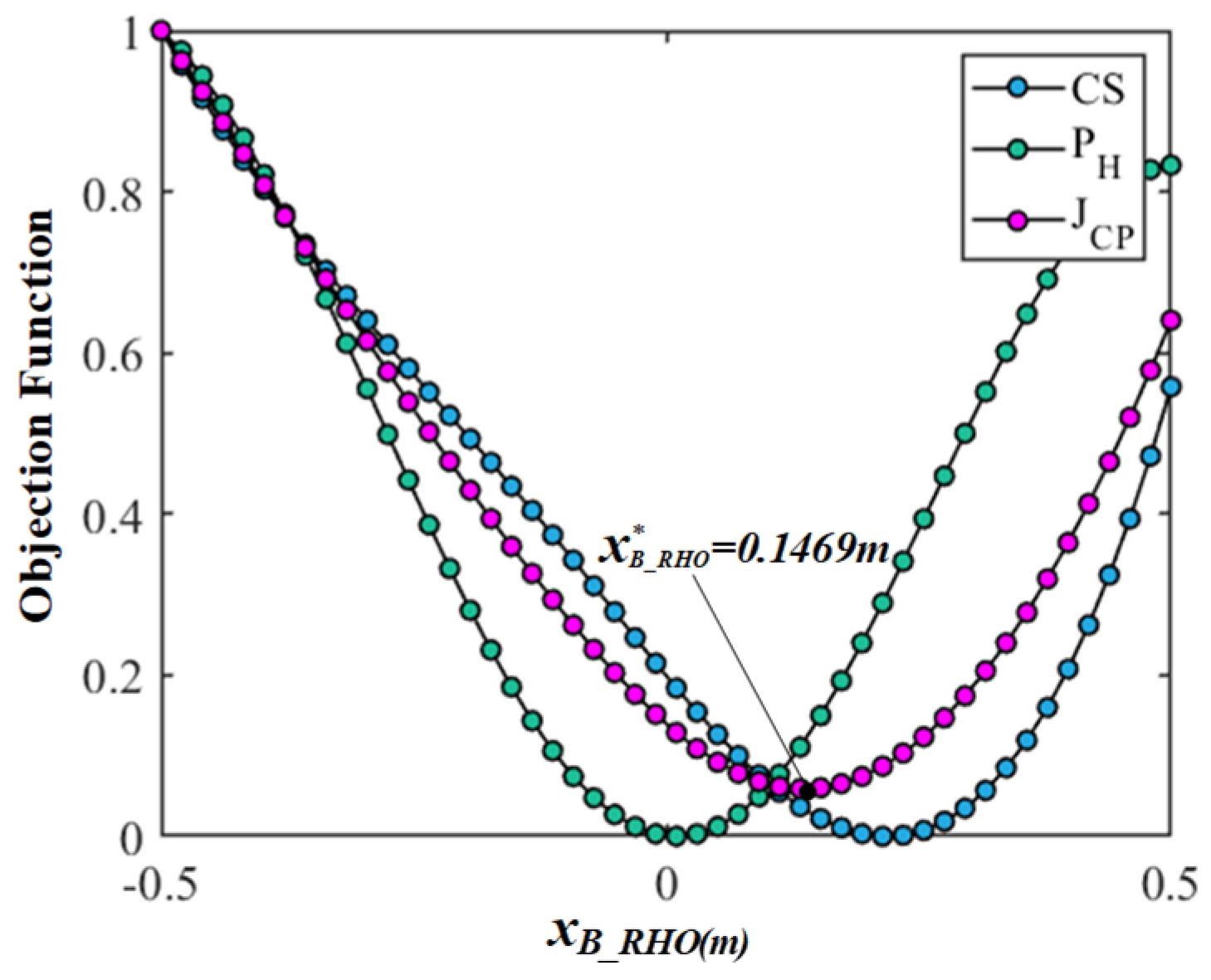

4.3. Case Analysis and Results of Multi-Objective Optimization of Gestures

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

The spring damping system represents the human muscle model.

| Variable Values | Radius | | | |
|---|---|---|---|---|
| (m) | (m) | (m) | (m) | |
| 0 | 0 | | | |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, W.; Hou, Y.; Tian, S.; Qin, X.; Zheng, C.; Wang, L.; Shang, H.; Wang, Y. The Comfort and Measurement Precision-Based Multi-Objective Optimization Method for Gesture Interaction. Bioengineering 2023, 10, 1191. https://doi.org/10.3390/bioengineering10101191