A Three-Stage Fusion Neural Network for Predicting the Risk of Root Fracture—A Pilot Study

Abstract

1. Introduction

2. Materials and Methods

2.1. Data Collection

2.2. Datasets

2.2.1. Categorical Data

2.2.2. Numerical Data

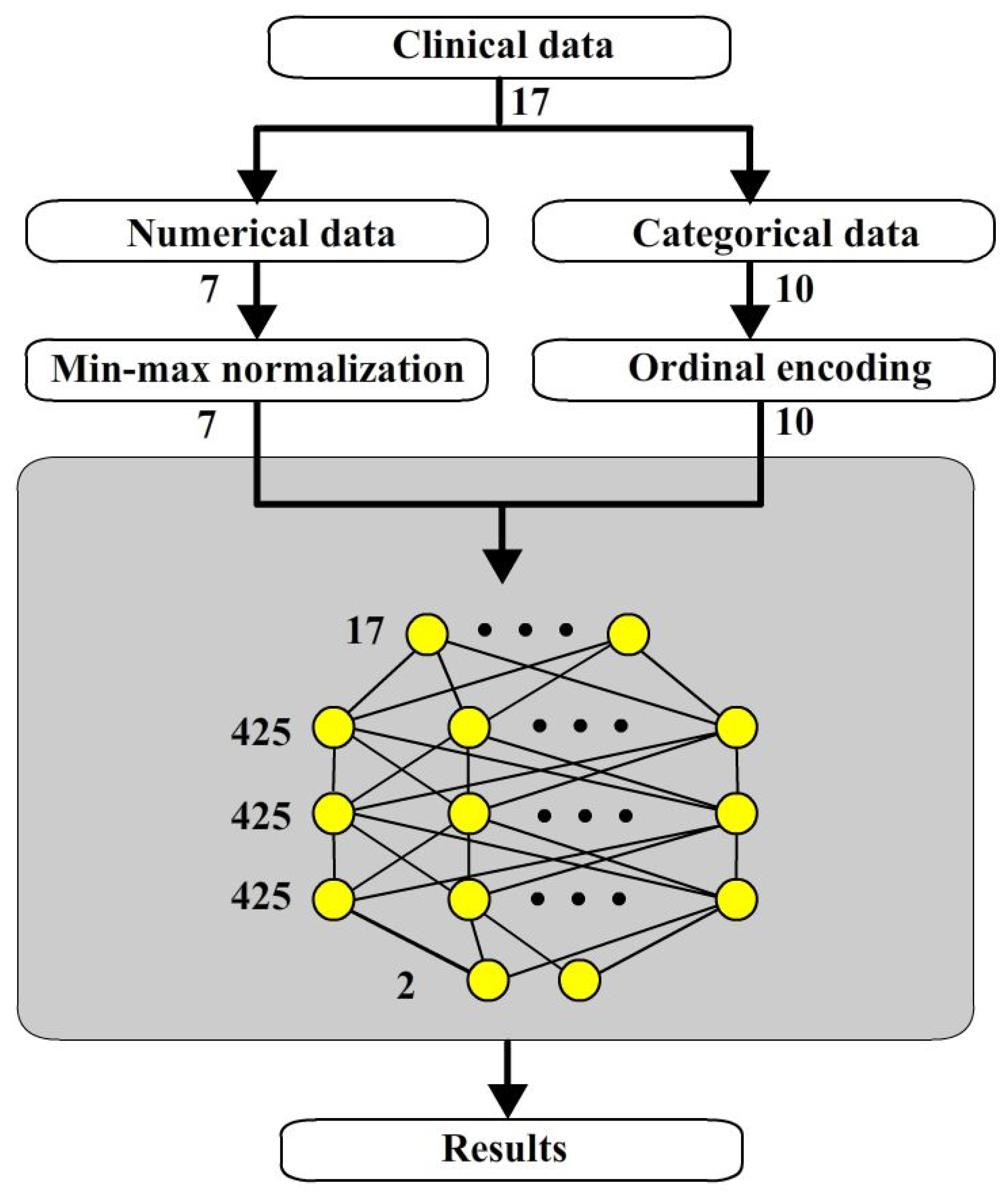

2.3. Stage 1 Numerical Neural Network (NNN)

2.3.1. Architecture of Numerical Neural Network

2.3.2. Min-Max Normalization for Numerical Items

2.4. Stage 2 Categorical Neural Network (CNN)

2.4.1. Ordinal Encoding for Categorical Items

2.4.2. Embedding

2.4.3. Categorical Neural Network

2.5. Three-Stage Fusion Neural Network

2.6. Batch Normalization

3. Results

3.1. Validation Methods

3.2. Evaluation Methods

3.3. Performance of Batch Normalization

3.4. Performance of Embedding Layer

3.5. Performance of Fusion Neural Networks

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| TSFNN | Three-Stage Fusion Neural Network |
| NNN | Numerical Neural Network |
| CNN | Categorical Neural Network |
| FNN | Fusion Neural Network |


| No | Items | Options |
|---|---|---|
| 1 | sex | Male, Female |
| 2 | previous dental fractures | Yes, No |
| 3 | previous prostheses | Yes, No |
| 4 | preoperative pain | Yes, No |
| 5 | percussion pain | Yes, No |
| 6 | endodontic retreatment | Yes, No |
| 7 | tooth position | Maxillary anterior teeth, Maxillary molars, Maxillary premolars, Mandibular front teeth, Mandibular molars, Mandibular premolars |
| 8 | posts placement | None, Para post, Casting post, Fiber post, Screw post |
| 9 | abutment of removable dentures or fixed partial dental prostheses | None, Fixed partial dental prostheses, Abutment of removable dentures, Both |
| 10 | previous apicoectomy or root amputation | None, Previous apicoectomy, Root amputation |
| 11 | age at the time of treatment | number |
| 12 | quantity of remaining tooth walls | number |
| 13 | duration from completion of root canal treatment until the date of prosthetic installation | number |
| 14 | tooth wear condition | number |
| 15 | periodontal condition | number |
| 16 | remaining root canal wall thickness | number |
| 17 | pericervical dentin thickness | number |
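Items 11 to 17 in the table above are numerical, and Section 2.3.2 names min-max normalization as their preprocessing step. The following is a minimal sketch of that rescaling, assuming the standard formula x' = (x - min) / (max - min). The function name and the sample ages are hypothetical and are not taken from the study.

```python
def min_max_normalize(values):
    """Rescale a list of numbers linearly so the smallest maps to 0 and the largest to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant column has no spread; map every entry to 0.0
        # instead of dividing by zero.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical ages at the time of treatment (item 11); not data from the study.
ages = [25, 40, 55, 70]
scaled_ages = min_max_normalize(ages)
```

In a train/validation setting, the minimum and maximum would normally be computed on the training split only and then reused for the held-out data, so that no information leaks from the validation set.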

| No | Items | Options | Ordinal Code |
|---|---|---|---|
| 1 | sex | Male | 1 |
| | | Female | 2 |
| 2 | previous dental fractures | Yes | 3 |
| | | No | 4 |
| 3 | previous prostheses | Yes | 5 |
| | | No | 6 |
| 4 | preoperative pain | Yes | 7 |
| | | No | 8 |
| 5 | percussion pain | Yes | 9 |
| | | No | 10 |
| 6 | endodontic retreatment | Yes | 11 |
| | | No | 12 |
| 7 | tooth position | Maxillary anterior teeth | 13 |
| | | Maxillary molars | 14 |
| | | Maxillary premolars | 15 |
| | | Mandibular front teeth | 16 |
| | | Mandibular molars | 17 |
| | | Mandibular premolars | 18 |
| 8 | posts placement | None | 19 |
| | | Para post | 20 |
| | | Casting post | 21 |
| | | Fiber post | 22 |
| | | Screw post | 23 |
| 9 | abutment of removable dentures or fixed partial dental prostheses | None | 24 |
| | | Fixed partial dental prostheses | 25 |
| | | Abutment of removable dentures | 26 |
| | | Both of them | 27 |
| 10 | previous apicoectomy or root amputation | None | 28 |
| | | Previous apicoectomy | 29 |
| | | Root amputation | 30 |
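The ordinal codes in the table above can be read as a lookup from (item, option) to an integer between 1 and 30, which an embedding layer (Section 2.4.2) then maps to a dense vector. The sketch below transcribes the table into a dictionary and pairs it with a toy embedding lookup. The shortened item keys, the sample patient record, the embedding width of 4, and the random initialization are all assumptions made for illustration; in a trained network the embedding vectors would be learned, and the source does not state their dimension.

```python
import random

# Option -> ordinal code, transcribed from the table above (codes 1-30).
# Item keys are shortened labels chosen for this sketch.
ORDINAL_CODES = {
    "sex": {"Male": 1, "Female": 2},
    "previous dental fractures": {"Yes": 3, "No": 4},
    "previous prostheses": {"Yes": 5, "No": 6},
    "preoperative pain": {"Yes": 7, "No": 8},
    "percussion pain": {"Yes": 9, "No": 10},
    "endodontic retreatment": {"Yes": 11, "No": 12},
    "tooth position": {
        "Maxillary anterior teeth": 13, "Maxillary molars": 14,
        "Maxillary premolars": 15, "Mandibular front teeth": 16,
        "Mandibular molars": 17, "Mandibular premolars": 18,
    },
    "posts placement": {
        "None": 19, "Para post": 20, "Casting post": 21,
        "Fiber post": 22, "Screw post": 23,
    },
    "abutment": {
        "None": 24, "Fixed partial dental prostheses": 25,
        "Abutment of removable dentures": 26, "Both of them": 27,
    },
    "apicoectomy or root amputation": {
        "None": 28, "Previous apicoectomy": 29, "Root amputation": 30,
    },
}


def encode_record(record):
    """Return the ten ordinal codes for one record, in table order."""
    return [ORDINAL_CODES[item][record[item]] for item in ORDINAL_CODES]


# Toy embedding table: one vector per code. Row 0 is unused so that a code
# can index the table directly. The width 4 is an assumed value.
NUM_CODES = 30
EMBEDDING_DIM = 4
_rng = random.Random(0)
embedding_table = [
    [_rng.uniform(-1.0, 1.0) for _ in range(EMBEDDING_DIM)]
    for _ in range(NUM_CODES + 1)
]


def embed(codes):
    """Look up the embedding vector for each ordinal code."""
    return [embedding_table[code] for code in codes]


# Hypothetical patient record; not data from the study.
record = {
    "sex": "Female",
    "previous dental fractures": "No",
    "previous prostheses": "Yes",
    "preoperative pain": "No",
    "percussion pain": "No",
    "endodontic retreatment": "No",
    "tooth position": "Mandibular molars",
    "posts placement": "Fiber post",
    "abutment": "None",
    "apicoectomy or root amputation": "None",
}
codes = encode_record(record)
vectors = embed(codes)
```

Because the codes run consecutively across all ten items, a single shared embedding table suffices here; one table per item would be an equally plausible design.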

| Architectures | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| TSFNN | 0.759 | 0.818 | 0.823 | 0.819 |
| TSFNN with batch normalization | 0.821 | 0.822 | 0.937 | 0.874 |
| Improvement | 0.062 | 0.004 | 0.114 | 0.055 |
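The table above compares the network with and without batch normalization (Section 2.6). As a reminder of what that layer computes during training, here is a minimal sketch of the standard operation: each feature is standardized across the mini-batch, then scaled and shifted. The scalar `gamma` and `beta`, the `eps` value, and the sample batch are assumptions for illustration. Real layers learn one `gamma` and `beta` per feature and keep running statistics for inference, and the source does not describe where the layers sit in the TSFNN.

```python
import math


def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Standardize each feature (column) across the batch (rows),
    then apply the scale gamma and the shift beta."""
    n = len(batch)
    num_features = len(batch[0])
    out = [[0.0] * num_features for _ in range(n)]
    for j in range(num_features):
        column = [row[j] for row in batch]
        mean = sum(column) / n
        # Biased (population) variance, as used at training time.
        var = sum((x - mean) ** 2 for x in column) / n
        for i in range(n):
            out[i][j] = gamma * (column[i] - mean) / math.sqrt(var + eps) + beta
    return out


# Hypothetical mini-batch of three samples with two features each.
batch = [[1.0, 10.0], [2.0, 20.0], [3.0, 30.0]]
normalized = batch_norm(batch)
```

After normalization both columns have mean close to 0 and variance close to 1 despite their different original scales, which is the property that tends to stabilize training.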

| Architectures | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| Ordinal encoding only | 0.703 | 0.757 | 0.813 | 0.784 |
| Ordinal encoding with embedding layer | 0.821 | 0.822 | 0.937 | 0.874 |
| Improvement | 0.118 | 0.065 | 0.124 | 0.090 |

| Architectures | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| Two-way NN | 0.752 | 0.780 | 0.887 | 0.828 |
| TSFNN | 0.821 | 0.822 | 0.937 | 0.874 |
| Improvement | 0.069 | 0.042 | 0.050 | 0.046 |

| Methods | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| SVM | 0.717 | 0.719 | 0.947 | 0.817 |
| ANNs | 0.731 | 0.801 | 0.793 | 0.796 |
| Two-way ANNs | 0.752 | 0.780 | 0.887 | 0.828 |
| TSFNN | 0.821 | 0.822 | 0.937 | 0.874 |
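The four columns reported in the tables above follow the usual definitions for binary classification. The sketch below computes them from confusion-matrix counts, assuming those standard definitions. The function name and the counts are hypothetical and are not results from the study.

```python
def classification_metrics(tp, fp, fn, tn):
    """Compute accuracy, precision, recall, and F1 from confusion-matrix counts.

    tp/fp/fn/tn = true positives, false positives, false negatives,
    true negatives. Assumes no denominator is zero.
    """
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall)
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Hypothetical counts for 100 test cases; not data from the study.
metrics = classification_metrics(tp=45, fp=10, fn=5, tn=40)
```

Applying the F1 formula directly to the reported TSFNN precision (0.822) and recall (0.937) gives about 0.876, slightly above the reported 0.874. A gap of that size can arise when each metric is averaged separately over validation runs, although the source does not say how the figures were aggregated.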

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kuo, Y.-M.; Kuo, L.-Y.; Huang, H.-Y.; Sung, T.-Y.; Yang, C.-H.; Chang, W.-T.; Lo, C.-S. A Three-Stage Fusion Neural Network for Predicting the Risk of Root Fracture—A Pilot Study. Bioengineering 2025, 12, 447. https://doi.org/10.3390/bioengineering12050447
