Deep Reinforcement Learning for Trading—A Critical Survey

Abstract

1. Introduction

- Ref. [2] covers only model-free methods and is therefore limited in scope. Moreover, it discusses several aspects from a financial-economic point of view: the portfolio management problem and the relation between the Kelly criterion, risk parity, and mean variance. We are interested in the RL perspective, where risk measures are needed only to provide the agents with helpful reward functions. We also discuss the hierarchical approach, which we consider quite promising, especially for applying a DRL agent to multiple markets.

- Ref. [3] looks only at the prediction problem using deep learning.

- Ref. [4] has a similar outlook to this work, but the works it discusses are quite old, and very few of them use deep learning techniques.

- Ref. [5] looks at more applications in economics, not just trading. Additionally, the deep reinforcement learning part is quite small in the whole article.

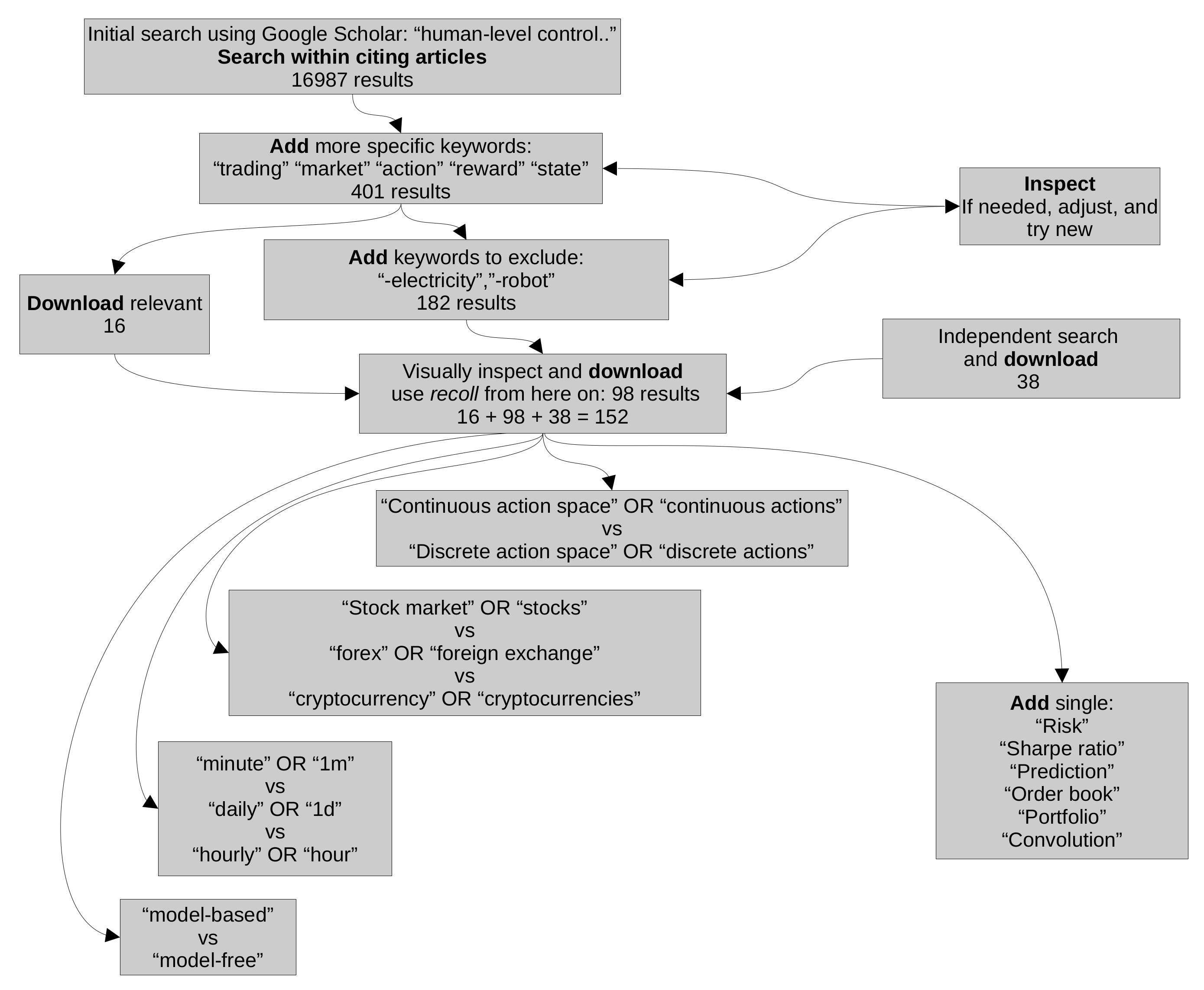

- All papers using DRL for trading will most probably cite the first paper that introduced DQN [1].

- For this reason, we can use the Google Scholar feature that searches within the citing articles.

- Google Scholar also returns the results present on all other portals such as IEEE, Scopus, WebOfScience, etc.

- The prediction problem, where we want to accurately predict some time-steps into the future based on the past and on the general dynamics of the time-series. By general dynamics we mean that, for example, gold or oil do not fluctuate as strongly as the crypto markets. When predicting the next price point, it is advisable to take this information into account. One way to do this is to consider general a priori models, based on large historical samples, so that these plausible historical dynamics are embedded into the prediction model. This would function as a type of general prior information; for example, it is absurd to consider models where gold rises 100% overnight, as this has never happened and probably never will. For the crypto markets, however, this is not that implausible: some of them did in fact rise thousands of percent overnight, with many still rising significantly every day (https://coinmarketcap.com/, accessed on 7 October 2021). Deep learning models have been successfully applied to such predictions [3,10,11,12], as have many other ML techniques [13,14,15]. In the context of RL, prediction is usually done through model-based RL [16], where a simulation of the environment is created based on the data seen so far. Prediction then amounts to running this simulation to see what comes next (at least according to the prediction model). More on model-based RL can be found in Section 10.1. For a comprehensive survey, see [17].

- The strategy itself might be based on the prediction but also embeds other information, such as the certainty of the prediction (which should inform how much we would like to invest, assuming a rising or bull future market) or other external information sources, such as social media sentiment [18] (e.g., are people positive or negative about an asset?), news [19], volume, or other sources of related information which might influence the decision. The strategy should also be customisable, in the sense that we might want a risk-averse or a risk-seeking strategy. This cannot be done from a point prediction alone, but there are some ways of estimating risk for DRL [20]. For all these reasons, we consider the strategy, or decision making, to be a quite different problem from the prediction problem. This is the general trend in the related literature [21,22].

- The portfolio management problem is encountered when dealing with multiple assets. Until now we have been talking only about a single asset, but if some amount of resources is split among multiple concurrent assets, then we have to decide how to split these resources. At first, the crypto markets were highly correlated (if Bitcoin was going up, then most other cryptocurrencies would go up, so this differentiation did not matter too much), but more recently, it has been shown that the correlation among different pairs is significantly decreasing (https://charts.coinmetrics.io/correlations/, accessed on 11 October 2021). Thus, an investing strategy is needed to select the most promising coins for the next time-slice. The time-slice at which one rebalances is also a significant parameter of the investing strategy. Rebalancing usually means deciding how to split resources (evenly) among the most promising coins. Most of the work on trading with smart techniques such as DRL is situated here; see, for example, [2,23,24,25,26,27,28]. Not all the related works we cite deal with cryptocurrency markets, but since all financial markets are quite similar, we consider them relevant for this article.

- The risk associated with some investments is inevitable. However, through smart techniques, a market actor might minimize the risk of an investment while still achieving significant profits. Additionally, some actors might want to follow a risk-averse strategy while others might be risk-seeking. The risk of a strategy is generally measured using the Sharpe ratio or the Sortino ratio, which take into account a risk-free investment (e.g., U.S. treasury bond or cash). Even though we acknowledge the significance of these measures, they do have some drawbacks. We will discuss the two measures in more detail, giving a formal description and proposing alternative measures of risk. Moreover, if one uses the prediction in the strategy, the uncertainty of the prediction can also infuse the strategy with a measure of risk: if the prediction of some asset going up is very uncertain, then the risk is high, and, on the other hand, if the prediction is certain, the risk is lower.
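
As a minimal sketch of the two ratios mentioned in the last item, the following computes per-period Sharpe and Sortino ratios from a list of returns. The function names, the use of the sample standard deviation, the downside deviation taken over all periods, and the default risk-free rate of zero are assumptions made for illustration; annualisation is omitted.

```python
import math
from statistics import mean, stdev


def sharpe_ratio(returns, risk_free=0.0):
    """Mean excess return divided by the sample standard deviation of excess returns."""
    excess = [r - risk_free for r in returns]
    sd = stdev(excess)
    return mean(excess) / sd if sd > 0 else float("nan")


def sortino_ratio(returns, risk_free=0.0):
    """Mean excess return divided by the downside deviation.

    Only periods with a negative excess return contribute to the denominator,
    so upside volatility is not penalised.
    """
    excess = [r - risk_free for r in returns]
    downside = math.sqrt(mean([min(e, 0.0) ** 2 for e in excess]))
    return mean(excess) / downside if downside > 0 else float("nan")


if __name__ == "__main__":
    sample = [0.02, -0.01, 0.03, -0.02, 0.01]  # hypothetical per-period returns
    print(sharpe_ratio(sample), sortino_ratio(sample))
```

Because the Sortino ratio ignores upside deviations, it is typically larger than the Sharpe ratio for the same return series when gains are more volatile than losses.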

2. Prediction

3. Strategy for RL

- Single or multi-asset strategy: This needs to take into account data for each of the assets, either time-based or price-based data. This can be done individually (e.g., have one strategy for each asset) or aggregated.

- The sampling rate and nature of the data: We can have strategies which make decisions at second intervals or even less (high frequency trading or HFT [32]) or make a few decisions throughout a day (intraday) [33], day trading (at the end of each day all open orders are closed) [34], or higher intervals. The type of data fed is usually time-based, but there are also price-based environments for RL [35].

- The reward fed to the RL agent completely governs its behaviour, so a wise choice of the reward shaping function is critical for good performance. There are quite a number of rewards one can choose from or combine, from risk-based measures to profitability or cumulative return, number of trades per interval, etc. The RL framework accepts any sort of reward: the denser, the better. For example, in [35], the author tests seven different reward functions based on various measures of risk or PnL (profit and loss).
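
To illustrate how several of the reward ingredients listed above can be combined into one dense, per-step signal, here is a small sketch. It is not one of the seven reward functions from [35]; the function name, the log-return form, and the `trade_penalty` value are hypothetical choices.

```python
import math


def step_reward(prev_value, new_value, traded, trade_penalty=0.001):
    """Dense per-step reward: log return of the portfolio value,
    minus a fixed penalty whenever the agent traded in this step.

    The penalty discourages excessive trading (number of trades per interval).
    """
    if prev_value <= 0 or new_value <= 0:
        raise ValueError("portfolio values must be positive")
    log_return = math.log(new_value / prev_value)
    return log_return - (trade_penalty if traded else 0.0)
```

Summing these rewards over an episode gives the log of the cumulative return minus the total trade penalty, so maximising the sum corresponds to maximising penalised cumulative return.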

Benchmark Strategies

- Uniform constant rebalanced portfolio (UCRP) [41] rebalances the assets every day, keeping the same distribution among them.

- A deep RL architecture [27] that has been very well received by the community and reports strong results. It has also been used for comparison in other related works, since the code is readily available (https://github.com/ZhengyaoJiang/PGPortfolio, accessed on 10 October 2021).
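
The UCRP benchmark above can be sketched in a few lines. This is a simplified illustration that ignores transaction costs; the function name and the input layout (one list of price relatives per period) are assumptions, not taken from [41].

```python
def ucrp_wealth(price_relatives, initial_wealth=1.0):
    """Wealth path of a uniform constant rebalanced portfolio.

    price_relatives: list of periods, each a list with p_t / p_{t-1} per asset.
    Wealth is split equally among all assets at the start of every period.
    """
    wealth = initial_wealth
    path = [wealth]
    for period in price_relatives:
        # With equal weights, the growth factor of the period is the
        # arithmetic mean of the asset price relatives.
        wealth *= sum(period) / len(period)
        path.append(wealth)
    return path


if __name__ == "__main__":
    # Two hypothetical assets that double and halve in alternation.
    print(ucrp_wealth([[2.0, 0.5], [0.5, 2.0]]))
```

In the usage example each asset ends where it started, so buy-and-hold earns nothing, while rebalancing grows wealth by a factor of 1.25 in each period.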

4. Portfolio Management

5. Risk

5.1. Sharpe Ratio and Sortino Ratio

5.1.1. Sortino Ratio

5.1.2. Differential Sharpe Ratio

5.2. Value at Risk

5.3. Conditional Value at Risk

5.4. Turbulence

5.5. Maximum Drawdown (MDD)

6. Deep Reinforcement Learning

7. The Financial Market as an Environment for DRL

- The data: this is how we sample them, concatenate them, preprocess them, and feed them. This amounts to defining the state space for the DRL agent: what is in a state and how it is represented.

- The actions: what can the agent do at any decision point? Can it choose from a continuous set of values, or does it have a limited choice? This amounts to what type of operations we allow for the agent. Continuous actions are usually found in portfolio management, where we have multiple assets to manage and would like to assign a specific share of the total wallet value to each asset. This then needs to be translated into actual trades as a function of the current portfolio holdings. This is generally more complicated, and not all RL algorithms can deal with continuous action spaces out of the box. In the case of a discrete action set for one asset, the actions amount to the number of coins to buy or sell, and their number can vary from three to hundreds (where three is a simple buy/sell of a fixed amount, say one, plus hold). More than three actions generally allow more quantities to be bought or sold, with each action representing an increasing number of coins to buy or sell.

- The reward fully governs the agent’s behaviour; thus, a good choice of reward function is critical for the desired type of strategy and its performance. Since the reward will be maximized, it is natural to think of measures such as current balance or PnL as possible reward functions, and they have been used extensively in the community. However, these are often not sufficient to customise a strategy according to different criteria; thus, measures of risk seem quite an obvious choice, and these have also been used with success in the DRL trading community.
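
The two kinds of action space described above can be sketched as follows. Both helpers are hypothetical illustrations: the first maps a discrete action index to a signed quantity for one asset, the second turns continuous target weights into trades given the current holdings. Fees, slippage, and lot rounding are ignored.

```python
def discrete_action_to_quantity(action, n_actions, lot_size=1.0):
    """Map an action index in [0, n_actions) to a signed quantity.

    n_actions must be odd: the middle index means hold, lower indices sell
    and higher indices buy, in increasing multiples of lot_size.
    """
    if n_actions % 2 == 0 or not 0 <= action < n_actions:
        raise ValueError("need an odd n_actions and a valid action index")
    return (action - n_actions // 2) * lot_size


def weights_to_trades(target_weights, holdings, prices, cash):
    """Turn target portfolio weights into signed quantities to trade.

    target_weights should sum to at most 1; the remainder stays in cash.
    A positive result means buy, a negative one means sell.
    """
    total = cash + sum(h * p for h, p in zip(holdings, prices))
    return [w * total / p - h
            for w, h, p in zip(target_weights, holdings, prices)]
```

With `n_actions=3` the first helper reduces to the simple sell, hold, and buy of one lot. The second helper shows why the continuous case depends on the current holdings: the same target weights produce no trades at all when the portfolio is already balanced.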

8. Time-Series Encodings or State Representation for RL

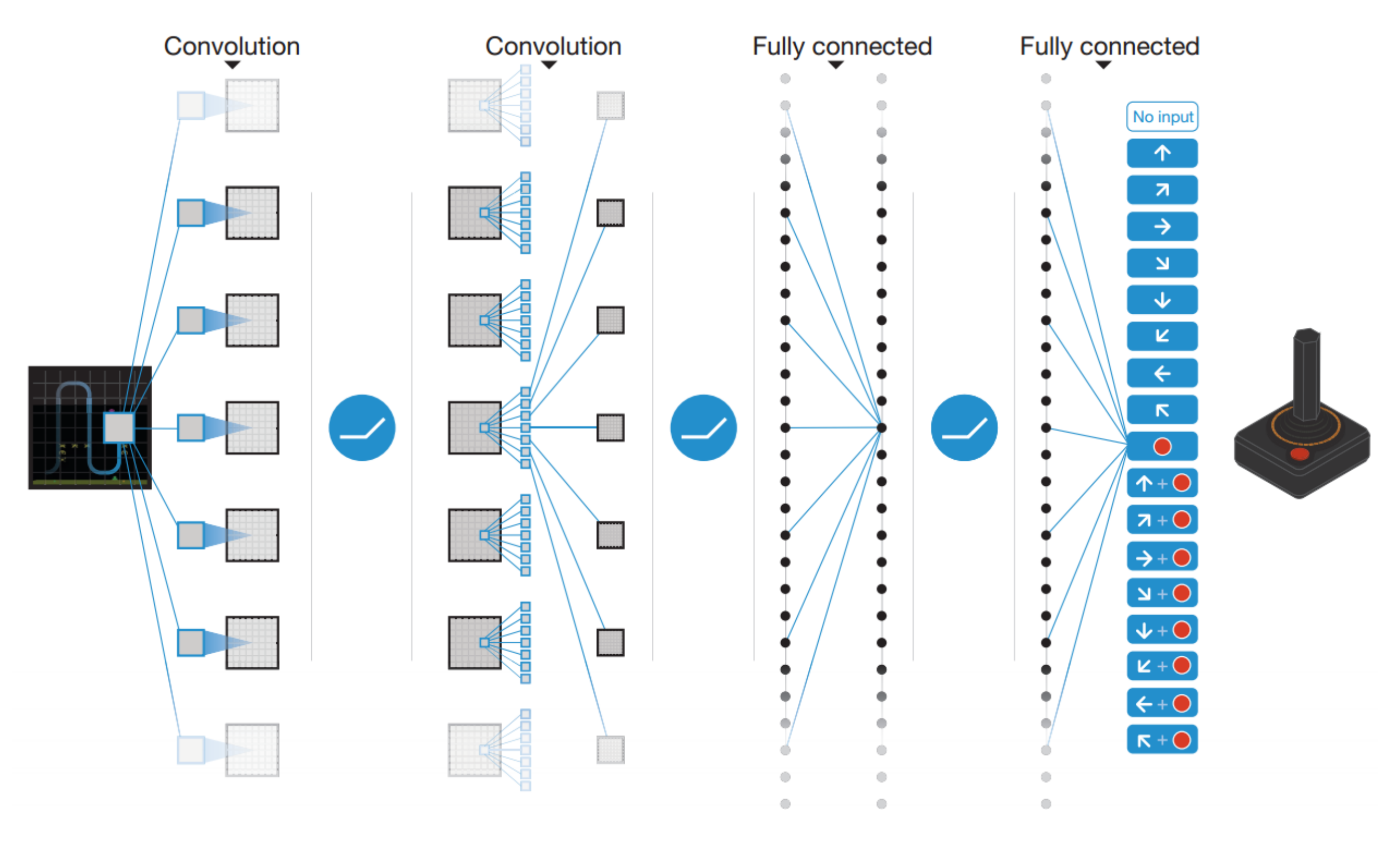

8.1. Convolutions

8.2. Raw-Data OHLCV

8.3. Technical Trading Indicators

8.4. Correlated Pairs

8.5. Historical Binning or Price-Events Environment

8.6. Adding Various Account Information

9. Action Space

10. Reward Shaping

10.1. Model-Based RL

Model-Based RL in Trading

10.2. Policy-Based RL

11. Hierarchical Reinforcement Learning

HRL in Trading

12. Conclusions

12.1. Common Ground

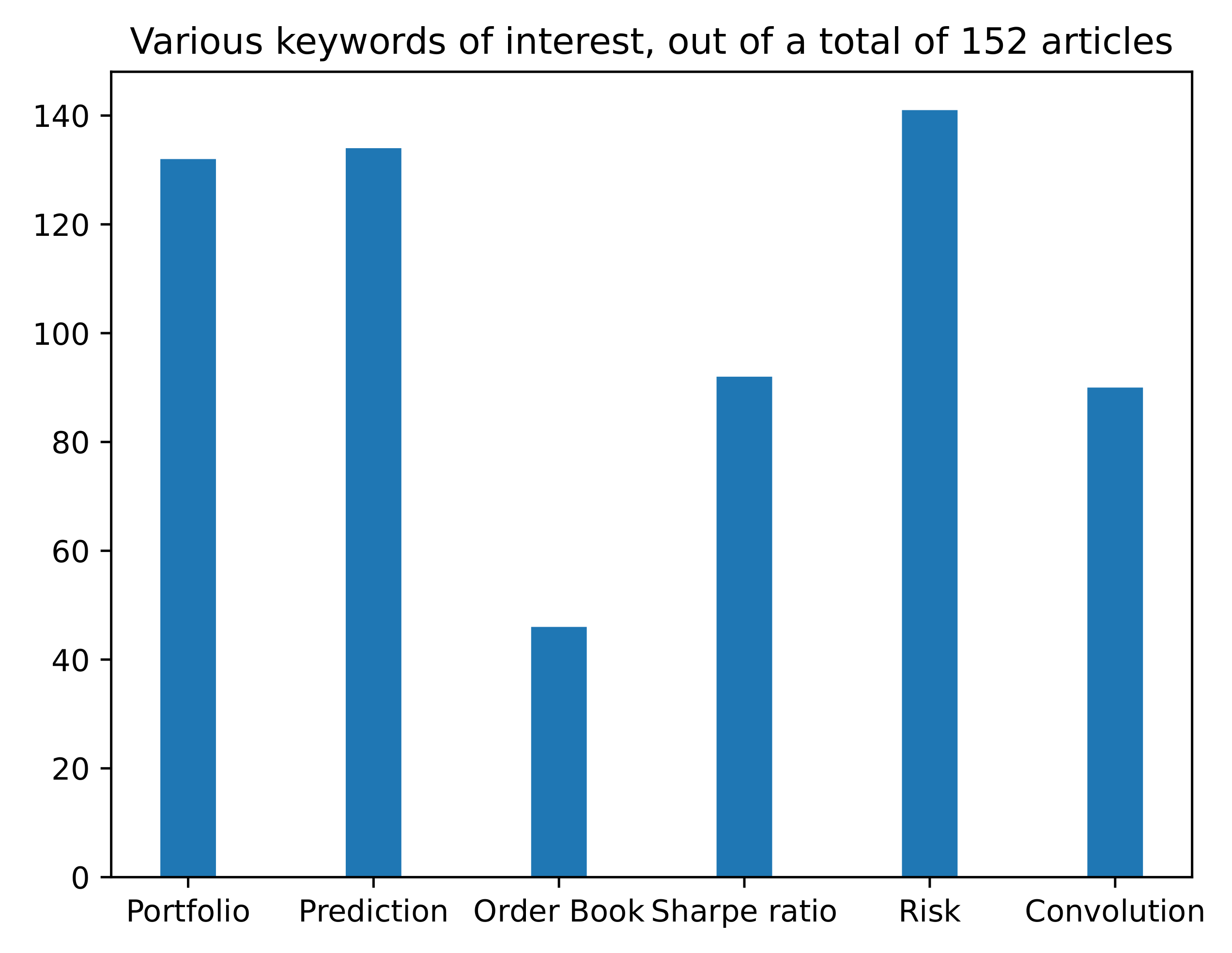

- Many papers use convolutional neural networks to (at least) preprocess the data.

- A significant number of papers concatenate the last few prices as a state for the DRL agent (this can vary from ten steps to hundreds).

- Many papers use the Sharpe ratio as a performance measure.

- Most of the papers deal with the portfolio management problem.
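
As an illustration of the second point, a state built by concatenating the last few prices might look like the sketch below. Dividing by the most recent price is an assumed normalisation, not one prescribed by the surveyed papers, and the function name is hypothetical.

```python
def window_state(prices, t, n):
    """State at step t: the last n prices up to and including prices[t],
    each divided by prices[t] so that the state is scale-free."""
    if n < 1 or t - n + 1 < 0 or t >= len(prices):
        raise IndexError("window does not fit inside the price series")
    last = prices[t]
    return [p / last for p in prices[t - n + 1 : t + 1]]
```

The window length `n` corresponds to the ten to hundreds of steps mentioned above; a longer window gives the agent more history at the cost of a larger input.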

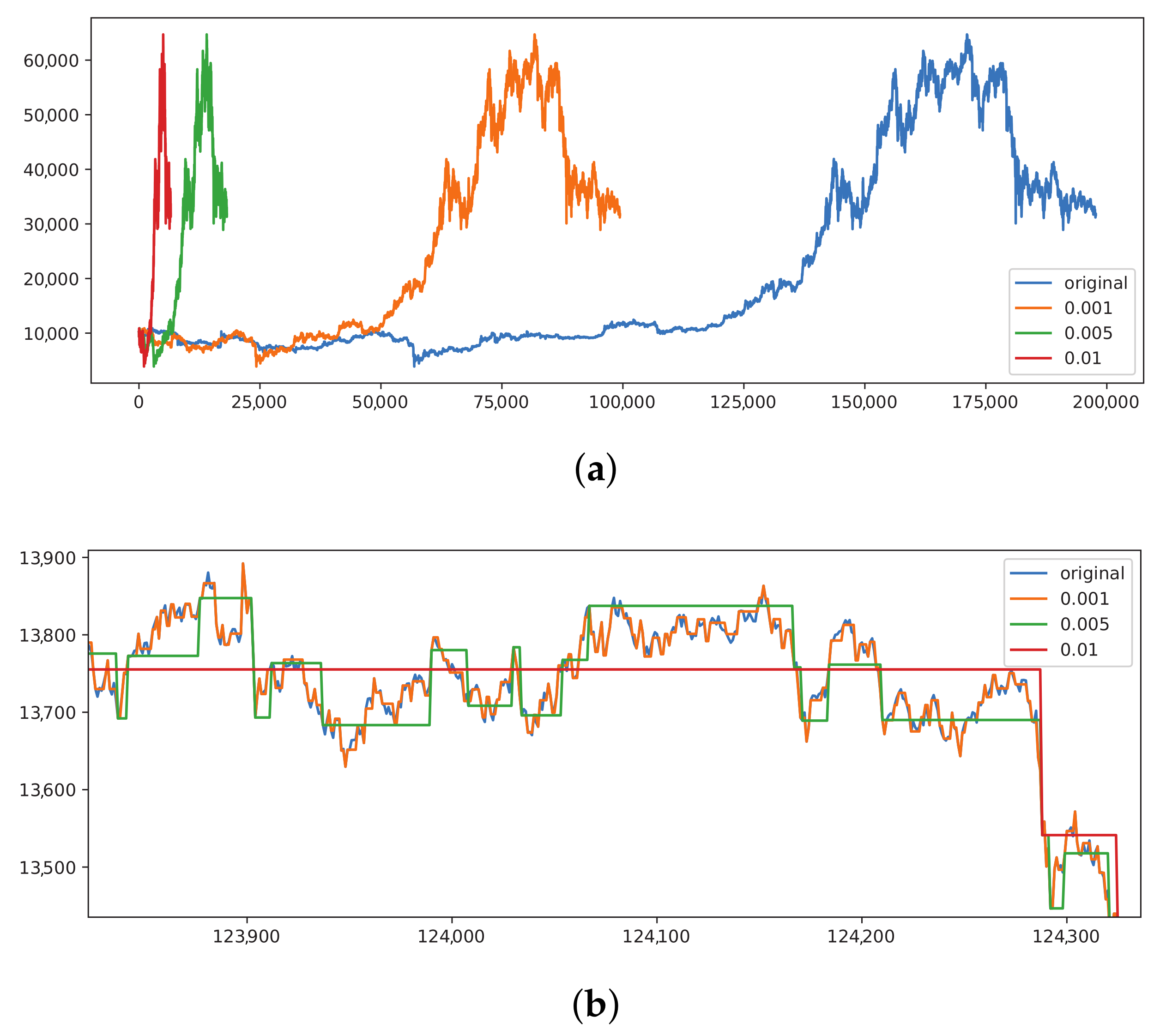

- Very few papers consider all three factors: transaction cost, slippage (when the market is moving so fast that the price is different at the time of the transaction than at the decision time), and spread (the difference between the buy price and sell price). The average slippage on Binance is around https://blog.bybit.com/en-us/insights/a-comparison-of-slippage-in-different-exchanges/, accessed on 5 October 2021, while the spread is around , with the maximum even being (see Figure 9).
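
To make the combined effect of the three factors concrete, the sketch below applies a fee, a spread, and slippage to a round trip of buying and later selling one unit. All parameter values in the usage note are hypothetical and are not the exchange figures referred to above; the half-spread convention is also an assumption.

```python
def execution_price(mid_price, side, spread=0.0, slippage=0.0):
    """Price actually paid (buy) or received (sell).

    spread and slippage are fractions of the mid price. Half the spread is
    paid on each side, and slippage is assumed to move against the trader.
    """
    if side not in ("buy", "sell"):
        raise ValueError("side must be 'buy' or 'sell'")
    sign = 1.0 if side == "buy" else -1.0
    return mid_price * (1.0 + sign * (spread / 2.0 + slippage))


def round_trip_return(entry_mid, exit_mid, fee=0.0, spread=0.0, slippage=0.0):
    """Net return of buying one unit at entry and selling it at exit."""
    paid = execution_price(entry_mid, "buy", spread, slippage) * (1.0 + fee)
    received = execution_price(exit_mid, "sell", spread, slippage) * (1.0 - fee)
    return received / paid - 1.0
```

For instance, `round_trip_return(100.0, 100.0, fee=0.001, spread=0.001, slippage=0.0005)` is negative even though the mid price did not move, which is why a backtest that omits any of the three factors tends to overstate profitability.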

12.2. Methodological Issues

- Inconsistency of data used: Almost all papers use different datasets, so it is hard to compare them. Even when the same assets are used (such as Bitcoin), the periods differ, and in general the assets themselves are significantly different.

- Different preprocessing techniques: Many papers preprocess the data differently and thus feed different inputs to the algorithms. Even if algorithms are the same, the results might be different due to the nature of the data used. One could argue that this is part of research, and we agree, but the question remains: how can we know if the increased performance of one algorithm is due to the nature of the algorithm or the data fed?

- Very few works use multiple markets (stock market, FX, cryptocurrency markets, gold, oil). It would be interesting to see how some algorithms perform on different types of markets, whether the hyperparameters for optimal performance differ, and, if so, how they differ. Basically, nobody answers the question: how does this algorithm perceive and perform on different types of markets?

- Very few works have code available online. In general, the code is proprietary and the results are hard to reproduce because implementation details are missing, such as the hyperparameters or the deep neural network architecture used.

- Many works mention how hard it is to train a DRL agent for good trading strategies and the sensitivity to hyperparameters that all DRL agents exhibit. This might also be due to the fact that they do not approach the issues systematically and build a common framework, such as, for example, automatic hyperparameter tuning using some form of meta-learning [85] or autoML [86]. This is an issue for DRL in general, but it is more pronounced in this application domain, where contributions are often minor in terms of solving more fundamental issues and are oriented instead towards increasing performance.

- There is no consistency among the type of environment used. There are various constraints of the environment, with some being more restrictive (not allowing short-selling for example) or simplifying, assuming that the buy operation happens with a fixed number of lots or shares. A few works allow short-selling; among our corpus, 20 mention short-selling, while about 5 actually allow it [61,75,87,88,89].

12.3. Limitations

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; Rusu, A.A.; Veness, J.; Bellemare, M.G.; Graves, A.; Riedmiller, M.; Fidjeland, A.K.; Ostrovski, G.; et al. Human-level control through deep reinforcement learning. Nature 2015, 518, 529–533.

- Sato, Y. Model-free reinforcement learning for financial portfolios: A brief survey. arXiv 2019, arXiv:1904.04973.

- Hu, Z.; Zhao, Y.; Khushi, M. A survey of forex and stock price prediction using deep learning. Appl. Syst. Innov. 2021, 4, 9.

- Fischer, T.G. Reinforcement Learning in Financial Markets-a Survey; Technical Report; Friedrich-Alexander University Erlangen-Nuremberg, Institute for Economics: Erlangen, Germany, 2018.

- Mosavi, A.; Faghan, Y.; Ghamisi, P.; Duan, P.; Ardabili, S.F.; Salwana, E.; Band, S.S. Comprehensive review of deep reinforcement learning methods and applications in economics. Mathematics 2020, 8, 1640.

- Meng, T.L.; Khushi, M. Reinforcement learning in financial markets. Data 2019, 4, 110.

- Nakamoto, S. Bitcoin: A peer-to-peer electronic cash system. Decentralized Bus. Rev. 2008, 4, 21260.

- Islam, M.R.; Nor, R.M.; Al-Shaikhli, I.F.; Mohammad, K.S. Cryptocurrency vs. Fiat Currency: Architecture, Algorithm, Cashflow & Ledger Technology on Emerging Economy: The Influential Facts of Cryptocurrency and Fiat Currency. In Proceedings of the 2018 International Conference on Information and Communication Technology for the Muslim World (ICT4M), Kuala Lumpur, Malaysia, 23–25 July 2018; pp. 69–73.

- Tan, S.K.; Chan, J.S.K.; Ng, K.H. On the speculative nature of cryptocurrencies: A study on Garman and Klass volatility measure. Financ. Res. Lett. 2020, 32, 101075.

- Wang, J.; Sun, T.; Liu, B.; Cao, Y.; Wang, D. Financial markets prediction with deep learning. In Proceedings of the 2018 17th IEEE International Conference on Machine Learning and Applications (ICMLA), Orlando, FL, USA, 17–20 December 2018; pp. 97–104.

- Song, Y.G.; Zhou, Y.L.; Han, R.J. Neural networks for stock price prediction. arXiv 2018, arXiv:1805.11317.

- Selvin, S.; Vinayakumar, R.; Gopalakrishnan, E.; Menon, V.K.; Soman, K. Stock price prediction using LSTM, RNN and CNN-sliding window model. In Proceedings of the 2017 International Conference on Advances in Computing, Communications and Informatics (ICACCI), Manipal, India, 13–16 September 2017; pp. 1643–1647.

- Henrique, B.M.; Sobreiro, V.A.; Kimura, H. Stock price prediction using support vector regression on daily and up to the minute prices. J. Financ. Data Sci. 2018, 4, 183–201.

- Vijh, M.; Chandola, D.; Tikkiwal, V.A.; Kumar, A. Stock closing price prediction using machine learning techniques. Procedia Comput. Sci. 2020, 167, 599–606.

- Rathan, K.; Sai, S.V.; Manikanta, T.S. Crypto-currency price prediction using decision tree and regression techniques. In Proceedings of the 2019 3rd International Conference on Trends in Electronics and Informatics (ICOEI), Tirunelveli, India, 23–25 April 2019; pp. 190–194.

- Ke, N.R.; Singh, A.; Touati, A.; Goyal, A.; Bengio, Y.; Parikh, D.; Batra, D. Modeling the long term future in model-based reinforcement learning. In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018.

- Moerland, T.M.; Broekens, J.; Jonker, C.M. Model-based reinforcement learning: A survey. arXiv 2020, arXiv:2006.16712.

- Pant, D.R.; Neupane, P.; Poudel, A.; Pokhrel, A.K.; Lama, B.K. Recurrent neural network based bitcoin price prediction by twitter sentiment analysis. In Proceedings of the 2018 IEEE 3rd International Conference on Computing, Communication and Security (ICCCS), Kathmandu, Nepal, 25–27 October 2018; pp. 128–132.

- Vo, A.D.; Nguyen, Q.P.; Ock, C.Y. Sentiment Analysis of News for Effective Cryptocurrency Price Prediction. Int. J. Knowl. Eng. 2019, 5, 47–52.

- Clements, W.R.; Van Delft, B.; Robaglia, B.M.; Slaoui, R.B.; Toth, S. Estimating risk and uncertainty in deep reinforcement learning. arXiv 2019, arXiv:1905.09638.

- Sebastião, H.; Godinho, P. Forecasting and trading cryptocurrencies with machine learning under changing market conditions. Financ. Innov. 2021, 7, 1–30.

- Suri, K.; Saurav, S. Attentive Hierarchical Reinforcement Learning for Stock Order Executions. Available online: https://github.com/karush17/Hierarchical-Attention-Reinforcement-Learning (accessed on 5 October 2021).

- Yu, P.; Lee, J.S.; Kulyatin, I.; Shi, Z.; Dasgupta, S. Model-based deep reinforcement learning for dynamic portfolio optimization. arXiv 2019, arXiv:1901.08740.

- Lucarelli, G.; Borrotti, M. A deep Q-learning portfolio management framework for the cryptocurrency market. Neural Comput. Appl. 2020, 32, 17229–17244.

- Wang, R.; Wei, H.; An, B.; Feng, Z.; Yao, J. Commission Fee is not Enough: A Hierarchical Reinforced Framework for Portfolio Management. arXiv 2020, arXiv:2012.12620.

- Gao, Y.; Gao, Z.; Hu, Y.; Song, S.; Jiang, Z.; Su, J. A Framework of Hierarchical Deep Q-Network for Portfolio Management. In Proceedings of the ICAART (2), Online Streaming, 4–6 February 2021; pp. 132–140.

- Jiang, Z.; Xu, D.; Liang, J. A deep reinforcement learning framework for the financial portfolio management problem. arXiv 2017, arXiv:1706.10059.

- Shi, S.; Li, J.; Li, G.; Pan, P. A Multi-Scale Temporal Feature Aggregation Convolutional Neural Network for Portfolio Management. In Proceedings of the 28th ACM International Conference on Information and Knowledge Management, Beijing, China, 3–7 November 2019; pp. 1613–1622.

- Itoh, Y.; Adachi, M. Chaotic time series prediction by combining echo-state networks and radial basis function networks. In Proceedings of the 2010 IEEE International Workshop on Machine Learning for Signal Processing, Kittila, Finland, 29 August–1 September 2010; pp. 238–243.

- Dubois, P.; Gomez, T.; Planckaert, L.; Perret, L. Data-driven predictions of the Lorenz system. Phys. D 2020, 408, 132495.

- Mehtab, S.; Sen, J. Stock price prediction using convolutional neural networks on a multivariate timeseries. arXiv 2020, arXiv:2001.09769.

- Briola, A.; Turiel, J.; Marcaccioli, R.; Aste, T. Deep Reinforcement Learning for Active High Frequency Trading. arXiv 2021, arXiv:2101.07107.

- Boukas, I.; Ernst, D.; Théate, T.; Bolland, A.; Huynen, A.; Buchwald, M.; Wynants, C.; Cornélusse, B. A deep reinforcement learning framework for continuous intraday market bidding. arXiv 2020, arXiv:2004.05940.

- Conegundes, L.; Pereira, A.C.M. Beating the Stock Market with a Deep Reinforcement Learning Day Trading System. In Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN), Glasgow, UK, 19–24 July 2020; pp. 1–8.

- Sadighian, J. Extending Deep Reinforcement Learning Frameworks in Cryptocurrency Market Making. arXiv 2020, arXiv:2004.06985.

- Hu, Y.; Liu, K.; Zhang, X.; Su, L.; Ngai, E.; Liu, M. Application of evolutionary computation for rule discovery in stock algorithmic trading: A literature review. Appl. Soft Comput. 2015, 36, 534–551.

- Taghian, M.; Asadi, A.; Safabakhsh, R. Learning Financial Asset-Specific Trading Rules via Deep Reinforcement Learning. arXiv 2020, arXiv:2010.14194.

- Bisht, K.; Kumar, A. Deep Reinforcement Learning based Multi-Objective Systems for Financial Trading. In Proceedings of the 2020 5th IEEE International Conference on Recent Advances and Innovations in Engineering (ICRAIE), Online, 1–3 December 2020; pp. 1–6.

- Théate, T.; Ernst, D. An application of deep reinforcement learning to algorithmic trading. Expert Syst. Appl. 2021, 173, 114632.

- Bu, S.J.; Cho, S.B. Learning optimal Q-function using deep Boltzmann machine for reliable trading of cryptocurrency. In Proceedings of the International Conference on Intelligent Data Engineering and Automated Learning, Madrid, Spain, 21–23 November 2018; pp. 468–480.

- Cover, T.M. Universal portfolios. In The Kelly Capital Growth Investment Criterion: Theory and Practice; World Scientific: London, UK, 2011; pp. 181–209.

- Li, B.; Hoi, S.C. On-line portfolio selection with moving average reversion. arXiv 2012, arXiv:1206.4626.

- Moon, S.H.; Kim, Y.H.; Moon, B.R. Empirical investigation of state-of-the-art mean reversion strategies for equity markets. arXiv 2019, arXiv:1909.04327.

- Sharpe, W.F. Mutual fund performance. J. Bus. 1966, 39, 119–138.

- Moody, J.; Wu, L. Optimization of trading systems and portfolios. In Proceedings of the IEEE/IAFE 1997 Computational Intelligence for Financial Engineering (CIFEr), New York, NY, USA, 24–25 March 1997; pp. 300–307.

- Gran, P.K.; Holm, A.J.K.; Søgård, S.G. A Deep Reinforcement Learning Approach to Stock Trading. Master’s Thesis, NTNU, Trondheim, Norway, 2019.

- Yang, H.; Liu, X.Y.; Zhong, S.; Walid, A. Deep reinforcement learning for automated stock trading: An ensemble strategy. SSRN 2020.

- Magdon-Ismail, M.; Atiya, A.F. An analysis of the maximum drawdown risk measure. Citeseer 2015.

- Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction; MIT Press: Cambridge, MA, USA, 1998; Volume 1.

- Li, Y. Deep reinforcement learning: An overview. arXiv 2017, arXiv:1701.07274.

- Mousavi, S.S.; Schukat, M.; Howley, E. Deep reinforcement learning: An overview. In Proceedings of the SAI Intelligent Systems Conference, London, UK, 21–22 September 2016; pp. 426–440.

- Arulkumaran, K.; Deisenroth, M.P.; Brundage, M.; Bharath, A.A. Deep reinforcement learning: A brief survey. IEEE Signal Process. Mag. 2017, 34, 26–38.

- Narasimhan, K.; Kulkarni, T.; Barzilay, R. Language understanding for text-based games using deep reinforcement learning. arXiv 2015, arXiv:1506.08941.

- Foerster, J.N.; Assael, Y.M.; de Freitas, N.; Whiteson, S. Learning to communicate to solve riddles with deep distributed recurrent q-networks. arXiv 2016, arXiv:1602.02672.

- Heravi, J.R. Learning Representations in Reinforcement Learning; University of California: Merced, CA, USA, 2019.

- Stooke, A.; Lee, K.; Abbeel, P.; Laskin, M. Decoupling representation learning from reinforcement learning. In Proceedings of the International Conference on Machine Learning, Virtual, 18–24 July 2021; pp. 9870–9879.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105.

- Grefenstette, E.; Blunsom, P.; De Freitas, N.; Hermann, K.M. A deep architecture for semantic parsing. arXiv 2014, arXiv:1404.7296.

- Ren, H.; Xu, B.; Wang, Y.; Yi, C.; Huang, C.; Kou, X.; Xing, T.; Yang, M.; Tong, J.; Zhang, Q. Time-series anomaly detection service at Microsoft. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 3009–3017.

- Chen, Y.; Kang, Y.; Chen, Y.; Wang, Z. Probabilistic forecasting with temporal convolutional neural network. Neurocomputing 2020, 399, 491–501.

- Yashaswi, K. Deep Reinforcement Learning for Portfolio Optimization using Latent Feature State Space (LFSS) Module. arXiv 2021, arXiv:2102.06233.

- Technical Indicators. Available online: https://www.tradingtechnologies.com/xtrader-help/x-study/technical-indicator-definitions/list-of-technical-indicators/ (accessed on 21 June 2021).

- Wu, X.; Chen, H.; Wang, J.; Troiano, L.; Loia, V.; Fujita, H. Adaptive stock trading strategies with deep reinforcement learning methods. Inf. Sci. 2020, 538, 142–158.

- Chakraborty, S. Capturing financial markets to apply deep reinforcement learning. arXiv 2019, arXiv:1907.04373.

- Jia, W.; Chen, W.; Xiong, L.; Hongyong, S. Quantitative trading on stock market based on deep reinforcement learning. In Proceedings of the 2019 International Joint Conference on Neural Networks (IJCNN), Budapest, Hungary, 14–19 July 2019; pp. 1–8.

- Rundo, F. Deep LSTM with reinforcement learning layer for financial trend prediction in FX high frequency trading systems. Appl. Sci. 2019, 9, 4460.

- Huotari, T.; Savolainen, J.; Collan, M. Deep reinforcement learning agent for S&P 500 stock selection. Axioms 2020, 9, 130.

- Tsantekidis, A.; Passalis, N.; Tefas, A. Diversity-driven knowledge distillation for financial trading using Deep Reinforcement Learning. Neural Netw. 2021, 140, 193–202.

- Lucarelli, G.; Borrotti, M. A deep reinforcement learning approach for automated cryptocurrency trading. In Proceedings of the IFIP International Conference on Artificial Intelligence Applications and Innovations, Crete, Greece, 24–26 May 2019; pp. 247–258.

- Wu, M.E.; Syu, J.H.; Lin, J.C.W.; Ho, J.M. Portfolio management system in equity market neutral using reinforcement learning. Appl. Intell. 2021, 51, 8119–8131.

- Weng, L.; Sun, X.; Xia, M.; Liu, J.; Xu, Y. Portfolio trading system of digital currencies: A deep reinforcement learning with multidimensional attention gating mechanism. Neurocomputing 2020, 402, 171–182.

- Suri, K.; Shi, X.Q.; Plataniotis, K.; Lawryshyn, Y. TradeR: Practical Deep Hierarchical Reinforcement Learning for Trade Execution. arXiv 2021, arXiv:2104.00620.

- Wei, H.; Wang, Y.; Mangu, L.; Decker, K. Model-based reinforcement learning for predictions and control for limit order books. arXiv 2019, arXiv:1910.03743.

- Leem, J.; Kim, H.Y. Action-specialized expert ensemble trading system with extended discrete action space using deep reinforcement learning. PLoS ONE 2020, 15, e0236178.

- Jeong, G.; Kim, H.Y. Improving financial trading decisions using deep Q-learning: Predicting the number of shares, action strategies, and transfer learning. Expert Syst. Appl. 2019, 117, 125–138.

- Lei, K.; Zhang, B.; Li, Y.; Yang, M.; Shen, Y. Time-driven feature-aware jointly deep reinforcement learning for financial signal representation and algorithmic trading. Expert Syst. Appl. 2020, 140, 112872.

- Hirchoua, B.; Ouhbi, B.; Frikh, B. Deep reinforcement learning based trading agents: Risk curiosity driven learning for financial rules-based policy. Expert Syst. Appl. 2021, 170, 114553.

- Deisenroth, M.; Rasmussen, C.E. PILCO: A model-based and data-efficient approach to policy search. In Proceedings of the 28th International Conference on Machine Learning (ICML-11), Citeseer, Bellevue, WA, USA, 28 June–2 July 2011; pp. 465–472.

- Abdolmaleki, A.; Lioutikov, R.; Peters, J.R.; Lau, N.; Paulo Reis, L.; Neumann, G. Model-based relative entropy stochastic search. Adv. Neural Inf. Process. Syst. 2015, 28, 3537–3545.

- Levine, S.; Koltun, V. Guided policy search. In Proceedings of the International Conference on Machine Learning, Atlanta, GA, USA, 16–21 June 2013; pp. 1–9.

- Littman, M.L. Reinforcement learning improves behaviour from evaluative feedback. Nature 2015, 521, 445–451.

- Hinton, G.E.; Zemel, R.S. Autoencoders, minimum description length, and Helmholtz free energy. Adv. Neural Inf. Process. Syst. 1994, 6, 3–10.

- Lillicrap, T.P.; Hunt, J.J.; Pritzel, A.; Heess, N.; Erez, T.; Tassa, Y.; Silver, D.; Wierstra, D. Continuous control with deep reinforcement learning. arXiv 2015, arXiv:1509.02971.

- Jaderberg, M.; Mnih, V.; Czarnecki, W.M.; Schaul, T.; Leibo, J.Z.; Silver, D.; Kavukcuoglu, K. Reinforcement learning with unsupervised auxiliary tasks. arXiv 2016, arXiv:1611.05397.

- Xu, Z.; van Hasselt, H.; Silver, D. Meta-gradient reinforcement learning. arXiv 2018, arXiv:1805.09801.

- He, X.; Zhao, K.; Chu, X. AutoML: A Survey of the State-of-the-Art. Knowl.-Based Syst. 2021, 212, 106622.

- Zhang, Z. Hierarchical Modelling for Financial Data. Ph.D. Thesis, University of Oxford, Oxford, UK, 2020.

- Filos, A. Reinforcement Learning for Portfolio Management. Master’s Thesis, Imperial College London, London, UK, 2019.

- De Quinones, P.C.F.; Perez-Muelas, V.L.; Mari, J.M. Reinforcement Learning in Stock Market. Master’s Thesis, University of Valencia, Valencia, Spain.

| Article | Data | State Space | Action Space | Reward | Performance |
|---|---|---|---|---|---|
| Deep Reinforcement Learning Agent for S&P 500 stock selection [67] | 28 S&P500 5283 days for 415 stocks | total return, closing price, volumes, quarterly earnings, the out-paid dividends, the declaration dates for dividends, the publication dates for the quarterly reports (d, n, f), where d is the length of the observation in days, n is the number of stocks in the environment, f is the number of features for a single stock | Continuous 20 dims | Differential Sharpe | 1255 days Total return: 328% Sharpe = 0.91 |
| Diversity-driven knowledge distillation for financial trading using Deep Reinforcement Learning [68] | 28 FX, 1 h | normalized OHLCV, current open position as one-hot | Discrete (exit, buy, sell) | PnL | 2 years PnL ≈ 0.35 |
| A Deep Reinforcement Learning Approach for Automated Cryptocurrency Trading [69] | Bitcoin, 3200 h | time stamp, OHLCV, USD volume weighted Bitcoin price | Discrete (buy, hold, sell) | Profit Sharpe | 800 h return ≈ 3% |
| An application of deep reinforcement learning to algorithmic trading [39] | 30 stocks daily, 5 years | current trading position, OHLCV | Discrete (buy, sell) | Normalized price change | Sharpe = 0.4 AAPL annual return = 0.32 |
| Adaptive Stock Trading Strategies with Deep Reinforcement Learning Methods [63] | 15 stocks daily, 8 years | OHLCV + Technical indicators (MA, EMA, MACD, BIAS, VR, and OBV) | Discrete (long, neutral, short) | Sortino ratio | 3 years −6% to 200% |
| Portfolio management system in equity market neutral using RL [70] | 50 stocks daily, 2 years | normalized OHLC | Continuous 50 dims | Sharpe ratio | 2 years profit ≈ 50% |
| Deep Reinforcement Learning for Automated Stock Trading: An Ensemble Strategy [47] | 30 stocks daily, 7 years | balance, shares, price technical indicators (MACD, RSI, CCI, ADX) | Discrete | Portfolio value change Turbulence | Sharpe = 1.3 Annual return = 13% |
| Portfolio trading system of digital currencies: A deep reinforcement learning with multidimensional attention gating mechanism [71] | 20 assets 30 min, 4 years | normalized HLC, shares | Continuous 20 dims | Log cumulative return | 2 months Return = 22x |
| A framework of hierarchical deep q-network for portfolio management [26] | 4 Chinese stocks 2 days, 2 years | OHLC, shares | Discretised × 4 | Price change | 1 year 44% |
| Attentive hierarchical reinforcement learning for stock order executions [22] | 6 stocks 2 min, 2 months | OHLCV | Discrete (buy, sell) | Balance | 1 month unclear% |
| TradeR: Practical deep hierarchical reinforcement learning for trade execution [72] | 35 stocks 1 min, 1 year | OHLCV | Discrete (buy, hold, sell) and Continuous | Auxiliary: Surprise minimisation | unclear |
| Commission fee is not enough: A hierarchical reinforced framework for portfolio management [25] | 46 stocks daily, 14 years | LOB, OHLCV | Discrete (buy, sell) and Continuous | Price change, Cumulative trading costs | Wealth ≈ 200% |
| Model-based reinforcement learning for predictions and control for limit order books [73] | one stock 17 s, 61 days | LOB, trade prints | Discrete 21 actions | Predicted price change | 19 days cumulative PnL = 20 |
| Model-based deep reinforcement learning for dynamic portfolio optimization [23] | 7 stocks hourly, 14 years augmented | OHLC predicted next HLC market perf. index | Continuous | Predicted price change | 2 years annual return = 8% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Millea, A. Deep Reinforcement Learning for Trading—A Critical Survey. Data 2021, 6, 119. https://doi.org/10.3390/data6110119