Multi-Layout Invoice Document Dataset (MIDD): A Dataset for Named Entity Recognition

Abstract

:1. Summary

- Publicly available datasets consist of poor-quality, blurred, skewed, and low-resolution document images, leading to poor text extraction [6].

- Publicly available datasets are obsolete, consisting of the old or obsolete formats of documents [7].

- Publicly available datasets are domain-specific and task-specific. For example, the dataset proposed in [8,9] is used for the healthcare domain, and the dataset proposed in [10,11] is used for the legal contract analysis domain. In addition, they are used for specific tasks such as metadata extraction from scientific articles [12] or patient detail extraction from the clinical dataset [13].

1.1. Research Purpose/Goal of Multi-Layout Invoice Document Dataset (MIDD)

- To provide the annotated and varied invoice layout documents in IOB format to identify and extract named entities (named entity recognition) from the invoice documents to the researchers working in this domain. Obtaining a high-quality and sufficient annotated corpus for automated information extraction from unstructured documents is the biggest challenge researchers face.

- To overcome the limitations of rule-based and template-based named entity extraction from unstructured documents traditionally used so far in information extraction approaches. Template-free processing is the only key to processing, and managing a huge pile of unstructured documents in the recent digitized era.

- To provide varied invoice layouts so that researchers can develop a generalized AI-based model that will train on various unstructured invoice layouts. Obtained structured output can later be utilized for integrating into information management application of the organization and used for the decision-making process.

1.2. Related Datasets

2. Data Description

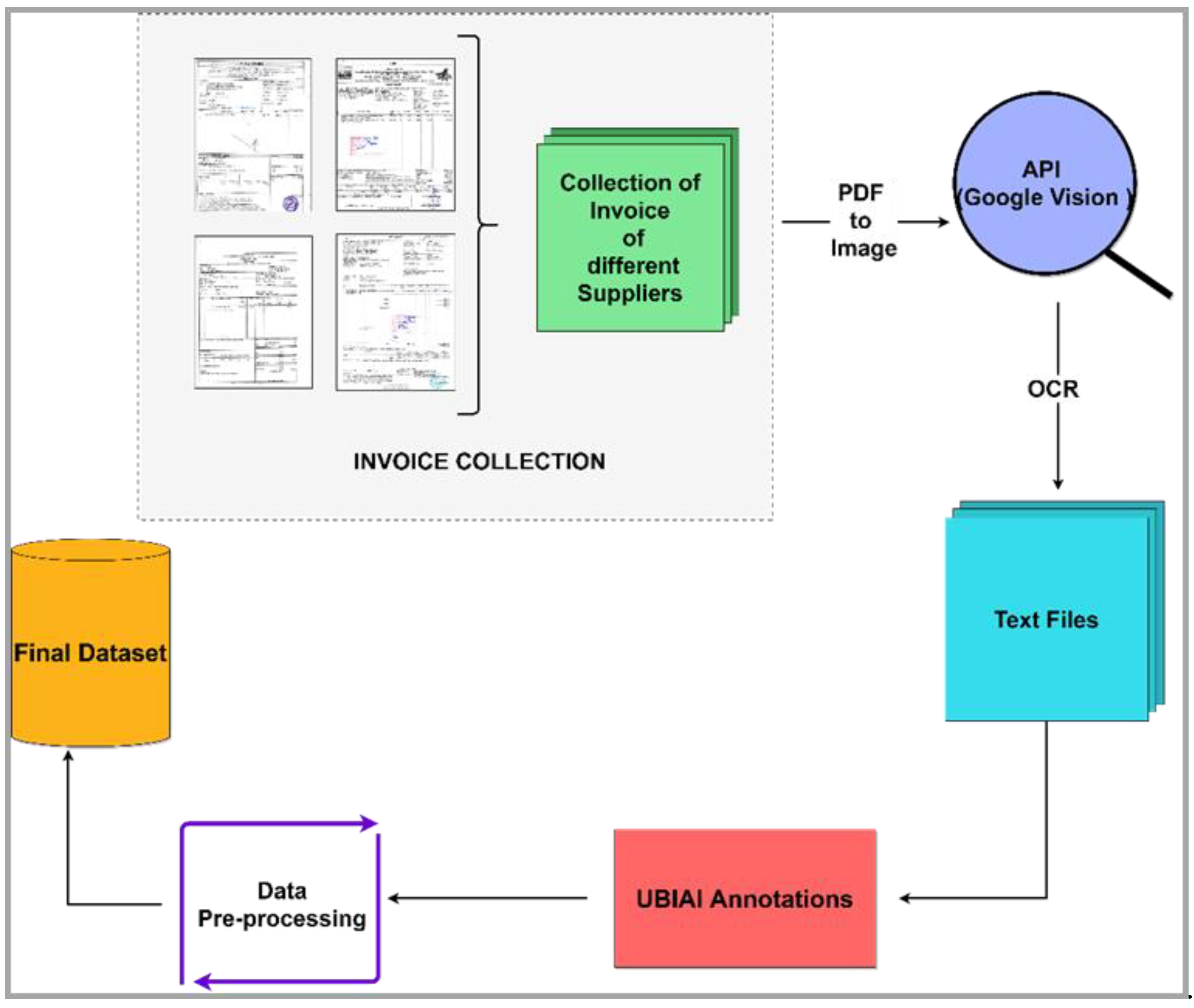

3. Methods

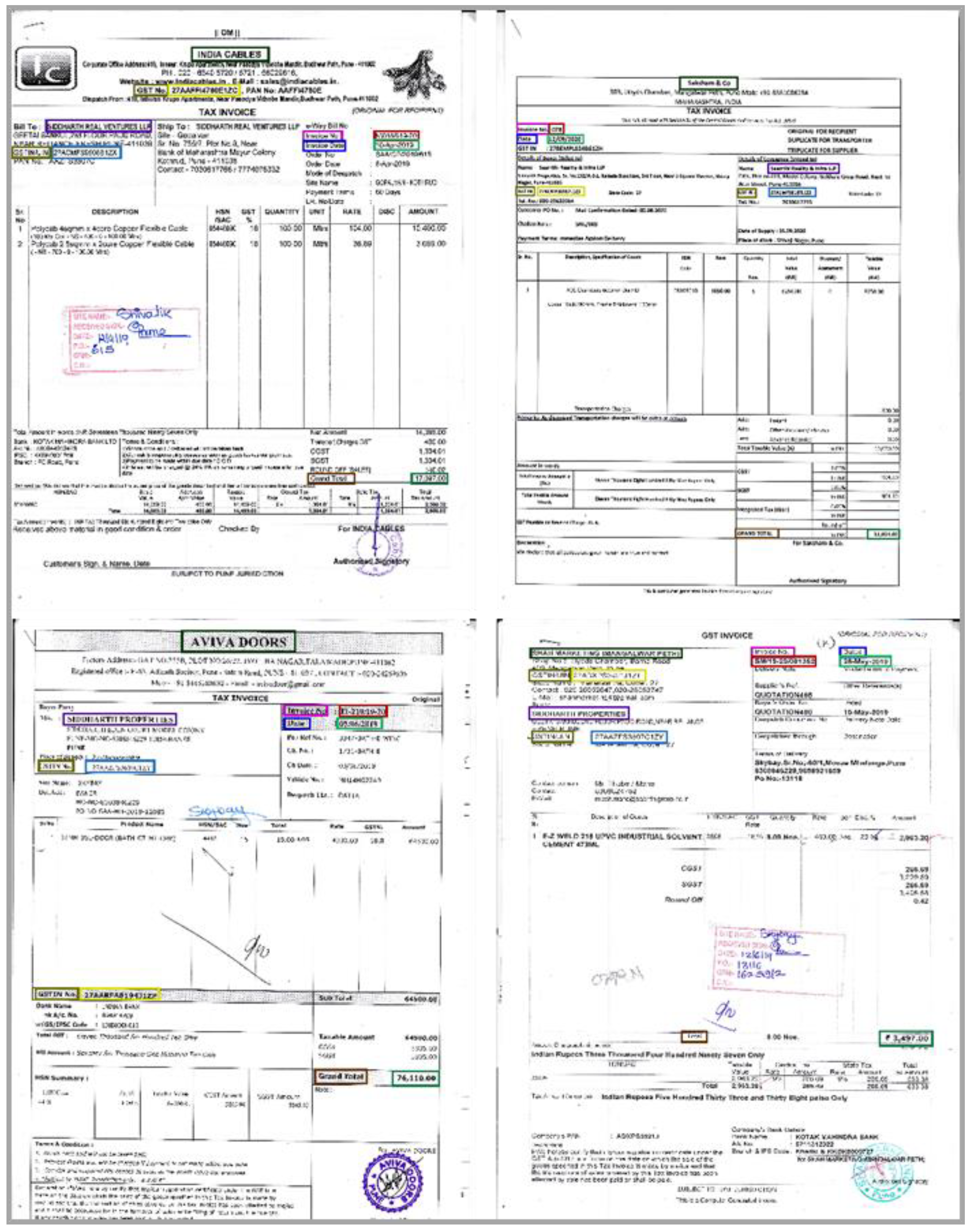

3.1. Data Acquisition

3.2. Data (Named Entities) Annotation

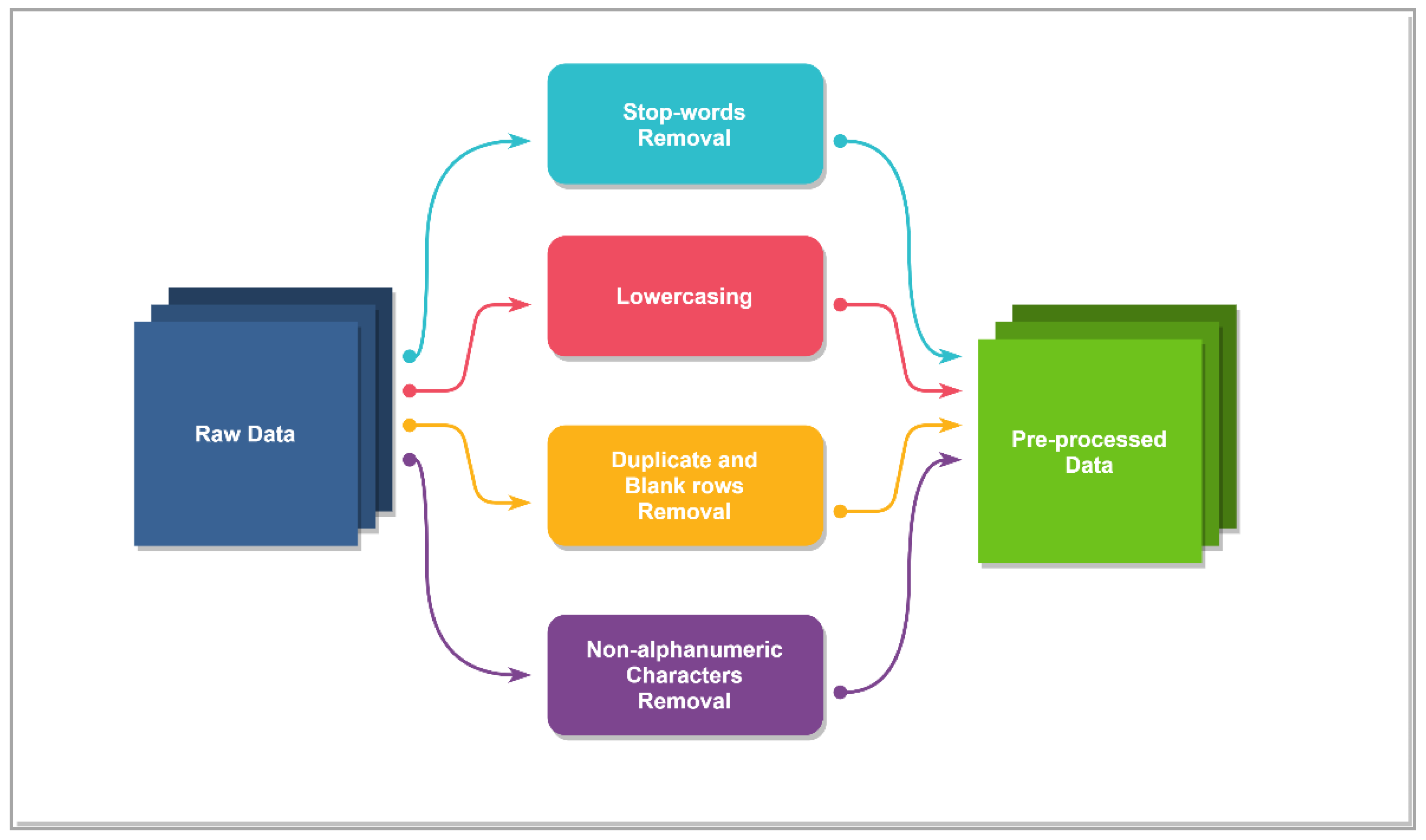

3.3. Data Pre-Processing

3.4. Practical Applications/Use-Cases of MIDD

- The proposed MIDD dataset has many practical implications for extracting named entities as a structured output from the huge pile of unstructured invoice documents. In addition, end-to-end automation of invoice information extraction workflow helps the accounting department in every organization for quick invoice processing and to verify accounts payable and receivable.

- Automated key field extraction from financial documents such as invoices impacts the performance of the business by customer onboarding and verification processes. It can reduce significantly the cost employed for manual data entry and verification of thousands of daily received invoices.

4. Conclusions

5. Future Work

- Increase the data size in MIDD.We aim to increase the number of supplier invoice layouts to achieve more data diversity.

- Automatic data annotation.Automatic data annotations make the researcher’s task simpler and quicker. Therefore, we aim to find a way to annotate invoice documents automatically.

- Use of pre-trained neural networks.Pre-trained Neural Networks such as BERT and their variants can be utilized on MIDD to evaluate its performance.

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Data Citation

Acknowledgments

Conflicts of Interest

References

- 30 Eye-Opening Big Data Statistics for 2020: Patterns Are Everywhere. Available online: https://kommandotech.com/statistics/big-data-statistics/ (accessed on 5 December 2020).

- Philosophy, L.; Ahirrao, S.; Baviskar, D. A Bibliometric Survey on Cognitive Document Processing. Libr. Philos. Pract. 2020, 1–31. [Google Scholar]

- Baviskar, D.; Ahirrao, S.; Potdar, V.; Kotecha, K. Efficient Automated Processing of the Unstructured Documents using Artificial Intelligence: A Systematic Literature Review and Future Directions. IEEE Access 2021, 9, 72894–72936. [Google Scholar] [CrossRef]

- Adnan, K.; Akbar, R. Limitations of information extraction methods and techniques for heterogeneous unstructured big data. Int. J. Eng. Bus. Manag. 2019, 11, 1–23. [Google Scholar] [CrossRef]

- Adnan, K.; Akbar, R. An analytical study of information extraction from unstructured and multidimensional big data. J. Big Data 2019, 6, 1–38. [Google Scholar] [CrossRef]

- Palm, R.B.; Laws, F.; Winther, O. Attend, copy, parse end-to-end information extraction from documents. In Proceedings of the International Conference on Document Analysis and Recognition (ICDAR), Sydney, Australia, 20–25 September 2019; pp. 329–336. [Google Scholar]

- Reul, C.; Christ, D.; Hartelt, A.; Balbach, N.; Wehner, M.; Springmann, U.; Wick, C.; Grundig, C.; Büttner, A.; Puppe, F. OCR4all-An open-source tool providing a (semi-)automatic OCR workflow for historical printings. Appl. Sci. 2019, 9, 4853. [Google Scholar] [CrossRef]

- Abbas, A.; Afzal, M.; Hussain, J.; Lee, S. Meaningful Information Extraction from Unstructured Clinical Documents. Available online: https://www.researchgate.net/publication/336797539_Meaningful_Information_Extraction_from_Unstructured_Clinical_Documents (accessed on 17 September 2020).

- Steinkamp, J.M.; Bala, W.; Sharma, A.; Kantrowitz, J.J. Task definition, annotated dataset, and supervised natural language processing models for symptom extraction from unstructured clinical notes. J. Biomed. Inform. 2020, 102, 103354. [Google Scholar] [CrossRef] [PubMed]

- Joshi, S.; Shah, P.; Pandey, A.K. Location identification, extraction and disambiguation using machine learning in legal contracts. In Proceedings of the 2018 4th International Conference on Computing Communication and Automation (ICCCA), Greater Noida, India, 14–15 December 2018; pp. 1–5. [Google Scholar] [CrossRef]

- Shah, P.; Joshi, S.; Pandey, A.K. Legal clause extraction from contract using machine learning with heuristics improvement. In Proceedings of the 2018 4th International Conference on Computing Communication and Automation (ICCCA), Greater Noida, India, 14–15 December 2018; pp. 1–3. [Google Scholar] [CrossRef]

- Tkaczyk, D.; Szostek, P.; Bolikowski, L. GROTOAP2—The methodology of creating a large ground truth dataset of scientific articles. D-Lib Mag. 2014, 20, 11–12. [Google Scholar] [CrossRef]

- Yang, J.; Liu, Y.; Qian, M.; Guan, C.; Yuan, X. Information extraction from electronic medical records using multitask recurrent neural network with contextual word embedding. Appl. Sci. 2019, 9, 3658. [Google Scholar] [CrossRef]

- Eberendu, A.C. Unstructured Data: An overview of the data of Big Data. Int. J. Comput. Trends Technol. 2016, 38, 46–50. [Google Scholar] [CrossRef]

- Davis, B.; Morse, B.; Cohen, S.; Price, B.; Tensmeyer, C. Deep visual template-free form parsing. In Proceedings of the 2019 International Conference on Document Analysis and Recognition (ICDAR), Sydney, NSW, Australia, 20–25 September 2019; pp. 134–141. [Google Scholar] [CrossRef]

- Zhao, X.; Niu, E.; Wu, Z.; Wang, X. Cutie: Learning to understand documents with convolutional universal text information extractor. arXiv 2019, arXiv:1903.12363. [Google Scholar]

- Patel, S.; Bhatt, D. Abstractive information extraction from scanned invoices (AIESI) using end-to-end sequential approach. arXiv 2020, arXiv:2009.05728. [Google Scholar]

- Huang, Z.; Chen, K.; He, J.; Bai, X.; Karatzas, D.; Lu, S.; Jawahar, C.V.V. ICDAR2019 competition on scanned receipt OCR and information extraction. In Proceedings of the 2019 International Conference on Document Analysis and Recognition (ICDAR), Sydney, Australia, 20–25 September 2019; pp. 1516–1520. [Google Scholar]

- Kerroumi, M.; Sayem, O.; Shabou, A. VisualWordGrid: Information extraction from scanned documents using a multimodal approach. arXiv 2020, arXiv:2010.02358. [Google Scholar]

- Palm, R.B.; Winther, O.; Laws, F. CloudScan—A Configuration—Free Invoice Analysis System Using Recurrent Neural Networks. In Proceedings of the 2017 14th IAPR International Conference on Document Analysis and Recognition (ICDAR); Kyoto, Japan, 9–15 November 2017, Volume 1, pp. 406–413.

- Liu, W.; Zhang, Y.; Wan, B. Unstructured Document Recognition on Business Invoice. 2016. Available online: http://cs229.stanford.edu/proj2016/report/LiuWanZhang-UnstructuredDocumentRecognitionOnBusinessInvoice-report.pdf (accessed on 18 November 2020).

- Baviskar, D.; Ahirrao, S.; Kotecha, K. Multi-layout Unstructured Invoice Documents Dataset: A dataset for Template-free Invoice Processing and its Evaluation using AI Approaches. IEEE Access 2021, 1. [Google Scholar] [CrossRef]

| Subject Area | Natural Language Processing, Artificial Intelligence |

|---|---|

| More precise area | Named Entity Recognition |

| Type of files collected | Scanned invoice PDFs |

| Method of data acquisition | Scanner |

| Provided file format in our dataset | IOB and manually annotated |

| Source of data acquisition | Different supplier organizations |

| Number of files | 630 |

| Number of layouts | 4 |

| Layouts | Number of PDFs | Size of Invoices (in MB) | Labels in Dataset |

|---|---|---|---|

| Layout 1 | 196 | 164 | Invoice Number: INV_NO Invoice Date: INV_DT Buyer Name: BUY_N Supplier Name: SUPP_N Buyer GST Number: BUY_G Supplier GST Number: SUPP_G Grand Total Amount: GT_AMT |

| Layout 2 | 29 | 25.8 | |

| Layout 3 | 14 | 23.6 | |

| Layout 4 | 391 | 353 | |

| Total | 630 | 566.4 |

| Period of invoice collection | From March 2015 to October 2020 for all supplier organizations |

| Type of supplier products/items in invoices | Building construction material such as doors, cement, glass |

| Number of words per invoice page | 350 words per page on average. (One .csv file for one invoice page) |

| Number of named entities labeled for each invoice | 11 labels in each invoice, including entity heading name and actual value of that entity. (For example, INV_DL for Invoice Date Label, INV_DT for actual Invoice Date value) |

| Size of one invoice PDF of any layout | 500 KB minimum 1.5 MB average 3 MB maximum |

| Image quality of invoice PDF | 300 dpi |

| Software used during MIDD construction | Python-3 Jupiter Notebook, Google colab, wand library (version 0.6.6) for converting invoice PDF to image Google vision OCR (version 2.3.2) for text extraction from image UBIAI Framework for NER annotations |

| Hardware used during MIDD construction | HP Laserjet M1005 MFP Printer and Scanner and HP Pavilion Laptop AMD RYZEN NVIDIA GEFORCE GTX card |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Baviskar, D.; Ahirrao, S.; Kotecha, K. Multi-Layout Invoice Document Dataset (MIDD): A Dataset for Named Entity Recognition. Data 2021, 6, 78. https://doi.org/10.3390/data6070078

Baviskar D, Ahirrao S, Kotecha K. Multi-Layout Invoice Document Dataset (MIDD): A Dataset for Named Entity Recognition. Data. 2021; 6(7):78. https://doi.org/10.3390/data6070078

Chicago/Turabian StyleBaviskar, Dipali, Swati Ahirrao, and Ketan Kotecha. 2021. "Multi-Layout Invoice Document Dataset (MIDD): A Dataset for Named Entity Recognition" Data 6, no. 7: 78. https://doi.org/10.3390/data6070078

APA StyleBaviskar, D., Ahirrao, S., & Kotecha, K. (2021). Multi-Layout Invoice Document Dataset (MIDD): A Dataset for Named Entity Recognition. Data, 6(7), 78. https://doi.org/10.3390/data6070078