DriverSVT: Smartphone-Measured Vehicle Telemetry Data for Driver State Identification

Abstract

1. Introduction

- We introduced a diverse dataset collected using different sensors in real-time driving scenarios for analyzing driver behavior.

- We applied an unsupervised method (K-means clustering) to label the data, and then analyzed the results to identify the most frequent dangerous situations in each scenario.
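The labeling step can be illustrated with a minimal sketch of standard K-means (Lloyd's algorithm) in plain Python. It assumes each sample has already been reduced to a numeric feature vector (the toy `(speed, g-force)` pairs below are made up) and that features are on comparable scales. The function name `kmeans`, the seeded random initialization, and the iteration cap are illustrative choices, not the authors' pipeline.

```python
import math
import random
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def _nearest(point: Sequence[float], centroids: Sequence[Sequence[float]]) -> int:
    """Return the index of the centroid closest to `point` (Euclidean distance)."""
    return min(range(len(centroids)), key=lambda j: math.dist(point, centroids[j]))


def kmeans(points: List[Point], k: int, max_iter: int = 100, seed: int = 0) -> Tuple[List[int], List[Point]]:
    """Cluster `points` into `k` groups and return (labels, centroids).

    Hypothetical helper for illustration: the initial centroids are k samples
    drawn with a seeded RNG so that runs are reproducible.
    """
    rng = random.Random(seed)
    centroids: List[Point] = rng.sample(points, k)
    labels = [0] * len(points)
    for _ in range(max_iter):
        # Assignment step: label every sample with its nearest centroid.
        new_labels = [_nearest(p, centroids) for p in points]
        # Update step: move each centroid to the mean of its assigned samples.
        new_centroids: List[Point] = []
        for j in range(k):
            members = [p for p, lab in zip(points, new_labels) if lab == j]
            if members:
                new_centroids.append(tuple(sum(c) / len(members) for c in zip(*members)))
            else:
                new_centroids.append(centroids[j])  # leave an empty cluster's centroid in place
        converged = new_labels == labels and new_centroids == centroids
        labels, centroids = new_labels, new_centroids
        if converged:
            break
    return labels, centroids


# Toy usage: two well-separated groups of (speed km/h, g-force) samples.
samples = [(10.0, 0.1), (12.0, 0.2), (11.0, 0.0), (100.0, 0.3), (105.0, 0.2), (98.0, 0.4)]
labels, centroids = kmeans(samples, k=2)
print(labels, centroids)
```

Cluster indices are arbitrary, so only the grouping is meaningful. For millions of samples, a vectorized implementation such as scikit-learn's `KMeans` would be the practical choice, and standardizing the features first keeps large-valued columns such as speed from dominating the distance.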

2. Related Work

3. Dataset

3.1. Collection Methodology

3.2. Data Description

3.3. Data Exploration

4. Data Evaluation

5. Discussion

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Column Name | Description | Notation/Unit |

|---|---|---|

| datetime | Time the entry was recorded | Unix timestamp (ms) |

| datetimestart | Driving trip starting time | Unix timestamp (ms) |

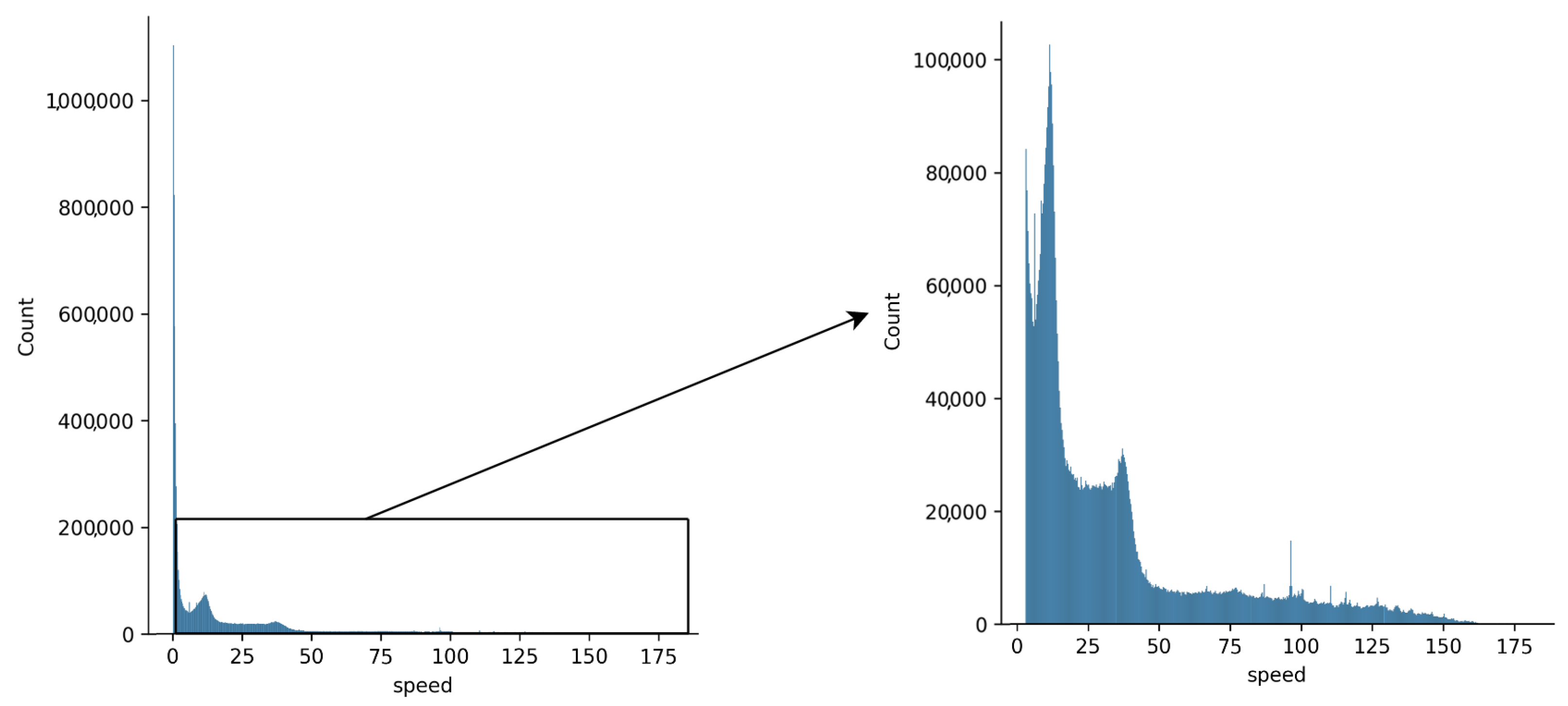

| speed | Vehicle speed | km/h |

| acc_X,acc_Y,acc_Z | Linear acceleration on X, Y, Z axes, respectively | m/ |

| lightlevel | Light level | lux |

| euleranglerotatephone | The Euler angles of the used phone | degrees |

| perclos | Percentage of time when the driver’s eyes were closed | - |

| userid | The id of the driver | - |

| accelerometer_data_raw | Raw data from the accelerometer | m/s |

| gyroscope_data_raw | Raw data from the gyroscope | degree/s |

| magnetometer_data_raw | Raw data from the magnetometer | Tesla |

| deviceinfo | Specifications of the device used to collect the information | - |

| gforce | Data provided by the g-force sensor | - |

| head_pose | Raw Euler angles (pitch, yaw, roll) | degrees |

| accelerometer_data | Changes in velocity | m/ |

| gyroscope_data | Angular velocity | degree/s |

| magnetometer_data | Magnetic field intensity | Tesla |

| face_mouth | Mouth openness ratio | - |

| heart_rate | Driver’s heart rate | beats per minute |

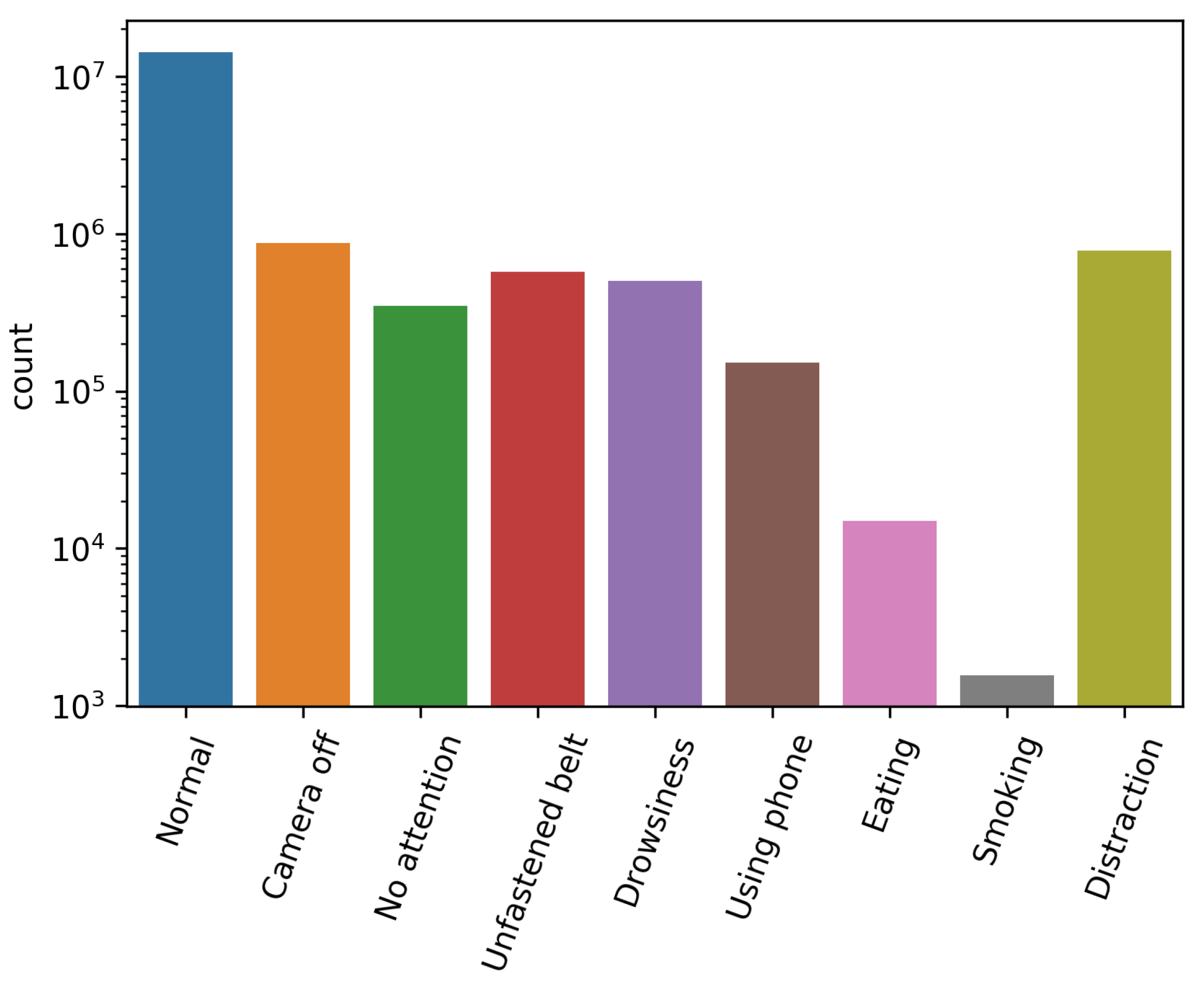

| dangerousstate | A critical event | - |
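Because `datetime` and `datetimestart` are Unix timestamps in milliseconds, the elapsed time within a trip is their difference divided by 1000. The sketch below shows that conversion, together with one common way of computing a PERCLOS-style value from per-frame eye-closure flags. The per-frame boolean input and the helper names (`to_utc`, `trip_elapsed_seconds`, `perclos`) are hypothetical: the table does not say how `perclos` was computed or over what window, and since its unit is listed as "-", the stored value may be a fraction rather than a percentage.

```python
from datetime import datetime, timezone
from typing import Sequence


def to_utc(ms: int) -> datetime:
    """Convert a Unix timestamp in milliseconds to a timezone-aware UTC datetime."""
    return datetime.fromtimestamp(ms / 1000, tz=timezone.utc)


def trip_elapsed_seconds(entry_ms: int, trip_start_ms: int) -> float:
    """Seconds elapsed since the trip started, i.e. (datetime - datetimestart) / 1000."""
    return (entry_ms - trip_start_ms) / 1000.0


def perclos(eye_closed: Sequence[bool]) -> float:
    """Percentage of frames in which the eyes were closed (assumed per-frame input)."""
    if not eye_closed:
        raise ValueError("need at least one frame")
    return 100.0 * sum(eye_closed) / len(eye_closed)


# Usage with made-up values, not taken from the dataset.
print(to_utc(1_600_000_000_000).isoformat())
print(trip_elapsed_seconds(1_600_000_065_500, 1_600_000_000_000))
print(perclos([False, False, True, False]))
```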

| Critical Event | Speed | acc | gforce | gyro_X | gyro_Y | gyro_Z | mag_X | mag_Y | mag_Z | grv_X | grv_Y | grv_Z |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| No attention | 139.65 | 2.75 | 6.0 | 0.49 | 0.46 | 0.46 | 361.23 | 586.99 | 556.92 | 9.79 | 9.8 | 9.74 |
| Smoking | 79.81 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| Eating | 127.02 | 6.32 | 7.84 | 1.24 | 1.27 | 0.25 | 20.57 | 0.0 | 12.21 | 2.1 | 3.6 | 9.8 |
| Unfastened belt | 127.09 | 3.84 | 12.93 | 1.312 | 0.99 | 0.66 | 60.7 | 61.48 | 175.75 | 9.8 | 9.78 | 9.74 |
| Using phone | 129.65 | 7.76 | 4.08 | 0.38 | 1.52 | 0.74 | 110.68 | 46.09 | 11.53 | 2.8 | 9.8 | 9.78 |
| Camera off | 71.3 | 15.13 | 21.81 | 2.79 | 3.05 | 3.01 | 286.97 | 589.69 | 559.71 | 9.8 | 9.8 | 9.8 |
| Distraction | 169.97 | 44.55 | 69.58 | 7.35 | 5.57 | 4.84 | 818.10 | 851.94 | 213.20 | - | - | - |
| Drowsiness | 163.65 | 44.55 | 22.52 | 6.39 | 4.29 | 2.66 | 828.6 | 871.86 | 261.53 | 1.52 | 9.75 | 0.0 |
| Normal | 169.89 | 46.85 | 105.819 | 8.36 | 12.7 | 11.71 | 2603.9 | 1902.78 | 4915.05 | 9.8 | 9.8 | 9.8 |

| Cluster | Number of Samples |
|---|---|
| Cluster 0 | 16,257,599 |
| Cluster 1 | 1,303,902 |

| Cluster | Number of Samples |
|---|---|
| Cluster 0 | 2,187,217 |
| Cluster 1 | 933,391 |
| Cluster 2 | 14,440,893 |
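The two-cluster and three-cluster solutions partition the same set of samples: the sizes in each of the two tables above sum to 17,561,501. The short check below uses only those reported counts to confirm this and to convert each count into a share of the total. The dictionary names are illustrative.

```python
# Cluster sizes copied from the two tables above (two-cluster and three-cluster solutions).
two_clusters = {"Cluster 0": 16_257_599, "Cluster 1": 1_303_902}
three_clusters = {"Cluster 0": 2_187_217, "Cluster 1": 933_391, "Cluster 2": 14_440_893}


def shares(sizes: dict) -> dict:
    """Return each cluster's fraction of the total sample count."""
    total = sum(sizes.values())
    return {name: count / total for name, count in sizes.items()}


for sizes in (two_clusters, three_clusters):
    total = sum(sizes.values())
    pct = {name: round(100 * s, 2) for name, s in shares(sizes).items()}
    print(total, pct)
```

In both solutions one cluster dominates: it holds about 92.6% of the samples in the two-cluster case and about 82.2% in the three-cluster case.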

| Feature | Cluster 0 min | Cluster 0 mean | Cluster 0 max | Cluster 1 min | Cluster 1 mean | Cluster 1 max | Cluster 2 min | Cluster 2 mean | Cluster 2 max |
|---|---|---|---|---|---|---|---|---|---|
| speed | 18.96 | 36.31 | 70.38 | 69.29 | 103.35 | 169.97 | 0.00 | 2.31 | 19.62 |
| gforce | −13.18 | 0.11 | 69.58 | −11.14 | 0.25 | 30.67 | −24.85 | 0.14 | 105.82 |
| acc_X | 0.00 | 0.07 | 9.00 | 0.00 | 0.43 | 9.00 | 0.00 | 0.04 | 9.00 |
| acc_Y | 0.00 | 0.06 | 9.00 | 0.00 | 0.11 | 9.00 | 0.00 | 0.04 | 9.00 |
| acc_Z | 0.00 | 0.50 | 9.00 | 0.00 | 1.62 | 9.00 | 0.00 | 0.19 | 9.00 |
| accelerometer_data_X | −18.05 | 1.32 | 22.84 | −14.01 | 4.07 | 23.54 | −55.13 | 0.80 | 49.67 |
| accelerometer_data_Y | −32.74 | 0.75 | 27.81 | −10.60 | 2.83 | 22.72 | −78.44 | 0.76 | 39.23 |
| accelerometer_data_Z | −42.55 | 1.04 | 32.44 | −18.14 | 1.43 | 20.41 | −63.33 | 2.00 | 54.54 |
| gyroscope_data_X | −5.99 | 0.00 | 3.89 | −5.71 | 0.00 | 3.59 | −11.93 | 0.00 | 8.36 |
| gyroscope_data_Y | −8.10 | 0.00 | 5.25 | −4.32 | 0.00 | 3.03 | −17.82 | 0.00 | 12.70 |
| gyroscope_data_Z | −4.69 | 0.00 | 4.84 | −2.55 | 0.00 | 1.61 | −9.31 | 0.00 | 11.71 |
| magnetometer_data_X | −963.73 | −3.88 | 816.90 | −963.73 | −11.56 | 828.60 | −1448.70 | −1.23 | 2603.90 |
| magnetometer_data_Y | −2596.39 | −4.56 | 863.88 | −2535.17 | −7.85 | 871.86 | −2599.13 | 8.55 | 1902.78 |
| magnetometer_data_Z | −775.52 | −1.67 | 852.60 | −734.27 | −6.55 | 381.81 | −1160.90 | 0.67 | 4915.05 |
| gravity_X | −9.70 | 0.00 | 9.81 | −9.71 | −0.04 | 9.75 | −9.80 | −0.02 | 9.81 |
| gravity_Y | −9.36 | 0.05 | 9.81 | −3.44 | 0.01 | 9.81 | −9.81 | 0.40 | 9.81 |
| gravity_Z | −6.09 | 0.58 | 9.81 | −3.43 | 0.09 | 9.78 | −9.80 | 1.20 | 9.81 |
| euleranglerotatephone_roll | −180.00 | −3.03 | 180.00 | −180.00 | −13.02 | 180.00 | −180.00 | 1.58 | 180.00 |
| euleranglerotatephone_pitch | −85.18 | 7.18 | 90.00 | −84.70 | 6.50 | 89.95 | −89.88 | 15.18 | 90.00 |
| euleranglerotatephone_yaw | −180.00 | −13.53 | 180.00 | −180.00 | −33.41 | 180.00 | −180.00 | −9.36 | 180.00 |
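The speed row of this table suggests that the three clusters largely separate the data by speed. Cluster 2 spans 0.00 to 19.62 km/h (mean 2.31 km/h), Cluster 0 spans 18.96 to 70.38 km/h, and Cluster 1 spans 69.29 to 169.97 km/h, with small overlaps at the boundaries. The sketch below uses only these reported min/max values to list the clusters whose observed speed range contains a given speed. It is an exploratory illustration with a hypothetical helper name, not a substitute for assigning samples with the fitted K-means centroids.

```python
from typing import Dict, List, Tuple

# Observed speed ranges (km/h) per cluster, copied from the min/max columns above.
SPEED_RANGES: Dict[str, Tuple[float, float]] = {
    "Cluster 0": (18.96, 70.38),
    "Cluster 1": (69.29, 169.97),
    "Cluster 2": (0.00, 19.62),
}


def clusters_for_speed(speed_kmh: float) -> List[str]:
    """Return every cluster whose observed [min, max] speed range contains speed_kmh."""
    return [name for name, (lo, hi) in SPEED_RANGES.items() if lo <= speed_kmh <= hi]


for v in (5.0, 19.0, 50.0, 70.0, 120.0, 180.0):
    print(v, clusters_for_speed(v))
```

Speeds near 19 km/h and 70 km/h fall into two ranges at once, and speeds above the largest observed value fall into none, which is why the helper returns a list rather than a single label.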

| State | Cluster 0 | Cluster 1 |
|---|---|---|
| Unfastened belt | 14.88% | 0.09% |
| Drowsiness | 6.34% | 21.29% |
| Eating | 0.35% | 0.41% |
| Distraction | 6.50% | 11.36% |
| Normal | 58.10% | 62.86% |
| Smoking | 0.04% | 0.05% |
| No attention | 8.96% | 2.31% |
| Camera off | 1.15% | 0.01% |
| Using phone | 3.67% | 1.61% |
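Finding the most frequent dangerous situations per cluster amounts to excluding the Normal state and ranking the remaining states by share. The sketch below does this using only the percentages in the table above, under the assumption that every non-Normal state counts as a dangerous situation. With that assumption, Unfastened belt is the most frequent state in Cluster 0 (14.88%) and Drowsiness in Cluster 1 (21.29%); each column sums to 99.99%, consistent with rounding.

```python
# Share of each driver state within each cluster (%), copied from the table above.
STATE_SHARES = {
    "Unfastened belt": (14.88, 0.09),
    "Drowsiness": (6.34, 21.29),
    "Eating": (0.35, 0.41),
    "Distraction": (6.50, 11.36),
    "Normal": (58.10, 62.86),
    "Smoking": (0.04, 0.05),
    "No attention": (8.96, 2.31),
    "Camera off": (1.15, 0.01),
    "Using phone": (3.67, 1.61),
}


def ranked_critical_states(cluster: int, exclude=("Normal",)):
    """Return (state, share) pairs for one cluster, most frequent first, skipping excluded states."""
    pairs = [(state, pct[cluster]) for state, pct in STATE_SHARES.items() if state not in exclude]
    return sorted(pairs, key=lambda p: p[1], reverse=True)


for c in (0, 1):
    top = ranked_critical_states(c)[:3]
    total = sum(pct[c] for pct in STATE_SHARES.values())
    print(f"Cluster {c}: top states {top}, column total {total:.2f}%")
```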

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Othman, W.; Kashevnik, A.; Hamoud, B.; Shilov, N. DriverSVT: Smartphone-Measured Vehicle Telemetry Data for Driver State Identification. Data 2022, 7, 181. https://doi.org/10.3390/data7120181
