1. Introduction

As public awareness of environmental protection grows, society has turned its attention to technologies and applications of clean, renewable energy. This shift has greatly accelerated the development of energy storage technologies, which are essential for storing and exploiting renewable energy sources such as solar and wind. In the rapidly evolving landscape of energy storage technologies, lithium-ion batteries stand out for their high energy density and high power density [1]. These characteristics make them well suited to diverse applications ranging from portable electronics [2] to electric vehicles [3], power grid systems, and even aerospace applications. To ensure the safe and efficient use of lithium-ion batteries, a battery management system (BMS) is often integrated, especially in larger battery systems. A BMS is a sophisticated electronic system that manages the operation of the battery system it is attached to. It performs several critical functions, including monitoring the voltage, current, and temperature of the cells in the battery pack. It is also responsible for cell balancing, which equalizes the charge across the cells to prevent accelerated aging or failure caused by imbalanced cell states. Another fundamental role of a BMS is safety protection: it prevents hazardous failures of the battery system by detecting faulty usage such as overcharging, deep discharging, and overheating. Beyond these functions, and as their foundation, a BMS provides valuable information about the critical internal states of the cells [4]. Accurate estimation of these internal states is closely linked to the efficiency and longevity of the batteries, and the state of charge (SOC) and the state of health (SOH) are considered the two key states [5].

SOC indicates the remaining charge in a battery relative to its total capacity and is defined as follows:

$$\mathrm{SOC} = \frac{Q}{C} \times 100\%,$$

where $Q$ is the residual charge that can currently be drawn from the battery and $C$ is the capacity of the battery, i.e., the charge that can be drawn when the battery is fully charged. SOC is analogous to a fuel gauge for batteries: it quantifies how much electric charge is stored at any given moment relative to the battery's current maximum capacity. Unlike a fuel level, however, the SOC cannot be determined by a simple measurement. SOC estimation not only enables optimized management of usage profiles by predicting the remaining runtime, but also underpins the safety protection functions by providing a direct metric for keeping the battery system within its appropriate operating window. An accurate SOC estimate therefore ensures efficient and safe utilization as well as an optimized battery lifespan.

SOH is another key state and represents the overall health condition of a battery. SOH can be defined based on either the capacity or the internal resistance of the battery [6]:

$$\mathrm{SOH}_C = \frac{C_{\mathrm{act}}}{C_{\mathrm{rated}}} \times 100\%, \qquad \mathrm{SOH}_R = \frac{R_{\mathrm{EOL}} - R_{\mathrm{act}}}{R_{\mathrm{EOL}} - R_{\mathrm{rated}}} \times 100\%,$$

where $C_{\mathrm{act}}$ and $C_{\mathrm{rated}}$ are the current actual capacity and the rated capacity of the battery, and $R_{\mathrm{act}}$, $R_{\mathrm{EOL}}$, and $R_{\mathrm{rated}}$ are the current internal resistance, the end-of-life internal resistance, and the rated internal resistance, respectively. In this paper, the capacity-based definition of SOH is used. SOH provides deep insight into the remaining useful life of a battery. Accurate SOH estimation ensures the reliability and cost-effectiveness of the corresponding energy storage system: monitoring the health of each individual cell prevents unexpected failures and optimizes the lifecycle cost of the batteries. Knowing the SOH also allows battery usage to be optimized, ensuring a durable and safe operational life.
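To make the two SOH definitions concrete, the following Python sketch evaluates them for a single cell. The function names and the example values (a 2.0 Ah cell that now holds 1.8 Ah; 20, 25, and 40 mΩ for the rated, current, and end-of-life resistance) are hypothetical and chosen only for illustration.

```python
def soh_capacity(c_act: float, c_rated: float) -> float:
    """Capacity-based SOH in percent: C_act / C_rated * 100."""
    if c_rated <= 0:
        raise ValueError("rated capacity must be positive")
    return 100.0 * c_act / c_rated


def soh_resistance(r_act: float, r_eol: float, r_rated: float) -> float:
    """Resistance-based SOH in percent: (R_EOL - R_act) / (R_EOL - R_rated) * 100.

    Equals 100 % for a new cell (R_act = R_rated) and 0 % at end of life (R_act = R_EOL).
    """
    if r_eol <= r_rated:
        raise ValueError("end-of-life resistance must exceed the rated resistance")
    return 100.0 * (r_eol - r_act) / (r_eol - r_rated)


# Hypothetical example values (capacity in Ah, resistance in milliohm).
print(soh_capacity(1.8, 2.0))              # 90.0
print(soh_resistance(25.0, 40.0, 20.0))    # 75.0
```

The two definitions use different reference points, so for a given cell they need not yield the same percentage; this is one reason why a paper has to state which definition it uses.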

Estimation algorithms for SOC and SOH can generally be classified into three basic categories: direct measurements, model-based approaches, and data-driven approaches [7,8]. Hybrids of these basic approaches are also applied [9,10]. As the name indicates, direct measurement approaches determine the states from parameters derived directly from measurements, without an estimation algorithm. For SOC determination, frequently applied direct measurement approaches include coulomb counting and open-circuit voltage-based (OCV-based) estimation [11,12]. The coulomb counting method, also known as ampere-hour counting, integrates the current over time and divides the result by the reference capacity to obtain the change in SOC. This method is simple to implement but has several critical drawbacks. The reference capacity and the initial SOC must be known. Furthermore, because the current is integrated, any current measurement error, such as a small sensor offset, accumulates over time. The discretization of the integral also plays an important role since, unlike in the idealized case, the current between two sampling instants is generally not constant in real applications. OCV-based SOC estimation relies on the fact that the OCV of a battery cell is a non-linear function of SOC [11]. With a pre-built OCV-SOC lookup table stored in the BMS, the SOC corresponding to the measured OCV can be looked up. Although this method is also simple to implement, in real-world applications the battery rarely rests long enough for its terminal voltage to relax to the OCV. Furthermore, some cell types have a flat OCV curve or exhibit OCV hysteresis, which further limits the feasibility of this method. To determine the SOH via direct measurement, either the current capacity or the internal resistance can be measured. However, measuring the full usable capacity of a battery requires a full discharge, which rarely occurs in real applications. Likewise, measuring the internal resistance requires an impedance measurement or a pulse-based measurement, which is equally difficult to perform during normal operation.
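The two direct SOC methods can be combined in a few lines: an OCV lookup initializes the SOC after a rest period, and coulomb counting tracks it afterwards. The Python sketch below is illustrative only. It assumes a hypothetical OCV-SOC table (made-up values, not measured data), a sign convention in which positive current means discharge, and trapezoidal integration between samples as one possible discretization; none of these choices are taken from the cited works.

```python
import numpy as np

# Hypothetical OCV-SOC lookup table (illustrative values, not measured data).
# np.interp requires the x-coordinates (here the OCV) to be increasing.
OCV_TABLE_V = np.array([3.00, 3.45, 3.60, 3.70, 3.85, 4.00, 4.20])
SOC_TABLE = np.array([0.00, 0.10, 0.30, 0.50, 0.70, 0.90, 1.00])


def soc_from_ocv(ocv: float) -> float:
    """Look up the SOC (0..1) from a relaxed OCV by linear interpolation.

    Values outside the table are clamped to the first/last SOC entry.
    """
    return float(np.interp(ocv, OCV_TABLE_V, SOC_TABLE))


def coulomb_count(soc0: float, current_a: np.ndarray, t_s: np.ndarray,
                  capacity_ah: float) -> np.ndarray:
    """Coulomb counting with trapezoidal integration between samples.

    Assumed sign convention: positive current = discharge.
    """
    # Trapezoidal rule: mean of neighbouring current samples times the step
    # width, which accounts for a current that changes between samples.
    dq_as = 0.5 * (current_a[1:] + current_a[:-1]) * np.diff(t_s)
    charge_out_ah = np.concatenate(([0.0], np.cumsum(dq_as))) / 3600.0
    return soc0 - charge_out_ah / capacity_ah


# Usage: a 2.0 Ah cell rested at 3.70 V, then discharged at 1 A for 30 min.
t = np.arange(0.0, 1801.0, 1.0)          # 1 s sampling
i = np.ones_like(t)                      # 1 A discharge
soc = coulomb_count(soc_from_ocv(3.70), i, t, capacity_ah=2.0)
print(soc[0], soc[-1])                   # 0.5 0.25
```

The sketch also makes the drift problem tangible: a constant current offset of δ amperes shifts the integrated SOC by δ·t/(3600·C) after t seconds, so a 10 mA offset on a 2.0 Ah cell adds 0.5 percentage points of error per hour of operation.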

Model-based battery state estimation approaches determine the states indirectly, based on parameterized battery models and algorithms [13,14]. The fundamental principle of model-based approaches is to predict the states with the battery model and then correct the predictions using measurements. Different kinds of models are used for model-based state estimation, such as empirical models [15] and electrochemical models [16], but the most popular is the equivalent circuit model because it strikes a balance between simplicity and accuracy [14]. Researchers have applied a variety of algorithms to this problem, including filter-based methods such as the Kalman filter (KF) [17], particle filter [18], extended Kalman filter (EKF) [19,20,21], and unscented Kalman filter (UKF) [22,23,24], and observer-based methods such as the Luenberger observer [25], sliding mode observer [26], and H-infinity observer [27]. Because SOC and SOH are strongly correlated, they are often estimated together. If SOC and SOH are estimated in one extended model, the setup is called joint estimation [28,29]. However, such a setup increases the computational cost because of the larger matrices, and it updates SOC and SOH on the same time scale. Conversely, if SOC and SOH are estimated in two separate but interconnected models, the setup is called dual estimation or co-estimation [30,31]. Such a setup keeps the matrices small and allows the estimated states to be treated on different time scales, which is much more efficient since SOH changes far more slowly than SOC. However, accurate model-based state estimation requires a well-parameterized model and considerable computing resources for online application [32]. In-depth domain knowledge of the electrical, thermal, and aging behavior of the studied battery is a prerequisite for model-based battery state estimation, which, together with the complex filtering algorithms, makes model-based approaches complicated to implement.
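To illustrate the predict-correct principle of filter-based estimation, the following Python sketch runs an EKF on a first-order equivalent circuit model (one series resistance and one RC pair) with the state vector [SOC, v1], where v1 is the RC-pair voltage. All parameter values and the quadratic OCV curve are hypothetical, the sign convention (positive current = discharge) is an assumption, and the data are simulated rather than measured. Because the state transition of this particular model is linear, the "extended" part of the filter reduces to linearizing the OCV in the output equation.

```python
import numpy as np

# Hypothetical first-order ECM parameters (one series resistor, one RC pair).
R0, R1, C1 = 0.05, 0.02, 1000.0   # ohm, ohm, farad
CAP_AH = 2.0                      # cell capacity in Ah
DT = 1.0                          # sampling interval in s
A_RC = np.exp(-DT / (R1 * C1))    # per-step decay of the RC-pair voltage

# Linear state transition for x = [SOC, v1]; positive current = discharge.
A = np.array([[1.0, 0.0], [0.0, A_RC]])
B = np.array([-DT / (3600.0 * CAP_AH), R1 * (1.0 - A_RC)])


def ocv(soc):
    """Hypothetical smooth OCV curve in volts (a real BMS would use a measured table)."""
    return 3.3 + 0.8 * soc + 0.1 * soc**2


def docv_dsoc(soc):
    """Derivative of the hypothetical OCV curve, used in the output Jacobian."""
    return 0.8 + 0.2 * soc


def terminal_voltage(x, i):
    """Output equation: OCV minus RC-pair voltage minus ohmic drop."""
    return ocv(x[0]) - x[1] - R0 * i


def ekf_soc(v_meas, i_meas, x0, p0, q, r):
    """Run the EKF over voltage/current sequences and return the SOC estimates."""
    x = np.asarray(x0, dtype=float)
    p = np.asarray(p0, dtype=float)
    soc_hat = np.empty(len(v_meas))
    for k in range(len(v_meas)):
        i = i_meas[k]
        # Correction: linearize the output equation around the predicted state.
        h = np.array([docv_dsoc(x[0]), -1.0])
        s = h @ p @ h + r                      # innovation variance (scalar)
        gain = p @ h / s                       # Kalman gain, shape (2,)
        x = x + gain * (v_meas[k] - terminal_voltage(x, i))
        p = p - np.outer(gain, h @ p)          # (I - K H) P
        soc_hat[k] = x[0]
        # Prediction: propagate state and covariance to the next sample.
        x = A @ x + B * i
        p = A @ p @ A.T + q
    return soc_hat


# Simulated data: constant 1 A discharge with 5 mV voltage measurement noise.
rng = np.random.default_rng(0)
n = 1000
current = np.full(n, 1.0)
x_true = np.array([0.9, 0.0])
soc_true, v_meas = np.empty(n), np.empty(n)
for k in range(n):
    soc_true[k] = x_true[0]
    v_meas[k] = terminal_voltage(x_true, current[k]) + rng.normal(0.0, 0.005)
    x_true = A @ x_true + B * current[k]

# Deliberately wrong initial SOC guess (0.6 instead of 0.9).
soc_hat = ekf_soc(v_meas, current, x0=[0.6, 0.0], p0=np.diag([0.1, 0.01]),
                  q=np.diag([1e-7, 1e-6]), r=0.005**2)
print(f"final SOC error: {abs(soc_hat[-1] - soc_true[-1]):.4f}")
```

Starting from a wrong initial SOC, the voltage measurements pull the estimate toward the true trajectory. Note that the filter here is given exactly the parameters that generated the data, which a real BMS never has; this is precisely why model-based approaches depend so heavily on good parameterization.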

With the development of modern artificial intelligence technology and the availability of data, more and more researchers are pivoting to data-driven approaches for battery state estimation, hoping to find a solution to the aforementioned problems of direct measurement and model-based approaches. As the name indicates, data-driven approaches utilize models that try to capture the intricate relationships and patterns of the target problem by learning from historical or real-time data. The overall workflow of data-driven approaches includes data collection, data preprocessing, model development, and model deployment [

33]. A large amount of data is usually the premise for data-driven approaches so that the trained model can achieve adequate generalization abilities. For that, researchers can design the tests and do the measurements themselves, which allows for tailoring the data to their specific needs and conditions and bringing novelty to the research as well. An alternative would be using public datasets available online [

34], which could save time and resources and facilitate comparisons. A variety of methods have been applied to data-driven battery state estimation approaches [

13,

33], including traditional machine learning methods [

35,

36], fuzzy logic methods [

37], shallow neural networks [

38,

39], and deep learning models [

40,

41,

42,

43,

44,

45]. Ref. [

40] proposed a deep neural network for SOC estimation and tested the performance with respect to different numbers of precedent time steps and different temperature conditions, where the mean squared error (MAE) is generally under 2%. Ref. [

42] proposed a convolutional neural network (CNN) for SOC estimation and studied the influence of different time horizons and sampling rates, together with added noise as data augmentation, where the MAE on the test cycle ranged from 0.36% to 3.12%. Ref. [

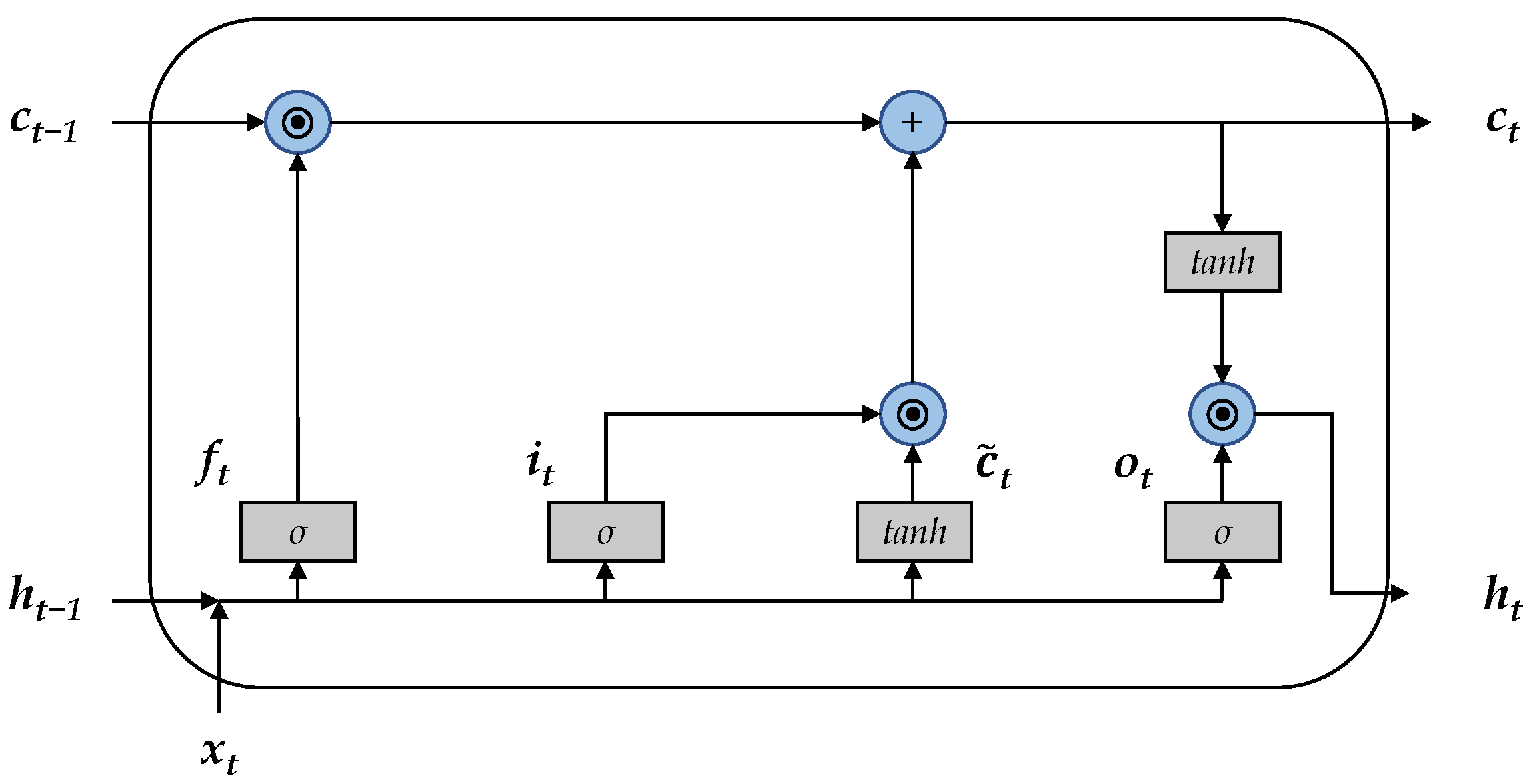

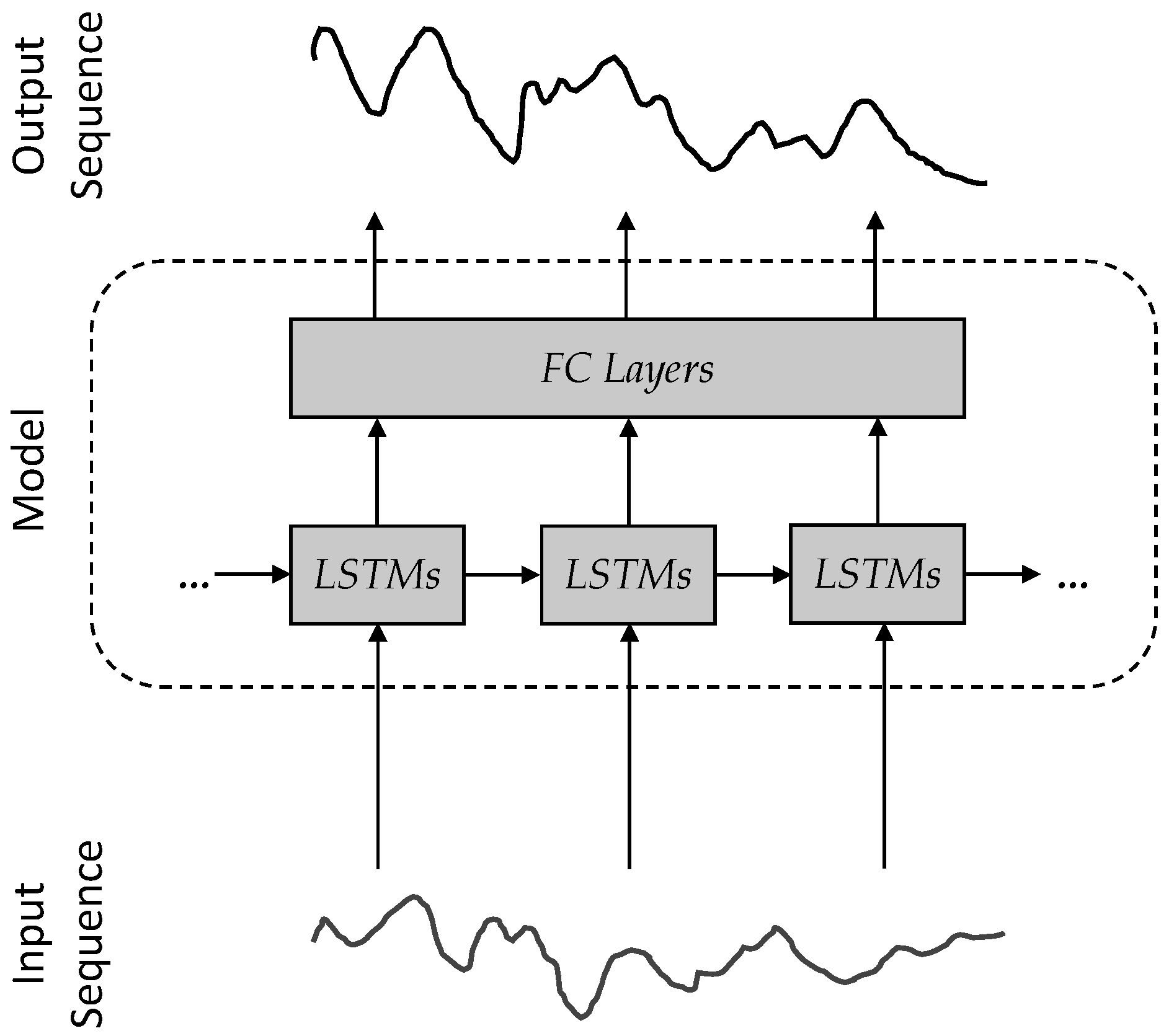

41] utilized a recurrent neural network (RNN) with long short-term memory (LSTM) layers for SOC estimation with different time depths and different temperature conditions, where the MAE on the test set ranged from 0.573% to 2.088%. Ref. [

44] proposed a gated recurrent unit-convolutional neural network (GRU-CNN) network architecture for SOH estimation using charging data, which achieved an MAE of 1.03% on the NASA dataset and 0.62% on the Oxford dataset. Ref. [

45] utilized a deep CNN to estimate the capacity online using partial charge data, which achieved an RMSE of 1.477% on the NASA cells. Ref. [

43] proposed a snapshot-based LSTM-based RNN to trace the SOH of electric vehicle batteries using partial cycling data with an average root mean square error (RMSE) lower than 2.46%.
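Several of the cited SOC estimators feed the network a window of preceding measurements and report MAE or RMSE. The Python sketch below shows both ingredients under stated assumptions: a causal sliding window, in which each sample sees only the current and past time steps (as an online estimator requires), and the two error metrics. The function names and toy arrays are hypothetical, and the regression model itself (DNN, CNN, or LSTM) is deliberately left out.

```python
import numpy as np


def causal_windows(features: np.ndarray, window: int) -> np.ndarray:
    """Stack the `window` most recent samples for every time step.

    features: shape (T, F), e.g. columns for voltage, current, temperature.
    Returns shape (T - window + 1, window, F); window j covers time steps
    j .. j + window - 1 and thus contains no samples from the future of the
    step it is used to estimate (its last row).
    """
    t, _ = features.shape
    if not 1 <= window <= t:
        raise ValueError("window must lie between 1 and the sequence length")
    idx = np.arange(t - window + 1)[:, None] + np.arange(window)[None, :]
    return features[idx]


def mae(y_true, y_pred) -> float:
    """Mean absolute error."""
    return float(np.mean(np.abs(np.asarray(y_true) - np.asarray(y_pred))))


def rmse(y_true, y_pred) -> float:
    """Root mean square error; penalizes large errors more strongly than MAE."""
    return float(np.sqrt(np.mean((np.asarray(y_true) - np.asarray(y_pred)) ** 2)))


# Toy usage: 5 time steps with 2 features, and SOC values in percent.
x = np.arange(10.0).reshape(5, 2)
print(causal_windows(x, 3).shape)          # (3, 3, 2)
soc_true = [50.0, 60.0, 70.0]
soc_pred = [51.0, 59.0, 72.0]
print(mae(soc_true, soc_pred), rmse(soc_true, soc_pred))   # about 1.333 and 1.414
```

In the toy example, the single 2-point error raises the RMSE above the MAE, which is why papers that report only one of the two metrics are not directly comparable.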

As with model-based approaches, several joint or dual estimation setups also exist for data-driven approaches. Ref. [5] estimated SOC and capacity dually with a hybrid machine learning framework consisting of Gaussian process regression and a convolutional neural network. With the assistance of fiber sensor measurements, the proposed model achieved an RMSE of … Ah on the capacity estimated from the estimated SOC, and an RMSE of 0.62% on SOC estimation with the updated capacity and fiber sensor measurements. Ref. [46] used a nonlinear state space reconstruction-long short-term memory neural network for dual estimation of SOC and SOH of lithium-ion battery packs in electric vehicles. Their model consists of two LSTM neural network estimators, one for SOC and one for SOH, and achieved an RMSE within 1.3% for SOC estimation and 2.5% for SOH estimation. Ref. [47] proposed a novel framework for joint estimation of SOC and SOH during the charging phase, consisting of encoders and decoders for charging and SOH. The framework achieved an MAE of 0.362% for SOC estimation and 0.41% for SOH estimation on the test set.

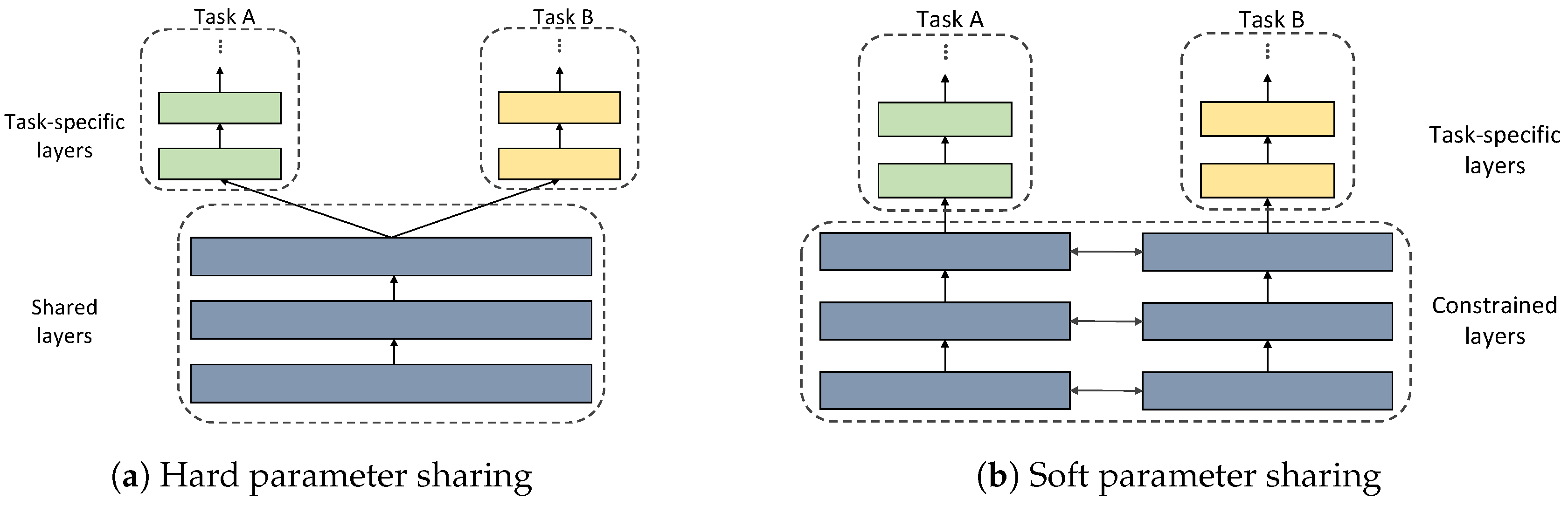

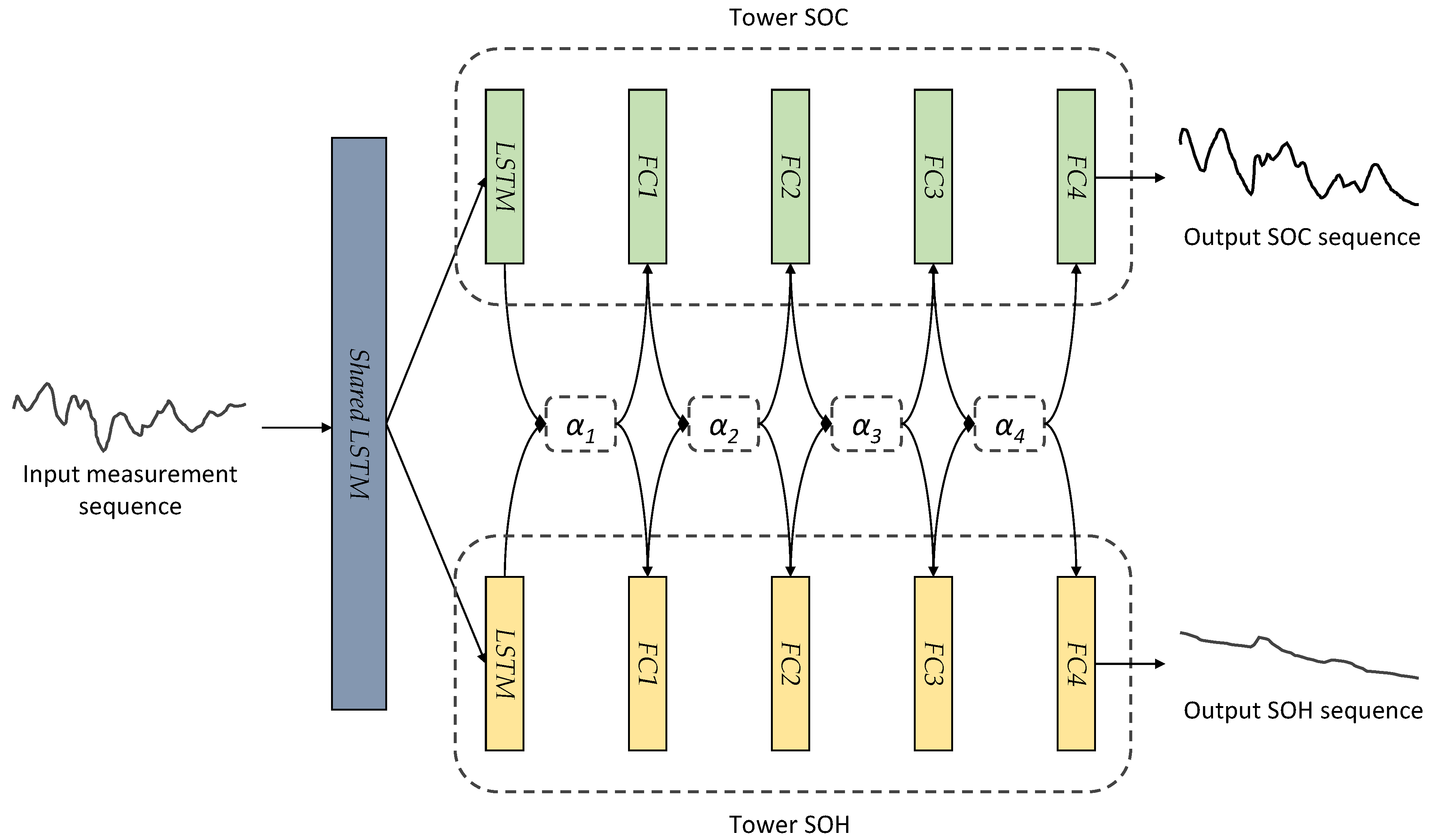

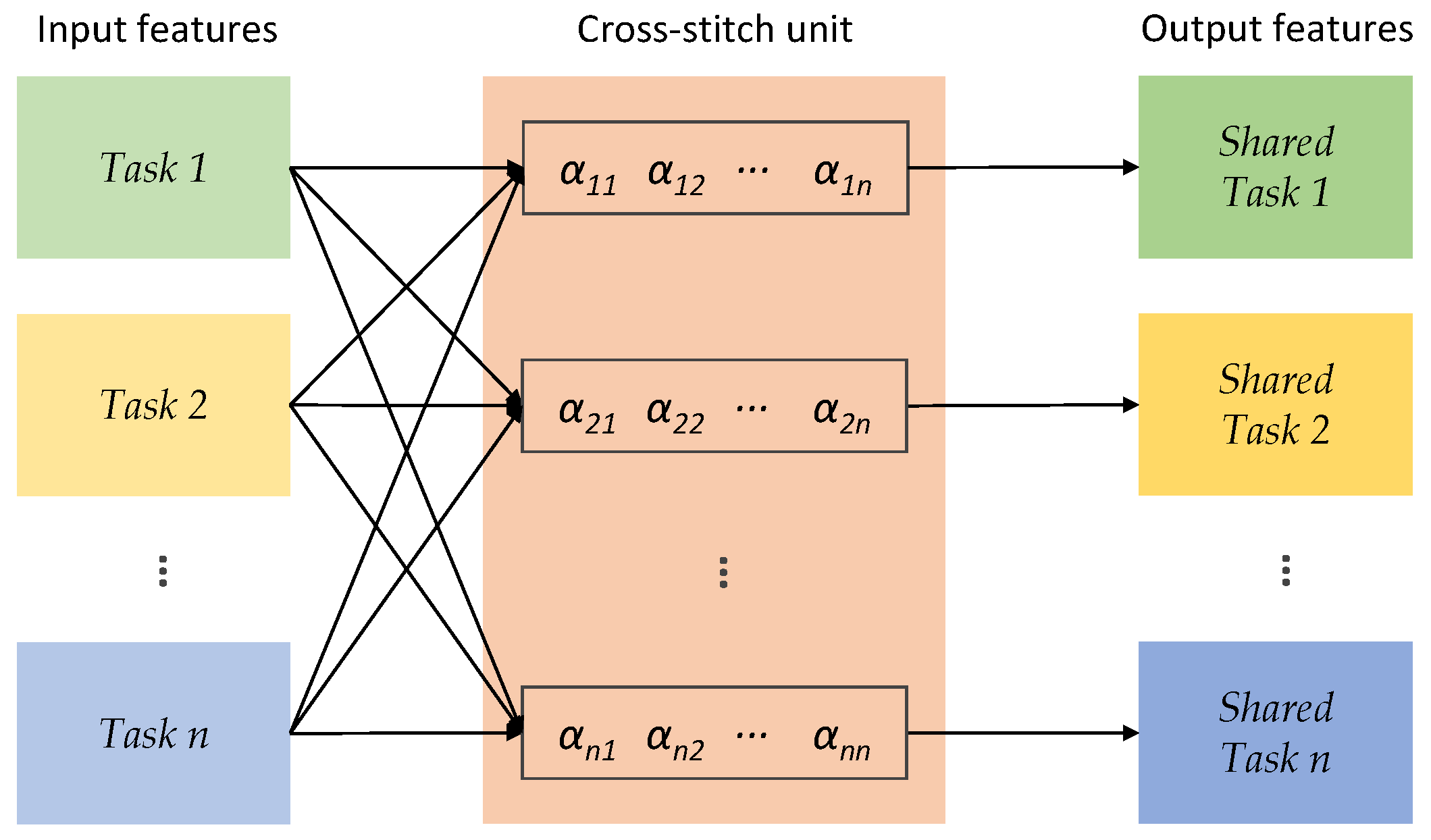

Compared with joint model-based estimation of SOC and SOH, significantly less work has been done for joint data-driven estimation. However, prior knowledge tells us that when we estimate SOC and SOH at the same time, these two states are dependent on each other, especially SOC on SOH. There should be some shared information about the two states, which can be captured by a neural network and thus benefit the model. A deep learning paradigm of this type is called multi-task learning (MTL), which has been successfully applied across a variety of applications of machine learning [

48,

49], such as natural language processing [

50] and computer vision [

51]. Research has shown that applying MTL techniques brings improved learning efficiency, better generalization, less risk of overfitting, and higher resource efficiency to deep learning models [

48,

49]. There have also been several applications of MTL to batteries [

52,

53,

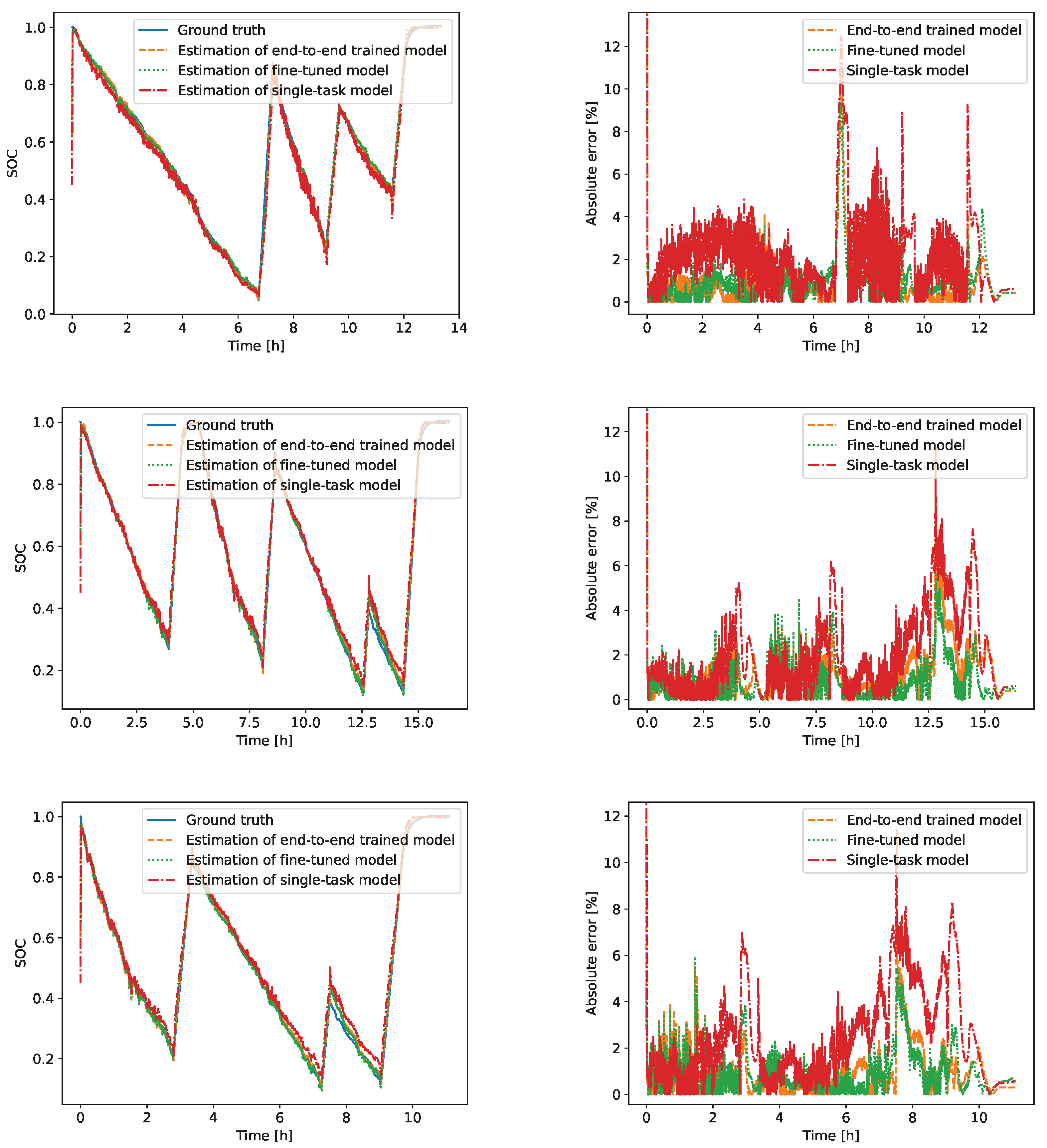

54]. However, to the best of our knowledge, there is still no precedent work applying MTL to the joint estimation of SOC and SOH, the two most important states of a battery cell. Furthermore, we deem the implementation of such joint estimation of SOC and SOH much more meaningful for real-world applications if the model allows for an online estimation, meaning the estimation is causal and conducted in real time based solely on historical and current information without interrupting the normal usage of the battery. Therefore, in this paper, we propose a novel data-driven approach for joint SOC and SOH online estimation of lithium-ion batteries utilizing multi-task learning. The proposed model takes the differing time scales in the dynamics of SOC and SOH into consideration, delivering accurate estimation results. It is suitable for online applications through synchronous sequence-to-sequence implementation.
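The MTL idea can be illustrated with hard parameter sharing, one common MTL design: a shared layer feeds two task-specific output heads, and the weighted sum of the SOC and SOH losses is minimized, so the shared weights receive gradients from both tasks. The NumPy sketch below is a minimal, hypothetical illustration with synthetic data, arbitrary layer sizes, equal loss weights, and plain gradient descent. It is not the model proposed in this paper, which additionally operates on sequences and accounts for the different time scales of the two states.

```python
import numpy as np

rng = np.random.default_rng(0)


def init_params(n_in, n_hidden):
    """One shared tanh layer plus one linear head per task (hypothetical sizes)."""
    return {
        "W": rng.normal(0.0, 0.1, (n_in, n_hidden)), "b": np.zeros(n_hidden),
        "w_soc": rng.normal(0.0, 0.1, n_hidden), "b_soc": 0.0,
        "w_soh": rng.normal(0.0, 0.1, n_hidden), "b_soh": 0.0,
    }


def forward(p, x):
    h = np.tanh(x @ p["W"] + p["b"])           # shared representation
    return h, h @ p["w_soc"] + p["b_soc"], h @ p["w_soh"] + p["b_soh"]


def loss_and_grads(p, x, soc, soh, lam_soc=1.0, lam_soh=1.0):
    """Weighted sum of the two MSE losses and its gradients (manual backprop)."""
    n = len(x)
    h, soc_hat, soh_hat = forward(p, x)
    loss = lam_soc * np.mean((soc_hat - soc) ** 2) + lam_soh * np.mean((soh_hat - soh) ** 2)
    d_soc = 2.0 * lam_soc * (soc_hat - soc) / n
    d_soh = 2.0 * lam_soh * (soh_hat - soh) / n
    # The shared layer accumulates gradient contributions from both heads.
    dh = np.outer(d_soc, p["w_soc"]) + np.outer(d_soh, p["w_soh"])
    dz = dh * (1.0 - h**2)                     # derivative of tanh
    grads = {
        "W": x.T @ dz, "b": dz.sum(axis=0),
        "w_soc": h.T @ d_soc, "b_soc": d_soc.sum(),
        "w_soh": h.T @ d_soh, "b_soh": d_soh.sum(),
    }
    return loss, grads


# Synthetic data: both targets depend on the same inputs (purely illustrative).
x = rng.normal(size=(256, 3))
soc = 0.5 + 0.3 * np.tanh(x[:, 0])
soh = 0.9 + 0.05 * np.tanh(x[:, 0] + x[:, 1])

params = init_params(3, 8)
initial_loss, _ = loss_and_grads(params, x, soc, soh)
for _ in range(1000):                          # plain gradient descent
    loss, grads = loss_and_grads(params, x, soc, soh)
    for key in params:
        params[key] = params[key] - 0.05 * grads[key]
print(f"loss: {initial_loss:.4f} -> {loss:.4f}")
```

The loss weights `lam_soc` and `lam_soh` are the simplest knob for balancing the two tasks; how to choose or adapt them is a design decision of any concrete MTL model and is not prescribed by this sketch.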

The rest of this paper is structured as follows: Section 2 introduces the proposed model and explains the applied methodology in detail; Section 3 presents the results of the proposed model in different experiments, which are then analyzed and interpreted in Section 4. Finally, Section 5 summarizes the results of this paper and gives a short outlook on our future work.