Abstract

The greater cane rat algorithm (GCRA) is a swarm intelligence algorithm inspired by the foraging behavior of greater cane rats, which differs between the mating (rainy) season and the non-mating (dry) season. However, the basic GCRA suffers from high parameter sensitivity, insufficient solution accuracy, high computational complexity, susceptibility to local optima and overfitting, poor dynamic adaptability, and a severe curse of dimensionality. In this paper, a hybrid nonlinear greater cane rat algorithm with the sine–cosine algorithm (SCGCRA) is proposed for solving benchmark functions and constrained engineering designs; the objective is to balance exploration and exploitation in order to locate the global optimum accurately. The SCGCRA uses the periodic oscillation of the sine–cosine algorithm and the dynamic regulation of a nonlinear control strategy to improve search efficiency and flexibility, enhance convergence speed and solution accuracy, increase population diversity and quality, avoid premature convergence and search stagnation, remedy the imbalance between exploration and exploitation, achieve synergistic complementarity and reduce sensitivity, and realize repeated expansion and contraction of the search. Twenty-three benchmark functions and six real-world engineering designs are used to verify the reliability and practicality of the SCGCRA. The experimental results indicate that the SCGCRA achieves faster convergence, higher solution accuracy, and stronger stability and robustness than the compared algorithms.

1. Introduction

Global optimization transforms complex problems with high dimensionality, nonlinearity, and multiple constraints into explicit mathematical models with objective functions and constraints over a defined variable domain. Such models make it possible to regulate quantitative indicators; attain the global extremum; obtain objective and repeatable results; avoid the subjectivity of empirical decision-making; and achieve optimal system performance, lowest cost, and highest efficiency. Traditional optimization methods, such as gradient descent, Newton’s method, the Lagrange multiplier method, and the simplex method, have notable drawbacks: strong dependence on function properties, weak handling of high dimensions, sensitivity to constraint-handling conditions, poor robustness to interference, and a narrow range of practical scenarios. By contrast, metaheuristic algorithms (MHAs) offer remarkable advantages, including strong universality and usability, robustness and adaptability, excellent parallelism and distributed computing, extensive practicality and scalability, good detection efficiency and solution accuracy, and low computational complexity. MHAs, which address large-scale, nonlinear, multimodal optimization problems and identify global extremum solutions, can be broadly categorized into four main types according to their source of inspiration.

- (1) Swarm intelligence algorithms (SIAs)

SIAs, inspired by the collective behavior of organisms in nature, are distributed computing methods in which numerous simple individuals follow local rules to interact and collaborate. Information sharing and adaptive adjustment give rise to collective intelligent behavior, which allows the global extremum solution to be acquired efficiently. SIAs combine local perception with global emergence, positive with negative feedback, randomness with determinism, and division of labor with collaboration. Their competitive characteristics include substantial decentralization, self-organization, robustness, scalability, simplicity and ease of implementation, good distribution and parallelism, reliable population diversity and global convergence, and independence from gradient information. Examples include Chinese pangolin optimization (CPO) [1], black-winged kite algorithm (BKA) [2], elk herd optimization (EHO) [3], puma optimization (PO) [4], horned lizard optimization algorithm (HLOA) [5], and greater cane rat algorithm (GCRA) [6].

- (2) Evolutionary algorithms (EAs)

EAs, inspired by biological evolution theory, are stochastic computational methods that imitate natural selection, genetic variation, and population reproduction. They use population iteration, fitness-driven selection, and random search to approach global extremum solutions gradually. EAs do not depend on the continuity or differentiability of the problem, which makes them suitable for nonlinear, non-convex, multimodal, high-dimensional, and multi-constraint problems. Their competitive characteristics include intense exploration and exploitation, flexible multi-objective optimization and parallel computing, resistance to the curse of dimensionality, independence from gradient information, and strong flexibility, scalability, and adaptability. Examples include wave search algorithm (WSA) [7], snow avalanches algorithm (SAA) [8], liver cancer algorithm (LCA) [9], coronavirus mask protection algorithm (CMPA) [10], gooseneck barnacle optimization (GBO) [11], and orchard algorithm (OA) [12].

- (3) Physics/Chemistry/Mathematics-inspired algorithms

Physics/Chemistry/Mathematics-inspired algorithms based on natural phenomena or mathematical principles are randomized computational methods, which imitate the energy conservation, gravitational interaction, thermodynamics process of physical systems, molecular interactions, energy conversion, chemical reaction equilibrium of the chemistry systems, mathematical transformations, probability distributions, and differential evolution of the mathematics systems to maintain population diversity and multi-objective dynamic equilibrium; promote information compensation coordination and collaborative optimization of dual populations; explore high-quality search regions; and exploit global extremum solutions. These algorithms exhibit specific competitive characteristics of strong multi-objective adaptability; parallelism and scalability; low parameter sensitivity; rigorous logicality and convergence; optimal complementary advantages; preferable universality and energy orientation; superior solution quality and efficiency, such as artemisinin optimization (AO) [13]; Newton–Raphson-based optimization (NRBO) [14]; exponential distribution optimizer (EDO) [15]; Young’s double-slit experiment (YDSE) [16]; Lévy arithmetic algorithm (LAA) [17]; and triangulation topology aggregation optimizer (TTAO) [18].

- (4) Human-inspired algorithms (HBAs)

HBAs are inspired by social activities, human decision-making, cognitive patterns, environmental adaptation, and collaborative logic, which abstract the empirical rules, group interactions, adaptive strategies, or search logic accumulated by humans in addressing practical problems into computable mathematical models, and construct efficient search mechanisms. HBAs utilize individual experience accumulation, group information sharing, goal-oriented trial and error, or adaptive adjustment to efficiently explore the high-quality detection scope, extract global extremum solutions, achieve multi-agent collaboration, and avoid blind search and premature convergence. HBAs exhibit specific competitive characteristics of intense collaboration, adaptability, robustness, parallelism, interpretability and self-organization, straightforward interpretation and implementation; low dependency on mathematical properties; low computational complexity; intuitive and easily tunable parameters; strong multi-objective optimization and dynamic adaptability; and equilibrium between global exploration and local exploitation, such as educational competition optimization (ECO) [19], information acquisition optimizer (IAO) [20], human evolutionary optimization algorithm (HEOA) [21], memory backtracking strategy (MBS) [22], guided learning strategy (GLS) [23], and thinking innovation strategy (TIS) [24].

The greater cane rat algorithm (GCRA) is motivated by the dispersed foraging behavior of greater cane rats during the non-mating season and their concentrated foraging behavior during the mating season, which enables an efficient switch between global exploration and local exploitation, expands the search range, and updates the population’s positions to obtain potential optimal solutions [6]. The basic GCRA suffers from high parameter sensitivity, insufficient solution accuracy, high computational complexity, susceptibility to local optima and overfitting, poor dynamic adaptability, and a severe curse of dimensionality. The no-free-lunch (NFL) theorem states that no single algorithm is superior on all problems. This conditional dependence of algorithm performance on the problem motivates us to construct a hybrid nonlinear greater cane rat algorithm with the sine–cosine algorithm (SCGCRA) for solving benchmark functions and constrained engineering designs. The core purpose is to integrate the global exploration ability of GCRA with the local exploitation ability of SCA and to balance the two adaptively through a nonlinear control strategy. This is intended to improve the optimization performance of the SCGCRA on complex function optimization and practical engineering problems, achieve a dynamic balance between exploration and exploitation, enhance adaptability and robustness, and provide efficient, reliable, and practical solutions for complex optimization problems. For function optimization, the SCGCRA employs mechanism complementarity, dynamic tuning, and information sharing to overcome local optima, accelerate convergence, and enhance solution accuracy. For engineering examples, the SCGCRA shows advantages in handling complex constraints, adapting to dynamic environments, and solving high-dimensional problems. The SCGCRA not only employs coarse global exploration, in which greater cane rats move and forage among scattered shelters within the territory, leave trail marks leading to food sources, and explore potential solutions, but also uses refined local exploitation, in which isolated males concentrate on meticulous foraging in food-rich areas to enhance solution accuracy.

The main contributions of the SCGCRA are summarized as follows: (1) The hybrid nonlinear greater cane rat algorithm with sine–cosine algorithm (SCGCRA) is proposed to resolve the global optimization and constrained engineering applications. (2) The periodic oscillatory fluctuation characteristics of the sine–cosine algorithm and the dynamic regulation and decision-making of nonlinear control strategy improve search efficiency and flexibility, enhance convergence speed and solution accuracy, increase population diversity and quality, avoid premature convergence and search stagnation, remedy the disequilibrium between exploration and exploitation, achieve synergistic complementarity and reduce sensitivity, and realize repeated expansion and contraction. (3) The SCGCRA is compared with numerous advanced algorithms that contain recently published, highly cited, and highly performing algorithms, such as CPO, BKA, EHO, PO, WSA, HLOA, ECO, IAO, AO, HEOA, NRBO, and GCRA. (4) The SCGCRA is tested against twenty-three benchmark functions and six real-world engineering designs by performing simulation experiments and analyzing the results. (5) The evaluation metrics and overall performance of the SCGCRA outperform those of other algorithms. The SCGCRA exhibits substantial superiority and adaptability in achieving a dynamic balance between exploration and exploitation, leveraging a diversity mechanism to create a synergistic effect of complementary strengths, comprehensively enhancing convergence speed, solution accuracy, stability, and robustness.

The remainder of this article is organized as follows: Section 2 reviews the greater cane rat algorithm (GCRA). Section 3 presents the nonlinear greater cane rat algorithm with the sine–cosine algorithm (SCGCRA). Section 4 reports the simulation tests and result analysis on benchmark functions. Section 5 applies the SCGCRA to engineering designs. Section 6 summarizes the conclusions and future research.

2. Greater Cane Rat Algorithm (GCRA)

The GCRA is based on the intelligent foraging behavior and social collaboration mechanism of the greater cane rats (GCRs). It simulates their territory exploration, path tracking, and reproduction strategies to construct an efficient framework for global exploration and local exploitation in complex optimization problems. GCRs are highly nocturnal animals that primarily inhabit swamps, riverbanks, and cultivated land, with sugarcane and grass as their main diet. The GCRA simulates the dispersed foraging behavior of GCRs during the non-mating (dry) season and their aggregated breeding behavior during the mating (rainy) season to balance global exploration (searching unknown areas) against local exploitation (refining known solutions). Figure 1 portrays the natural foraging habitat of GCRs. GCRs live near the water source in the shaded areas at the bottom of the figure. The white regions and paths depict the trails through the vine-like grasses, which mark previously known food sources.

Figure 1. The natural foraging habitat of GCRs.

2.1. Population Initialization

The matrix of a randomly initialized population is calculated as follows:

where denotes the GCRs population, denotes location in dimension, denotes the population scale, and denotes the problem dimension. is calculated as follows:

where and and denotes the upper and lower boundaries.

The dominant male GCR is identified as the fittest individual, which can guide the group towards known food sources or shelters, realign the locations, and avoid blind search. serves as a variable that determines whether it is the rainy season or not, which is used to dynamically switch between exploration and exploitation.

where denotes the latest location and denotes the current location.
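The initialization and the choice of the dominant male can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes uniform sampling between the lower and upper boundaries and a minimization problem, and the names `init_population`, `dominant_male`, and the `sphere` objective are hypothetical.

```python
import random

def init_population(n, d, lower, upper, seed=None):
    """Return n positions, each with d coordinates drawn uniformly in [lower[j], upper[j]]."""
    rng = random.Random(seed)
    return [
        [lower[j] + rng.random() * (upper[j] - lower[j]) for j in range(d)]
        for _ in range(n)
    ]

def dominant_male(population, fitness):
    """Index of the fittest individual, assuming that smaller fitness is better."""
    scores = [fitness(x) for x in population]
    return min(range(len(population)), key=scores.__getitem__)

def sphere(x):
    """Hypothetical test objective used only for this illustration."""
    return sum(v * v for v in x)

pop = init_population(n=6, d=3, lower=[-10.0] * 3, upper=[10.0] * 3, seed=0)
k = dominant_male(pop, sphere)
```

Here `pop[k]` plays the role of the dominant male that guides the remaining individuals.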

2.2. Exploration

GCRs construct hideout or shallow burrow shelters scattered around the territory in swamps, riverbanks, and cultivated land, which achieves dispersed migration for foraging and leaves trail marks through territorial sheltering and route tracking. Figure 2 portrays the exploration action of GCRs while looking for sources. The most suitable location of the dominant male GCR is considered the food source route, while the remaining GCRs follow and adjust their locations to explore different areas, expand the solution regions, avoid search stagnation, and obtain multiple potential solutions. The location is calculated as follows:

where denotes the latest location of GCR, denotes the location of dimension, denotes the GCR’s current location, denotes the dominant male GCR’s location, denotes the fitness of the , denotes the current fitness, denotes the dispersed food sources and shelters, imitates the impact of a diverse food source and intensifies exploitation, imitates a decreasing food source and forces GCRs to explore the latest food sources and shelters, and prompts GCRs to relocate to other abundant food sources available within the breeding areas. , , and are calculated as follows:

where denotes the current iteration and denotes the maximum iteration.
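Because the displayed equations are not reproduced in this text, the following sketch only illustrates the kind of update described above. It assumes a leader-following form in which each rat moves relative to the dominant male, with a coefficient `r` that shrinks linearly from the dominant male's fitness to zero over the iterations. The exact expressions, the symbol names (`r`, `alpha`, `beta`, `mu`), and the per-dimension random factor are assumptions and may differ from the paper's Equations (4) to (8).

```python
import random

def control_coefficients(f_best, t, t_max, mu, rng):
    """Assumed schedule: r shrinks linearly with t; alpha and beta are derived from r."""
    r = f_best - t * (f_best / t_max)
    alpha = 2.0 * r * rng.random() - r   # random value in [-r, r) when r > 0
    beta = 2.0 * r * mu - r
    return r, alpha, beta

def explore_step(x, x_dom, r, rng):
    """Assumed exploration move: x + C * (x_dom - r * x), with C random in [0, 1)."""
    return [xi + rng.random() * (xk - r * xi) for xi, xk in zip(x, x_dom)]

rng = random.Random(1)
r, alpha, beta = control_coefficients(f_best=4.0, t=250, t_max=1000, mu=2, rng=rng)
candidate = explore_step([1.0, -2.0], [0.5, 0.5], r, rng)
```

With these illustrative inputs, `r` is 3 at a quarter of the iteration budget and would reach 0 at the final iteration, so the pull of the current position fades as the run progresses.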

Figure 2. Exploration action of GCRs while looking for sources.

2.3. Exploitation

Breeding male GCRs leave the groups during the rainy season to forage intensively within areas with abundant food sources. Figure 3 portrays the exploitation action of GCRs during mating season. The GCRA uses the random selection of female GCRs to imitate the focused exploitation of high-quality areas through reproductive behavior, strengthen the fine-grained local search, and enhance solution quality. The location is calculated as follows:

where denotes the female GCR’s location and imitates the number of offspring produced by each female GCR.
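A sketch of the exploitation move described above is given below. The equation itself is not reproduced in this text, so the update form `x + C * (x_dom - mu * x_female)`, the range 1 to 4 for the offspring count `mu`, and all function and variable names are assumptions made for illustration.

```python
import random

def exploit_step(x, x_dom, x_female, mu, rng):
    """Assumed form: x + C * (x_dom - mu * x_female), C drawn per dimension from [0, 1)."""
    return [xi + rng.random() * (xk - mu * xm)
            for xi, xk, xm in zip(x, x_dom, x_female)]

rng = random.Random(2)
population = [[0.2, -0.1], [1.5, 2.0], [-1.0, 0.5], [0.8, 0.8]]
dominant = 0                                   # index of the fittest rat (given here)
females = [i for i in range(len(population)) if i != dominant]
m = rng.choice(females)                        # randomly selected female
mu = rng.randint(1, 4)                         # assumed range for the offspring count
candidate = exploit_step(population[1], population[dominant], population[m], mu, rng)
```

The random choice of the female is what keeps this phase from collapsing onto the dominant male alone.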

Figure 3. Exploitation action of GCRs during mating season.

Algorithm 1 portrays the pseudocode of the GCRA.

| Algorithm 1 GCRA |
| --- |
| Step 1. Initialize the GCRs population |
| Step 2. Evaluate the fitness of the GCRs and update the global best solution |
| Select the fittest GCR as the dominant male |
| Update the remaining GCRs based on the dominant male via Equation (3) |
| Step 3. while the maximum iteration has not been reached do |
| for all GCRs |
| Update the control parameters |
| if the exploration condition holds (Exploration) |
| Update GCRs positions via Equation (4) |
| else (Exploitation) |
| Update GCRs positions via Equation (9) |
| end if |
| end for |
| Check whether any solution has left the search interval and repair it |
| Evaluate the fitness of the GCRs at their new locations |
| Update GCRs positions via Equation (5) |
| Update the global best solution and select a new dominant male |
| end while |
| Return the global best solution |
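The control flow of Algorithm 1 can be sketched as a short loop. This is a simplified illustration under stated assumptions, not the published algorithm: the season switch is modeled as a random draw against a hypothetical threshold `rho`, the update formulas are assumed forms, and the conditional update of Equation (5) is replaced by plain greedy acceptance.

```python
import random

def gcra_sketch(fitness, lower, upper, n=20, t_max=200, rho=0.5, seed=0):
    """Simplified GCRA-style loop (minimization); returns best position, value, history."""
    rng = random.Random(seed)

    def clip(x):
        # Repair step: push coordinates that left the search interval back onto the bounds.
        return [min(max(v, lo), hi) for v, lo, hi in zip(x, lower, upper)]

    pop = [[lo + rng.random() * (hi - lo) for lo, hi in zip(lower, upper)]
           for _ in range(n)]
    fit = [fitness(x) for x in pop]
    k = min(range(n), key=fit.__getitem__)      # dominant male = fittest individual
    history = [fit[k]]

    for t in range(1, t_max + 1):
        r = fit[k] - t * (fit[k] / t_max)       # assumed shrinking coefficient
        for i in range(n):
            if i == k:
                continue                        # the dominant male keeps its position
            if rng.random() < rho:              # exploration branch (assumed switch)
                cand = [xi + rng.random() * (xk - r * xi)
                        for xi, xk in zip(pop[i], pop[k])]
            else:                               # exploitation branch
                m = rng.randrange(n)            # randomly chosen "female"
                mu = rng.randint(1, 4)          # assumed offspring count
                cand = [xi + rng.random() * (xk - mu * xm)
                        for xi, xk, xm in zip(pop[i], pop[k], pop[m])]
            cand = clip(cand)
            f_cand = fitness(cand)
            if f_cand < fit[i]:                 # greedy acceptance
                pop[i], fit[i] = cand, f_cand
        k = min(range(n), key=fit.__getitem__)  # re-select the dominant male
        history.append(fit[k])
    return pop[k], fit[k], history

best_x, best_f, history = gcra_sketch(lambda x: sum(v * v for v in x),
                                      lower=[-5.0] * 4, upper=[5.0] * 4)
```

Because a candidate only replaces an individual when it improves that individual's fitness, the recorded best value can never get worse from one iteration to the next.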

3. Nonlinear Greater Cane Rat Algorithm with Sine–Cosine Algorithm (SCGCRA)

The SCGCRA integrates the intelligent discerning and foraging behavior of GCRA, the mathematical periodic oscillatory fluctuation characteristic of the sine–cosine algorithm, and the adaptive adjustment mechanism in remedying the disequilibrium between global exploration and local exploitation, enhancing convergence efficiency and solution accuracy, highlighting robustness and applicability, avoiding premature convergence and dimensional disaster, preventing redundant search, and achieving superior solution quality.

3.1. Nonlinear GCRA

In the nonlinear GCRA, the rate at which the control parameter decreases over the iterations is adjusted to suit the algorithm structure. The nonlinear control strategy offers strong disturbance rejection and nonlinear processing characteristics, which help to ensure solution accuracy and stability, improve overall search efficiency and local fine-tuning, strengthen adaptability and operability, and facilitate dynamic regulation and solution quality [25]. The locations are calculated as follows:

where denotes the current iteration and denotes the maximum iteration.
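To illustrate what changing the downward slope of a control parameter means, the sketch below compares a linear decay with one possible nonlinear decay. The nonlinear form used here (a power of the normalized iteration) is a hypothetical example chosen for illustration; it is not the schedule defined by the paper's equations, which are not reproduced in this text.

```python
def linear_decay(t, t_max, start=2.0):
    """Straight-line decrease from start (t = 0) to 0 (t = t_max)."""
    return start * (1.0 - t / t_max)

def nonlinear_decay(t, t_max, start=2.0, power=2.0):
    """Hypothetical schedule: stays high early and drops faster late when power > 1."""
    return start * (1.0 - (t / t_max) ** power)

# Same end points, different slope in between.
midpoint_linear = linear_decay(500, 1000)        # 1.0
midpoint_nonlinear = nonlinear_decay(500, 1000)  # 1.5
```

Both schedules start at 2 and end at 0, but the nonlinear one keeps the parameter larger during the first half of the run, which favors exploration early and exploitation late.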

3.2. Sine–Cosine Algorithm (SCA)

The SCA is derived from the periodic fluctuation and bounded range of the sine and cosine functions. It imitates the oscillatory behavior of trigonometric functions to guide the search agents through a multi-dimensional solution space towards the global optimal solution [26]. The SCA offers notable advantages, including a concise structure with few parameters, ease of balancing and implementation, structural flexibility and stability, efficient convergence and good solution quality, strong robustness and versatility, adaptive switching between exploration and exploitation, low computational overhead, and high population diversity. The location is calculated as follows:

where denotes the current location, denotes the latest location, denotes the fittest location, , , , and ∣∣ denotes the absolute value.

The is calculated as follows:

where .
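For orientation, the sketch below implements the SCA position update in the form commonly given in the literature: each coordinate moves by an amplitude `r1` times a sine or cosine term, scaled by the distance to the best position, with `r1` decreasing linearly from `a` to 0. The symbol names (`r1` to `r4`, `a`), their sampling ranges, and the default `a = 2` follow that common formulation and are assumptions with respect to this text, whose equations are not reproduced.

```python
import math
import random

def sca_step(x, best, t, t_max, a=2.0, rng=random):
    """One SCA update of position x towards/around the best position found so far."""
    r1 = a * (1.0 - t / t_max)                  # amplitude, linear from a down to 0
    new = []
    for xi, pi in zip(x, best):
        r2 = rng.uniform(0.0, 2.0 * math.pi)    # phase of the oscillation
        r3 = rng.uniform(0.0, 2.0)              # random weight on the best position
        r4 = rng.random()                       # switch between sine and cosine
        wave = math.sin(r2) if r4 < 0.5 else math.cos(r2)
        new.append(xi + r1 * wave * abs(r3 * pi - xi))
    return new

moved = sca_step([1.0, -3.0], [0.0, 0.0], t=0, t_max=100, rng=random.Random(0))
frozen = sca_step([1.0, -3.0], [0.0, 0.0], t=100, t_max=100, rng=random.Random(0))
```

At the last iteration the amplitude is zero, so `frozen` equals the input position; this is the small late-stage step size that the hybrid design is meant to compensate for.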

3.3. SCGCRA

The SCGCRA utilizes the group migration mechanism led by the dominant male GCRs and the randomly dispersed food source simulation mechanism to provide clear global search directions, avoid direction dispersion and redundant detection caused by SCA’s mathematical periodic oscillatory fluctuation characteristics, ensure complete coverage of the solution space, dynamically expand the search scope, enhance population diversity, and avoid premature convergence. The SCGCRA utilizes the local aggregation mechanism of the female GCRs and narrow amplitude oscillations of the SCA to exploit a small range of high-quality solution areas, efficiently locate the optimal fitness scope, achieve weak fine adjustment, remedy local roughness, reduce ineffective iterations, accelerate the convergence speed, and enhance solution accuracy. The nonlinear control strategy can dynamically adjust the dispersion to avoid search stagnation and improve robustness and usability. The SCGCRA utilizes global guidance, local oscillation, and dynamic control to achieve a fast convergence speed, high solution accuracy, and strong anti-interference and adaptability.

In exploration of the SCGCRA, the location is calculated as follows:

In exploitation of the SCGCRA, the location is calculated as follows:

where denotes the location of dimension, denotes the latest location of dimension, denotes the location of GCR, denotes the latest location of GCR, denotes the dominant male GCR’s location, , , , and decreases linearly from 2 to 0.

The SCGCRA combines three existing techniques: GCRA, SCA, and a nonlinear control strategy. The motivation for this specific combination is summarized as follows:

(1) Core problem-driven: limitations of a single algorithm and the necessity of a hybrid design. The GCRA simulates the foraging and escape behavior of GCRs through food source attraction and breeding-season grouping, which provides global detection and an extensive search of the solution space. Because it has no dynamic adjustment mechanism, the GCRA lacks strong randomness and dispersion and relies on individual experience accumulation and mathematically driven, refined exploitation strategies, which limits its solution accuracy. The SCA is based on the periodic oscillation of the sine and cosine functions, which allows it to perform fine local exploitation systematically near the current optimal solution. However, its amplitude coefficient is usually attenuated linearly, so the oscillation amplitude in later iterations is too small to break out of a local extremum. By combining the biological behavior of GCRA with the mathematical periodicity of SCA, the SCGCRA balances the breadth of global exploration against the accuracy of local exploitation and is robust and adaptable on high-dimensional, multimodal, and dynamically constrained problems.

(2) Complementarity: a collaborative mechanism between biological behavior and mathematical models. The GCRA employs an adaptive grouping strategy and a constraint attraction mechanism to balance global exploration and local exploitation dynamically, guiding the population naturally towards the feasible domain. The SCA uses periodic oscillation coverage and dynamic amplitude attenuation to switch directions frequently, which enhances its ability to escape local optima but requires nonlinear control optimization. The SCGCRA possesses strong global exploration and local exploitation capabilities, enabling hybrid collaboration, identifying potential high-quality areas, and enhancing solution accuracy.

(3) Nonlinear control strategy: dynamic regulation and performance enhancement. The nonlinear control strategy adjusts the SCGCRA parameters in real time through feedback mechanisms, matching parameter adjustment to solution quality. It avoids the premature convergence or excessive oscillation caused by fixed parameters, ensuring the stability and robustness of the search process.

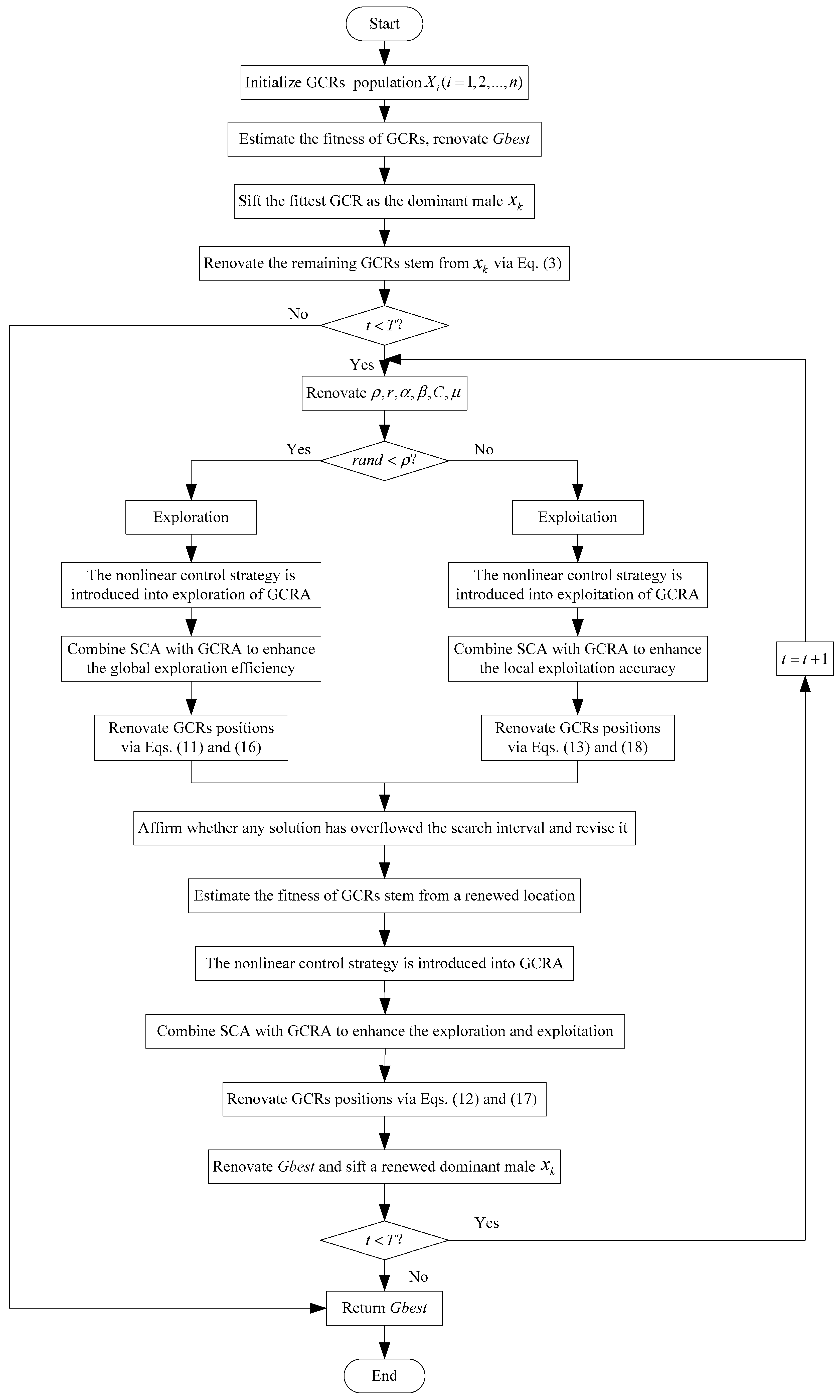

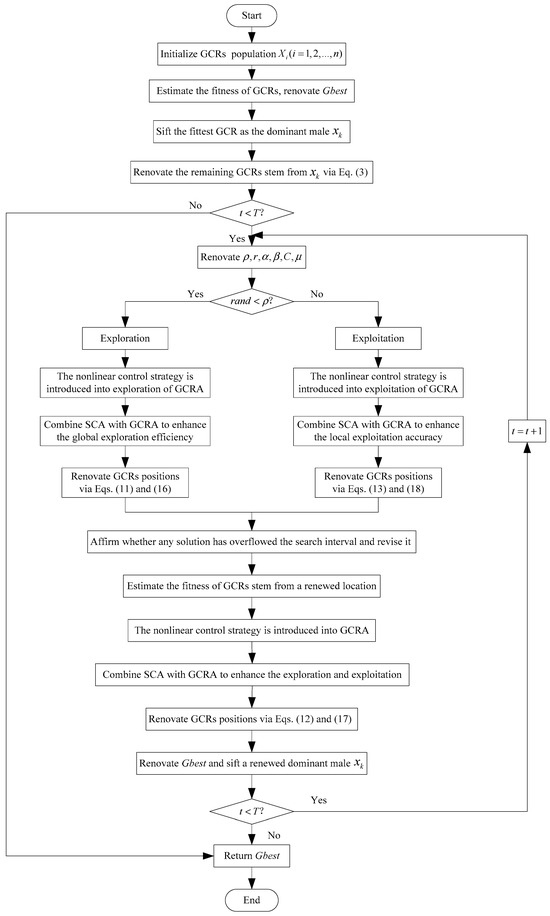

Algorithm 2 portrays the pseudocode of the SCGCRA. Figure 4 portrays the flowchart of SCGCRA.

Figure 4. Flowchart of SCGCRA.

| Algorithm 2 SCGCRA |
| --- |
| Step 1. Initialize the GCRs population |
| Step 2. Evaluate the fitness of the GCRs and update the global best solution |
| Select the fittest GCR as the dominant male |
| Update the remaining GCRs based on the dominant male via Equation (3) |
| Step 3. while the maximum iteration has not been reached do |
| for all GCRs |
| Update the control parameters |
| if the exploration condition holds (Exploration) |
| The nonlinear control strategy is introduced into the exploration of GCRA |
| Combine SCA with GCRA to enhance the global exploration efficiency |
| Update GCRs positions via Equations (11) and (16) |
| else (Exploitation) |
| The nonlinear control strategy is introduced into the exploitation of GCRA |
| Combine SCA with GCRA to enhance the local exploitation accuracy |
| Update GCRs positions via Equations (13) and (18) |
| end if |
| end for |
| Check whether any solution has left the search interval and repair it |
| Evaluate the fitness of the GCRs at their new locations |
| The nonlinear control strategy is introduced into GCRA |
| Combine SCA with GCRA to enhance the exploration and exploitation |
| Update GCRs positions via Equations (12) and (17) |
| Update the global best solution and select a new dominant male |
| end while |
| Return the global best solution |

4. Simulation Test and Result Analysis for Tackling Benchmark Functions

4.1. Experimental Setup

The experiments were run on a 64-bit Windows 11 machine with a 12th Gen Intel(R) Core(TM) i9-12900HX 2.30 GHz CPU, 16 GB RAM, a discrete graphics card with 16 GB of memory, and 4 TB of storage. All compared algorithms were implemented in MATLAB R2022b.

4.2. Benchmark Functions

The SCGCRA employed unimodal functions (), multimodal functions (), and fixed-dimension multimodal functions () to validate the reliability and practicality. Table 1 outlines the benchmark functions.
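To make the distinction between unimodal and multimodal test functions concrete, the sketch below defines two widely used examples, the sphere function (one global minimum, no local minima) and the Rastrigin function (a regular grid of local minima). They are given only as representatives of the two classes; whether they correspond to specific entries of Table 1 is an assumption.

```python
import math

def sphere(x):
    """Unimodal: a single minimum of 0 at the origin."""
    return sum(v * v for v in x)

def rastrigin(x):
    """Multimodal: global minimum of 0 at the origin, local minima near integer points."""
    return 10.0 * len(x) + sum(v * v - 10.0 * math.cos(2.0 * math.pi * v) for v in x)

at_origin = rastrigin([0.0, 0.0])      # global minimum
near_local = rastrigin([1.0, 1.0])     # close to a local minimum, clearly worse
```

On the sphere function any descent direction leads to the optimum, whereas on the Rastrigin function a search that settles near the point (1, 1) is trapped at a value of about 2 unless it can jump across the surrounding ridges.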

Table 1. Benchmark functions.

4.3. Parameter Settings

To validate its practicality and applicability, the SCGCRA is compared with the CPO, BKA, EHO, PO, WSA, HLOA, ECO, IAO, AO, HEOA, NRBO, and GCRA. The parameter selection and sensitivity considerations are summarized as follows:

(1) Inheritance principle: The control parameters of the SCGCRA are taken directly from the original parameters of GCRA and SCA and follow the widely validated recommended or default values in the original papers. These parameters have been studied extensively and shown to have broad applicability, reliable representativeness, and strong robustness, so the need for repeated experimental verification is relatively low. Modifying these highly standardized parameters would undermine the mathematical foundation and convergence guarantee of the SCGCRA.

(2) Cybernetics principle: The nonlinear control strategy transitions smoothly and adjusts its effect dynamically according to state variables, such as the iteration count and population diversity, using adaptive compensation mechanisms that are insensitive to the initial absolute values. It can automatically compensate for performance losses caused by parameter deviations, keep the algorithm robust to parameter changes, theoretically guarantee the algorithm’s stability, and guide the search in a favorable direction.

(3) Normalization principle: The few critical parameters that must be set are made dimensionless (for example, as values between 0 and 1 or as functions of the iteration count), which greatly reduces the coupling between the selected parameters and the specific problem scale, so that one set of parameters applies to a class of problems.

(4) Principle of structural superiority: The SCGCRA realizes the complementary advantages of the GCRA’s directional exploitation ability and SCA’s global exploration ability. The dynamic scheduling of the nonlinear control strategy makes the SCGCRA insensitive to subtle changes in parameters. The performance improvement comes primarily from structural innovation rather than from fine-tuning of parameters, and the algorithm structure itself ensures good performance within a reasonable range of parameters.

CPO: Invariable values , , .

BKA: Stochastic values , , Cauchy mutation , invariable values , , .

EHO: Stochastic values , , .

PO: Invariable values , , , , stochastic values , .

WSA: Invariable values , .

HLOA: Hue circle angle , binary value , invariable values , , , , , stochastic values , , .

ECO: Stochastic values , , invariable values , , .

IAO: Stochastic values , , , , , , , , , .

AO: Stochastic values , , .

HEOA: Stochastic values , , invariable values , .

NRBO: Stochastic values , , , , , , , , , invariable value , binary value .

GCRA: Stochastic values , , , invariable value .

SCGCRA: Stochastic values , , , , , , invariable values , .

4.4. Simulation Test and Result Analysis

To evaluate the convergence characteristics and solution accuracy of each algorithm objectively and comprehensively, the population size, maximum number of iterations, and number of independent runs were kept identical for all algorithms, at 50, 1000, and 30, respectively. Table 2 outlines the comparative results on the benchmark functions.
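Results from repeated independent runs are typically condensed into the best, worst, mean, and standard deviation of the final objective values. The sketch below shows one way to compute these four statistics for a minimization problem; the function name `summarize_runs` is hypothetical, and the use of the sample standard deviation (divisor n − 1) is an assumption, since the text does not state which definition was used.

```python
import statistics

def summarize_runs(final_values):
    """Summarize the final objective values of independent runs (minimization)."""
    return {
        "best": min(final_values),                 # smallest value over all runs
        "worst": max(final_values),                # largest value over all runs
        "mean": statistics.fmean(final_values),    # arithmetic mean
        "std": statistics.stdev(final_values),     # sample standard deviation (n - 1)
    }

summary = summarize_runs([1.0, 2.0, 3.0])
```

A small gap between `best` and `worst`, together with a small `std`, indicates that the outcome depends little on the random initial population.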

Table 2. Contrastive results of benchmark functions.

The SCGCRA was manipulated to resolve the benchmark functions; the core objective was to break through the limitations of the original GCRA and the bottlenecks of local optimum, enhance the adaptability and reliability of strongly constrained functions, promote the detection efficiency and solution quality, strengthen stability and robustness, diminish the parameter sensitivity and result fluctuations, and identify the global optimum or the high-quality approximate solution. The optimal value (Best), worst value (Worst), mean value (Mean), and standard deviation (Std) systematically validate the core characteristics and reflect the reliability and applicability from different dimensions. The optimal value is the objective fitness value corresponding to the fittest solution in multiple stand-alone runs, which verifies the global detectability and convergence accuracy. When the optimal value approaches the theoretical global optimum, the algorithm has enhanced the potential to excavate high-quality solutions and strengthened the effectiveness to break through the local optimum. The rapid decrease and stabilization of the optimal value during the iteration process indicate that the algorithm has a fast convergence speed and high solution accuracy. The worst value is the objective fitness value corresponding to the worst solution in multiple stand-alone runs, which estimates the robustness and adaptability to extreme scenarios. The smaller the disparity between the worst value and the optimal value, the lower the sensitivity of the algorithm to initial population, parameter settings, complex solution domain, or randomness, and the stronger the robustness. The modified strategy significantly surpasses the original algorithm in terms of value. It effectively reduces the number of extreme poor solutions during the search process, thereby avoiding infeasible solutions and extreme local convergence. 
The mean value is the arithmetic mean of the objective fitness values of all solutions in multiple stand-alone runs, which reveals the overall average performance, search efficiency, and general applicability. It assesses the stability of the algorithm and the uniformity of the distribution of the disaggregation. The extent to which the mean value approaches the theoretical optimal solution evaluates the overall search efficiency of the algorithm, designates the correctness of the overall search direction, and avoids search stagnation due to local optima. The standard deviation is the degree of the objective fitness value relative to the mean value, which measures stability and distribution uniformity of the algorithm. The smaller standard deviation indicates that the algorithm exhibits less fluctuation in the convergence results, higher stability, and stronger repeatability. The standard deviation is extended to the degree of discrepancy in the objective space, which is used to evaluate the impact of the modified approach on solution diversity. For unimodal functions, the solution space comprises a unique global extremum point without local extremum points. The monotonicity can swiftly narrow the search scope, diminish ineffective exploration, and clarify the direction of gradient descent. The core requirement was to rapidly and precisely approximate the optimal solution, which validates the algorithm’s local mining accuracy, convergence efficiency, and adaptability of simple solution spaces. For , , , and , the optimal values, worst values, mean values, and standard deviations of the IAO, GCRA, and SCGCRA remained consistent and exhibited optimal extreme solutions. 
The quantitative metrics, detection efficiency, and solution accuracy of the SCGCRA were superior to those of the CPO, BKA, EHO, PO, WSA, HLOA, ECO, AO, HEOA, and NRBO. The SCGCRA utilized the aggregation effect of dominant male GCRs and the decentralized search of the population to cover the global exploration scope, locate potential optimal areas, strengthen local exploitation, and enhance solution accuracy. For three further unimodal functions, the SCGCRA not only achieved a small improvement in quantitative metrics, detection efficiency, and solution accuracy but also significantly outperformed the other algorithms. The SCGCRA utilized the periodic fluctuations of the sine and cosine functions to furnish multi-directional perturbation paths, achieve high-precision local convergence, avert search oscillation, narrow the search space, and preserve population diversity. For multimodal functions, the solution space comprised multiple local extremum points, some of which were close in solution quality to the global extremum point. The core requirement was to avoid search stagnation, escape local traps, and identify the global optimum, which validates the algorithm’s global search capability and its ability to maintain and activate population diversity. For two of the multimodal functions, the optimal values, worst values, mean values, and standard deviations of the CPO, BKA, PO, WSA, HLOA, ECO, IAO, NRBO, GCRA, and SCGCRA remained consistent and attained the optimal extreme solutions, which were superior to those of the EHO and AO. The SCGCRA integrated the nonlinear control strategy of GCRA and the periodic oscillatory fluctuation of SCA to guide dominant individuals, adjust dynamic step sizes, and enhance adaptability and complementarity. For three further multimodal functions, the quantitative metrics, detection efficiency, and solution accuracy of the SCGCRA were better than those of the CPO, BKA, EHO, PO, WSA, HLOA, ECO, IAO, AO, HEOA, NRBO, and GCRA. 
The SCGCRA prioritized strong reliability and practicality in scanning the solution scope, facilitating extensive global exploration, enhancing group collaboration and guidance, discovering precise optimal solutions, and avoiding premature convergence. For fixed-dimension multimodal , the solution space retained multiple local extremum points of multimodal functions and a unique global extremum point of unimodal functions, adopted fixed-dimensionality to control complexity, and eliminated interference of dimensionality changes. The core requirement was to resist performance degradation caused by increased dimensionality, which validates the algorithm’s global stability, robustness, and consistency, the decoupling and search direction focusing on high-dimensional variables, adaptability to the curse of dimensionality, and equilibrium ability between detection and exploitation. For , , , , , , , and , the optimal values, worst values, and mean values of the SCGCRA remained consistent and exhibited optimal extreme solutions; the quantitative metrics, detection efficiency, and solution accuracy of the SCGCRA were superior to those of CPO, BKA, EHO, PO, WSA, HLOA, ECO, IAO, AO, HEOA, NRBO, and GCRA. The SCGCRA exhibited strong stability and robustness in effectively guiding and regulating the initial population distribution, reducing dependence on initial conditions, and steadily searching towards the optimal solution, and diminishing the algorithm’s variability. For , , and , the SCGCRA exhibited strong superiority and operability in terms of the quantitative metrics, detection efficiency, and solution accuracy. 
The SCGCRA utilized the random fluctuation and periodicity of SCA, the group collaboration and guidance mechanism of GCRA, and dynamic adjustment and search mechanism of the nonlinear control strategy to explore the solution scope extensively and intensively identify potential high-quality regions; meticulously exploit the extremum solutions; effectively avoid blind large-scale search; strictly avert search stagnation and slow convergence; and preferably balance exploration and exploitation to approximate the optimal solution.
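
The four summary metrics described above can be computed from the final fitness values of repeated independent runs. The following is a minimal sketch, not the authors' MATLAB code: the uniform random search is only a placeholder optimizer, and the sphere function, the 30 runs, the evaluation budget, and the use of the sample standard deviation are assumptions made for illustration.

```python
import random
import statistics


def sphere(x):
    """Unimodal sphere function; global minimum 0 at the origin."""
    return sum(v * v for v in x)


def random_search(objective, dim, lower, upper, evaluations, rng):
    """Placeholder optimizer (assumption): best fitness found by uniform sampling."""
    best = float("inf")
    for _ in range(evaluations):
        candidate = [rng.uniform(lower, upper) for _ in range(dim)]
        best = min(best, objective(candidate))
    return best


def summarize_runs(final_fitness):
    """Best, Worst, Mean, and Std of final fitness values for a minimization problem."""
    return {
        "Best": min(final_fitness),
        "Worst": max(final_fitness),
        "Mean": statistics.mean(final_fitness),
        "Std": statistics.stdev(final_fitness),  # sample standard deviation (assumption)
    }


# One final fitness value per independent run, each run with its own seed.
runs = [
    random_search(sphere, 5, -100.0, 100.0, 2000, random.Random(seed))
    for seed in range(30)
]
summary = summarize_runs(runs)
for name, value in summary.items():
    print(f"{name}: {value:.4e}")
```

A small gap between Best and Worst together with a small Std corresponds to the robustness reading given in the text; any optimizer that returns one final fitness value per run can be substituted for the placeholder.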

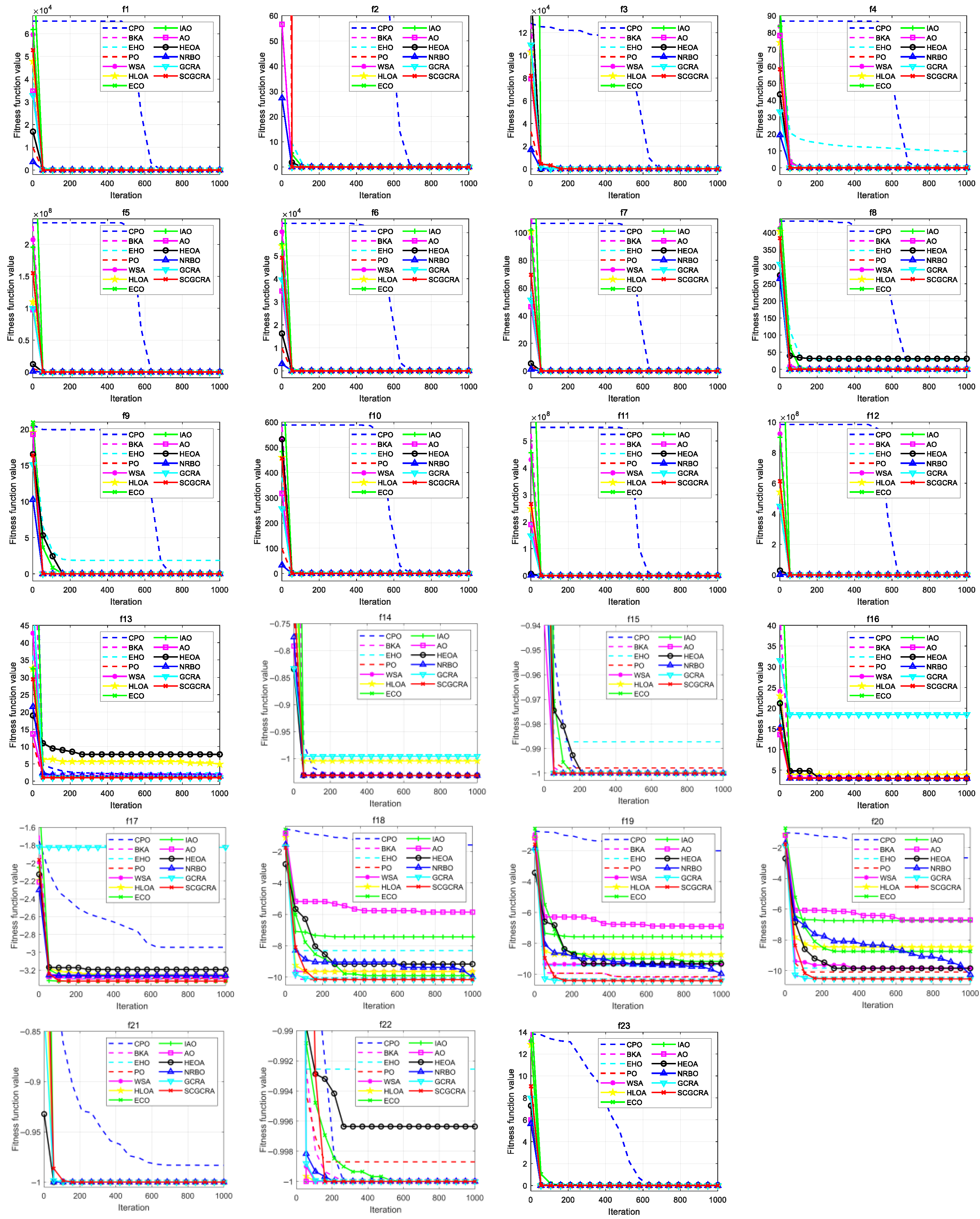

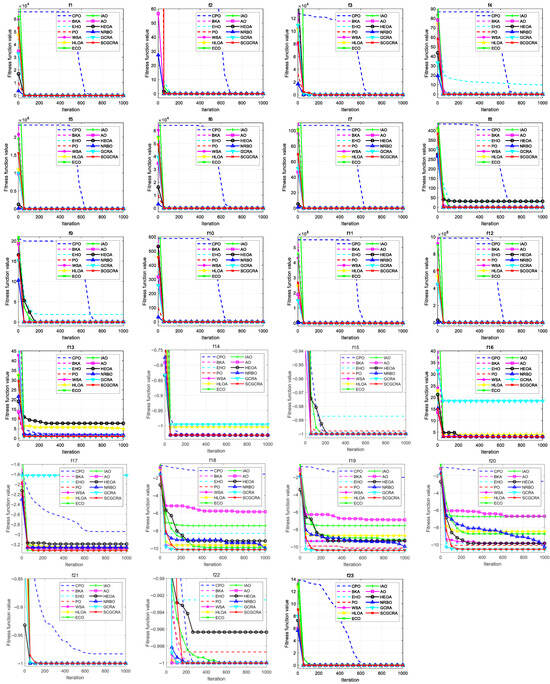

4.5. Convergence Analysis

Figure 5 portrays the convergence curves of the SCGCRA and comparative algorithms on the benchmark functions. Convergence curves show whether an algorithm approximates the optimal solution efficiently, and their steep descents, plateaus, and oscillations give a measure of solution accuracy. Convergence efficiency can also be quantified by the number of iterations required to reach a specific stable value. The optimal value and mean value jointly reveal the search efficiency and convergence accuracy of the algorithm. If the disparity between the optimal value and the mean value is large, it indicates that the algorithm has prematurely fallen into a local optimum. If the discrepancy between the optimal value and the mean value is slight and continues to decrease, it indicates that the algorithm maintains a strong equilibrium between global exploration and local exploitation. For unimodal functions, the quantitative metrics, detection efficiency, and solution accuracy of the SCGCRA were superior to those of the CPO, BKA, EHO, PO, WSA, HLOA, ECO, IAO, AO, HEOA, NRBO, and GCRA. The SCGCRA utilized the territorial foraging behavior and dominant individual guidance mechanism of the GCRA to quickly locate potential optimal areas, avoid aimless search, cover the solution space, remedy the disequilibrium between exploration and exploitation, and enhance robustness and reliability. For multimodal functions, the SCGCRA exhibited clear advantages over the comparative algorithms in terms of quantitative metrics, detection efficiency, and solution accuracy. The SCGCRA utilized the periodic oscillatory fluctuation of the SCA to enhance local exploitation, improve solution accuracy, avoid the curse of dimensionality, achieve synergistic complementarity, and reduce sensitivity, thereby increasing population diversity and flexibility. 
For fixed-dimensional multimodal functions, the optimal values, mean values, detection efficiency, and solution accuracy of the SCGCRA outperformed those of the comparative algorithms. The SCGCRA employed a nonlinear control strategy to exhibit strong anti-disturbance ability and stability, thereby enhancing adaptability, operability, and practicality; facilitating repeated expansion and contraction; and improving dynamic regulation and solution quality. To summarize, the SCGCRA integrated the periodic oscillatory fluctuation characteristics of the SCA and the dynamic regulation and decision-making of a nonlinear control strategy to provide clear global search directions, avoid direction dispersion and redundant detection, ensure complete coverage of the solution space, enhance population diversity, and achieve good detection efficiency and solution accuracy.
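
To illustrate how a convergence curve is recorded and what the periodic sine and cosine fluctuation looks like in code, the sketch below implements the standard sine cosine algorithm of Mirjalili [26] on its own. It is not the SCGCRA: the GCRA operators and the nonlinear control strategy are omitted, and the sphere function, population size, iteration count, and amplitude constant `a = 2` are assumptions chosen for illustration.

```python
import math
import random


def sphere(x):
    """Unimodal sphere function; global minimum 0 at the origin."""
    return sum(v * v for v in x)


def sca_minimize(objective, dim, lower, upper, pop_size=20, max_iter=200, a=2.0, seed=0):
    """Standard SCA; returns the best position, its fitness, and the best-so-far curve."""
    rng = random.Random(seed)
    population = [[rng.uniform(lower, upper) for _ in range(dim)] for _ in range(pop_size)]
    best_pos = min(population, key=objective)[:]
    best_fit = objective(best_pos)
    curve = []
    for t in range(max_iter):
        r1 = a - t * a / max_iter  # amplitude shrinks linearly from a towards 0
        for agent in population:
            for j in range(dim):
                r2 = rng.uniform(0.0, 2.0 * math.pi)  # phase of the oscillation
                r3 = rng.uniform(0.0, 2.0)            # random weight on the destination
                wave = math.sin(r2) if rng.random() < 0.5 else math.cos(r2)
                step = r1 * wave * abs(r3 * best_pos[j] - agent[j])
                agent[j] = min(upper, max(lower, agent[j] + step))  # clamp to the bounds
        for agent in population:
            fitness = objective(agent)
            if fitness < best_fit:
                best_fit, best_pos = fitness, agent[:]
        curve.append(best_fit)  # one point of the convergence curve per iteration
    return best_pos, best_fit, curve


best_pos, best_fit, curve = sca_minimize(sphere, 5, -100.0, 100.0)
print(f"first iteration: {curve[0]:.4e}, last iteration: {curve[-1]:.4e}")
```

Because the curve stores the best fitness found so far, it is non-increasing by construction; steep segments correspond to rapid improvement and flat segments to the plateaus discussed above.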

Figure 5.

Convergence curves of the SCGCRA and comparative algorithms for addressing the benchmark functions.

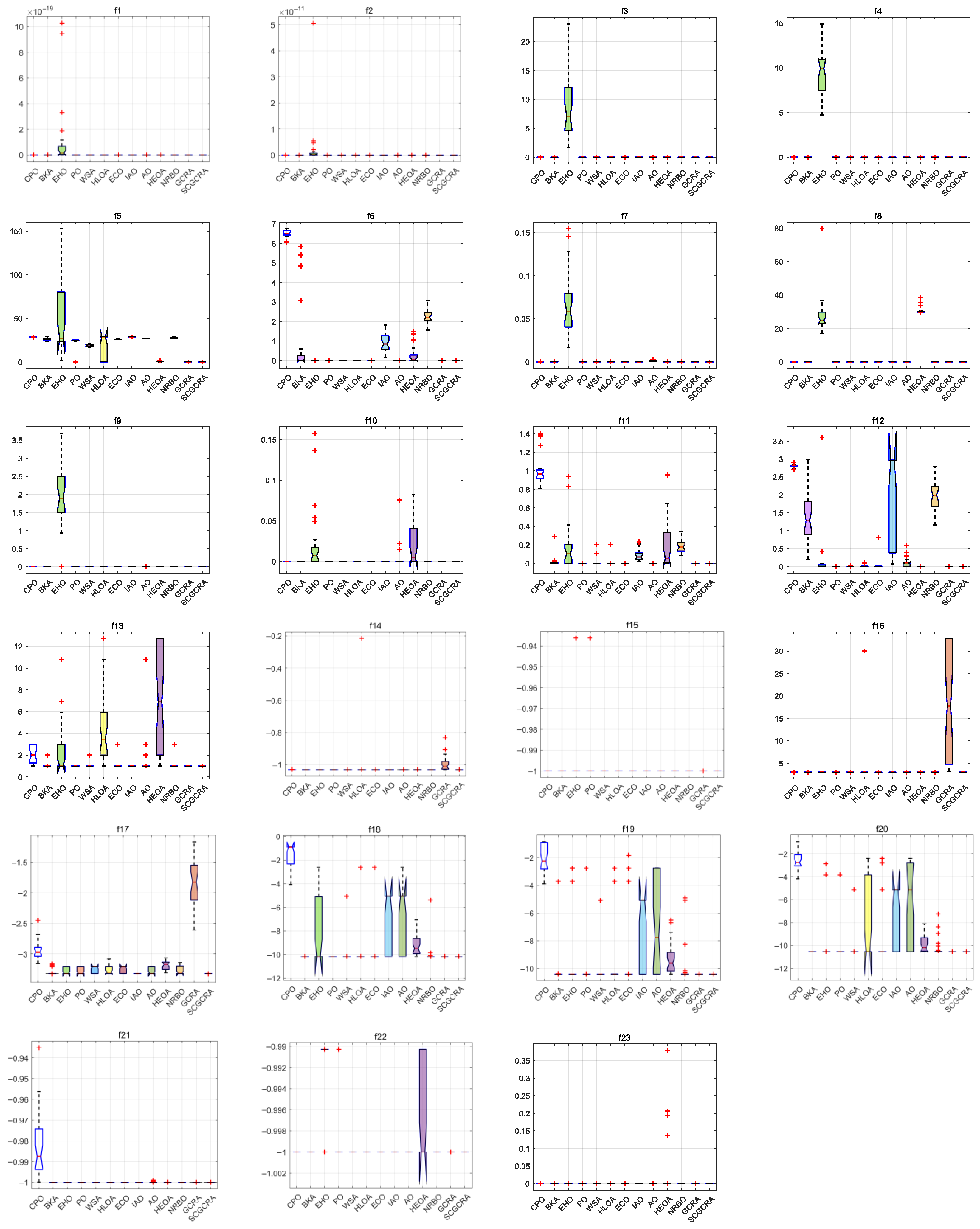

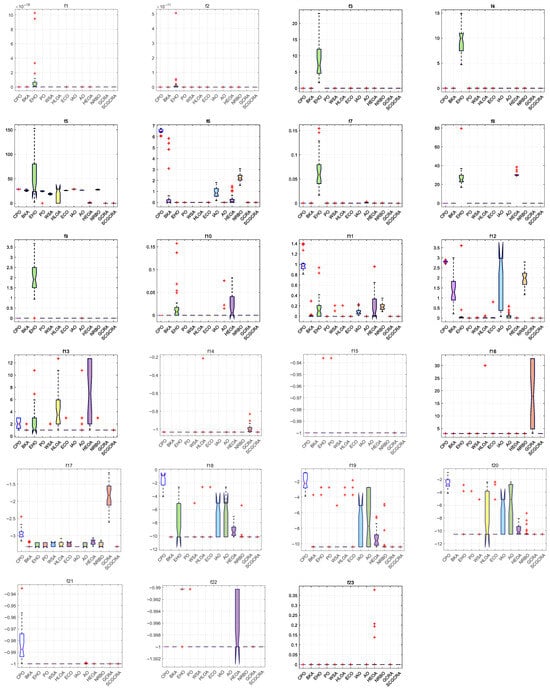

4.6. Boxplot Analysis

Figure 6 portrays boxplots of the SCGCRA and comparative algorithms on the benchmark functions. Boxplots quantify the stability and reliability of solutions, reflect changes in solution diversity, and describe the dispersion of individual solutions within the population. The standard deviation intuitively demonstrates the sensitivity of the algorithm to the initial population and to parameter perturbations. A continuous decrease in standard deviation indicates that the algorithm converges well and drives the population towards the global extremum solution. If the standard deviation changes abruptly or oscillates, the algorithm may have fallen into a local optimum or may require parameter adjustment. The standard deviation and worst value jointly reveal the stability and robustness of the algorithm. If the standard deviation is small and the worst value closely approaches the optimal value, the algorithm is insensitive to randomness and adapts well to initial conditions. The standard deviation is also useful for assessing the uniformity of the distribution of the solution set (such as the dispersity of solutions on the Pareto front in multi-objective optimization) and for verifying the impact of improvement strategies on solution diversity. For unimodal functions, the standard deviation and dispersion of the SCGCRA were superior to those of the comparative algorithms. The SCGCRA exhibited strong adaptability and operability in overcoming the drawbacks of high parameter sensitivity, insufficient solution accuracy, high computational complexity, susceptibility to local optima and overfitting, poor dynamic adaptability, and the severe curse of dimensionality. For multimodal functions, the SCGCRA exhibited clear advantages over the comparative algorithms in terms of standard deviation and dispersion. 
The SCGCRA utilized the intelligent foraging behavior and social collaboration mechanism of GCRs to simulate territory exploration, path tracking, and reproduction strategies. The SCGCRA employed a nonlinear control strategy to ensure solution accuracy and stability, facilitate dynamic regulation, and improve solution quality. For fixed-dimensional multimodal , the multitudinous standard deviation and dispersion of the SCGCRA outperformed the comparative algorithms. The SCGCRA exhibited strong reliability and practicality, facilitating mating during the rainy season and non-mating during the dry season. This enabled the expansion of the search range, updated population positions, and enhanced population diversity, ultimately identifying the globally optimal precise solution. To summarize, the SCGCRA not only exhibited substantial superiority and adaptability to determine the superior standard deviation and dispersion and enhance the stability and robustness, but also exhibited strong practicality and reliability to balance the global coarse exploration and local refined exploitation, overlap the global exploration scope, locate potential optimal areas, strengthen local exploitation, and enhance solution accuracy.
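
For reference, the quantities that a boxplot displays can be computed directly from the final fitness values of the independent runs. The sketch below is an illustration under stated assumptions: the quartiles use the inclusive method of Python's `statistics.quantiles`, the whiskers follow the common 1.5 × IQR convention, and the sample values are invented for the example rather than taken from Figure 6.

```python
import statistics


def boxplot_stats(values):
    """Quartiles, interquartile range, whisker ends, and outliers of a sample."""
    data = sorted(values)
    q1, median, q3 = statistics.quantiles(data, n=4, method="inclusive")
    iqr = q3 - q1
    low_fence = q1 - 1.5 * iqr    # points below this are drawn as outliers
    high_fence = q3 + 1.5 * iqr   # points above this are drawn as outliers
    inside = [v for v in data if low_fence <= v <= high_fence]
    return {
        "q1": q1,
        "median": median,
        "q3": q3,
        "iqr": iqr,
        "whisker_low": inside[0],
        "whisker_high": inside[-1],
        "outliers": [v for v in data if v < low_fence or v > high_fence],
    }


# Hypothetical final fitness values of nine runs, one of which stagnated.
stats = boxplot_stats([1, 2, 3, 4, 5, 6, 7, 8, 100])
print(stats)
```

A short box with short whiskers and no outliers corresponds to the low dispersion attributed to a stable algorithm, whereas an isolated outlier such as the value 100 in the example marks a run that ended far from the others.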

Figure 6.

Boxplots of the SCGCRA and comparative algorithms for addressing the benchmark functions.

4.7. Wilcoxon Rank-Sum Test

The Wilcoxon rank-sum test is a non-parametric statistical test for two independent samples, which quantifies whether the overall discrepancy between the SCGCRA and another algorithm is statistically significant without relying on assumptions about the data distribution [27]. A p-value below the chosen significance level designates a significant difference, a p-value at or above that level designates a non-significant difference, and N/A designates “not applicable”. Table 3 presents the p-values of the Wilcoxon rank-sum test on the benchmark functions. The results indicate that the performance of the SCGCRA reflects genuine, reproducible differences rather than chance outcomes.

Table 3.

Contrastive results of the p-value Wilcoxon rank-sum test on the benchmark functions.
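
The test statistic behind such a table can be sketched as follows. This is a minimal illustration using the normal approximation with a tie correction and no continuity correction; statistical packages may instead use an exact distribution for small samples, so their p-values can differ slightly. Returning `None` when every observation is identical is an assumption about how an "N/A" entry could arise, and the sample data are invented.

```python
import math


def average_ranks(values):
    """Ranks starting at 1; tied values share the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def rank_sum_test(sample_a, sample_b):
    """Return (W, two-sided p) for two independent samples; p is None if all values tie."""
    pooled = list(sample_a) + list(sample_b)
    n_a, n_b, n = len(sample_a), len(sample_b), len(pooled)
    w = sum(average_ranks(pooled)[:n_a])  # rank sum of the first sample
    counts = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    if len(counts) == 1:
        return w, None  # identical results: no ranking information (assumed N/A case)
    tie_term = sum(c ** 3 - c for c in counts.values())
    mean_w = n_a * (n + 1) / 2.0
    var_w = n_a * n_b / 12.0 * ((n + 1) - tie_term / (n * (n - 1)))
    z = (w - mean_w) / math.sqrt(var_w)
    p_value = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided normal tail probability
    return w, p_value


# Hypothetical final fitness values of two algorithms over ten runs each.
w_stat, p_separated = rank_sum_test(range(1, 11), range(101, 111))
_, p_same = rank_sum_test([1, 2, 3, 4, 5], [1, 2, 3, 4, 5])
print(w_stat, p_separated, p_same)
```

Clearly separated samples give a small p-value, whereas two samples with the same distribution of values give a p-value near 1.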

5. SCGCRA for Tackling Engineering Designs

To validate adaptability and practicality, the SCGCRA was utilized to tackle the constrained real-world engineering designs: three-bar truss design [28], piston lever design [29], gear train design [30], car side impact design [31], multiple-disk clutch brake design [32], and rolling element bearing design [33].

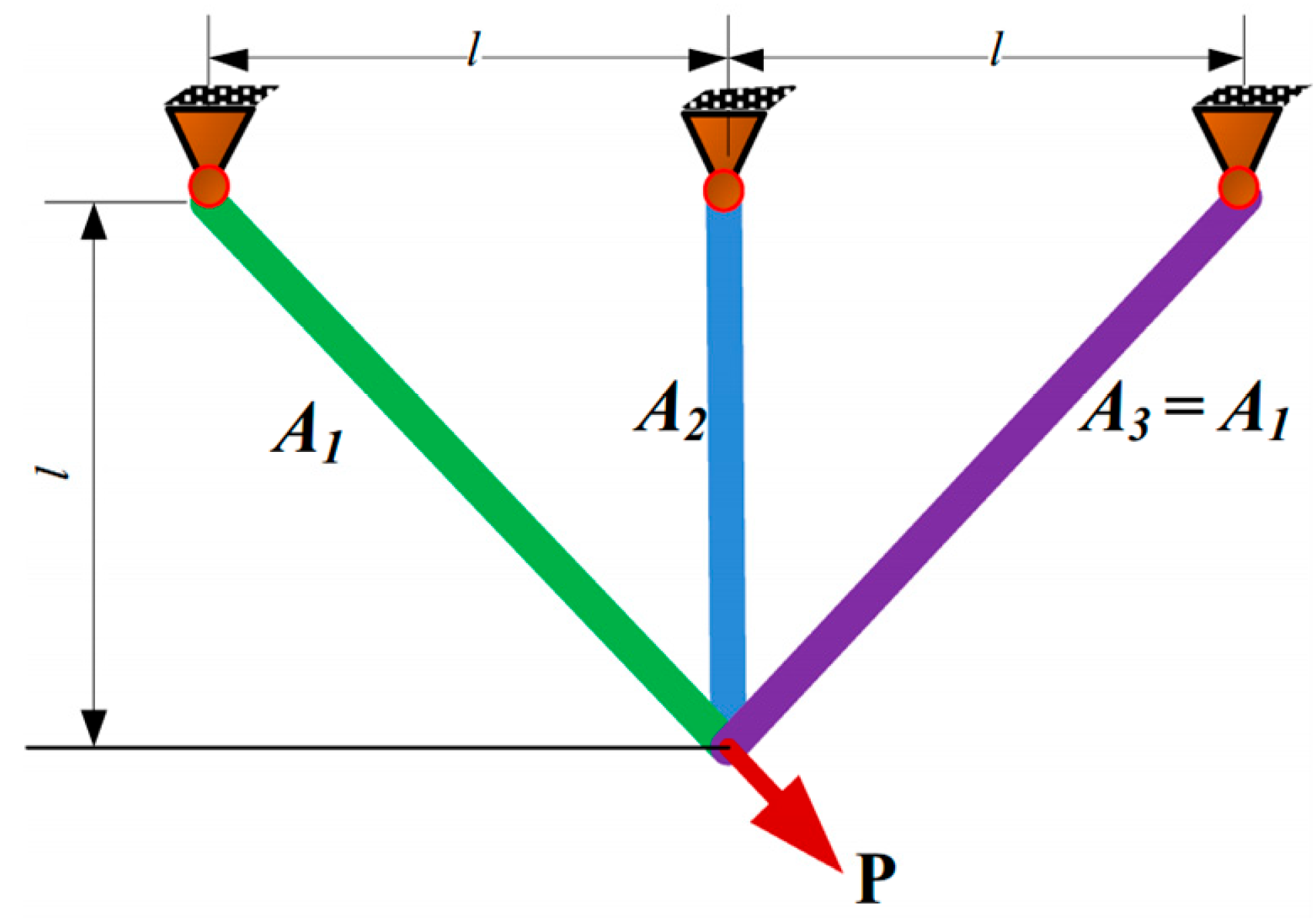

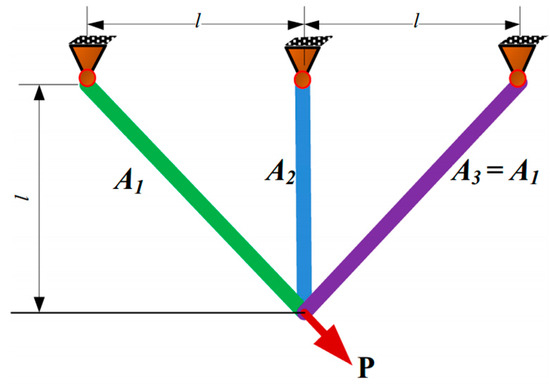

5.1. Three-Bar Truss Design

The objective was to minimize the total weight of the truss, which involved two design variables, both of them cross-sectional areas of the bars. Figure 7 shows the schematic of the three-bar truss design.

Figure 7.

Sketch map of the three-bar truss design.

Consider

Minimize

Subject to

Variable range

Table 4 outlines the comparative results of the three-bar truss design. The SCGCRA used the periodic oscillations of the sine and cosine functions to achieve large-scale, multi-directional coverage of the solution space without relying on neighborhood continuity. This allows the search to jump directly between distant subregions of a high-dimensional space, avoiding the blind spots left by the standalone GCRA, which is dominated by local neighborhood search. The SCGCRA employed a large step size for strong fluctuation and a small step size for weak contraction to lock onto high-quality regions, approximate the extreme solution, reduce redundant searches, and accelerate convergence. The SCGCRA obtained its best solution at the design variables 0.78645 and 0.41813, with an optimum weight of 263.8543.
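
As an illustration of how a constrained design of this kind is evaluated inside a metaheuristic, the sketch below encodes the three-bar truss formulation that is commonly used in the benchmark literature, together with a static penalty for constraint violations. The constants (bar length 100 cm, load and allowable stress of 2 kN/cm²), the penalty coefficient, and the penalty scheme itself are assumptions; the exact model and constraint handling used by the authors may differ, so the values in Table 4 need not be reproduced by this code.

```python
import math

LENGTH = 100.0  # bar length l in cm (common benchmark value, assumed)
LOAD = 2.0      # applied load P in kN/cm^2 (assumed)
STRESS = 2.0    # allowable stress sigma in kN/cm^2 (assumed)


def truss_weight(x1, x2):
    """Objective of the common formulation: (2*sqrt(2)*x1 + x2) * l."""
    return (2.0 * math.sqrt(2.0) * x1 + x2) * LENGTH


def truss_constraints(x1, x2):
    """Stress constraints g1..g3; the design is feasible when every g <= 0."""
    root2 = math.sqrt(2.0)
    denom = root2 * x1 * x1 + 2.0 * x1 * x2
    g1 = (root2 * x1 + x2) / denom * LOAD - STRESS
    g2 = x2 / denom * LOAD - STRESS
    g3 = 1.0 / (root2 * x2 + x1) * LOAD - STRESS
    return [g1, g2, g3]


def penalized_weight(x1, x2, penalty=1e6):
    """Static quadratic penalty (assumption): infeasible designs are made expensive."""
    violation = sum(max(0.0, g) ** 2 for g in truss_constraints(x1, x2))
    return truss_weight(x1, x2) + penalty * violation


# Widely quoted near-optimal design for this formulation, used only as a check point.
x1, x2 = 0.788675, 0.408248
print(truss_weight(x1, x2), truss_constraints(x1, x2))
```

An optimizer would minimize `penalized_weight` over the variable range of both areas; at the check point the first stress constraint is approximately active, which is typical of the optimum of this problem.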

Table 4.

Contrastive results of the three-bar truss design.

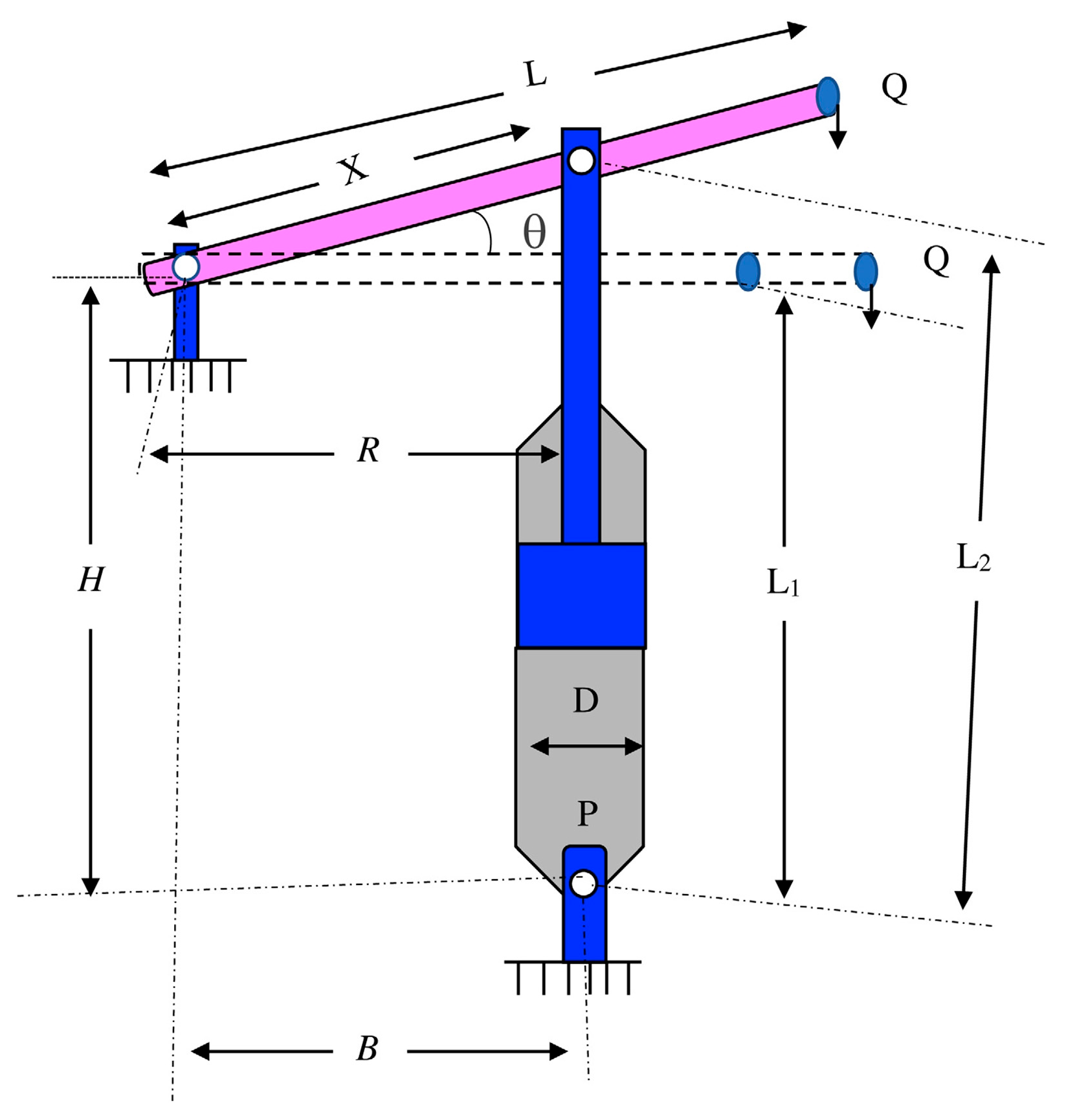

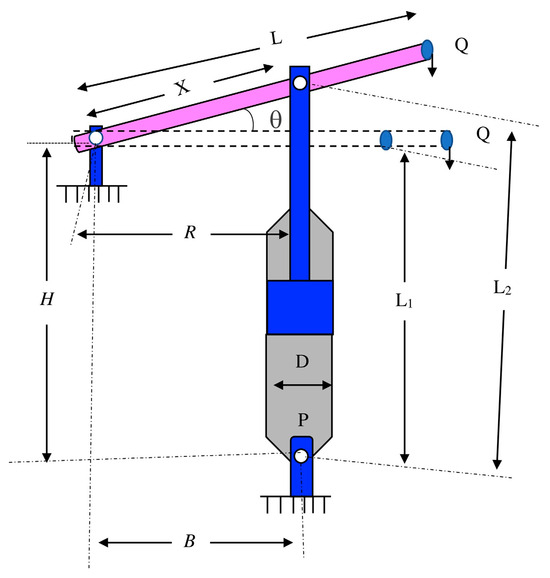

5.2. Piston Lever Design

The objective was to minimize the total weight and oil volume and to locate the piston components as the piston lever is raised, which involved four design variables. Figure 8 shows the schematic of the piston lever design.

Figure 8.

Sketch map of the piston lever design.

Consider

Minimize

Subject to

Variable range

Table 5 outlines the contrastive results of the piston lever design. The SCGCRA simulated the regional search and information sharing behavior of GCRs, which adopts individual position interaction and group optimal guidance to systematically cover the multimodal space of nonlinear problems, fully detect globally, accurately mine locally, and balance optimization efficiency and stability. The SCGCRA utilized the periodic oscillatory fluctuations of the SCA to overcome the exploration limitations of a population converging towards dominant individuals, ensure the population focuses on nuanced exploration in high-quality areas, and avoid the step size from rigidly oscillating near the extremal solution. The global extremum solution was materialized by the SCGCRA at quantitative metrics: 0.05, 0.125364154, 120, and 4.12410157, with the optimum weight of 7.794.

Table 5.

Contrastive results of the piston lever design.

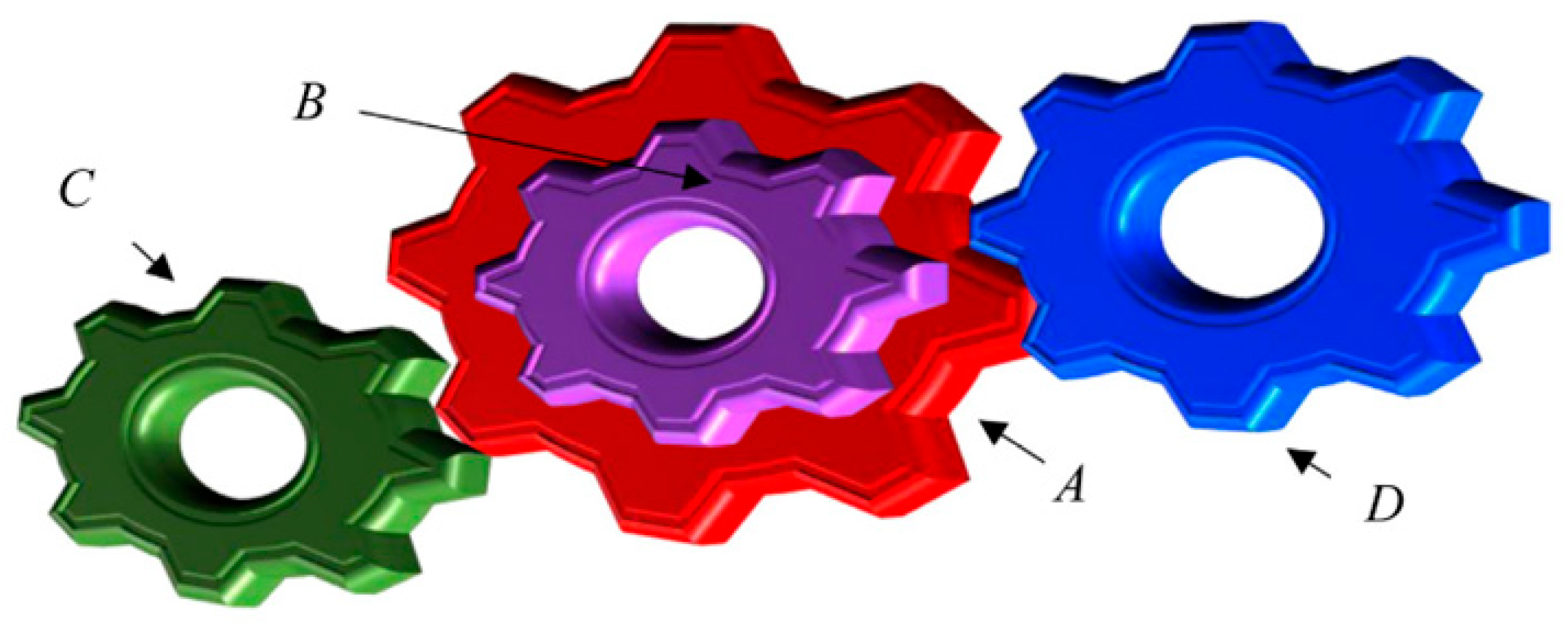

5.3. Gear Train Design

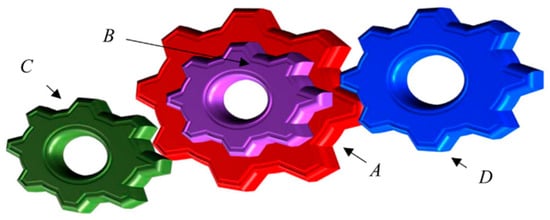

The objective was to minimize the cumulative teeth size and the cost of the gear ratio, which involved four design variables: the numbers of teeth of the gears in the train. Figure 9 shows the schematic of the gear train design.

Figure 9.

Sketch map of the gear train design.

Consider

Minimize

Variable range

Table 6 outlines the contrastive results of the gear train design. The SCGCRA utilized the sine direction adjustment and cosine step size control to achieve periodic small-scale fluctuations within the potential optimal region locked by GCRA, dynamically decreased the step size with iteration, and compensated for the shortcomings of slow convergence speed and low solution accuracy of a single GCRA in the later stage. The SCGCRA exhibited strong adaptability and practicality, facilitating the exploration of fluctuations and targeted exploitation, thereby covering the solution space, triggering population diversity, avoiding chaotic search, and enhancing resistance to the curse of dimensionality. The SCGCRA materialized the global extremum solution at quantitative metrics: 50, 22, 19, and 52, with the optimum cost of 3.25.
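
For orientation, the gear train problem is usually stated in the benchmark literature as matching a target ratio of 1/6.931 with four integer tooth counts between 12 and 60. The sketch below encodes that common formulation; the target ratio, the bounds, and the argument names are assumptions drawn from the standard benchmark rather than from the authors' model, and the check point is a widely quoted near-optimal design rather than a result of this paper.

```python
TARGET_RATIO = 1.0 / 6.931  # required gear ratio in the common benchmark (assumed)


def gear_ratio_cost(t_a, t_b, t_d, t_f):
    """Squared deviation of the achieved ratio (t_b * t_d) / (t_a * t_f) from the target."""
    ratio = (t_b * t_d) / (t_a * t_f)
    return (TARGET_RATIO - ratio) ** 2


def is_valid_design(teeth, low=12, high=60):
    """All tooth counts must be integers inside the assumed bounds."""
    return all(isinstance(t, int) and low <= t <= high for t in teeth)


# Widely quoted near-optimal design for this formulation, used only as a check point.
design = (49, 16, 19, 43)
cost = gear_ratio_cost(*design)
print(is_valid_design(design), cost)
```

Because the variables are integers, a continuous optimizer typically rounds each candidate to the nearest admissible tooth count before calling the cost function.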

Table 6.

Contrastive results of the gear train design.

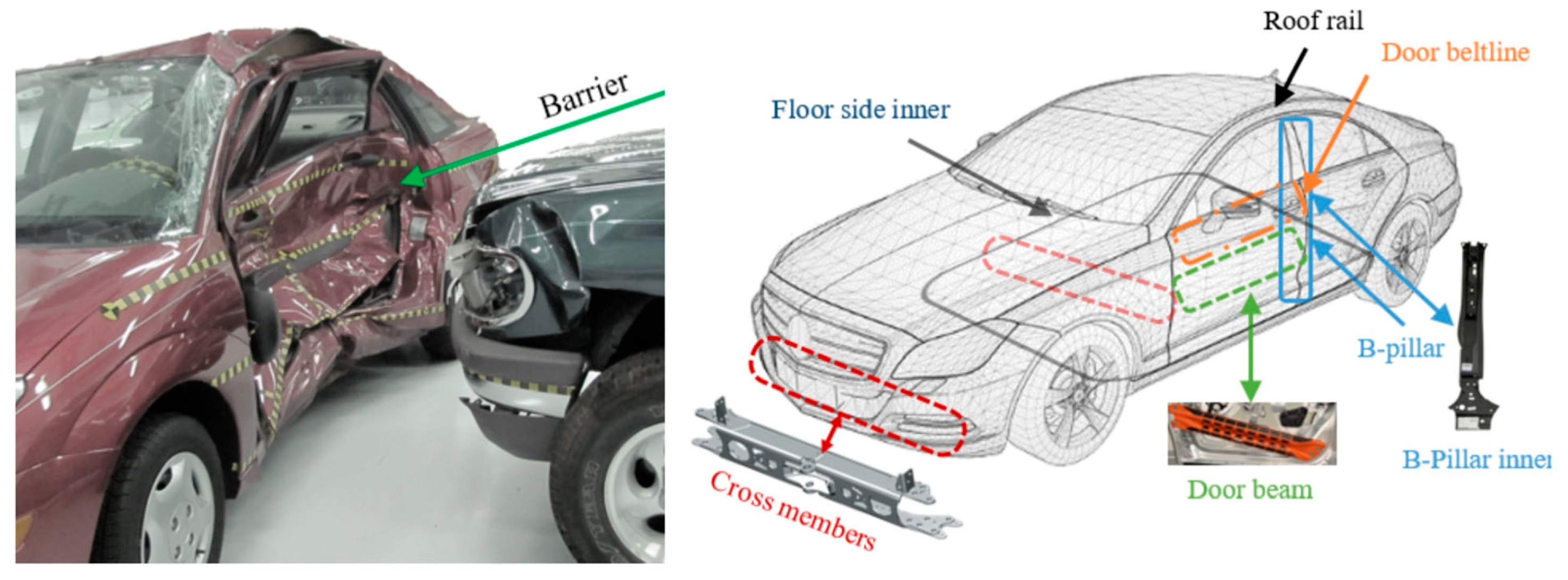

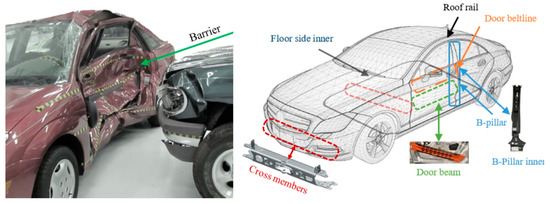

5.4. Car Side Impact Design

The objective was to minimize the total weight, which involved 11 design variables: the thicknesses of the B-pillar inner, B-pillar reinforcement, floor side inner, cross members, door beam, door beltline reinforcement, and roof rail; the materials of the B-pillar inner and floor side inner; the barrier height; and the hitting position. Figure 10 shows the schematic of the car side impact design.

Figure 10.

Sketch map of the car side impact design.

Consider

Minimize

Subject to

Variable range

Table 7 outlines the comparative results of the car side impact design. The GCRA did not require complex dimensional decoupling to update positions; it used relative position adjustment between the individual and population-best solutions, which naturally handles multi-parameter coupling. The nonlinear control strategy provided dynamic feedback regulation to suppress fluctuations and to switch quickly between GCRA and SCA, enhancing exploration or exploitation when the SCGCRA deviated from the optimal region. The SCGCRA possessed strong scalability and adaptability, which reduced the sensitivity to control parameters, promoted population diffusion, avoided premature convergence, focused on the optimal region, and prevented search disorder. The SCGCRA obtained its best solution at the design variables 0.5, 1.11643, 0.5, 1.30208, 0.5, 1.5, 0.5, 0.345, 0.192, −19.54935, and −0.00431, with an optimum weight of 22.84294.

Table 7.

Contrastive results of the car side impact design.

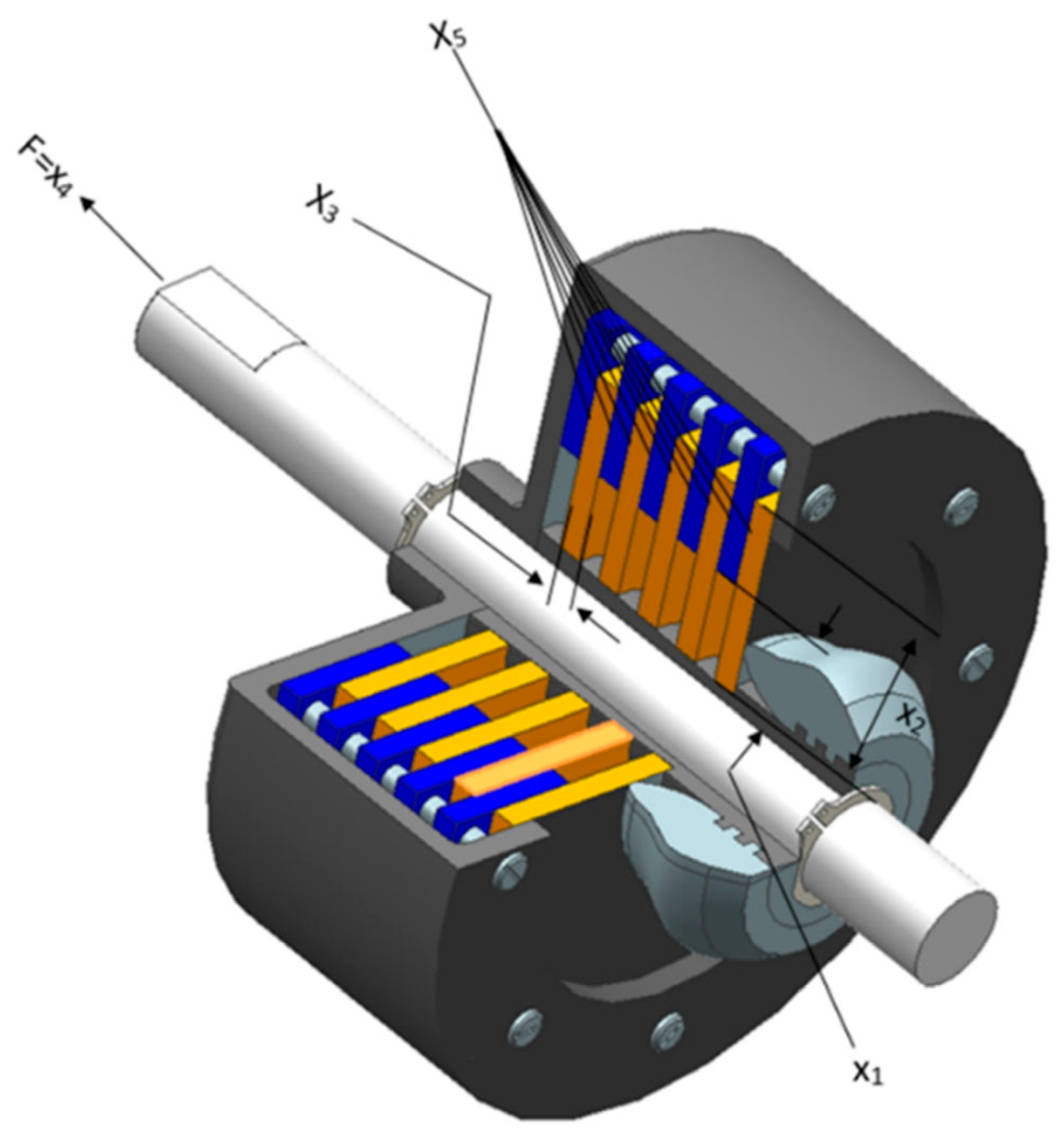

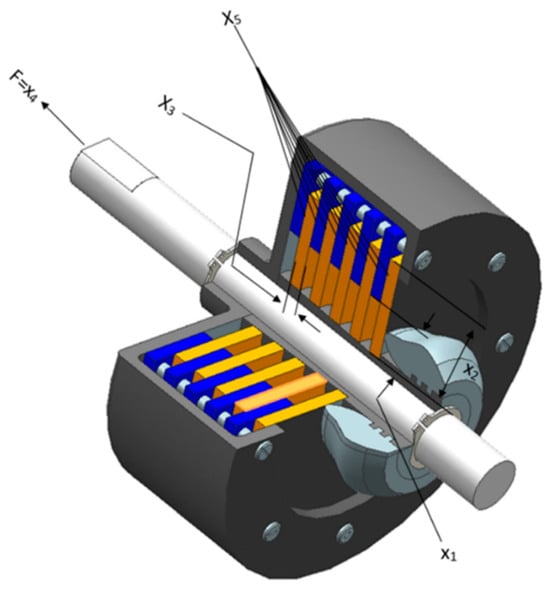

5.5. Multiple-Disk Clutch Brake Design

The objective was to minimize the total weight, which involved five design variables: the thickness of the disks, inner radius, outer radius, actuating force, and the number of friction surfaces. Figure 11 shows the schematic of the multiple-disk clutch brake design.

Figure 11.

Sketch map of the multiple-disk clutch brake design.

Consider

Minimize

Subject to

Table 8 outlines the contrastive results of the multiple-disk clutch brake design. The SCA possesses a strong fine-tuning ability, ensuring the precise matching of control parameters with nonlinear system characteristics, thereby meeting the dual requirements of fast response and minimal system overshoot. The SCGCRA achieved closed-loop coordination between a nonlinear control strategy and GCRA optimization, which received control parameter feedback, thereby enhancing the robustness of the control system. The SCGCRA utilized the global coarse exploration and the local refined exploitation to promote search efficiency and flexibility, enhance convergence speed and solution accuracy, avoid search stagnation and dimensional disaster, strengthen adaptability and operability, and facilitate dynamic regulation and solution quality. The SCGCRA materialized the global extremum solution at quantitative metrics: 70, 90, 1, 600, and 2, with the optimum weight of 0.235247.

Table 8.

Contrastive results of the multiple-disk clutch brake design.

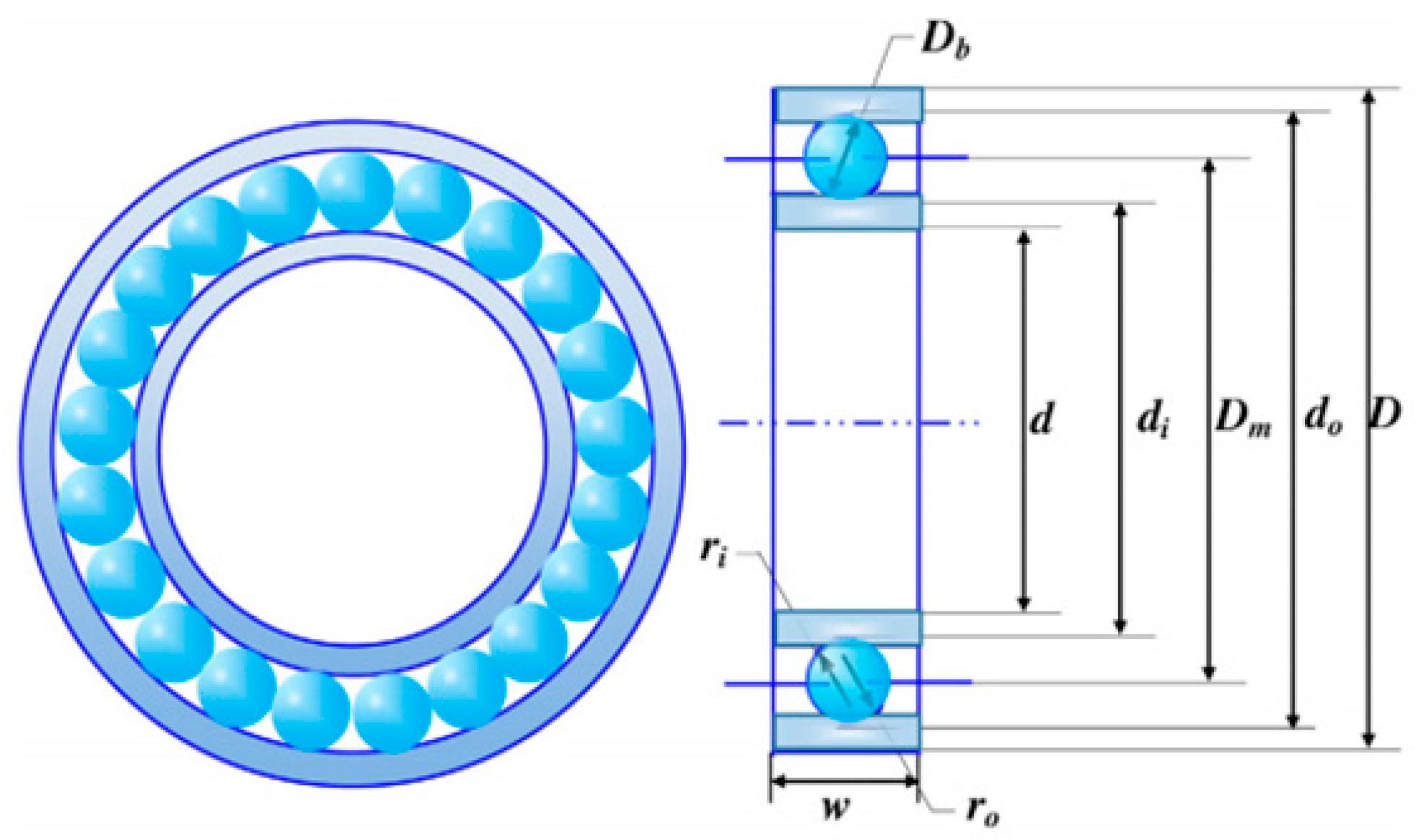

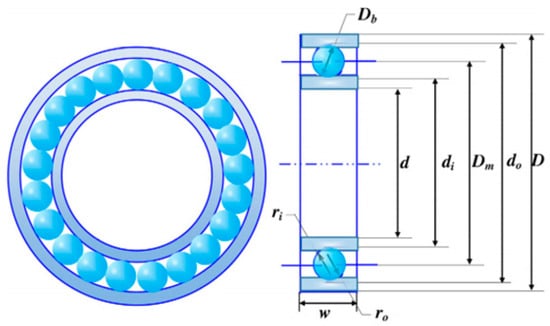

5.6. Rolling Element Bearing Design

The objective was to maximize the dynamic load-bearing capacity and the optimum cost, which involved 10 design variables: the pitch diameter, ball diameter, number of balls, inner and outer raceway curvature coefficients, and five further design parameters. Figure 12 shows the schematic of the rolling element bearing design.

Figure 12.

Sketch map of the rolling element bearing design.

Consider

Minimize

Subject to

Variable range

Table 9 outlines the contrastive results of the rolling element bearing design. The GCRA and SCA both contain control parameters that need to be set. The introduction of a nonlinear control strategy compensated for the performance loss caused by suboptimal sub-algorithm parameters through its adaptive scheduling mechanism. The nonlinear control strategy dynamically adjusted the dominance of GCRA and SCA based on real-time feedback signals such as iteration progress, population diversity, or convergence speed. The SCGCRA utilized the SCA’s mathematical periodic oscillatory fluctuation characteristics to overlap the global exploration scope, locate potential optimal areas, and enhance population diversity. The SCGCRA employed a nonlinear control strategy to adjust dispersion, minimize ineffective exploration, and improve adaptability and complementarity. The global extremum solution was materialized by the SCGCRA at the following quantitative metrics: 126.2339, 20.1947, 10.5139, 0.5524, 0.5428, 0.4072, 0.6565, 0.3254, 0.0681, and 0.6142, with an optimum cost of 90,020.39.

Table 9.

Contrastive results of the rolling element bearing design.

6. Conclusions and Future Works

This paper presents the SCGCRA for solving twenty-three benchmark functions and six constrained real-world engineering designs. The aim is to balance global coarse exploration and local refined exploitation in order to obtain superior quantitative metrics, detection efficiency, and solution accuracy, and to discover the global extremum or a high-quality approximate solution. To address the GCRA’s drawbacks of high parameter sensitivity, insufficient solution accuracy, high computational complexity, susceptibility to local optima and overfitting, poor dynamic adaptability, and a severe curse of dimensionality, the SCGCRA combines the periodic oscillatory fluctuation characteristics of the SCA with the anti-disturbance ability and nonlinear processing characteristics of the nonlinear control strategy to realize repeated expansion and contraction, facilitate dynamic regulation and population diversity, avert the curse of dimensionality and search oscillation, achieve synergistic complementarity, reduce sensitivity, and improve solution accuracy and search efficiency. The SCGCRA possesses strong flexibility and operability, enabling multi-directional perturbation paths that achieve high-precision local convergence, narrow the search space, expand the global exploration scope, locate potential optimal areas, strengthen local exploitation, and enhance solution accuracy. The SCGCRA is compared with the CPO, BKA, EHO, PO, WSA, HLOA, ECO, IAO, AO, HEOA, NRBO, and GCRA. The experimental results demonstrate that the SCGCRA exhibits substantial superiority and reliability in remedying the disequilibrium between exploration and exploitation, thereby accelerating convergence speed, enhancing solution accuracy, and attaining the global extremum solution.

In future research, we will apply the SCGCRA to the CEC2017, CEC2019, CEC2020, CEC2021, and CEC2022 test suites, which will further verify the practicality and reliability of the proposed algorithm. We will use the maximum number of fitness evaluations as the stopping criterion to compare the performance of different algorithms fairly. We will leverage the Anhui Provincial Engineering Research Center for Intelligent Equipment for Under-forest Crops to pursue three core aspects: deep technological integration and innovation, breakthroughs in equipment for specialty crops, and the upgrading of green and intelligent equipment. We will combine the distributed computing capabilities of the SCGCRA with the decentralized characteristics of under-forest crops to achieve intelligent and efficient detection and bionic precision harvesting equipment, thereby reducing redundant searches and enhancing detection efficiency. We will utilize the dynamic task allocation capability of the SCGCRA and its optimization of tillage depth and frequency to develop lightweight and modular equipment that is detachable and easy to transport, thereby reducing soil damage. We will utilize the energy management strategy of the SCGCRA to develop low-power, long-endurance harvesting robots, reduce carbon emissions, achieve variable fertilization and precise irrigation, reduce resource waste, and promote the coordinated progress of agriculture and ecological protection.

Author Contributions

Conceptualization, J.Z.; methodology, J.Z. and T.Z.; software, J.Z. and A.J.; validation, J.Z., A.J. and T.Z.; formal analysis, J.Z. and A.J.; investigation, A.J. and T.Z.; resources, A.J. and T.Z.; data curation, J.Z.; writing—original draft preparation, J.Z. and A.J.; writing—review and editing, A.J. and T.Z.; visualization, J.Z. and A.J.; supervision, A.J. and T.Z.; project administration, A.J. and T.Z.; funding acquisition, J.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was funded by the Natural Science Key Research Project of Anhui Educational Committee under Grant No. 2024AH051989, Start-up Fund for Distinguished Scholars of West Anhui University under Grant No. WGKQ2022052, Horizontal topic: Research on path planning technology of smart agriculture and forestry harvesting robots based on evolutionary algorithms under Grant No. 0045024064, School-level Quality Engineering (Teaching and Research Project) under Grant No. wxxy2023079, and School-level Quality Engineering (School-enterprise Cooperation Development Curriculum Resource Construction) under Grant No. wxxy2022101. The authors would like to thank the editor and anonymous reviewers for their helpful comments and suggestions.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available upon request from the corresponding author. The MATLAB code developed for this study is available from the corresponding author upon reasonable request.

Acknowledgments

The authors would like to thank everyone involved for their contributions to this article. The authors would like to thank the editors and reviewers for providing useful comments and suggestions to improve the quality of this article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Guo, Z.; Liu, G.; Jiang, F. Chinese Pangolin Optimizer: A Novel Bio-Inspired Metaheuristic for Solving Optimization Problems. J. Supercomput. 2025, 81, 517. [Google Scholar] [CrossRef]

- Wang, J.; Wang, W.; Hu, X.; Qiu, L.; Zang, H. Black-Winged Kite Algorithm: A Nature-Inspired Meta-Heuristic for Solving Benchmark Functions and Engineering Problems. Artif. Intell. Rev. 2024, 57, 98. [Google Scholar] [CrossRef]

- Al-Betar, M.A.; Awadallah, M.A.; Braik, M.S.; Makhadmeh, S.; Doush, I.A. Elk Herd Optimizer: A Novel Nature-Inspired Metaheuristic Algorithm. Artif. Intell. Rev. 2024, 57, 48. [Google Scholar] [CrossRef]

- Abdollahzadeh, B.; Khodadadi, N.; Barshandeh, S.; Trojovskỳ, P.; Gharehchopogh, F.S.; El-kenawy, E.-S.M.; Abualigah, L.; Mirjalili, S. Puma Optimizer (PO): A Novel Metaheuristic Optimization Algorithm and Its Application in Machine Learning. Clust. Comput. 2024, 27, 5235–5283. [Google Scholar] [CrossRef]

- Peraza-Vázquez, H.; Peña-Delgado, A.; Merino-Treviño, M.; Morales-Cepeda, A.B.; Sinha, N. A Novel Metaheuristic Inspired by Horned Lizard Defense Tactics. Artif. Intell. Rev. 2024, 57, 59. [Google Scholar] [CrossRef]

- Agushaka, J.O.; Ezugwu, A.E.; Saha, A.K.; Pal, J.; Abualigah, L.; Mirjalili, S. Greater Cane Rat Algorithm (GCRA): A Nature-Inspired Metaheuristic for Optimization Problems. Heliyon 2024, 10, e31629. [Google Scholar] [CrossRef]

- Zhang, H.; San, H.; Sun, H.; Ding, L.; Wu, X. A Novel Optimization Method: Wave Search Algorithm. J. Supercomput. 2024, 80, 16824–16859. [Google Scholar] [CrossRef]

- Golalipour, K.; Nowdeh, S.A.; Akbari, E.; Hamidi, S.S.; Ghasemi, D.; Abdelaziz, A.Y.; Kotb, H.; Yousef, A. Snow Avalanches Algorithm (SAA): A New Optimization Algorithm for Engineering Applications. Alex. Eng. J. 2023, 83, 257–285. [Google Scholar] [CrossRef]

- Houssein, E.H.; Oliva, D.; Samee, N.A.; Mahmoud, N.F.; Emam, M.M. Liver Cancer Algorithm: A Novel Bio-Inspired Optimizer. Comput. Biol. Med. 2023, 165, 107389. [Google Scholar] [CrossRef]

- Yuan, Y.; Shen, Q.; Wang, S.; Ren, J.; Yang, D.; Yang, Q.; Fan, J.; Mu, X. Coronavirus Mask Protection Algorithm: A New Bio-Inspired Optimization Algorithm and Its Applications. J. Bionic Eng. 2023, 20, 1747–1765. [Google Scholar] [CrossRef]

- Ahmed, M.; Sulaiman, M.H.; Mohamad, A.J.; Rahman, M. Gooseneck Barnacle Optimization Algorithm: A Novel Nature Inspired Optimization Theory and Application. Math. Comput. Simul. 2024, 218, 248–265. [Google Scholar] [CrossRef]

- Kaveh, M.; Mesgari, M.S.; Saeidian, B. Orchard Algorithm (OA): A New Meta-Heuristic Algorithm for Solving Discrete and Continuous Optimization Problems. Math. Comput. Simul. 2023, 208, 95–135. [Google Scholar] [CrossRef]

- Yuan, C.; Zhao, D.; Heidari, A.A.; Liu, L.; Chen, Y.; Wu, Z.; Chen, H. Artemisinin Optimization Based on Malaria Therapy: Algorithm and Applications to Medical Image Segmentation. Displays 2024, 84, 102740. [Google Scholar] [CrossRef]

- Sowmya, R.; Premkumar, M.; Jangir, P. Newton-Raphson-Based Optimizer: A New Population-Based Metaheuristic Algorithm for Continuous Optimization Problems. Eng. Appl. Artif. Intell. 2024, 128, 107532. [Google Scholar] [CrossRef]

- Abdel-Basset, M.; El-Shahat, D.; Jameel, M.; Abouhawwash, M. Exponential Distribution Optimizer (EDO): A Novel Math-Inspired Algorithm for Global Optimization and Engineering Problems. Artif. Intell. Rev. 2023, 56, 9329–9400. [Google Scholar] [CrossRef]

- Abdel-Basset, M.; El-Shahat, D.; Jameel, M.; Abouhawwash, M. Young’s Double-Slit Experiment Optimizer: A Novel Metaheuristic Optimization Algorithm for Global and Constraint Optimization Problems. Comput. Methods Appl. Mech. Eng. 2023, 403, 115652. [Google Scholar] [CrossRef]

- Barua, S.; Merabet, A. Lévy Arithmetic Algorithm: An Enhanced Metaheuristic Algorithm and Its Application to Engineering Optimization. Expert Syst. Appl. 2024, 241, 122335. [Google Scholar] [CrossRef]

- Zhao, S.; Zhang, T.; Cai, L.; Yang, R. Triangulation Topology Aggregation Optimizer: A Novel Mathematics-Based Meta-Heuristic Algorithm for Continuous Optimization and Engineering Applications. Expert Syst. Appl. 2024, 238, 121744. [Google Scholar] [CrossRef]

- Lian, J.; Zhu, T.; Ma, L.; Wu, X.; Heidari, A.A.; Chen, Y.; Chen, H.; Hui, G. The Educational Competition Optimizer. Int. J. Syst. Sci. 2024, 55, 3185–3222. [Google Scholar] [CrossRef]

- Wu, X.; Li, S.; Jiang, X.; Zhou, Y. Information Acquisition Optimizer: A New Efficient Algorithm for Solving Numerical and Constrained Engineering Optimization Problems. J. Supercomput. 2024, 80, 25736–25791. [Google Scholar] [CrossRef]

- Lian, J.; Hui, G. Human Evolutionary Optimization Algorithm. Expert Syst. Appl. 2024, 241, 122638. [Google Scholar] [CrossRef]

- Jia, H.; Lu, C.; Xing, Z. Memory Backtracking Strategy: An Evolutionary Updating Mechanism for Meta-Heuristic Algorithms. Swarm Evol. Comput. 2024, 84, 101456. [Google Scholar] [CrossRef]

- Jia, H.; Lu, C. Guided Learning Strategy: A Novel Update Mechanism for Metaheuristic Algorithms Design and Improvement. Knowl.-Based Syst. 2024, 286, 111402. [Google Scholar] [CrossRef]

- Jia, H.; Zhou, X.; Zhang, J. Thinking Innovation Strategy (TIS): A Novel Mechanism for Metaheuristic Algorithm Design and Evolutionary Update. Appl. Soft Comput. 2025, 175, 113071. [Google Scholar] [CrossRef]

- Dehkordi, A.A.; Sadiq, A.S.; Mirjalili, S.; Ghafoor, K.Z. Nonlinear-Based Chaotic Harris Hawks Optimizer: Algorithm and Internet of Vehicles Application. Appl. Soft Comput. 2021, 109, 107574. [Google Scholar]

- Mirjalili, S. SCA: A Sine Cosine Algorithm for Solving Optimization Problems. Knowl.-Based Syst. 2016, 96, 120–133. [Google Scholar]

- Zhang, J.; Liu, W.; Zhang, G.; Zhang, T. Quantum Encoding Whale Optimization Algorithm for Global Optimization and Adaptive Infinite Impulse Response System Identification. Artif. Intell. Rev. 2025, 58, 158. [Google Scholar] [CrossRef]

- Zhong, C.; Li, G.; Meng, Z.; Li, H.; Yildiz, A.R.; Mirjalili, S. Starfish Optimization Algorithm (SFOA): A Bio-Inspired Metaheuristic Algorithm for Global Optimization Compared with 100 Optimizers. Neural Comput. Appl. 2025, 37, 3641–3683. [Google Scholar]

- Mohammadzadeh, A.; Mirjalili, S. Eel and Grouper Optimizer: A Nature-Inspired Optimization Algorithm. Clust. Comput. 2024, 27, 12745–12786. [Google Scholar] [CrossRef]

- Han, M.; Du, Z.; Yuen, K.F.; Zhu, H.; Li, Y.; Yuan, Q. Walrus Optimizer: A Novel Nature-Inspired Metaheuristic Algorithm. Expert Syst. Appl. 2024, 239, 122413.

- Jia, H.; Li, Y.; Wu, D.; Rao, H.; Wen, C.; Abualigah, L. Multi-Strategy Remora Optimization Algorithm for Solving Multi-Extremum Problems. J. Comput. Des. Eng. 2023, 10, 1315–1349.

- Dhiman, G. SSC: A Hybrid Nature-Inspired Meta-Heuristic Optimization Algorithm for Engineering Applications. Knowl.-Based Syst. 2021, 222, 106926.

- Abualigah, L.; Abd Elaziz, M.; Sumari, P.; Geem, Z.W.; Gandomi, A.H. Reptile Search Algorithm (RSA): A Nature-Inspired Meta-Heuristic Optimizer. Expert Syst. Appl. 2022, 191, 116158.

- Hashim, F.A.; Mostafa, R.R.; Hussien, A.G.; Mirjalili, S.; Sallam, K.M. Fick’s Law Algorithm: A Physical Law-Based Algorithm for Numerical Optimization. Knowl.-Based Syst. 2023, 260, 110146.

- Abdel-Basset, M.; Mohamed, R.; Azeem, S.A.A.; Jameel, M.; Abouhawwash, M. Kepler Optimization Algorithm: A New Metaheuristic Algorithm Inspired by Kepler’s Laws of Planetary Motion. Knowl.-Based Syst. 2023, 268, 110454.

- Abdel-Basset, M.; Mohamed, R.; Jameel, M.; Abouhawwash, M. Nutcracker Optimizer: A Novel Nature-Inspired Metaheuristic Algorithm for Global Optimization and Engineering Design Problems. Knowl.-Based Syst. 2023, 262, 110248.

- Bai, J.; Li, Y.; Zheng, M.; Khatir, S.; Benaissa, B.; Abualigah, L.; Wahab, M.A. A Sinh Cosh Optimizer. Knowl.-Based Syst. 2023, 282, 111081.

- Wang, W.; Tian, W.; Xu, D.; Zang, H. Arctic Puffin Optimization: A Bio-Inspired Metaheuristic Algorithm for Solving Engineering Design Optimization. Adv. Eng. Softw. 2024, 195, 103694.

- Bai, J.; Nguyen-Xuan, H.; Atroshchenko, E.; Kosec, G.; Wang, L.; Wahab, M.A. Blood-Sucking Leech Optimizer. Adv. Eng. Softw. 2024, 195, 103696.

- Bouaouda, A.; Hashim, F.A.; Sayouti, Y.; Hussien, A.G. Pied Kingfisher Optimizer: A New Bio-Inspired Algorithm for Solving Numerical Optimization and Industrial Engineering Problems. Neural Comput. Appl. 2024, 36, 15455–15513.

- Kim, P.; Lee, J. An Integrated Method of Particle Swarm Optimization and Differential Evolution. J. Mech. Sci. Technol. 2009, 23, 426–434.

- Rechenberg, I. Evolutionsstrategien. In Proceedings of the Simulationsmethoden in der Medizin und Biologie: Workshop, Hannover, Germany, 29 September–1 October 1977; Springer: Berlin/Heidelberg, Germany, 1978; pp. 83–114.

- Bayzidi, H.; Talatahari, S.; Saraee, M.; Lamarche, C.-P. Social Network Search for Solving Engineering Optimization Problems. Comput. Intell. Neurosci. 2021, 2021, 8548639.

- Seyyedabbasi, A.; Kiani, F. Sand Cat Swarm Optimization: A Nature-Inspired Algorithm to Solve Global Optimization Problems. Eng. Comput. 2023, 39, 2627–2651.

- Azizi, M.; Talatahari, S.; Giaralis, A. Optimization of Engineering Design Problems Using Atomic Orbital Search Algorithm. IEEE Access 2021, 9, 102497–102519.

- Sadeeq, H.T.; Abdulazeez, A.M. Giant Trevally Optimizer (GTO): A Novel Metaheuristic Algorithm for Global Optimization and Challenging Engineering Problems. IEEE Access 2022, 10, 121615–121640.

- Ezugwu, A.E.; Agushaka, J.O.; Abualigah, L.; Mirjalili, S.; Gandomi, A.H. Prairie Dog Optimization Algorithm. Neural Comput. Appl. 2022, 34, 20017–20065.

- Das, A.K.; Pratihar, D.K. Bonobo Optimizer (BO): An Intelligent Heuristic with Self-Adjusting Parameters over Continuous Spaces and Its Applications to Engineering Problems. Appl. Intell. 2022, 52, 2942–2974.

- Singh, H.; Singh, B.; Kaur, M. An Improved Elephant Herding Optimization for Global Optimization Problems. Eng. Comput. 2022, 38, 3489–3521.

- Shen, Y.; Zhang, C.; Gharehchopogh, F.S.; Mirjalili, S. An Improved Whale Optimization Algorithm Based on Multi-Population Evolution for Global Optimization and Engineering Design Problems. Expert Syst. Appl. 2023, 215, 119269.

- Wang, L.; Cao, Q.; Zhang, Z.; Mirjalili, S.; Zhao, W. Artificial Rabbits Optimization: A New Bio-Inspired Meta-Heuristic Algorithm for Solving Engineering Optimization Problems. Eng. Appl. Artif. Intell. 2022, 114, 105082.

- Yadav, D. Blood Coagulation Algorithm: A Novel Bio-Inspired Meta-Heuristic Algorithm for Global Optimization. Mathematics 2021, 9, 3011.

- Tarkhaneh, O.; Alipour, N.; Chapnevis, A.; Shen, H. Golden Tortoise Beetle Optimizer: A Novel Nature-Inspired Meta-Heuristic Algorithm for Engineering Problems. arXiv 2021, arXiv:2104.01521.

- Wu, H.; Zhang, X.; Song, L.; Zhang, Y.; Gu, L.; Zhao, X. Wild Geese Migration Optimization Algorithm: A New Meta-Heuristic Algorithm for Solving Inverse Kinematics of Robot. Comput. Intell. Neurosci. 2022, 2022, 5191758.

- Zamani, H.; Nadimi-Shahraki, M.H.; Gandomi, A.H. Starling Murmuration Optimizer: A Novel Bio-Inspired Algorithm for Global and Engineering Optimization. Comput. Methods Appl. Mech. Eng. 2022, 392, 114616.

- Lang, Y.; Gao, Y. Dream Optimization Algorithm (DOA): A Novel Metaheuristic Optimization Algorithm Inspired by Human Dreams and Its Applications to Real-World Engineering Problems. Comput. Methods Appl. Mech. Eng. 2025, 436, 117718.

- Luan, T.M.; Khatir, S.; Tran, M.T.; De Baets, B.; Cuong-Le, T. Exponential-Trigonometric Optimization Algorithm for Solving Complicated Engineering Problems. Comput. Methods Appl. Mech. Eng. 2024, 432, 117411.

- Rao, R.V.; Savsani, V.J.; Vakharia, D.P. Teaching–Learning-Based Optimization: A Novel Method for Constrained Mechanical Design Optimization Problems. Comput.-Aided Des. 2011, 43, 303–315.

- Bhesdadiya, R.; Trivedi, I.N.; Jangir, P.; Jangir, N. Moth-Flame Optimizer Method for Solving Constrained Engineering Optimization Problems. In Advances in Computer and Computational Sciences: Proceedings of ICCCCS 2016, Volume 2; Springer: Berlin/Heidelberg, Germany, 2017; pp. 61–68.

- Deb, K.; Srinivasan, A. Innovization: Discovery of Innovative Design Principles through Multiobjective Evolutionary Optimization. In Multiobjective Problem Solving from Nature: From Concepts to Applications; Springer: Berlin/Heidelberg, Germany, 2007; pp. 243–262.

- Sayed, G.I.; Darwish, A.; Hassanien, A.E. A New Chaotic Multi-Verse Optimization Algorithm for Solving Engineering Optimization Problems. J. Exp. Theor. Artif. Intell. 2018, 30, 293–317.

- Eskandar, H.; Sadollah, A.; Bahreininejad, A.; Hamdi, M. Water Cycle Algorithm–A Novel Metaheuristic Optimization Method for Solving Constrained Engineering Optimization Problems. Comput. Struct. 2012, 110, 151–166.

- Singh, N.; Kaur, J. Hybridizing Sine–Cosine Algorithm with Harmony Search Strategy for Optimization Design Problems. Soft Comput. 2021, 25, 11053–11075.

- Azizyan, G.; Miarnaeimi, F.; Rashki, M.; Shabakhty, N. Flying Squirrel Optimizer (FSO): A Novel SI-Based Optimization Algorithm for Engineering Problems. Iran. J. Optim. 2019, 11, 177–205.

- Yildiz, B.S.; Pholdee, N.; Bureerat, S.; Yildiz, A.R.; Sait, S.M. Enhanced Grasshopper Optimization Algorithm Using Elite Opposition-Based Learning for Solving Real-World Engineering Problems. Eng. Comput. 2022, 38, 4207–4219.

- Zhao, W.; Wang, L.; Zhang, Z. Artificial Ecosystem-Based Optimization: A Novel Nature-Inspired Meta-Heuristic Algorithm. Neural Comput. Appl. 2020, 32, 9383–9425.

- Zhao, W.; Wang, L.; Mirjalili, S. Artificial Hummingbird Algorithm: A New Bio-Inspired Optimizer with Its Engineering Applications. Comput. Methods Appl. Mech. Eng. 2022, 388, 114194.

- Askari, Q.; Saeed, M.; Younas, I. Heap-Based Optimizer Inspired by Corporate Rank Hierarchy for Global Optimization. Expert Syst. Appl. 2020, 161, 113702.

- Yang, Y.; Chen, H.; Heidari, A.A.; Gandomi, A.H. Hunger Games Search: Visions, Conception, Implementation, Deep Analysis, Perspectives, and towards Performance Shifts. Expert Syst. Appl. 2021, 177, 114864.

- Naruei, I.; Keynia, F.; Sabbagh Molahosseini, A. Hunter–Prey Optimization: Algorithm and Applications. Soft Comput. 2022, 26, 1279–1314.

- Zhao, W.; Zhang, Z.; Wang, L. Manta Ray Foraging Optimization: An Effective Bio-Inspired Optimizer for Engineering Applications. Eng. Appl. Artif. Intell. 2020, 87, 103300.

- Dhiman, G.; Garg, M.; Nagar, A.; Kumar, V.; Dehghani, M. A Novel Algorithm for Global Optimization: Rat Swarm Optimizer. J. Ambient. Intell. Humaniz. Comput. 2021, 12, 8457–8482.

- Emami, H. Anti-Coronavirus Optimization Algorithm. Soft Comput. 2022, 26, 4991–5023.

- Braik, M.; Ryalat, M.H.; Al-Zoubi, H. A Novel Meta-Heuristic Algorithm for Solving Numerical Optimization Problems: Ali Baba and the Forty Thieves. Neural Comput. Appl. 2022, 34, 409–455.

- Ahmadianfar, I.; Heidari, A.A.; Gandomi, A.H.; Chu, X.; Chen, H. RUN beyond the Metaphor: An Efficient Optimization Algorithm Based on Runge Kutta Method. Expert Syst. Appl. 2021, 181, 115079.

- Azizi, M.; Aickelin, U.; Khorshidi, H.A.; Baghalzadeh Shishehgarkhaneh, M. Energy Valley Optimizer: A Novel Metaheuristic Algorithm for Global and Engineering Optimization. Sci. Rep. 2023, 13, 226.

- Emami, H. Stock Exchange Trading Optimization Algorithm: A Human-Inspired Method for Global Optimization. J. Supercomput. 2022, 78, 2125–2174.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).