Breast Cancer Classification Depends on the Dynamic Dipper Throated Optimization Algorithm

Abstract

1. Introduction

2. Literature Review

3. The Proposed Methodology

3.1. Dataset Augmentation

3.2. Transfer Learning

3.3. Classification of Selected Features

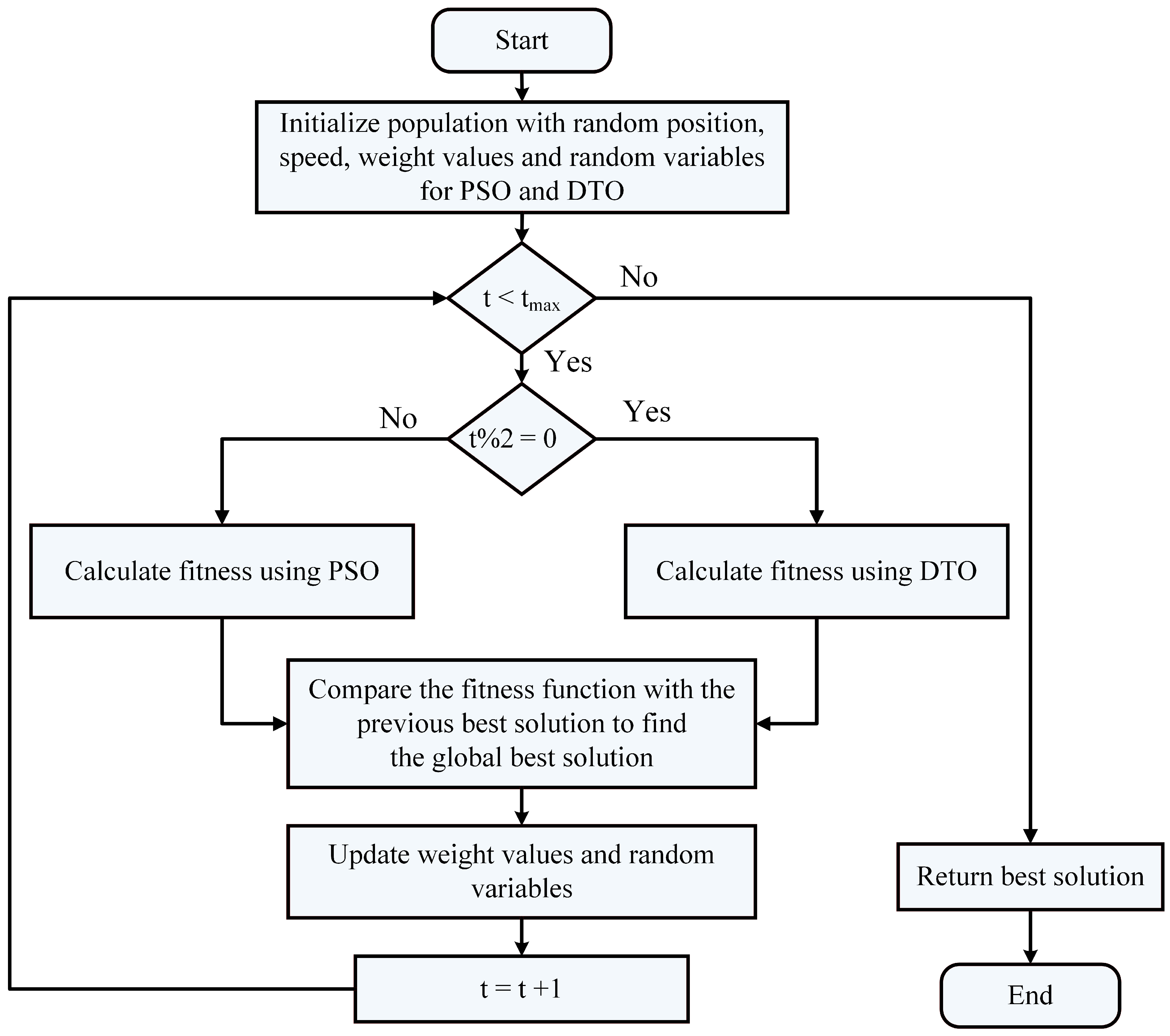

3.4. The Proposed Optimization Algorithm

3.5. Feature Selection Algorithm

| Algorithm 1: The proposed binary DDTPSO (bDDTPSO) algorithm. |
|---|
| 1: Initialize the parameters of the DDTPSO algorithm |
| 2: Convert the resulting best solution to binary {0, 1} |
| 3: Evaluate the fitness of the resulting solutions |
| 4: Train KNN to assess the resulting solutions |
| 5: Set t = 1 |
| 6: while t ≤ maximum number of iterations do |
| 7: Run the DDTPSO algorithm to obtain the best solutions |
| 8: Convert the best solutions to binary using the following equation: |
| 9: Calculate the fitness value |
| 10: Update the parameters of the DDTPSO algorithm |
| 11: Update t = t + 1 |
| 12: end while |
| 13: Return the best set of features |
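Algorithm 1 can be sketched in Python as a generic binary wrapper around a continuous swarm optimizer. This is a minimal sketch under stated assumptions, not the authors' implementation: the DDTPSO update rules are not given in this section, so a plain PSO velocity update stands in for step 7; the conversion equation for steps 2 and 8 is not reproduced here, so a sigmoid transfer with a 0.5 threshold is assumed; and the fitness is assumed to weight KNN validation error against the selected-feature ratio with `alpha = 0.99`. The inertia range (0.9 to 0.6) and the 10 agents follow the PSO rows of the parameter table; all function names and the toy data are hypothetical.

```python
import math
import random

def binarize(position):
    """Steps 2 and 8 (assumed form): sigmoid transfer, then a 0.5 threshold."""
    return [1 if 1.0 / (1.0 + math.exp(-10.0 * (x - 0.5))) >= 0.5 else 0
            for x in position]

def knn_error(mask, train, valid, k=3):
    """Step 4: hold-out error of k-NN restricted to the selected features."""
    idx = [i for i, bit in enumerate(mask) if bit]
    if not idx:
        return 1.0  # an empty subset is treated as a total failure
    wrong = 0
    for x, y in valid:
        nearest = sorted((sum((x[i] - xt[i]) ** 2 for i in idx), yt)
                         for xt, yt in train)[:k]
        votes = [label for _, label in nearest]
        wrong += max(set(votes), key=votes.count) != y
    return wrong / len(valid)

def fitness(mask, train, valid, alpha=0.99):
    """Steps 3 and 9 (assumed weighting): error plus a small subset-size penalty."""
    return alpha * knn_error(mask, train, valid) + (1 - alpha) * sum(mask) / len(mask)

def binary_wrapper_search(train, valid, n_features, n_agents=10, max_iter=20,
                          w_max=0.9, w_min=0.6, c1=2.0, c2=2.0, seed=0):
    rng = random.Random(seed)
    # Step 1: random continuous positions in [0, 1] (hypothetical initialization).
    pos = [[rng.random() for _ in range(n_features)] for _ in range(n_agents)]
    vel = [[0.0] * n_features for _ in range(n_agents)]
    # Steps 2-4: binarize and score the initial population.
    pbest = [p[:] for p in pos]
    pbest_fit = [fitness(binarize(p), train, valid) for p in pos]
    g = min(range(n_agents), key=pbest_fit.__getitem__)
    gbest, gbest_fit = pbest[g][:], pbest_fit[g]
    t = 1  # step 5
    while t <= max_iter:  # step 6
        # Parameter update (step 10), applied at the top of each iteration.
        w = w_max - (w_max - w_min) * t / max_iter
        for i in range(n_agents):
            # Step 7: plain PSO update, standing in for the DDTPSO update rules.
            for d in range(n_features):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                pos[i][d] = min(1.0, max(0.0, pos[i][d] + vel[i][d]))
            f = fitness(binarize(pos[i]), train, valid)  # steps 8-9
            if f < pbest_fit[i]:
                pbest[i], pbest_fit[i] = pos[i][:], f
                if f < gbest_fit:
                    gbest, gbest_fit = pos[i][:], f
        t += 1  # step 11
    return binarize(gbest), gbest_fit  # step 13

# Toy data: feature 0 carries the label, features 1-3 are uniform noise.
data_rng = random.Random(1)
data = []
for _ in range(60):
    label = data_rng.randint(0, 1)
    row = [label + data_rng.gauss(0, 0.1)] + [data_rng.random() for _ in range(3)]
    data.append((row, label))
train, valid = data[:40], data[40:]
mask, best = binary_wrapper_search(train, valid, n_features=4)
```

With this particular sigmoid, thresholding at 0.5 is equivalent to testing x ≥ 0.5; the transfer function is kept explicit because a different transfer function or a stochastic threshold would break that equivalence.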

4. Experimental Results

4.1. Configuration Parameters

4.2. Evaluation Metrics

| Algorithm | Parameter | Value |
|---|---|---|
| DTO [66] | Iterations | 500 |
| | Number of runs | 30 |
| | Exploration percentage | 70 |
| PSO [67] | Acceleration constants | [2, 12] |
| | Inertia weight (min, max) | [0.6, 0.9] |
| | Number of particles | 10 |
| | Number of iterations | 80 |
| WOA [68] | r | [0, 1] |
| | Number of iterations | 80 |
| | Number of whales | 10 |
| | a | 2 to 0 |
| GWO [69] | a | 2 to 0 |
| | Number of iterations | 80 |
| | Number of wolves | 10 |
| SBO [70] | Step size | 0.94 |
| | Mutation probability | 0.05 |
| | Lower and upper limit difference | 0.02 |
| GA [71] | Crossover | 0.9 |
| | Mutation ratio | 0.1 |
| | Selection mechanism | Roulette wheel |
| | Number of iterations | 80 |
| | Number of agents | 10 |
| MVO [72] | Wormhole existence probability | [0.2, 1] |
| FA [73] | Number of fireflies | 10 |
| BA [74] | Inertia factor | [0, 1] |
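Several entries in the parameter table are ranges traversed during a run rather than fixed values: `a` goes from 2 to 0 in WOA and GWO, and the PSO inertia weight lies between 0.6 and 0.9. In GWO and WOA, `a` scales the coefficient A = 2·a·r − a (with r in [0, 1]), so a large `a` early on allows |A| > 1 (exploration) and a small `a` late in the run forces |A| < 1 (exploitation). A minimal sketch, assuming a linear schedule over the 80 listed iterations (the usual choice in the original WOA and GWO papers; the exact schedule used here is not stated); `linear_schedule` and `coefficient_A` are hypothetical helper names.

```python
def linear_schedule(start: float, end: float, t: int, max_iter: int) -> float:
    """Value at iteration t (0 <= t <= max_iter), moving linearly from start to end."""
    if not 0 <= t <= max_iter:
        raise ValueError("t must lie in [0, max_iter]")
    return start + (end - start) * t / max_iter

def coefficient_A(a: float, r: float) -> float:
    """GWO/WOA coefficient A = 2*a*r - a, with r drawn from [0, 1], so |A| <= a."""
    return 2.0 * a * r - a

MAX_ITER = 80  # "Number of iterations" listed for PSO, WOA, GWO, and GA

a_values = [linear_schedule(2.0, 0.0, t, MAX_ITER) for t in range(MAX_ITER + 1)]
inertia = [linear_schedule(0.9, 0.6, t, MAX_ITER) for t in range(MAX_ITER + 1)]
```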

4.3. Feature Extraction Evaluation

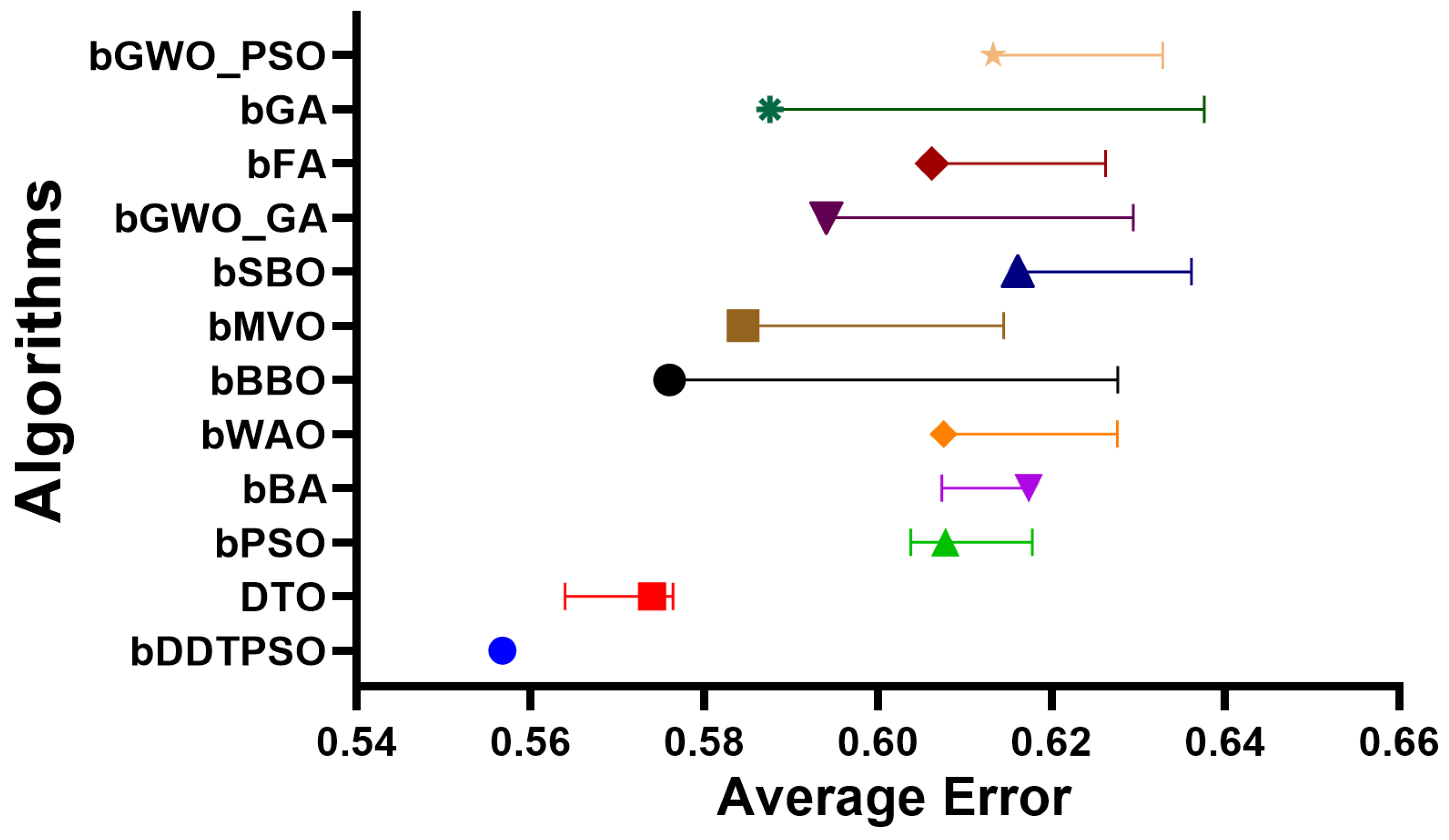

4.4. Feature Selection Evaluation

4.5. Evaluation of Classification Models

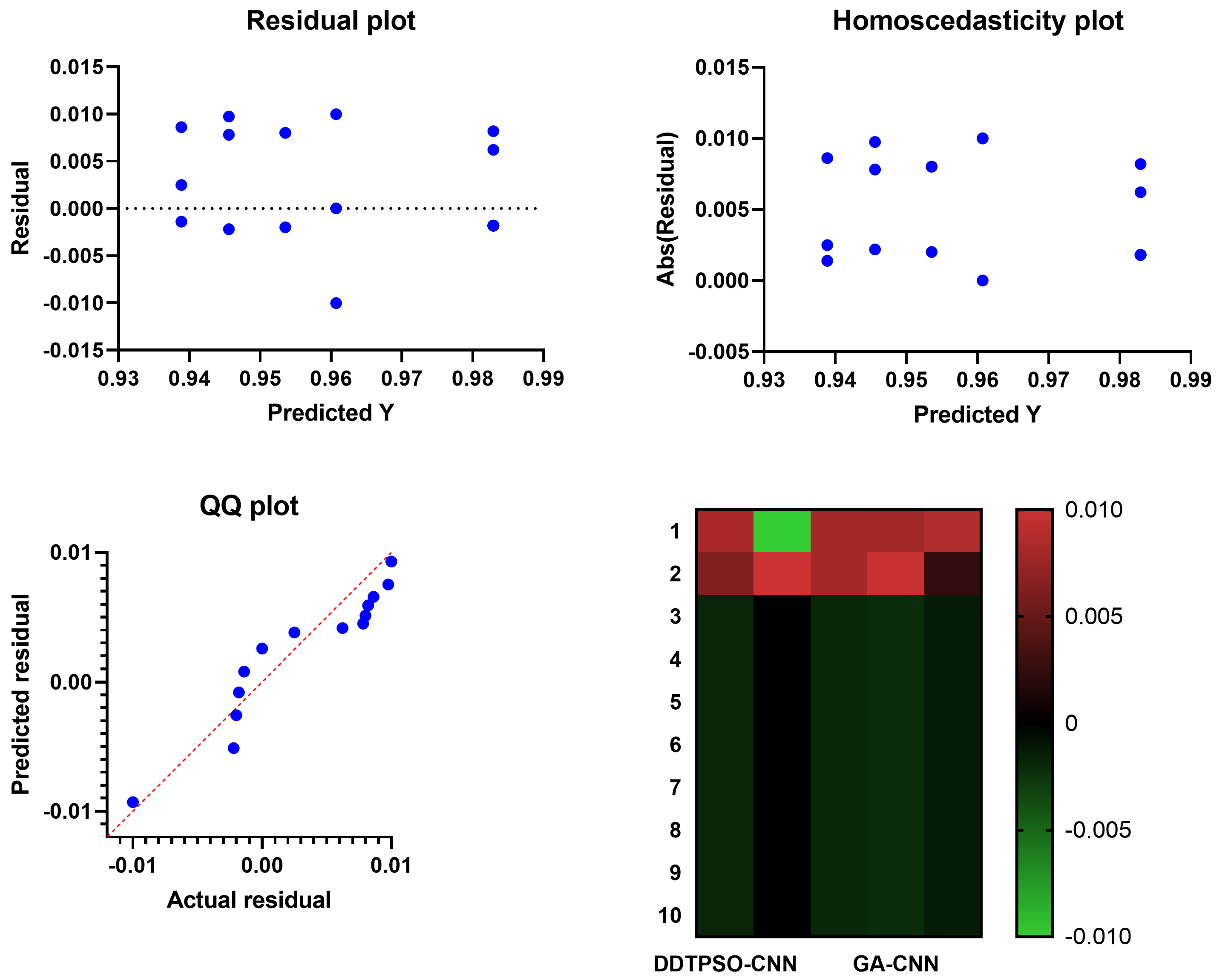

4.6. Evaluation of Optimized CNN Classification

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

References

1. Yu, K.; Chen, S.; Chen, Y. Tumor Segmentation in Breast Ultrasound Image by Means of Res Path Combined with Dense Connection Neural Network. Diagnostics 2021, 11, 1565.

2. Feng, Y.; Spezia, M.; Huang, S.; Yuan, C.; Zeng, Z.; Zhang, L.; Ji, X.; Liu, W.; Huang, B.; Luo, W.; et al. Breast cancer development and progression: Risk factors, cancer stem cells, signaling pathways, genomics, and molecular pathogenesis. Genes Dis. 2018, 5, 77–106.

3. Badawy, S.M.; Mohamed, A.E.N.A.; Hefnawy, A.A.; Zidan, H.E.; GadAllah, M.T.; El-Banby, G.M. Automatic semantic segmentation of breast tumors in ultrasound images based on combining fuzzy logic and deep learning—A feasibility study. PLoS ONE 2021, 16, e0251899.

4. Zhang, S.C.; Hu, Z.Q.; Long, J.H.; Zhu, G.M.; Wang, Y.; Jia, Y.; Zhou, J.; Ouyang, Y.; Zeng, Z. Clinical Implications of Tumor-Infiltrating Immune Cells in Breast Cancer. J. Cancer 2019, 10, 6175–6184.

5. Irfan, R.; Almazroi, A.A.; Rauf, H.T.; Damaševičius, R.; Nasr, E.A.; Abdelgawad, A.E. Dilated Semantic Segmentation for Breast Ultrasonic Lesion Detection Using Parallel Feature Fusion. Diagnostics 2021, 11, 1212.

6. Faust, O.; Acharya, U.R.; Meiburger, K.M.; Molinari, F.; Koh, J.E.W.; Yeong, C.H.; Kongmebhol, P.; Ng, K.H. Comparative assessment of texture features for the identification of cancer in ultrasound images: A review. Biocybern. Biomed. Eng. 2018, 38, 275–296.

7. Abdelaziz, A.; Abdelhamid, S.R.A. Optimized Two-Level Ensemble Model for Predicting the Parameters of Metamaterial Antenna. Comput. Mater. Contin. 2022, 73, 917–933.

8. Sainsbury, J.R.C.; Anderson, T.J.; Morgan, D.A.L. Breast cancer. BMJ 2000, 321, 745–750.

9. Sun, Q.; Lin, X.; Zhao, Y.; Li, L.; Yan, K.; Liang, D.; Sun, D.; Li, Z.C. Deep Learning vs. Radiomics for Predicting Axillary Lymph Node Metastasis of Breast Cancer Using Ultrasound Images: Don’t Forget the Peritumoral Region. Front. Oncol. 2020, 10, 53.

10. Almajalid, R.; Shan, J.; Du, Y.; Zhang, M. Development of a Deep-Learning-Based Method for Breast Ultrasound Image Segmentation. In Proceedings of the 2018 17th IEEE International Conference on Machine Learning and Applications (ICMLA), Orlando, FL, USA, 17–20 December 2018; pp. 1103–1108.

11. Ouahabi, A. (Ed.) Signal and Image Multiresolution Analysis; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2012.

12. Sami Khafaga, D.; Ali Alhussan, A.; El-kenawy, E.S.M.; Takieldeen, A.E.; Hassan, T.M.; Hegazy, E.A.; Eid, E.A.F.; Ibrahim, A.; Abdelhamid, A.A. Meta-heuristics for Feature Selection and Classification in Diagnostic Breast-Cancer. Comput. Mater. Contin. 2022, 73, 749–765.

13. Sood, R.; Rositch, A.F.; Shakoor, D.; Ambinder, E.; Pool, K.L.; Pollack, E.; Mollura, D.J.; Mullen, L.A.; Harvey, S.C. Ultrasound for Breast Cancer Detection Globally: A Systematic Review and Meta-Analysis. J. Glob. Oncol. 2019, 5, 1–17.

14. Byra, M. Breast mass classification with transfer learning based on scaling of deep representations. Biomed. Signal Process. Control 2021, 69, 102828.

15. Chen, D.R.; Hsiao, Y.H. Computer-aided Diagnosis in Breast Ultrasound. J. Med. Ultrasound 2008, 16, 46–56.

16. Moustafa, A.F.; Cary, T.W.; Sultan, L.R.; Schultz, S.M.; Conant, E.F.; Venkatesh, S.S.; Sehgal, C.M. Color Doppler Ultrasound Improves Machine Learning Diagnosis of Breast Cancer. Diagnostics 2020, 10, 631.

17. Shen, W.C.; Chang, R.F.; Moon, W.K.; Chou, Y.H.; Huang, C.S. Breast Ultrasound Computer-Aided Diagnosis Using BI-RADS Features. Acad. Radiol. 2007, 14, 928–939.

18. Lee, J.H.; Seong, Y.K.; Chang, C.H.; Park, J.; Park, M.; Woo, K.G.; Ko, E.Y. Fourier-based shape feature extraction technique for computer-aided B-Mode ultrasound diagnosis of breast tumor. In Proceedings of the 2012 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, San Diego, CA, USA, 28 August–1 September 2012; pp. 6551–6554.

19. Ding, J.; Cheng, H.D.; Huang, J.; Liu, J.; Zhang, Y. Breast Ultrasound Image Classification Based on Multiple-Instance Learning. J. Digit. Imaging 2012, 25, 620–627.

20. Bing, L.; Wang, W. Sparse Representation Based Multi-Instance Learning for Breast Ultrasound Image Classification. Comput. Math. Methods Med. 2017, 2017, e7894705.

21. Prabhakar, T.; Poonguzhali, S. Automatic detection and classification of benign and malignant lesions in breast ultrasound images using texture morphological and fractal features. In Proceedings of the 2017 10th Biomedical Engineering International Conference (BMEiCON), Hokkaido, Japan, 31 August–2 September 2017; pp. 1–5.

22. Zhang, Q.; Suo, J.; Chang, W.; Shi, J.; Chen, M. Dual-modal computer-assisted evaluation of axillary lymph node metastasis in breast cancer patients on both real-time elastography and B-mode ultrasound. Eur. J. Radiol. 2017, 95, 66–74.

23. Gao, Y.; Geras, K.J.; Lewin, A.A.; Moy, L. New Frontiers: An Update on Computer-Aided Diagnosis for Breast Imaging in the Age of Artificial Intelligence. Am. J. Roentgenol. 2019, 212, 300–307.

24. Geras, K.J.; Mann, R.M.; Moy, L. Artificial Intelligence for Mammography and Digital Breast Tomosynthesis: Current Concepts and Future Perspectives. Radiology 2019, 293, 246–259.

25. Fujioka, T.; Mori, M.; Kubota, K.; Oyama, J.; Yamaga, E.; Yashima, Y.; Katsuta, L.; Nomura, K.; Nara, M.; Oda, G.; et al. The Utility of Deep Learning in Breast Ultrasonic Imaging: A Review. Diagnostics 2020, 10, 1055.

26. Khafaga, D.S.; Alhussan, A.A.; El-kenawy, E.S.M.; Ibrahim, A.; Abd Elkhalik, S.H.; El-Mashad, S.Y.; Abdelhamid, A.A. Improved Prediction of Metamaterial Antenna Bandwidth Using Adaptive Optimization of LSTM. Comput. Mater. Contin. 2022, 73, 865–881.

27. Kadry, S.; Rajinikanth, V.; Taniar, D.; Damaševičius, R.; Valencia, X.P.B. Automated segmentation of leukocyte from hematological images—a study using various CNN schemes. J. Supercomput. 2022, 78, 6974–6994.

28. Abayomi-Alli, O.; Damasevicius, R.; Misra, S.; Maskeliunas, R.; Abayomi-Alli, A. Malignant skin melanoma detection using image augmentation by oversampling in nonlinear lower-dimensional embedding manifold. Turk. J. Electr. Eng. Comput. Sci. 2021, 29, 2600–2614.

29. Maqsood, S.; Damaševičius, R.; Maskeliūnas, R. Hemorrhage Detection Based on 3D CNN Deep Learning Framework and Feature Fusion for Evaluating Retinal Abnormality in Diabetic Patients. Sensors 2021, 21, 3865.

30. Ma, G.; Yue, X. An improved whale optimization algorithm based on multilevel threshold image segmentation using the Otsu method. Eng. Appl. Artif. Intell. 2022, 113, 104960.

31. Khan, M.A.; Kadry, S.; Parwekar, P.; Damaševičius, R.; Mehmood, A.; Khan, J.A.; Naqvi, S.R. Human gait analysis for osteoarthritis prediction: A framework of deep learning and kernel extreme learning machine. Complex Intell. Syst. 2021.

32. Dhungel, N.; Carneiro, G.; Bradley, A.P. The Automated Learning of Deep Features for Breast Mass Classification from Mammograms. In Medical Image Computing and Computer-Assisted Intervention—MICCAI 2016, Proceedings of the 19th International Conference, Athens, Greece, 17–21 October 2016; Lecture Notes in Computer Science; Ourselin, S., Joskowicz, L., Sabuncu, M.R., Unal, G., Wells, W., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 106–114.

33. Khan, M.A.; Alhaisoni, M.; Tariq, U.; Hussain, N.; Majid, A.; Damaševičius, R.; Maskeliūnas, R. COVID-19 Case Recognition from Chest CT Images by Deep Learning, Entropy-Controlled Firefly Optimization, and Parallel Feature Fusion. Sensors 2021, 21, 7286.

34. Odusami, M.; Maskeliūnas, R.; Damaševičius, R.; Krilavičius, T. Analysis of Features of Alzheimer’s Disease: Detection of Early Stage from Functional Brain Changes in Magnetic Resonance Images Using a Finetuned ResNet18 Network. Diagnostics 2021, 11, 1071.

35. Nawaz, M.; Nazir, T.; Masood, M.; Mehmood, A.; Mahum, R.; Khan, M.A.; Kadry, S.; Thinnukool, O. Analysis of Brain MRI Images Using Improved CornerNet Approach. Diagnostics 2021, 11, 1856.

36. Metwally, M.; El-Kenawy, E.S.M.; Khodadadi, N.; Mirjalili, S.; Khodadadi, E.; Abotaleb, M.; Alharbi, A.H.; Abdelhamid, A.A.; Ibrahim, A.; Amer, G.M.; et al. Meta-Heuristic Optimization of LSTM-Based Deep Network for Boosting the Prediction of Monkeypox Cases. Mathematics 2022, 10, 3845.

37. AlEisa, H.N.; El-kenawy, E.M.; Alhussan, A.A.; Saber, M.; Abdelhamid, A.A.; Khafaga, D.S. Transfer Learning for Chest X-rays Diagnosis Using Dipper Throated Algorithm. Comput. Mater. Contin. 2022, 73, 2371–2387.

38. Abdallah, Y.; Abdelhamid, A.; Elarif, T.; Salem, A.B.M. Intelligent Techniques in Medical Volume Visualization. Procedia Comput. Sci. 2015, 65, 546–555.

39. Majid, A.; Khan, M.A.; Nam, Y.; Tariq, U.; Roy, S.; Mostafa, R.R.; Sakr, R.H. COVID19 Classification Using CT Images via Ensembles of Deep Learning Models. Comput. Mater. Contin. 2021, 69, 319–337.

40. Sharif, M.I.; Khan, M.A.; Alhussein, M.; Aurangzeb, K.; Raza, M. A decision support system for multimodal brain tumor classification using deep learning. Complex Intell. Syst. 2022, 8, 3007–3020.

41. Liu, D.; Liu, Y.; Li, S.; Li, W.; Wang, L. Fusion of Handcrafted and Deep Features for Medical Image Classification. J. Phys. Conf. Ser. 2019, 1345, 022052.

42. Alinsaif, S.; Lang, J. 3D shearlet-based descriptors combined with deep features for the classification of Alzheimer’s disease based on MRI data. Comput. Biol. Med. 2021, 138, 104879.

43. Khan, M.A.; Muhammad, K.; Sharif, M.; Akram, T.; Albuquerque, V.H.C.D. Multi-Class Skin Lesion Detection and Classification via Teledermatology. IEEE J. Biomed. Health Inform. 2021, 25, 4267–4275.

44. Masud, M.; Eldin Rashed, A.E.; Hossain, M.S. Convolutional neural network-based models for diagnosis of breast cancer. Neural Comput. Appl. 2022, 34, 11383–11394.

45. Jiménez-Gaona, Y.; Rodríguez-Álvarez, M.J.; Lakshminarayanan, V. Deep-Learning-Based Computer-Aided Systems for Breast Cancer Imaging: A Critical Review. Appl. Sci. 2020, 10, 8298.

46. Muhammad, M.; Zeebaree, D.; Brifcani, A.M.A.; Saeed, J.; Zebari, D.A. Region of Interest Segmentation Based on Clustering Techniques for Breast Cancer Ultrasound Images: A Review. J. Appl. Sci. Technol. Trends 2020, 1, 78–91.

47. Huang, K.; Zhang, Y.; Cheng, H.D.; Xing, P. Shape-Adaptive Convolutional Operator for Breast Ultrasound Image Segmentation. In Proceedings of the 2021 IEEE International Conference on Multimedia and Expo (ICME), Shenzhen, China, 5–9 July 2021; pp. 1–6.

48. Sadad, T.; Hussain, A.; Munir, A.; Habib, M.; Ali Khan, S.; Hussain, S.; Yang, S.; Alawairdhi, M. Identification of Breast Malignancy by Marker-Controlled Watershed Transformation and Hybrid Feature Set for Healthcare. Appl. Sci. 2020, 10, 1900.

49. Mishra, A.K.; Roy, P.; Bandyopadhyay, S.; Das, S.K. Breast ultrasound tumour classification: A Machine Learning—Radiomics based approach. Expert Syst. 2021, 38, e12713.

50. Hussain, S.; Xi, X.; Ullah, I.; Wu, Y.; Ren, C.; Lianzheng, Z.; Tian, C.; Yin, Y. Contextual Level-Set Method for Breast Tumor Segmentation. IEEE Access 2020, 8, 189343–189353.

51. Han, X.; Wang, J.; Zhou, W.; Chang, C.; Ying, S.; Shi, J. Deep Doubly Supervised Transfer Network for Diagnosis of Breast Cancer with Imbalanced Ultrasound Imaging Modalities. In Medical Image Computing and Computer Assisted Intervention—MICCAI 2020, Proceedings of the 23rd International Conference, Lima, Peru, 4–8 October 2020; Lecture Notes in Computer Science; Martel, A.L., Abolmaesumi, P., Stoyanov, D., Mateus, D., Zuluaga, M.A., Zhou, S.K., Racoceanu, D., Joskowicz, L., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 141–149.

52. Moon, W.K.; Lee, Y.W.; Ke, H.H.; Lee, S.H.; Huang, C.S.; Chang, R.F. Computer-aided diagnosis of breast ultrasound images using ensemble learning from convolutional neural networks. Comput. Methods Programs Biomed. 2020, 190, 105361.

53. Byra, M.; Jarosik, P.; Szubert, A.; Galperin, M.; Ojeda-Fournier, H.; Olson, L.; O’Boyle, M.; Comstock, C.; Andre, M. Breast mass segmentation in ultrasound with selective kernel U-Net convolutional neural network. Biomed. Signal Process. Control 2020, 61, 102027.

54. Kadry, S.; Damaševičius, R.; Taniar, D.; Rajinikanth, V.; Lawal, I.A. Extraction of Tumour in Breast MRI using Joint Thresholding and Segmentation—A Study. In Proceedings of the 2021 Seventh International conference on Bio Signals, Images, and Instrumentation (ICBSII), Chennai, India, 25–27 March 2021; pp. 1–5.

55. Lahoura, V.; Singh, H.; Aggarwal, A.; Sharma, B.; Mohammed, M.A.; Damaševičius, R.; Kadry, S.; Cengiz, K. Cloud Computing-Based Framework for Breast Cancer Diagnosis Using Extreme Learning Machine. Diagnostics 2021, 11, 241.

56. Maqsood, S.; Damasevicius, R.; Shah, F.M. An Efficient Approach for the Detection of Brain Tumor Using Fuzzy Logic and U-NET CNN Classification. In Computational Science and Its Applications—ICCSA 2021, Proceedings of the 21st International Conference, Cagliari, Italy, 13–16 September 2021; Lecture Notes in Computer Science; Gervasi, O., Murgante, B., Misra, S., Garau, C., Blečić, I., Taniar, D., Apduhan, B.O., Rocha, A.M.A.C., Tarantino, E., Torre, C.M., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 105–118.

57. Rajinikanth, V.; Kadry, S.; Taniar, D.; Damaševičius, R.; Rauf, H.T. Breast-Cancer Detection using Thermal Images with Marine-Predators-Algorithm Selected Features. In Proceedings of the 2021 Seventh International conference on Bio Signals, Images, and Instrumentation (ICBSII), Chennai, India, 25–27 March 2021; pp. 1–6.

58. Al-Dhabyani, W.; Gomaa, M.; Khaled, H.; Fahmy, A. Dataset of breast ultrasound images. Data Brief 2020, 28, 104863.

59. Khan, M.A.; Sharif, M.I.; Raza, M.; Anjum, A.; Saba, T.; Shad, S.A. Skin lesion segmentation and classification: A unified framework of deep neural network features fusion and selection. Expert Syst. 2022, 39, e12497.

60. El-Kenawy, E.S.M.; Mirjalili, S.; Alassery, F.; Zhang, Y.D.; Eid, M.M.; El-Mashad, S.Y.; Aloyaydi, B.A.; Ibrahim, A.; Abdelhamid, A.A. Novel Meta-Heuristic Algorithm for Feature Selection, Unconstrained Functions and Engineering Problems. IEEE Access 2022, 10, 40536–40555.

61. Samee, N.A.; El-Kenawy, E.S.M.; Atteia, G.; Jamjoom, M.M.; Ibrahim, A.; Abdelhamid, A.A.; El-Attar, N.E.; Gaber, T.; Slowik, A.; Shams, M.Y. Metaheuristic Optimization Through Deep Learning Classification of COVID-19 in Chest X-Ray Images. Comput. Mater. Contin. 2022, 73, 4193–4210.

62. Khafaga, D.S.; Alhussan, A.A.; El-Kenawy, E.S.M.; Ibrahim, A.; Metwally, M.; Abdelhamid, A.A. Solving Optimization Problems of Metamaterial and Double T-Shape Antennas Using Advanced Meta-Heuristics Algorithms. IEEE Access 2022, 10, 74449–74471.

63. Abdelaziz, A.; Abdelhamid, S.R.A. Robust Prediction of the Bandwidth of Metamaterial Antenna Using Deep Learning. Comput. Mater. Contin. 2022, 72, 2305–2321.

64. El-Kenawy, E.S.M.; Mirjalili, S.; Abdelhamid, A.A.; Ibrahim, A.; Khodadadi, N.; Metwally, M. Meta-Heuristic Optimization and Keystroke Dynamics for Authentication of Smartphone Users. Mathematics 2022, 10, 2912.

65. El-kenawy, E.S.M.; Albalawi, F.; Ward, S.A.; Ghoneim, S.S.M.; Metwally, M.; Abdelhamid, A.A.; Bailek, N.; Ibrahim, A. Feature Selection and Classification of Transformer Faults Based on Novel Meta-Heuristic Algorithm. Mathematics 2022, 10, 3144.

66. Takieldeen, A.E.; El-kenawy, E.S.M.; Hadwan, M.; Zaki, R.M. Dipper Throated Optimization Algorithm for Unconstrained Function and Feature Selection. Comput. Mater. Contin. 2022, 72, 1465–1481.

67. Awange, J.L.; Paláncz, B.; Lewis, R.H.; Völgyesi, L. Particle Swarm Optimization. In Mathematical Geosciences: Hybrid Symbolic-Numeric Methods; Awange, J.L., Paláncz, B., Lewis, R.H., Völgyesi, L., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 167–184.

68. Mirjalili, S.; Lewis, A. The Whale Optimization Algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

69. Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey Wolf Optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

70. Samareh Moosavi, S.H.; Khatibi Bardsiri, V. Satin bowerbird optimizer: A new optimization algorithm to optimize ANFIS for software development effort estimation. Eng. Appl. Artif. Intell. 2017, 60, 1–15.

71. Immanuel, S.D.; Chakraborty, U.K. Genetic Algorithm: An Approach on Optimization. In Proceedings of the 2019 International Conference on Communication and Electronics Systems (ICCES), Coimbatore, India, 17–19 July 2019; pp. 701–708.

72. Mirjalili, S.; Mirjalili, S.M.; Hatamlou, A. Multi-Verse Optimizer: A nature-inspired algorithm for global optimization. Neural Comput. Appl. 2016, 27, 495–513.

73. Ariyaratne, M.; Fernando, T. A Comprehensive Review of the Firefly Algorithms for Data Clustering. In Advances in Swarm Intelligence: Variations and Adaptations for Optimization Problems; Biswas, A., Kalayci, C.B., Mirjalili, S., Eds.; Springer International Publishing: Cham, Switzerland, 2023; pp. 217–239.

74. Mirjalili, S. SCA: A Sine Cosine Algorithm for solving optimization problems. Knowl.-Based Syst. 2016, 96, 120–133.

| Article | Approach | Dataset | Features |
|---|---|---|---|
| [56] | Fuzzy logic and U-Net | BUSI | CNN features |
| [14] | Deep representation scaling and CNN | BUSI | Deep features through scaling layers |
| [49] | Radiomics and machine learning | BUSI | Geometric and textural features |
| [3] | Semantic segmentation and fuzzy logic | BUSI | Deep features |
| [50] | U-Net encoder-decoder CNN | BUSI | High-level contextual features |
| [48] | Watershed and Hilbert transform | BUSI | Textural features |
| [47] | Shape-adaptive CNN | Breast ultrasound images | Deep features |

| Metric | Value |
|---|---|
| Mean | |
| Best fitness | |
| Worst fitness | |
| Average select size | |
| Average error | |
| Standard deviation | |
| Accuracy | |
| P value (positive predictive value) | |
| N value (negative predictive value) | |
| Specificity | |
| Sensitivity | |
| F score | |
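The classification rows of the metrics table are standard functions of the confusion-matrix counts (TP, TN, FP, FN). Reading "P value" as positive predictive value (precision) and "N value" as negative predictive value is consistent with the F scores reported in the results tables; for example, for VGG16Net, 2 × 0.231 × 0.750 / (0.231 + 0.750) ≈ 0.353. A minimal sketch under that reading; the `Confusion` class, the function names, and the example counts are hypothetical, and the fitness-based rows are not covered.

```python
from dataclasses import dataclass

@dataclass
class Confusion:
    tp: int  # true positives
    tn: int  # true negatives
    fp: int  # false positives
    fn: int  # false negatives

def f_score(ppv: float, sensitivity: float) -> float:
    """Harmonic mean of positive predictive value and sensitivity."""
    return 2 * ppv * sensitivity / (ppv + sensitivity)

def classification_metrics(c: Confusion) -> dict:
    sensitivity = c.tp / (c.tp + c.fn)  # true positive rate (recall)
    specificity = c.tn / (c.tn + c.fp)  # true negative rate
    ppv = c.tp / (c.tp + c.fp)          # "P value": positive predictive value
    npv = c.tn / (c.tn + c.fn)          # "N value": negative predictive value
    return {
        "accuracy": (c.tp + c.tn) / (c.tp + c.tn + c.fp + c.fn),
        "sensitivity": sensitivity,
        "specificity": specificity,
        "p_value": ppv,
        "n_value": npv,
        "f_score": f_score(ppv, sensitivity),
    }

example = classification_metrics(Confusion(tp=40, tn=45, fp=10, fn=5))
```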

| Model | Accuracy | Sensitivity | Specificity | P value | N value | F score |
|---|---|---|---|---|---|---|
| VGG16Net | 0.776 | 0.750 | 0.778 | 0.231 | 0.972 | 0.353 |
| ResNet-50 | 0.789 | 0.769 | 0.791 | 0.250 | 0.974 | 0.377 |
| AlexNet | 0.828 | 0.811 | 0.830 | 0.273 | 0.982 | 0.408 |
| GoogLeNet | 0.853 | 0.784 | 0.857 | 0.266 | 0.984 | 0.397 |

| Metric | bDDTPSO | DTO | bPSO | bBA | bWOA | bBBO | bMVO | bSBO | bFA | bGA | bGWO_PSO | bGWO_GA |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Average error | 0.557 | 0.574 | 0.608 | 0.617 | 0.608 | 0.576 | 0.584 | 0.616 | 0.606 | 0.588 | 0.613 | 0.594 |
| Average select size | 0.510 | 0.710 | 0.710 | 0.849 | 0.873 | 0.873 | 0.806 | 0.880 | 0.744 | 0.652 | 0.843 | 0.632 |
| Average fitness | 0.620 | 0.636 | 0.635 | 0.657 | 0.642 | 0.640 | 0.664 | 0.674 | 0.686 | 0.648 | 0.644 | 0.642 |
| Best fitness | 0.522 | 0.556 | 0.615 | 0.547 | 0.606 | 0.630 | 0.589 | 0.617 | 0.605 | 0.551 | 0.598 | 0.620 |
| Worst fitness | 0.620 | 0.623 | 0.683 | 0.649 | 0.683 | 0.716 | 0.707 | 0.697 | 0.703 | 0.666 | 0.708 | 0.696 |
| Std. fitness | 0.442 | 0.447 | 0.446 | 0.456 | 0.449 | 0.491 | 0.497 | 0.507 | 0.483 | 0.449 | 0.465 | 0.448 |

| Statistic | bDDTPSO | DTO | bPSO | bBA | bWOA | bBBO | bMVO | bSBO | bFA | bGA | bGWO_PSO | bGWO_GA |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Number of values | 11 | 11 | 11 | 11 | 11 | 11 | 11 | 11 | 11 | 11 | 11 | 11 |
| 75% Percentile | 0.557 | 0.574 | 0.608 | 0.617 | 0.608 | 0.596 | 0.585 | 0.616 | 0.606 | 0.588 | 0.613 | 0.594 |
| 25% Percentile | 0.557 | 0.574 | 0.608 | 0.617 | 0.608 | 0.576 | 0.585 | 0.616 | 0.606 | 0.588 | 0.613 | 0.594 |
| Maximum | 0.557 | 0.576 | 0.618 | 0.617 | 0.628 | 0.628 | 0.615 | 0.636 | 0.626 | 0.638 | 0.633 | 0.629 |
| Median | 0.557 | 0.574 | 0.608 | 0.617 | 0.608 | 0.576 | 0.585 | 0.616 | 0.606 | 0.588 | 0.613 | 0.594 |
| Minimum | 0.557 | 0.564 | 0.604 | 0.607 | 0.608 | 0.576 | 0.585 | 0.616 | 0.606 | 0.588 | 0.613 | 0.594 |
| Range | 0.000 | 0.012 | 0.014 | 0.010 | 0.020 | 0.052 | 0.030 | 0.020 | 0.020 | 0.050 | 0.020 | 0.035 |
| Std. error of mean | 0.000 | 0.001 | 0.001 | 0.001 | 0.002 | 0.006 | 0.003 | 0.002 | 0.002 | 0.005 | 0.002 | 0.003 |
| Std. deviation | 0.000 | 0.003 | 0.003 | 0.003 | 0.006 | 0.019 | 0.009 | 0.006 | 0.006 | 0.015 | 0.006 | 0.011 |
| Mean | 0.557 | 0.573 | 0.608 | 0.617 | 0.610 | 0.586 | 0.588 | 0.619 | 0.609 | 0.593 | 0.616 | 0.598 |
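The rows of the descriptive-statistics tables (minimum, quartiles, median, maximum, range, mean, standard deviation, and standard error of the mean) can be reproduced from the per-run values. A minimal sketch using Python's `statistics` module; the quartile convention (`method="exclusive"`) is an assumption, since statistical packages differ in how they interpolate percentiles, and the input values are illustrative rather than the paper's runs. `describe` is a hypothetical helper name.

```python
import math
import statistics

def describe(values):
    """Summary statistics keyed by the row labels of the descriptive tables."""
    n = len(values)
    sd = statistics.stdev(values)  # sample standard deviation (n - 1 denominator)
    q1, _, q3 = statistics.quantiles(values, n=4, method="exclusive")
    return {
        "Number of values": n,
        "Minimum": min(values),
        "25% Percentile": q1,
        "Median": statistics.median(values),
        "75% Percentile": q3,
        "Maximum": max(values),
        "Range": max(values) - min(values),
        "Mean": statistics.fmean(values),
        "Std. deviation": sd,
        "Std. error of mean": sd / math.sqrt(n),  # SEM = SD / sqrt(n)
    }

summary = describe([1.0, 2.0, 3.0, 4.0, 5.0])  # illustrative values only
```

The same SEM relation can be checked against the optimized-CNN table: 0.0038 / √10 ≈ 0.0012.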

| Source | SS | DF | MS | F (DFn, DFd) | p Value |
|---|---|---|---|---|---|
| Treatment | 0.04443 | 11 | 0.004039 | F (11, 120) = 49.80 | p < 0.0001 |
| Residual | 0.009733 | 120 | 0.00008111 | | |
| Total | 0.05416 | 131 | | | |
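In a one-way ANOVA table, each mean square is SS divided by its degrees of freedom, and F is the treatment mean square divided by the residual mean square, so both ANOVA tables can be checked directly from their SS and DF columns. A minimal sketch; `anova_f` is a hypothetical helper name, and the p value itself would additionally require the F distribution (for example, SciPy's `scipy.stats.f.sf`), which is not used here.

```python
def anova_f(ss_treatment, df_treatment, ss_residual, df_residual):
    """Return (MS treatment, MS residual, F) for a one-way ANOVA table."""
    ms_treatment = ss_treatment / df_treatment  # between-group mean square
    ms_residual = ss_residual / df_residual     # within-group mean square
    return ms_treatment, ms_residual, ms_treatment / ms_residual

# Feature-selection table: 12 algorithms x 11 values -> DF 11 and 132 - 12 = 120.
ms_t, ms_r, f_fs = anova_f(0.04443, 11, 0.009733, 120)
# Optimized-CNN table: 5 models x 10 values -> DF 4 and 50 - 5 = 45.
_, _, f_cnn = anova_f(0.01153, 4, 0.0007819, 45)
```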

| Statistic | bDDTPSO | DTO | bPSO | bBA | bWOA | bBBO | bMVO | bSBO | bFA | bGA | bGWO_PSO | bGWO_GA |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Theoretical median | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Actual median | 0.5568 | 0.574 | 0.6078 | 0.6174 | 0.6076 | 0.576 | 0.5845 | 0.6161 | 0.6062 | 0.5876 | 0.6133 | 0.5941 |
| Sum of negative ranks | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Sum of signed ranks (W) | 66 | 66 | 66 | 66 | 66 | 66 | 66 | 66 | 66 | 66 | 66 | 66 |
| Sum of positive ranks | 66 | 66 | 66 | 66 | 66 | 66 | 66 | 66 | 66 | 66 | 66 | 66 |
| Significant (alpha = 0.05)? | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes |
| Discrepancy | 0.5568 | 0.574 | 0.6078 | 0.6174 | 0.6076 | 0.576 | 0.5845 | 0.6161 | 0.6062 | 0.5876 | 0.6133 | 0.5941 |
| p value (two tailed) | 0.001 | 0.001 | 0.001 | 0.001 | 0.001 | 0.001 | 0.001 | 0.001 | 0.001 | 0.001 | 0.001 | 0.001 |
| Number of values | 11 | 11 | 11 | 11 | 11 | 11 | 11 | 11 | 11 | 11 | 11 | 11 |
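The Wilcoxon signed-rank rows follow from ranking the absolute differences from the theoretical median. When all n differences are positive, W equals n(n + 1)/2 (66 for n = 11, 55 for n = 10) and the exact two-tailed p value is 2/2^n, which gives the reported 0.001 and 0.002. A minimal sketch that builds the exact null distribution by counting subsets of ranks; it assumes no zero differences and no tied absolute differences, which statistical packages handle with extra corrections. Both function names are hypothetical.

```python
def signed_rank_w_plus(differences):
    """Sum of the ranks of |d| over positive d (assumes no zeros and no ties)."""
    order = sorted(range(len(differences)), key=lambda i: abs(differences[i]))
    return sum(rank for rank, i in enumerate(order, start=1) if differences[i] > 0)

def wilcoxon_exact_p(w_plus, n):
    """Exact two-tailed p value of W+ when each sign is equally likely under H0."""
    max_sum = n * (n + 1) // 2
    counts = [1] + [0] * max_sum  # counts[s] = number of rank subsets summing to s
    for r in range(1, n + 1):
        for s in range(max_sum, r - 1, -1):
            counts[s] += counts[s - r]
    total = 2 ** n
    upper = sum(counts[w_plus:]) / total       # P(W+ >= observed)
    lower = sum(counts[: w_plus + 1]) / total  # P(W+ <= observed)
    return min(1.0, 2 * min(upper, lower))

p11 = wilcoxon_exact_p(66, 11)  # feature-selection tables: 11 values per column
p10 = wilcoxon_exact_p(55, 10)  # optimized-CNN tables: 10 values per column
```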

| Model | Accuracy | Sensitivity | Specificity | P value | N value | F score |
|---|---|---|---|---|---|---|
| CNN | 0.925 | 0.300 | 0.981 | 0.588 | 0.940 | 0.397 |
| NN | 0.917 | 0.300 | 0.972 | 0.492 | 0.940 | 0.373 |
| SVM | 0.894 | 0.333 | 0.947 | 0.375 | 0.938 | 0.353 |
| KNN | 0.884 | 0.727 | 0.897 | 0.364 | 0.976 | 0.485 |

| Model | Accuracy | Sensitivity | Specificity | P value | N value | F score |
|---|---|---|---|---|---|---|
| DDTPSO-CNN | 0.981 | 0.984 | 0.981 | 0.865 | 0.998 | 0.920 |
| DTO-CNN | 0.961 | 0.982 | 0.949 | 0.914 | 0.990 | 0.947 |
| PSO-CNN | 0.952 | 0.982 | 0.927 | 0.914 | 0.985 | 0.947 |
| GA-CNN | 0.943 | 0.980 | 0.909 | 0.909 | 0.980 | 0.943 |
| WOA-CNN | 0.938 | 0.980 | 0.889 | 0.909 | 0.976 | 0.943 |

| Statistic | DDTPSO-CNN | DTO-CNN | PSO-CNN | GA-CNN | WOA-CNN |
|---|---|---|---|---|---|
| Number of values | 10 | 10 | 10 | 10 | 10 |
| Minimum | 0.981 | 0.951 | 0.952 | 0.943 | 0.938 |
| Mean | 0.983 | 0.961 | 0.954 | 0.946 | 0.939 |
| Median | 0.981 | 0.961 | 0.952 | 0.943 | 0.938 |
| Maximum | 0.991 | 0.971 | 0.962 | 0.955 | 0.948 |
| 75% Percentile | 0.983 | 0.961 | 0.954 | 0.946 | 0.939 |
| 25% Percentile | 0.981 | 0.961 | 0.952 | 0.943 | 0.938 |
| Std. error of mean | 0.0012 | 0.0015 | 0.0013 | 0.0015 | 0.0010 |
| Std. deviation | 0.0038 | 0.0047 | 0.0042 | 0.0046 | 0.0033 |
| Range | 0.010 | 0.020 | 0.010 | 0.012 | 0.010 |

| Statistic | DDTPSO-CNN | DTO-CNN | PSO-CNN | GA-CNN | WOA-CNN |
|---|---|---|---|---|---|
| Theoretical median | 0 | 0 | 0 | 0 | 0 |
| Actual median | 0.9811 | 0.9607 | 0.9516 | 0.9434 | 0.9375 |
| Sum of negative ranks | 0 | 0 | 0 | 0 | 0 |
| Sum of signed ranks (W) | 55 | 55 | 55 | 55 | 55 |
| Sum of positive ranks | 55 | 55 | 55 | 55 | 55 |
| p value (two tailed) | 0.002 | 0.002 | 0.002 | 0.002 | 0.002 |
| Exact or estimate | Exact | Exact | Exact | Exact | Exact |
| Discrepancy | 0.9811 | 0.9607 | 0.9516 | 0.9434 | 0.9375 |
| Significant (alpha = 0.05)? | Yes | Yes | Yes | Yes | Yes |
| Number of values | 10 | 10 | 10 | 10 | 10 |

| Source | SS | DF | MS | F (DFn, DFd) | p Value |
|---|---|---|---|---|---|
| Treatment | 0.01153 | 4 | 0.002882 | F (4, 45) = 165.9 | p < 0.0001 |
| Residual | 0.0007819 | 45 | 0.00001738 | | |
| Total | 0.01231 | 49 | | | |

| Linear Regression | DTO-CNN | PSO-CNN | GA-CNN | WOA-CNN |
|---|---|---|---|---|
| Best-fit values | | | | |
| Slope | −0.1515 | 1.094 | 1.186 | 0.8053 |
| Y-intercept | 1.11 | −0.1217 | −0.2197 | 0.1473 |
| X-intercept | 7.326 | 0.1113 | 0.1853 | −0.1829 |
| 1/slope | −6.603 | 0.9141 | 0.8435 | 1.242 |
| Std. error | | | | |
| Slope | 0.4324 | 0.04789 | 0.09457 | 0.09921 |
| Y-intercept | 0.425 | 0.04707 | 0.09295 | 0.09751 |
| 95% confidence intervals | | | | |
| Slope | −1.149 to 0.8457 | 0.9835 to 1.204 | 0.9675 to 1.404 | 0.5766 to 1.034 |
| Y-intercept | 0.1294 to 2.090 | −0.2303 to −0.01317 | −0.4341 to −0.005363 | −0.07754 to 0.3722 |
| X-intercept | 1.819 to +infinity | 0.01339 to 0.1912 | 0.005543 to 0.3092 | −0.6455 to 0.07498 |
| Goodness of fit | | | | |
| R square | 0.0151 | 0.9849 | 0.9516 | 0.8917 |
| Sy.x | 0.004962 | 0.0005496 | 0.001085 | 0.001138 |
| Is slope significantly non-zero? | | | | |
| F | 0.1227 | 521.8 | 157.2 | 65.9 |
| DFn, DFd | 1, 8 | 1, 8 | 1, 8 | 1, 8 |
| p value | 0.735 | <0.0001 | <0.0001 | <0.0001 |
| Deviation from zero | Not significant | Significant | Significant | Significant |
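With one numerator degree of freedom, the slope F statistic equals t² for a Student's t with DFd degrees of freedom, so its p value is the two-tailed t probability. When DFd is even (here DFd = 8), that probability has a standard finite-series closed form, so no statistics library is needed. A minimal sketch; `slope_f_test_p` is a hypothetical name, and `scipy.stats.f.sf(F, 1, DFd)` would give the same quantity where SciPy is available.

```python
import math

def slope_f_test_p(f_stat: float, df_resid: int) -> float:
    """Two-tailed p value for F(1, df_resid) via F = t**2 (even df_resid only).

    Uses P(|T| < t) = sin(theta) * sum_k c_k * cos(theta)**(2k), where
    theta = atan(t / sqrt(df)) and c_k = (1*3*...*(2k-1)) / (2*4*...*(2k)).
    """
    if df_resid <= 0 or df_resid % 2:
        raise ValueError("this closed form needs an even, positive df_resid")
    t = math.sqrt(f_stat)
    theta = math.atan(t / math.sqrt(df_resid))
    cos2 = math.cos(theta) ** 2
    term = total = 1.0
    for k in range(1, df_resid // 2):
        term *= (2 * k - 1) / (2 * k) * cos2  # next series coefficient times cos^(2k)
        total += term
    return 1.0 - math.sin(theta) * total

f_values = {"DTO-CNN": 0.1227, "PSO-CNN": 521.8, "GA-CNN": 157.2, "WOA-CNN": 65.9}
p_values = {name: slope_f_test_p(f, 8) for name, f in f_values.items()}
```

For F = 0.1227 with (1, 8) degrees of freedom this returns roughly 0.74, in line with a slope confidence interval that spans zero, while the other three F values give p values below 0.0001.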

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Alhussan, A.A.; Eid, M.M.; Towfek, S.K.; Khafaga, D.S. Breast Cancer Classification Depends on the Dynamic Dipper Throated Optimization Algorithm. Biomimetics 2023, 8, 163. https://doi.org/10.3390/biomimetics8020163

Alhussan AA, Eid MM, Towfek SK, Khafaga DS. Breast Cancer Classification Depends on the Dynamic Dipper Throated Optimization Algorithm. Biomimetics. 2023; 8(2):163. https://doi.org/10.3390/biomimetics8020163

Chicago/Turabian Style: Alhussan, Amel Ali, Marwa M. Eid, S. K. Towfek, and Doaa Sami Khafaga. 2023. "Breast Cancer Classification Depends on the Dynamic Dipper Throated Optimization Algorithm" Biomimetics 8, no. 2: 163. https://doi.org/10.3390/biomimetics8020163

APA Style: Alhussan, A. A., Eid, M. M., Towfek, S. K., & Khafaga, D. S. (2023). Breast Cancer Classification Depends on the Dynamic Dipper Throated Optimization Algorithm. Biomimetics, 8(2), 163. https://doi.org/10.3390/biomimetics8020163