1. Introduction

The development of prostheses is necessary to improve the quality of life of people who have lost limbs. These artificial devices play a crucial role in replacing missing parts of the body, restoring the functionality, mobility, and autonomy of affected individuals [1,2,3]. Additionally, prostheses provide long-term health benefits, drive technological advancements, and enable adaptation. Continuous research and development in this field are fundamental to further improving the lives of those who depend on these devices.

The hand is one of the most developed organs of the body. It allows humans to interact with their environment through complex movements due to the considerable number of degrees of freedom in its structure (27 in total: four in each finger, five in the thumb, and six in the wrist). In addition, it is a fundamental part of physical and social interactions. The upper extremities depend on the hand, so losing one implies a reduction in autonomy, limitations in the development of work and daily activities, and a drastic change in the lifestyle of people [4].

In Ecuador, more than 8% of the population has some need in their upper and/or lower limbs. The cost of a prosthesis, which is around 8000 USD, is excessively high compared to the income of the average Ecuadorian, which, according to the Encuesta Nacional de Empleo, Desempleo y Subempleo (Enemdu), reached 248 USD in August 2021 [5]. In addition, of the people with physical disabilities, only 17% are employed, and most of them are in vulnerable economic situations. Despite several initiatives dedicated to the design and manufacture of prostheses, the needs of existing patients have not been met. The “Las Manuelas” mission, founded in 2007, acquired machinery in 2012 to produce 300 prostheses per month. As of 2019, the prostheses produced had not exceeded 10% of that target.

Considering the aforementioned information, it is vital to develop systems that can adjust and optimize gripping patterns, gripping capacity [1], and hand movements according to the needs and preferences of each individual. This will allow for greater customization and comfort in the use of prostheses. Bionic hands, powered by electromyographic (EMG) signals, can interpret and translate the electrical signals generated by residual muscles into precise commands for finger and hand movement [6]. This provides a higher degree of control and a more natural experience when using the prosthesis.

Furthermore, advances in artificial intelligence (AI) have played a crucial role in the design and creation of bionic hands capable of interpreting EMG signals for precise movement control. These techniques use machine learning algorithms, artificial neural networks (ANNs), and computer vision techniques to process EMG signals and enhance prostheses [3], which play a key role in the design of bionic hands [7]. AI has also improved the bionic hands’ ability to learn and adapt as they interact with the environment. By collecting and analyzing real-time data, bionic hands can adjust their movements and grip strength more precisely and efficiently. These advancements have allowed bionic hands to provide better and more sophisticated functionality.

Several studies have focused on the design and manufacture of hand prostheses that utilize EMG signals and AI for real-time control. For example, Amsüss et al. [8] propose a pattern recognition system for surface EMG signals to control upper limb prostheses using a trained ANN. Other researchers [9] present a study based on EMG signals acquired from muscles and motion detection through a Human–Machine Interface, designing an upper limb prosthesis using an AI-based controller. In another work [10], a hardware design for hand gesture recognition using EMG is developed and implemented on a Zynq platform, processing the EMG signals acquired with an eight-channel Myo sensor. Furthermore, in [11], various methods are applied to detect and classify muscle activities using sEMG signals, with ANNs showing the highest accuracy in recognizing movements among and within subjects. Additionally, in [12], a woven band sensor is manufactured for myoelectric control of prosthetic hands based on single-channel sEMG signals.

The use of artificial intelligence techniques, especially ANNs, has revolutionized the design of bionic hands by allowing control through EMG signals, providing a higher degree of control, functionality, and adaptability. These advancements have had a significant impact on the quality of life of people with amputations or disabilities in their upper limbs. However, despite the progress and promising applications of artificial intelligence in EMG signal processing and other fields, there are still challenges and considerations that need to be addressed. In this regard, this work proposes the design and manufacture of an accessible hand prosthesis powered by EMG signals and controlled by an online neural network. The system demonstrates accuracy in classification and load capacity.

The rest of this manuscript is structured as follows: Section 2 presents some notable related works and provides general information on the types of prostheses, techniques, and EMG signals and their applications using artificial intelligence. Section 3 describes the system design, the mechanical and control circuit design, data acquisition and analysis, and system integration for controlling a bionic hand. Section 4 gathers the experimental results and presents the discussion. Finally, Section 5 presents the conclusions of this study and states the future work.

2. Background and Related Works

This section provides a background and a review of the works related to the main topics addressed in the research.

2.1. Types of Prostheses

Upper limb prostheses can be classified into two types according to their functionality.

2.1.1. Passive Prostheses

Passive prostheses can be classified into cosmetic or functional prostheses. The cosmetic ones, as seen in Figure 1, are intended to be an aesthetic replacement, simulating the patient’s missing limb section as closely as possible [13], while the functional ones are intended to assist the subject in very specific activities, which limits the user’s capabilities.

2.1.2. Active Prostheses

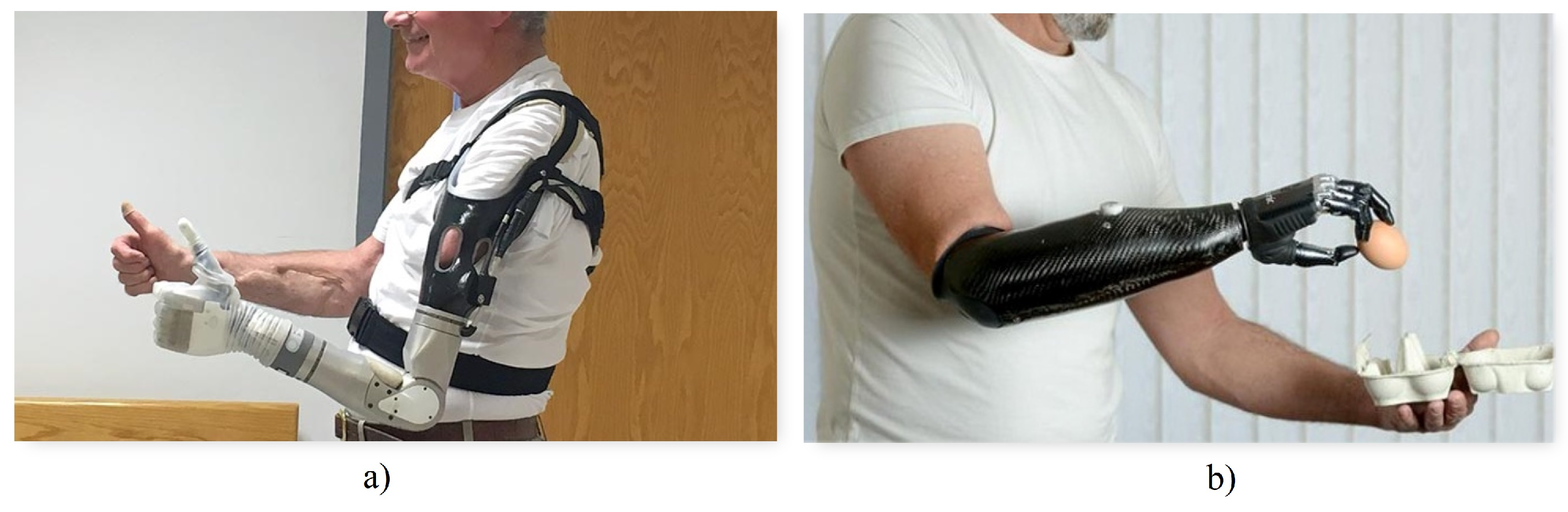

Active prostheses, as seen in Figure 2a, have mobile joints and can be activated by two types of systems: mechanical actuation and external power supply [4]. Mechanical actuation involves the use of cables and harnesses connected to the available upper limb parts as well as other torso muscles to open, close, or move the prosthesis. The functionality achieved with this method is limited to simple grips and support, with the disadvantage that it requires a considerable amount of effort from the user, which causes fatigue. On the other hand, externally powered prostheses use batteries to obtain the energy required for movement, as shown in Figure 2b. Additionally, in this type of prosthesis, both the mechanisms that make up the hand and the motion control system are considerably more complex, involving microprocessors and sensors that detect electromyographic signals from the user [15].

2.2. Electromyography

Electromyography, or EMG, is the discipline that deals with the collection and analysis of the electrical signals present during muscle contraction [18]. Ideally, the movement of a joint is under the voluntary control of the individual and is achieved by motor neurons. The process begins in the upper motor neurons found in the cerebral cortex [19]. These send an ionic flux to the lower motor neurons, which start in the spinal cord [19] and are responsible for sending the signal across the membranes of the muscle fibers in question [18]. The amplitude of the resulting signal is usually in the range of 0 to 10 mV [20].

EMG signals can be used to detect a range of neuromotor diseases, such as muscle atrophy or weakness, chronic denervation, and muscle twitching, among others. In turn, they can be used for the voluntary control of robots, computers, machines, and prostheses [21]. In addition, the amplitude of the sEMG signal can be used to examine the timing of muscle activity and the relative intensity or interaction between muscles that are actively engaged at the same time [22].

2.2.1. Noise in EMG Signals

EMG signals present certain vulnerabilities, as they travel through different tissues and are affected by various types of noise.

Inherent noise: Measuring instruments, being electronic equipment, can introduce noise due to their very nature [18]. This can be eliminated with high quality instruments [20].

Environmental noise: Any electromagnetic device can generate signal noise. In turn, the human body itself contains electromagnetic radiation.

Movement: When there is any kind of movement, the electrodes—both their pads (which make contact with the skin) and their wires—may add noise to the signals.

Inherent signal instability: The anatomical and physiological properties of muscles introduce a type of noise that is impossible to avoid [18]. For this reason, it is important to clean up the noise at a processing stage [20].

2.2.2. EMG Techniques

EMG signals are recorded by means of electrodes, which capture their speed and amplitude. Two types of techniques are used for this purpose [23,24].

Invasive techniques, as shown in Figure 3b, require the introduction of electrodes to make contact with the inner musculature in order to record minimal current flows.

Advantages: By having electrodes that make direct contact with the muscle membrane, more precise signals are obtained.

Disadvantages: The complexity of the procedure, the discomfort it implies for the user, the requirement of a professional to carry out the process, and the care required to perform any movement. For a daily life application, these factors prevent a comfortable integration between the individual and the prosthesis.

On the other hand, non-invasive or surface techniques, as shown in Figure 3a, use surface electrodes placed on the skin.

Advantages: They provide much more comfort and freedom to the user, since a detached pad or a disconnected electrode does not represent any significant risk.

Disadvantages: They are vulnerable to more sources of noise, such as skin impurities. At the same time, the electrode pads can easily wear out within a few hours.

2.2.3. Sampling Frequency

EMG signals contain relevant information in the 50–150 Hz range. However, some research supports the use of higher sampling frequencies to obtain better results [27]. Using a high-pass filter with a cutoff frequency of 120 Hz allows for the elimination of electrical line noise, among others [28].
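The effect of a high-pass stage can be illustrated with a first-order digital filter. The following is a minimal sketch only: the first-order RC structure, the function name `high_pass`, and the 1 kHz sampling rate used in the usage lines are assumptions made for illustration and do not describe the filter used in the cited work.

```python
import math

def high_pass(samples, cutoff_hz, fs_hz):
    """First-order RC high-pass filter (illustrative assumption)."""
    if not samples:
        return []
    dt = 1.0 / fs_hz                        # sampling period in seconds
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)  # RC time constant for the cutoff
    alpha = rc / (rc + dt)
    out = [samples[0]]
    for i in range(1, len(samples)):
        # Pass on changes between samples, let slow trends decay.
        out.append(alpha * (out[-1] + samples[i] - samples[i - 1]))
    return out

# Usage: a slow 10 Hz component is attenuated far more than a 400 Hz one.
FS = 1000.0  # assumed sampling rate in Hz
slow = [math.sin(2 * math.pi * 10 * n / FS) for n in range(1000)]
fast = [math.sin(2 * math.pi * 400 * n / FS) for n in range(1000)]
slow_peak = max(abs(v) for v in high_pass(slow, 120.0, FS)[500:])
fast_peak = max(abs(v) for v in high_pass(fast, 120.0, FS)[500:])
```

A higher-order filter would give a sharper transition around the cutoff; the first-order form is used here only because it fits in a few lines.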

2.3. Anatomy of the Hand

The human hand is made up of four fingers and a thumb. Each finger is composed of four bones. The three segments that protrude from the palm are called phalanges: distal, middle, and proximal, from the tip to the base of the finger, respectively. The fourth bone of each finger is called the metacarpus. The metacarpals connect each group of phalanges to a group of bones called carpals, located at the base of the palm. The carpals allow the wrist to rotate and move using the radius and ulna as a pivot [29]. This structure gives the hand 27 degrees of freedom: four in each finger, five in the thumb, and six in the wrist. Generally, prosthesis manufacturers limit movement to considerably fewer degrees of freedom due to power, space, weight, and control considerations.

2.4. Grip Types

Gripping an object involves grasping or holding it between two surfaces of the hand. While the ways in which objects of different shapes and sizes can be grasped are extremely diverse, there is a general system by which grasps can be classified according to the muscular function required to perform and maintain them [30].

Under the previously mentioned system, the grip can be classified as either a power grip or a precision grip. The power grip usually results in flexion of all finger joints. It may include the thumb to stabilize the object to be grasped, which is held between the fingers and the palm. On the other hand, the precision grip positions an object between one or more fingers and the thumb without involving the palm.

2.5. Artificial Intelligence

Artificial intelligence (AI) is the scientific field dedicated to developing intelligent systems that can operate autonomously with minimal human intervention [31]. With the advancements in EMG signal recording techniques and the rapid increase in computational power worldwide, the utilization of AI in processing biological signals is experiencing significant growth [32,33]. Moreover, significant progress has been made in the development of applications for engineering, technology, and medicine, mainly aimed at creating novel solutions that enable innovation and early detection of diseases [34,35,36,37]. Additionally, AI algorithms have been successfully employed in hand gesture recognition [38,39] and pattern recognition in EMG signals for the control of robotic prostheses [40].

While artificial intelligence offers significant potential in EMG signal processing and various domains, addressing challenges related to dataset availability, interpretability, and ethical considerations remains crucial for the responsible and effective use of AI in these applications. It is important to note that in critical applications such as medical diagnostics, transparent and interpretable AI models are essential to build confidence and ensure the reliability of the results.

Within the concept of AI, there is Machine Learning (ML) and, within this, there is Deep Learning (DL).

2.5.1. Machine Learning

Machine learning relies on mathematical and statistical algorithms that are capable of finding patterns in a given set of data [31,41].

There are three types of ML.

Supervised learning requires labeled data in order to extract patterns and associate them with their respective labels. Classification and regression are two types of supervised learning, in which discrete and continuous variables are predicted, respectively.

Unsupervised learning does not require labeled data, but rather consists of restructuring the input data and grouping them into subsets containing similar characteristics. Clustering and association are two types of unsupervised learning.

Reinforcement learning consists of rewarding or penalizing an agent for acting in a certain way within an environment. The agent explores the environment, interacts with it, and learns from the feedback provided.

2.5.2. Deep Learning

Deep learning is based on artificial neural networks, which try to simulate the biological behavior of the human brain. They learn from large amounts of data, which are processed by a set of neurons, to finally yield predictions [31].
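The idea of data being processed by layers of neurons to yield predictions can be sketched as a forward pass through a tiny fully connected network. This is a minimal sketch under stated assumptions: the function names, the layer sizes, and the hand-picked weights are illustrative and are not the network used in this work.

```python
import math

def dense(inputs, weights, biases):
    """One fully connected layer: each row of weights feeds one neuron."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def relu(values):
    """Rectified linear activation applied element-wise."""
    return [max(0.0, v) for v in values]

def softmax(values):
    """Turn raw scores into probabilities that sum to one."""
    peak = max(values)  # subtract the maximum for numerical stability
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def forward(inputs, w1, b1, w2, b2):
    """Hidden layer with ReLU followed by a softmax output layer."""
    hidden = relu(dense(inputs, w1, b1))
    return softmax(dense(hidden, w2, b2))

# Hypothetical weights chosen by hand so the result is easy to follow.
W1 = [[1.0, 0.0], [0.0, 1.0]]
B1 = [0.0, 0.0]
W2 = [[2.0, 0.0], [0.0, 2.0]]
B2 = [0.0, 0.0]
probs = forward([1.0, 0.0], W1, B1, W2, B2)  # first class gets the larger share
```

In a trained network the weights are learned from data rather than written by hand; only the inference path is shown here.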

2.5.3. TensorFlow Lite

There are several libraries designed for the development of AI models, such as PyTorch, Keras, and TensorFlow. However, it was necessary to select a library that would allow the model to be easily deployed on the selected microcontroller (see Section 3.2.3). For this reason, TensorFlow Lite was considered the best option, since it is specifically designed to deploy pre-trained neural networks on microcontrollers [42]. It has a set of tools developed for the deployment of machine learning models in embedded systems. Its advantages [43] include the following:

Latency: no need to access a server; the inference is made on device.

Connectivity: no internet connection is needed, so it can be used in remote sites.

Privacy: there is no data exchange, which reduces the system's exposure to attacks.

Size: the models are compressed and their size is reduced.
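One common way to reduce model size, assumed here purely for illustration, is to store weights as 8-bit integers instead of 32-bit floats, which cuts their storage by a factor of four. The sketch below shows the general affine quantization idea in plain Python; the function names are hypothetical, and it is not the TensorFlow Lite API or necessarily the scheme applied in this work.

```python
def quantize(weights):
    """Map float weights to int8 values with a shared scale and zero point."""
    lo = min(min(weights), 0.0)  # make sure the range contains zero
    hi = max(max(weights), 0.0)
    scale = (hi - lo) / 255.0
    if scale == 0.0:
        scale = 1.0              # all-zero weights: any scale works
    zero_point = round(-128 - lo / scale)
    quantized = [max(-128, min(127, round(w / scale) + zero_point))
                 for w in weights]
    return quantized, scale, zero_point

def dequantize(quantized, scale, zero_point):
    """Recover approximate float weights from the int8 representation."""
    return [(q - zero_point) * scale for q in quantized]

# Usage with hypothetical weights.
original = [-1.0, -0.25, 0.0, 0.5, 1.0]
q, scale, zp = quantize(original)
restored = dequantize(q, scale, zp)
```

Each restored weight differs from the original by at most roughly one quantization step, which is the price paid for the smaller footprint.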

2.6. Design Specifications

The average weight of a hand is 400 g, or 0.6 percent of total body weight for men and 0.5 percent for women. However, existing prostheses of similar weight are considered too heavy by users. In a comparative study of myoelectric prostheses, a range of 350 g to 615 g was observed for commercial prostheses and 350 g to 2200 g for research prototypes. However, this range does not represent consistent comparisons because in some cases researchers include the actuation and control system in the total weight, while others consider only the weight of the structure that makes up the hand. While there is no maximum weight specification for prostheses, the consensus is that weight should be minimized, with some groups of researchers defining a limit of up to 500 g [44].
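The percentages above can be turned into a quick estimate of natural hand mass for a given user and compared with the 500 g limit. This is a minimal sketch: the function names and the 70 kg example body mass are assumptions introduced for illustration.

```python
# Fractions of total body weight quoted in the text.
HAND_FRACTION = {"male": 0.006, "female": 0.005}
MAX_PROSTHESIS_MASS_G = 500.0  # upper limit suggested by some research groups

def estimated_hand_mass_g(body_mass_kg, sex):
    """Estimate natural hand mass in grams from total body mass."""
    return body_mass_kg * 1000.0 * HAND_FRACTION[sex]

def within_limit(prosthesis_mass_g):
    """Check a candidate prosthesis mass against the 500 g limit."""
    return prosthesis_mass_g <= MAX_PROSTHESIS_MASS_G

# Usage: a hypothetical 70 kg male user gives roughly 420 g.
reference_mass = estimated_hand_mass_g(70.0, "male")
```

Because users perceive prostheses of similar weight to a natural hand as too heavy, such an estimate is better read as an upper reference than as a design target.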

The opening distance of commercial prostheses ranges from 35 to 102 degrees with an average closing speed of 78.2 degrees/s. The average grip force is 7.97 N with a maximum of 16.1 N and a minimum of 3 N. Additionally, the flexibility of the finger mechanism in the bending direction is an important factor in avoiding breakage [44].

2.7. Mechanisms

The main mechanisms are separated into central and individual actuation systems. Central systems actuate all five fingers simultaneously with a single actuator, while individual systems dedicate one actuator to each finger and, in some cases, two actuators for thumb rotation and flexion–extension.

Fingers usually include a proximal joint similar to the metacarpophalangeal joint and a distal joint that encompasses the function of the distal interphalangeal and proximal interphalangeal joints. This type of mechanism can be seen in the Vincent, iLimb, and Bebionic prostheses in Figure 4. Other variants consist of a single segment as a finger with a metacarpophalangeal joint, as can be seen in the Michelangelo prosthesis in Figure 4 and in the SensorHand Speed prosthesis shown in Figure 5.

Regardless of the number of joints, the links forming the fingers have a fixed motion relative to each other rather than each joint acting independently. This allows flexion–extension to be achieved by employing various four-bar mechanisms, as can be seen in Figure 4. Alternatives for flexion–extension include ropes as tendons; a set of fingers connected by a link to form a gripper actuated by a single motor, as shown in Figure 5; and a combination of ropes for flexion and springs for extension, as seen in Figure 6.

2.8. Sensors

Pancholi and Agarwal [47] developed a low-cost EMG acquisition system for arm activity recognition (AAR). They found that about 80% of EMG signals were captured efficiently and that the overall accuracy for AAR was about 79%. EMG data can be collected from various upper extremity actions, namely HO (open hand), HC (closed hand), WE (wrist extension), WF (wrist flexion), SG (soft grip), MG (medium grip), and HG (hard grip).

2.9. Preprocessing

Despite the challenges and ongoing research in the field of preprocessing EMG signals, significant progress has been made. Researchers have developed techniques and methodologies to mitigate the effects of noise and improve the quality of acquired signals. Reaz et al. [20] not only identified obstacles in EMG signal acquisition but also proposed methods for detecting and classifying these signals. Their work provides valuable insights into the preprocessing techniques that can be employed to enhance the reliability and accuracy of EMG data analysis. Similarly, other authors [48] conducted a comparative analysis of different configurations for acquiring hand motion EMG signals, achieving an acquisition efficiency of 54%. Furthermore, Fang et al. [49] highlighted the challenges associated with pattern recognition and classification of EMG signals, including issues related to data quality and interpretation. Their research sheds light on the complexities involved in preprocessing EMG data and emphasizes the need for further advancements in this area. Continued research and development in this field are crucial for advancing the accuracy and reliability of EMG signal processing and analysis.

2.10. Feature Extraction and Classification

It is worth noting that the selection and utilization of feature extraction and classification techniques for EMG signals depend on various factors, including the specific application, the complexity of the task, and the available computational resources.

While artificial neural networks have shown promising results in EMG signal classification [21,50], other machine learning algorithms, such as Support Vector Machine [51,52], K Nearest Neighbors [53,54], and Multilayer Perceptron [55], have also been successfully employed in this domain [56]. The choice of algorithm often depends on the specific requirements and characteristics of the classification task.
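To make one of these classifiers concrete, the following is a minimal K Nearest Neighbors sketch in plain Python. Everything in it is assumed for illustration: the function name `knn_predict`, the two-channel feature vectors, and the toy values are invented and are not data from the cited studies. The labels HO and HC reuse the open-hand and closed-hand abbreviations introduced earlier.

```python
import math
from collections import Counter

def knn_predict(train_x, train_y, query, k=3):
    """Label a query point by majority vote among its k nearest neighbors."""
    distances = sorted(
        (math.dist(features, query), label)
        for features, label in zip(train_x, train_y)
    )
    votes = Counter(label for _, label in distances[:k])
    return votes.most_common(1)[0][0]

# Invented two-channel feature vectors (for example, one amplitude per sensor).
train_x = [(0.10, 0.80), (0.15, 0.75), (0.12, 0.85),
           (0.70, 0.20), (0.80, 0.25), (0.75, 0.15)]
train_y = ["HO", "HO", "HO", "HC", "HC", "HC"]

prediction = knn_predict(train_x, train_y, (0.72, 0.22))
```

K Nearest Neighbors needs no training phase, but it stores every training sample, which matters on memory-constrained hardware.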

In addition, the emergence of deep learning approaches has facilitated more complex and automated analysis of EMG signals [32,57,58]. These deep neural networks can leverage hierarchical representation learning to extract discriminative features directly from the raw EMG data, thereby simplifying the preprocessing stage.

However, regardless of the chosen technique, it is crucial to ensure the quality of the EMG signals. This involves employing appropriate signal cleaning and filtering techniques to remove noise and any external disturbances that could interfere with accurate classification [20].

The use of artificial neural networks, along with other machine learning algorithms and deep learning approaches, has achieved excellent results and proven to be effective in the classification of EMG signals. The selection of techniques should be adapted to the specific requirements of the task. Additionally, signal quality and preprocessing steps play a vital role in obtaining reliable and accurate results.

2.11. Actuators

Due to space, weight, and energy consumption restrictions, motors are limited to small DC models with high gear reductions.

The prosthesis approach determines the number of actuators included in the prosthesis. Highly functional prostheses are designed with only one or two actuators connected to a transmission system that allows them to assume the main grasping positions with considerable force. On the other hand, anatomically correct prostheses have reduced grip force but are able to mimic a greater number of natural gestures and perform tasks that require greater precision [44].

Additionally, the number of actuators considerably influences the weight of the prosthesis. To counteract this, the actuators are commonly placed on the fingers near the metacarpophalangeal joint or in the center of the palm, depending on the choice of main mechanism. They are kept as close as possible to each other and to other components of considerable weight, since the weight distribution influences the weight perceived by the user [44].

5. Conclusions and Future Works

The design of a three-finger prosthesis capable of performing precision and power grips was presented. This design utilizes a six-bar mechanism to alternate between its position limits and is capable of holding the target load of 500 g, as well as gripping small objects that require greater precision, such as pens or screwdrivers. In terms of gripping force, it was estimated that, based on the selected motor and the length of the fingers, the gripper would reach 23 N, which is within the average range of research prototypes.
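The relation between the 500 g target load and the gripping force can be illustrated with a simple friction model, in which an object is pinched between two contact surfaces and held against gravity by friction alone. This model, the friction coefficient of 0.5, and the function name are assumptions made for illustration; they are not the estimation method used in this work.

```python
G = 9.81  # gravitational acceleration in m/s^2

def min_grip_force_n(load_kg, friction_coeff, contacts=2):
    """Smallest normal force per contact that keeps the load from slipping.

    Assumes friction at each contact carries an equal share of the weight:
    contacts * friction_coeff * force >= load_kg * G.
    """
    return load_kg * G / (contacts * friction_coeff)

# Usage: 500 g load with an assumed friction coefficient of 0.5.
required = min_grip_force_n(0.5, 0.5)  # about 4.9 N
margin = 23.0 / required               # estimated 23 N versus requirement
```

Under these assumptions the estimated 23 N leaves a comfortable margin, although smoother objects with lower friction would reduce it.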

Additionally, this design was validated by a dynamic simulation of the opening and closing of the gripper and a finite element analysis on the most important link, both carried out using Autodesk Inventor 2022. Based on the resulting starting torque from the dynamic simulation, it was determined that the selected motor was adequate, ensuring that the mechanism will not suffer unforeseen displacements due to the load. On the other hand, the connecting link between the motor coupling and the finger link did not show any significant deformations or fatigue failures, so its design is adequate for the target load. To reduce the weight of the prototype, as well as the cost of printing while maintaining structural integrity, we plan to simplify the covers and turning base in future iterations using the shape optimization tool available in Fusion 360.

The gyroscope, which was used during data collection, did not provide relevant information to the problem. This is due to the fact that during the recording it was ensured that the arm maintained a single position and angle. Therefore, the sensor did not register significant movement changes. Its integration could be useful to explore future topics.

The sampling frequency used in the experiment (1 kHz) stood out compared to commercial EMG signal collection systems, which operate at around 128 Hz. This allowed us to work with smaller time windows during preprocessing, to filter the signal without losing representative information, and to characterize the signal efficiently by calculating the RMS values. Additionally, the latter technique allowed us to preserve the temporal structure of the signals.
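The RMS characterization mentioned above can be sketched as a windowed computation that yields one value per time window and therefore keeps the order of events in the signal. This is a minimal sketch: the function name and the 100-sample window (100 ms at 1 kHz) are assumptions, not the parameters used in this work.

```python
import math

def windowed_rms(samples, window):
    """Root mean square of consecutive, non-overlapping windows."""
    return [
        math.sqrt(sum(x * x for x in samples[start:start + window]) / window)
        for start in range(0, len(samples) - window + 1, window)
    ]

# Usage: a 2 s recording at 1 kHz gives 2000 samples, or 20 windows of 100 ms.
recording = [math.sin(2 * math.pi * 80 * n / 1000.0) for n in range(2000)]
features = windowed_rms(recording, 100)
```

Shorter windows give finer time resolution at the cost of noisier RMS estimates, which is the trade-off a high sampling rate helps to relax.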

Although the overall accuracy of the algorithm was 80.21%, the model performed notably worse on the action of making a fist than on the other actions, with an accuracy of 70%. Based on observations, in healthy subjects this particular action involves both the inner and outer forearm muscles, whereas in the test subject it involved only the inner muscle. By contrast, wrist flexion and extension are opposite actions that activate mostly opposite muscles of the arm (the brachioradialis and the flexor carpi ulnaris), so it is easier to discriminate between them. As a solution to this challenge, it is proposed to train the algorithm using more data so that it generalizes better across actions.

Since the designed hardware uses the ESP32-C3, its RISC-V based architecture presents advantages in the storage and reading of data in memory, allowing us to obtain a sampling rate of 1 kHz for each of the nine variables of interest (three EMG sensors, three acceleration axes, three gyroscope axes). The designed electronic circuit was able to collect data for the training stage and, in turn, was used for real-time operation to control the gripper. The ESP32-C3 development board was able to process the EMG signals and drive the gripper servomotors according to the outputs of the AI model.

Unlike EMG systems that may use signal intensity as a trigger, this system is based on an analysis of signal behavior; this provides robustness to the system. If a user presents any symptom of muscle fatigue due to prolonged use, the system would be able to characterize the shape of the signal, regardless of its relative intensity.

Through the analysis of the accuracy in different time windows, it was concluded that using a larger window during data recording does not necessarily yield a significant increase in the accuracy of the model. This is because MyoWare EMG sensors have an active filter that prevents the signal from remaining active for a long period of time. Therefore, the most relevant information is found in the activation of the muscle and not in its deactivation. Thus, reducing the time window could help to reduce the amount of unrepresentative data.

The inference algorithm was able to efficiently classify 95.13% of two of the classes using data collected from one subject. The online classification time of the system was 0.08 s, while a data recording of 2 s was needed, giving a total prosthesis reaction time of 2.18 s. By using a threshold to consider only predictions with an accuracy greater than 70%, we were able to reduce the number of erroneous predictions between classes. The priority was to reduce false positives as much as possible. In other words, when the subject performed an action, it was preferable to keep the gripper still rather than have it move incorrectly. In this case, neither bias nor variance problems were detected in the model, as both training and testing errors were low.
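The thresholding strategy can be sketched as a decision rule that acts only when the winning class is sufficiently likely and otherwise keeps the gripper still. This is a minimal sketch, assuming the 70% figure refers to the model's output probability for the winning class; the function name and the class labels are hypothetical.

```python
def decide(probabilities, labels, threshold=0.70):
    """Return the predicted label, or None to keep the gripper still."""
    best = max(range(len(probabilities)), key=probabilities.__getitem__)
    if probabilities[best] > threshold:
        return labels[best]
    return None  # too uncertain: avoiding a false positive takes priority

# Hypothetical class labels and model outputs.
LABELS = ["fist", "wrist_flexion", "wrist_extension"]
confident = decide([0.85, 0.10, 0.05], LABELS)  # "fist"
uncertain = decide([0.50, 0.30, 0.20], LABELS)  # None, so no movement
```

Raising the threshold trades missed actions for fewer incorrect movements, which matches the stated priority of minimizing false positives.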

The project was framed by certain specific objectives and met all the requirements. However, we recognize that the applications are limited to the framework of the project. The hand design could be improved to endure more weight, particularly on the wrist. Regarding portability, as future work, it is proposed to design a much lighter and more compact circuit that can be easily carried on the subject’s arm. Additionally, it is proposed to redesign the connections of the development board in order to reduce, as much as possible, the noise generated in the EMG signals by physical factors. Regarding the artificial intelligence model used, it is proposed to evaluate the use of deep neural networks capable of generalizing between data from different test subjects, reducing the preprocessing time, and increasing the accuracy of the model. In addition, recurrent neural networks could be studied as a way to extend the time window analysis.

For more reality-oriented implementations, it is proposed to analyze the use of the gyroscope. In addition, since the test subject does not remain static, but requires the use of the prosthesis while in motion, it is proposed to use dry electrodes, since adhesive sensors tend to cause skin irritation.