Application of Event Cameras and Neuromorphic Computing to VSLAM: A Survey

Abstract

1. Introduction

This survey makes the following contributions:

- A critical analysis and discussion of the methods and technologies employed by traditional VSLAM systems.

- An in-depth discussion of the challenges facing traditional VSLAM systems and of future directions for resolving the identified problems and limitations.

- A rationale for and analysis of the use of event cameras in VSLAM to overcome the issues faced by conventional VSLAM approaches.

- A detailed exploration of the feasibility of integrating neuromorphic processing into event-based VSLAM systems to further enhance performance and power efficiency.

The paper is organized as follows:

- Introduction (Section 1): Provides an overview of the problem domain and highlights the need for advances in VSLAM technology.

- Frame-based cameras in VSLAM (Section 2): Discusses the traditional approach to VSLAM using frame-based cameras, outlining their limitations and challenges.

- Event cameras (Section 3): Introduces event cameras and their operational principles, along with discussing their potential benefits and applications across various domains.

- Neuromorphic computing in VSLAM (Section 4): Explores the application of neuromorphic computing to the VSLAM problem, emphasizing its potential to address the performance and power-consumption constraints commonly encountered in autonomous systems.

- Summary and future directions (Section 5): Provides a synthesis of the key findings from the previous sections and outlines potential future directions for VSLAM research, particularly focusing on the integration of event cameras and neuromorphic processors.

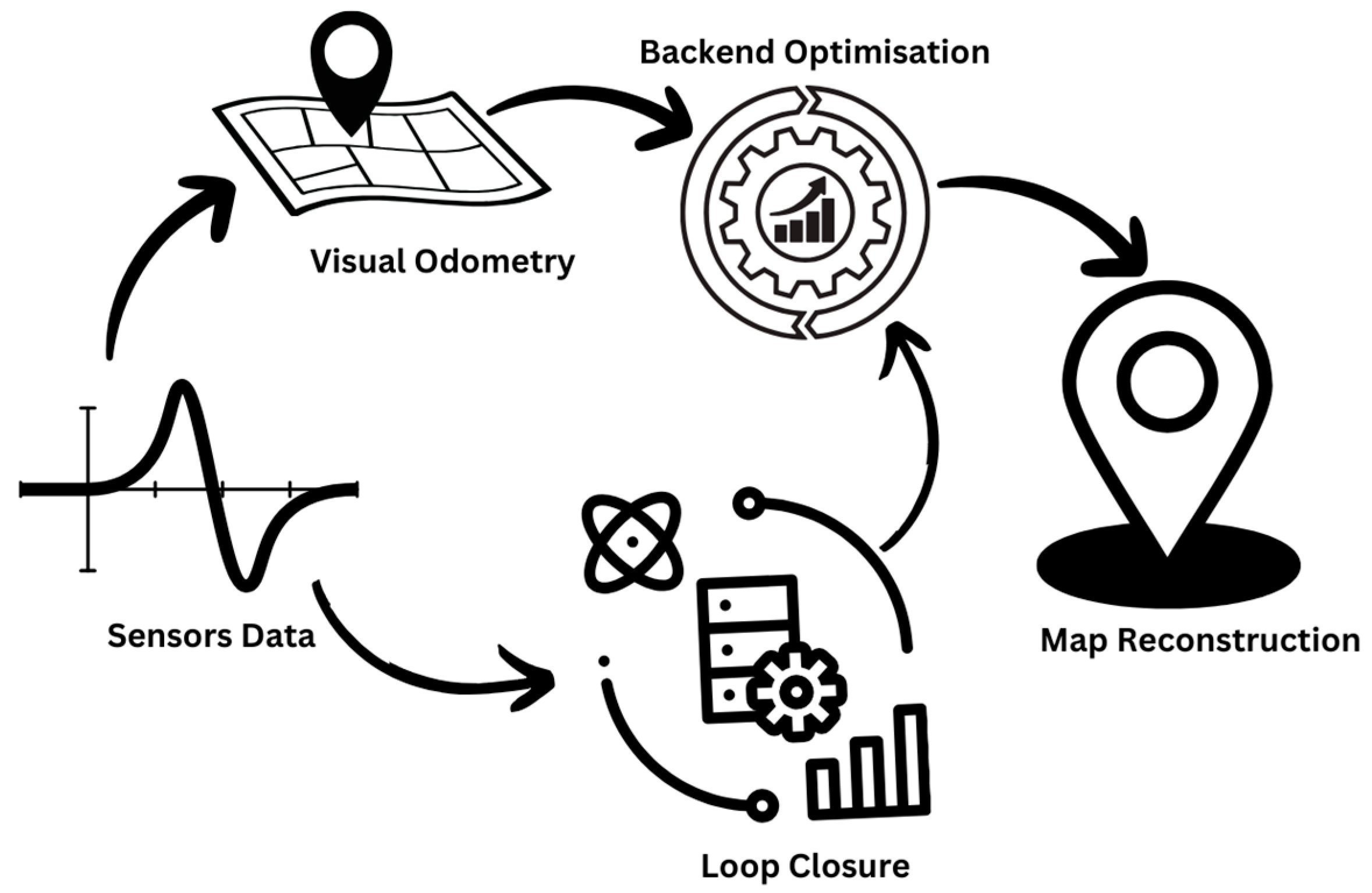

2. Camera-Based SLAM (VSLAM)

Limitations of Frame-Based Cameras in VSLAM

- Ambiguity in feature matching: Feature matching is a critical step in feature-based SLAM. However, frame-based cameras struggle with scenes that contain few distinctive features (e.g., plain walls). Moreover, without depth information (as is the case with standard monocular cameras), it is even harder for the matching process to distinguish between similar features, which can lead to errors in data association.

- Sensitivity to lighting conditions: Changes in lighting alter the appearance of the features captured by traditional cameras, making it more difficult to match features consistently across frames [7]. This can introduce errors during localization and mapping.

- Limited field of view: Frame-based cameras have an inherently limited field of view, which becomes a more serious constraint in environments with complex structures or large open spaces. In such cases, multiple cameras or additional sensor modalities may be needed to achieve full scene coverage, but this can greatly increase computational cost and system complexity.

- Challenge in handling dynamic environments: Frame-based cameras have difficulty in dynamic environments, especially those with moving objects or people. Tracking features consistently in the presence of moving entities is challenging, so other sensors such as depth sensors or inertial measurement units (IMUs) may need to be integrated, or additional strategies implemented, to mitigate the problem. In addition, when objects in the scene move rapidly, or when the camera itself is mounted on a fast-moving platform (e.g., a drone), motion blur can significantly degrade the quality of captured frames unless highly specialized cameras are used.

- High computational requirements: Although frame-based cameras are typically less computationally demanding than depth sensors such as LiDAR, feature extraction and matching processes can still necessitate considerable computational resources, particularly for real-time applications.
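To make the data-association risk in the feature-matching point concrete, the sketch below applies the nearest-neighbour distance-ratio test (Lowe's ratio test), a widely used heuristic for rejecting ambiguous matches. It is an illustration only, not the matching strategy of any particular system surveyed here. The function name `ratio_test_matches`, the 0.8 threshold, and the toy 2-D descriptors are assumptions chosen for clarity; real descriptors are higher-dimensional, and binary descriptors such as ORB are normally compared with Hamming rather than Euclidean distance.

```python
import numpy as np


def ratio_test_matches(desc_a: np.ndarray, desc_b: np.ndarray, ratio: float = 0.8):
    """Match each row of desc_a to desc_b, keeping only unambiguous matches.

    A match is accepted only if the nearest neighbour is clearly closer than the
    second-nearest one. Near-identical candidates (as produced by repetitive or
    textureless regions) give similar distances and are rejected.
    """
    if len(desc_b) < 2:
        raise ValueError("the ratio test needs at least two candidate descriptors")
    # Pairwise Euclidean distances via broadcasting, shape (len(desc_a), len(desc_b)).
    dists = np.linalg.norm(desc_a[:, None, :] - desc_b[None, :, :], axis=2)
    matches = []
    for i, row in enumerate(dists):
        order = np.argsort(row)
        best, second = row[order[0]], row[order[1]]
        if best < ratio * second:
            matches.append((i, int(order[0])))
    return matches


# Hypothetical data: the last two reference descriptors are almost identical.
query = np.array([[0.1, 0.0], [10.05, 0.0]])
reference = np.array([[0.0, 0.0], [10.0, 0.0], [10.1, 0.0]])
print(ratio_test_matches(query, reference))
```

Here the first query has one clearly closest candidate and is matched, while the second query lies almost equidistant from two similar reference descriptors and is discarded instead of being associated with a possibly wrong feature.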
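The motion-blur limitation described above can be quantified with a back-of-the-envelope estimate. Assuming a pinhole camera undergoing pure rotation (pan or tilt) at a constant rate, a point near the image centre moves by roughly f·ω·t_exp pixels during one exposure, where f is the focal length in pixels, ω the angular rate in rad/s, and t_exp the exposure time (small-angle approximation). The helper names and the example values (f = 500 px, ω = 3 rad/s, 10 ms exposure) are hypothetical and are not taken from the surveyed work.

```python
def rotational_blur_px(focal_px: float, omega_rad_s: float, exposure_s: float) -> float:
    """Approximate blur length (pixels) near the image centre for pure camera rotation.

    Small-angle pinhole approximation: the camera turns by omega * exposure radians
    during one exposure, and an angle theta maps to about focal_px * theta pixels.
    """
    return focal_px * omega_rad_s * exposure_s


def max_exposure_for_blur(focal_px: float, omega_rad_s: float, max_blur_px: float = 1.0) -> float:
    """Longest exposure (seconds) that keeps the rotational blur at or below max_blur_px."""
    return max_blur_px / (focal_px * omega_rad_s)


# Hypothetical example: 500 px focal length, 3 rad/s rotation, 10 ms exposure.
blur = rotational_blur_px(500.0, 3.0, 0.010)  # about 15 px
t_max = max_exposure_for_blur(500.0, 3.0)     # about 0.00067 s
print(f"blur = {blur:.1f} px, max exposure for 1 px blur = {t_max * 1e3:.2f} ms")
```

Under these assumed numbers the image smears across about 15 pixels, and keeping blur below one pixel would require an exposure of roughly 0.67 ms. Such a short exposure collects far less light and therefore raises image noise, particularly in dim scenes, which is one reason fast platforms often need specialized cameras.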

3. Event Camera-Based SLAM

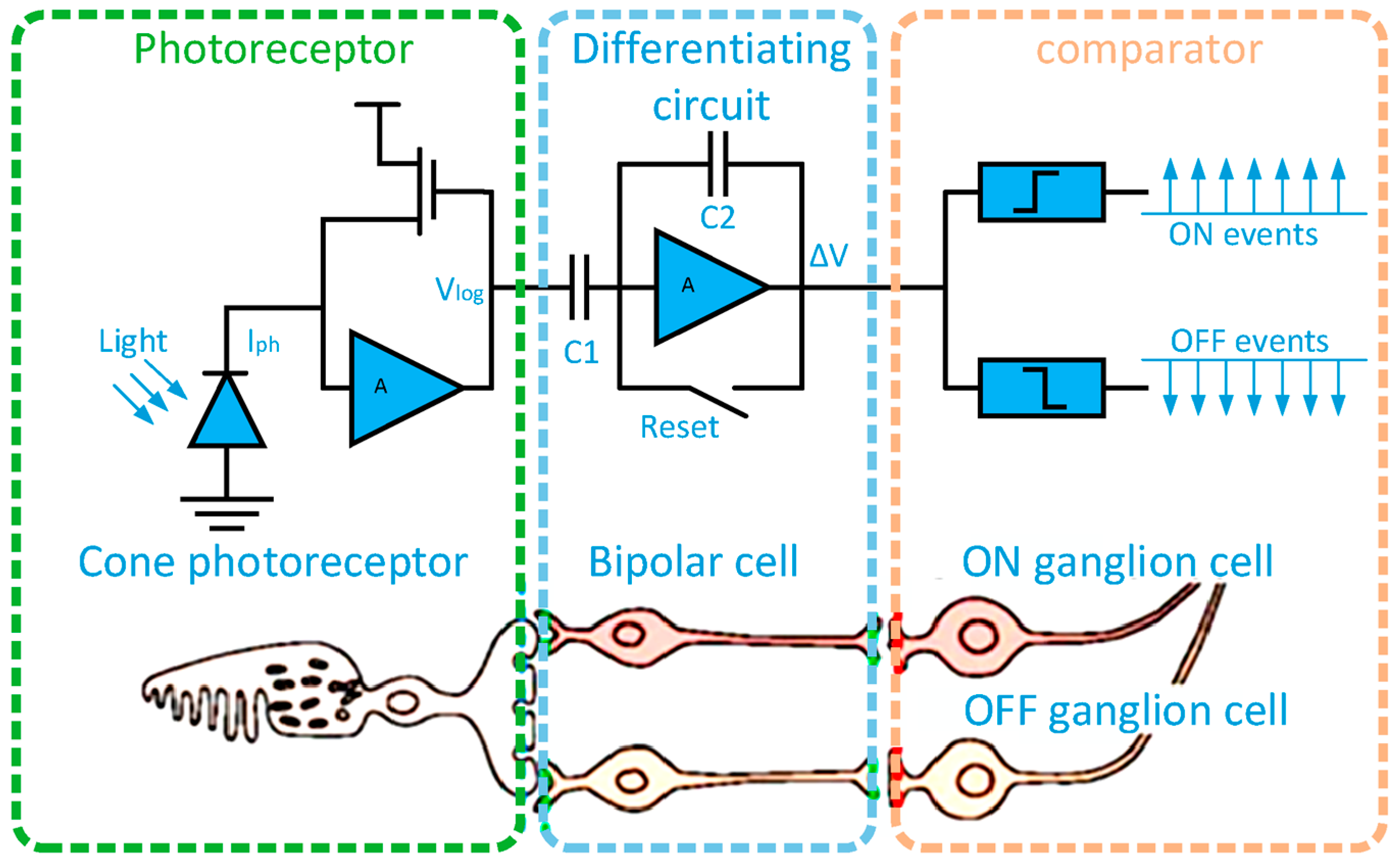

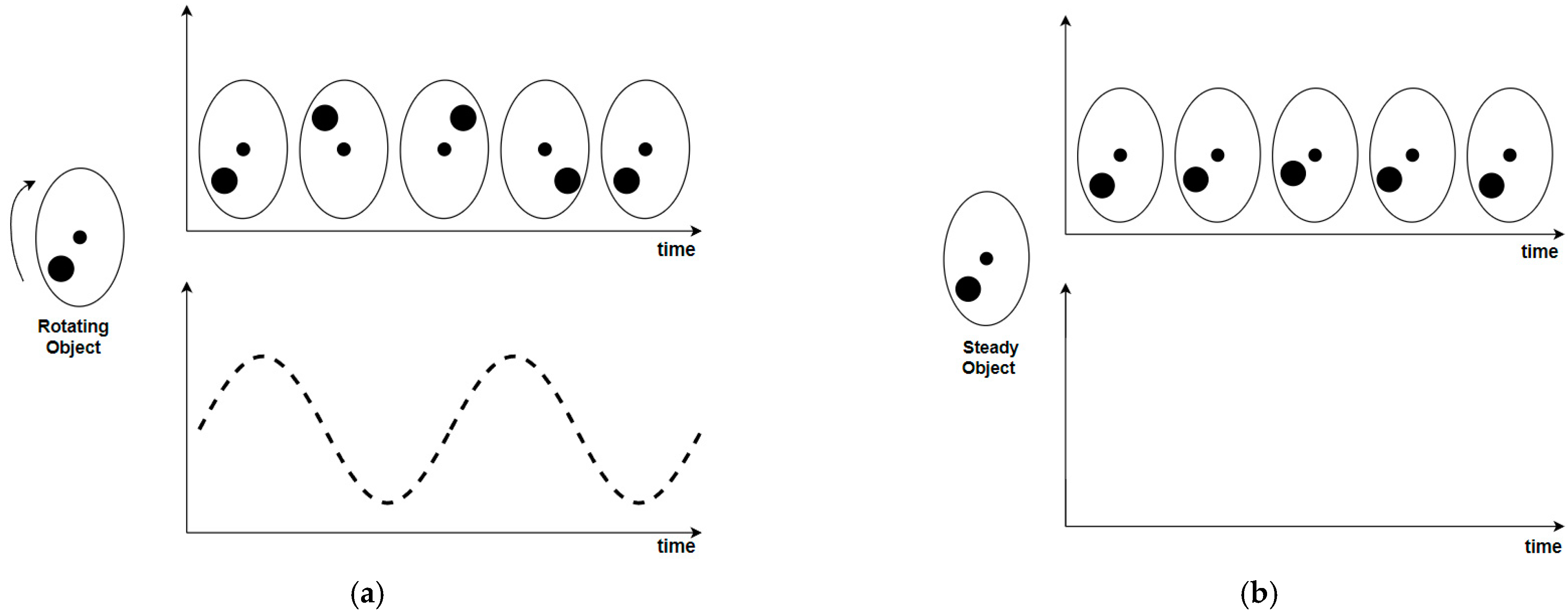

3.1. Event Camera Operating Principles

3.1.1. Event Generation Model

3.1.2. Event Representation

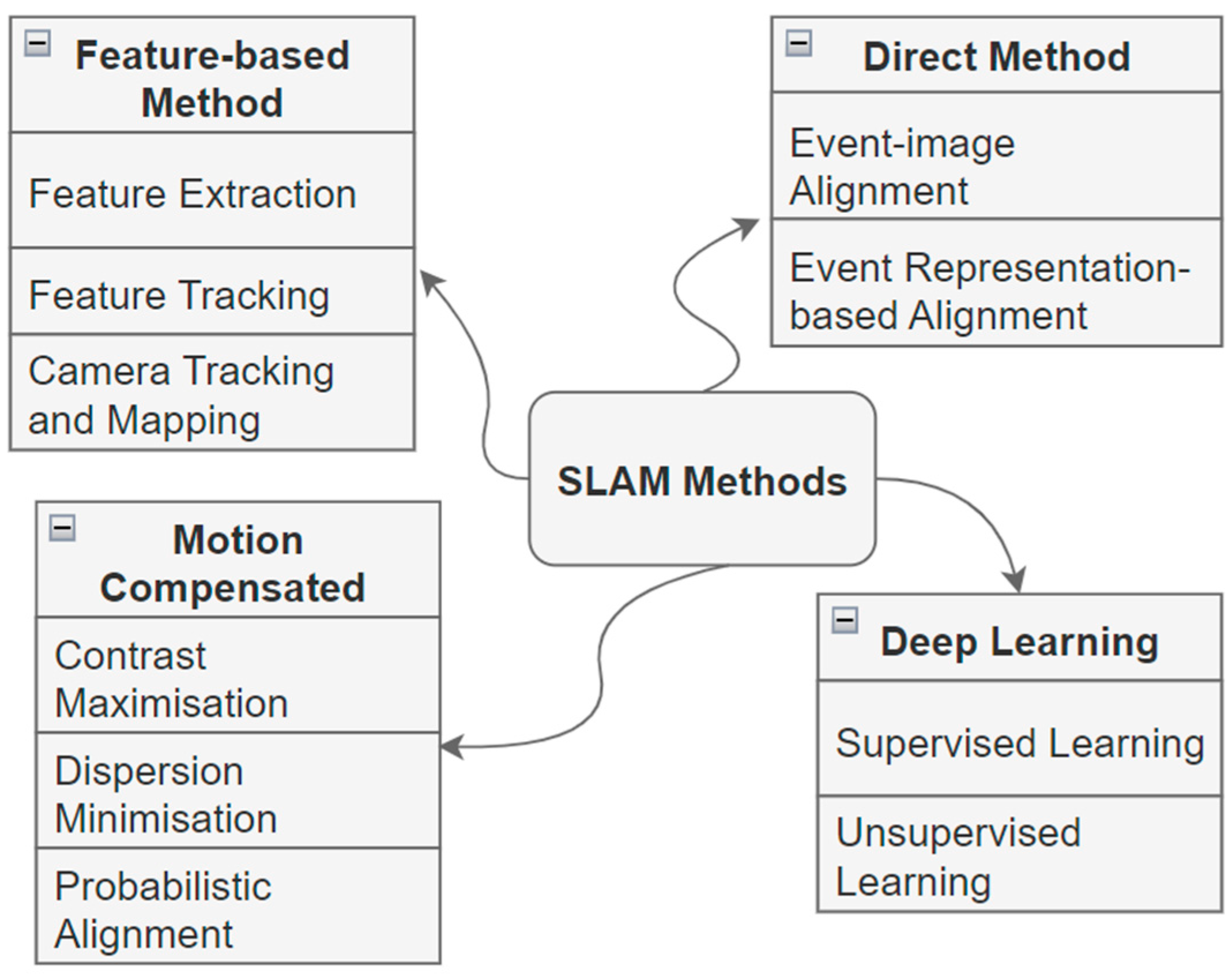

3.2. Methods

3.2.1. Feature-Based Methods

Feature Extraction

Feature Tracking

Camera Tracking and Mapping

3.2.2. Direct Methods

3.2.3. Motion Compensation Methods

3.2.4. Deep Learning Methods

3.3. Performance Evaluation of VSLAM Systems

3.3.1. Event Camera Datasets

3.3.2. Event-Based SLAM Metrics

3.3.3. Performance Comparison of SLAM Methods

Depth Estimation

Camera Pose Estimation

3.4. Applications of Event Camera-Based SLAM Systems

3.4.1. Robotics

3.4.2. Autonomous Vehicles

3.4.3. Virtual Reality (VR) and Augmented Reality (AR)

4. Application of Neuromorphic Computing to SLAM

4.1. Neuromorphic Computing Principles

4.2. Neuromorphic Hardware

4.2.1. SpiNNaker

4.2.2. TrueNorth

4.2.3. Loihi

4.2.4. BrainScaleS

4.2.5. Dynamic Neuromorphic Asynchronous Processors

4.2.6. Akida

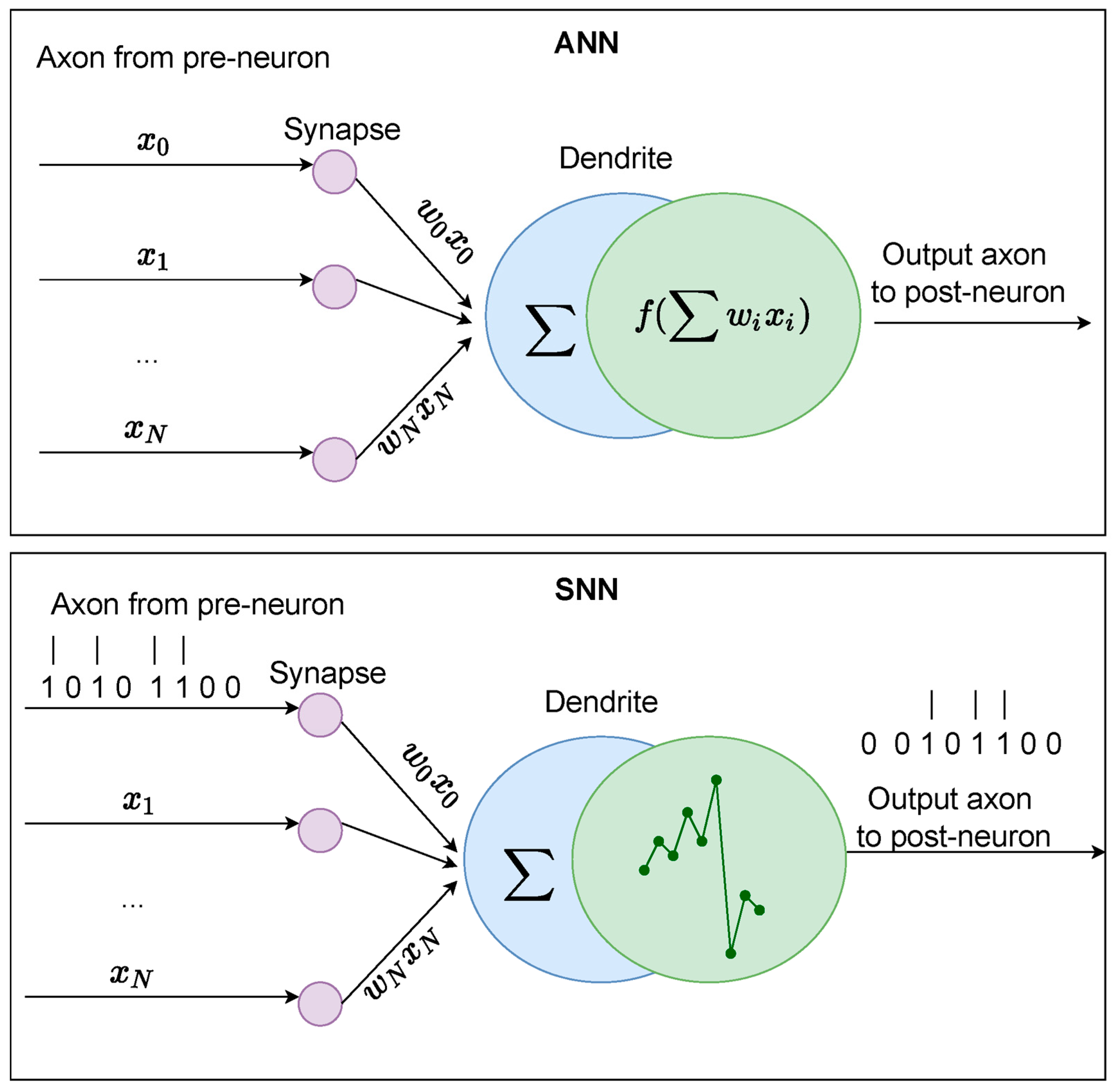

4.3. Spiking Neural Networks

4.4. Neuromorphic Computing in SLAM

- Efficiency: Neuromorphic hardware is designed to mimic the brain’s massively parallel processing, enabling efficient computation at low power. This efficiency is particularly beneficial in real-time SLAM applications, where rapid, low-power processing of sensor data is crucial.

- Adaptability: Neuromorphic systems can adapt to and learn from their environment, making them well suited to SLAM tasks in dynamic or changing environments. They can continuously update their internal models based on new sensory information, which can improve accuracy and robustness over time.

- Event-Based Processing: Event cameras capture data asynchronously in response to changes in the scene. Processing these events as they arrive lets SLAM systems focus computational resources on relevant information, which can make processing faster and more efficient than in traditional frame-based approaches.

- Sparse Representation: Neuromorphic algorithms can generate sparse representations of the environment, reducing memory and computational requirements. This is advantageous in resource-constrained SLAM applications, such as those deployed on embedded or mobile devices.
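The event-driven processing and sparsity arguments above can be illustrated with a leaky integrate-and-fire (LIF) neuron whose state is updated only when an input spike (for example, an event from an event camera) arrives. Between inputs, the membrane potential decays in closed form, so no computation is spent on idle periods. This is a minimal software sketch under assumed parameters (a 20 ms time constant and a unit threshold, both arbitrary); the names `EventDrivenLIF` and `run` are hypothetical, and the sketch does not model any specific neuromorphic chip, since the hardware platforms in Section 4.2 implement neuron dynamics in their own ways (often in discrete time steps).

```python
import math


class EventDrivenLIF:
    """Leaky integrate-and-fire neuron that is updated only when an input spike arrives."""

    def __init__(self, tau_s: float = 0.02, threshold: float = 1.0):
        self.tau_s = tau_s          # membrane time constant in seconds (assumed value)
        self.threshold = threshold  # firing threshold (assumed value)
        self.v = 0.0                # membrane potential
        self.t_last = 0.0           # time of the previous input event

    def on_event(self, t_s: float, weight: float) -> bool:
        """Process one weighted input spike at time t_s; return True if the neuron fires."""
        # Decay the potential in closed form over the silent interval since the last event.
        self.v *= math.exp(-(t_s - self.t_last) / self.tau_s)
        self.t_last = t_s
        # Integrate the new input.
        self.v += weight
        if self.v >= self.threshold:
            self.v = 0.0  # reset after an output spike
            return True
        return False


def run(events, neuron):
    """Feed time-ordered (timestamp, weight) events to the neuron; return output spike times."""
    return [t for t, w in events if neuron.on_event(t, w)]


# Hypothetical input: a burst of three events, then sparse, widely spaced events.
events = [(0.000, 0.4), (0.001, 0.4), (0.002, 0.4), (0.100, 0.4), (0.200, 0.6), (0.300, 0.6)]
print(run(events, EventDrivenLIF()))
```

With these assumed parameters, the three closely spaced inputs push the neuron over threshold, whereas the later, widely spaced inputs leak away before they can accumulate, so a single output spike is produced at t = 0.002 s. The amount of work grows with the number of input events rather than with elapsed time, which is the property that makes event-driven processing attractive for sparse sensor data.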

5. Conclusions

5.1. Summary of Key Findings

5.2. Limitations of the Study

5.3. Current State-of-the-Art and Future Scope

5.4. Neuromorphic SLAM Challenges

Author Contributions

Funding

Conflicts of Interest

References

- Wang, X.; Zheng, S.; Lin, X.; Zhu, F. Improving RGB-D SLAM accuracy in dynamic environments based on semantic and geometric constraints. Meas. J. Int. Meas. Confed. 2023, 217, 113084.

- Khan, M.S.A.; Hussain, D.; Naveed, K.; Khan, U.S.; Mundial, I.Q.; Aqeel, A.B. Investigation of Widely Used SLAM Sensors Using Analytical Hierarchy Process. J. Sens. 2022, 2022, 5428097.

- Gelen, A.G.; Atasoy, A. An Artificial Neural SLAM Framework for Event-Based Vision. IEEE Access 2023, 11, 58436–58450.

- Taheri, H.; Xia, Z.C. SLAM definition and evolution. Eng. Appl. Artif. Intell. 2021, 97, 104032.

- Shen, D.; Liu, G.; Li, T.; Yu, F.; Gu, F.; Xiao, K.; Zhu, X. ORB-NeuroSLAM: A Brain-Inspired 3-D SLAM System Based on ORB Features. IEEE Internet Things J. 2024, 11, 12408–12418.

- Xia, Y.; Cheng, J.; Cai, X.; Zhang, S.; Zhu, J.; Zhu, L. SLAM Back-End Optimization Algorithm Based on Vision Fusion IPS. Sensors 2022, 22, 9362.

- Theodorou, C.; Velisavljevic, V.; Dyo, V.; Nonyelu, F. Visual SLAM algorithms and their application for AR, mapping, localization and wayfinding. Array 2022, 15, 100222.

- Liu, L.; Aitken, J.M. HFNet-SLAM: An Accurate and Real-Time Monocular SLAM System with Deep Features. Sensors 2023, 23, 2113.

- Gao, X.; Zhang, T. Introduction to Visual SLAM: From Theory to Practice; Springer Nature: Singapore, 2021.

- Chen, W.; Shang, G.; Ji, A.; Zhou, C.; Wang, X.; Xu, C.; Li, Z.; Hu, K. An Overview on Visual SLAM: From Tradition to Semantic. Remote Sens. 2022, 14, 3010.

- Huang, T.; Zheng, Y.; Yu, Z.; Chen, R.; Li, Y.; Xiong, R.; Ma, L.; Zhao, J.; Dong, S.; Zhu, L.; et al. 1000× Faster Camera and Machine Vision with Ordinary Devices. Engineering 2023, 25, 110–119.

- Aitsam, M.; Davies, S.; Di Nuovo, A. Neuromorphic Computing for Interactive Robotics: A Systematic Review. IEEE Access 2022, 10, 122261–122279.

- Cuadrado, J.; Rançon, U.; Cottereau, B.R.; Barranco, F.; Masquelier, T. Optical flow estimation from event-based cameras and spiking neural networks. Front. Neurosci. 2023, 17, 1160034.

- Fischer, T.; Milford, M. Event-based visual place recognition with ensembles of temporal windows. IEEE Robot. Autom. Lett. 2020, 5, 6924–6931.

- Furmonas, J.; Liobe, J.; Barzdenas, V. Analytical Review of Event-Based Camera Depth Estimation Methods and Systems. Sensors 2022, 22, 1201.

- Zhou, Y.; Gallego, G.; Shen, S. Event-Based Stereo Visual Odometry. IEEE Trans. Robot. 2021, 37, 1433–1450.

- Wang, Y.; Shao, B.; Zhang, C.; Zhao, J.; Cai, Z. REVIO: Range- and Event-Based Visual-Inertial Odometry for Bio-Inspired Sensors. Biomimetics 2022, 7, 169.

- Gallego, G.; Delbrück, T.; Orchard, G.; Bartolozzi, C.; Taba, B.; Censi, A.; Leutenegger, S.; Davison, A.J.; Conradt, J.; Daniilidis, K.; et al. Event-Based Vision: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 154–180.

- Ghosh, S.; Gallego, G. Multi-Event-Camera Depth Estimation and Outlier Rejection by Refocused Events Fusion. Adv. Intell. Syst. 2022, 4, 2200221.

- Liu, Z.; Shi, D.; Li, R.; Zhang, Y.; Yang, S. T-ESVO: Improved Event-Based Stereo Visual Odometry via Adaptive Time-Surface and Truncated Signed Distance Function. Adv. Intell. Syst. 2023, 5, 2300027.

- Chen, G.; Cao, H.; Conradt, J.; Tang, H.; Rohrbein, F.; Knoll, A. Event-Based Neuromorphic Vision for Autonomous Driving: A Paradigm Shift for Bio-Inspired Visual Sensing and Perception. IEEE Signal Process. Mag. 2020, 37, 34–49.

- Chen, P.; Guan, W.; Lu, P. ESVIO: Event-Based Stereo Visual Inertial Odometry. IEEE Robot. Autom. Lett. 2023, 8, 3661–3668.

- Fischer, T.; Milford, M. How Many Events Do You Need? Event-Based Visual Place Recognition Using Sparse But Varying Pixels. IEEE Robot. Autom. Lett. 2022, 7, 12275–12282.

- Favorskaya, M.N. Deep Learning for Visual SLAM: The State-of-the-Art and Future Trends. Electronics 2023, 12, 2006.

- Amir, A.; Taba, B.; Berg, D.; Melano, T.; McKinstry, J.; Di Nolfo, C.; Nayak, T.; Andreopoulos, A.; Garreau, G.; Mendoza, M.; et al. A Low Power, Fully Event-Based Gesture Recognition System. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Guo, G.; Feng, Y.; Lv, H.; Zhao, Y.; Liu, H.; Bi, G. Event-Guided Image Super-Resolution Reconstruction. Sensors 2023, 23, 2155.

- Yu, F.; Shang, J.; Hu, Y.; Milford, M. NeuroSLAM: A brain-inspired SLAM system for 3D environments. Biol. Cybern. 2019, 113, 515–545.

- Yang, Y.; Bartolozzi, C.; Zhang, H.H.; Nawrocki, R.A. Neuromorphic electronics for robotic perception, navigation and control: A survey. Eng. Appl. Artif. Intell. 2023, 126, 106838.

- Schuman, C.D.; Kulkarni, S.R.; Parsa, M.; Mitchell, J.P.; Date, P.; Kay, B. Opportunities for neuromorphic computing algorithms and applications. Nat. Comput. Sci. 2022, 2, 10–19.

- Renner, A.; Supic, L.; Danielescu, A.; Indiveri, G.; Frady, E.P.; Sommer, F.T.; Sandamirskaya, Y. Neuromorphic Visual Odometry with Resonator Networks. arXiv 2022, arXiv:2209.02000.

- Davies, M.; Wild, A.; Orchard, G.; Sandamirskaya, Y.; Guerra, G.A.F.; Joshi, P.; Plank, P.; Risbud, S.R. Advancing Neuromorphic Computing With Loihi: A Survey of Results and Outlook. Proc. IEEE 2021, 109, 911–934.

- Nunes, J.D.; Carvalho, M.; Carneiro, D.; Cardoso, J.S. Spiking Neural Networks: A Survey. IEEE Access 2022, 10, 60738–60764.

- Hewawasam, H.S.; Ibrahim, M.Y.; Appuhamillage, G.K. Past, Present and Future of Path-Planning Algorithms for Mobile Robot Navigation in Dynamic Environments. IEEE Open J. Ind. Electron. Soc. 2022, 3, 353–365.

- Schuman, C.D.; Potok, T.E.; Patton, R.M.; Birdwell, J.D.; Dean, M.E.; Rose, G.S.; Plank, J.S. A Survey of Neuromorphic Computing and Neural Networks in Hardware. arXiv 2017, arXiv:1705.06963.

- Pham, M.D.; D’Angiulli, A.; Dehnavi, M.M.; Chhabra, R. From Brain Models to Robotic Embodied Cognition: How Does Biological Plausibility Inform Neuromorphic Systems? Brain Sci. 2023, 13, 1316.

- Saleem, H.; Malekian, R.; Munir, H. Neural Network-Based Recent Research Developments in SLAM for Autonomous Ground Vehicles: A Review. IEEE Sens. J. 2023, 23, 13829–13858.

- Barros, A.M.; Michel, M.; Moline, Y.; Corre, G.; Carrel, F. A Comprehensive Survey of Visual SLAM Algorithms. Robotics 2022, 11, 24.

- Zhang, Y.; Wu, Y.; Tong, K.; Chen, H.; Yuan, Y. Review of Visual Simultaneous Localization and Mapping Based on Deep Learning. Remote Sens. 2023, 15, 2740.

- Tourani, A.; Bavle, H.; Sanchez-Lopez, J.L.; Voos, H. Visual SLAM: What Are the Current Trends and What to Expect? Sensors 2022, 22, 9297.

- Bavle, H.; Sanchez-Lopez, J.L.; Cimarelli, C.; Tourani, A.; Voos, H. From SLAM to Situational Awareness: Challenges and Survey. Sensors 2023, 23, 4849.

- Dumont, N.S.-Y.; Furlong, P.M.; Orchard, J.; Eliasmith, C. Exploiting semantic information in a spiking neural SLAM system. Front. Neurosci. 2023, 17, 1190515.

- Davison, A.J.; Reid, I.D.; Molton, N.D.; Stasse, O. MonoSLAM: Real-Time Single Camera SLAM. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 1052–1067.

- Klein, G.; Murray, D. Parallel Tracking and Mapping for Small AR Workspaces. In Proceedings of the 2007 6th IEEE and ACM International Symposium on Mixed and Augmented Reality, Nara, Japan, 13–16 November 2007.

- Newcombe, R.A.; Lovegrove, S.J.; Davison, A.J. DTAM: Dense tracking and mapping in real-time. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011.

- Salas-Moreno, R.F.; Newcombe, R.A.; Strasdat, H.; Kelly, P.H.; Davison, A.J. SLAM++: Simultaneous Localisation and Mapping at the Level of Objects. In Proceedings of the 2013 IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013.

- Ai, Y.; Rui, T.; Lu, M.; Fu, L.; Liu, S.; Wang, S. DDL-SLAM: A Robust RGB-D SLAM in Dynamic Environments Combined With Deep Learning. IEEE Access 2020, 8, 162335–162342.

- Li, B.; Zou, D.; Huang, Y.; Niu, X.; Pei, L.; Yu, W. TextSLAM: Visual SLAM With Semantic Planar Text Features. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 593–610.

- Liao, Z.; Hu, Y.; Zhang, J.; Qi, X.; Zhang, X.; Wang, W. SO-SLAM: Semantic Object SLAM With Scale Proportional and Symmetrical Texture Constraints. IEEE Robot. Autom. Lett. 2022, 7, 4008–4015.

- Yang, C.; Chen, Q.; Yang, Y.; Zhang, J.; Wu, M.; Mei, K. SDF-SLAM: A Deep Learning Based Highly Accurate SLAM Using Monocular Camera Aiming at Indoor Map Reconstruction With Semantic and Depth Fusion. IEEE Access 2022, 10, 10259–10272.

- Lim, H.; Jeon, J.; Myung, H. UV-SLAM: Unconstrained Line-Based SLAM Using Vanishing Points for Structural Mapping. IEEE Robot. Autom. Lett. 2022, 7, 1518–1525.

- Ran, T.; Yuan, L.; Zhang, J.; Tang, D.; He, L. RS-SLAM: A Robust Semantic SLAM in Dynamic Environments Based on RGB-D Sensor. IEEE Sens. J. 2021, 21, 20657–20664.

- Liu, Y.; Miura, J. RDMO-SLAM: Real-Time Visual SLAM for Dynamic Environments Using Semantic Label Prediction with Optical Flow. IEEE Access 2021, 9, 106981–106997.

- Liu, Y.; Miura, J. RDS-SLAM: Real-Time Dynamic SLAM Using Semantic Segmentation Methods. IEEE Access 2021, 9, 23772–23785.

- Campos, C.; Elvira, R.; Rodriguez, J.J.G.; Montiel, J.M.M.; Tardos, J.D. ORB-SLAM3: An Accurate Open-Source Library for Visual, Visual-Inertial, and Multimap SLAM. IEEE Trans. Robot. 2021, 37, 1874–1890.

- Li, Y.; Brasch, N.; Wang, Y.; Navab, N.; Tombari, F. Structure-SLAM: Low-Drift Monocular SLAM in Indoor Environments. IEEE Robot. Autom. Lett. 2020, 5, 6583–6590.

- Bavle, H.; De La Puente, P.; How, J.P.; Campoy, P. VPS-SLAM: Visual Planar Semantic SLAM for Aerial Robotic Systems. IEEE Access 2020, 8, 60704–60718.

- Gomez-Ojeda, R.; Moreno, F.; Scaramuzza, D.; Gonzalez-Jimenez, J. PL-SLAM: A Stereo SLAM System Through the Combination of Points and Line Segments. IEEE Trans. Robot. 2019, 35, 734–746.

- Mur-Artal, R.; Tardós, J.D. ORB-SLAM2: An Open-Source SLAM System for Monocular, Stereo, and RGB-D Cameras. IEEE Trans. Robot. 2017, 33, 1255–1262.

- Mur-Artal, R.; Montiel, J.M.M.; Tardos, J.D. ORB-SLAM: A Versatile and Accurate Monocular SLAM System. IEEE Trans. Robot. 2015, 31, 1147–1163.

- Engel, J.; Schöps, T.; Cremers, D. LSD-SLAM: Large-Scale Direct Monocular SLAM. In Proceedings of the Computer Vision–ECCV 2014, Zurich, Switzerland, 6–12 September 2014; Springer International Publishing: Cham, Switzerland, 2014.

- Huang, K.; Zhang, S.; Zhang, J.; Tao, D. Event-based Simultaneous Localization and Mapping: A Comprehensive Survey. arXiv 2023, arXiv:2304.09793.

- Derhy, S.; Zamir, L.; Nathan, H.L. Simultaneous Location and Mapping (SLAM) Using Dual Event Cameras. U.S. Patent 10,948,297, 16 March 2021.

- Boahen, K.A. A burst-mode word-serial address-event link-I: Transmitter design. IEEE Trans. Circuits Syst. I Regul. Pap. 2004, 51, 1269–1280.

- Kim, H.; Leutenegger, S.; Davison, A.J. Real-Time 3D Reconstruction and 6-DoF Tracking with an Event Camera. In Proceedings of the Computer Vision–ECCV 2016, Amsterdam, The Netherlands, 11–14 October 2016; Springer International Publishing: Cham, Switzerland, 2016.

- Gehrig, M.; Shrestha, S.B.; Mouritzen, D.; Scaramuzza, D. Event-Based Angular Velocity Regression with Spiking Networks. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020.

- Censi, A.; Scaramuzza, D. Low-latency event-based visual odometry. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014.

- Alzugaray, I.; Chli, M. Asynchronous Multi-Hypothesis Tracking of Features with Event Cameras. In Proceedings of the 2019 International Conference on 3D Vision (3DV), Quebec City, QC, Canada, 16–19 September 2019.

- Kueng, B.; Mueggler, E.; Gallego, G.; Scaramuzza, D. Low-latency visual odometry using event-based feature tracks. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Republic of Korea, 9–14 October 2016.

- Ye, C.; Mitrokhin, A.; Fermüller, C.; Yorke, J.A.; Aloimonos, Y. Unsupervised Learning of Dense Optical Flow, Depth and Egomotion with Event-Based Sensors. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 25–29 October 2020.

- Chaney, K.; Zhu, A.Z.; Daniilidis, K. Learning Event-Based Height From Plane and Parallax. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Long Beach, CA, USA, 15–20 June 2019.

- Nguyen, A.; Do, T.T.; Caldwell, D.G.; Tsagarakis, N.G. Real-Time 6DOF Pose Relocalization for Event Cameras With Stacked Spatial LSTM Networks. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Long Beach, CA, USA, 15–20 June 2019.

- Hidalgo-Carrió, J.; Gehrig, D.; Scaramuzza, D. Learning Monocular Dense Depth from Events. In Proceedings of the 2020 International Conference on 3D Vision (3DV), Fukuoka, Japan, 25–28 November 2020.

- Gehrig, D.; Ruegg, M.; Gehrig, M.; Hidalgo-Carrio, J.; Scaramuzza, D. Combining Events and Frames Using Recurrent Asynchronous Multimodal Networks for Monocular Depth Prediction. IEEE Robot. Autom. Lett. 2021, 6, 2822–2829.

- Zhu, A.Z.; Thakur, D.; Ozaslan, T.; Pfrommer, B.; Kumar, V.; Daniilidis, K. The Multivehicle Stereo Event Camera Dataset: An Event Camera Dataset for 3D Perception. IEEE Robot. Autom. Lett. 2018, 3, 2032–2039.

- Li, Z.; Snavely, N. MegaDepth: Learning Single-View Depth Prediction from Internet Photos. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018.

- Clady, X.; Ieng, S.-H.; Benosman, R. Asynchronous event-based corner detection and matching. Neural Netw. 2015, 66, 91–106.

- Clady, X.; Maro, J.-M.; Barré, S.; Benosman, R.B. A motion-based feature for event-based pattern recognition. Front. Neurosci. 2017, 10, 594.

- Vasco, V.; Glover, A.; Bartolozzi, C. Fast event-based Harris corner detection exploiting the advantages of event-driven cameras. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Republic of Korea, 9–14 October 2016.

- Scheerlinck, C.; Barnes, N.; Mahony, R. Asynchronous Spatial Image Convolutions for Event Cameras. IEEE Robot. Autom. Lett. 2019, 4, 816–822.

- Mueggler, E.; Bartolozzi, C.; Scaramuzza, D. Fast Event-based Corner Detection. In Proceedings of the British Machine Vision Conference, London, UK, 4–7 September 2017.

- Zhu, A.Z.; Atanasov, N.; Daniilidis, K. Event-based feature tracking with probabilistic data association. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017.

- Zhu, A.Z.; Atanasov, N.; Daniilidis, K. Event-Based Visual Inertial Odometry. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Vidal, A.R.; Rebecq, H.; Horstschaefer, T.; Scaramuzza, D. Ultimate SLAM? Combining Events, Images, and IMU for Robust Visual SLAM in HDR and High-Speed Scenarios. IEEE Robot. Autom. Lett. 2018, 3, 994–1001.

- Manderscheid, J.; Sironi, A.; Bourdis, N.; Migliore, D.; Lepetit, V. Speed Invariant Time Surface for Learning to Detect Corner Points With Event-Based Cameras. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

- Chiberre, P.; Perot, E.; Sironi, A.; Lepetit, V. Detecting Stable Keypoints from Events through Image Gradient Prediction. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Nashville, TN, USA, 19–25 June 2021.

- Von Gioi, R.G.; Jakubowicz, J.; Morel, J.M.; Randall, G. LSD: A Fast Line Segment Detector with a False Detection Control. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 722–732.

- Everding, L.; Conradt, J. Low-latency line tracking using event-based dynamic vision sensors. Front. Neurorobot. 2018, 12, 4.

- Yuan, W.; Ramalingam, S. Fast localization and tracking using event sensors. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016.

- Bertrand, J.; Yiğit, A.; Durand, S. Embedded Event-based Visual Odometry. In Proceedings of the 2020 6th International Conference on Event-Based Control, Communication, and Signal Processing (EBCCSP), Krakow, Poland, 23–25 September 2020.

- Chamorro, W.; Solà, J.; Andrade-Cetto, J. Event-Based Line SLAM in Real-Time. IEEE Robot. Autom. Lett. 2022, 7, 8146–8153.

- Guan, W.; Chen, P.; Xie, Y.; Lu, P. PL-EVIO: Robust Monocular Event-Based Visual Inertial Odometry With Point and Line Features. IEEE Trans. Autom. Sci. Eng. 2023, 1–17.

- Brändli, C.; Strubel, J.; Keller, S.; Scaramuzza, D.; Delbruck, T. EliSeD—An event-based line segment detector. In Proceedings of the 2016 Second International Conference on Event-Based Control, Communication, and Signal Processing (EBCCSP), Krakow, Poland, 13–15 June 2016.

- Valeiras, D.R.; Clady, X.; Ieng, S.-H.O.; Benosman, R. Event-Based Line Fitting and Segment Detection Using a Neuromorphic Visual Sensor. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 1218–1230.

- Besl, P.J.; McKay, N.D. A method for registration of 3-D shapes. IEEE Trans. Pattern Anal. Mach. Intell. 1992, 14, 239–256.

- Gehrig, D.; Rebecq, H.; Gallego, G.; Scaramuzza, D. EKLT: Asynchronous Photometric Feature Tracking Using Events and Frames. Int. J. Comput. Vis. 2020, 128, 601–618.

- Seok, H.; Lim, J. Robust Feature Tracking in DVS Event Stream using Bézier Mapping. In Proceedings of the 2020 IEEE Winter Conference on Applications of Computer Vision (WACV), Snowmass Village, CO, USA, 1–5 March 2020.

- Chui, J.; Klenk, S.; Cremers, D. Event-Based Feature Tracking in Continuous Time with Sliding Window Optimization. arXiv 2021, arXiv:2107.04536.

- Alzugaray, I.; Chli, M. HASTE: Multi-Hypothesis Asynchronous Speeded-up Tracking of Events. In Proceedings of the 31st British Machine Vision Virtual Conference (BMVC 2020), Virtual, 7–10 September 2020; ETH Zurich, Institute of Robotics and Intelligent Systems: Zurich, Switzerland, 2020.

- Hadviger, A.; Cvišić, I.; Marković, I.; Vražić, S.; Petrović, I. Feature-based Event Stereo Visual Odometry. In Proceedings of the 2021 European Conference on Mobile Robots (ECMR), Bonn, Germany, 31 August–3 September 2021.

- Hu, S.; Kim, Y.; Lim, H.; Lee, A.J.; Myung, H. eCDT: Event Clustering for Simultaneous Feature Detection and Tracking. In Proceedings of the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, 23–27 October 2022.

- Messikommer, N.; Fang, C.; Gehrig, M.; Scaramuzza, D. Data-Driven Feature Tracking for Event Cameras. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 18–22 June 2023.

- Hidalgo-Carrió, J.; Gallego, G.; Scaramuzza, D. Event-aided Direct Sparse Odometry. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022.

- Rebecq, H.; Horstschaefer, T.; Gallego, G.; Scaramuzza, D. EVO: A Geometric Approach to Event-Based 6-DOF Parallel Tracking and Mapping in Real Time. IEEE Robot. Autom. Lett. 2017, 2, 593–600.

- Gallego, G.; Lund, J.E.; Mueggler, E.; Rebecq, H.; Delbruck, T.; Scaramuzza, D. Event-Based, 6-DOF Camera Tracking from Photometric Depth Maps. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 2402–2412.

- Kim, H.; Handa, A.; Benosman, R.; Ieng, S.-H.; Davison, A. Simultaneous Mosaicing and Tracking with an Event Camera. In Proceedings of the British Machine Vision Conference, Nottingham, UK, 1–5 September 2014.

- Gallego, G.; Forster, C.; Mueggler, E.; Scaramuzza, D. Event-based Camera Pose Tracking using a Generative Event Model. arXiv 2015, arXiv:1510.01972.

- Bryner, S.; Gallego, G.; Rebecq, H.; Scaramuzza, D. Event-based, Direct Camera Tracking from a Photometric 3D Map using Nonlinear Optimization. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019.

- Rebecq, H.; Gallego, G.; Mueggler, E.; Scaramuzza, D. EMVS: Event-Based Multi-View Stereo—3D Reconstruction with an Event Camera in Real-Time. Int. J. Comput. Vis. 2018, 126, 1394–1414.

- Zuo, Y.-F.; Yang, J.; Chen, J.; Wang, X.; Wang, Y.; Kneip, L. DEVO: Depth-Event Camera Visual Odometry in Challenging Conditions. In Proceedings of the 2022 International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–27 May 2022; IEEE Press: Philadelphia, PA, USA, 2022; pp. 2179–2185.

- Gallego, G.; Rebecq, H.; Scaramuzza, D. A Unifying Contrast Maximization Framework for Event Cameras, with Applications to Motion, Depth, and Optical Flow Estimation. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018.

- Gu, C.; Learned-Miller, E.; Sheldon, D.; Gallego, G.; Bideau, P. The Spatio-Temporal Poisson Point Process: A Simple Model for the Alignment of Event Camera Data. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021.

- Zhou, T.; Brown, M.; Snavely, N.; Lowe, D.G. Unsupervised Learning of Depth and Ego-Motion from Video. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Wang, S.; Clark, R.; Wen, H.; Trigoni, N. DeepVO: Towards end-to-end visual odometry with deep Recurrent Convolutional Neural Networks. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017.

- Yang, N.; Stumberg, L.V.; Wang, R.; Cremers, D. D3VO: Deep Depth, Deep Pose and Deep Uncertainty for Monocular Visual Odometry. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020.

- Zhang, S.; Zhang, J.; Tao, D. Towards Scale Consistent Monocular Visual Odometry by Learning from the Virtual World. In Proceedings of the 2022 International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–27 May 2022.

- Zhao, H.; Zhang, J.; Zhang, S.; Tao, D. JPerceiver: Joint Perception Network for Depth, Pose and Layout Estimation in Driving Scenes. In Proceedings of the Computer Vision–ECCV 2022, Tel Aviv, Israel, 23–27 October 2022; Springer Nature Switzerland: Cham, Switzerland, 2022.

- Zhu, A.Z.; Yuan, L.; Chaney, K.; Daniilidis, K. Unsupervised Event-Based Learning of Optical Flow, Depth, and Egomotion. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

- He, W.; Wu, Y.; Deng, L.; Li, G.; Wang, H.; Tian, Y.; Ding, W.; Wang, W.; Xie, Y. Comparing SNNs and RNNs on neuromorphic vision datasets: Similarities and differences. Neural Netw. 2020, 132, 108–120.

- Deng, L.; Wu, Y.; Hu, X.; Liang, L.; Ding, Y.; Li, G.; Zhao, G.; Li, P.; Xie, Y. Rethinking the performance comparison between SNNs and ANNs. Neural Netw. 2020, 121, 294–307. [Google Scholar] [CrossRef] [PubMed]

- Iyer, L.R.; Chua, Y.; Li, H. Is Neuromorphic MNIST neuromorphic? Analyzing the discriminative power of neuromorphic datasets in the time domain. Front. Neurosci. 2021, 15, 608567. [Google Scholar] [CrossRef]

- Kim, Y.; Panda, P. Optimizing Deeper Spiking Neural Networks for Dynamic Vision Sensing. Neural Netw. 2021, 144, 686–698. [Google Scholar] [CrossRef] [PubMed]

- Yao, M.; Zhang, H.; Zhao, G.; Zhang, X.; Wang, D.; Cao, G.; Li, G. Sparser spiking activity can be better: Feature Refine-and-Mask spiking neural network for event-based visual recognition. Neural Netw. 2023, 166, 410–423. [Google Scholar] [CrossRef]

- Mueggler, E.; Rebecq, H.; Gallego, G.; Delbruck, T.; Scaramuzza, D. The event-camera dataset and simulator: Event-based data for pose estimation, visual odometry, and SLAM. Int. J. Robot. Res. 2017, 36, 142–149. [Google Scholar] [CrossRef]

- Zhou, Y.; Gallego, G.; Rebecq, H.; Kneip, L.; Li, H.; Scaramuzza, D. Semi-dense 3D Reconstruction with a Stereo Event Camera. In Proceedings of the Computer Vision–ECCV 2018, Munich, Germany, 8–14 September 2018; Springer International Publishing: Cham, Switzerland, 2018. [Google Scholar]

- Delmerico, J.; Cieslewski, T.; Rebecq, H.; Faessler, M.; Scaramuzza, D. Are We Ready for Autonomous Drone Racing? The UZH-FPV Drone Racing Dataset. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019. [Google Scholar]

- Klenk, S.; Chui, J.; Demmel, N.; Cremers, D. TUM-VIE: The TUM Stereo Visual-Inertial Event Dataset. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021. [Google Scholar]

- Gao, L.; Liang, Y.; Yang, J.; Wu, S.; Wang, C.; Chen, J.; Kneip, L. VECtor: A Versatile Event-Centric Benchmark for Multi-Sensor SLAM. IEEE Robot. Autom. Lett. 2022, 7, 8217–8224. [Google Scholar] [CrossRef]

- Yin, J.; Li, A.; Li, T.; Yu, W.; Zou, D. M2DGR: A Multi-Sensor and Multi-Scenario SLAM Dataset for Ground Robots. IEEE Robot. Autom. Lett. 2022, 7, 2266–2273. [Google Scholar] [CrossRef]

- Sturm, J.; Engelhard, N.; Endres, F.; Burgard, W.; Cremers, D. A benchmark for the evaluation of RGB-D SLAM systems. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura-Algarve, Portugal, 7–12 October 2012. [Google Scholar]

- Nunes, U.M.; Demiris, Y. Entropy Minimisation Framework for Event-Based Vision Model Estimation. In Proceedings of the Computer Vision–ECCV 2020, Glasgow, UK, 23–28 August 2020; Springer International Publishing: Cham, Switzerland, 2020. [Google Scholar]

- Nunes, U.M.; Demiris, Y. Robust Event-Based Vision Model Estimation by Dispersion Minimisation. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 9561–9573. [Google Scholar] [CrossRef]

- Engel, J.; Koltun, V.; Cremers, D. Direct Sparse Odometry. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 611–625. [Google Scholar] [CrossRef]

- Rebecq, H.; Ranftl, R.; Koltun, V.; Scaramuzza, D. High Speed and High Dynamic Range Video with an Event Camera. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 1964–1980. [Google Scholar] [CrossRef]

- Guan, W.; Lu, P. Monocular Event Visual Inertial Odometry based on Event-corner using Sliding Windows Graph-based Optimization. In Proceedings of the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, 23–27 October 2022. [Google Scholar]

- Bai, Y.; Zhang, B.; Xu, N.; Zhou, J.; Shi, J.; Diao, Z. Vision-based navigation and guidance for agricultural autonomous vehicles and robots: A review. Comput. Electron. Agric. 2023, 205, 107584. [Google Scholar] [CrossRef]

- Zheng, S.; Wang, J.; Rizos, C.; Ding, W.; El-Mowafy, A. Simultaneous Localization and Mapping (SLAM) for Autonomous Driving: Concept and Analysis. Remote Sens. 2023, 15, 1156. [Google Scholar] [CrossRef]

- Aloui, K.; Guizani, A.; Hammadi, M.; Haddar, M.; Soriano, T. Systematic literature review of collaborative SLAM applied to autonomous mobile robots. In Proceedings of the 2022 IEEE Information Technologies and Smart Industrial Systems, ITSIS 2022, Paris, France, 15–17 July 2022; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 2022. [Google Scholar]

- Wang, J.; Huang, G.; Yuan, X.; Liu, Z.; Wu, X. An adaptive collision avoidance strategy for autonomous vehicle under various road friction and speed. ISA Trans. 2023, 143, 131–143. [Google Scholar] [CrossRef] [PubMed]

- Ryan, C.; O'Sullivan, B.; Elrasad, A.; Cahill, A.; Lemley, J.; Kielty, P.; Posch, C.; Perot, E. Real-time face & eye tracking and blink detection using event cameras. Neural Netw. 2021, 141, 87–97. [Google Scholar]

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444. [Google Scholar] [CrossRef]

- Falotico, E.; Vannucci, L.; Ambrosano, A.; Albanese, U.; Ulbrich, S.; Tieck, J.C.V.; Hinkel, G.; Kaiser, J.; Peric, I.; Denninger, O.; et al. Connecting Artificial Brains to Robots in a Comprehensive Simulation Framework: The Neurorobotics Platform. Front. Neurorobotics 2017, 11, 2. [Google Scholar] [CrossRef] [PubMed]

- Roy, K.; Jaiswal, A.; Panda, P. Towards spike-based machine intelligence with neuromorphic computing. Nature 2019, 575, 607–617. [Google Scholar] [CrossRef] [PubMed]

- Furber, S. Large-scale neuromorphic computing systems. J. Neural Eng. 2016, 13, 051001. [Google Scholar] [CrossRef] [PubMed]

- Nandakumar, S.; Kulkarni, S.R.; Babu, A.V.; Rajendran, B. Building Brain-Inspired Computing Systems: Examining the Role of Nanoscale Devices. IEEE Nanotechnol. Mag. 2018, 12, 19–35. [Google Scholar] [CrossRef]

- Bartolozzi, C.; Indiveri, G.; Donati, E. Embodied neuromorphic intelligence. Nat. Commun. 2022, 13, 1024. [Google Scholar] [CrossRef] [PubMed]

- Ivanov, D.; Chezhegov, A.; Kiselev, M.; Grunin, A.; Larionov, D. Neuromorphic artificial intelligence systems. Front. Neurosci. 2022, 16, 959626. [Google Scholar] [CrossRef] [PubMed]

- Shrestha, A.; Fang, H.; Mei, Z.; Rider, D.P.; Wu, Q.; Qiu, Q. A Survey on Neuromorphic Computing: Models and Hardware. IEEE Circuits Syst. Mag. 2022, 22, 6–35. [Google Scholar] [CrossRef]

- Jones, A.; Rush, A.; Merkel, C.; Herrmann, E.; Jacob, A.P.; Thiem, C.; Jha, R. A neuromorphic SLAM architecture using gated-memristive synapses. Neurocomputing 2020, 381, 89–104. [Google Scholar] [CrossRef]

- Chen, S. Neuromorphic Computing Device; Macronix Int Co., Ltd.: Hsinchu, Taiwan, 2020; Available online: https://www.lens.org/lens/patent/128-760-638-914-092/frontpage (accessed on 14 July 2024).

- Davies, M.; Srinivasa, N.; Lin, T.-H.; Chinya, G.; Cao, Y.; Choday, S.H.; Dimou, G.; Joshi, P.; Imam, N.; Jain, S.; et al. Loihi: A Neuromorphic Manycore Processor with On-Chip Learning. IEEE Micro 2018, 38, 82–99. [Google Scholar] [CrossRef]

- James, C.D.; Aimone, J.B.; Miner, N.E.; Vineyard, C.M.; Rothganger, F.H.; Carlson, K.D.; Mulder, S.A.; Draelos, T.J.; Faust, A.; Marinella, M.J.; et al. A historical survey of algorithms and hardware architectures for neural-inspired and neuromorphic computing applications. Biol. Inspired Cogn. Archit. 2017, 19, 49–64. [Google Scholar] [CrossRef]

- Strukov, D.; Indiveri, G.; Grollier, J.; Fusi, S. Building brain-inspired computing. Nat. Commun. 2019, 10, 4838. [Google Scholar]

- Thakur, C.S.; Molin, J.L.; Cauwenberghs, G.; Indiveri, G.; Kumar, K.; Qiao, N.; Schemmel, J.; Wang, R.; Chicca, E.; Olson Hasler, J.; et al. Large-Scale Neuromorphic Spiking Array Processors: A Quest to Mimic the Brain. Front. Neurosci. 2018, 12, 891. [Google Scholar] [CrossRef] [PubMed]

- Furber, S.B.; Galluppi, F.; Temple, S.; Plana, L.A. The SpiNNaker project. Proc. IEEE 2014, 102, 652–665. [Google Scholar] [CrossRef]

- Schemmel, J.; Brüderle, D.; Grübl, A.; Hock, M.; Meier, K.; Millner, S. A wafer-scale neuromorphic hardware system for large-scale neural modeling. In Proceedings of the 2010 IEEE International Symposium on Circuits and Systems (ISCAS), Paris, France, 30 May–2 June 2010. [Google Scholar]

- Schmitt, S.; Klahn, J.; Bellec, G.; Grübl, A.; Güttler, M.; Hartel, A.; Hartmann, S.; Husmann, D.; Husmann, K.; Jeltsch, S.; et al. Neuromorphic hardware in the loop: Training a deep spiking network on the BrainScaleS wafer-scale system. In Proceedings of the 2017 International Joint Conference on Neural Networks (IJCNN), Anchorage, AK, USA, 14–19 May 2017. [Google Scholar]

- DeBole, M.V.; Taba, B.; Amir, A.; Akopyan, F.; Andreopoulos, A.; Risk, W.P.; Kusnitz, J.; Otero, C.O.; Nayak, T.K.; Appuswamy, R.; et al. TrueNorth: Accelerating From Zero to 64 Million Neurons in 10 Years. Computer 2019, 52, 20–29. [Google Scholar] [CrossRef]

- BrainChip. 2023. Available online: https://brainchip.com/akida-generations/ (accessed on 4 October 2023).

- Vanarse, A.; Osseiran, A.; Rassau, A. Neuromorphic engineering—A paradigm shift for future IM technologies. IEEE Instrum. Meas. Mag. 2019, 22, 4–9. [Google Scholar] [CrossRef]

- Höppner, S.; Yan, Y.; Dixius, A.; Scholze, S.; Partzsch, J.; Stolba, M.; Kelber, F.; Vogginger, B.; Neumärker, F.; Ellguth, G.; et al. The SpiNNaker 2 Processing Element Architecture for Hybrid Digital Neuromorphic Computing. arXiv 2021, arXiv:2103.08392. [Google Scholar]

- Mayr, C.; Hoeppner, S.; Furber, S.B. SpiNNaker 2: A 10 Million Core Processor System for Brain Simulation and Machine Learning. In Communicating Process Architectures 2017 & 2018; IOS Press: Amsterdam, The Netherlands, 2019. [Google Scholar]

- Van Albada, S.J.; Rowley, A.G.; Senk, J.; Hopkins, M.; Schmidt, M.; Stokes, A.B.; Lester, D.R.; Diesmann, M.; Furber, S.B. Performance Comparison of the Digital Neuromorphic Hardware SpiNNaker and the Neural Network Simulation Software NEST for a Full-Scale Cortical Microcircuit Model. Front. Neurosci. 2018, 12, 291. [Google Scholar] [CrossRef] [PubMed]

- Merolla, P.A.; Arthur, J.V.; Alvarez-Icaza, R.; Cassidy, A.S.; Sawada, J.; Akopyan, F.; Jackson, B.L.; Imam, N.; Guo, C.; Nakamura, Y.; et al. A million spiking-neuron integrated circuit with a scalable communication network and interface. Science 2014, 345, 668–673. [Google Scholar] [CrossRef] [PubMed]

- Andreopoulos, A.; Kashyap, H.J.; Nayak, T.K.; Amir, A.; Flickner, M.D. A Low Power, High Throughput, Fully Event-Based Stereo System. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018. [Google Scholar]

- DeWolf, T.; Jaworski, P.; Eliasmith, C. Nengo and Low-Power AI Hardware for Robust, Embedded Neurorobotics. Front. Neurorobotics 2020, 14, 568359. [Google Scholar] [CrossRef]

- Stagsted, R.K.; Vitale, A.; Renner, A.; Larsen, L.B.; Christensen, A.L.; Sandamirskaya, Y. Event-based PID controller fully realized in neuromorphic hardware: A one DoF study. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, Las Vegas, NV, USA, 25–29 October 2020; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 2020. [Google Scholar]

- Lava Software Framework. Available online: https://lava-nc.org/ (accessed on 30 April 2024).

- Grübl, A.; Billaudelle, S.; Cramer, B.; Karasenko, V.; Schemmel, J. Verification and Design Methods for the BrainScaleS Neuromorphic Hardware System. J. Signal Process. Syst. 2020, 92, 1277–1292. [Google Scholar] [CrossRef]

- Wunderlich, T.; Kungl, A.F.; Müller, E.; Hartel, A.; Stradmann, Y.; Aamir, S.A.; Grübl, A.; Heimbrecht, A.; Schreiber, K.; Stöckel, D.; et al. Demonstrating Advantages of Neuromorphic Computation: A Pilot Study. Front. Neurosci. 2019, 13, 260. [Google Scholar] [CrossRef] [PubMed]

- Merolla, P.; Arthur, J.; Akopyan, F.; Imam, N.; Manohar, R.; Modha, D.S. A digital neurosynaptic core using embedded crossbar memory with 45 pJ per spike in 45 nm. In Proceedings of the 2011 IEEE Custom Integrated Circuits Conference (CICC), San Jose, CA, USA, 19–21 September 2011. [Google Scholar]

- Stromatias, E.; Neil, D.; Galluppi, F.; Pfeiffer, M.; Liu, S.-C.; Furber, S. Scalable energy-efficient, low-latency implementations of trained spiking Deep Belief Networks on SpiNNaker. In Proceedings of the 2015 International Joint Conference on Neural Networks (IJCNN), Killarney, Ireland, 12–17 July 2015. [Google Scholar]

- Querlioz, D.; Bichler, O.; Gamrat, C. Simulation of a memristor-based spiking neural network immune to device variations. In Proceedings of the 2011 International Joint Conference on Neural Networks, San Jose, CA, USA, 31 July–5 August 2011. [Google Scholar]

- Cramer, B.; Billaudelle, S.; Kanya, S.; Leibfried, A.; Grübl, A.; Karasenko, V.; Pehle, C.; Schreiber, K.; Stradmann, Y.; Weis, J.; et al. Surrogate gradients for analog neuromorphic computing. Proc. Natl. Acad. Sci. USA 2022, 119, e2109194119. [Google Scholar] [CrossRef] [PubMed]

- Schreiber, K.; Wunderlich, T.C.; Pehle, C.; Petrovici, M.A.; Schemmel, J.; Meier, K. Closed-loop experiments on the BrainScaleS-2 architecture. In Proceedings of the 2020 Annual Neuro-Inspired Computational Elements Workshop, Heidelberg, Germany, 17–20 March 2020; Association for Computing Machinery: Heidelberg, Germany, 2022; p. 17. [Google Scholar]

- Moradi, S.; Qiao, N.; Stefanini, F.; Indiveri, G. A Scalable Multicore Architecture With Heterogeneous Memory Structures for Dynamic Neuromorphic Asynchronous Processors (DYNAPs). IEEE Trans. Biomed. Circuits Syst. 2017, 12, 106–122. [Google Scholar] [CrossRef] [PubMed]

- Vanarse, A.; Osseiran, A.; Rassau, A.; van der Made, P. A Hardware-Deployable Neuromorphic Solution for Encoding and Classification of Electronic Nose Data. Sensors 2019, 19, 4831. [Google Scholar] [CrossRef]

- Wang, X.; Lin, X.; Dang, X. Supervised learning in spiking neural networks: A review of algorithms and evaluations. Neural Netw. 2020, 125, 258–280. [Google Scholar] [CrossRef] [PubMed]

- McCulloch, W.S.; Pitts, W. A logical calculus of the ideas immanent in nervous activity. Bull. Math. Biophys. 1943, 5, 115–133. [Google Scholar] [CrossRef]

- Guo, W.; Fouda, M.E.; Eltawil, A.M.; Salama, K.N. Neural Coding in Spiking Neural Networks: A Comparative Study for Robust Neuromorphic Systems. Front. Neurosci. 2021, 15, 638474. [Google Scholar] [CrossRef] [PubMed]

- Zhang, T.; Jia, S.; Cheng, X.; Xu, B. Tuning Convolutional Spiking Neural Network With Biologically Plausible Reward Propagation. IEEE Trans. Neural Netw. Learn. Syst. 2022, 33, 7621–7631. [Google Scholar] [CrossRef] [PubMed]

- Cordone, L. Performance of Spiking Neural Networks on Event Data for Embedded Automotive Applications. Doctoral Dissertation, Université Côte d’Azur, Nice, France, 2022. Available online: https://theses.hal.science/tel-04026653v1/file/2022COAZ4097.pdf (accessed on 14 July 2024).

- Yamazaki, K.; Vo-Ho, V.-K.; Bulsara, D.; Le, N. Spiking Neural Networks and Their Applications: A Review. Brain Sci. 2022, 12, 863. [Google Scholar] [CrossRef]

- Izhikevich, E.M. Which model to use for cortical spiking neurons? IEEE Trans. Neural Netw. 2004, 15, 1063–1070. [Google Scholar] [CrossRef]

- Yang, L.; Zhang, H.; Luo, T.; Qu, C.; Aung, M.T.L.; Cui, Y.; Zhou, J.; Wong, M.M.; Pu, J.; Do, A.T.; et al. Coreset: Hierarchical neuromorphic computing supporting large-scale neural networks with improved resource efficiency. Neurocomputing 2022, 474, 128–140. [Google Scholar] [CrossRef]

- Tang, G.; Shah, A.; Michmizos, K.P. Spiking Neural Network on Neuromorphic Hardware for Energy-Efficient Unidimensional SLAM. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019. [Google Scholar]

- Kreiser, R.; Cartiglia, M.; Martel, J.N.; Conradt, J.; Sandamirskaya, Y. A Neuromorphic Approach to Path Integration: A Head-Direction Spiking Neural Network with Vision-driven Reset. In Proceedings of the 2018 IEEE International Symposium on Circuits and Systems (ISCAS), Florence, Italy, 27–30 May 2018. [Google Scholar]

- Kreiser, R.; Renner, A.; Sandamirskaya, Y.; Pienroj, P. Pose Estimation and Map Formation with Spiking Neural Networks: Towards Neuromorphic SLAM. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018. [Google Scholar]

- Kreiser, R.; Waibel, G.; Armengol, N.; Renner, A.; Sandamirskaya, Y. Error estimation and correction in a spiking neural network for map formation in neuromorphic hardware. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020. [Google Scholar]

- Aboumerhi, K.; Güemes, A.; Liu, H.; Tenore, F.; Etienne-Cummings, R. Neuromorphic applications in medicine. J. Neural Eng. 2023, 20, 041004. [Google Scholar] [CrossRef] [PubMed]

- Boi, F.; Moraitis, T.; De Feo, V.; Diotalevi, F.; Bartolozzi, C.; Indiveri, G.; Vato, A. A Bidirectional Brain-Machine Interface Featuring a Neuromorphic Hardware Decoder. Front. Neurosci. 2016, 10, 563. [Google Scholar] [CrossRef] [PubMed]

- Nurse, E.; Mashford, B.S.; Yepes, A.J.; Kiral-Kornek, I.; Harrer, S.; Freestone, D.R. Decoding EEG and LFP signals using deep learning: Heading TrueNorth. In Proceedings of the ACM International Conference on Computing Frontiers, Como, Italy, 16–19 May 2016; Association for Computing Machinery: Como, Italy, 2016; pp. 259–266. [Google Scholar]

- Corradi, F.; Indiveri, G. A Neuromorphic Event-Based Neural Recording System for Smart Brain-Machine-Interfaces. IEEE Trans. Biomed. Circuits Syst. 2015, 9, 699–709. [Google Scholar] [CrossRef] [PubMed]

- Feng, L.; Shan, H.; Fan, Z.; Zhang, Y.; Yang, L.; Zhu, Z. Towards neuromorphic brain-computer interfaces: Model and circuit Co-design of the spiking EEGNet. Microelectron. J. 2023, 137, 105808. [Google Scholar] [CrossRef]

- Gao, S.-B.; Zhang, M.; Zhao, Q.; Zhang, X.-S.; Li, Y.-J. Underwater Image Enhancement Using Adaptive Retinal Mechanisms. IEEE Trans. Image Process. 2019, 28, 5580–5595. [Google Scholar] [CrossRef] [PubMed]

- Tan, M.-J.; Gao, S.-B.; Xu, W.-Z.; Han, S.-C. Visible-Infrared Image Fusion Based on Early Visual Information Processing Mechanisms. IEEE Trans. Circuits Syst. Video Technol. 2021, 31, 4357–4369. [Google Scholar] [CrossRef]

- Sinha, S.; Suh, J.; Bakkaloglu, B.; Cao, Y. A workload-aware neuromorphic controller for dynamic power and thermal management. In Proceedings of the 2011 NASA/ESA Conference on Adaptive Hardware and Systems (AHS), San Diego, CA, USA, 6–9 June 2011. [Google Scholar]

- Jin, L.; Brooke, M. A fully parallel learning neural network chip for real-time control. In Proceedings of the IJCNN’99. International Joint Conference on Neural Networks. Proceedings (Cat. No.99CH36339), Washington, DC, USA, 10–16 July 1999. [Google Scholar]

- Liu, J.; Brooke, M. Fully parallel on-chip learning hardware neural network for real-time control. In Proceedings of the 1999 IEEE International Symposium on Circuits and Systems (ISCAS), Orlando, FL, USA, 30 May–2 June 1999. [Google Scholar]

- Dong, Z.; Duan, S.; Hu, X.; Wang, L.; Li, H. A novel memristive multilayer feedforward small-world neural network with its applications in PID control. Sci. World J. 2014, 2014, 394828. [Google Scholar] [CrossRef]

- Ahmed, K.; Shrestha, A.; Wang, Y.; Qiu, Q. System Design for In-Hardware STDP Learning and Spiking Based Probablistic Inference. In Proceedings of the 2016 IEEE Computer Society Annual Symposium on VLSI (ISVLSI), Pittsburgh, PA, USA, 11–13 July 2016. [Google Scholar]

- Georgiou, J.; Andreou, A.G.; Pouliquen, P.O. A mixed analog/digital asynchronous processor for cortical computations in 3D SOI-CMOS. In Proceedings of the 2006 IEEE International Symposium on Circuits and Systems, Kos, Greece, 21–24 May 2006. [Google Scholar]

- Liu, S.-C.; Oster, M. Feature competition in a spike-based winner-take-all VLSI network. In Proceedings of the 2006 IEEE International Symposium on Circuits and Systems (ISCAS), Kos, Greece, 21–24 May 2006. [Google Scholar]

- Glackin, B.; Harkin, J.; McGinnity, T.M.; Maguire, L.P.; Wu, Q. Emulating Spiking Neural Networks for edge detection on FPGA hardware. In Proceedings of the 2009 International Conference on Field Programmable Logic and Applications, Prague, Czech Republic, 31 August–2 September 2009. [Google Scholar]

- Goldberg, D.H.; Cauwenberghs, G.; Andreou, A.G. Probabilistic synaptic weighting in a reconfigurable network of VLSI integrate-and-fire neurons. Neural Netw. 2001, 14, 781–793. [Google Scholar] [CrossRef]

- Xiong, Y.; Han, W.H.; Zhao, K.; Zhang, Y.B.; Yang, F.H. An analog CMOS pulse coupled neural network for image segmentation. In Proceedings of the 2010 10th IEEE International Conference on Solid-State and Integrated Circuit Technology, Shanghai, China, 1–4 November 2010. [Google Scholar]

- Secco, J.; Farina, M.; Demarchi, D.; Corinto, F. Memristor cellular automata through belief propagation inspired algorithm. In Proceedings of the 2015 International SoC Design Conference (ISOCC), Gyeongju, Republic of Korea, 2–5 November 2015. [Google Scholar]

- Bohrn, M.; Fujcik, L.; Vrba, R. Field Programmable Neural Array for feed-forward neural networks. In Proceedings of the 2013 36th International Conference on Telecommunications and Signal Processing (TSP), Rome, Italy, 2–4 July 2013. [Google Scholar]

- Fang, W.-C.; Sheu, B.; Chen, O.-C.; Choi, J. A VLSI neural processor for image data compression using self-organization networks. IEEE Trans. Neural Netw. 1992, 3, 506–518. [Google Scholar] [CrossRef]

- Martínez, J.J.; Toledo, F.J.; Ferrández, J.M. New emulated discrete model of CNN architecture for FPGA and DSP applications. In Artificial Neural Nets Problem Solving Methods; Springer: Berlin/Heidelberg, Germany, 2003. [Google Scholar]

- Shi, B.E.; Tsang, E.K.S.; Lam, S.Y.; Meng, Y. Expandable hardware for computing cortical feature maps. In Proceedings of the 2006 IEEE International Symposium on Circuits and Systems (ISCAS), Kos, Greece, 21–24 May 2006. [Google Scholar]

- Ielmini, D.; Ambrogio, S.; Milo, V.; Balatti, S.; Wang, Z.-Q. Neuromorphic computing with hybrid memristive/CMOS synapses for real-time learning. In Proceedings of the 2016 IEEE International Symposium on Circuits and Systems (ISCAS), Montreal, QC, Canada, 22–25 May 2016. [Google Scholar]

- Ranjan, R.; Ponce, P.M.; Hellweg, W.L.; Kyrmanidis, A.; Abu Saleh, L.; Schroeder, D.; Krautschneider, W.H. Integrated circuit with memristor emulator array and neuron circuits for neuromorphic pattern recognition. In Proceedings of the 2016 39th International Conference on Telecommunications and Signal Processing (TSP), Vienna, Austria, 27–29 June 2016. [Google Scholar]

- Hu, M.; Li, H.; Wu, Q.; Rose, G.S. Hardware realization of BSB recall function using memristor crossbar arrays. In Proceedings of the DAC Design Automation Conference 2012, San Francisco, CA, USA, 3–7 June 2012. [Google Scholar]

- Tarkov, M.S. Hopfield Network with Interneuronal Connections Based on Memristor Bridges. In Proceedings of the Advances in Neural Networks–ISNN 2016, St. Petersburg, Russia, 6–8 July 2016; Springer International Publishing: Cham, Switzerland, 2016. [Google Scholar]

- Soltiz, M.; Kudithipudi, D.; Merkel, C.; Rose, G.S.; Pino, R.E. Memristor-Based Neural Logic Blocks for Nonlinearly Separable Functions. IEEE Trans. Comput. 2013, 62, 1597–1606. [Google Scholar] [CrossRef]

- Zhang, P.; Li, C.; Huang, T.; Chen, L.; Chen, Y. Forgetting memristor based neuromorphic system for pattern training and recognition. Neurocomputing 2017, 222, 47–53. [Google Scholar] [CrossRef]

- Darwish, M.; Calayir, V.; Pileggi, L.; Weldon, J.A. Ultracompact Graphene Multigate Variable Resistor for Neuromorphic Computing. IEEE Trans. Nanotechnol. 2016, 15, 318–327. [Google Scholar] [CrossRef]

- Yang, C.; Liu, B.; Li, H.; Chen, Y.; Barnell, M.; Wu, Q.; Wen, W.; Rajendran, J. Security of neuromorphic computing: Thwarting learning attacks using memristor’s obsolescence effect. In Proceedings of the 2016 IEEE/ACM International Conference on Computer-Aided Design (ICCAD), Austin, TX, USA, 7–10 November 2016; IEEE Press: Austin, TX, USA, 2016; pp. 1–6. [Google Scholar]

- Vitabile, S.; Conti, V.; Gennaro, F.; Sorbello, F. Efficient MLP digital implementation on FPGA. In Proceedings of the 8th Euromicro Conference on Digital System Design (DSD’05), Porto, Portugal, 30 August–3 September 2005. [Google Scholar]

- Garbin, D.; Vianello, E.; Bichler, O.; Azzaz, M.; Rafhay, Q.; Candelier, P.; Gamrat, C.; Ghibaudo, G.; DeSalvo, B.; Perniola, L. On the impact of OxRAM-based synapses variability on convolutional neural networks performance. In Proceedings of the 2015 IEEE/ACM International Symposium on Nanoscale Architectures (NANOARCH’15), Boston, MA, USA, 8–10 July 2015. [Google Scholar]

- Fieres, J.; Schemmel, J.; Meier, K. Training convolutional networks of threshold neurons suited for low-power hardware implementation. In Proceedings of the 2006 IEEE International Joint Conference on Neural Network Proceedings, Vancouver, BC, Canada, 16–21 July 2006. [Google Scholar]

- Dhawan, K.; Perumal, R.S.; Nadesh, R.K. Identification of traffic signs for advanced driving assistance systems in smart cities using deep learning. Multimed. Tools Appl. 2023, 82, 26465–26480. [Google Scholar] [CrossRef] [PubMed]

- Ibrayev, T.; James, A.P.; Merkel, C.; Kudithipudi, D. A design of HTM spatial pooler for face recognition using memristor-CMOS hybrid circuits. In Proceedings of the 2016 IEEE International Symposium on Circuits and Systems (ISCAS), Montreal, QC, Canada, 22–25 May 2016. [Google Scholar]

- Knag, P.; Liu, C.; Zhang, Z. A 1.40 mm² 141 mW 898GOPS sparse neuromorphic processor in 40 nm CMOS. In Proceedings of the 2016 IEEE Symposium on VLSI Circuits (VLSI-Circuits), Honolulu, HI, USA, 15–17 June 2016. [Google Scholar]

- Farabet, C.; Poulet, C.; Han, J.Y.; LeCun, Y. CNP: An FPGA-based processor for Convolutional Networks. In Proceedings of the 2009 International Conference on Field Programmable Logic and Applications, Prague, Czech Republic, 31 August–2 September 2009. [Google Scholar]

- Le, T.H. Applying Artificial Neural Networks for Face Recognition. Adv. Artif. Neural Syst. 2011, 2011, 673016. [Google Scholar] [CrossRef]

- Álvarez-Sánchez, T.; Álvarez-Cedillo, J.A.; Herrera-Charles, R. Detection of facial emotions using neuromorphic computation. In Applications of Digital Image Processing XLV; SPIE: San Diego, CA, USA, 2022. [Google Scholar]

- Manakitsa, N.; Maraslidis, G.S.; Moysis, L.; Fragulis, G.F. A Review of Machine Learning and Deep Learning for Object Detection, Semantic Segmentation, and Human Action Recognition in Machine and Robotic Vision. Technologies 2024, 12, 15. [Google Scholar] [CrossRef]

- Adhikari, S.P.; Yang, C.; Kim, H.; Chua, L.O. Memristor Bridge Synapse-Based Neural Network and Its Learning. IEEE Trans. Neural Netw. Learn. Syst. 2012, 23, 1426–1435. [Google Scholar] [CrossRef] [PubMed]

- Yang, Z.; Yang, L.; Bao, W.; Tao, L.; Zeng, Y.; Hu, D.; Xiong, J.; Shang, D. High-Speed Object Recognition Based on a Neuromorphic System. Electronics 2022, 11, 4179. [Google Scholar] [CrossRef]

- Yang, B. Research on Vehicle Detection and Recognition Technology Based on Artificial Intelligence. Microprocess. Microsyst. 2023, 104937. [Google Scholar] [CrossRef]

- Wan, H.; Gao, L.; Su, M.; You, Q.; Qu, H.; Sun, Q. A Novel Neural Network Model for Traffic Sign Detection and Recognition under Extreme Conditions. J. Sens. 2021, 2021, 9984787. [Google Scholar] [CrossRef]

- Mukundan, A.; Huang, C.-C.; Men, T.-C.; Lin, F.-C.; Wang, H.-C. Air Pollution Detection Using a Novel Snap-Shot Hyperspectral Imaging Technique. Sensors 2022, 22, 6231. [Google Scholar] [CrossRef] [PubMed]

- Kow, P.-Y.; Hsia, I.-W.; Chang, L.-C.; Chang, F.-J. Real-time image-based air quality estimation by deep learning neural networks. J. Environ. Manag. 2022, 307, 114560. [Google Scholar] [CrossRef] [PubMed]

- Onorato, M.; Valle, M.; Caviglia, D.D.; Bisio, G.M. Non-linear circuit effects on analog VLSI neural network implementations. In Proceedings of the Fourth International Conference on Microelectronics for Neural Networks and Fuzzy Systems, Turin, Italy, 26–28 September 1994. [Google Scholar]

- Yang, J.; Li, S.; Wang, Z.; Dong, H.; Wang, J.; Tang, S. Using Deep Learning to Detect Defects in Manufacturing: A Comprehensive Survey and Current Challenges. Materials 2020, 13, 5755. [Google Scholar] [CrossRef]

- Aibe, N.; Mizuno, R.; Nakamura, M.; Yasunaga, M.; Yoshihara, I. Performance evaluation system for probabilistic neural network hardware. Artif. Life Robot. 2004, 8, 208–213. [Google Scholar] [CrossRef]

- Ceolini, E.; Frenkel, C.; Shrestha, S.B.; Taverni, G.; Khacef, L.; Payvand, M.; Donati, E. Hand-Gesture Recognition Based on EMG and Event-Based Camera Sensor Fusion: A Benchmark in Neuromorphic Computing. Front. Neurosci. 2020, 14, 637. [Google Scholar] [CrossRef]

- Liu, X.; Aldrich, C. Deep Learning Approaches to Image Texture Analysis in Material Processing. Metals 2022, 12, 355. [Google Scholar] [CrossRef]

- Dixit, U.; Mishra, A.; Shukla, A.; Tiwari, R. Texture classification using convolutional neural network optimized with whale optimization algorithm. SN Appl. Sci. 2019, 1, 655. [Google Scholar] [CrossRef]

- Roska, T.; Horvath, A.; Stubendek, A.; Corinto, F.; Csaba, G.; Porod, W.; Shibata, T.; Bourianoff, G. An Associative Memory with oscillatory CNN arrays using spin torque oscillator cells and spin-wave interactions architecture and End-to-end Simulator. In Proceedings of the 2012 13th International Workshop on Cellular Nanoscale Networks and their Applications, Turin, Italy, 29–31 August 2012.

- Khosla, D.; Chen, Y.; Kim, K. A neuromorphic system for video object recognition. Front. Comput. Neurosci. 2014, 8, 147.

- Al-Salih, A.A.M.; Ahson, S.I. Object detection and features extraction in video frames using direct thresholding. In Proceedings of the 2009 International Multimedia, Signal Processing and Communication Technologies, Aligarh, India, 14–16 March 2009.

- Wang, X.; Huang, Z.; Liao, B.; Huang, L.; Gong, Y.; Huang, C. Real-time and accurate object detection in compressed video by long short-term feature aggregation. Comput. Vis. Image Underst. 2021, 206, 103188.

- Zhu, H.; Wei, H.; Li, B.; Yuan, X.; Kehtarnavaz, N. A Review of Video Object Detection: Datasets, Metrics and Methods. Appl. Sci. 2020, 10, 7834.

- Koh, T.C.; Yeo, C.K.; Jing, X.; Sivadas, S. Towards efficient video-based action recognition: Context-aware memory attention network. SN Appl. Sci. 2023, 5, 330.

- Sánchez-Caballero, A.; Fuentes-Jiménez, D.; Losada-Gutiérrez, C. Real-time human action recognition using raw depth video-based recurrent neural networks. Multimed. Tools Appl. 2023, 82, 16213–16235.

- Tsai, J.-K.; Hsu, C.-C.; Wang, W.-Y.; Huang, S.-K. Deep Learning-Based Real-Time Multiple-Person Action Recognition System. Sensors 2020, 20, 4758.

- Kim, H.; Lee, H.-W.; Lee, J.; Bae, O.; Hong, C.-P. An Effective Motion-Tracking Scheme for Machine-Learning Applications in Noisy Videos. Appl. Sci. 2023, 13, 3338.

- Liu, L.; Lin, B.; Yang, Y. Moving scene object tracking method based on deep convolutional neural network. Alexandria Eng. J. 2024, 86, 592–602.

- Skrzypkowiak, S.S.; Jain, V.K. Video motion estimation using a neural network. In Proceedings of the IEEE International Symposium on Circuits and Systems-ISCAS’94, England, UK, 30 May–2 June 1994.

- Botella, G.; García, C. Real-time motion estimation for image and video processing applications. J. Real-Time Image Process. 2016, 11, 625–631.

- Lee, J.; Kong, K.; Bae, G.; Song, W.-J. BlockNet: A Deep Neural Network for Block-Based Motion Estimation Using Representative Matching. Symmetry 2020, 12, 840.

- Yoon, J.H.; Raychowdhury, A. NeuroSLAM: A 65-nm 7.25-to-8.79-TOPS/W Mixed-Signal Oscillator-Based SLAM Accelerator for Edge Robotics. IEEE J. Solid-State Circuits 2021, 56, 66–78.

- Lee, J.; Yoon, J.H. A Neuromorphic SLAM Accelerator Supporting Multi-Agent Error Correction in Swarm Robotics. In Proceedings of the International SoC Design Conference 2022, ISOCC 2022, Gangneung-si, Republic of Korea, 19–22 October 2022; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 2022.

- Yoon, J.H.; Raychowdhury, A. 31.1 A 65 nm 8.79TOPS/W 23.82 mW Mixed-Signal Oscillator-Based NeuroSLAM Accelerator for Applications in Edge Robotics. In Proceedings of the 2020 IEEE International Solid-State Circuits Conference (ISSCC), San Francisco, CA, USA, 16–20 February 2020.

| Year | Name | Sensors | Description (Key Points) | Strengths (Achievements) |
|---|---|---|---|---|
| 2024 | TextSLAM [47] | RGB-D | Uses text objects in the environment to extract semantic features | More accurate and robust, even under challenging conditions |
| 2023 | HFNet-SLAM [8] | Monocular | Extension of ORB-SLAM3 that incorporates CNNs | Higher accuracy than ORB-SLAM3 |
| 2022 | SO-SLAM [48] | Monocular | Introduces object spatial constraints (object-level map) | Proposes two new methods for object SLAM |
| 2022 | SDF-SLAM [49] | Monocular | Semantic deep fusion model using deep learning | Lower absolute error than the state-of-the-art SLAM framework |
| 2022 | UV-SLAM [50] | Monocular | Uses vanishing points (line features) for structural mapping | Improved localization accuracy and mapping quality |
| 2021 | RS-SLAM [51] | RGB-D | Employs a semantic segmentation model | Detects both static and dynamic objects |
| 2021 | RDMO-SLAM [52] | RGB-D | Semantic label prediction using dense optical flow | Reduces the influence of dynamic objects on tracking |
| 2021 | RDS-SLAM [53] | RGB-D | Extends ORB-SLAM3 with a semantic thread and a semantic-based optimization thread | The tracking thread need not wait for semantic information because the new threads run in parallel |
| 2021 | ORB-SLAM3 [54] | Monocular, stereo and RGB-D | Performs visual, visual-inertial and multimap SLAM | Effectively exploits data associations and improves system accuracy |
| 2020 | Structure-SLAM [55] | Monocular | Decouples rotation and translation estimation | Outperforms the state of the art on common SLAM benchmarks |
| 2020 | VPS-SLAM [56] | RGB-D | Combines low-level VO/VIO with planar surfaces | Better results than state-of-the-art VO/VIO algorithms |
| 2020 | DDL-SLAM [46] | RGB-D | Adds dynamic object segmentation and background inpainting to ORB-SLAM2 | Detects dynamic objects using semantic segmentation and multi-view geometry |
| 2019 | PL-SLAM [57] | Stereo | Combines point and line-segment features | First open-source SLAM system using both point and line-segment features |
| 2017 | ORB-SLAM2 [58] | Monocular, stereo and RGB-D | Complete SLAM system including map reuse, loop closing and re-localization | State-of-the-art accuracy across 29 popular public sequences |
| 2015 | ORB-SLAM [59] | Monocular | Feature-based monocular SLAM system | Robust to motion clutter; allows wide-baseline loop closing and re-localization |
| 2014 | LSD-SLAM [60] | Monocular | Direct monocular SLAM system | Accurate pose estimation and 3D environment reconstruction |
| 2011 | DTAM [44] | Monocular | Camera tracking and reconstruction based on dense, every-pixel methods rather than sparse features | Real-time performance on commodity GPU hardware |
| 2007 | PTAM [43] | Monocular | Estimates camera pose in an unknown scene | Accuracy and robustness surpassing the state-of-the-art system |
| 2007 | MonoSLAM [42] | Monocular | Real-time single-camera SLAM | Recovers the 3D trajectory of a monocular camera |

| Year | Processor/Chip | I/O | On-Device Training | Feature Size (nm) | Remarks |
|---|---|---|---|---|---|
| 2011 | SpiNNaker | Real numbers, spikes | STDP | 22 | Minimal power consumption (20 nJ/operation). First successful mimicking of a biological brain-like structure. Spikes must be represented as AER packets. |
| 2014 | TrueNorth | Spikes | No | 28 | First industrial neuromorphic device. Functionality is fixed at the hardware level; only addition and subtraction can be performed. |
| 2018 | Loihi | Spikes | STDP | 14 | First neuromorphic processor with on-chip learning capabilities. Spike signals are not programmable and carry no context or range of values. |
| 2020 | BrainScaleS | Real numbers, spikes | STDP, surrogate gradient | 65 | Simulates spiking neurons using analog circuitry. Runs faster than biological neurons but lacks flexibility. |
| 2021 | Loihi 2 | Real numbers, spikes | STDP, surrogate gradient, backpropagation | 7 | Supports 3D multi-chip scaling, allowing many chips to be combined. The Lava software framework was launched to streamline Loihi 2 implementations. Resource constraints limit the size and complexity of neural networks. |
| 2021 | DYNAP-SE2, DYNAP-SEL, DYNAP-CNN | Spikes | STDP (DYNAP-SEL only) | 22 | DYNAP-SE2 is suited to feed-forward, recurrent and reservoir networks. DYNAP-SEL facilitates on-chip learning. DYNAP-CNN supports CNN-to-SNN conversion. |
| 2021 | Akida | Spikes | STDP (last layer only) | 28 | First commercially available neuromorphic chip. Facilitates CNN-to-SNN conversion. Notable features are on-chip, one-shot and continuous learning. Only the last fully connected layer supports on-chip continual learning. |
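Several processors in the table list spike-timing-dependent plasticity (STDP) as their on-device learning rule. As a rough illustration of what such a rule computes, the following minimal Python sketch implements the textbook pair-based STDP update: a synapse is strengthened when a presynaptic spike precedes a postsynaptic spike and weakened when the order is reversed, with an exponential dependence on the timing difference. The function names (`stdp_delta_w`, `apply_stdp`), the amplitudes `a_plus` and `a_minus`, the time constants and the weight bounds are all illustrative assumptions; they are not the parameters or the learning circuitry of SpiNNaker, Loihi, BrainScaleS, DYNAP-SEL or Akida, each of which implements its own hardware-specific variant.

```python
import math


def stdp_delta_w(t_pre: float, t_post: float,
                 a_plus: float = 0.01, a_minus: float = 0.012,
                 tau_plus: float = 20.0, tau_minus: float = 20.0) -> float:
    """Pair-based STDP weight change for one pre/post spike pair.

    Spike times and time constants are in milliseconds. The default
    amplitudes and time constants are hypothetical illustration values.
    """
    dt = t_post - t_pre
    if dt > 0:
        # Presynaptic spike precedes postsynaptic spike: potentiation (LTP).
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:
        # Postsynaptic spike precedes presynaptic spike: depression (LTD).
        return -a_minus * math.exp(dt / tau_minus)
    # Simultaneous spikes: no change in this simplified model.
    return 0.0


def apply_stdp(w: float, pre_spikes: list[float], post_spikes: list[float],
               w_min: float = 0.0, w_max: float = 1.0, **params: float) -> float:
    """Sum the updates over all pre/post spike pairs (all-to-all pairing)
    and clip the result to [w_min, w_max] (hypothetical bounds)."""
    dw = sum(stdp_delta_w(t_pre, t_post, **params)
             for t_pre in pre_spikes for t_post in post_spikes)
    return min(w_max, max(w_min, w + dw))


if __name__ == "__main__":
    # Pre fires 5 ms before post: the weight increases.
    print(apply_stdp(0.5, pre_spikes=[10.0], post_spikes=[15.0]))
    # Post fires 5 ms before pre: the weight decreases.
    print(apply_stdp(0.5, pre_spikes=[15.0], post_spikes=[10.0]))
```

The all-to-all pairing and hard clipping are simplifying design choices; nearest-neighbour pairing, trace-based updates and soft bounds are common alternatives, and on-chip rules are typically restricted further by fixed-point precision and local memory.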

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tenzin, S.; Rassau, A.; Chai, D. Application of Event Cameras and Neuromorphic Computing to VSLAM: A Survey. Biomimetics 2024, 9, 444. https://doi.org/10.3390/biomimetics9070444
