Web-Based Application for Biomedical Image Registry, Analysis, and Translation (BiRAT)

Abstract

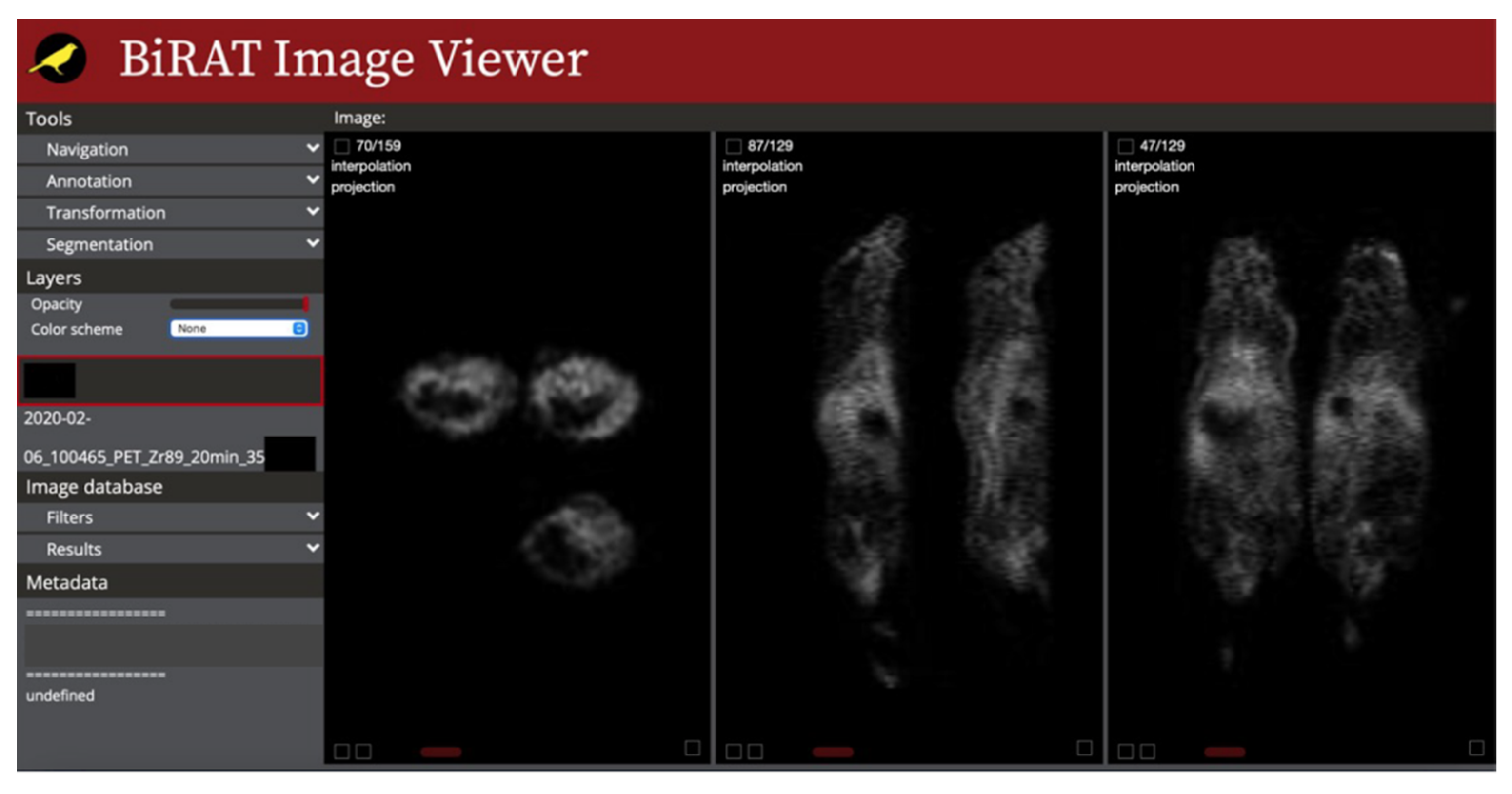

1. Introduction

2. Materials and Methods

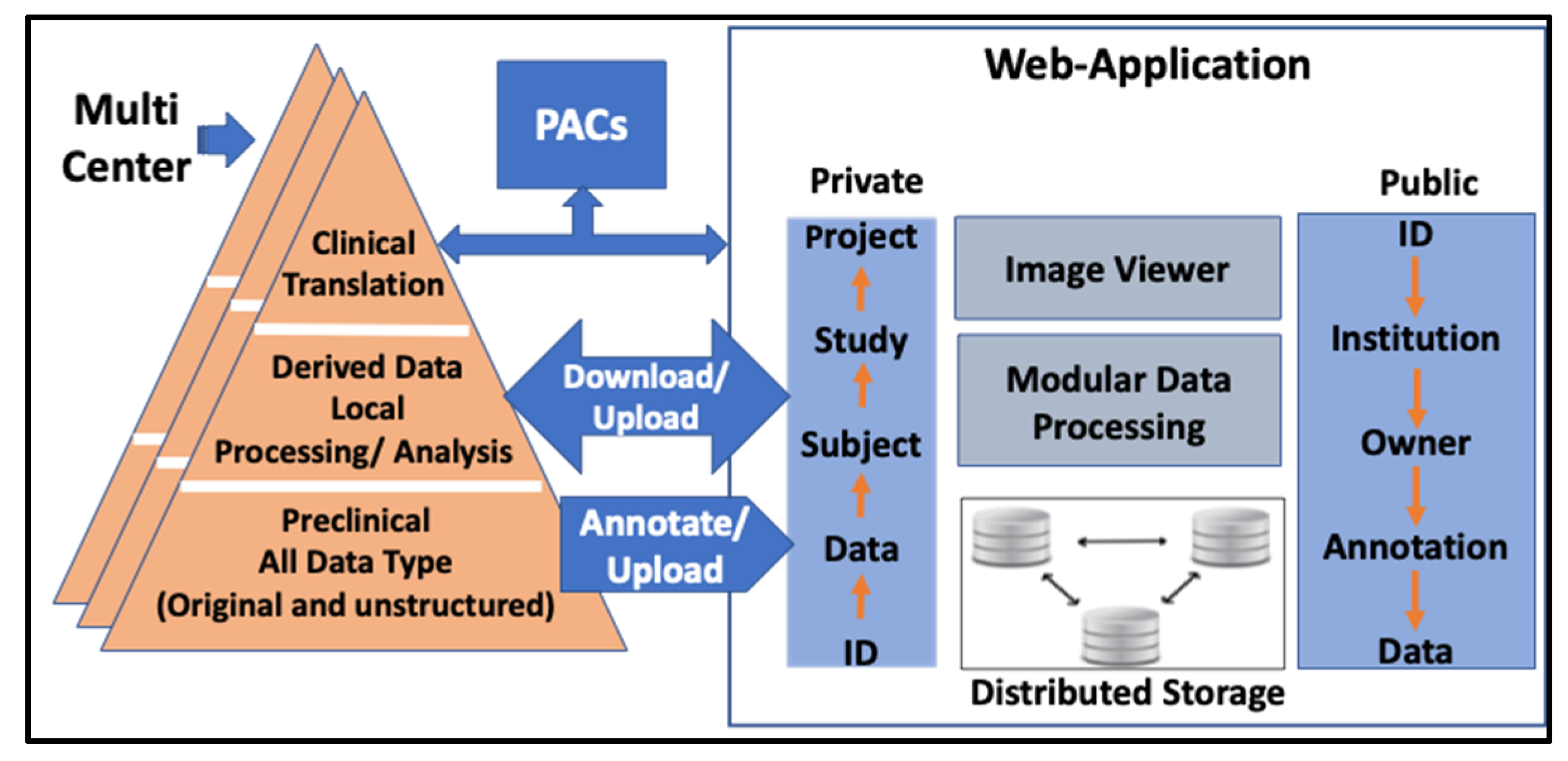

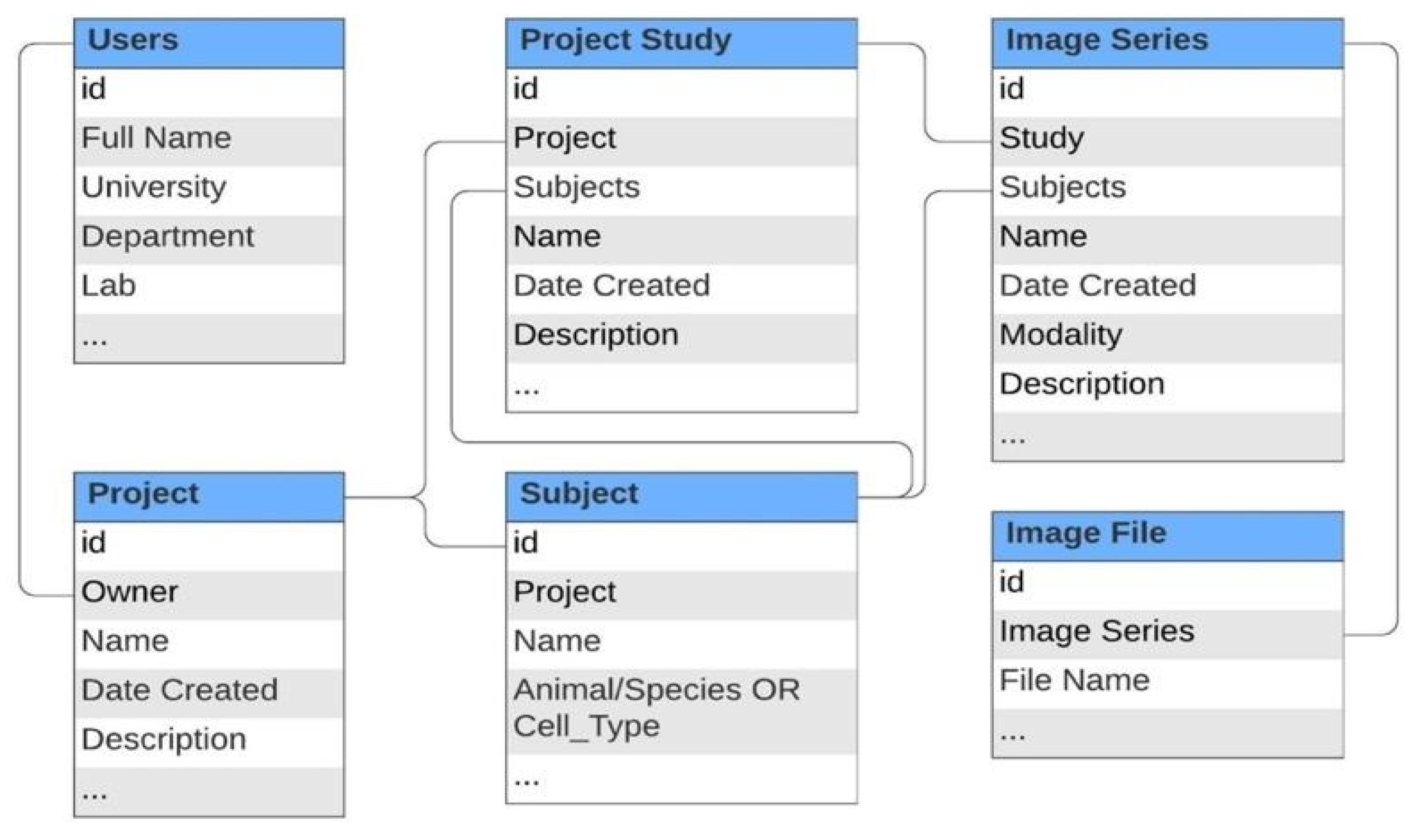

2.1. Conceptual Design and Data Model

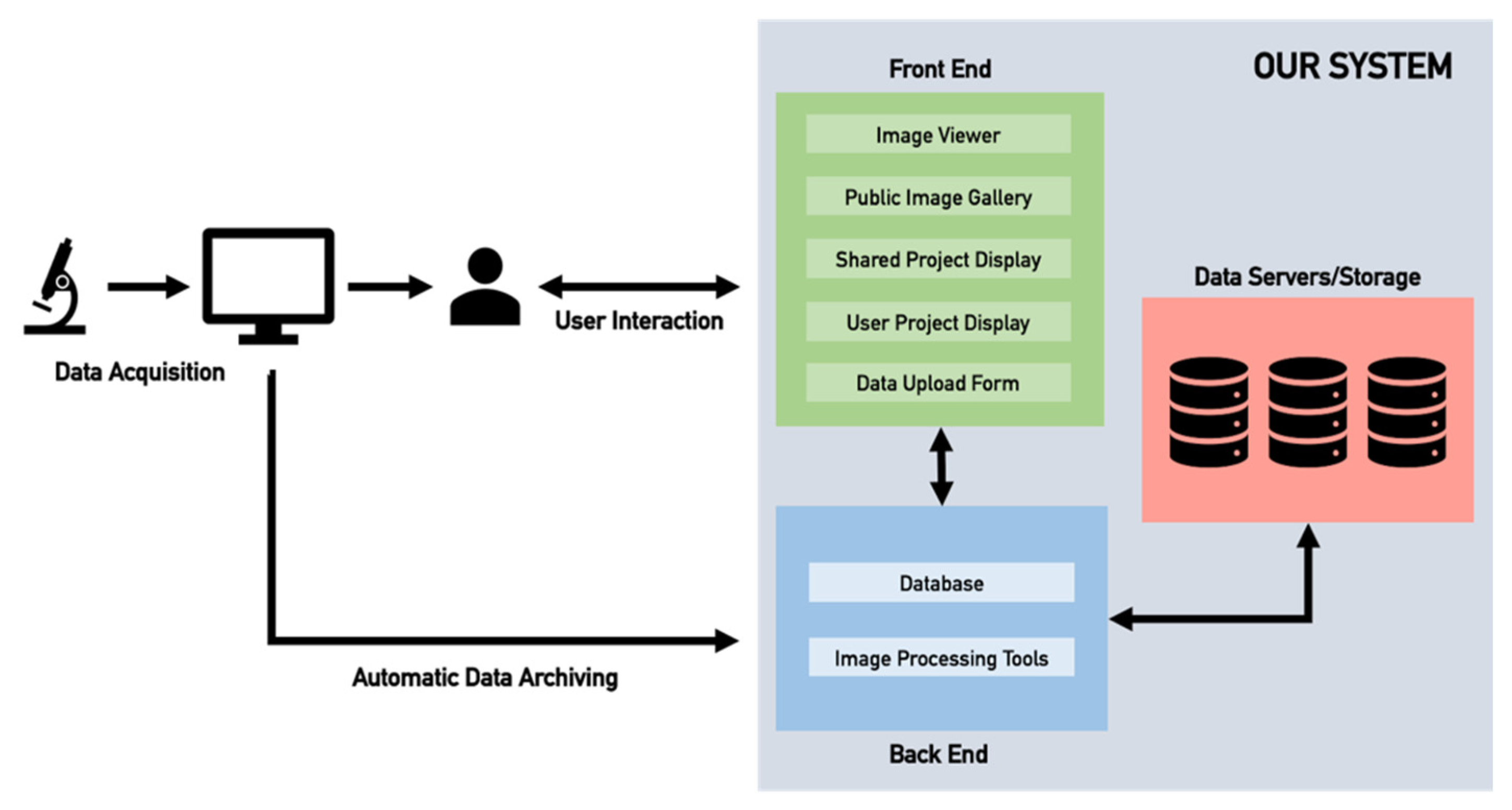

2.2. Web-Server Application

2.3. Image Viewer

2.4. Server Architecture and Storage

3. Results

3.1. Data Storage Structure

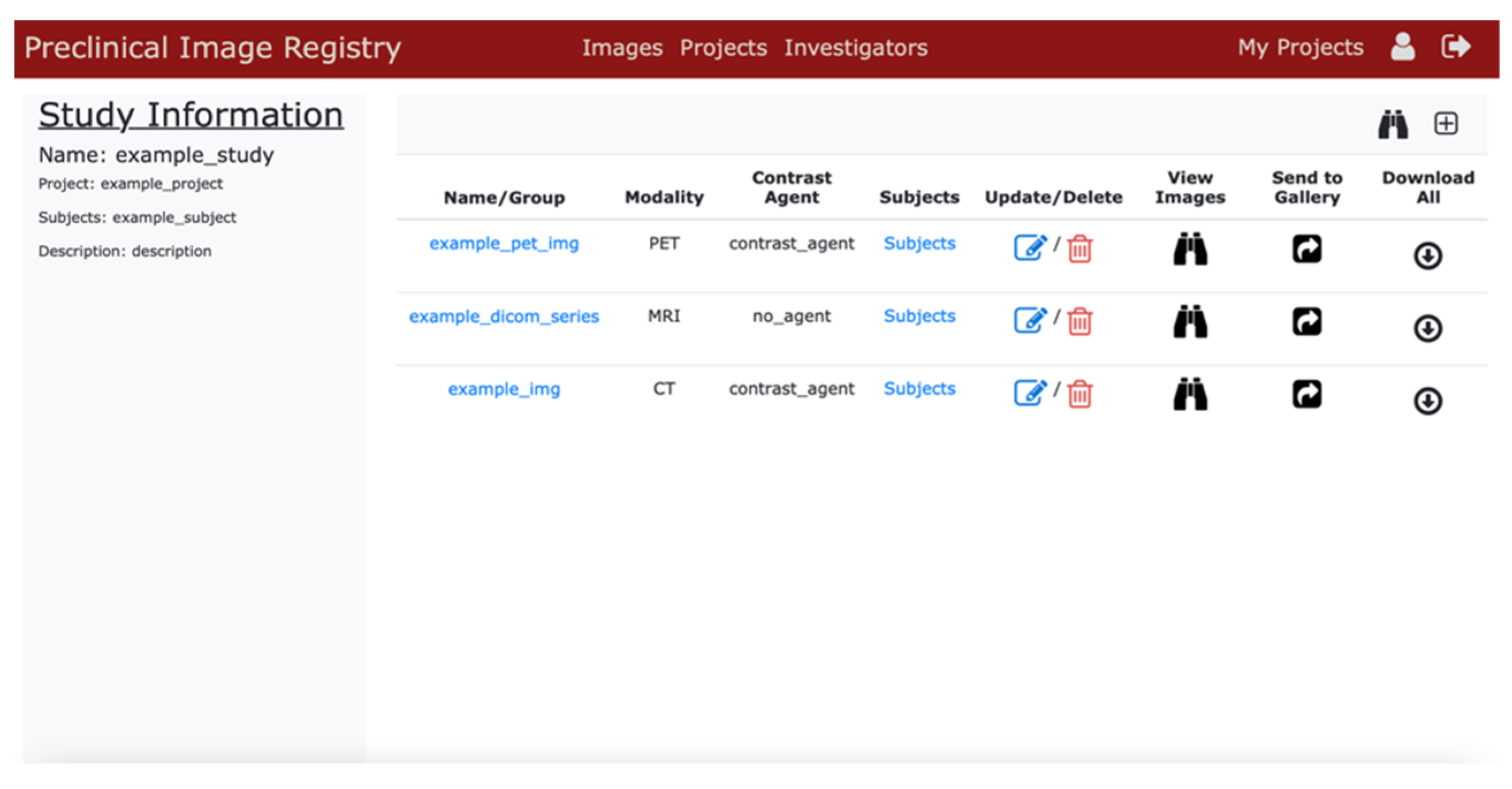

3.2. User Interface

3.3. Image Viewer

3.4. Public Image Repository Portal

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Pemmaraju, R.; Minahan, R.; Wang, E.; Schadl, K.; Daldrup-Link, H.; Habte, F. Web-Based Application for Biomedical Image Registry, Analysis, and Translation (BiRAT). Tomography 2022, 8, 1453-1462. https://doi.org/10.3390/tomography8030117
