Gorilla: An Open Interface for Smart Agents and Real-Time Power Microgrid System Simulations

Abstract

1. Introduction

- buying, installing, and configuring all equipment might be prohibitively expensive;

- some equipment might still be under development and might need to be accounted for with mathematical models;

- consistent replication of every possible scenario with real equipment, in particular scenarios involving failing or misbehaving devices, becomes impractical because the equipment is not designed to support them; and,

- continuous development and manufacturing processes become time-inefficient if run in real time.

- defining the core operations that should support most types of interaction;

- proposing a set of principles for managing various aspects of the process;

- an initial API binding for the Java™ programming language; and,

- initial test cases exercising the API.

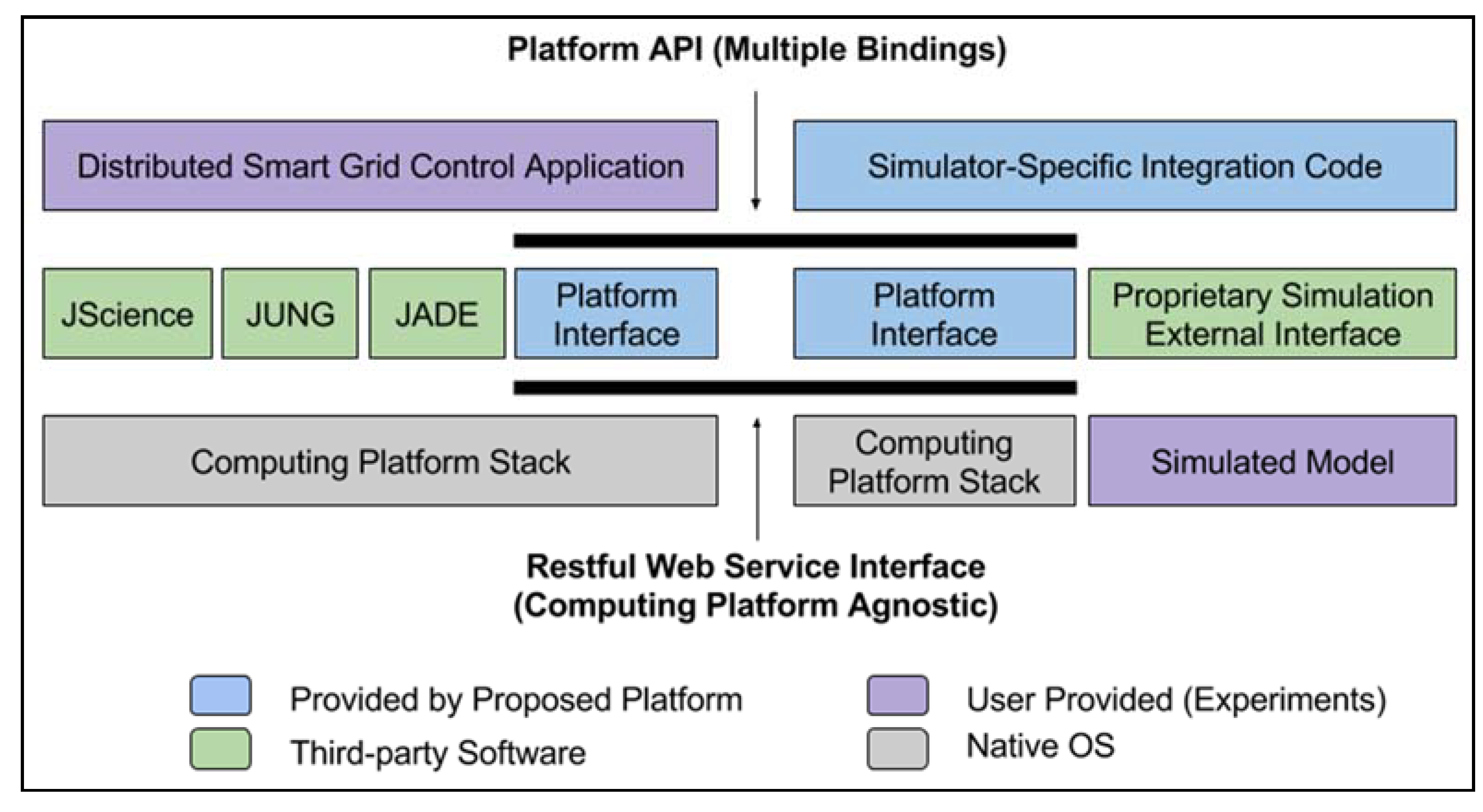

2. Proposed Application Programming Interface (API)

2.1. Goals and High-Level Architecture

- provide cross-domain, bidirectional access to data;

- support cross-domain, synchronous and asynchronous interactions;

- be extensible to support all language bindings, computing and simulation platforms, and networking technologies; and,

- support flexible data typing.

- Read/Write: cross-domain reading and writing of variables;

- Call: cross-domain synchronous transfer of control; and,

- Subscribe/Call-back: cross-domain asynchronous event notification.
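The three operation families listed above can be illustrated with a minimal Java sketch. This is not the published Gorilla binding: the interface, class, and method names (`CrossDomainInterface`, `InMemoryDomain`, `register`) and the variable name `bus.voltage` are hypothetical, and the remote domain is replaced by an in-memory stand-in. Call-backs are delivered inline for brevity, whereas a real binding would presumably dispatch them asynchronously.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;
import java.util.function.Function;

// Hypothetical names throughout; an illustration only, not the Gorilla API.
final class CrossDomainSketch {

    /** One method per operation family: read/write, call, subscribe/call-back. */
    interface CrossDomainInterface {
        Object read(String variable);
        void write(String variable, Object value);
        Object call(String procedure, Object argument);
        void subscribe(String variable, Consumer<Object> callback);
    }

    /** In-memory stand-in for the other domain (for example, a simulator). */
    static final class InMemoryDomain implements CrossDomainInterface {
        private final Map<String, Object> variables = new HashMap<>();
        private final Map<String, Function<Object, Object>> procedures = new HashMap<>();
        private final Map<String, List<Consumer<Object>>> subscribers = new HashMap<>();

        /** Registers a procedure that call() can later invoke by name. */
        void register(String procedure, Function<Object, Object> body) {
            procedures.put(procedure, body);
        }

        @Override
        public Object read(String variable) {
            return variables.get(variable);
        }

        @Override
        public void write(String variable, Object value) {
            variables.put(variable, value);
            // Notify every call-back subscribed to this variable (inline here).
            List<Consumer<Object>> callbacks =
                    subscribers.getOrDefault(variable, Collections.emptyList());
            for (Consumer<Object> callback : callbacks) {
                callback.accept(value);
            }
        }

        @Override
        public Object call(String procedure, Object argument) {
            Function<Object, Object> body = procedures.get(procedure);
            if (body == null) {
                throw new IllegalArgumentException("Unknown procedure: " + procedure);
            }
            // Control returns to the caller only after the procedure completes.
            return body.apply(argument);
        }

        @Override
        public void subscribe(String variable, Consumer<Object> callback) {
            subscribers.computeIfAbsent(variable, k -> new ArrayList<>()).add(callback);
        }
    }

    public static void main(String[] args) {
        InMemoryDomain simulator = new InMemoryDomain();
        simulator.register("double", x -> 2 * (Double) x);
        simulator.subscribe("bus.voltage", v -> System.out.println("call-back: " + v));
        simulator.write("bus.voltage", 1.02);
        System.out.println("read: " + simulator.read("bus.voltage"));
        System.out.println("call: " + simulator.call("double", 0.5));
    }
}
```

Values are passed as `Object` here only to keep the sketch short; it is one possible way to approximate flexible data typing, not necessarily the approach the API takes.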

2.2. Operation Specification

2.3. Read/Write Operations

2.4. Call Operation

2.5. Subscribe Operation

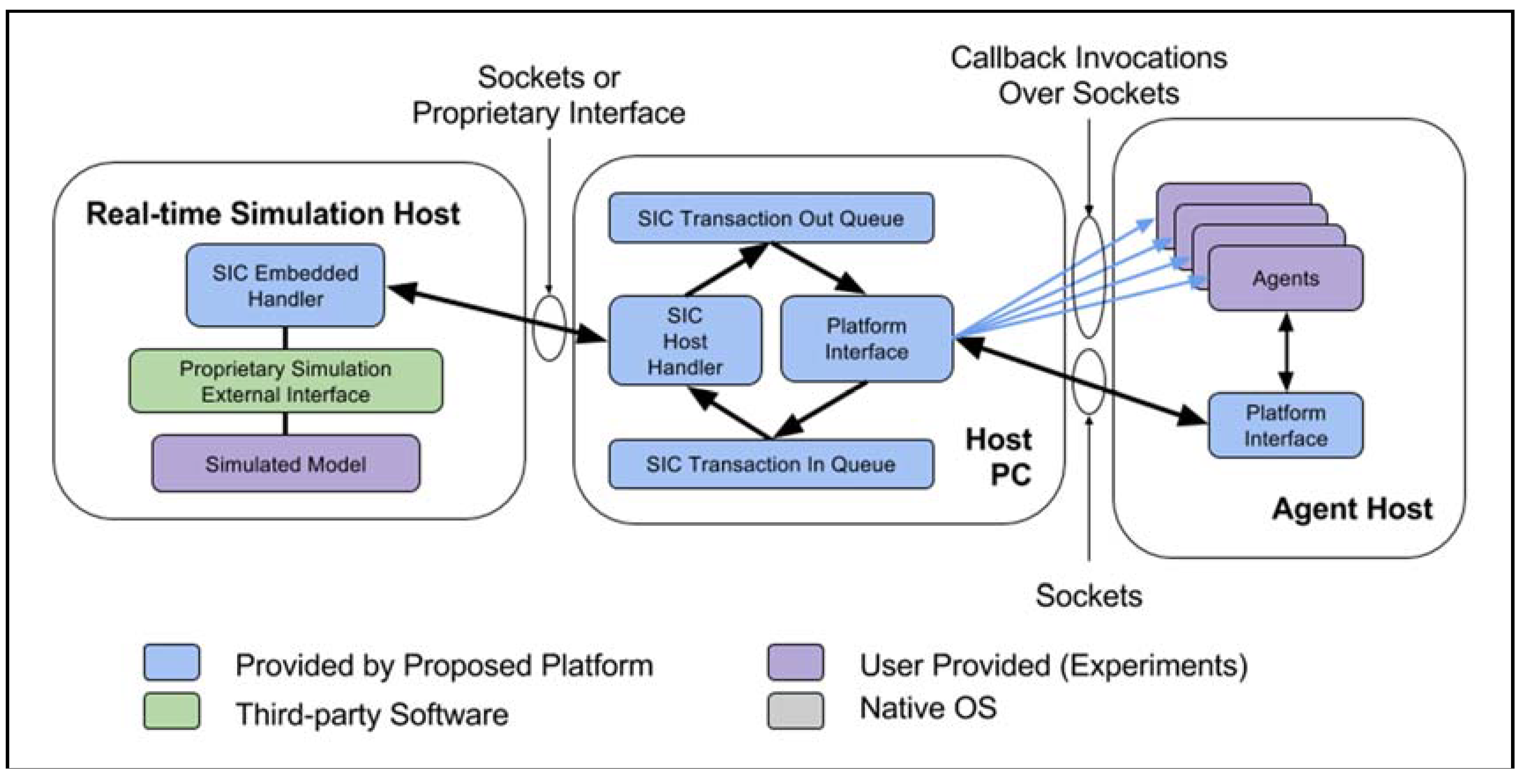

3. Applications Test Cases

3.1. Case 1: Agent-Based Voltage Control in an IEEE 13-Bus Distribution Test Feeder

- queue new or processed read, write, subscribe or call-back transactions to the SIC transaction out queue; and,

- de-queue new or returning read, write, subscribe, or call-back transactions from the SIC transaction in queue.
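The two queue operations above can be sketched in Java as a pair of thread-safe queues. This is a minimal illustration under stated assumptions: the names `TransactionQueueSketch`, `Transaction`, `Kind`, and `QueuePair`, the transaction fields, and the variable name `bus.voltage` are all hypothetical, and the component on the other side of the queues is simulated inside `main`.

```java
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;

// Hypothetical names and fields; a sketch of the queueing pattern only.
final class TransactionQueueSketch {

    /** The transaction types named in the list above. */
    enum Kind { READ, WRITE, SUBSCRIBE, CALL_BACK }

    /** Immutable record of one transaction. */
    static final class Transaction {
        final Kind kind;
        final String variable;
        final Object payload;

        Transaction(Kind kind, String variable, Object payload) {
            this.kind = kind;
            this.variable = variable;
            this.payload = payload;
        }
    }

    /** One outbound and one inbound queue, safe for concurrent producers and consumers. */
    static final class QueuePair {
        final Queue<Transaction> out = new ConcurrentLinkedQueue<>();
        final Queue<Transaction> in = new ConcurrentLinkedQueue<>();

        /** Queues a new or processed transaction on the out queue. */
        void enqueueOut(Transaction transaction) {
            out.add(transaction);
        }

        /** De-queues the next new or returning transaction, or null if none is waiting. */
        Transaction dequeueIn() {
            return in.poll();
        }
    }

    public static void main(String[] args) {
        QueuePair queues = new QueuePair();
        queues.enqueueOut(new Transaction(Kind.READ, "bus.voltage", null));

        // Stand-in for the other side: take the request and return it with a value.
        Transaction request = queues.out.poll();
        queues.in.add(new Transaction(request.kind, request.variable, 1.01));

        Transaction reply = queues.dequeueIn();
        System.out.println(reply.kind + " " + reply.variable + " = " + reply.payload);
    }
}
```

A non-blocking `poll()` is used so the caller can keep doing other work between checks; a blocking queue would be an equally reasonable choice if the caller should wait for the next transaction.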

3.2. Case 2: Fuzzy Control for Home Microgrids

4. Results and Discussion

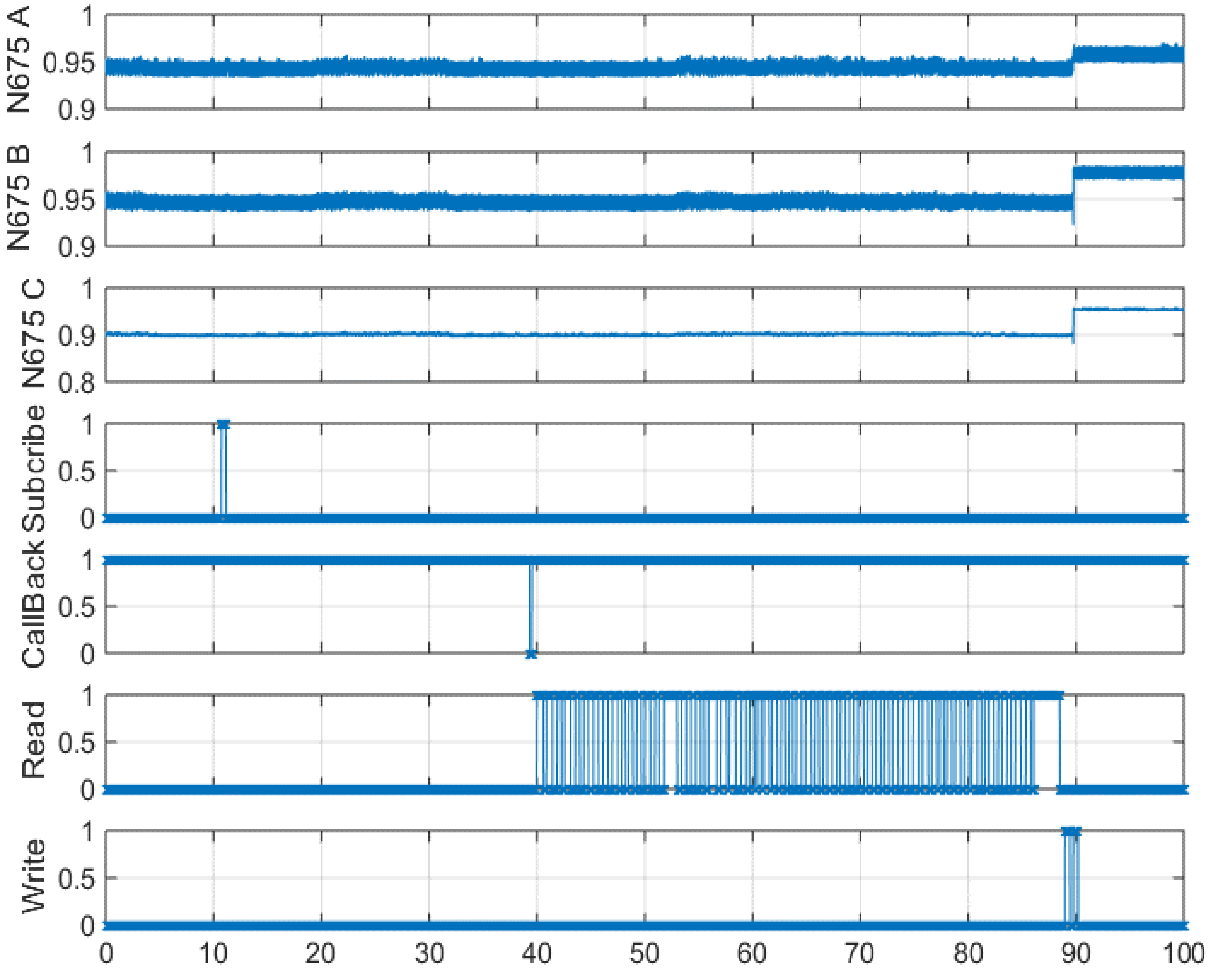

4.1. Case 1: Agent-Based Voltage Control

4.2. Case 2: Fuzzy Control for Home Microgrids

4.3. Performance Analysis of the API

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Nomenclature

| Term | Definition |
| --- | --- |
| Actor | Real or simulated device or entity that interacts concurrently with others in a system |
| Agent | A software component that exhibits authority and independence to make decisions |
| Application Programming Interface (API) | A set of well-defined rules for communication between applications that depend on it and the software that provides its services |
| Client-server model | A distributed application arrangement composed of requesters (clients) and providers (servers) of a service |
| Computing Platform (Software) Stack | Software components providing access to resources of the host computing platform |
| Java™ Agent Development Environment (JADE) | An environment for the development of agent-based systems with the Java™ programming language |
| JScience | An open software library for math operations using Java™ |
| Java™ Universal Network/Graph Framework (JUNG) | A framework for representing and exchanging network/graph information |
| Remote Procedure Call (RPC) | A mechanism to make an API provided by a remote host accessible to local applications by means of procedure invocations |


© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Vélez-Rivera, C.J.; Andrade, F.; Arzuaga-Cruz, E.; Irizarry-Rivera, A. Gorilla: An Open Interface for Smart Agents and Real-Time Power Microgrid System Simulations. Inventions 2018, 3, 58. https://doi.org/10.3390/inventions3030058
