Subjective Affective Responses to Natural Scenes Require Understanding, Not Spatial Frequency Bands

Abstract

1. Introduction

2. Method

2.1. Participants

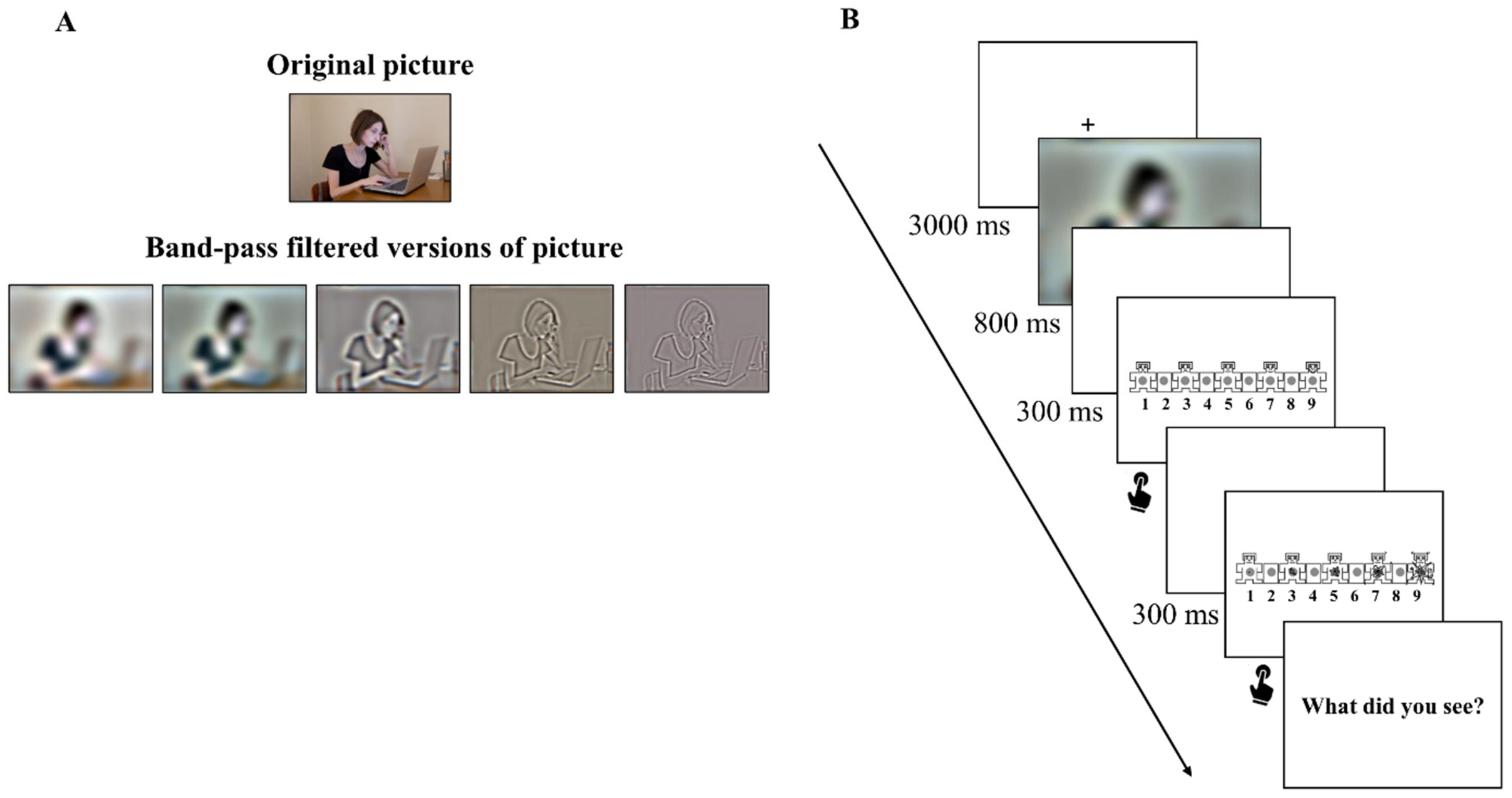

2.2. Stimuli and Equipment

2.3. Procedure

2.4. Data Analysis

3. Results

3.1. Identification Performance

3.2. Affective Response

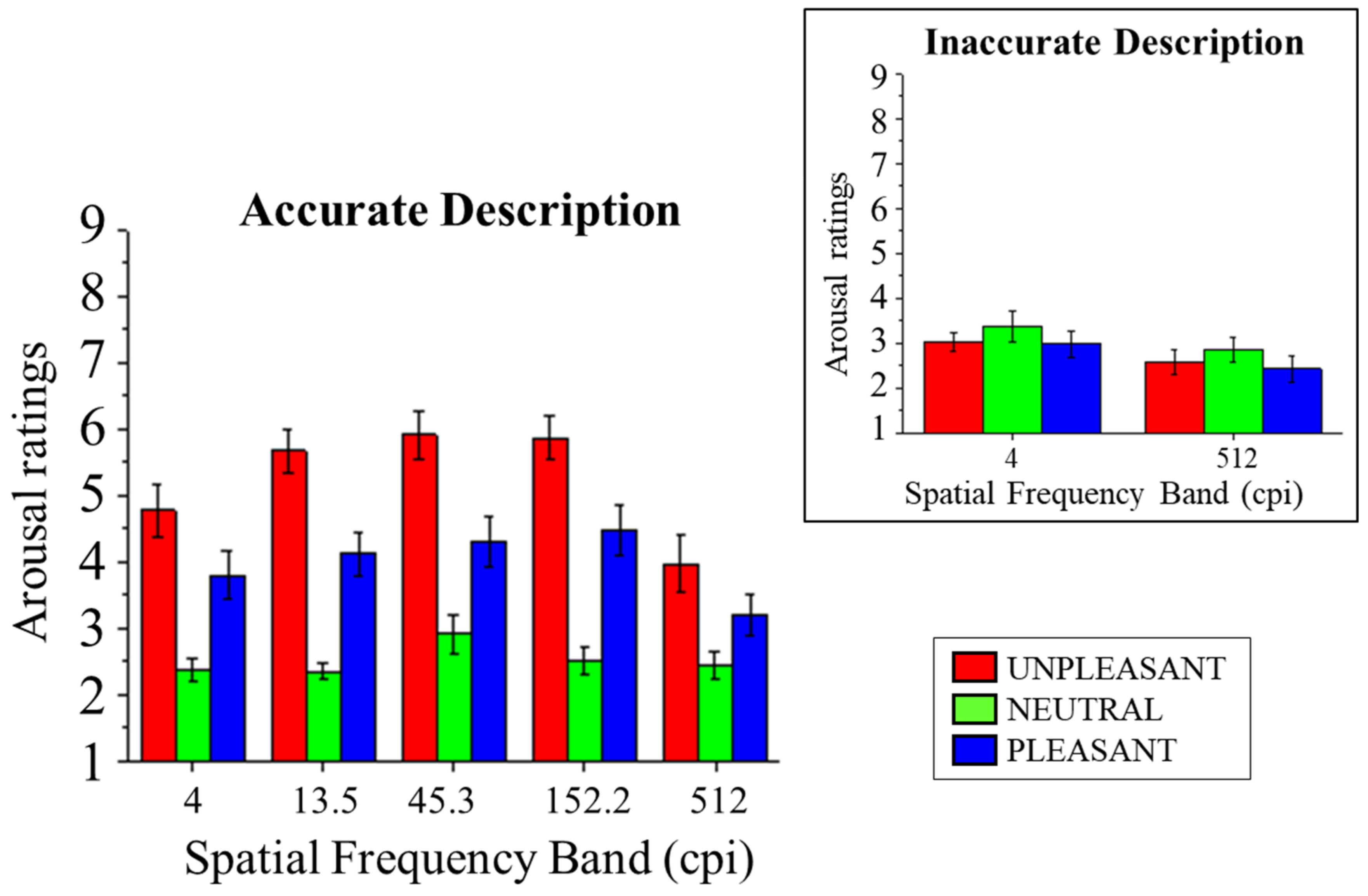

3.2.1. Arousal Ratings

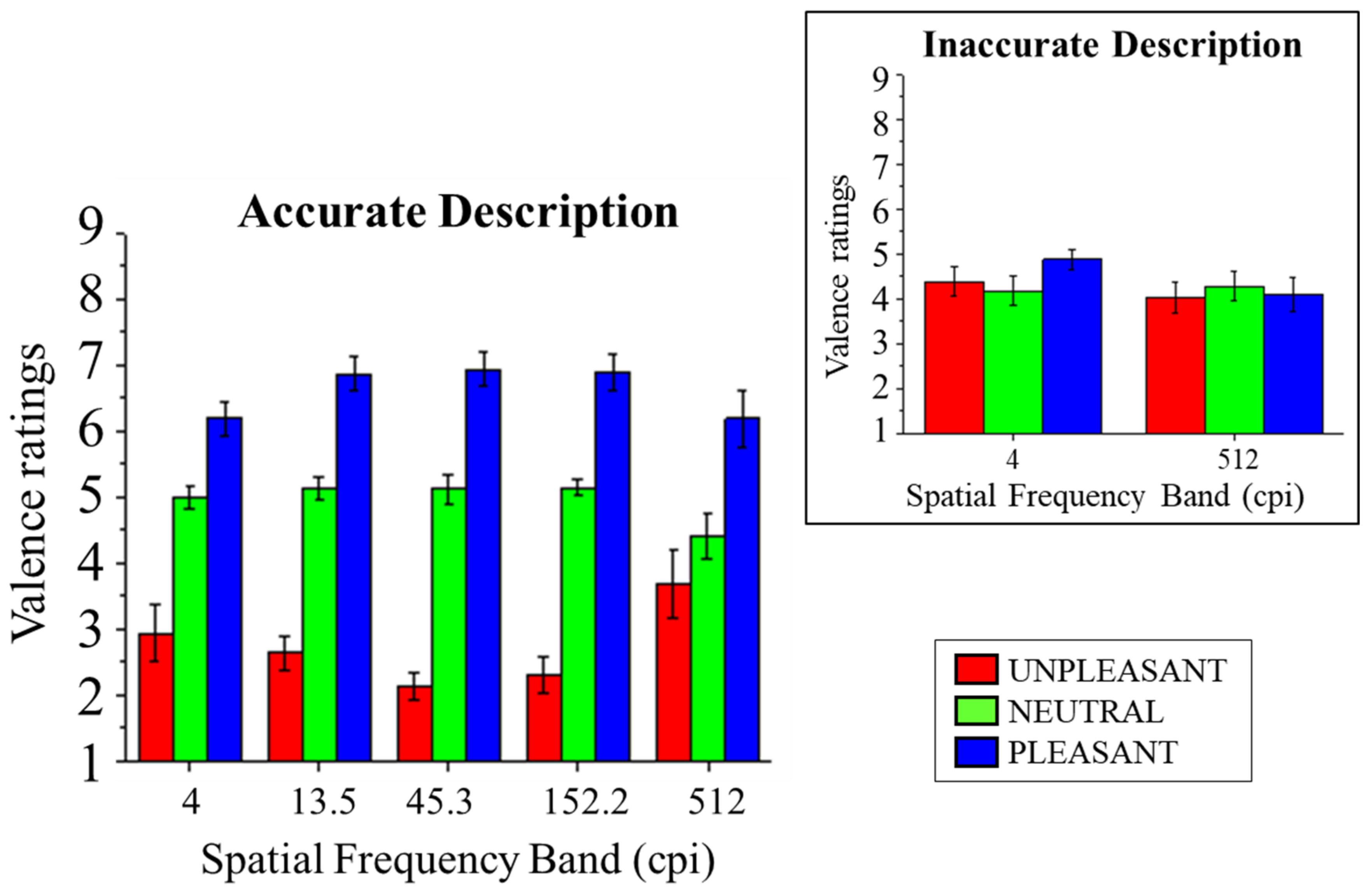

3.2.2. Valence Ratings

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Spatial Frequency Band | Description Accuracy | Emotional Content: Unpleasant | Emotional Content: Neutral | Emotional Content: Pleasant |
|---|---|---|---|---|
| Lowest (4 cpi) | Inaccurate | 78 | 38 | 52 |
| | Accurate | 12 | 52 | 38 |
| 13.5 cpi | Inaccurate | 36 | 6 | 2 |
| | Accurate | 54 | 84 | 88 |
| 54.3 cpi | Inaccurate | 18 | 1 | 1 |
| | Accurate | 72 | 89 | 89 |
| 152.2 cpi | Inaccurate | 26 | 3 | 0 |
| | Accurate | 64 | 87 | 90 |
| Highest (512 cpi) | Inaccurate | 84 | 51 | 74 |
| | Accurate | 6 | 39 | 16 |
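As a reading aid, the table can be converted into the share of accurate descriptions per cell. The sketch below is illustrative only and is not the authors' analysis. It assumes that each Inaccurate/Accurate pair holds counts for the same spatial frequency band and emotional content (every pair in the table sums to 90). The names `counts`, `accuracy_share`, and `shares` are hypothetical.

```python
# Counts copied from the table: (inaccurate, accurate) per band and content.
# Assumption: the two rows of each band are counts over the same set of items.
counts = {
    "Lowest (4 cpi)": {"Unpleasant": (78, 12), "Neutral": (38, 52), "Pleasant": (52, 38)},
    "13.5 cpi": {"Unpleasant": (36, 54), "Neutral": (6, 84), "Pleasant": (2, 88)},
    "54.3 cpi": {"Unpleasant": (18, 72), "Neutral": (1, 89), "Pleasant": (1, 89)},
    "152.2 cpi": {"Unpleasant": (26, 64), "Neutral": (3, 87), "Pleasant": (0, 90)},
    "Highest (512 cpi)": {"Unpleasant": (84, 6), "Neutral": (51, 39), "Pleasant": (74, 16)},
}


def accuracy_share(inaccurate: int, accurate: int) -> float:
    """Return the fraction of descriptions in a cell that were accurate."""
    return accurate / (inaccurate + accurate)


# Share of accurate descriptions for every band and emotional content.
shares = {
    band: {content: accuracy_share(*pair) for content, pair in row.items()}
    for band, row in counts.items()
}

if __name__ == "__main__":
    for band, row in shares.items():
        print(band, {content: round(value, 2) for content, value in row.items()})
```

Read this way, accurate descriptions are least frequent in the lowest and highest bands and most frequent in the intermediate ones, which is the pattern the raw counts already show.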

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Mastria, S.; Codispoti, M.; Tronelli, V.; De Cesarei, A. Subjective Affective Responses to Natural Scenes Require Understanding, Not Spatial Frequency Bands. Vision 2024, 8, 36. https://doi.org/10.3390/vision8020036
