Vision-Based Structural Monitoring: Application to a Medium-Span Post-Tensioned Concrete Bridge under Vehicular Traffic

Abstract

1. Introduction

- Displacements can be measured even in the very common case where no stationary points exist close to the monitored structure, e.g., bridges over valleys or rivers, where displacement transducers cannot be installed.

- Comprehensive information can be obtained from a single video camera: displacements in the plane orthogonal to the optical axis can be extracted for one or more selected portions of the acquired images, allowing deformations and rotations to be identified from the planar translational displacement field. The third dimension can be added with a second video camera placed at a suitable vantage point.

- Efficient and effective video processing algorithms for displacement extraction are available in the libraries of many programming languages, permitting a relatively easy implementation of dedicated structural monitoring software that could also integrate the simultaneous use of video processing and contact sensors, e.g., accelerometers, strain gauges, displacement transducers, and inclinometers.

- Two different methodologies can coexist and be combined in the same experimental campaign: (1) real-time processing of images for displacement extraction of selected points (only extracted displacement time histories can be stored in this case without the necessity to save space-consuming videos); (2) post-processing for the extraction of displacements without necessarily pre-defining the specific points of attention in the video (the entire video footage is stored in this case for subsequent analysis).

- Possible integration within the same video hardware of different applications such as structural static and dynamic monitoring together with inspection, surface damage detection, and integrity evaluation, e.g., [5,31,32,33,34], as well as security and/or traffic surveillance, e.g., [7], opening the way to cost-effective multi-purpose permanent installations.

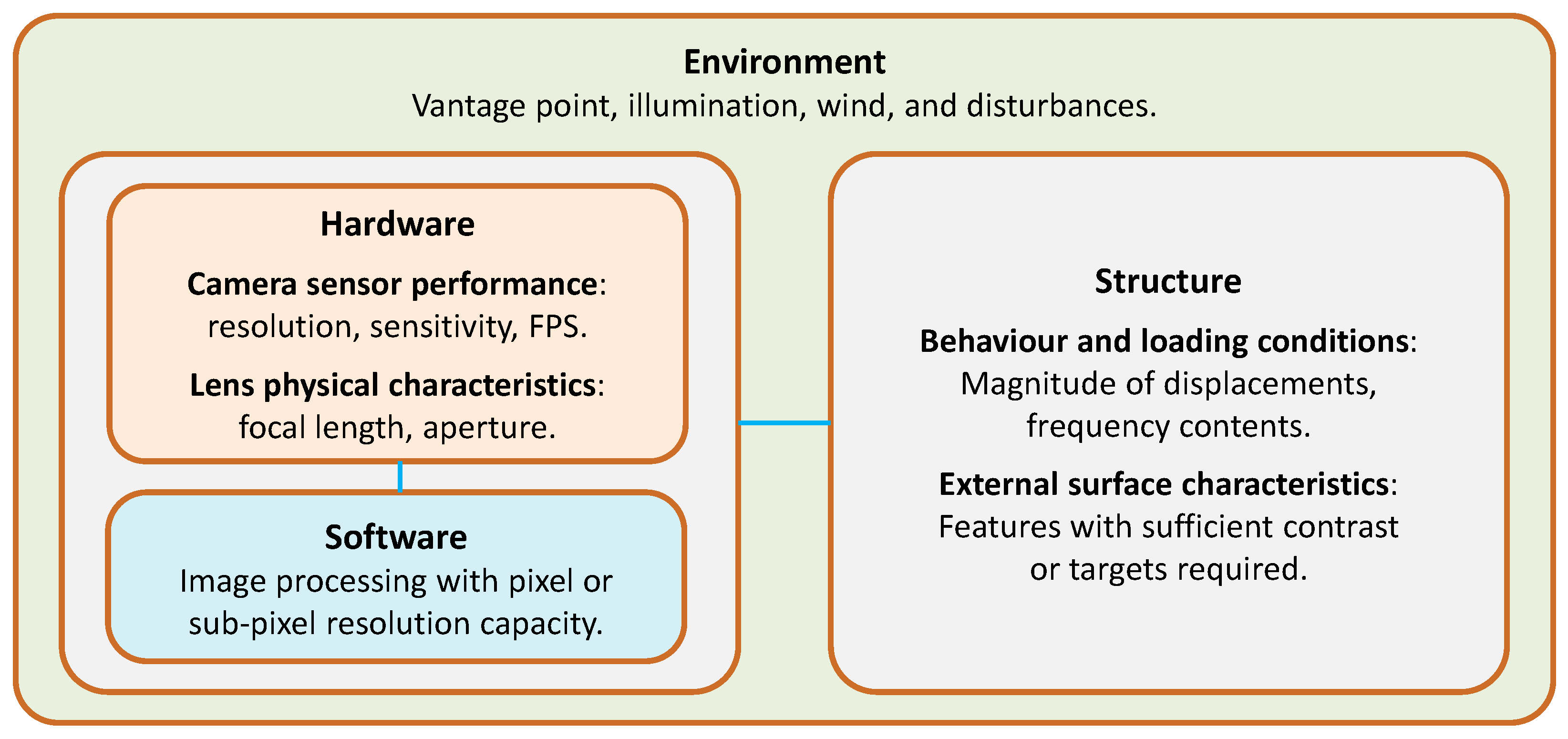

- The absolute displacement (spatial) resolution depends on the environment (distance of the camera from the target) as well as on the hardware and software specifications/settings.

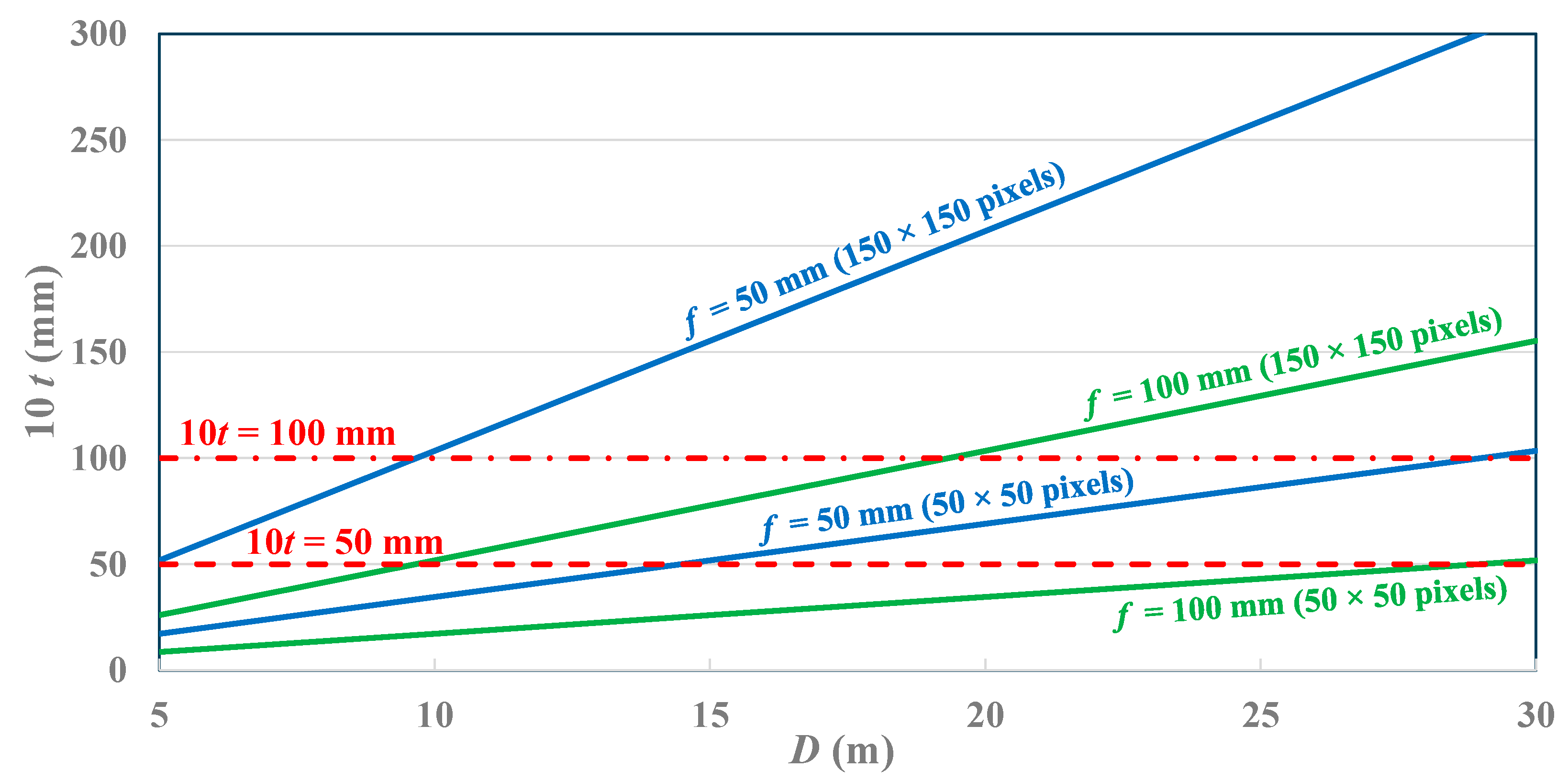

- The scale factor (SF), defined as the ratio between the physical dimension and pixel number, is determined by the sensor pixel dimensions (hardware specifications/settings), the distance of the camera from the target (environment), and the focal length of the adopted lens (hardware specification) in the case of an optical axis perpendicular to the surface of the structure being monitored.

- Although an optical axis perpendicular to the structural surface is very difficult to achieve in many real conditions, studies on the influence of the tilt angle [1] showed that errors in displacement estimation are not significant in practical applications, e.g., about 1% for a tilt angle of 30° when using a 50 mm focal length. In addition, errors were found to decrease as the focal length increases. Accordingly, inaccuracy from optical tilt angles can generally be neglected.

- More important than the absolute displacement resolution is the resolution relative to the magnitude of the displacements being monitored in the structure, which depends on the structural stiffness properties and loading conditions.

- The frequency of data acquisition is determined by the hardware (sensor sensitivity and lens light-gathering ability) and by the environment (illumination conditions). For example, for a given illumination intensity, a sensor with higher sensitivity allows a shorter exposure time and, hence, more frames per second (FPS) can be recorded; the same holds for a lens with a smaller focal ratio, i.e., the ratio between the focal length and aperture diameter, which gathers more light and, accordingly, permits a shorter exposure time.

- High FPS values might be incompatible with real-time tracking of displacements, depending on the speed of the processing software and hardware being used. In that case, structural monitoring can rely only on the post-processing of stored video footage.

- The type of targets, i.e., artificial (high-contrast markers) or natural (surface features), can have an influence on time resolution (a higher contrast permits a shorter exposure time and hence a higher FPS) as well as on spatial resolution (properly dimensioned targets might maximise SF), with a non-negligible difference in terms of the final accuracy and repeatability of the measurements.

- Image distortions could be induced by unfavourable environmental conditions such as heat haze; these distortions are inevitably amplified by the distance between the camera and the target (again an environmental factor). On the other hand, image distortions induced by the hardware have negligible influence, especially in the case of a high quality fixed-focal lens.

- Other adverse environmental conditions include vibrations transmitted to the camera by the ground through the tripod, by the connecting cables, or directly by the wind or other sources of noise; the negative influence of vibrations induced in the video camera is amplified by the distance of the camera from the target and by longer focal lengths. Inevitably, noise induced by the surrounding environment is expected to affect measurements of small displacements the most.

- In concrete bridges, the deflections expected are generally lower and their frequency contents generally higher as compared to those found in steel bridges investigated in previous studies; thus, concrete bridges are a more demanding testbed for vision-based monitoring.

- Many concrete bridges built in the second half of the 20th century are approaching, or have already reached, the end of their service life; thus, the demand for their monitoring is expected to rise rapidly in the near future [41]. Accordingly, a cost-effective monitoring solution providing comprehensive information is of strategic importance for the security of our infrastructure [42,43,44,45,46,47,48,49,50].

- Analysis of the performance of a cost-effective vision-based monitoring system as compared to a system based on contact sensors, considering the influence of the operator and hardware settings.

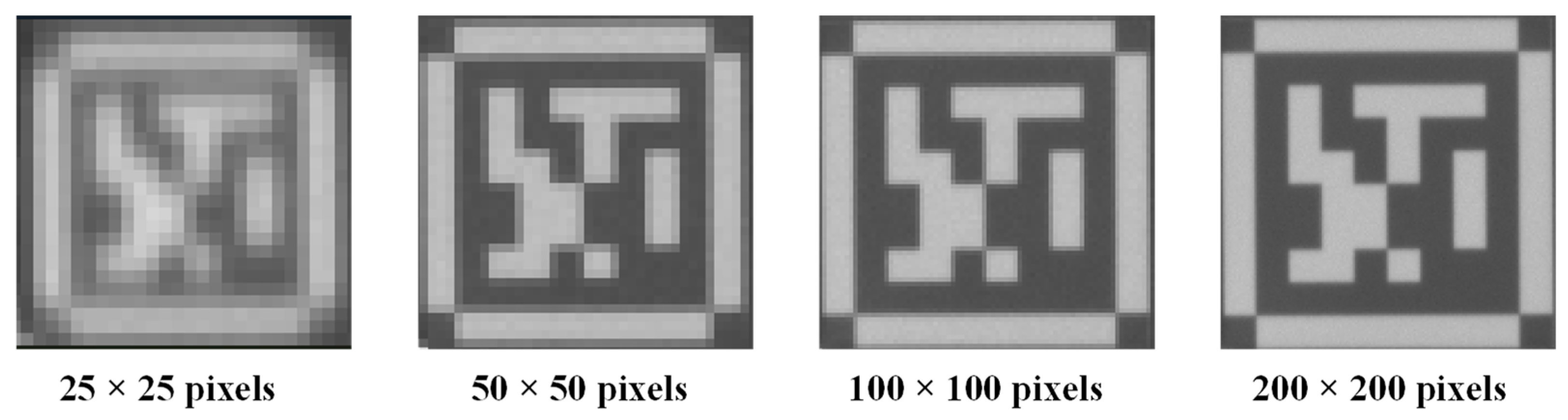

- Indications for the optimal design of targets and the definition of the target area as a function of sensor resolution, distance of the camera from the target, and available focal lengths.

- Identification of the physical and technological limits of a vision-based structural monitoring system in real-world field conditions.

2. Materials and Methods

2.1. Image Acquisition and Processing Procedures

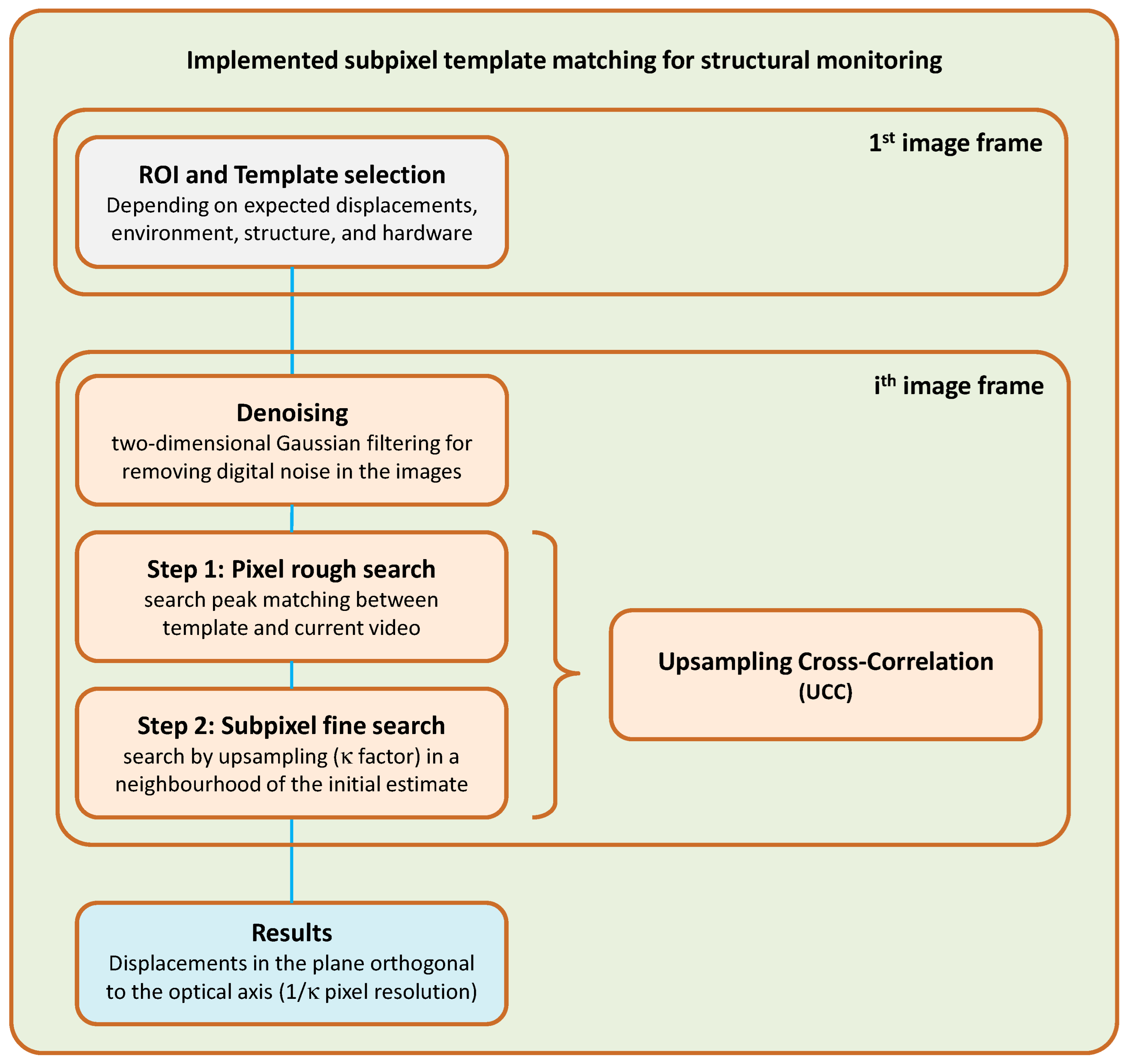

- A portion of the image frame, called the Region of Interest (ROI), is selected by the user and within the ROI a template is chosen. The motion of the template will be tracked only in the ROI; hence, the margin between the template and ROI must contain the maximum displacement that will be experienced during monitoring.

- Digital noise in each image frame is reduced prior to the application of the template matching algorithm through two-dimensional Gaussian filtering as implemented in MATLAB [51]. Such image denoising was found to be very beneficial in reducing the noise of the extracted displacement time histories.

- Cross-correlation peak matching is performed to identify the displacement of the template within the ROI in two steps: (1) a pixel-level rough search providing a preliminary estimation of the displacements with pixel resolution; (2) a subpixel fine search within a neighbourhood of the initial estimation achieving 1/κ pixel resolution where κ is the assigned upsampling factor (integer value). Analytical details can be found in [1,35].

- The extracted displacements in two orthogonal directions in the plane perpendicular to the optical axis are given as the final output.
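The two-step search listed above can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the authors' MATLAB implementation: it registers two equally sized frames instead of a template inside a larger ROI, it omits the Gaussian denoising step, and it refines the peak by evaluating the cross-correlation on a 1/κ grid with a matrix-multiply DFT in the spirit of [35]. The function name `ucc_shift` and its parameters are hypothetical.

```python
import numpy as np


def ucc_shift(ref, cur, upsample=100, half_width=1.5):
    """Estimate the (row, col) shift of `cur` relative to `ref` in pixels.

    Step 1 finds the cross-correlation peak with pixel resolution.
    Step 2 re-evaluates the correlation on a grid of spacing 1/upsample
    within +/- half_width pixels of that peak (hypothetical default).
    """
    ref = np.asarray(ref, dtype=float)
    cur = np.asarray(cur, dtype=float)
    rows, cols = ref.shape

    # Cross-power spectrum; its inverse FFT is the circular cross-correlation.
    spectrum = np.fft.fft2(cur) * np.conj(np.fft.fft2(ref))

    # Step 1: pixel-level rough search.
    cc = np.fft.ifft2(spectrum).real
    py, px = np.unravel_index(np.argmax(cc), cc.shape)
    if py > rows // 2:  # map wrapped indices to negative shifts
        py -= rows
    if px > cols // 2:
        px -= cols
    if upsample <= 1:
        return float(py), float(px)

    # Step 2: subpixel fine search around the rough estimate.
    n = int(np.ceil(half_width * upsample))
    offsets = np.arange(-n, n + 1) / upsample
    ys = py + offsets
    xs = px + offsets
    ky = np.fft.fftfreq(rows)  # cycles per pixel along rows
    kx = np.fft.fftfreq(cols)  # cycles per pixel along columns
    ey = np.exp(2j * np.pi * np.outer(ys, ky))  # (len(ys), rows)
    ex = np.exp(2j * np.pi * np.outer(kx, xs))  # (cols, len(xs))
    fine = (ey @ spectrum @ ex).real
    iy, ix = np.unravel_index(np.argmax(fine), fine.shape)
    return float(ys[iy]), float(xs[ix])


if __name__ == "__main__":
    # Synthetic check: a Gaussian blob moved by a known subpixel amount.
    y, x = np.mgrid[0:64, 0:64]
    blob = lambda cy, cx: np.exp(-((y - cy) ** 2 + (x - cx) ** 2) / 50.0)
    dy, dx = ucc_shift(blob(32.0, 32.0), blob(34.37, 30.58), upsample=100)
    print(f"estimated shift: {dy:.2f} px (rows), {dx:.2f} px (cols)")
```

Multiplying the returned pixel shifts by the scale factor SF converts them to physical displacements in the plane perpendicular to the optical axis.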

2.2. Vision-Based Hardware

2.3. Scale Factor and Resolution
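The Introduction defines the scale factor (SF) as the ratio between physical dimension and pixel number, governed by the sensor pixel size, the camera-to-target distance, and the lens focal length when the optical axis is perpendicular to the monitored surface. A minimal sketch follows, assuming the usual pinhole-camera relation SF = D · d_pixel / f; the function name and the 12 m distance are hypothetical, while the pixel size and focal lengths are taken from the hardware table.

```python
def scale_factor(distance_mm, focal_length_mm, pixel_size_um):
    """Return the scale factor in mm/pixel.

    Assumes a pinhole camera with the optical axis perpendicular to the
    target surface: SF = D * d_pixel / f.
    """
    if focal_length_mm <= 0 or distance_mm <= 0 or pixel_size_um <= 0:
        raise ValueError("distance, focal length and pixel size must be positive")
    pixel_size_mm = pixel_size_um * 1e-3  # micrometres to millimetres
    return distance_mm * pixel_size_mm / focal_length_mm


if __name__ == "__main__":
    # 3.45 um pixels, 50 mm and 100 mm lenses, hypothetical 12 m distance.
    for f in (50.0, 100.0):
        sf = scale_factor(12_000.0, f, 3.45)
        print(f"f = {f:.0f} mm -> SF = {sf:.4f} mm/pixel")
```

Under this relation, doubling the focal length at a fixed distance halves SF, which is the trend visible between the 50 mm and 100 mm rows of the acquisition settings table.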

2.4. Target Design

3. Experimental Campaign

3.1. Case Study Bridge

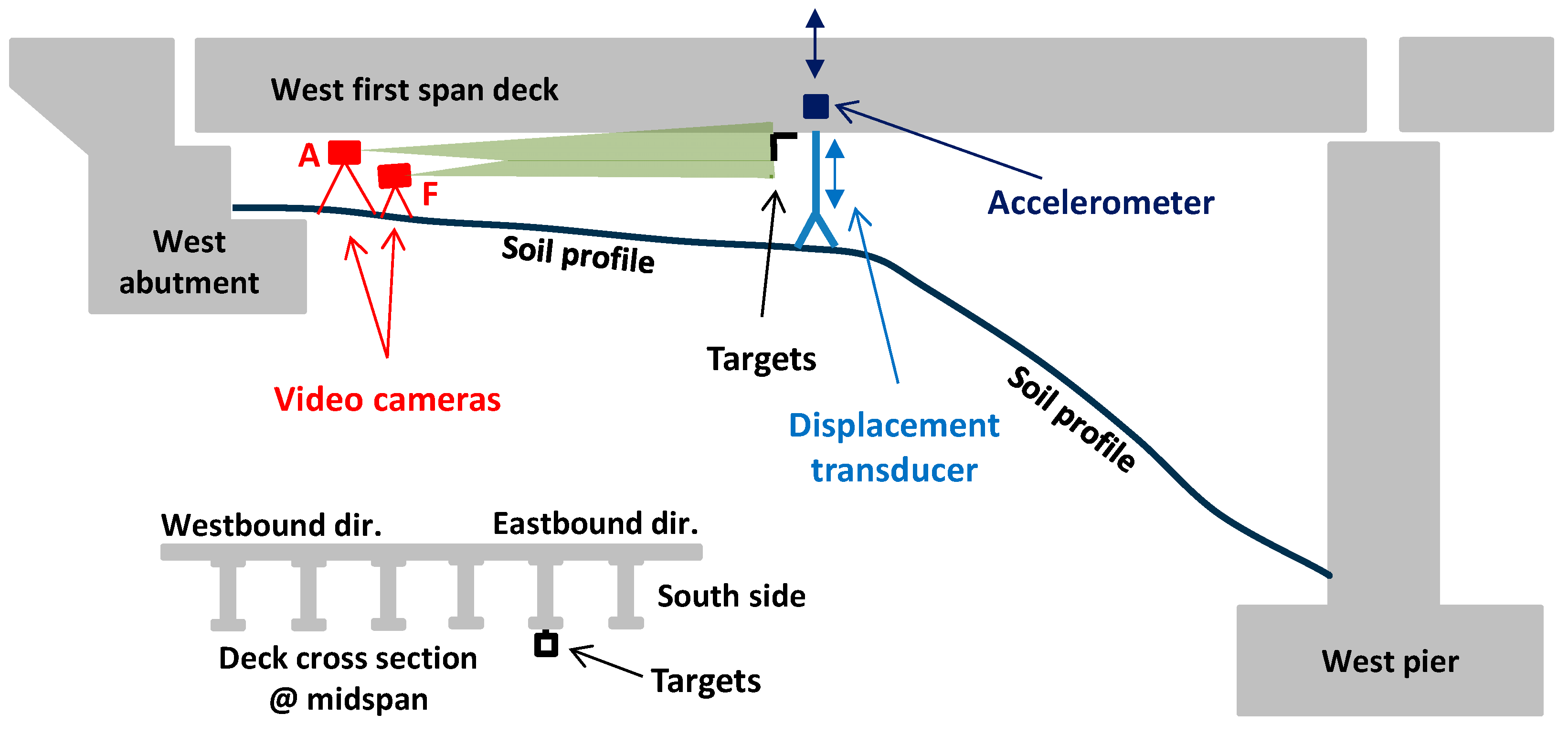

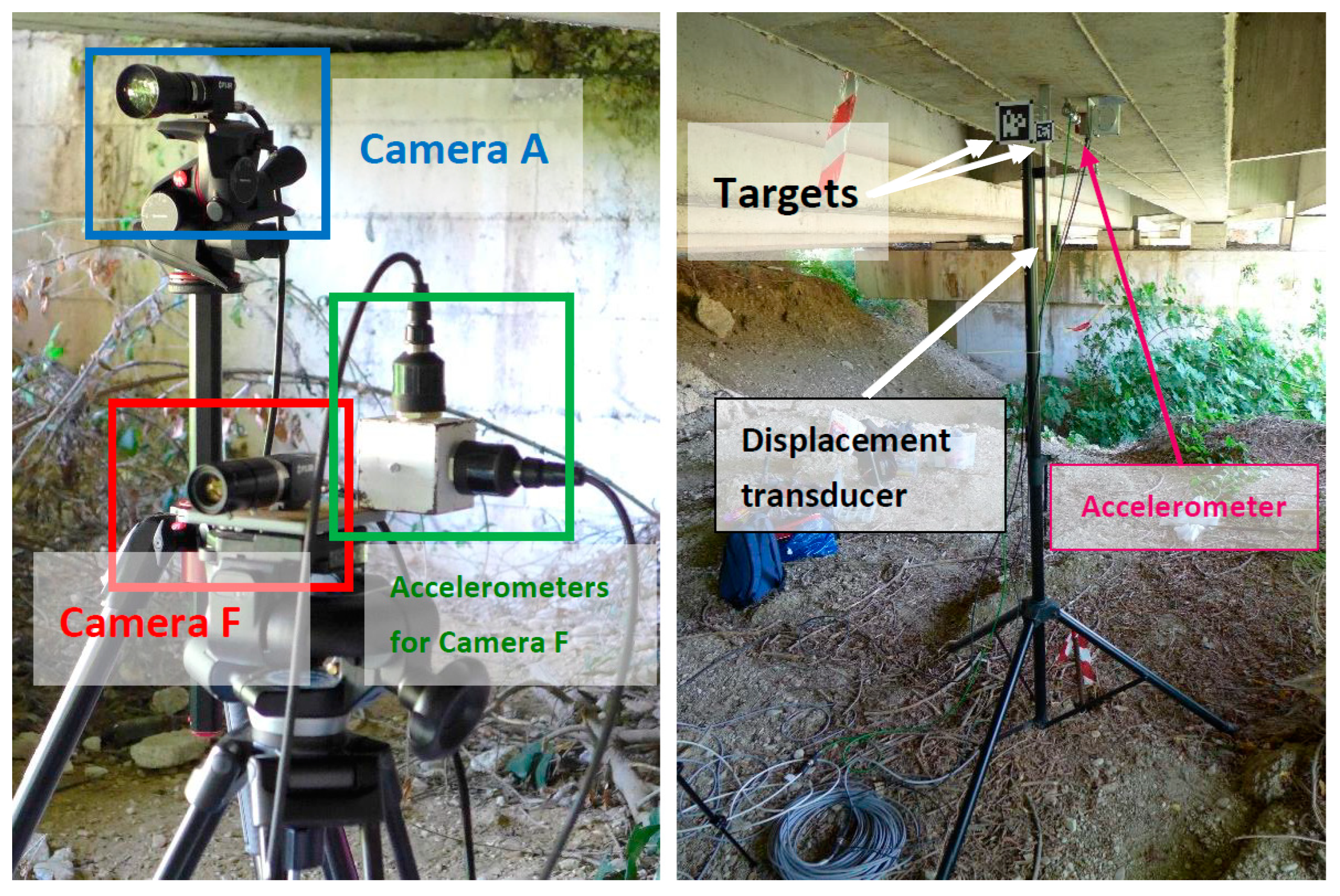

3.2. Vision-Based Hardware Installation

3.3. Contact-Based Hardware Installation

3.4. Camera Calibration and Settings

3.5. Field Measurements

3.6. Signal Alignment

4. Results

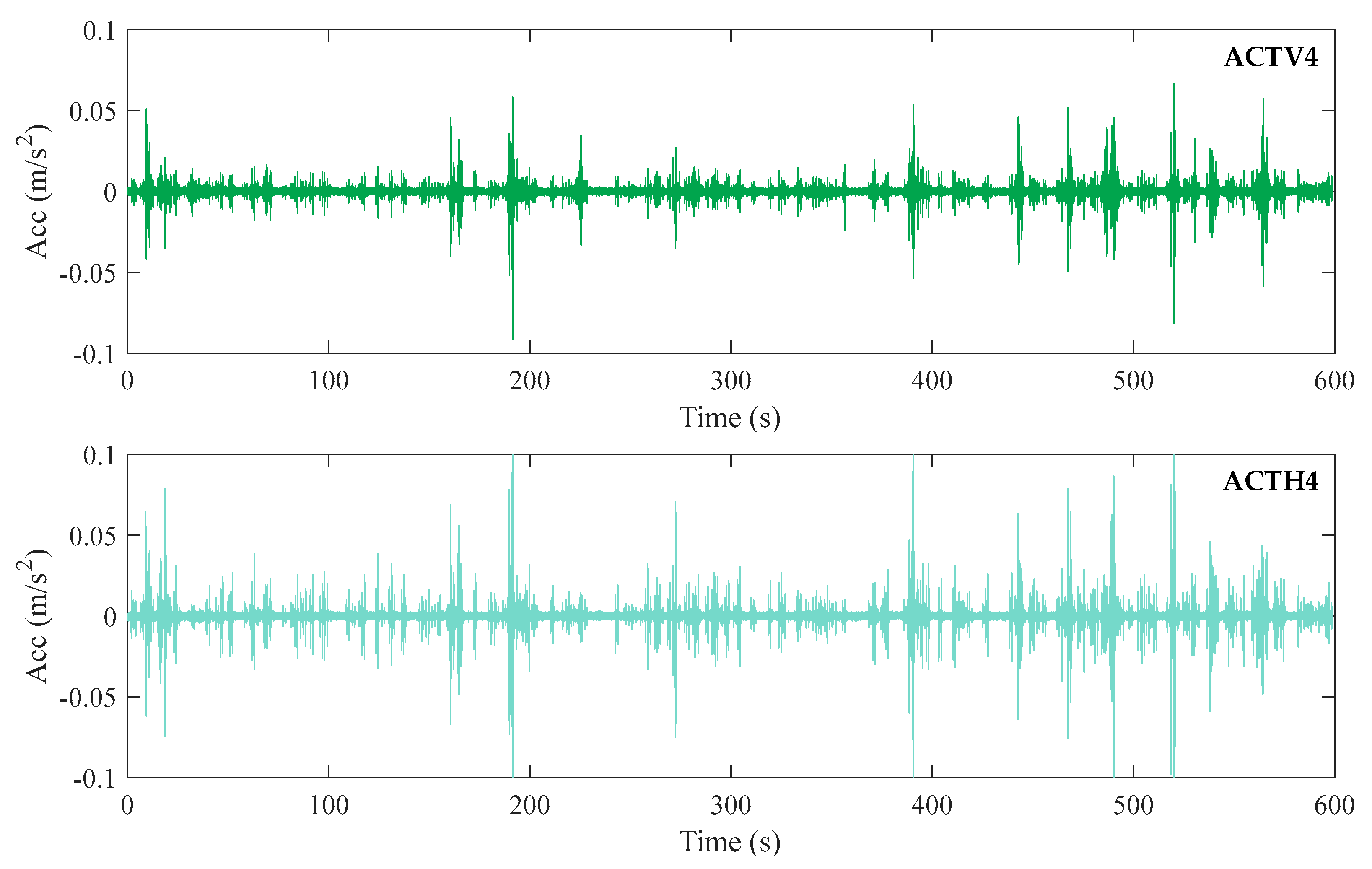

4.1. Environmental Conditions

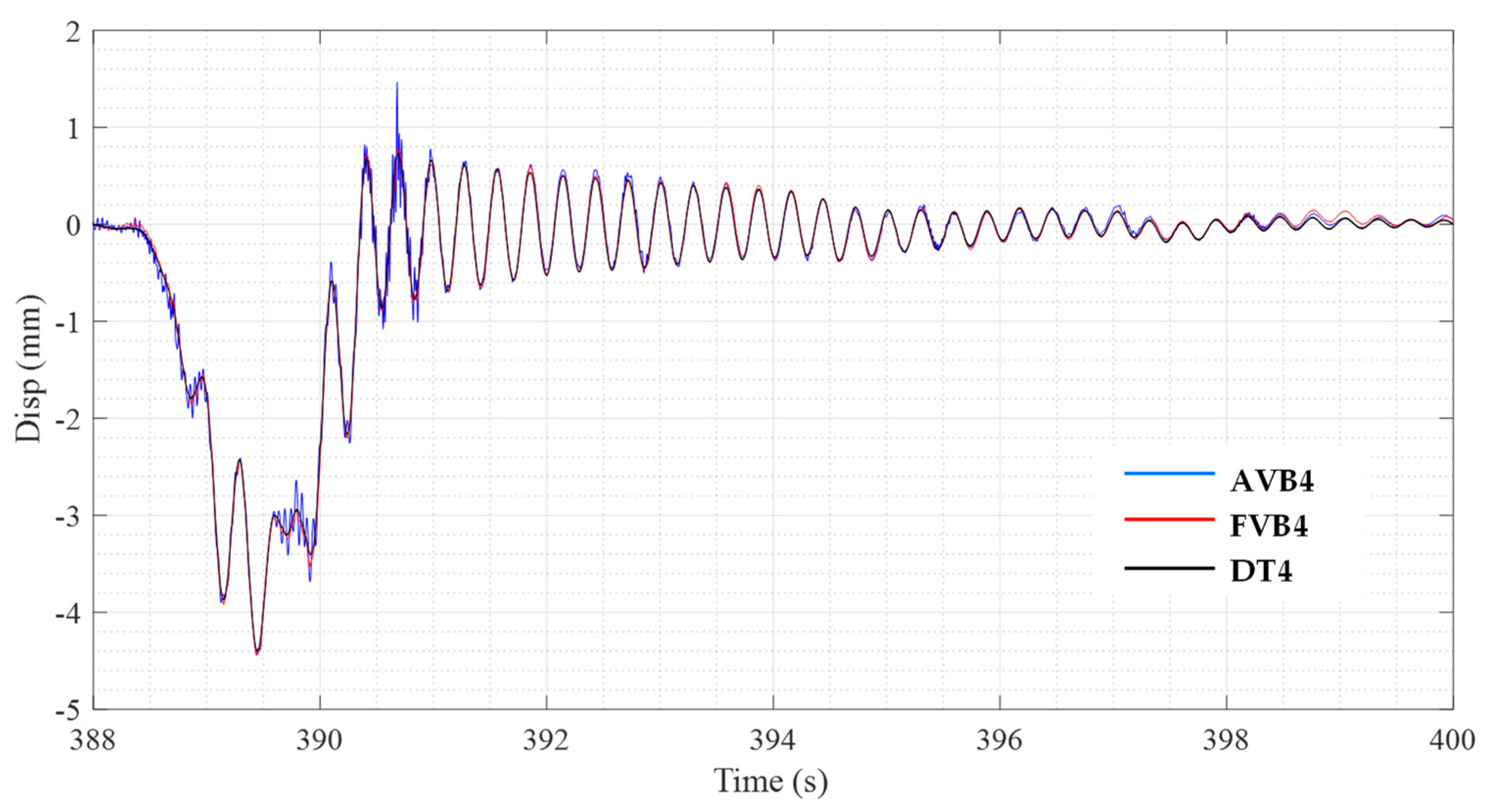

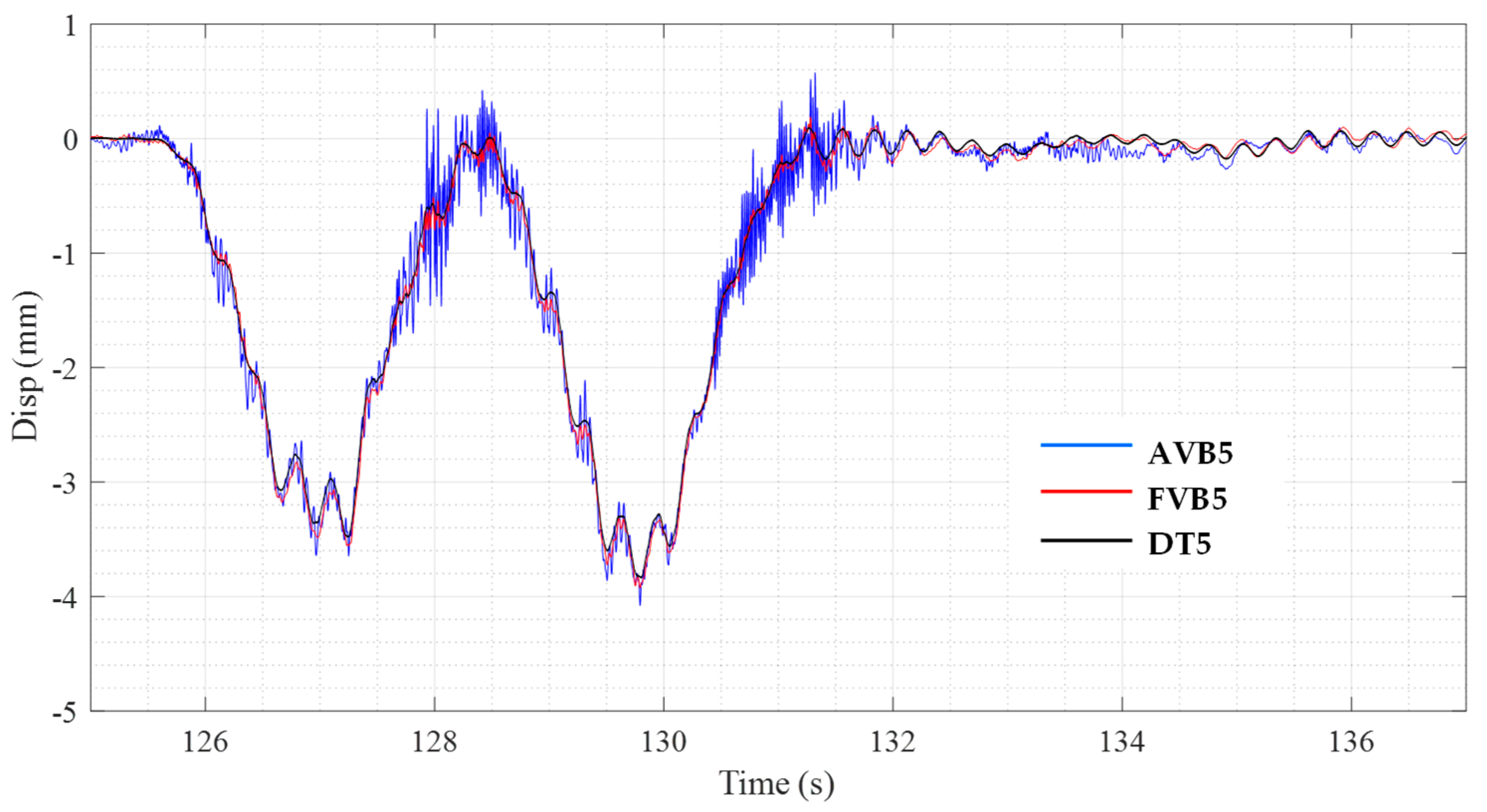

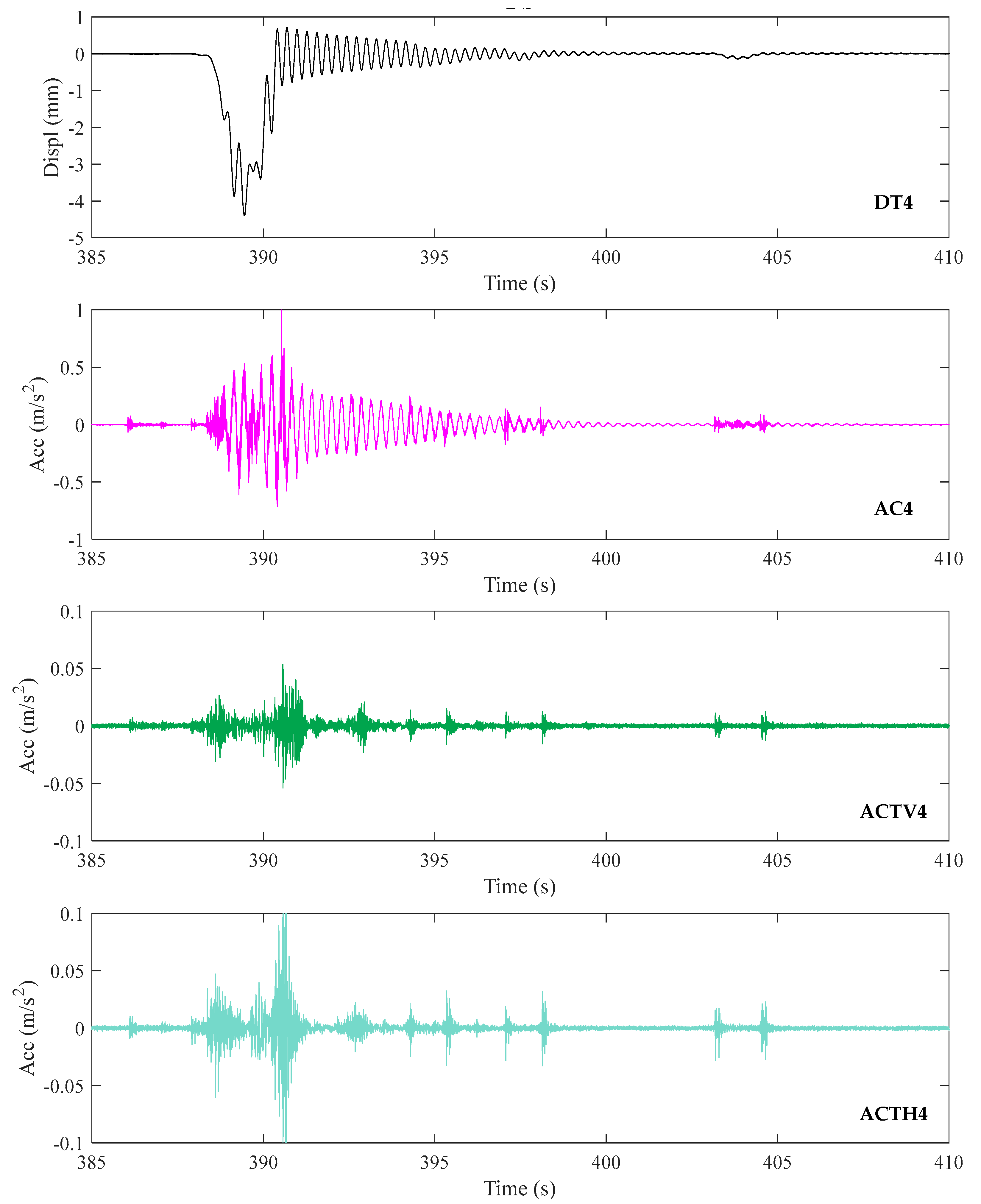

4.2. Analysis of Measured Displacements

4.3. Analysis of Measured Accelerations

5. Discussion

- Cost-effective hardware (industrial camera, lens, artificial targets) together with software based on an upsampling cross-correlation (UCC) template matching algorithm can deliver accurate real-time measurements of the deflections of medium-span post-tensioned concrete bridges under vehicular traffic as well as precise estimations of their first mode frequency.

- A simple method was proposed for the design of artificial targets based on just one design parameter (which defines both their global and local geometry) determined as a function of the distance between the camera and the target, the focal length of the adopted lens, and the pixel dimension of the camera sensor.

- The proposed artificial target, in addition to its main function of serving as a high-contrast surface for the UCC template matching algorithm, was also conceived to allow a very simple camera calibration and to facilitate the selection of the region of interest (ROI) and the template within the ROI. This helps standardise the camera setting procedures, to the benefit of measurement replicability and ease of use.

- Camera and software settings were varied to understand their effects on the quality of the measurements: one parameter influencing the time resolution, i.e., image acquisition frequency indicated by the acquired frames per second (FPS); three parameters influencing the displacement resolution, i.e., target size, focal length of the adopted lens, and upsampling factor in the UCC template matching algorithm.

- Increasing FPS was expected to improve the quality of the measurements under dynamic loading. This was not the case because of the high-frequency noise introduced by vibrations in the tripod; thus, no clear benefits were obtained by increasing FPS in the range 30 to 240. This conclusion might change if mechanical solutions to reduce such noise are implemented.

- Changes in the parameters influencing the displacement resolution were made within the optimal range of application of the proposed target design. No major benefit was clearly identified in lowering the minimum displacement that could be theoretically measured. However, the considered minimum displacement values were much lower than the peak displacements that were evaluated and lower than the noise induced by the vibrations in the tripod.

- Differences in the selection of the ROI and template, as well as differences in the computation of the pixel counts for camera calibration (a single measurement or the average of four measurements), made within the guidance provided by the adopted artificial target, had no noticeable effects on the measurements.

- The previous two points (no influence of parameters defining displacement resolution within the range imposed by the used target, camera calibration, ROI and template selection) show the effectiveness of the proposed target design in enforcing the replicability of the measurements in vision-based monitoring.

- The geometric relations derived between the target size and distance, the lens focal length, and the pixel size of the camera sensor can also be used to assess the suitability of a given hardware setting or to select the most appropriate hardware among those available.

- Possible future developments of vision-based monitoring of post-tensioned concrete bridges are expected to deal with the identified critical aspects: illuminance of the target and vibration limitation of the video cameras.

- Targets might be improved using highly reflective materials to avoid or reduce the use of lamps, or efficient and cost-effective retro-illuminated solutions.

- Ways to limit the negative effects of vibrations transmitted to the video camera could be developed from different perspectives: mechanical devices (for example, decoupling connections or tuned tripods) or software algorithms (noise cancellation based on multi-point image tracking with the inclusion of stationary points).

- A vision-based system such as the one adopted here, relying on real-time image processing to extract displacement time histories without the need to store large, memory-consuming video footage, might be suitable for longer monitoring campaigns or permanent monitoring. However, pilot applications and dedicated studies are required to evaluate the long-term performance of a vision-based system and how day-and-night as well as seasonal changes in light conditions can be conveniently handled.
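The software route mentioned in the list above, noise cancellation with the inclusion of stationary points, can be illustrated in its simplest form. This is a sketch of a possible future development, not something tested in this study. It assumes a second tracked region on a stationary object at a distance comparable to the target, so that camera motion produces the same apparent pixel shift in both regions; the function name and arguments are hypothetical.

```python
import numpy as np


def remove_camera_motion(target_px, stationary_px, sf_mm_per_px):
    """Subtract the apparent motion of a stationary point from the target.

    target_px, stationary_px: tracked pixel displacements, same length,
    sampled at the same instants. Returns corrected displacements in mm.
    """
    target = np.asarray(target_px, dtype=float)
    stationary = np.asarray(stationary_px, dtype=float)
    if target.shape != stationary.shape:
        raise ValueError("both time histories must have the same shape")
    # Any motion seen on the stationary point is attributed to the camera.
    return (target - stationary) * sf_mm_per_px


if __name__ == "__main__":
    t = np.arange(600) / 120.0                       # 5 s at 120 FPS
    true_px = 8.0 * np.sin(2 * np.pi * 3.48 * t)     # structural motion
    camera_px = 0.5 * np.sin(2 * np.pi * 25.0 * t)   # tripod vibration
    corrected = remove_camera_motion(true_px + camera_px, camera_px, 0.42)
    print(f"peak corrected displacement: {np.max(np.abs(corrected)):.3f} mm")
```

If the stationary point sits at a markedly different distance from the camera, rotations of the camera would no longer cancel by plain subtraction and a per-region scaling would be needed.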

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Feng, D.; Feng, M.Q. Computer vision for SHM of civil infrastructure: From dynamic response measurement to damage detection—A review. Eng. Struct. 2018, 156, 105–117.

2. Dong, C.Z.; Catbas, F.N. A review of computer vision–based structural health monitoring at local and global levels. Struct. Health Monit. 2021, 20, 692–743.

3. Feng, D.; Feng, M.Q. Computer Vision for Structural Dynamics and Health Monitoring, 1st ed.; Wiley: Hoboken, NJ, USA, 2021; pp. 1–234.

4. Zona, A. Vision-based vibration monitoring of structures and infrastructures: An overview of recent applications. Infrastructures 2021, 6, 4.

5. Luo, K.; Kong, X.; Zhang, J.; Hu, J.; Li, J.; Tang, H. Computer vision-based bridge inspection and monitoring: A review. Sensors 2023, 23, 7863.

6. Bhowmick, S.; Nagarajaiah, S. Identification of full-field dynamic modes using continuous displacement response estimated from vibrating edge video. J. Sound Vib. 2020, 489, 115657.

7. Aliansyah, Z.; Shimasaki, K.; Senoo, T.; Ishii, I.; Umemoto, S. Single-camera-based bridge structural displacement measurement with traffic counting. Sensors 2021, 21, 4517.

8. Kromanis, R.; Kripakaran, P. A multiple camera position approach for accurate displacement measurement using computer vision. J. Civ. Struct. Health Monit. 2021, 11, 661–678.

9. Lydon, D.; Lydon, M.; Kromanis, R.; Dong, C.-Z.; Catbas, N.; Taylor, S. Bridge damage detection approach using a roving camera technique. Sensors 2021, 21, 1246.

10. Obiechefu, C.B.; Kromanis, R. Damage detection techniques for structural health monitoring of bridges from computer vision derived parameters. Struct. Monit. Maint. 2021, 8, 91–110.

11. Voordijk, H.; Kromanis, R. Technological mediation and civil structure condition assessment: The case of vision-based systems. Civ. Eng. Environ. Syst. 2022, 39, 48–65.

12. Lydon, D.; Kromanis, R.; Lydon, M.; Early, J.; Taylor, S. Use of a roving computer vision system to compare anomaly detection techniques for health monitoring of bridges. J. Civ. Struct. Health Monit. 2022, 12, 1299–1316.

13. Nie, G.-Y.; Bodda, S.S.; Sandhu, H.K.; Han, K.; Gupta, A. Computer-vision-based vibration tracking using a digital camera: A sparse-optical-flow-based target tracking method. Sensors 2022, 22, 6869.

14. Bocian, M.; Nikitas, N.; Kalybek, M.; Kuzawa, M.; Hawryszków, P.; Bień, J.; Onysyk, J.; Biliszczuk, J. Dynamic performance verification of the Rędziński Bridge using portable camera-based vibration monitoring systems. Archiv. Civ. Mech. Eng. 2023, 23, 40.

15. Shajihan, S.A.V.; Hoang, T.; Mechitov, K.; Spencer, B.F. Wireless SmartVision system for synchronized displacement monitoring of railroad bridges. Comput. Aided Civ. Inf. 2022, 37, 1070–1088.

16. Ghyabi, M.; Timber, L.C.; Jahangiri, G.; Lattanzi, D.; Shenton, H.W., III; Chajes, M.J.; Head, M.H. Vision-based measurements to quantify bridge deformations. J. Bridge Eng. 2023, 28, 05022010.

17. Shao, Y.; Li, L.; Li, J.; Li, Q.; An, S.; Hao, H. Monocular vision based 3D vibration displacement measurement for civil engineering structures. Eng. Struct. 2023, 293, 116661.

18. Han, Y.; Wu, G.; Feng, D. Structural modal identification using a portable laser-and-camera measurement system. Measurement 2023, 214, 112768.

19. Rajaei, S.; Hogsett, G.; Chapagain, B.; Banjade, S.; Ghannoum, W. Vision-based large-field measurements of bridge deformations. J. Bridge Eng. 2023, 28, 04023075.

20. Yin, Y.; Yu, Q.; Hu, B.; Zhang, Y.; Chen, W.; Liu, X.; Ding, X. A vision monitoring system for multipoint deflection of large-span bridge based on camera networking. Comput. Aided Civ. Inf. 2023, 38, 1879–1891.

21. Dong, C.; Bas, S.; Catbas, F.N. Applications of computer vision-based structural monitoring on long-span bridges in Turkey. Sensors 2023, 23, 8161.

22. Choi, J.; Ma, Z.; Kim, K.; Sohn, H. Continuous structural displacement monitoring using accelerometer, vision, and infrared (IR) cameras. Sensors 2023, 23, 5241.

23. Luan, L.; Liu, Y.; Sun, H. Extracting high-precision full-field displacement from videos via pixel matching and optical flow. J. Sound Vib. 2023, 565, 117904.

24. Gentile, C.; Bernardini, G. An interferometric radar for noncontact measurement of deflections on civil engineering structures: Laboratory and full-scale tests. Struct. Infrastruct. Eng. 2010, 6, 521–534.

25. Negulescu, C.; Luzi, G.; Crosetto, M.; Raucoules, D.; Roullé, A.; Monfort, D.; Pujades, L.; Colas, B.; Dewez, T. Comparison of seismometer and radar measurements for the modal identification of civil engineering structures. Eng. Struct. 2013, 51, 10–22.

26. Gonzalez-Drigo, R.; Cabrera, E.; Luzi, G.; Pujades, L.G.; Vargas-Alzate, Y.F.; Avila-Haro, J. Assessment of post-earthquake damaged building with interferometric real aperture radar. Remote Sens. 2019, 11, 2830.

27. Michel, C.; Keller, S. Advancing ground-based radar processing for bridge infrastructure monitoring. Sensors 2021, 21, 2172.

28. Xia, H.; De Roeck, G.; Zhang, N.; Maeck, J. Experimental analysis of a high-speed railway bridge under Thalys trains. J. Sound Vib. 2003, 268, 103–113.

29. Nassif, H.H.; Gindy, M.; Davis, J. Comparison of laser Doppler vibrometer with contact sensors for monitoring bridge deflection and vibration. NDT E Int. 2005, 38, 213–218.

30. Garg, P.; Moreu, F.; Ozdagli, A.; Taha, M.R.; Mascareñas, D. Noncontact dynamic displacement measurement of structures using a moving laser doppler vibrometer. J. Bridge Eng. 2019, 24, 04019089.

31. Yu, W.; Nishio, M. Multilevel structural components detection and segmentation toward computer vision-based bridge inspection. Sensors 2022, 22, 3502.

32. Cardellicchio, A.; Ruggieri, S.; Nettis, A.; Renò, V.; Uva, G. Physical interpretation of machine learning-based recognition of defects for the risk management of existing bridge heritage. Eng. Fail. Anal. 2023, 149, 107237.

33. Wu, Z.; Tang, Y.; Hong, B.; Liang, B.; Liu, Y. Enhanced precision in dam crack width measurement: Leveraging advanced lightweight network identification for pixel-level accuracy. Int. J. Intell. Syst. 2023, 2023, 9940881.

34. Tang, Y.; Huang, Z.; Chen, Z.; Chen, M.; Zhou, H.; Zhang, H.; Sun, J. Novel visual crack width measurement based on backbone double-scale features for improved detection automation. Eng. Struct. 2023, 274, 115158.

35. Guizar-Sicairos, M.; Thurman, S.T.; Fienup, J.R. Efficient subpixel image registration algorithms. Opt. Lett. 2008, 33, 156–158.

36. Karybali, I.G.; Psarakis, E.Z.; Berberidis, K.; Evangelidis, G.D. An efficient spatial domain technique for subpixel image registration. Signal Process. Image Commun. 2008, 23, 711–724.

37. Feng, D.; Feng, M.Q.; Ozer, E.; Fukuda, Y. A vision-based sensor for noncontact structural displacement measurement. Sensors 2015, 15, 16557–16575.

38. Mas, D.; Perez, J.; Ferrer, B.; Espinosa, J. Realistic limits for subpixel movement detection. Appl. Opt. 2016, 55, 4974–4979.

39. Antoš, J.; Nežerka, V.; Somr, M. Real-time optical measurement of displacements using subpixel image registration. EXP Tech 2019, 43, 315–323.

40. Pinto, P.E.; Franchin, P. Issues in the upgrade of Italian highway structures. J. Earthq. Eng. 2010, 14, 1221–1252.

41. Gkoumas, K.; Marques Dos Santos, F.L.; van Balen, M.; Tsakalidis, A.; Ortega Hortelano, A.; Grosso, M.; Haq, G.; Pekár, F. Research and Innovation in Bridge Maintenance, Inspection and Monitoring—A European Perspective Based on the Transport Research and Innovation Monitoring and Information System (TRIMIS); EUR 29650 EN; Publications Office of the European Union: Luxembourg City, Luxembourg, 2019.

42. Neves, A.C.; Leander, J.; González, I.; Karoumi, R. An approach to decision-making analysis for implementation of structural health monitoring in bridges. Struct. Control Health Monit. 2019, 26, e2352.

43. An, Y.; Chatzi, E.; Sim, S.H.; Laflamme, S.; Blachowski, B.; Ou, J. Recent progress and future trends on damage identification methods for bridge structures. Struct. Control Health Monit. 2019, 26, e2416.

44. Ercolessi, S.; Fabbrocino, G.; Rainieri, C. Indirect measurements of bridge vibrations as an experimental tool supporting periodic inspections. Infrastructures 2021, 6, 39.

45. Rainieri, C.; Notarangelo, M.A.; Fabbrocino, G. Experiences of dynamic identification and monitoring of bridges in serviceability conditions and after hazardous events. Infrastructures 2020, 5, 86.

46. D’Alessandro, A.; Birgin, H.B.; Cerni, G.; Ubertini, F. Smart infrastructure monitoring through self-sensing composite sensors and systems: A study on smart concrete sensors with varying carbon-based filler. Infrastructures 2022, 7, 48.

47. D’Angelo, M.; Menghini, A.; Borlenghi, P.; Bernardini, L.; Benedetti, L.; Ballio, F.; Belloli, M.; Gentile, C. Hydraulic safety evaluation and dynamic investigations of Baghetto Bridge in Italy. Infrastructures 2022, 7, 53.

48. Nicoletti, V.; Martini, R.; Carbonari, S.; Gara, F. Operational modal analysis as a support for the development of digital twin models of bridges. Infrastructures 2023, 8, 24.

49. Kim, H.-J.; Seong, Y.-H.; Han, J.-W.; Kwon, S.-H.; Kim, C.-Y. Demonstrating the test procedure for preventive maintenance of aging concrete bridges. Infrastructures 2023, 8, 54.

50. Natali, A.; Cosentino, A.; Morelli, F.; Salvatore, W. Multilevel approach for management of existing bridges: Critical analysis and application of the Italian Guidelines with the new operating instructions. Infrastructures 2023, 8, 70.

51. Mathworks MATLAB Version 2023a; The MathWorks Inc.: Natick, MA, USA. 2023. Available online: https://www.mathworks.com (accessed on 20 September 2023).

52. Mathworks MATLAB Computer Vision Toolbox. Available online: https://mathworks.com/products/computer-vision.html (accessed on 20 September 2023).

53. Mathworks MATLAB Image Acquisition Toolbox Support Package for GenICam Interface. MATLAB Central File Exchange. Available online: https://mathworks.com/matlabcentral/fileexchange/45180-image-acquisition-toolbox-support-package-for-genicam-interface (accessed on 20 September 2023).

54. Guizar, M. Efficient Subpixel Image Registration by Cross-Correlation. MATLAB Central File Exchange. Available online: https://www.mathworks.com/matlabcentral/fileexchange/18401-efficient-subpixel-image-registration-by-cross-correlation (accessed on 20 September 2023).

55. Teledyne FLIR Blackfly S BFS-U3-23S3 Specifications and Frame Rates. Available online: http://softwareservices.flir.com/BFS-U3-23S3/latest/Model/spec.html (accessed on 20 September 2023).

56. Teledyne FLIR Blackfly S BFS-U3-23S3 Imaging Performance. Available online: http://softwareservices.flir.com/BFS-U3-23S3/latest/EMVA/EMVA.html (accessed on 20 September 2023).

57. Teledyne FLIR Blackfly S BFS-U3-23S3 Readout Method. Available online: http://softwareservices.flir.com/BFS-U3-23S3/latest/40-Installation/Readout.htm (accessed on 20 September 2023).

58. Yang, Y.B.; Yau, J.D.; Wu, Y.S. Vehicle–Bridge Interaction Dynamics with Applications to High-Speed Railways; World Scientific Publishing Co.: Singapore, 2004; pp. 1–530.

59. Yang, Y.B.; Lin, C.W. Vehicle–bridge interaction dynamics and potential applications. J. Sound Vib. 2005, 284, 205–226.

60. Orfanidis, S.J. Optimum Signal Processing: An Introduction, 2nd ed.; Prentice-Hall: Englewood Cliffs, NJ, USA, 1996; pp. 1–377.

| Component | Model | Main Technical Specifications |
|---|---|---|
| Video camera | Teledyne FLIR BLACKFLY S BFS-U3-23S3M-C | Sensor: Sony IMX392 CMOS 1/2.3″ |
| | | Pixel size: 3.45 µm |
| | | Max resolution: 1920 × 1200 (2.3 M pixels) |
| | | Readout method: Global shutter |
| | | Chroma: Monochrome |
| | | Exposure range: 6.0 µs to 30.0 s |
| | | Max FPS at full resolution: 163 |
| | | Max FPS at 640 × 480 resolution: 392 |
| | | Max FPS at 320 × 240 resolution: 717 |
| | | Mass: 36 g |
| Lens | Tamron 23FM50SP | Focal length: 50 mm |
| | | Max aperture: F2.8 |
| | | Distortion: <0.01% |
| | | Focus range: 0.2 m–∞ |
| | | Field angle (H × V): 7.6 × 4.8° |
| | | Mass: 117 g |
| Lens | Kowa LM100JC1MS | Focal length: 100 mm |
| | | Max aperture: F2.8 |
| | | Distortion: <0.05% |
| | | Focus range: 2.0 m–∞ |
| | | Field angle (H × V): 3.8 × 2.4° |
| | | Mass: 145 g |

| Start Time | Measure | f (mm) | FPS | 10t (mm) | ROI Size | Pixel Search | SF (mm) |

|---|---|---|---|---|---|---|---|

| 12:15 | AVB1 | 100 | 120 | 50 | 129 × 128 | 25 | 0.4254 |

| FVB1 | 100 | 120 | 50 | 183 × 178 | 25 | 0.4184 | |

| 12:30 | AVB2 | 100 | 120 | 50 | 115 × 114 | 19 | 0.4237 |

| FVB2 | 100 | 120 | 50 | 174 × 170 | 20 | 0.4155 | |

| 12:45 | AVB3 | 100 | 120 | 50 | 122 × 124 | 23 | 0.4247 |

| FVB3 | 100 | 30 | 50 | 170 × 167 | 20 | 0.4218 | |

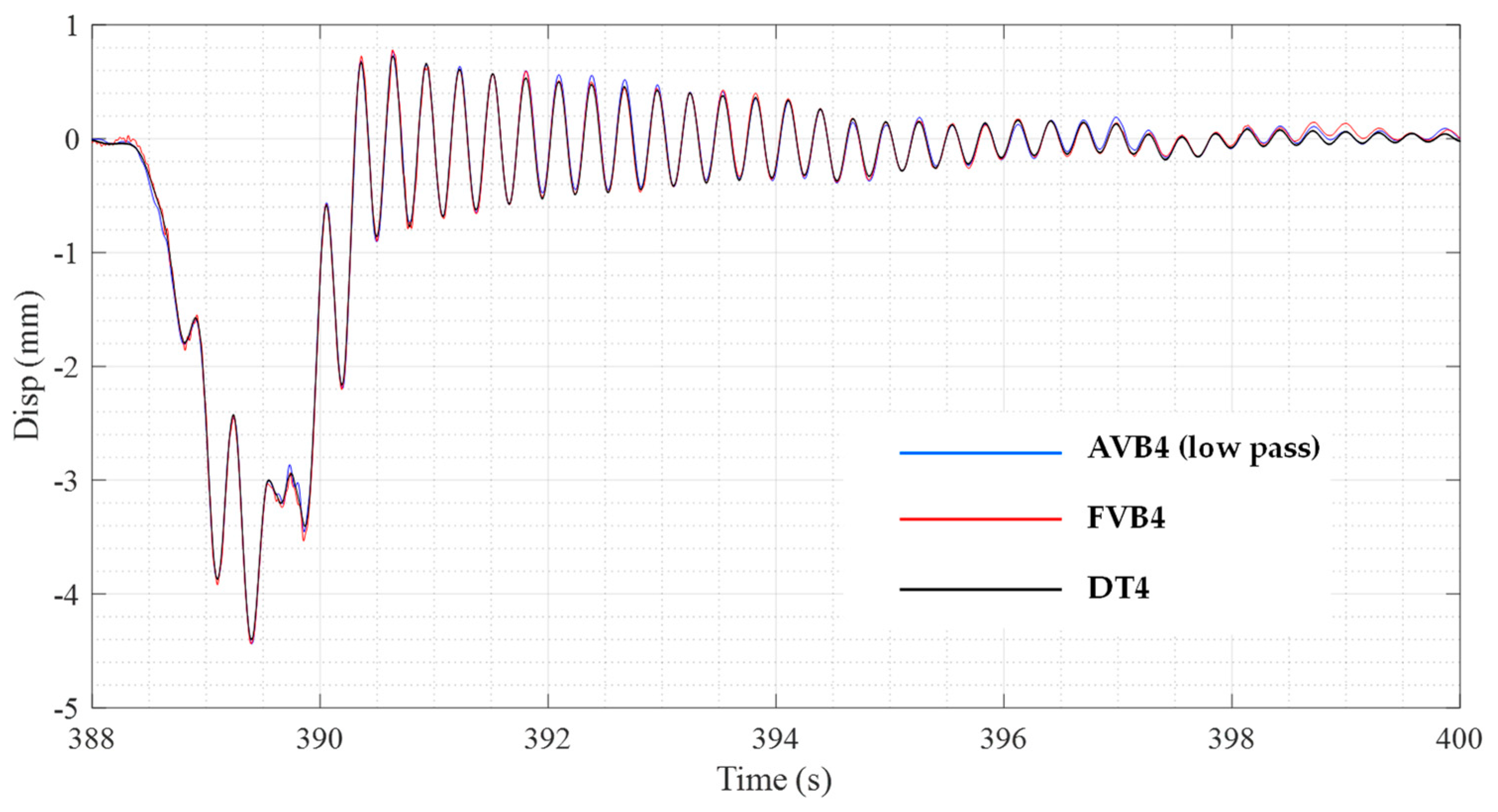

| 13:05 | AVB4 | 100 | 120 | 50 | 123 × 122 | 23 | 0.4256 |

| FVB4 | 100 | 60 | 50 | 168 × 166 | 20 | 0.4173 | |

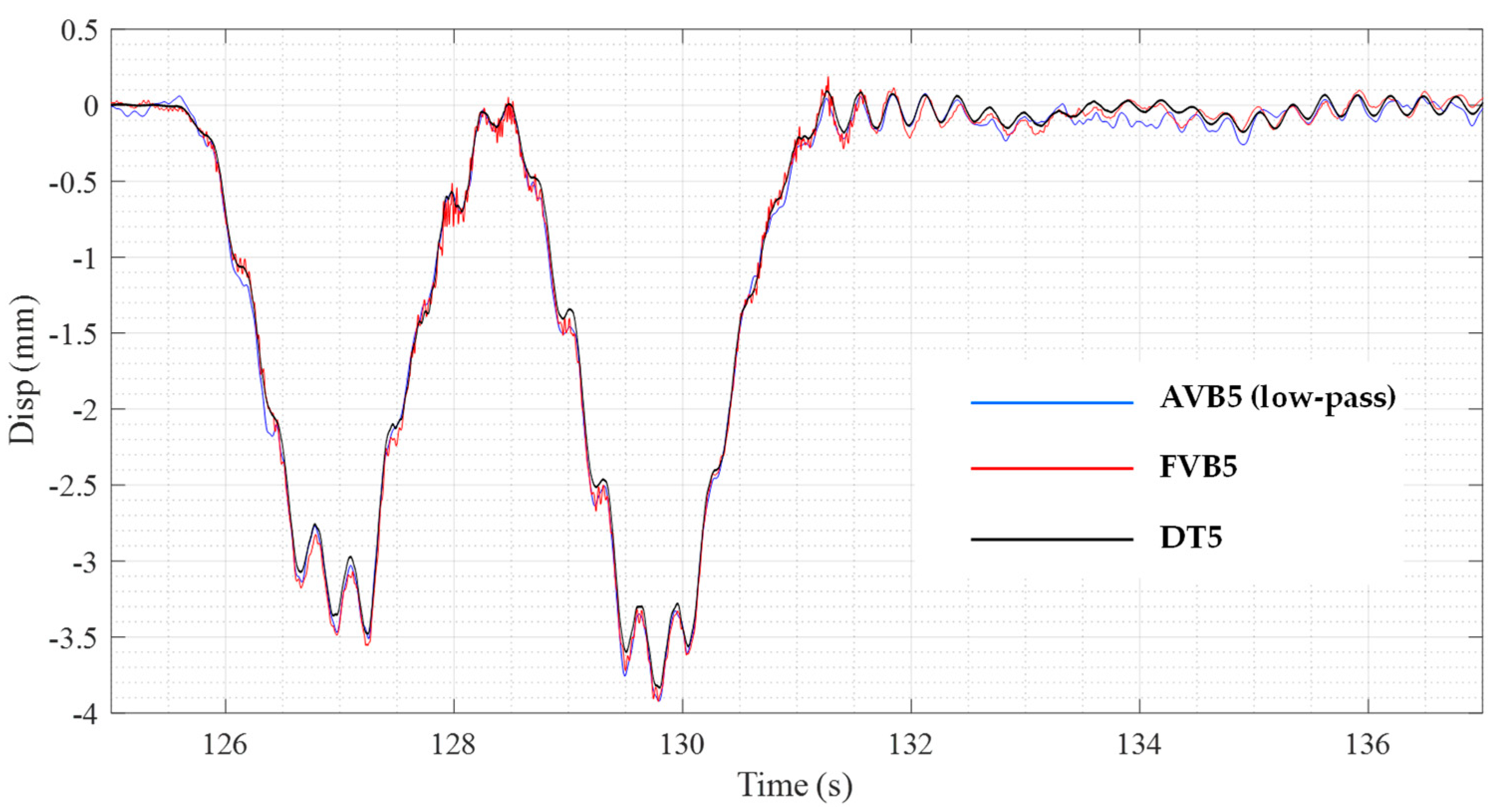

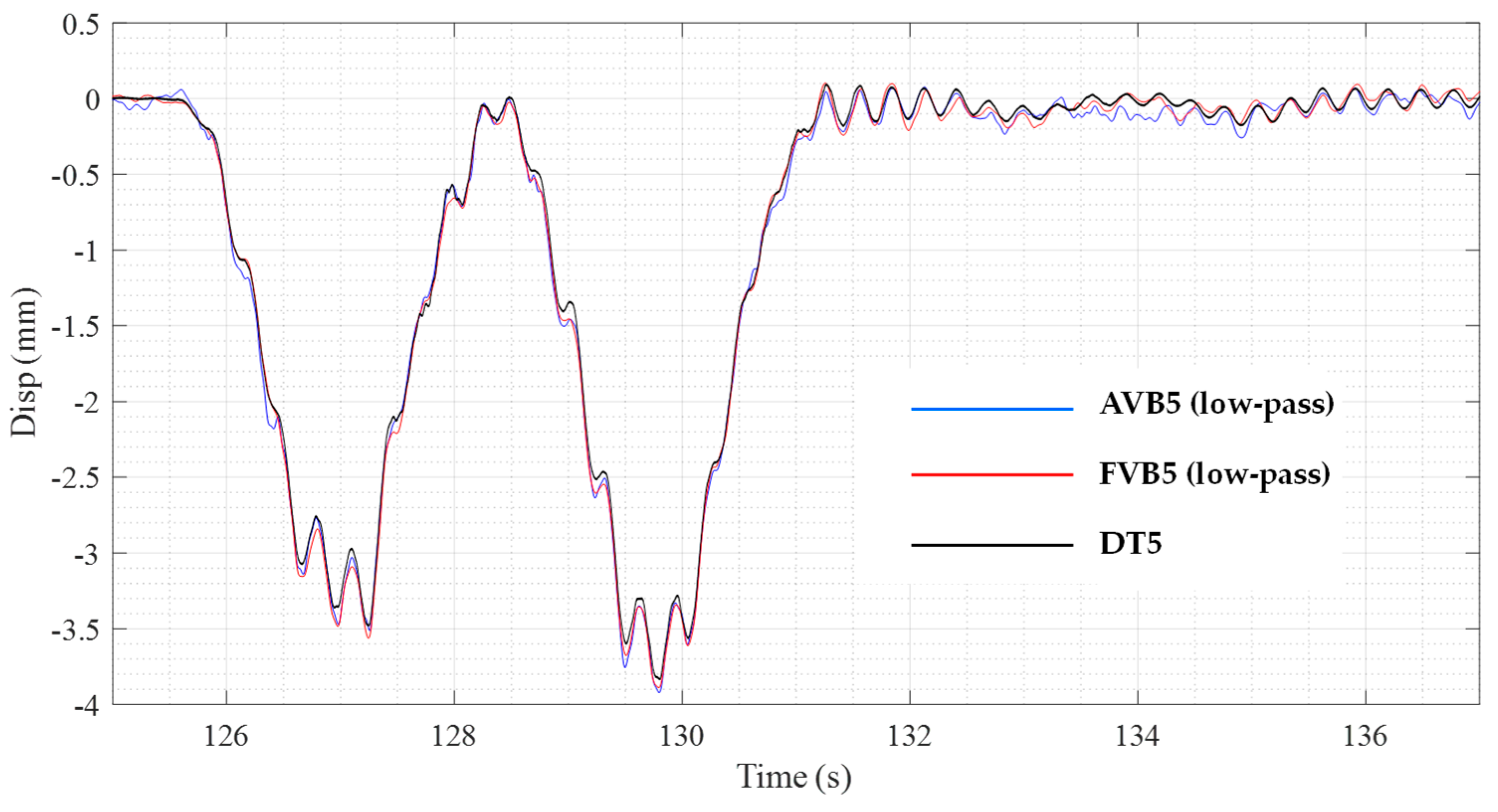

| 13:30 | AVB5 | 100 | 240 | 50 | 111 × 110 | 18 | 0.4259 |

| FVB5 | 100 | 120 | 50 | 160 × 156 | 15 | 0.4172 | |

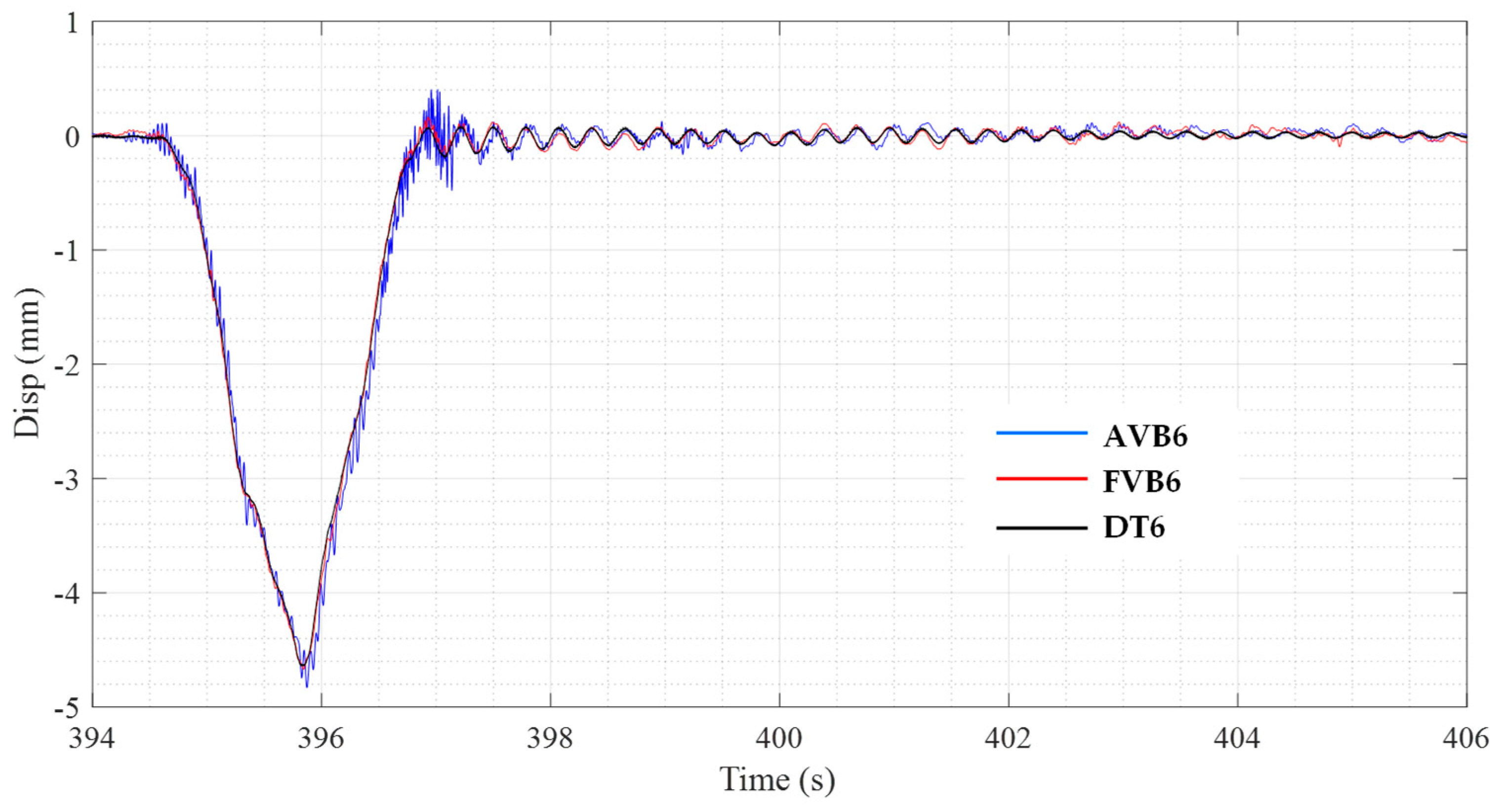

| 13:55 | AVB6 | 100 | 120 | 50 | 124 × 122 | 23 | 0.4256 |

| FVB6 | 50 | 120 | 50 | 85 × 83 | 10 | 0.8429 | |

| 16:00 | FVB7 | 50 | 120 | 100 | 145 × 145 | 15 | 0.8416 |

| Time | Temperature (°C) | Relative Humidity (%) |
|---|---|---|
| 11:00 | 31.6 | 45.9 |
| 12:00 | 31.9 | 40.4 |
| 13:00 | 32.5 | 37.9 |
| 14:00 | 32.5 | 38.3 |
| 15:00 | 32.8 | 46.3 |
| 16:00 | 32.2 | 56.2 |

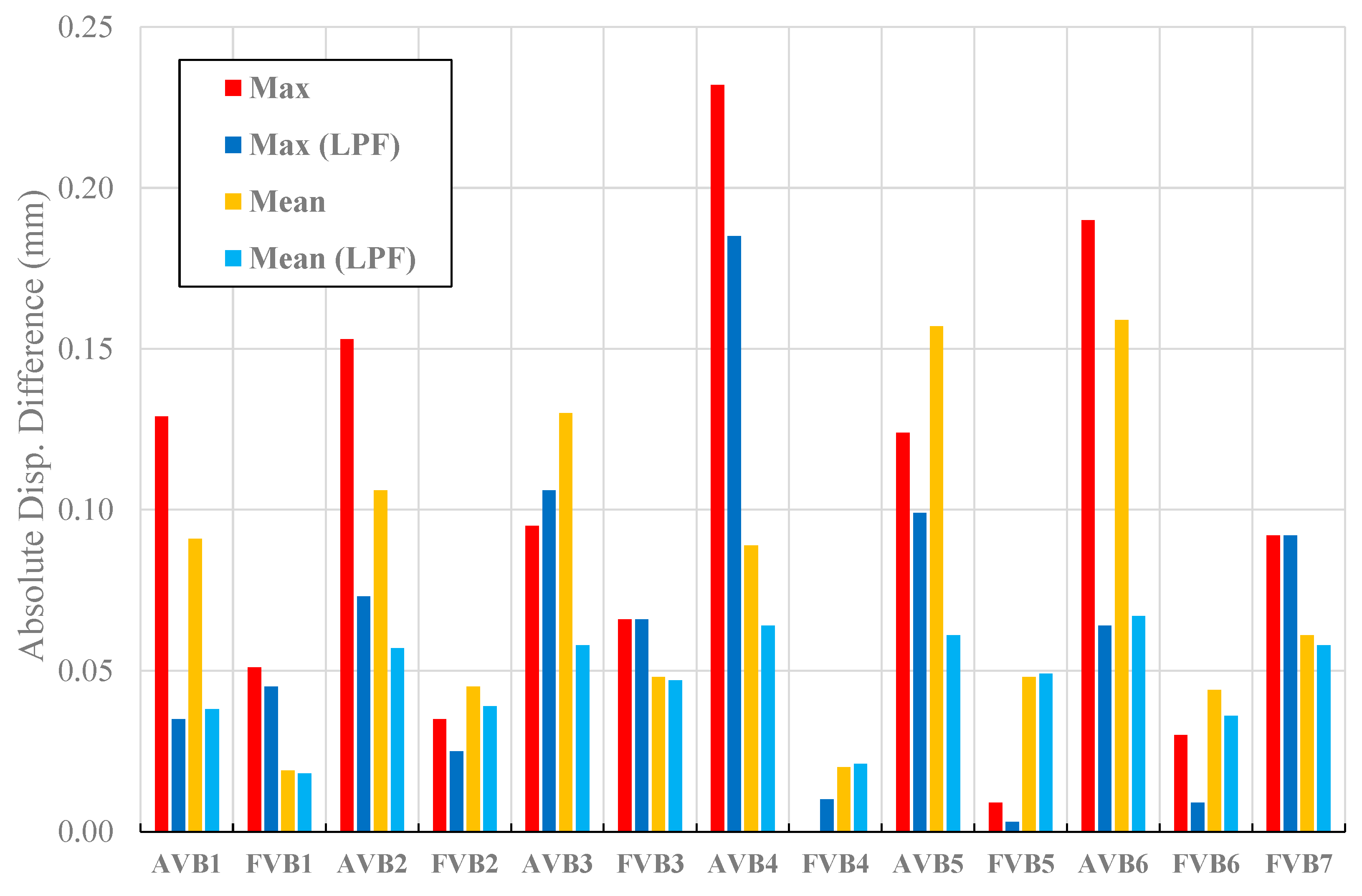

| Measure | Disp. Res. Rdisp (mm) | Disp. Range dmax (mm) | Heavy Vehicles | Max Disp. (mm) | Max Disp. Diff. (mm) | Max Disp. Diff. (%) | Mean Abs Diff. (mm) | Std Abs Diff. (mm) |
|---|---|---|---|---|---|---|---|---|
| DT1 | 1.19 × 10⁻⁵ | 100 | | 2.533 | | | | |
| AVB1 | 0.004254 | 10.635 | 4 | 2.662 | 0.129 | 5.10 | 0.091 | 0.045 |
| FVB1 | 0.008368 | 10.460 | | 2.584 | 0.051 | 2.00 | 0.019 | 0.022 |
| DT2 | 1.19 × 10⁻⁵ | 100 | | 3.433 | | | | |
| AVB2 | 0.004237 | 8.0503 | 3 | 3.586 | 0.153 | 4.44 | 0.106 | 0.074 |
| FVB2 | 0.004155 | 8.3100 | | 3.468 | 0.035 | 1.01 | 0.045 | 0.035 |
| DT3 | 1.19 × 10⁻⁵ | 100 | | 3.778 | | | | |
| AVB3 | 0.004247 | 9.7681 | 24 | 3.873 | 0.095 | 2.51 | 0.130 | 0.066 |
| FVB3 | 0.004218 | 8.4360 | | 3.844 | 0.066 | 1.75 | 0.048 | 0.024 |
| DT4 | 1.19 × 10⁻⁵ | 100 | | 4.712 | | | | |
| AVB4 | 0.004256 | 9.7888 | 5 | 4.944 | 0.232 | 4.93 | 0.089 | 0.095 |
| FVB4 | 0.004173 | 8.3460 | | 4.712 | 0.000 | 0.00 | 0.020 | 0.019 |
| DT5 | 1.19 × 10⁻⁵ | 100 | | 4.608 | | | | |
| AVB5 | 0.004259 | 7.6662 | 9 | 4.732 | 0.124 | 2.71 | 0.157 | 0.037 |
| FVB5 | 0.004172 | 6.2580 | | 4.617 | 0.009 | 0.21 | 0.048 | 0.043 |
| DT6 | 1.19 × 10⁻⁵ | 100 | | 4.640 | | | | |
| AVB6 | 0.004256 | 9.7888 | 12 | 4.830 | 0.190 | 4.10 | 0.159 | 0.080 |
| FVB6 | 0.008429 | 8.4290 | | 4.670 | 0.030 | 0.65 | 0.044 | 0.039 |
| DT7 | 1.19 × 10⁻⁵ | 100 | 13 | 4.766 | | | | |
| FVB7 | 0.008416 | 12.624 | | 4.674 | −0.092 | −1.93 | 0.061 | 0.055 |
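The displacement resolution and displacement range listed for the vision-based measures appear consistent with two simple relations: Rdisp = SF/κ (the 1/κ pixel resolution of the subpixel search converted to millimetres) and dmax = SF × pixel search (the ROI margin converted to millimetres). The sketch below reproduces the AVB1 values under that reading. The relations are inferred from the tabulated numbers, and the upsampling factor κ = 100 is an assumption backed out from the ratio SF/Rdisp rather than a value stated here; the function names are hypothetical.

```python
def displacement_resolution(sf_mm_per_px, upsampling_factor):
    """Smallest resolvable displacement in mm: one 1/kappa pixel step."""
    if upsampling_factor < 1:
        raise ValueError("upsampling factor must be >= 1")
    return sf_mm_per_px / upsampling_factor


def displacement_range(sf_mm_per_px, pixel_search):
    """Largest trackable displacement in mm: the ROI margin in pixels times SF."""
    return sf_mm_per_px * pixel_search


if __name__ == "__main__":
    sf = 0.4254          # AVB1 scale factor from the acquisition settings table
    pixel_search = 25    # AVB1 pixel search margin from the same table
    kappa = 100          # assumed upsampling factor (inferred, not stated)
    print(f"Rdisp = {displacement_resolution(sf, kappa):.6f} mm")
    print(f"dmax  = {displacement_range(sf, pixel_search):.3f} mm")
```

Both quantities scale linearly with SF, so a longer focal length at the same distance improves the resolution and shrinks the measurable range by the same factor unless the pixel search margin is enlarged.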

| Measure | Max Disp. (mm) | Max Disp. Diff. (mm) | Max Disp. Diff. (%) | Mean Abs Diff. (mm) | Std Abs Diff. (mm) |
|---|---|---|---|---|---|
| DT1 | 2.533 | | | | |
| AVB1 (LPF) | 2.568 | 0.035 | 1.36 | 0.038 | 0.035 |
| FVB1 (LPF) | 2.578 | 0.045 | 1.77 | 0.018 | 0.018 |
| DT2 | 3.433 | | | | |
| AVB2 (LPF) | 3.506 | 0.073 | 2.10 | 0.057 | 0.041 |
| FVB2 (LPF) | 3.458 | 0.025 | 0.72 | 0.039 | 0.032 |
| DT3 | 3.778 | | | | |
| AVB3 (LPF) | 3.884 | 0.106 | 2.81 | 0.058 | 0.040 |
| FVB3 (LPF) | 3.844 | 0.066 | 1.75 | 0.047 | 0.024 |
| DT4 | 4.712 | | | | |
| AVB4 (LPF) | 4.897 | 0.185 | 3.92 | 0.064 | 0.082 |
| FVB4 (LPF) | 4.702 | −0.010 | −0.12 | 0.021 | 0.019 |
| DT5 | 4.608 | | | | |
| AVB5 (LPF) | 4.707 | 0.099 | 2.15 | 0.061 | 0.043 |
| FVB5 (LPF) | 4.605 | −0.003 | −0.06 | 0.049 | 0.043 |
| DT6 | 4.640 | | | | |
| AVB6 (LPF) | 4.704 | 0.064 | 1.39 | 0.067 | 0.052 |
| FVB6 (LPF) | 4.649 | 0.009 | 0.20 | 0.036 | 0.036 |
| DT7 | 4.766 | | | | |
| FVB7 (LPF) | 4.674 | −0.092 | −1.94 | 0.058 | 0.055 |
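The comparison columns in the displacement tables can be computed from two time histories once they are aligned and sampled at the same instants. The sketch below shows one plausible reading of those columns: the difference of peak displacements relative to the displacement transducer (DT), and the mean and standard deviation of the point-by-point absolute difference. The exact definitions used in the study are not given in this text, so the function and its output keys are hypothetical.

```python
import numpy as np


def compare_to_reference(vision_mm, reference_mm):
    """Compare a vision-based displacement history with a reference one.

    Both inputs must already be aligned in time and have the same length.
    """
    vision = np.asarray(vision_mm, dtype=float)
    reference = np.asarray(reference_mm, dtype=float)
    if vision.shape != reference.shape:
        raise ValueError("signals must have the same shape")

    peak_vision = np.max(np.abs(vision))
    peak_reference = np.max(np.abs(reference))
    abs_diff = np.abs(vision - reference)
    return {
        "max_disp_mm": float(peak_vision),
        "max_disp_diff_mm": float(peak_vision - peak_reference),
        "max_disp_diff_pct": float(100.0 * (peak_vision - peak_reference) / peak_reference),
        "mean_abs_diff_mm": float(abs_diff.mean()),
        "std_abs_diff_mm": float(abs_diff.std()),  # population standard deviation
    }


if __name__ == "__main__":
    dt = [0.0, 1.0, 2.0, 1.0, 0.0]    # illustrative reference samples (mm)
    vb = [0.1, 1.0, 2.2, 0.9, 0.0]    # illustrative vision-based samples (mm)
    for key, value in compare_to_reference(vb, dt).items():
        print(f"{key}: {value:.3f}")
```

Applying the same function to low-pass filtered vision signals would give the filtered comparison, with the filter itself left outside this sketch.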

| Acquisition Window | AC Freq. (Hz) | DT Freq. (Hz) | DT Diff. (%) | AVB Freq. (Hz) | AVB Diff. (%) | FVB Freq. (Hz) | FVB Diff. (%) |
|---|---|---|---|---|---|---|---|
| #1 | 3.48 | 3.48 | 0.00 | 3.48 | 0.00 | 3.48 | 0.00 |
| #2 | 3.47 | 3.48 | 0.13 | 3.48 | 0.13 | 3.47 | 0.00 |
| #3 | 3.45 | 3.45 | −0.13 | 3.45 | −0.13 | 3.45 | −0.13 |
| #4 | 3.47 | 3.47 | 0.00 | 3.47 | 0.00 | 3.47 | 0.00 |
| #5 | 3.48 | 3.48 | 0.00 | 3.48 | 0.00 | 3.48 | 0.00 |
| #6 | 3.48 | 3.48 | 0.00 | 3.48 | 0.00 | 3.48 | 0.00 |
| #7 | 3.47 | 3.47 | 0.13 | - | - | 3.47 | 0.13 |
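A first-mode frequency such as those tabulated above can be estimated from a displacement or acceleration time history by peak picking on the amplitude spectrum. The sketch below is a generic illustration, assuming a Hann window and a lower frequency bound to skip the quasi-static response to traffic; it is not necessarily the identification procedure used in the study, and the function name and defaults are hypothetical.

```python
import numpy as np


def dominant_frequency(signal, fs_hz, f_min_hz=0.5):
    """Return the frequency (Hz) of the largest spectral peak above f_min_hz."""
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()                               # remove the static offset
    windowed = x * np.hanning(x.size)              # reduce spectral leakage
    amplitude = np.abs(np.fft.rfft(windowed))
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs_hz)
    mask = freqs >= f_min_hz                       # skip quasi-static content
    return float(freqs[mask][np.argmax(amplitude[mask])])


if __name__ == "__main__":
    fs = 120.0                                     # frames per second
    t = np.arange(12_000) / fs                     # 100 s record, 0.01 Hz bins
    # Synthetic record: a 3.48 Hz mode plus a slow quasi-static component.
    x = np.sin(2 * np.pi * 3.48 * t) + 0.3 * np.sin(2 * np.pi * 0.1 * t)
    print(f"dominant frequency: {dominant_frequency(x, fs):.2f} Hz")
```

The frequency resolution of this estimate is the sampling rate divided by the number of samples, so a two-decimal comparison between sensors needs records of roughly 100 s or longer, or some form of spectral interpolation.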

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Micozzi, F.; Morici, M.; Zona, A.; Dall’Asta, A. Vision-Based Structural Monitoring: Application to a Medium-Span Post-Tensioned Concrete Bridge under Vehicular Traffic. Infrastructures 2023, 8, 152. https://doi.org/10.3390/infrastructures8100152
