Videogame-Based Training: The Impact and Interaction of Videogame Characteristics on Learning Outcomes

Abstract

1. Introduction

1.1. Videogame Characteristic: Rules/Goals Clarity

1.2. Videogame Characteristic: Human Interaction

1.3. Post-Training Performance

1.4. The Impact of Videogame Characteristics

2. Materials and Methods

2.1. Participants

2.2. Procedures

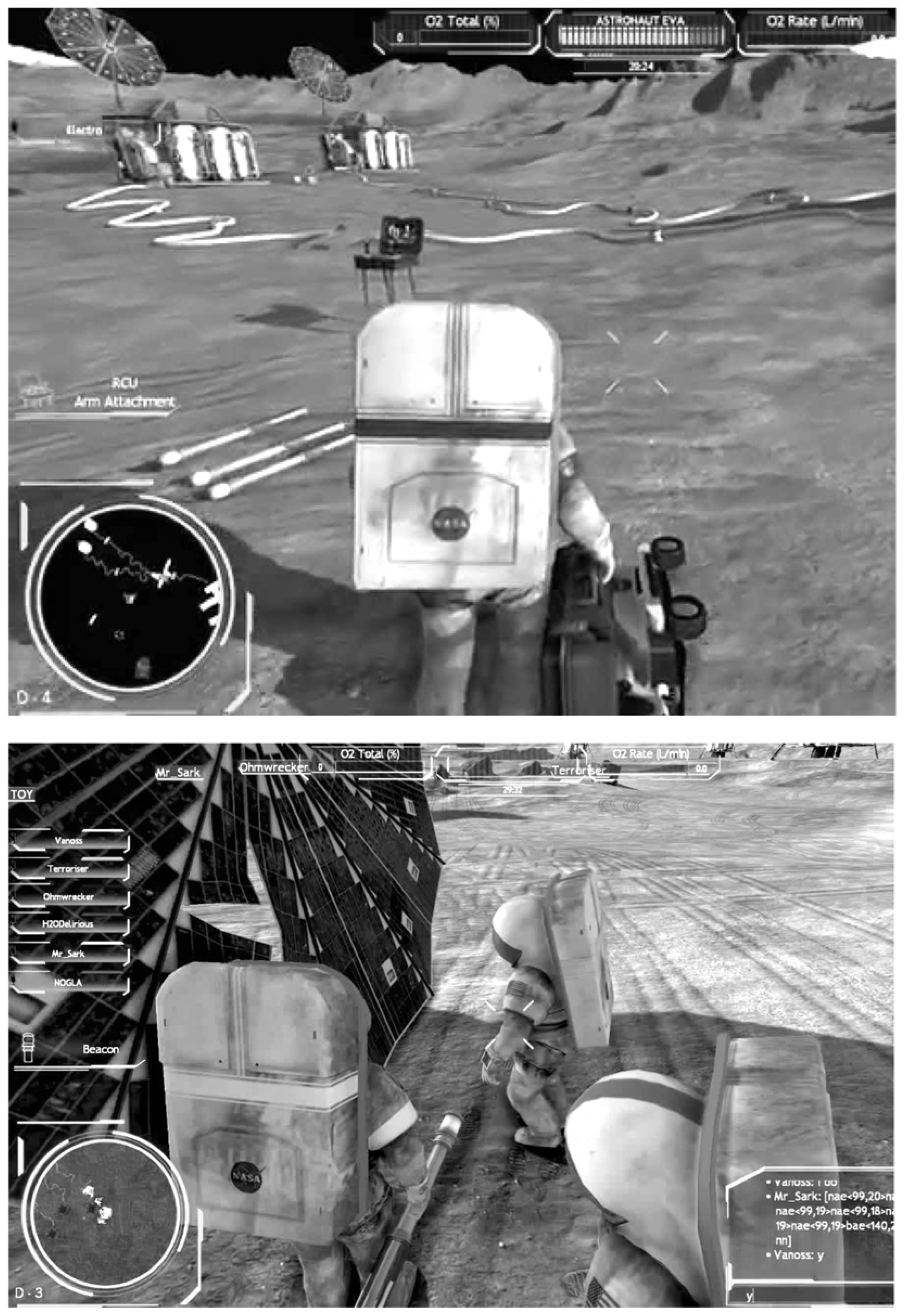

2.3. Videogame-Based Training

2.4. Scoring Methodology

2.5. Measures

3. Results

4. Discussion

4.1. The Benefits of Clear Rules/Goals

4.2. The Benefits of Human Interaction

4.3. Evaluating Post-Training Performance

4.4. Distinguishing Videogame Characteristics

4.5. The Importance of Game Selection

4.6. Limitations

4.7. Future Research

5. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Orlansky, J.; String, J. Cost-Effectiveness of Flight Simulators for Military Training; Document No. P-1275-VOL-1; Institute for Defense Analyses: Alexandria, VA, USA, 1977.

- Rowland, K.M.; Gardner, D.M. The Uses of Business Gaming in Education and Laboratory Research. Decis. Sci. 1973, 4, 268–283.

- Thompson, F.A. Gaming via Computer Simulation Techniques for Junior College Economics Education; ERIC Document Reproduction Service No. ED 021 549; Riverside City College: Riverside, CA, USA, 1968.

- Agapiou, A. The use and evaluation of a simulation game to teach professional practice skills to undergraduate architecture students. J. Educ. Built Environ. 2006, 1, 3–14.

- Bowers, C.A.; Jentsch, F. Use of commercial, off-the-shelf, simulations for team research. In Advances in Human Performance and Cognitive Engineering Research; Bowers, C.A., Salas, E., Eds.; Lawrence Erlbaum: Mahwah, NJ, USA, 2001; pp. 293–317.

- Marlow, S.L.; Salas, E.; Landon, L.B.; Presnell, B. Eliciting teamwork with game attributes: A systematic review and research agenda. Comput. Hum. Behav. 2016, 55, 413–423.

- Dalla Rosa, A.; Vianello, M. On the effectiveness of a simulated learning environment. Procedia-Soc. Behav. Sci. 2015, 171, 1065–1074.

- Denby, B.; Schofield, D. Role of virtual reality in safety training of mine personnel. Min. Eng. 1999, 51, 59–64. Available online: https://www.osti.gov/etdeweb/biblio/20007172 (accessed on 27 January 2022).

- Wood, A.; McPhee, C. Establishing a virtual learning environment: A nursing experience. J. Contin. Educ. Nurs. 2011, 42, 510–515.

- Zajtchuk, R.; Satava, R.M. Medical applications of virtual reality. Commun. ACM 1997, 40, 63–64.

- Anderson, G.S.; Hilton, S. Increase team cohesion by playing cooperative video games. CrossTalk 2015, 1, 33–37.

- Caroux, L.; Isbister, K.; Le Bigot, L.; Vibert, N. Player-video game interaction: A systematic review of current concepts. Comput. Hum. Behav. 2015, 48, 366–381.

- Dempsey, J.; Lucassen, B.; Gilley, W.; Rasmussen, K. Since Malone’s theory of intrinsically motivating instruction: What’s the score in the gaming literature? J. Educ. Technol. Syst. 1993, 22, 173–183.

- Griffiths, M. The educational benefits of videogames. Educ. Health 2002, 20, 47–51.

- Jacobs, J.W.; Dempsey, J.V. Simulation and gaming: Fidelity, feedback, and motivation. In Interactive Instruction and Feedback; Dempsey, J.V., Sales, G.C., Eds.; Educational Technology Publications: Englewood Cliffs, NJ, USA, 1993; pp. 197–228.

- Pierfy, D.A. Comparative simulation game research: Stumbling blocks and stepping stones. Simul. Games 1977, 8, 255–268.

- Ricci, K.E.; Salas, E.; Cannon-Bowers, J. Do computer-based games facilitate knowledge acquisition and retention? Mil. Psychol. 1996, 8, 295–307.

- Seok, S.; DaCosta, B. Predicting video game behavior: An investigation of the relationship between personality and mobile game play. Games Cult. 2015, 10, 481–501.

- Landers, R.N.; Sanchez, D.R. Game-based, gamified, and gamefully designed assessments for employee selection: Definitions, distinctions, design, and validation. Int. J. Sel. Assess. 2022, 11, 12376.

- Kirschner, D.; Williams, J.P. Measuring video game engagement through gameplay reviews. Simul. Gaming 2014, 45, 593–610.

- Blair, L. The Use of Video Game Achievements to Enhance Player Performance, Self-Efficacy, and Motivation. Ph.D. Thesis, University of Central Florida, Orlando, FL, USA, 2011.

- Bell, B.S.; Kanar, A.M.; Kozlowski, S.W. Current issues and future directions in simulation-based training in North America. Int. J. Hum. Resour. Manag. 2008, 19, 1416–1434.

- Girard, C.; Ecalle, J.; Magnan, A. Serious games as new educational tools: How effective are they? A meta-analysis of recent studies. J. Comput. Assist. Learn. 2013, 29, 207–219.

- Fuchsberger, A. Improving decision making skills through business simulation gaming and expert systems. In Proceedings of the Hawaii International Conference on System Sciences, Koloa, HI, USA, 5–8 January 2016; pp. 827–836.

- Landers, R.N.; Bauer, K.N.; Callan, R.C.; Armstrong, M.B. Psychological theory and the gamification of learning. In Gamification in Education and Business; Reiners, T., Wood, L., Eds.; Springer: New York, NY, USA, 2015; pp. 165–186.

- Adcock, A. Making digital game-based learning work: An instructional designer’s perspective. Libr. Media Connect. 2008, 26, 56–57.

- Backlund, P.; Engström, H.; Johannesson, M.; Lebram, M.; Sjödén, B. Designing for self-efficacy in a game-based simulator: An experimental study and its implications for serious games design. In Proceedings of the Visualisation International Conference, London, UK, 9–11 July 2008; pp. 106–113.

- Lepper, M.R.; Chabay, R.W. Intrinsic motivation and instruction: Conflicting views on the role of motivational processes in computer-based education. Educ. Psychol. 1985, 20, 217–230.

- Parry, S.B. The name of the game is simulation. Train. Dev. J. 1971, 25, 28–32.

- Bedwell, W.L.; Pavlas, D.; Heyne, K.; Lazzara, E.H.; Salas, E. Toward a taxonomy linking game attributes to learning: An empirical study. Simul. Gaming 2012, 43, 729–760.

- Erhel, S.; Jamet, E. Digital game-based learning: Impact of instructions and feedback on motivation and learning effectiveness. Comput. Educ. 2013, 67, 156–167.

- Kampf, R. Are two better than one? Playing singly, playing in dyads in a computerized simulation of the Israeli–Palestinian conflict. Comput. Hum. Behav. 2014, 32, 9–14.

- Cameron, B.; Dwyer, F. The effect of online gaming, cognition and feedback type in facilitating delayed achievement of different learning objectives. J. Interact. Learn. Res. 2005, 16, 243–258. Available online: https://www.learntechlib.org/primary/p/5896/ (accessed on 28 January 2022).

- Moreno, R.; Mayer, R.E. Role of guidance, reflection, and interactivity in an agent-based multimedia game. J. Educ. Psychol. 2005, 97, 117–128.

- Egenfeldt-Nielsen, S. Overview of research on the educational use of video games. Digit. Kompet. 2006, 1, 184–213.

- Kickmeier-Rust, M.D.; Peirce, N.; Conlan, O.; Schwarz, D.; Verpoorten, D.; Albert, D. Immersive digital games: The interfaces for next-generation e-learning? In Universal Access in Human-Computer Interaction. Applications and Services; Stephanidis, C., Ed.; Springer: Berlin, Germany, 2007; pp. 647–656.

- Kickmeier-Rust, M.D. Talking digital educational games. In Proceedings of the International Open Workshop on Intelligent Personalization and Adaptation in Digital Educational Games, Graz, Austria, 14 October 2009; Kickmeier-Rust, M.D., Ed.; pp. 55–66.

- Kirriemuir, J.; McFarlane, A. Literature Review in Games and Learning; NESTA Futurelab Series: Report 8; NESTA Futurelab: Bristol, UK, 2004; pp. 1–35.

- Michael, D.R.; Chen, S.L. Serious Games: Games That Educate, Train, and Inform; Thomson Course Technology, Muska & Lipman/Premier-Trade: Boston, MA, USA, 2005.

- Riedel, J.C.K.H.; Hauge, J.B. State of the art of serious games for business and industry. In Proceedings of the Concurrent Enterprising, Aachen, Germany, 20–22 June 2011; pp. 1–8.

- Sawyer, B.; Smith, P. Serious games taxonomy. In Proceedings of the Game Developers Conference, San Francisco, CA, USA, 18–22 February 2008.

- Zyda, M. From visual simulation to virtual reality to games. Computer 2005, 38, 25–32.

- Lamb, R.L.; Annetta, L.; Vallett, D.B.; Sadler, T.D. Cognitive diagnostic like approaches using neural-network analysis of serious educational videogames. Comput. Educ. 2014, 70, 92–104.

- Landers, R.N.; Landers, A.K. An empirical test of the theory of gamified learning: The effect of leaderboards on time-on-task and academic performance. Simul. Gaming 2015, 45, 769–785.

- Oksanen, K. Subjective experience and sociability in a collaborative serious game. Simul. Gaming 2013, 44, 767–793.

- Sedano, C.I.; Leendertz, V.; Vinni, M.; Sutinen, E.; Ellis, S. Hypercontextualized learning games: Fantasy, motivation, and engagement in reality. Simul. Gaming 2013, 44, 821–845.

- Blunt, R. Does game-based learning work? Results from three recent studies. In Proceedings of the Interservice/Industry Training, Simulation, & Education Conference, Orlando, FL, USA, 26–29 November 2007; pp. 945–955.

- Garris, R.; Ahlers, R.; Driskell, J.E. Games, motivation, and learning: A research and practice model. Simul. Gaming 2002, 33, 441–467.

- Locke, E.A.; Latham, G.P. A Theory of Goal Setting and Task Performance; Prentice Hall: Englewood Cliffs, NJ, USA, 1990.

- Akilli, G.K. Games and simulations: A new approach in education? In Games and Simulations in Online Learning: Research and Development Frameworks; Gibson, D.G., Aldrich, C.A., Prensky, M., Eds.; Information Science Publishing: Hershey, PA, USA, 2007; pp. 1–20.

- Alessi, S.M.; Trollip, S.R. Multimedia for Learning: Methods and Development, 3rd ed.; Allyn and Bacon: Needham Heights, MA, USA, 2001.

- Bergeron, B. Developing Serious Games; Charles River Media: Hingham, MA, USA, 2006.

- Hays, R.T. The Effectiveness of Instructional Games: A Literature Review and Discussion; Document No. NAWCTSD-TR-2005-004; Naval Air Warfare Center Training Systems Division: Orlando, FL, USA, 2005.

- Prensky, M. Digital Game-Based Learning; McGraw-Hill: New York, NY, USA, 2001.

- Vandercruysse, S.; Vandewaetere, M.; Clarebout, G. Game based learning: A review on the effectiveness of educational games. In Handbook of Research on Serious Games as Educational, Business, and Research Tools; Cruz-Cunha, M.M., Ed.; IGI Global: Hershey, PA, USA, 2012; pp. 628–647.

- Adams, D.M.; Mayer, R.E.; MacNamara, A.; Koenig, A.; Wainess, R. Narrative games for learning: Testing the discovery and narrative hypotheses. J. Educ. Psychol. 2012, 104, 235–249.

- Leutner, D. Guided discovery learning with computer-based simulation games: Effects of adaptive and non-adaptive instructional support. Learn. Instr. 1993, 3, 113–132.

- Yerby, J.; Hollifield, S.; Kwak, M.; Floyd, K. Development of serious games for teaching digital forensics. Issues Inf. Syst. 2014, 15, 335–343.

- Morrison, J.E. Training for Performance: Principles of Applied Human Learning; Document No. 1507; John Wiley & Sons: Hoboken, NJ, USA, 1991.

- Carayon, P. Handbook of Human Factors and Ergonomics in Health Care and Patient Safety; CRC Press: Boca Raton, FL, USA, 2016.

- Weaver, S.J.; Salas, E.; King, H.B. Twelve best practices for team training evaluation in health care. Jt. Comm. J. Qual. Patient Saf. 2011, 37, 341–349.

- Liang, D.W.; Moreland, R.; Argote, L. Group versus individual training and group performance: The mediating role of transactive memory. Personal. Soc. Psychol. Bull. 1995, 21, 384–393.

- Brodbeck, F.C.; Greitemeyer, T. Effects of individual versus mixed individual and group experience in rule induction on group member learning and group performance. J. Exp. Soc. Psychol. 2000, 36, 621–648.

- Laughlin, P.R.; Zander, M.L.; Knievel, E.M.; Tan, T.K. Groups perform better than the best individuals on letters-to-numbers problems: Informative equations and effective strategies. J. Personal. Soc. Psychol. 2003, 85, 684–694.

- Dennis, K.A.; Harris, D. Computer-based simulation as an adjunct to a flight training. Int. J. Aviat. Psychol. 1998, 8, 261–276.

- Proctor, M.D.; Panko, M.; Donovan, S.J. Considerations for training team situation awareness and task performance through PC-gamer simulated multi-ship helicopter operations. Int. J. Aviat. Psychol. 2004, 14, 191–205.

- Papargyris, A.; Poulymenakou, A. The constitution of collective memory in virtual game worlds. J. Virtual Worlds Res. 2009, 1, 3–23.

- Baldwin, T.T.; Ford, J.K. Transfer of training: A review and directions for future research. Pers. Psychol. 1988, 41, 63–105.

- Kraiger, K. (Ed.) Decision-based evaluation. In Creating, Implementing, and Maintaining Effective Training and Development: State-of-the-Art Lessons for Practice; Jossey-Bass: San Francisco, CA, USA, 2002; pp. 331–376.

- Bloom, B.S. Taxonomy of Educational Objectives, Handbook; David McKay: New York, NY, USA, 1956.

- Velada, R.; Caetano, A.; Michel, A.; Lyons, B.D.; Kavanagh, M. The effects of training design, individual characteristics and work environment on transfer of training. Int. J. Train. Dev. 2007, 11, 282–294.

- van Knippenberg, D.; De Dreu, C.K.W.; Homan, A.C. Work group diversity and group performance: An integrative model and research agenda. J. Appl. Psychol. 2004, 89, 1008–1022.

- Lewis, K. Measuring transactive memory systems in the field: Scale development and validation. J. Appl. Psychol. 2003, 88, 587–604.

- Tannenbaum, S.I.; Cerasoli, C.P. Do team and individual debriefs enhance performance? A meta-analysis. Hum. Factors 2013, 55, 231–245.

- Peters, V.A.; Vissers, G.A. A simple classification model for debriefing simulation games. Simul. Gaming 2004, 35, 70–84.

- Petranek, C.F.; Corey, S.; Black, R. Three levels of learning in simulations: Participating, debriefing, and journal writing. Simul. Gaming 1992, 23, 174–185.

- NASA Moonbase Alpha Manual. Available online: http://www.nasa.gov/pdf/527465main_Moonbase_Alpha_Manual_Final.pdf (accessed on 27 January 2022).

- Koo, T.K.; Li, M.Y. A guideline of selecting and reporting intraclass correlation coefficients for reliability research. J. Chiropr. Med. 2016, 15, 155–163.

- Sanchez, D.R.; Langer, M. Video Game Pursuit (VGPu) scale development: Designing and validating a scale with implications for game-based learning and assessment. Simul. Gaming 2020, 51, 55–86.

- Earley, P.C.; Northcraft, G.B.; Lee, C.; Lituchy, T.R. Impact of process and outcome feedback on the relation of goal setting to task performance. Acad. Manag. J. 1990, 33, 87–105.

- Epstein, M.L.; Lazarus, A.D.; Calvano, T.B.; Matthews, K.A.; Hendel, R.A.; Epstein, B.B.; Brosvic, G.M. Immediate feedback assessment technique promotes learning and corrects inaccurate first responses. Psychol. Rec. 2002, 52, 187–201.

- Pian-Smith, M.C.; Simon, R.; Minehart, R.D.; Podraza, M.; Rudolph, J.; Walzer, T.; Raemer, D. Teaching residents the two-challenge rule: A simulation-based approach to improve education and patient safety. Simul. Healthc. J. Soc. Simul. Healthc. 2009, 4, 84–91.

- Stajkovic, A.D.; Luthans, F. Behavioral management and task performance in organizations: Conceptual background, meta-analysis, and test of alternative models. Pers. Psychol. 2003, 56, 155–194.

- Bell, B.S.; Kozlowski, S.W. Adaptive guidance: Enhancing self-regulation, knowledge, and performance in technology-based training. Pers. Psychol. 2002, 55, 267–306.

- Gist, M.E.; Stevens, C.K. Effects of practice conditions and supplemental training method on cognitive learning and interpersonal skill generalization. Organ. Behav. Hum. Decis. Processes 1998, 75, 142–169.

- Kozlowski, S.W.; Gully, S.M.; Brown, K.G.; Salas, E.; Smith, E.M.; Nason, E.R. Effects of training goals and goal orientation traits on multidimensional training outcomes and performance adaptability. Organ. Behav. Hum. Decis. Processes 2001, 85, 1–31.

- Stevens, C.K.; Gist, M.E. Effects of self-efficacy and goal-orientation training on negotiation skill maintenance: What are the mechanisms? Pers. Psychol. 1997, 50, 955–978.

- Lee, M.; Faber, R.J. Effects of product placement in on-line games on brand memory: A perspective of the limited-capacity model of attention. J. Advert. 2007, 36, 75–90.

- Kirschner, P.A.; Sweller, J.; Clark, R.E. Why minimal guidance during instruction does not work: An analysis of the failure of constructivist, discovery, problem-based, experiential, and inquiry-based teaching. Educ. Psychol. 2006, 41, 75–86.

- Mayer, R.E.; Griffith, E.; Naftaly, I.; Rothman, D. Increased interestingness of extraneous details leads to decreased learning. J. Exp. Psychol. Appl. 2008, 14, 329–339.

- Sweller, J. Instructional Design in Technical Areas; ACER Press: Camberwell, Australia, 1999.

- deHaan, J.; Reed, W.M.; Kuwada, K. The effect of interactivity with a music video game on second language vocabulary recall. Lang. Learn. Technol. 2010, 14, 74–94.

- Harp, S.F.; Mayer, R.E. The role of interest in learning from scientific text and illustrations: On the distinction between emotional interest and cognitive interest. J. Educ. Psychol. 1997, 89, 92–102.

- Harp, S.F.; Mayer, R.E. How seductive details do their damage: A theory of cognitive interest in science learning. J. Educ. Psychol. 1998, 90, 414–434.

- Brodbeck, F.; Greitemeyer, T. A dynamic model of group performance: Considering the group members’ capacity to learn. Group Processes Intergroup Relat. 2000, 3, 159–182.

- Kirschner, F.; Paas, F.; Kirschner, P.A. Superiority of collaborative learning with complex tasks: A research note on an alternative affective explanation. Comput. Hum. Behav. 2011, 27, 53–57.

- Kirschner, F.; Paas, F.; Kirschner, P.A.; Janssen, J. Differential effects of problem-solving demands on individual and collaborative learning outcomes. Learn. Instr. 2011, 21, 587–599.

- Onrubia, J.; Rochera, M.J.; Engel, A. Promoting individual and group regulated learning in collaborative settings: An experience in Higher Education. Electron. J. Res. Educ. Psychol. 2015, 13, 189–210.

- Maynard, M.T.; Mathieu, J.E.; Gilson, L.L.; Sanchez, D.R.; Dean, M.D. Do I really know you and does it matter? Unpacking the relationship between familiarity and information elaboration in global virtual teams. Group Organ. Manag. 2018, 44, 3–37.

- Henry, R.A. Improving group judgment accuracy: Information sharing and determining the best member. Organ. Behav. Hum. Decis. Processes 1995, 62, 190–197.

- Schultze, T.; Mojzisch, A.; Schulz-Hardt, S. Why groups perform better than individuals at quantitative judgment tasks: Group-to-individual transfer as an alternative to differential weighting. Organ. Behav. Hum. Decis. Processes 2012, 118, 24–36.

- Druckman, D.; Bjork, R.A. In the Mind’s Eye: Enhancing Human Performance; National Academies Press: Washington, DC, USA, 1991.

- Steiner, I.D. Group Process and Productivity; Academic Press: New York, NY, USA, 1972.

- Valacich, J.S.; Dennis, A.R.; Connolly, T. Idea generation in computer-based groups: A new ending to an old story. Organ. Behav. Hum. Decis. Processes 1994, 57, 448–467.

- Brown, R. Group Processes: Dynamics within and between Groups; Blackwell: Oxford, UK, 1988.

- Salas, E.; Bowers, C.A.; Cannon-Bowers, J.A. Military team research: 10 years of progress. Mil. Psychol. 1995, 7, 55–75.

- Hung, W.; Van Eck, R. Aligning problem solving and gameplay: A model for future research and design. In Interdisciplinary Models and Tools for Serious Games: Emerging Concepts and Future Directions; Van Eck, R., Ed.; IGI Global: Hershey, PA, USA, 2010; pp. 227–263.

- Blumenfeld, P.C.; Kempler, T.M.; Krajcik, J.S. Motivation and cognitive engagement in learning environments. In The Cambridge Handbook of the Learning Sciences; Sawyer, R.K., Ed.; Cambridge University Press: Cambridge, NY, USA, 2006; pp. 475–488.

- Clark, R.E. Reconsidering research on learning from media. Rev. Educ. Res. 1983, 53, 445–459.

- Annetta, L.A.; Minogue, J.; Holmes, S.Y.; Cheng, M.T. Investigating the impact of video games on high school students’ engagement and learning about genetics. Comput. Educ. 2009, 53, 74–85.

- Tüzün, H. Multiple motivations framework. In Affective and Emotional Aspects of Human-Computer Interaction: Game-Based and Innovative Learning Approaches; Pivec, M., Ed.; IOS Press: Washington, DC, USA, 2006; pp. 59–92.

- Bandura, A. Social Foundations of Thought and Action: A Social Cognitive Theory; Prentice Hall: Englewood Cliffs, NJ, USA, 1986.

- Krendl, K.A.; Broihier, M. Student responses to computers: A longitudinal study. In Proceedings of the Annual Meeting of the International Communication Association, Chicago, IL, USA, 23–27 May 1991.

- Smith-Jentsch, K.A.; Mathieu, J.E.; Kraiger, K. Investigating linear and interactive effects of shared mental models on safety and efficiency in a field setting. J. Appl. Psychol. 2005, 90, 523–535.

- Gist, M.E.; Stevens, C.K.; Bavetta, A.G. Effects of self-efficacy and post-training intervention on the acquisition and maintenance of complex interpersonal skills. Pers. Psychol. 1991, 44, 837–861.

- Weiner, E.J.; Sanchez, D.R. Cognitive ability in Virtual Reality: Validity evidence for VR game-based assessments. Int. J. Sel. Assess. 2020, 28, 215–236.

- Levine, J.M.; Moreland, R.L. Culture and socialization in work groups. In Perspectives on Socially Shared Cognition; Resnick, L.B., Levine, J.M., Teasley, S.D., Eds.; American Psychological Association: Washington, DC, USA, 1991; pp. 257–279.

- Behdani, B.; Sharif, M.R.; Hemmati, F. A comparison of reading strategies used by English major students in group learning vs. individual learning: Implications & applications. In Proceedings of the International Conference on Literature and Linguistics, Scientific Information Database, Tehran, Iran, 19–20 July 2016.

- Fong, G.T.; Nisbett, R.E. Immediate and delayed transfer of training effects in statistical reasoning. J. Exp. Psychol. Gen. 1991, 120, 34–45.

- Kanawattanachai, P.; Yoo, Y. The impact of knowledge coordination on virtual team performance over time. MIS Q. 2007, 31, 783–808.

- Dyer, J.L. Annotated Bibliography and State-of-the-Art Review of the Field of Team Training as It Relates to Military Teams; Document No. ARI-RN-86-18; Army Research Institute for the Behavioral and Social Sciences: Alexandria, VA, USA, 1986.

- Volet, S.; Vauras, M.; Salo, A.E.; Khosa, D. Individual contributions in student-led collaborative learning: Insights from two analytical approaches to explain the quality of group outcome. Learn. Individ. Differ. 2017, 53, 79–92.

- Clark, R.E. Learning from serious games? Arguments, evidence, and research suggestions. Educ. Technol. 2007, 47, 56–59. Available online: https://www.jstor.org/stable/44429512 (accessed on 28 January 2022).

| Variable | M | SD | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|---|---|
| 1. Age | 19.65 | 3.43 | | | | | | | |
| 2. Sex | 0.64 | 0.48 | 0.08 | | | | | | |
| 3. Game Experience | 2.76 | 1.03 | 0.01 | 0.58 ** | | | | | |
| 4. Rules/Goals Clarity | 0.48 | 0.50 | 0.02 | −0.05 | −0.03 | | | | |
| 5. Human Interaction | 0.71 | 0.45 | −0.07 | 0.03 | 0.02 | −0.01 | | | |
| 6. Mid-Training | 0.31 | 0.19 | −0.07 | 0.23 ** | 0.24 ** | 0.13 ** | −0.29 ** | | |
| 7. Post-Training (Familiar) | 0.24 | 0.13 | −0.15 ** | 0.03 | 0.07 | 0.04 | 0.04 | 0.22 ** | |
| 8. Post-Training (Novel) | 0.31 | 0.23 | −0.01 | 0.30 ** | 0.32 ** | 0.02 | 0.06 | 0.30 ** | 0.23 ** |

| Predictor | B (SE) | F(1, 512) | p | R² |
|---|---|---|---|---|
| Mid-Training Performance | | | | |
| Age | −0.59 (0.23) | 6.77 | 0.01 | 0.01 |
| Sex | 6.28 (1.97) | 10.16 | <0.001 | 0.02 |
| GE | 3.00 (0.92) | 10.64 | <0.001 | 0.02 |
| RG | −5.66 (1.83) | 9.41 | <0.001 | 0.02 |
| HI | 12.29 (2.45) | 55.83 | <0.001 | 0.10 |
| RG × HI | 0.88 (3.40) | 0.07 | 0.80 | <0.01 |
| Post-Training Performance (Familiar Task) | | | | |
| Age | −0.11 (0.28) | 0.16 | 0.69 | <0.01 |
| Sex | 8.09 (2.41) | 11.30 | <0.001 | 0.02 |
| GE | 4.77 (1.12) | 18.01 | <0.001 | 0.03 |
| RG | −4.14 (2.23) | <0.01 | 0.97 | <0.01 |
| HI | −6.76 (2.99) | 1.67 | 0.20 | <0.01 |
| RG × HI | 8.13 (4.16) | 3.82 | 0.05 | 0.01 |
| Post-Training Performance (Novel Task) | | | | |
| Age | −0.55 (0.17) | 10.98 | <0.001 | 0.02 |
| Sex | −0.19 (1.46) | 0.02 | 0.90 | <0.01 |
| GE | 0.98 (0.68) | 2.07 | 0.15 | <0.01 |
| RG | −1.76 (1.35) | 0.44 | 0.51 | <0.01 |
| HI | −1.81 (1.81) | 0.50 | 0.48 | <0.01 |
| RG × HI | 1.85 (2.52) | 0.54 | 0.46 | <0.01 |

Note: GE = game experience; RG = rules/goals clarity; HI = human interaction; RG × HI = interaction between rules/goals clarity and human interaction.
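The regression results give every term a one-degree-of-freedom F test and an R² value, including the rules/goals clarity by human interaction term. A common way to produce per-term tests like these is the drop-one (partial) F test. The full model is fitted, then refitted without one term, and the R² lost is scaled by the full model's residual variance. The plain-Python sketch below shows that computation under stated assumptions and is not the study's analysis code. The helper names `r_squared` and `partial_f_tests` are hypothetical. The eight-participant data set is invented. The interaction is assumed to be the product of 0/1 condition codes. It is also an assumption that the table's R² column matches the R² lost when a term is dropped.

```python
from typing import Dict, List, Sequence, Tuple


def _solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented copy; inputs untouched
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def r_squared(columns: List[Sequence[float]], y: Sequence[float]) -> float:
    """R^2 of an OLS regression of y on the given predictor columns plus an intercept."""
    n = len(y)
    design = [[1.0] + [float(col[i]) for col in columns] for i in range(n)]
    p = len(design[0])
    # Normal equations: (X'X) beta = X'y
    xtx = [[sum(row[r] * row[c] for row in design) for c in range(p)] for r in range(p)]
    xty = [sum(design[i][r] * y[i] for i in range(n)) for r in range(p)]
    beta = _solve(xtx, xty)
    mean_y = sum(y) / n
    ss_tot = sum((v - mean_y) ** 2 for v in y)
    ss_res = sum((y[i] - sum(b * x for b, x in zip(beta, design[i]))) ** 2 for i in range(n))
    return 1.0 - ss_res / ss_tot


def partial_f_tests(
    predictors: Dict[str, Sequence[float]], y: Sequence[float]
) -> Dict[str, Tuple[float, int, float]]:
    """For each term: (F on 1 and n - k - 1 df, residual df, R^2 lost when the term is dropped)."""
    names = list(predictors)
    n, k = len(y), len(names)
    r2_full = r_squared([predictors[name] for name in names], y)
    df_resid = n - k - 1
    results = {}
    for name in names:
        reduced = [predictors[other] for other in names if other != name]
        delta_r2 = r2_full - r_squared(reduced, y)  # squared semipartial correlation of the term
        f_stat = delta_r2 / ((1.0 - r2_full) / df_resid)
        results[name] = (f_stat, df_resid, delta_r2)
    return results


# Hypothetical 0/1 condition codes and scores for eight participants (not study data).
rg = [0, 0, 1, 1, 0, 0, 1, 1]  # rules/goals clarity
hi = [0, 1, 0, 1, 0, 1, 0, 1]  # human interaction
rg_x_hi = [a * b for a, b in zip(rg, hi)]  # interaction term: product of the two codes
score = [10.0, 14.0, 9.0, 16.0, 12.0, 15.0, 8.0, 17.0]

results = partial_f_tests({"RG": rg, "HI": hi, "RG x HI": rg_x_hi}, score)
for term, (f_stat, df, delta_r2) in results.items():
    print(f"{term}: F(1, {df}) = {f_stat:.2f}, R^2 dropped = {delta_r2:.3f}")
```

Every term is compared against the same full model, so all tests share the denominator degrees of freedom n − k − 1, where k is the number of predictors. A constant denominator across rows, as in the reported F values, is consistent with this approach but does not confirm that it was the one used.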

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sanchez, D.R. Videogame-Based Training: The Impact and Interaction of Videogame Characteristics on Learning Outcomes. Multimodal Technol. Interact. 2022, 6, 19. https://doi.org/10.3390/mti6030019
