Emotion-Aware In-Car Feedback: A Comparative Study

Abstract

1. Introduction

- RQ1: Impact on Emotions: To what extent can in-car feedback systems influence drivers’ emotional states?

- RQ2: Feedback Preferences: What are the differences in the effectiveness and user preference of various feedback modalities (light, sound, vibration)?

- RQ3: Safety of Testing: Is it safe to conduct road testing of such in-car interventions, and what measures can be taken to ensure safety during testing?

- Design Guidelines for Tailored In-Car Feedback: This study provides guidelines on creating personalised in-car feedback systems that adapt to a driver’s emotional state (positive, neutral, or negative).

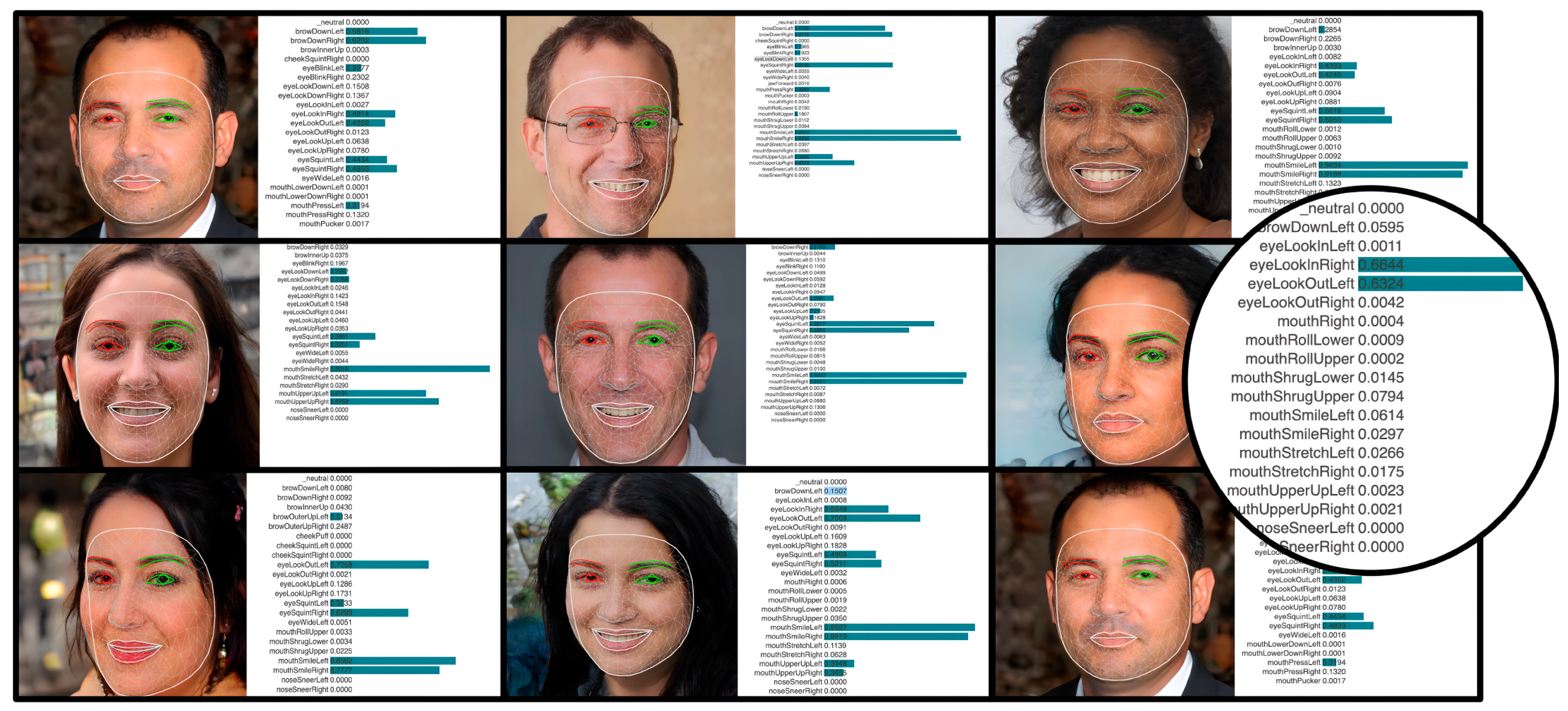

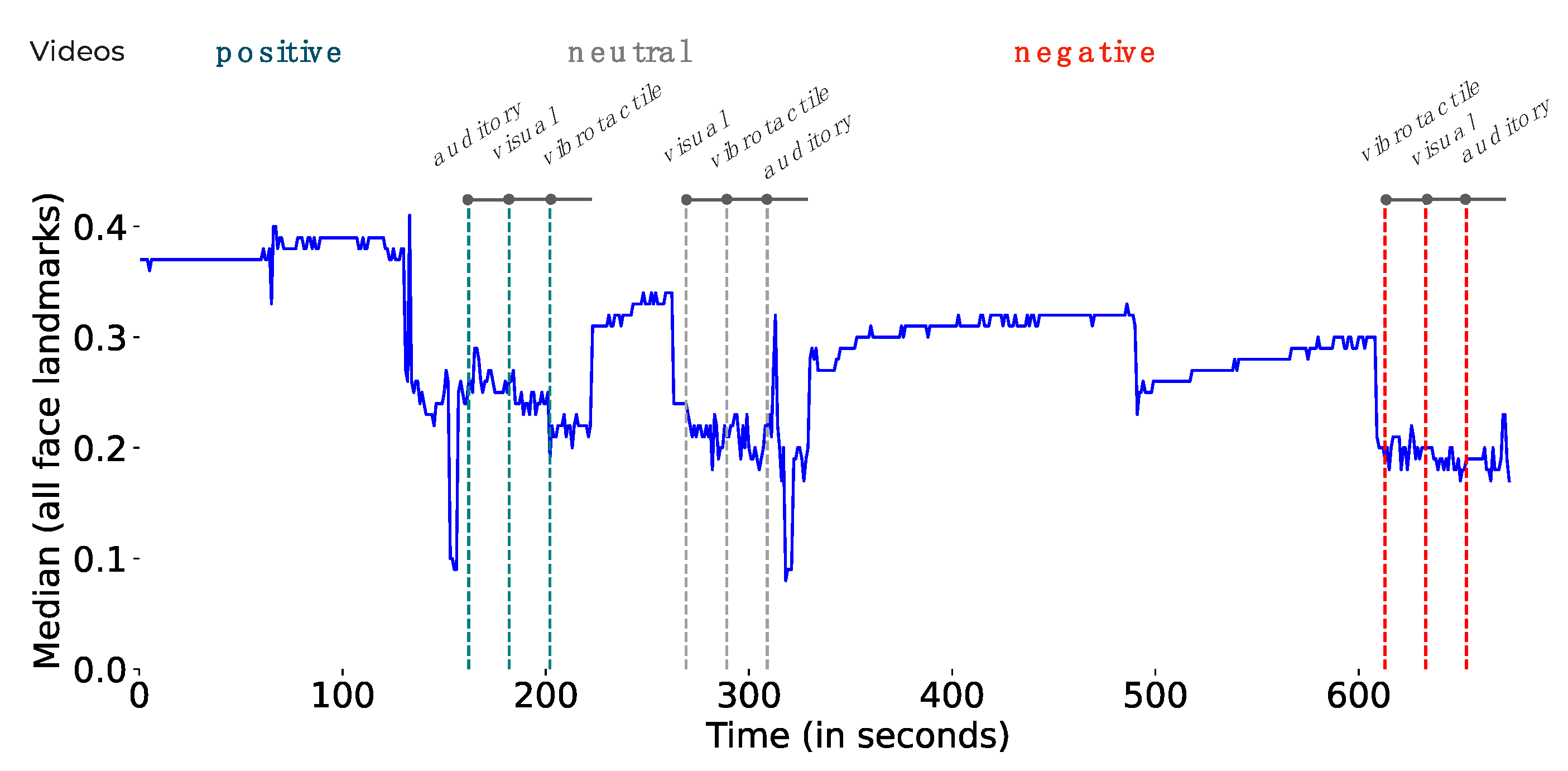

- Emotion Classification System using Facial Landmark Data: We developed a machine learning classification system that utilises only facial landmark data to categorise drivers’ emotional states as positive, neutral, or negative, enabling unobtrusive emotion detection that is accurate in user-dependent settings.

- Dataset with Labeled Face Landmark Annotations: To facilitate future research in this area, we created a dataset containing fully labeled face landmark annotations, which can be used to improve emotion detection algorithms and to develop and evaluate more effective in-car feedback systems.

- User Study with Video Stimuli: Finally, we consider the user study itself, which used video stimuli to elicit emotions, a valuable contribution. Participants were more immersed in the presented moods than we had anticipated, underscoring the relevance of such investigations.
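The classification contribution above relies on facial landmark coordinates alone. This section does not say how the landmarks were preprocessed, so the following is a minimal sketch under an assumption rather than the authors' pipeline. A common step is to centre each frame's landmarks on their centroid and divide by their root-mean-square distance from it. This makes the feature vector independent of where the face sits in the camera image and how far it is from the lens. The function name `normalize_landmarks` and the plain (x, y) tuple input are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def normalize_landmarks(points: Sequence[Point]) -> List[float]:
    """Hypothetical preprocessing step (not the study's documented method).

    Centres 2D facial landmarks on their centroid, scales them to unit
    root-mean-square distance from that centroid, and flattens them into
    [x0, y0, x1, y1, ...]. Translation and scale are removed; head rotation
    is NOT compensated.
    """
    if len(points) < 2:
        raise ValueError("need at least two landmarks")
    n = len(points)
    cx = sum(x for x, _ in points) / n
    cy = sum(y for _, y in points) / n
    centred = [(x - cx, y - cy) for x, y in points]
    rms = math.sqrt(sum(x * x + y * y for x, y in centred) / n)
    if rms == 0.0:
        raise ValueError("all landmarks coincide; cannot normalise scale")
    features: List[float] = []
    for x, y in centred:
        features.extend((x / rms, y / rms))
    return features


# Usage: the same face, shifted and enlarged in the frame, yields the same features.
face = [(100.0, 120.0), (140.0, 120.0), (120.0, 160.0)]
moved = [(2 * x + 50, 2 * y - 30) for x, y in face]
a = normalize_landmarks(face)
b = normalize_landmarks(moved)
print(all(abs(p - q) < 1e-9 for p, q in zip(a, b)))  # prints True
```

Normalising per frame like this keeps the classifier focused on facial shape, which is what varies with expression, rather than on the driver's seating position.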

2. Related Work

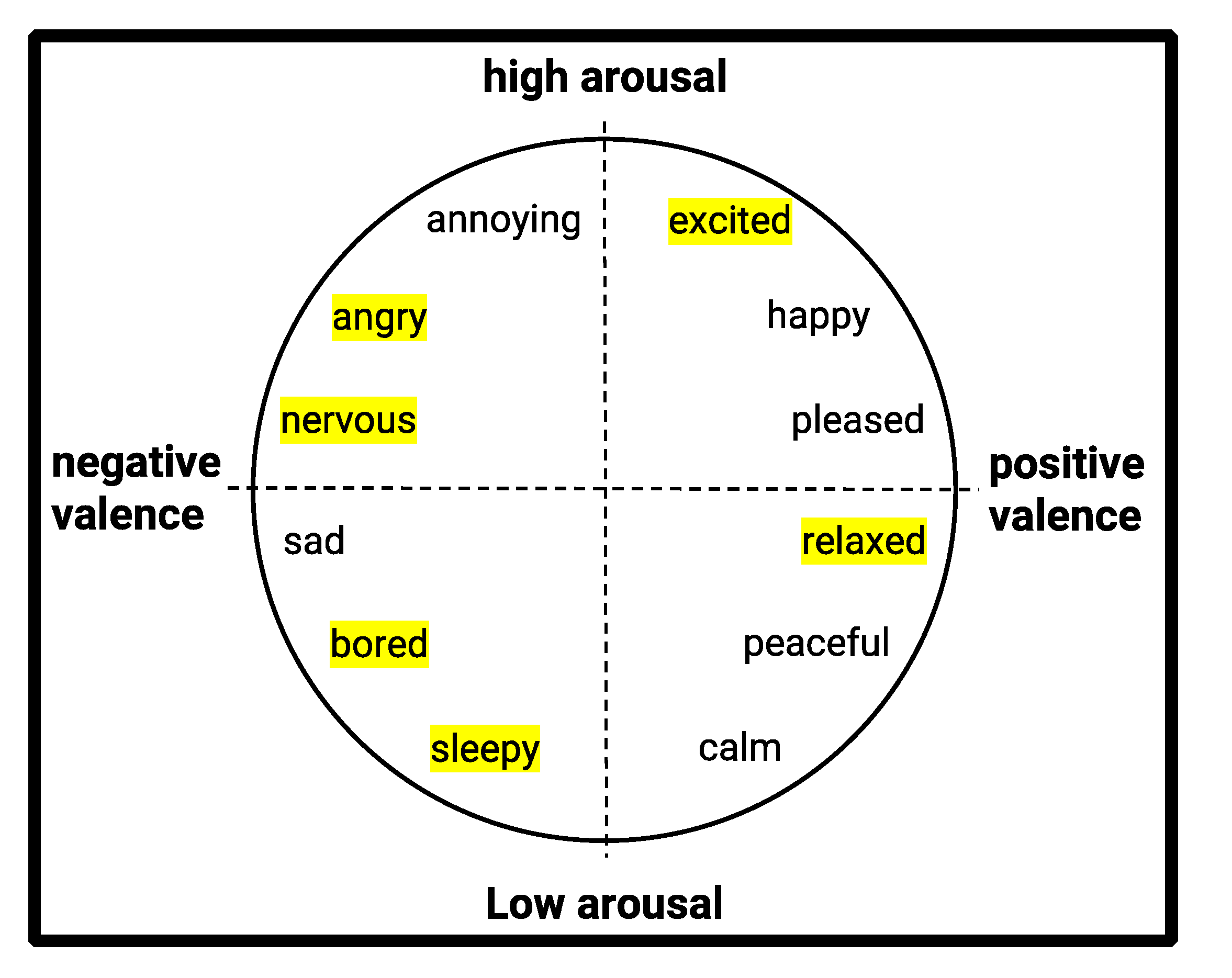

2.1. Theoretical Models for Understanding Emotions

2.2. In-Car Affective Computing

3. Concept

4. User Study

4.1. Apparatus

- Visual Feedback: This part of the setup featured Philips Hue White and Color Ambiance light strips (1800 lumens, 20 W) mounted in front of the driving simulator’s windshield. The lights were connected to a Philips Hue Bridge and controlled through the Philips Hue API (https://github.com/nirantak/hue-api, accessed on 1 May 2024).

- Audio Feedback: The setup also included multimedia speakers placed on each side of the participant, connected to a Windows 10 PC via a 3.5 mm audio jack. These speakers were controlled through the PC’s audio settings and custom Python scripts.

- Vibrotactile Feedback: A massage seat (https://www.amazon.com/dp/B0CM3H474R/, accessed on 1 May 2024) was fixed onto the bucket seat frame of the driving simulator. It contained ten massage motors (four vibrotactile motors for the lower torso and six for the upper torso) and a heating function. The seat operated on a 12 V DC adapter and featured a built-in control panel for manually adjusting massage modes and intensities.
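The Visual Feedback setup drives the light strips through the Hue Bridge. The cited `hue-api` Python library is not reproduced here. Instead, the sketch below builds the request that the bridge's v1 REST interface accepts for changing a light's colour: a `PUT` to `/api/<username>/lights/<id>/state` with `on`, `hue`, `sat`, and `bri` fields. The bridge address, the authorised `username`, and the light ID are placeholders you would obtain from your own bridge. The function name is hypothetical.

```python
import json
import urllib.request


def build_light_state_request(bridge_ip: str, username: str, light_id: int,
                              hue: int, sat: int, bri: int) -> urllib.request.Request:
    """Hypothetical helper: build (but do not send) a Hue v1 REST request that
    switches one light on and sets its colour. Value ranges follow the v1
    light-state fields: hue 0..65535, sat 0..254, bri 1..254."""
    if not 0 <= hue <= 65535:
        raise ValueError("hue must be in 0..65535")
    if not 0 <= sat <= 254:
        raise ValueError("sat must be in 0..254")
    if not 1 <= bri <= 254:
        raise ValueError("bri must be in 1..254")
    body = json.dumps({"on": True, "hue": hue, "sat": sat, "bri": bri}).encode("utf-8")
    url = f"http://{bridge_ip}/api/{username}/lights/{light_id}/state"
    return urllib.request.Request(
        url, data=body, method="PUT",
        headers={"Content-Type": "application/json"},
    )


# Usage with placeholder bridge values and the orange used for positive emotions (Section 4.3).
req = build_light_state_request("192.168.0.10", "YOUR-BRIDGE-USERNAME", 1,
                                hue=5000, sat=254, bri=254)
print(req.get_method(), req.full_url)
# Sending it requires a reachable bridge, e.g.:
# with urllib.request.urlopen(req, timeout=2) as resp:
#     print(resp.read().decode())
```

Building the request separately from sending it lets the colour logic be checked without a bridge on the network.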

4.2. Participants

4.3. Design

- Positive Emotion: Visual Feedback with warm, vibrant colours, e.g., orange (hue 5000, saturation 254, brightness 254; RGB (255, 130, 0)); Auditory Feedback with upbeat, joyful music; and Vibrotactile Feedback with a subtle vibration. Warm colours such as orange were chosen for the Visual Feedback because studies link them to positive emotions and to feelings of warmth and excitement [44]. Upbeat, joyful music was selected to complement this Visual Feedback, as research suggests that music can influence emotional states [45,46]. The Vibrotactile Feedback was inspired by the concept of “haptic empathy” [42], which holds that vibrotactile feedback can convey emotional meaning. For positive feedback, a subtle vibration was selected: only one of the lower-torso motors was activated, at the lowest device-specific speed.

- Neutral Emotion: Visual Feedback with a soft, neutral colour, e.g., blue (hue 46,920, saturation 254, brightness 254; RGB (0, 143, 255)), chosen to create a calming atmosphere [44]; Auditory Feedback with relaxing, ambient music, chosen to maintain the neutral emotion; and Vibrotactile Feedback with mild vibration intensity (only two of the lower-torso motors were active, at an intermediate device-specific speed).

- Negative Emotion: Visual Feedback with a cool, soothing colour, e.g., green (hue 25,500, saturation 254, brightness 254; RGB (0, 255, 128)), chosen to help regulate negative emotions or shift this state towards a neutral one; Auditory Feedback with slow, calming music; and Vibrotactile Feedback with an intermittent, pulsating vibration pattern (a poke effect, with all ten motors running simultaneously at the maximum device-specific speed). This strong, pulsating vibration was used to grab attention and disrupt the negative state.
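The three conditions above amount to a lookup from the detected emotion to one setting per modality. The following is a minimal sketch that encodes them as data, using the colour parameters and motor counts stated above. The class and function names, the speed labels, and the `pulsating` flag are illustrative assumptions, not the study's software. The flag is set only where the text describes a pulsating pattern.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class FeedbackConfig:
    colour: str
    hue: int                  # Philips Hue hue value (0..65535)
    sat: int                  # Philips Hue saturation (0..254)
    bri: int                  # Philips Hue brightness (1..254)
    rgb: Tuple[int, int, int]
    music: str
    active_motors: int        # number of the seat's ten massage motors switched on
    motor_speed: str          # device-specific speed level (label is illustrative)
    pulsating: bool           # True only for the pattern the text calls pulsating


# Values transcribed from the Positive/Neutral/Negative Emotion descriptions.
FEEDBACK: Dict[str, FeedbackConfig] = {
    "positive": FeedbackConfig("orange", 5000, 254, 254, (255, 130, 0),
                               "upbeat, joyful", 1, "lowest", False),
    "neutral": FeedbackConfig("blue", 46920, 254, 254, (0, 143, 255),
                              "relaxing, ambient", 2, "intermediate", False),
    "negative": FeedbackConfig("green", 25500, 254, 254, (0, 255, 128),
                               "slow, calming", 10, "maximum", True),
}


def feedback_for(emotion: str) -> FeedbackConfig:
    """Hypothetical lookup: return the feedback configuration for a classified state."""
    try:
        return FEEDBACK[emotion.strip().lower()]
    except KeyError:
        raise ValueError(f"unknown emotion class: {emotion!r}") from None


cfg = feedback_for("Negative")
print(cfg.colour, cfg.active_motors, cfg.motor_speed)  # prints: green 10 maximum
```

Keeping the mapping as data rather than as branching logic makes it straightforward to substitute per-driver settings, in line with the personalization recommendation (D3).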

4.4. Data Collection and Analysis

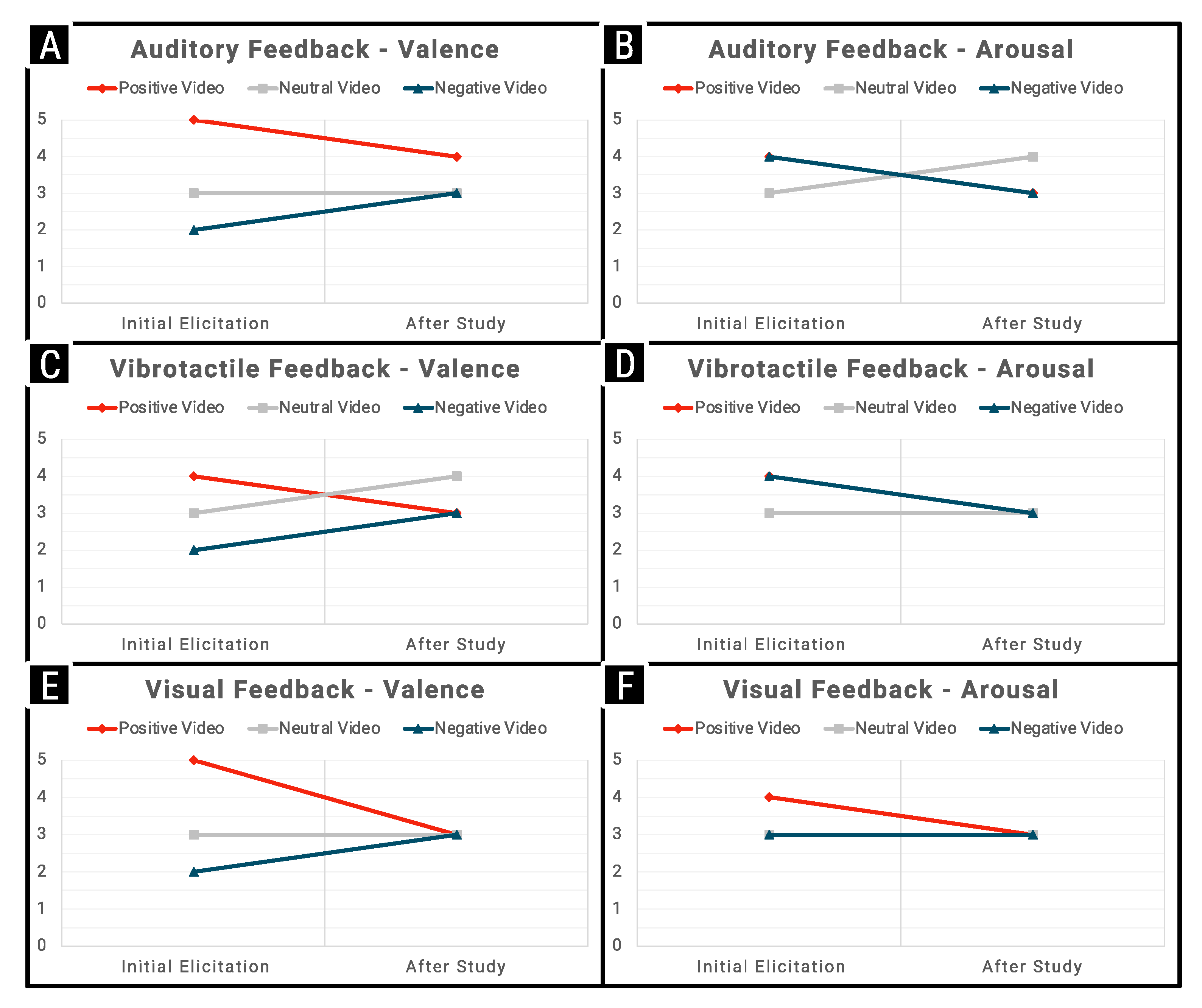

5. Results

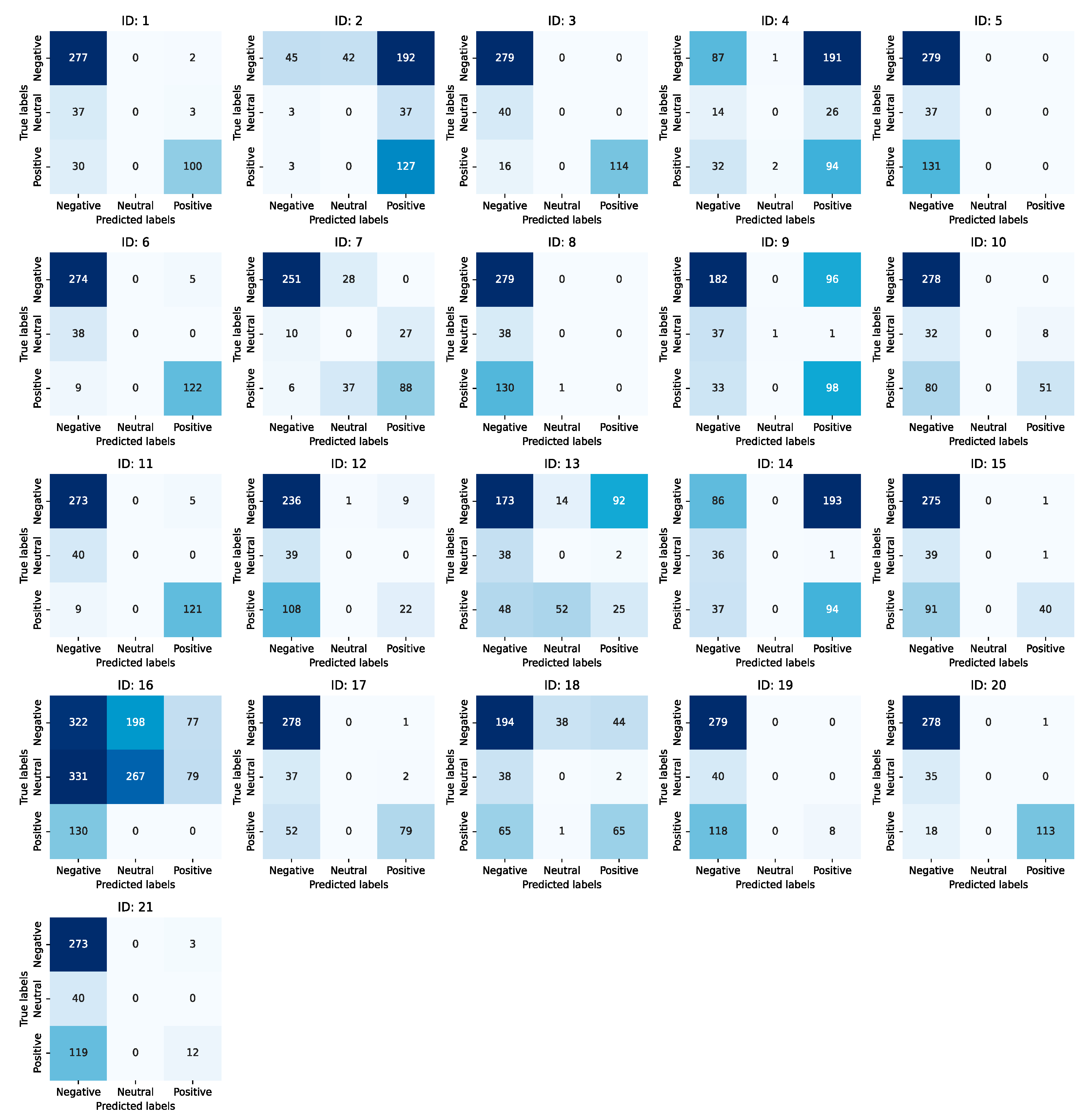

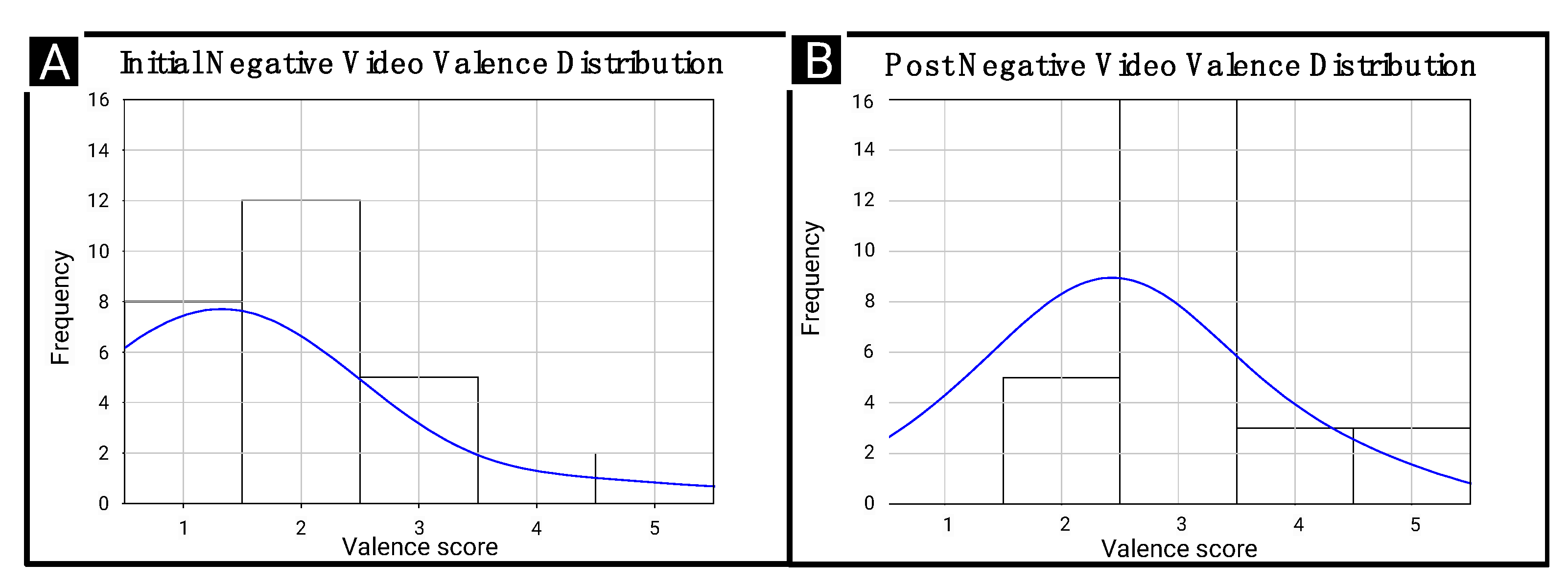

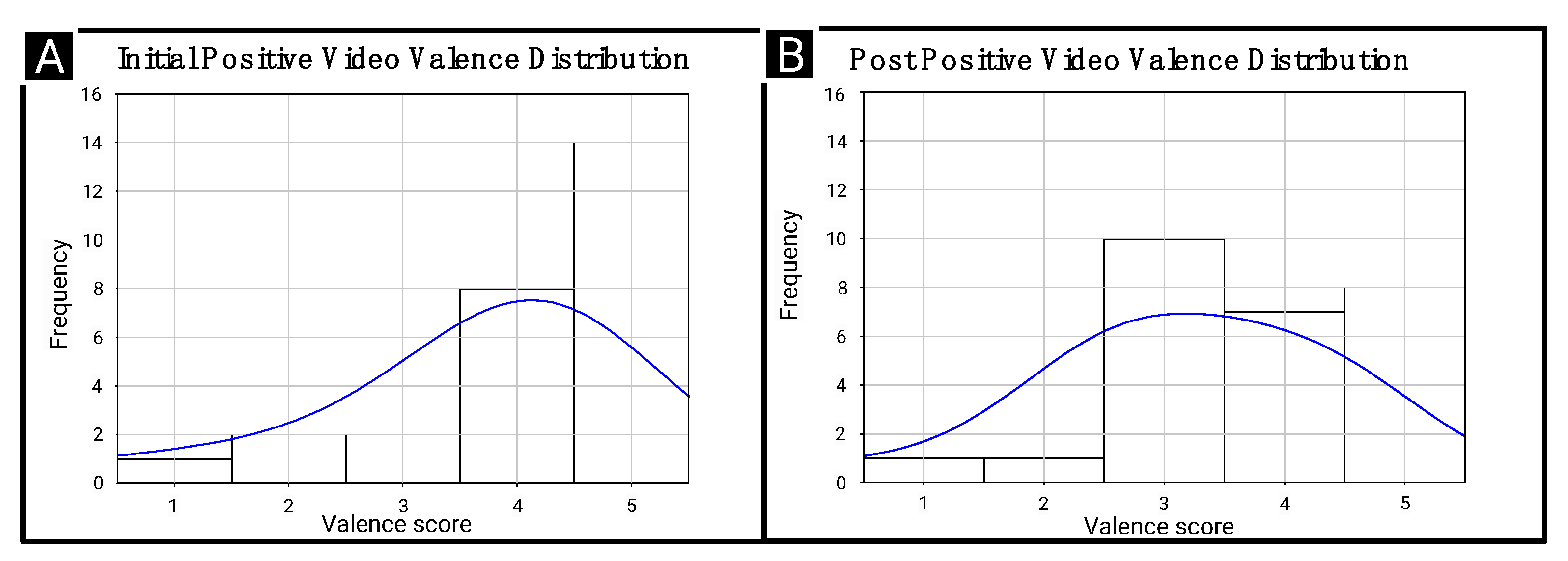

5.1. Emotional Sensing

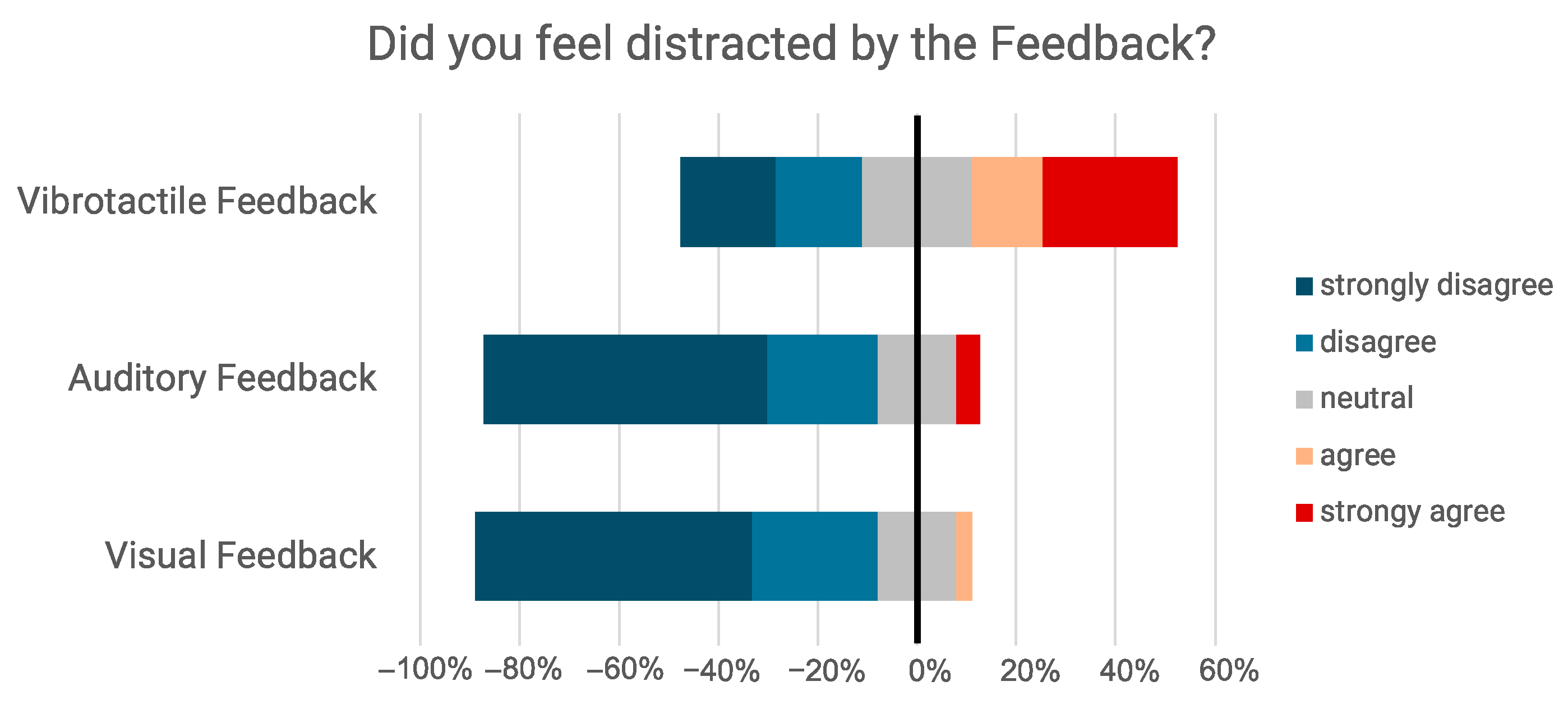

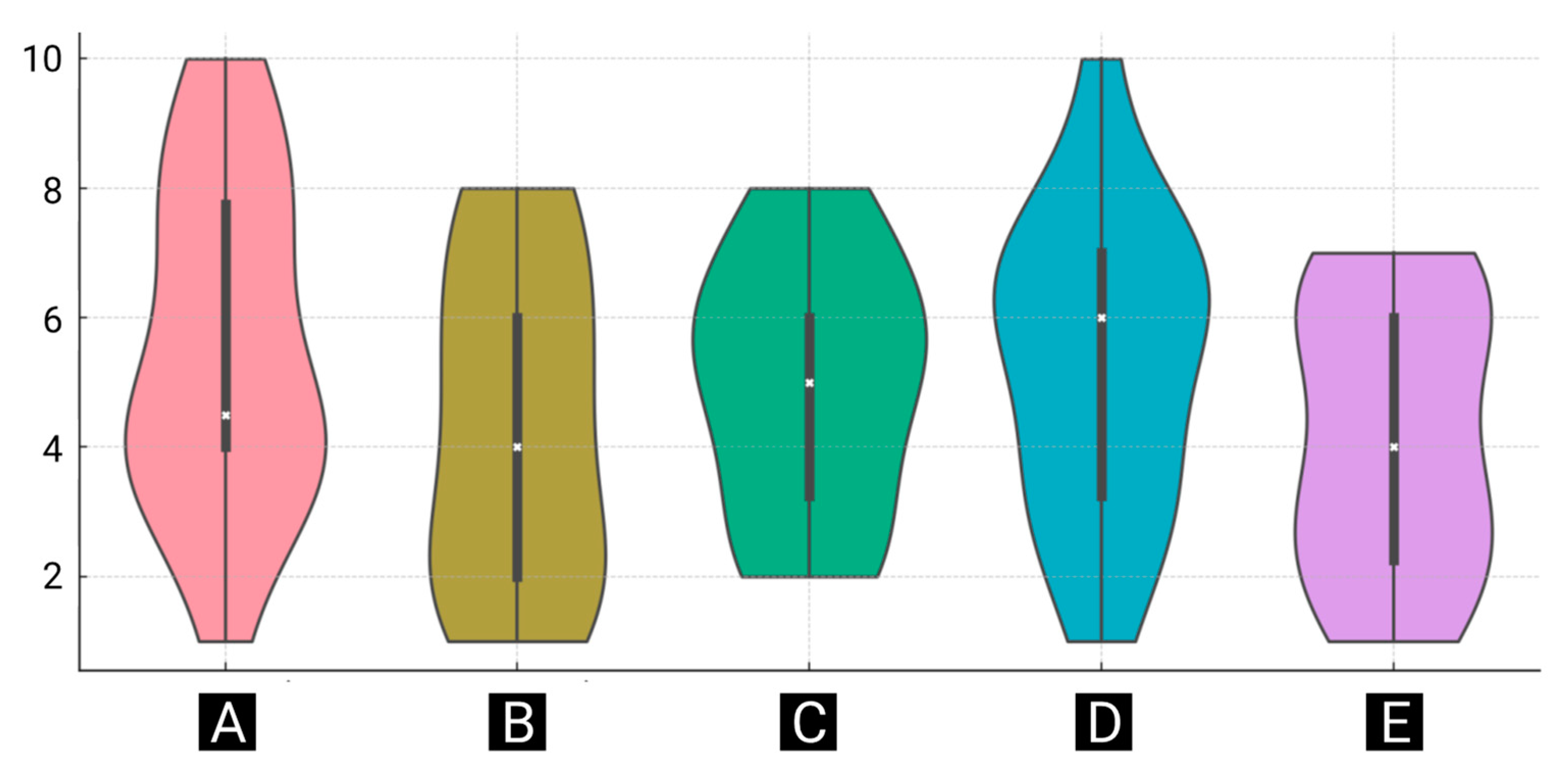

5.2. Spotting the Right Feedback and Distraction

5.3. Qualitative Results

6. Emotion Classification via Machine Learning

6.1. Input Features

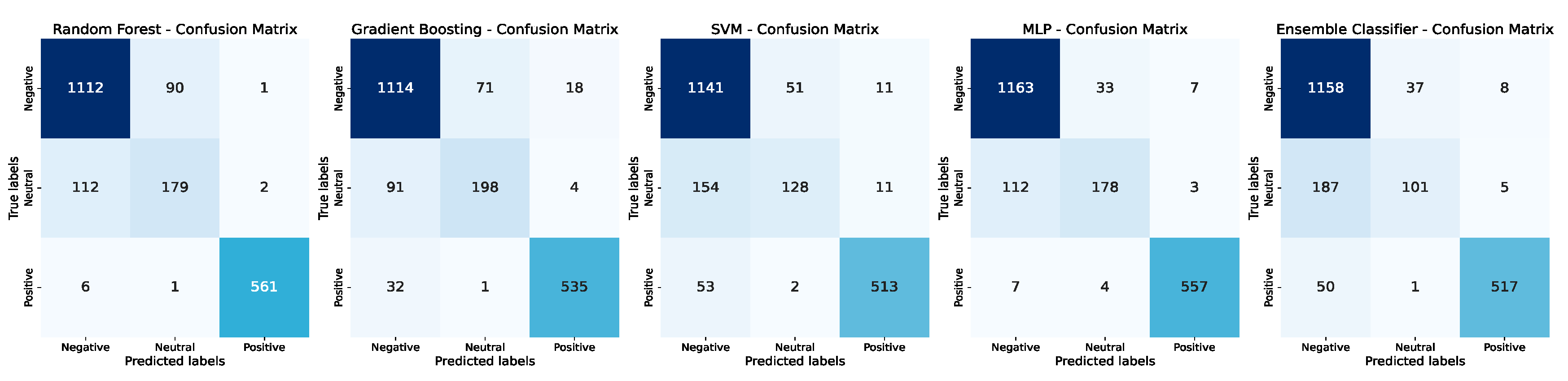

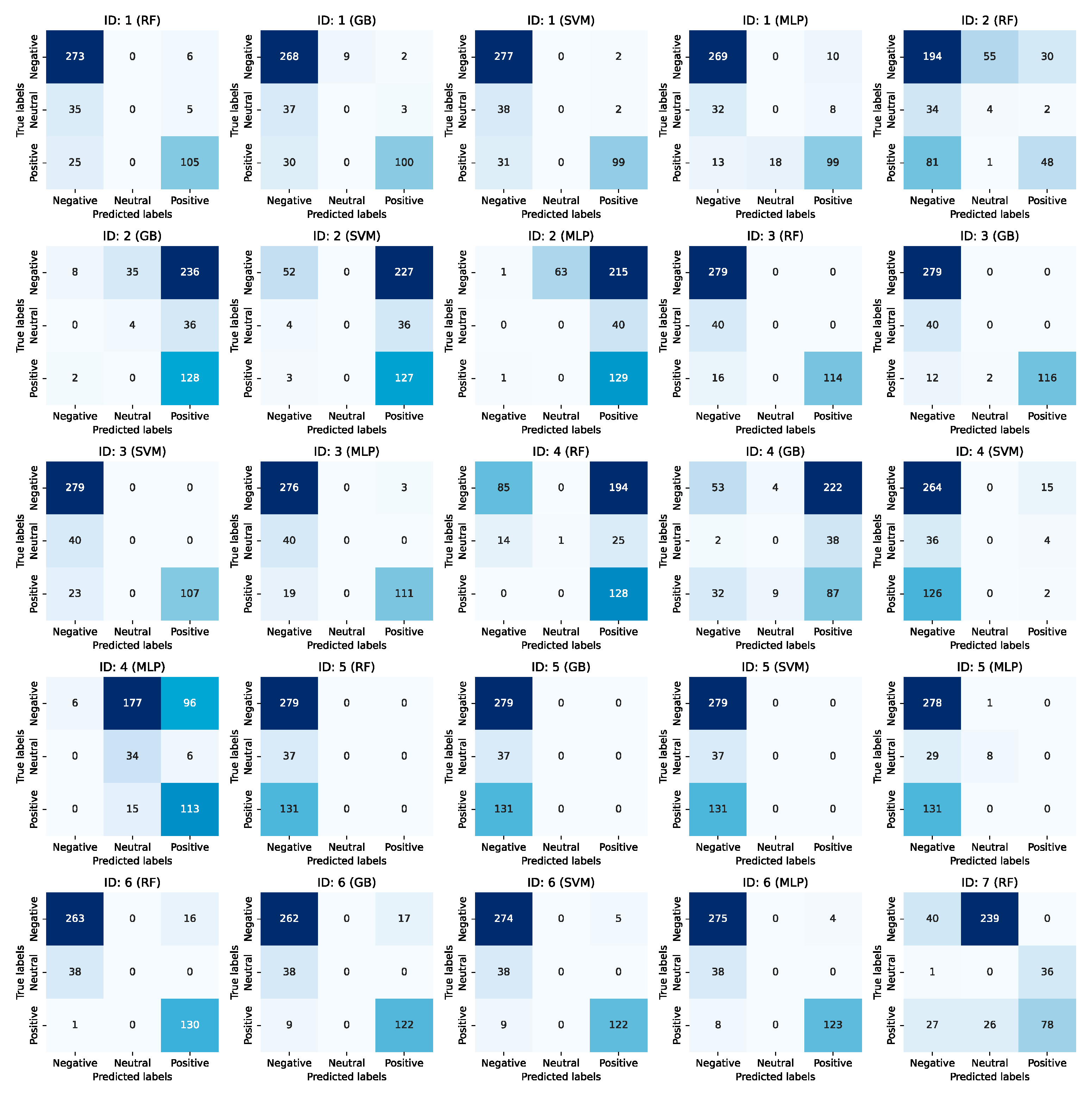

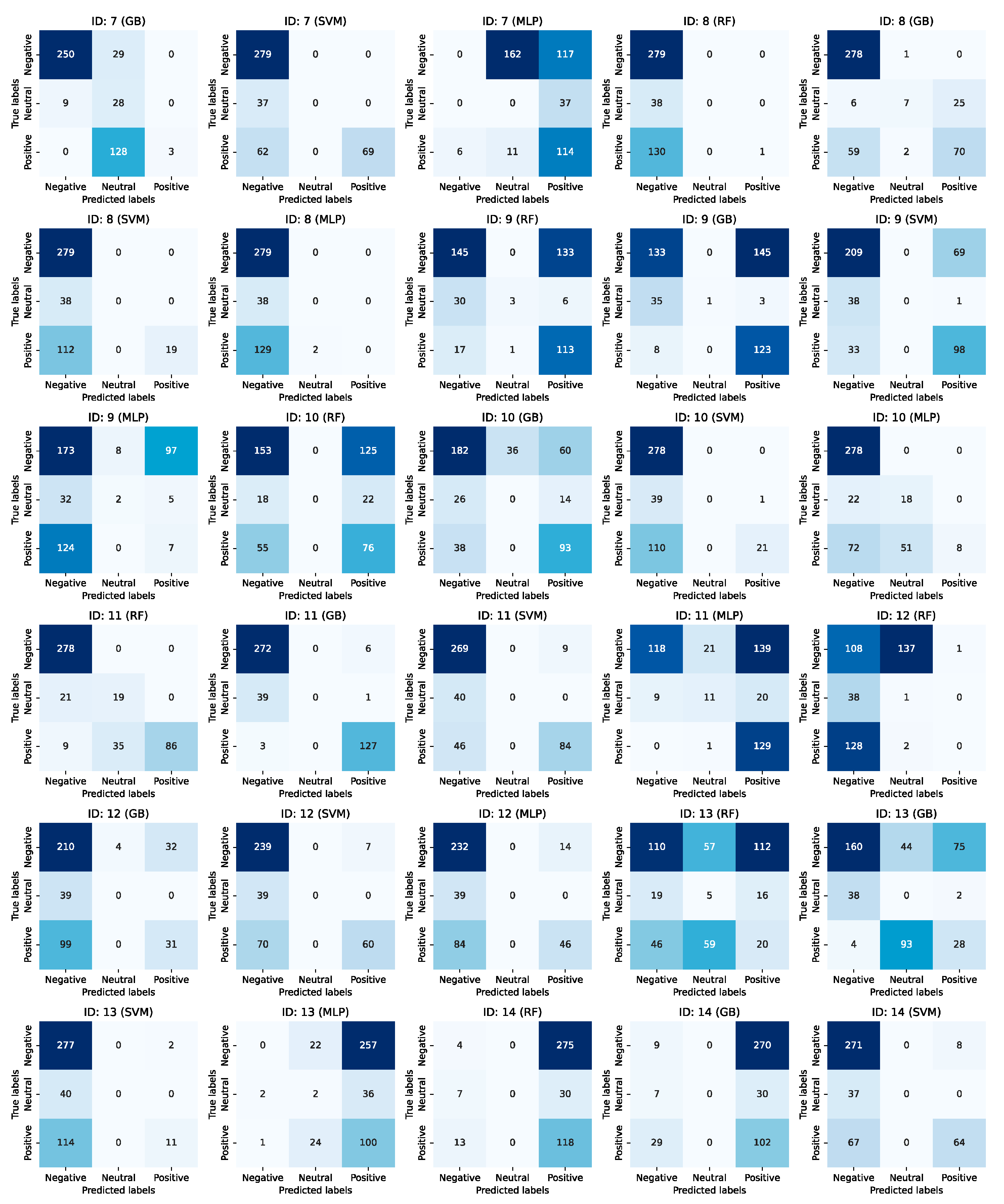

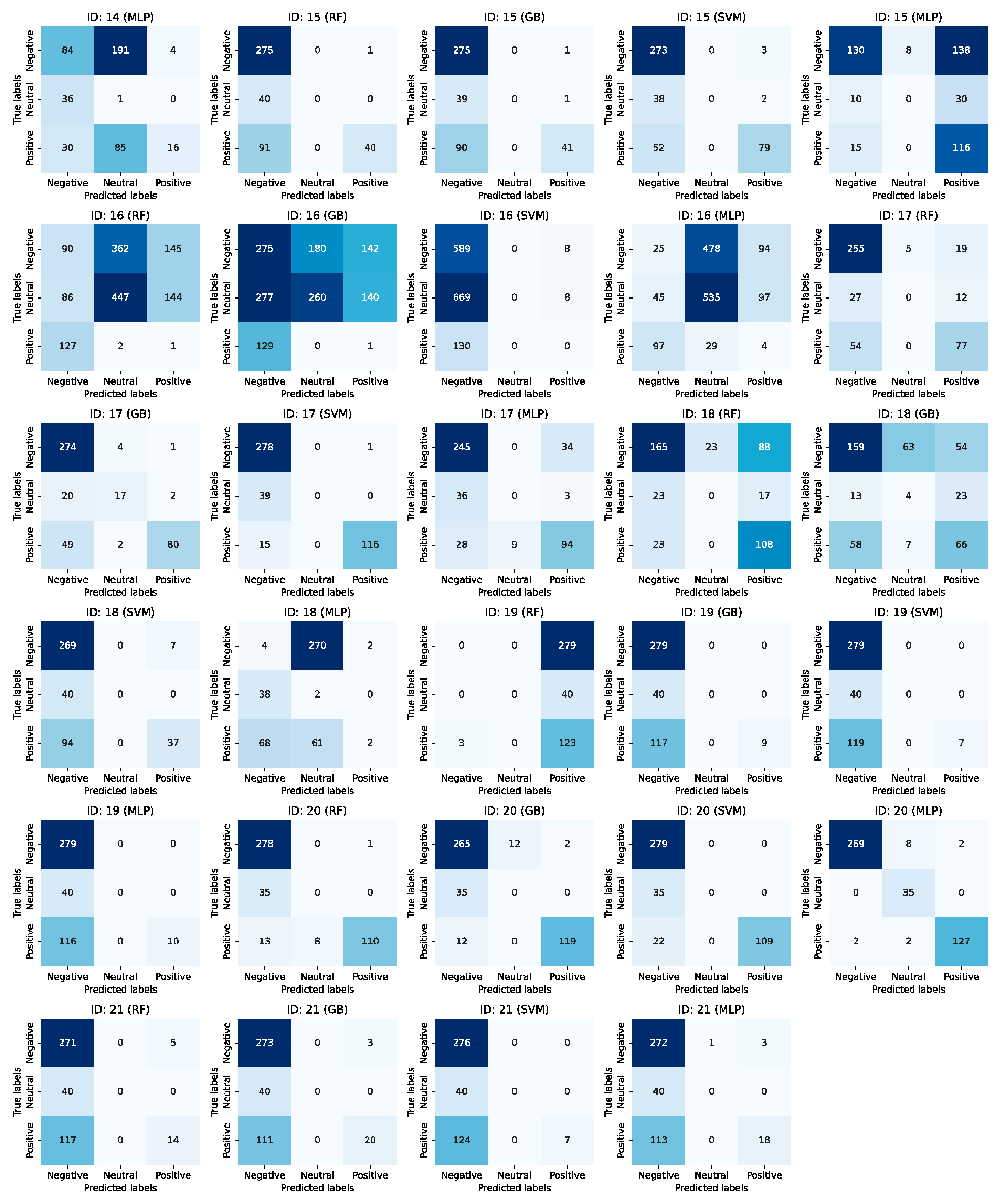

6.2. Model Training and Testing: Elicitation Phase

6.3. Model Testing: Feedback Phase

7. Design Recommendations

- D1 (Multimodal Feedback): We propose combining visual, auditory, and vibrotactile feedback to cater to individual preferences and enhance the overall effectiveness of the system. Participants in the study had varying preferences for feedback modalities, so a multimodal approach ensures a more inclusive and personalized experience.

- D2 (Adaptive Feedback According to Emotional State): We should design the feedback system to adapt to the driver’s emotional state in real time: calming, soothing feedback with cool colors and relaxing music for negative emotions; soft, neutral colors and ambient music to maintain balance in neutral states; and warm, vibrant colors and upbeat music to enhance positive emotions. The study showed that adaptive feedback can significantly enhance the driver’s emotional well-being.

- D3 (Personalization Options): We should allow drivers to customize the feedback to their preferences, for example by adjusting the intensity of vibrotactile feedback or selecting from various music genres.

- D4 (Unobtrusive and Non-distracting Feedback): We should ensure that the feedback is subtle and does not distract or overwhelm the driver, which is critical for safety. Some participants in the study, for instance, found strong vibrotactile feedback distracting. The feedback should therefore be unobtrusive and must not interfere with the primary task of driving.

- D5 (Context-aware Feedback): We should also consider the driving context when providing feedback. The study indicated that the effectiveness of feedback modalities can vary with factors such as road conditions, traffic, and time of day. In heavy traffic or other challenging conditions, we should prioritize less distracting feedback, such as gentle vibrotactile cues or soft ambient lighting.

- D6 (Continuous Improvement through Machine Learning): Finally, we should use machine learning techniques to continuously improve the emotion recognition model and the feedback system. The study demonstrated the potential of classifying emotional states from facial landmark data with machine learning algorithms; by collecting more diverse training data and exploring advanced feature engineering, the system can become more robust and accurate in recognizing emotions and selecting appropriate feedback.
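Recommendations D1 to D5 can be read as a small selection policy. It starts from the emotion-specific feedback (D2), restricts it to the modalities and intensity the driver has chosen (D1, D3), and damps it when driving demand is high (D4, D5). The sketch below is one hypothetical way to express that. The 0 to 3 vibration scale, the `high_demand` flag, and the cap at a subtle vibration are assumptions made for illustration, not values from the study.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Feedback:
    light: bool
    music: bool
    vibration: int  # illustrative scale: 0 off, 1 subtle, 2 mild, 3 strong pulsating


@dataclass(frozen=True)
class Preferences:
    light: bool = True
    music: bool = True
    max_vibration: int = 3


# D2: emotion-specific defaults (vibration levels follow the study's subtle/mild/strong order).
DEFAULTS = {
    "positive": Feedback(light=True, music=True, vibration=1),
    "neutral": Feedback(light=True, music=True, vibration=2),
    "negative": Feedback(light=True, music=True, vibration=3),
}

GENTLE_VIBRATION = 1  # assumed cap under high driving demand (hypothetical)


def select_feedback(emotion: str, prefs: Preferences, high_demand: bool) -> Feedback:
    """Hypothetical policy combining D1-D5; not the study's implementation."""
    if emotion not in DEFAULTS:
        raise ValueError(f"unknown emotion class: {emotion!r}")
    base = DEFAULTS[emotion]
    # D1 + D3: keep only the modalities the driver enabled, within their intensity limit.
    chosen = Feedback(
        light=base.light and prefs.light,
        music=base.music and prefs.music,
        vibration=min(base.vibration, prefs.max_vibration),
    )
    # D4 + D5: in demanding traffic, fall back to at most a gentle vibration.
    if high_demand:
        chosen = replace(chosen, vibration=min(chosen.vibration, GENTLE_VIBRATION))
    return chosen


print(select_feedback("negative", Preferences(music=False), high_demand=True))
# Feedback(light=True, music=False, vibration=1)
```

Applying the safety cap last means no personal preference can raise the intensity above what the driving context allows.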

8. Discussion

8.1. Establishing a Systematic Feedback Approach

8.2. Alignment with Previous Research

8.3. Addressing Research Questions

8.4. Limitations of the Study

9. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

References

- Staiano, J.; Menéndez, M.; Battocchi, A.; De Angeli, A.; Sebe, N. UX_Mate: From Facial Expressions to UX Evaluation. In Proceedings of the Designing Interactive Systems Conference, Newcastle Upon Tyne, UK, 11–15 June 2012; ACM: New York, NY, USA, 2012; pp. 741–750.

- Xiao, H.; Li, W.; Zeng, G.; Wu, Y.; Xue, J.; Zhang, J.; Li, C.; Guo, G. On-Road Driver Emotion Recognition Using Facial Expression. Appl. Sci. 2022, 12, 807.

- Kosch, T.; Hassib, M.; Reutter, R.; Alt, F. Emotions on the Go: Mobile Emotion Assessment in Real-Time Using Facial Expressions. In Proceedings of the International Conference on Advanced Visual Interfaces, Salerno, Italy, 28 September–2 October 2020; ACM: New York, NY, USA, 2020; pp. 1–9.

- Antoniou, N.; Katsamanis, A.; Giannakopoulos, T.; Narayanan, S. Designing and Evaluating Speech Emotion Recognition Systems: A Reality Check Case Study with IEMOCAP. In Proceedings of the ICASSP 2023—2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; pp. 1–5.

- Hashem, A.; Arif, M.; Alghamdi, M. Speech Emotion Recognition Approaches: A Systematic Review. Speech Commun. 2023, 154, 102974.

- Tscharn, R.; Latoschik, M.E.; Löffler, D.; Hurtienne, J. “Stop over There”: Natural Gesture and Speech Interaction for Non-Critical Spontaneous Intervention in Autonomous Driving. In Proceedings of the 19th ACM International Conference on Multimodal Interaction, Glasgow, UK, 13–17 November 2017; pp. 91–100.

- Dobbins, C.; Fairclough, S. Detecting Negative Emotions during Real-Life Driving via Dynamically Labelled Physiological Data. In Proceedings of the 2018 IEEE International Conference on Pervasive Computing and Communications Workshops (PerCom Workshops), Athens, Greece, 19–23 March 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 830–835.

- Healey, J.A.; Picard, R.W. Detecting Stress during Real-World Driving Tasks Using Physiological Sensors. IEEE Trans. Intell. Transp. Syst. 2005, 6, 156–166.

- Zepf, S.; Dittrich, M.; Hernandez, J.; Schmitt, A. Towards Empathetic Car Interfaces: Emotional Triggers While Driving. In Proceedings of the Extended Abstracts of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019; ACM: New York, NY, USA, 2019; pp. 1–6.

- Damasio, A.R. Emotions and Feelings; Cambridge University Press: Cambridge, UK, 2004; Volume 5, pp. 49–57.

- Wagener, N.; Niess, J.; Rogers, Y.; Schöning, J. Mood Worlds: A Virtual Environment for Autonomous Emotional Expression. In Proceedings of the CHI Conference on Human Factors in Computing Systems, New Orleans, LA, USA, 29 April–5 May 2022; ACM: New York, NY, USA, 2022; pp. 1–16.

- Russell, J.A. A Circumplex Model of Affect. J. Pers. Soc. Psychol. 1980, 39, 1161–1178.

- Ekkekakis, P.; Russell, J.A. The Measurement of Affect, Mood, and Emotion: A Guide for Health-Behavioral Research, 1st ed.; Cambridge University Press: Cambridge, UK, 2013; ISBN 978-1-107-64820-3.

- Ayoub, J.; Zhou, F.; Bao, S.; Yang, X.J. From Manual Driving to Automated Driving: A Review of 10 Years of AutoUI. In Proceedings of the 11th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Utrecht, The Netherlands, 21–25 September 2019; ACM: New York, NY, USA, 2019; pp. 70–90.

- Mehrabian, A.; Russell, J.A. An Approach to Environmental Psychology; MIT Press: Cambridge, MA, USA, 1974; ISBN 0-262-13090-4.

- Plutchik, R. The Nature of Emotions: Human Emotions Have Deep Evolutionary Roots, a Fact That May Explain Their Complexity and Provide Tools for Clinical Practice. Am. Sci. 2001, 89, 344–350.

- Bradley, M.M.; Greenwald, M.K.; Petry, M.C.; Lang, P.J. Remembering Pictures: Pleasure and Arousal in Memory. J. Exp. Psychol. Learn. Mem. Cogn. 1992, 18, 379–390.

- Watson, D.; Clark, L.A.; Tellegen, A. Development and Validation of Brief Measures of Positive and Negative Affect: The PANAS Scales. J. Pers. Soc. Psychol. 1988, 54, 1063–1070.

- Bradley, M.M.; Lang, P.J. Measuring Emotion: The Self-Assessment Manikin and the Semantic Differential. J. Behav. Ther. Exp. Psychiatry 1994, 25, 49–59.

- Dillen, N.; Ilievski, M.; Law, E.; Nacke, L.E.; Czarnecki, K.; Schneider, O. Keep Calm and Ride Along: Passenger Comfort and Anxiety as Physiological Responses to Autonomous Driving Styles. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; ACM: New York, NY, USA, 2020; pp. 1–13.

- Hayashi, E.C.S.; Posada, J.E.G.; Maike, V.R.M.L.; Baranauskas, M.C.C. Exploring New Formats of the Self-Assessment Manikin in the Design with Children. In Proceedings of the 15th Brazilian Symposium on Human Factors in Computing Systems, São Paulo, Brazil, 4–7 October 2016; ACM: New York, NY, USA, 2016; pp. 1–10.

- Wampfler, R.; Klingler, S.; Solenthaler, B.; Schinazi, V.R.; Gross, M.; Holz, C. Affective State Prediction from Smartphone Touch and Sensor Data in the Wild. In Proceedings of the CHI Conference on Human Factors in Computing Systems, New Orleans, LA, USA, 29 April–5 May 2022; ACM: New York, NY, USA, 2022; pp. 1–14.

- Ma, Z.; Mahmoud, M.; Robinson, P.; Dias, E.; Skrypchuk, L. Automatic Detection of a Driver’s Complex Mental States. In Computational Science and Its Applications—ICCSA 2017, Proceedings of the 17th International Conference, Trieste, Italy, 3–6 July 2017; Gervasi, O., Murgante, B., Misra, S., Borruso, G., Torre, C.M., Rocha, A.M.A.C., Taniar, D., Apduhan, B.O., Stankova, E., Cuzzocrea, A., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2017; Volume 10406, pp. 678–691. ISBN 978-3-319-62397-9.

- Paschero, M.; Del Vescovo, G.; Benucci, L.; Rizzi, A.; Santello, M.; Fabbri, G.; Mascioli, F.M.F. A Real Time Classifier for Emotion and Stress Recognition in a Vehicle Driver. In Proceedings of the 2012 IEEE International Symposium on Industrial Electronics, Hangzhou, China, 28–31 May 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 1690–1695.

- Tawari, A.; Trivedi, M.M. Speech Emotion Analysis: Exploring the Role of Context. IEEE Trans. Multimed. 2010, 12, 502–509.

- Grimm, M.; Kroschel, K.; Harris, H.; Nass, C.; Schuller, B.; Rigoll, G.; Moosmayr, T. On the Necessity and Feasibility of Detecting a Driver’s Emotional State While Driving. In Affective Computing and Intelligent Interaction; Paiva, A.C.R., Prada, R., Picard, R.W., Eds.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2007; Volume 4738, pp. 126–138. ISBN 978-3-540-74888-5.

- Hoch, S.; Althoff, F.; McGlaun, G.; Rigoll, G. Bimodal Fusion of Emotional Data in an Automotive Environment. In Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP ’05), Philadelphia, PA, USA, 23 March 2005; IEEE: Piscataway, NJ, USA, 2005; Volume 2, pp. 1085–1088.

- Rigas, G.; Goletsis, Y.; Fotiadis, D.I. Real-Time Driver’s Stress Event Detection. IEEE Trans. Intell. Transp. Syst. 2012, 13, 221–234.

- Paredes, P.E.; Ordonez, F.; Ju, W.; Landay, J.A. Fast & Furious: Detecting Stress with a Car Steering Wheel. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018; ACM: New York, NY, USA, 2018; pp. 1–12.

- Taib, R.; Tederry, J.; Itzstein, B. Quantifying Driver Frustration to Improve Road Safety. In Proceedings of the CHI’14 Extended Abstracts on Human Factors in Computing Systems, Toronto, ON, Canada, 26 April 2014; ACM: New York, NY, USA, 2014; pp. 1777–1782.

- Zepf, S.; Hernandez, J.; Schmitt, A.; Minker, W.; Picard, R.W. Driver Emotion Recognition for Intelligent Vehicles: A Survey. ACM Comput. Surv. 2020, 53, 64.

- Fakhrhosseini, S.M.; Landry, S.; Tan, Y.Y.; Bhattarai, S.; Jeon, M. If You’re Angry, Turn the Music on: Music Can Mitigate Anger Effects on Driving Performance. In Proceedings of the 6th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Seattle, WA, USA, 17–19 September 2014; ACM: New York, NY, USA, 2014; pp. 1–7.

- Lee, J.; Elhaouij, N.; Picard, R.W. AmbientBreath: Unobtrusive Just-in-Time Breathing Intervention Using Multi-Sensory Stimulation and Its Evaluation in a Car Simulator. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2021, 5, 71.

- Balters, S.; Gowda, N.; Ordonez, F.; Paredes, P. Individualized Stress Detection Using an Unmodified Car Steering Wheel. Sci. Rep. 2021, 11, 20646.

- Paredes, P.E.; Zhou, Y.; Hamdan, N.A.-H.; Balters, S.; Murnane, E.; Ju, W.; Landay, J.A. Just Breathe: In-Car Interventions for Guided Slow Breathing. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2018, 2, 28.

- Choi, K.Y.; Lee, J.; ElHaouij, N.; Picard, R.; Ishii, H. aSpire: Clippable, Mobile Pneumatic-Haptic Device for Breathing Rate Regulation via Personalizable Tactile Feedback. In Proceedings of the Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, 8–13 May 2021; ACM: New York, NY, USA, 2021; pp. 1–8.

- Lee, J.-H.; Spence, C. Assessing the Benefits of Multimodal Feedback on Dual-Task Performance under Demanding Conditions. In Proceedings of the People and Computers XXII Culture, Creativity, Interaction (HCI), Liverpool, UK, 1–5 September 2008.

- Roumen, T.; Perrault, S.T.; Zhao, S. NotiRing: A Comparative Study of Notification Channels for Wearable Interactive Rings. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, Seoul, Republic of Korea, 18–23 April 2015; ACM: New York, NY, USA, 2015; pp. 2497–2500.

- Schaefer, A.; Nils, F.; Sanchez, X.; Philippot, P. Assessing the effectiveness of a large database of emotion-eliciting films: A new tool for emotion researchers. Cognit. Emot. 2010, 24, 1153–1172.

- Juckel, G.; Mergl, R.; Brüne, M.; Villeneuve, I.; Frodl, T.; Schmitt, G.; Zetzsche, T.; Leicht, G.; Karch, S.; Mulert, C.; et al. Is evaluation of humorous stimuli associated with frontal cortex morphology? A pilot study using facial micro-movement analysis and MRI. Cortex 2011, 47, 569–574.

- Wilson, G.; Davidson, G.; Brewster, S.A. In the Heat of the Moment: Subjective Interpretations of Thermal Feedback During Interaction. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, Seoul, Republic of Korea, 18 April 2015; ACM: New York, NY, USA, 2015; pp. 2063–2072.

- Ju, Y.; Zheng, D.; Hynds, D.; Chernyshov, G.; Kunze, K.; Minamizawa, K. Haptic Empathy: Conveying Emotional Meaning through Vibrotactile Feedback. In Proceedings of the Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, 8 May 2021; ACM: New York, NY, USA, 2021; pp. 1–7.

- Wilson, G.; Dobrev, D.; Brewster, S.A. Hot Under the Collar: Mapping Thermal Feedback to Dimensional Models of Emotion. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, San Jose, CA, USA, 7 May 2016; ACM: New York, NY, USA, 2016; pp. 4838–4849.

- Elliot, A.J.; Maier, M.A. Color and Psychological Functioning. Curr. Dir. Psychol. Sci. 2007, 16, 250–254.

- Juslin, P.N. Handbook of Music and Emotion: Theory, Research, Applications; Oxford University Press: Oxford, UK, 2010; ISBN 978-0-19-923014-3.

- Thoma, M.V.; La Marca, R.; Brönnimann, R.; Finkel, L.; Ehlert, U.; Nater, U.M. The Effect of Music on the Human Stress Response. PLoS ONE 2013, 8, e70156.

- Thürmel, V. I Know How You Feel: An Investigation of Users’ Trust in Emotion-Based Personalization Systems. In AMCIS 2021 Proceedings; Association for Information Systems: Atlanta, GA, USA, 2021; p. 9.

- Pugh, Z.H.; Choo, S.; Leshin, J.C.; Lindquist, K.A.; Nam, C.S. Emotion depends on context, culture, and their interaction: Evidence from effective connectivity. Soc. Cogn. Affect. Neurosci. 2022, 17, 206–217.

- Cowen, A.S.; Keltner, D. Self-report captures 27 distinct categories of emotion bridged by continuous gradients. Proc. Natl. Acad. Sci. USA 2017, 114, E7900–E7909.

| Emotion | Visual Feedback | Auditory Feedback | Vibrotactile Feedback |
|---|---|---|---|
| Positive | Orange | Upbeat | Subtle Vibration (lowest speed) |
| Neutral | Blue | Calm & Relaxed | Moderate Vibration (medium speed) |
| Negative | Green | Slow | Intense Vibration (high speed) |

| Model | F1-Score (User-Dependent) |
|---|---|
| Random Forest | 0.8969 (SD = 0.0094) |
| Gradient Boosting | 0.8882 (SD = 0.0060) |
| SVM | 0.8502 (SD = 0.0092) |
| MLP | 0.9202 (SD = 0.0083) |
| Ensemble | 0.8200 (SD = 0.0199) |

| Model | F1-Score (User-Independent) |
|---|---|
| Random Forest | 0.5349 (SD = 0.2259) |
| Gradient Boosting | 0.5836 (SD = 0.2113) |
| SVM | 0.6300 (SD = 0.1678) |
| MLP | 0.4880 (SD = 0.2621) |
| Ensemble | 0.4163 (SD = 0.1360) |
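The two tables above report F1-scores under a user-dependent and a user-independent protocol. The user-independent scores are markedly lower and more variable. The exact splitting and averaging used are not spelled out here, so the following is a sketch under two assumptions. First, "user-independent" is taken to mean that a participant's samples never appear in both training and test data (leave-one-participant-out). Second, F1 is macro-averaged over the three classes. The function names are hypothetical. Per-fold scores would then be summarised as a mean and SD, as in the tables.

```python
from collections import defaultdict
from typing import Dict, Iterator, List, Sequence, Tuple


def macro_f1(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Unweighted mean of per-class F1 over all labels seen in y_true or y_pred."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and equally long")
    labels = sorted(set(y_true) | set(y_pred))
    scores = []
    for label in labels:
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        scores.append(2 * precision * recall / denom if denom else 0.0)
    return sum(scores) / len(scores)


def leave_one_participant_out(
    participants: Sequence[str],
) -> Iterator[Tuple[str, List[int], List[int]]]:
    """Yield (held_out_participant, train_indices, test_indices).

    Every test sample comes from a participant absent from the training set,
    which is what makes this (assumed) protocol user-independent.
    """
    by_participant: Dict[str, List[int]] = defaultdict(list)
    for i, pid in enumerate(participants):
        by_participant[pid].append(i)
    for held_out in sorted(by_participant):
        test_idx = by_participant[held_out]
        train_idx = [i for i, pid in enumerate(participants) if pid != held_out]
        yield held_out, train_idx, test_idx


folds = list(leave_one_participant_out(["p1", "p1", "p2", "p3", "p2"]))
print([(pid, test) for pid, _, test in folds])  # [('p1', [0, 1]), ('p2', [2, 4]), ('p3', [3])]
print(round(macro_f1(["pos", "neg", "pos"], ["pos", "neg", "neg"]), 4))  # 0.6667
```

Under a user-dependent protocol, by contrast, samples from the same participant can fall on both sides of the split. This lets a model exploit individual facial geometry, which is one plausible reason for the gap between the two tables.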

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Mwaita, K.F.; Bhaumik, R.; Ahmed, A.; Sharma, A.; De Angeli, A.; Haller, M. Emotion-Aware In-Car Feedback: A Comparative Study. Multimodal Technol. Interact. 2024, 8, 54. https://doi.org/10.3390/mti8070054

Mwaita KF, Bhaumik R, Ahmed A, Sharma A, De Angeli A, Haller M. Emotion-Aware In-Car Feedback: A Comparative Study. Multimodal Technologies and Interaction. 2024; 8(7):54. https://doi.org/10.3390/mti8070054

Chicago/Turabian Style: Mwaita, Kevin Fred, Rahul Bhaumik, Aftab Ahmed, Adwait Sharma, Antonella De Angeli, and Michael Haller. 2024. "Emotion-Aware In-Car Feedback: A Comparative Study" Multimodal Technologies and Interaction 8, no. 7: 54. https://doi.org/10.3390/mti8070054

APA Style: Mwaita, K. F., Bhaumik, R., Ahmed, A., Sharma, A., De Angeli, A., & Haller, M. (2024). Emotion-Aware In-Car Feedback: A Comparative Study. Multimodal Technologies and Interaction, 8(7), 54. https://doi.org/10.3390/mti8070054