Optimizations for Computing Relatedness in Biomedical Heterogeneous Information Networks: SemNet 2.0

Abstract

:1. Introduction

1.1. Automating the LBD Process

1.2. Overview of SemNet

1.3. Improving LBD Efficiency and Efficacy with SemNet 2.0

1.4. Use Case Example: Alzheimer’s Disease and Metabolism

1.5. Definitions and Mathematical Preliminaries

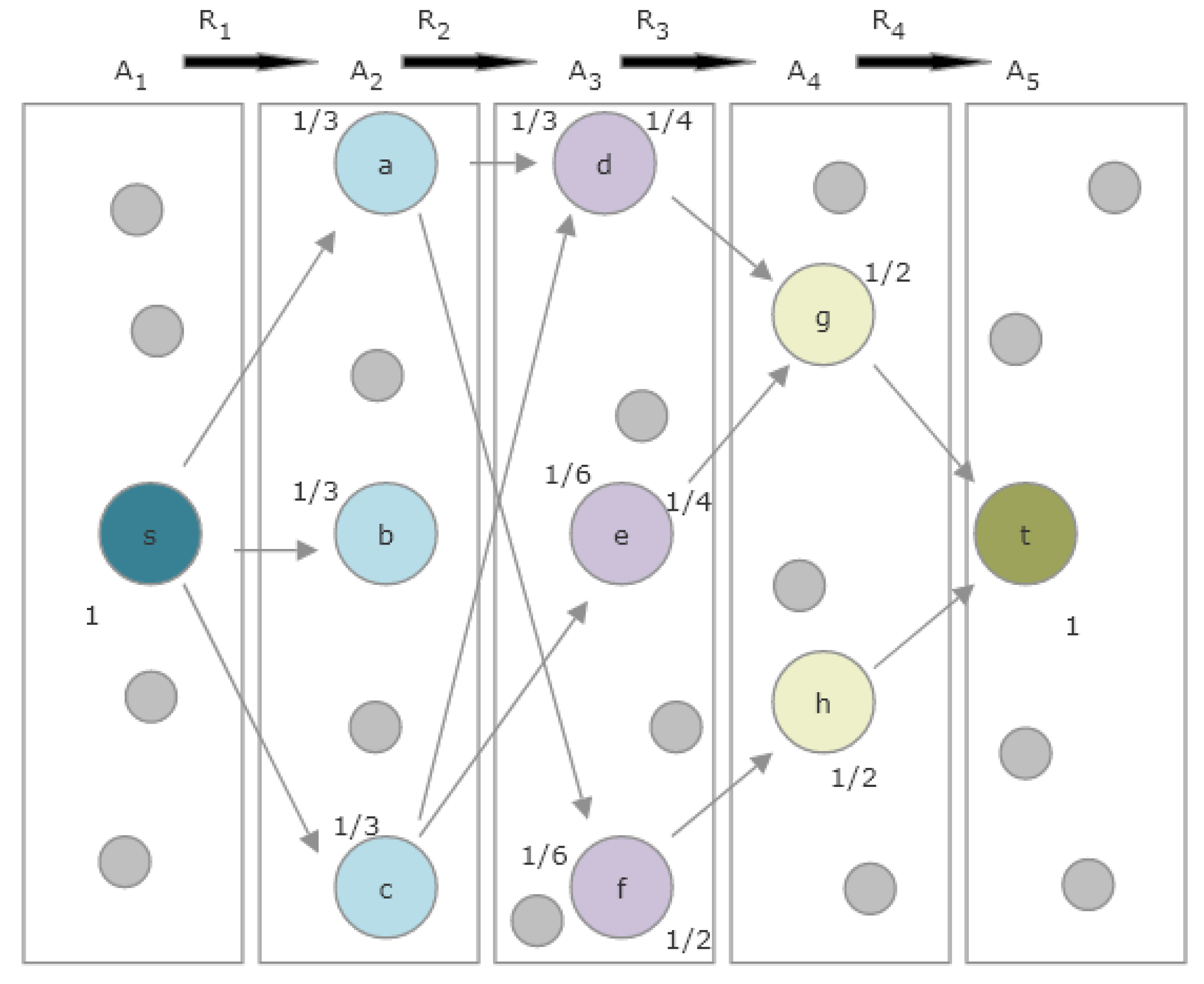

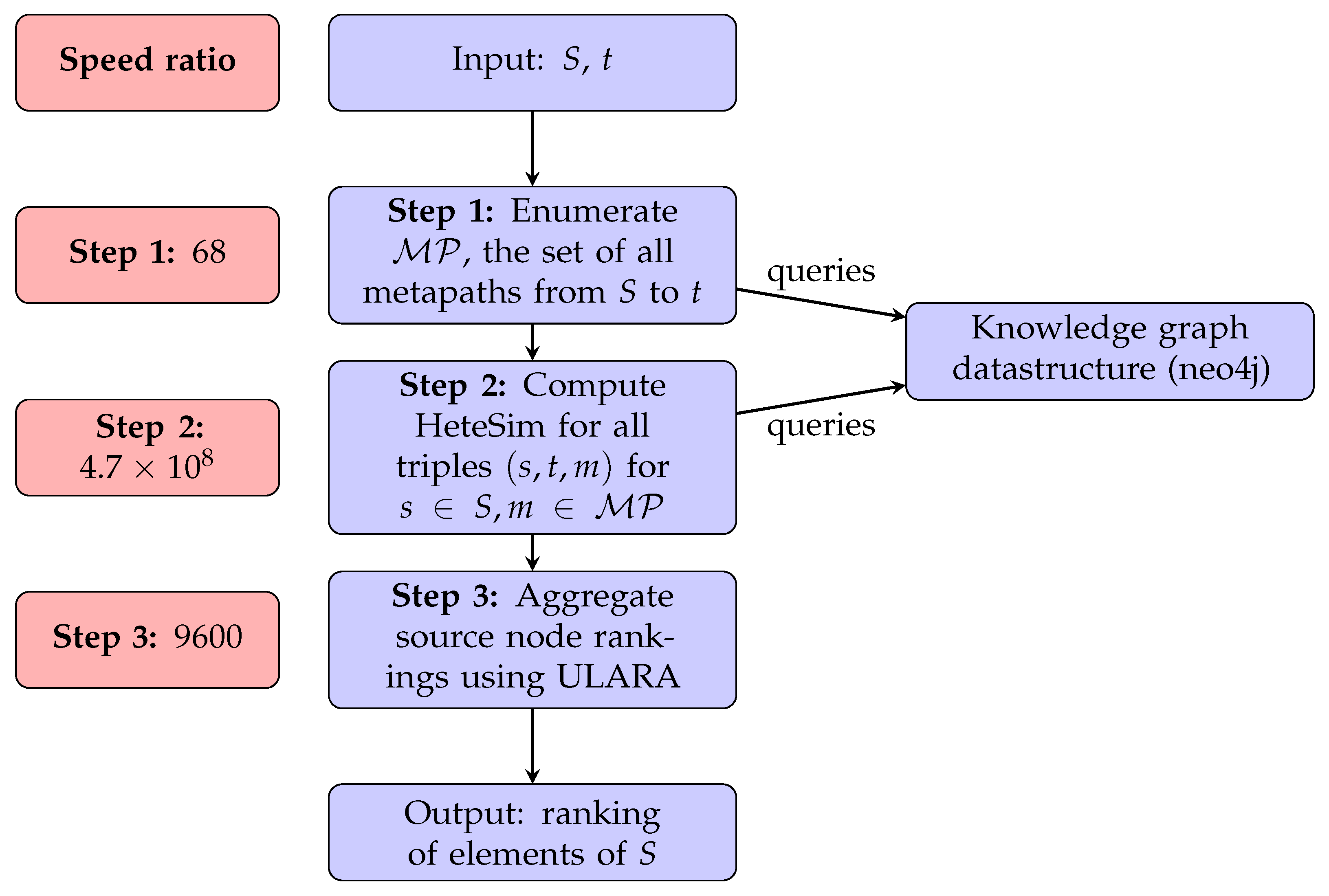

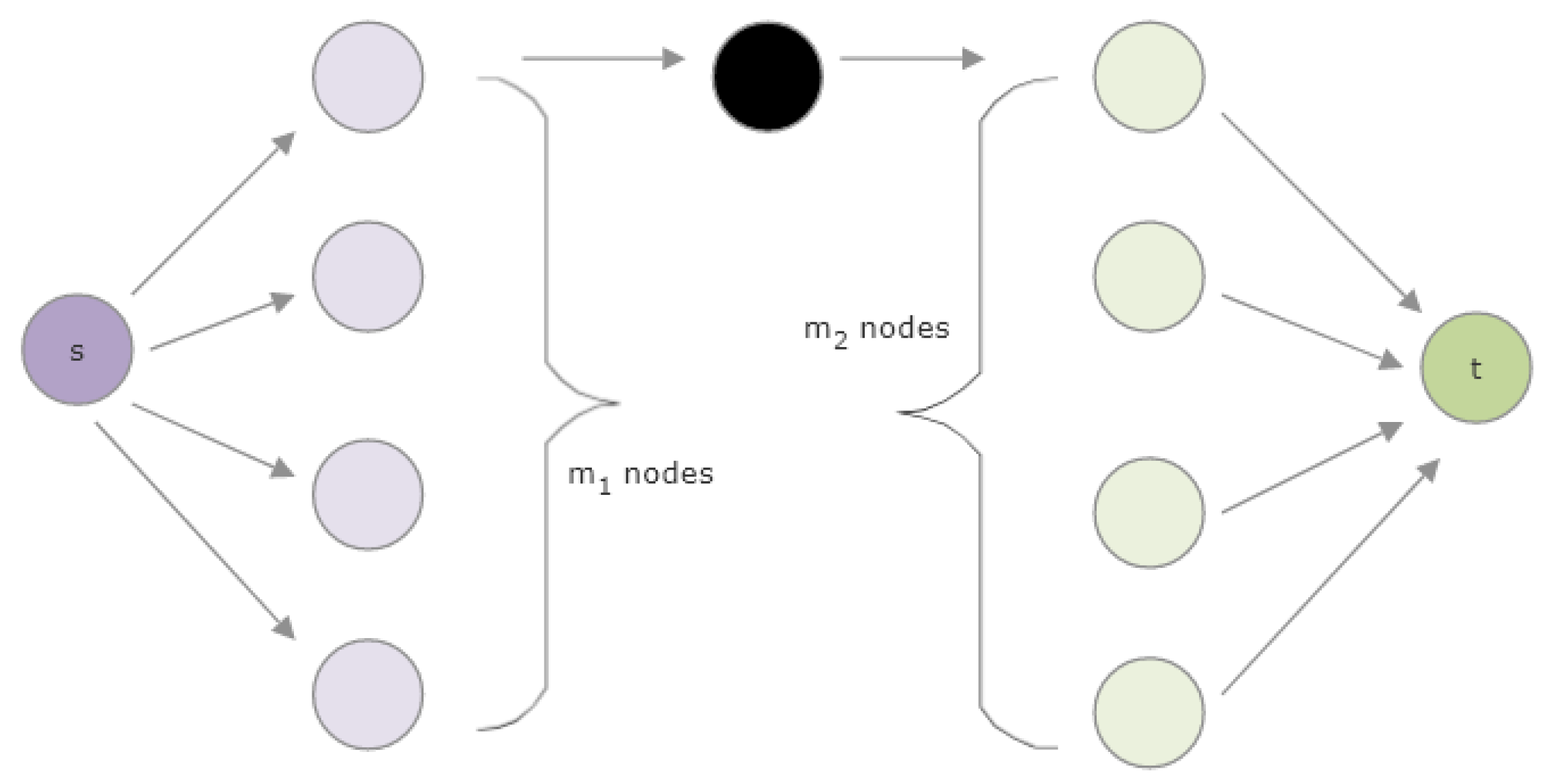

1.6. Overview of SemNet’s Existing HeteSim Implementation

2. Methods

2.1. A New Method for Combining HeteSim Scores from Multiple Metapaths

2.1.1. Background on ULARA

2.1.2. A Flaw in ULARA

2.1.3. Implications for SemNet

2.2. Computational Analysis of HeteSim Runtimes: SemNet Version 1

2.3. Development, Implementation, and Testing of Algorithms

2.3.1. Knowledge Graph Data Structure

2.3.2. Development of Approximation Algorithms

2.3.3. Implementation and Testing

2.3.4. User Study Methods

3. Results

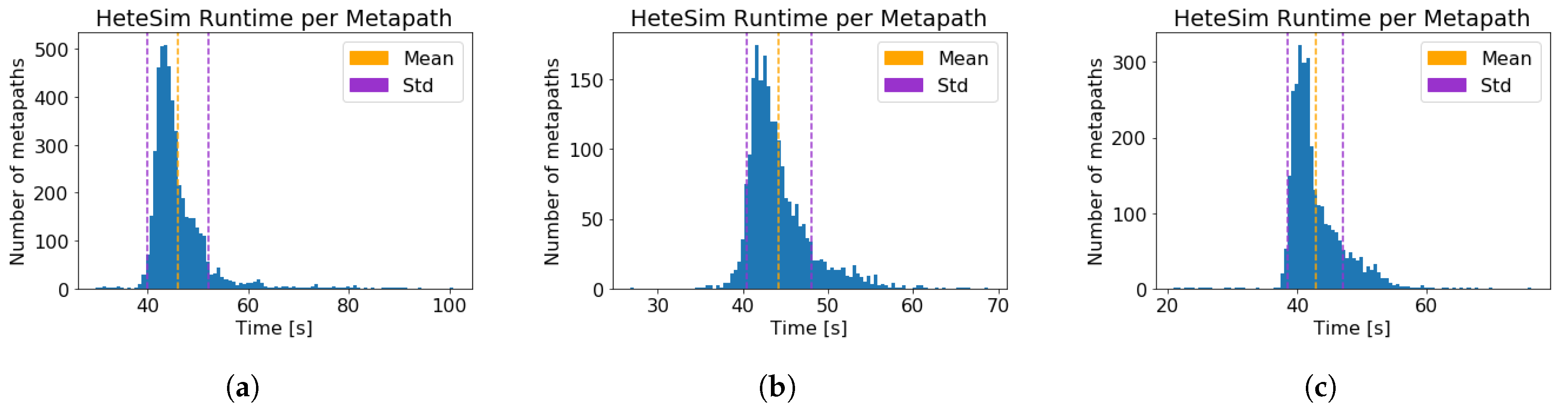

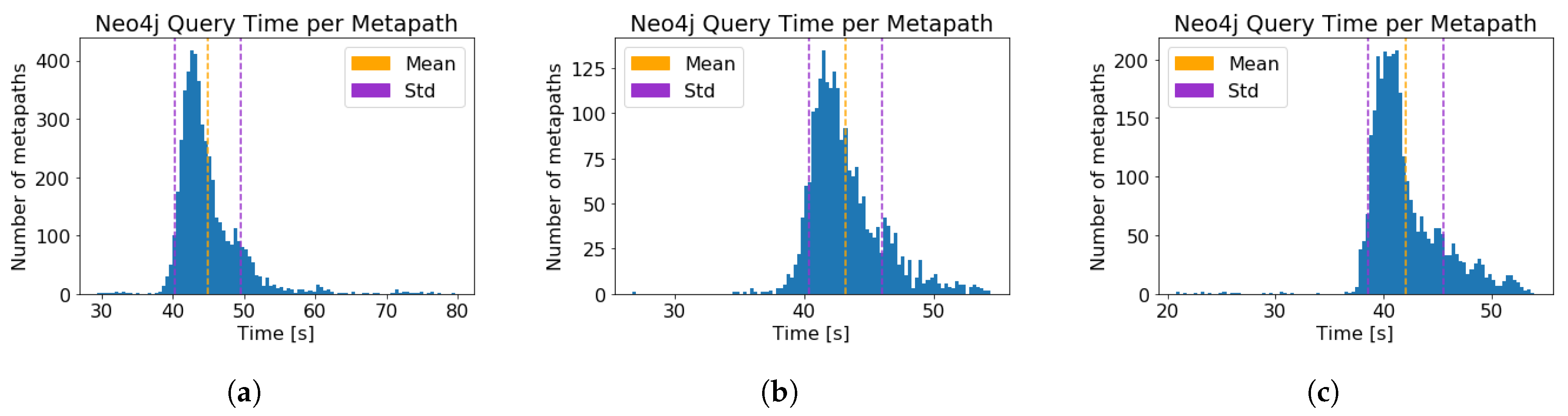

3.1. Computational Analysis of HeteSim Runtimes: SemNet Version 1

3.2. Algorithms

3.2.1. Deterministic HeteSim

| Algorithm 1: HeteSim. |

| Input: start node s, end node t, metapath of even length {odd relevance paths must be preprocessed} Output: HeteSim score Construct , return |

| Algorithm 2: oneSidedHS subroutine. |

| Input: start node s, metapath Output: vector , the one-sided HeteSim vector for to do {Vector of zeros, indexed by elements of } end for for to do for do end for end for return |

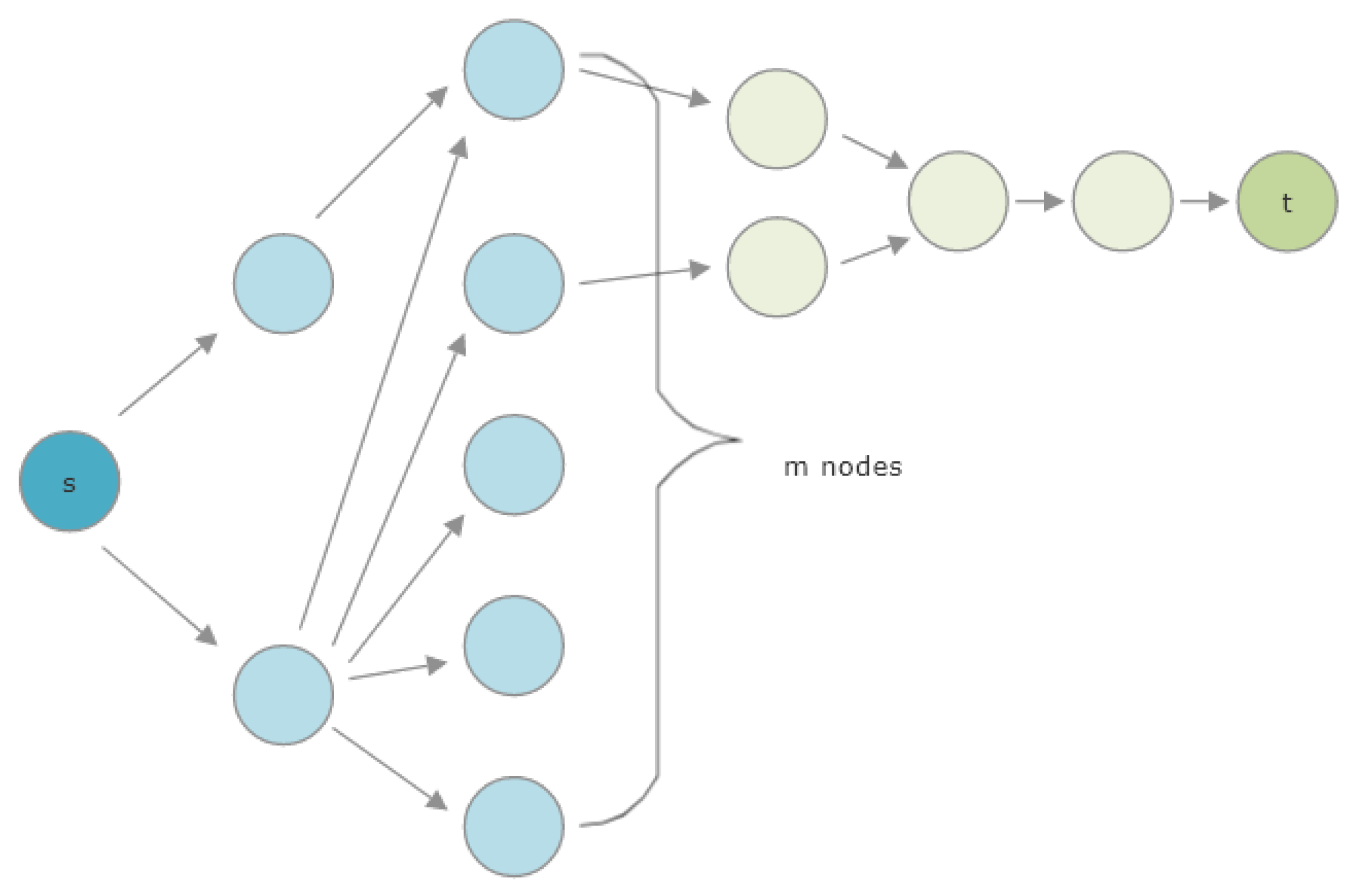

3.2.2. Pruning the Graph

3.2.3. Pruned HeteSim

| Algorithm 3: Randomized Pruned HeteSim. |

| Input: start node s, end node t, relevance path of even length, error tolerance , success probability r {odd relevance paths must be preprocessed} Output: approximate HeteSim score breadthFirstSearch(s, ) breadthFirstSearch(t, ) return RandomizedPrunedHeteSimGivenN(s, t, , N) |

| Algorithm 4: RandomizedPrunedHeteSimGivenN subroutine. |

| Input: start node s, end node t, relevance path of even length, number of iterations N Output: approximate HeteSim score for to length( do B[i] end for {array of 0 s indexed by elements of K} {random walks from s} for to N do restrictedRandomWalkOnMetapath(s, , B) end for {random walks from t} for to length( do B end for for to N do restrictedRandomWalkOnMetapath(t, , B) end for {compute approximate probability vectors and approximate pruned HeteSim} return |

| Algorithm 5: restrictedRandomWalkOnMetapath subroutine. |

| Input: start node s, metapath , badNodes B Output: (B, node), where node is the final node reached, and B is the updated list of dead-end nodes nodeStack ← [ ] while do neighbors(x, ) if then {pick a neighbor with probability proportional to edge weight} edgeweight(x, y) SelectWithProbability([(y, edgeWeight()/w) for ]) nodeStack.push(x) else {x is a dead end} B[i-1] ← B[i-1] nodeStack.pop() end if end while return |

3.2.4. Runtime Analysis of the Pruned HeteSim Algorithm

3.2.5. Deterministic Aggregation

| Algorithm 6: Exact Mean HeteSim score. |

| Input: set of start nodes S, end node t, path length p Output: vector of mean HeteSim scores h, indexed by elements of S Construct M, the set of all metapaths between any element of S and t for do HSscores = [] for do HSscores.append(HeteSim(s, t, m)) end for h[s] = mean(HSscores) end for return h |

3.2.6. Randomized Aggregation

| Algorithm 7: Approximate Mean HeteSim score. |

| Input: set of start nodes S, end node t, path length p, approximation parameters and r Output: vector of approximate mean HeteSim scores h, indexed by elements of S, with error bounds as in Corollary 3 Construct M, the set of all metapaths of length p between any element of S and t if then select with uniformly at random else end if for do HSscores = [] for do HSscores.append(HeteSim(s, t, m)) end for h[s] = mean(HSscores) end for return h |

3.3. Algorithm Runtimes: SemNet Version 2

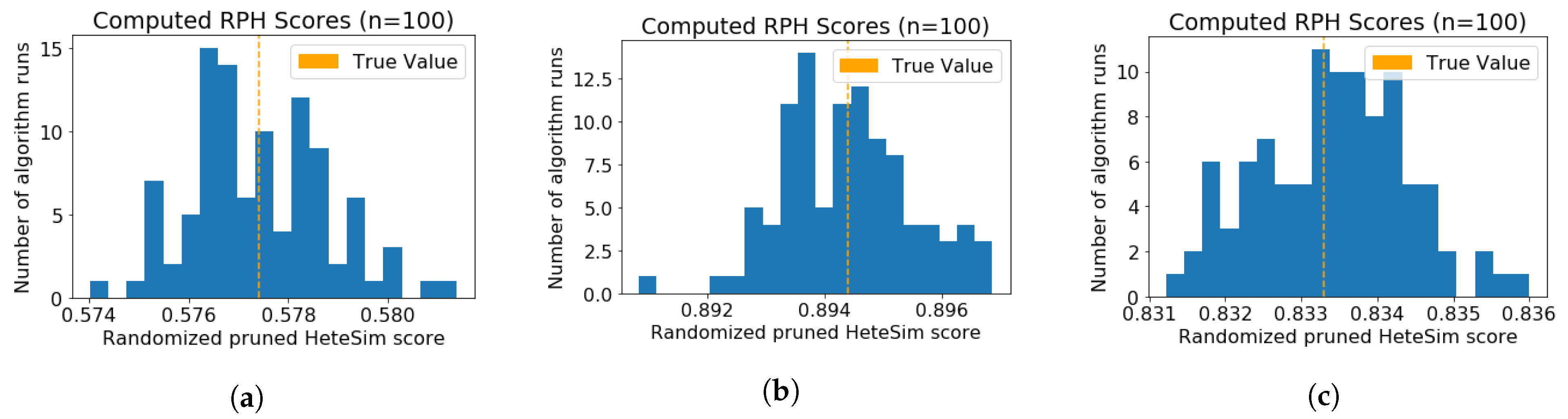

3.3.1. Verification of Randomized Algorithm Performance

| Algorithm 8: Approximate Mean Pruned HeteSim score. |

| Input: set of start nodes S, end node t, path length p, approximation parameters and r Output: vector of approximate mean HeteSim scores h, indexed by elements of S, with error bounds as in Theorem 3 Construct M, the set of all metapaths of length p between any element of S and t if then select with uniformly at random else end if for do PHSscores = [] for do PHSscores.append(RandomizedPrunedHeteSimGivenN(s, t, m, N)) end for h[s] = mean(PHSscores) end for return h |

3.3.2. Comparison of Algorithm Runtimes

3.4. Study Assessing User Friendliness of SemNet Version 2

3.5. Assessing Highly Ranked Metabolic Nodes to Alzheimer’s Disease

4. Discussion

4.1. Computational Improvements

4.2. Mathematical Limitations

4.3. Limitations and Future Directions

4.4. Related Work

4.4.1. Biomedical Knowledge Graphs

4.4.2. Related Algorithms

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

Appendix A.1. Technical Lemmas

Appendix A.2. Proofs and Theorems

Appendix B

Appendix B.1. Analysis of Just-in-Time (JIT) Dead-End Removal

References

- PubMed Overview. Available online: https://pubmed.ncbi.nlm.nih.gov/about/ (accessed on 10 November 2021).

- Swanson, D. Fish oil, Raynaud’s syndrome, and undiscovered public knowledge. Perspect. Biol. Med. 1986, 30, 7–18. [Google Scholar] [PubMed]

- Henry, S.; Wijesinghe, D.S.; Myers, A.; McInnes, B.T. Using Literature Based Discovery to Gain Insights Into the Metabolomic Processes of Cardiac Arrest. Front. Res. Metr. Anal. 2021, 6, 32. [Google Scholar] [CrossRef] [PubMed]

- McCoy, K.; Gudapati, S.; He, L.; Horlander, E.; Kartchner, D.; Kulkarni, S.; Mehra, N.; Prakash, J.; Thenot, H.; Vanga, S.V.; et al. Biomedical Text Link Prediction for Drug Discovery: A Case Study with COVID-19. Pharmaceutics 2021, 13, 794. [Google Scholar] [CrossRef] [PubMed]

- Cameron, D.; Kavuluru, R.; Rindflesch, T.C.; Sheth, A.P.; Thirunarayan, K.; Bodenreider, O. Context-driven automatic subgraph creation for literature-based discovery. J. Biomed. Inform. 2015, 54, 141–157. [Google Scholar] [CrossRef] [Green Version]

- Crichton, G.; Baker, S.; Guo, Y.; Korhonen, A. Neural networks for open and closed Literature-based Discovery. PLoS ONE 2020, 15, e0232891. [Google Scholar] [CrossRef]

- Sang, S.; Yang, Z.; Wang, L.; Liu, X.; Lin, H.; Wang, J. SemaTyP: A knowledge graph based literature mining method for drug discovery. BMC Bioinform. 2020, 19, 193. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Kilicoglu, H.; Shin, D.; Fiszman, M.; Rosemblat, G.; Rindflesch, T. SemMedDB: A PubMed-scale repository of biomedical semantic predications. Bioinformatics 2012, 28, 3158–3160. [Google Scholar] [CrossRef] [Green Version]

- Himmelstein, D.; Lizee, A.; Hessler, C.; Brueggeman, L.; Chen, S.; Hadley, D.; Green, A.; Khankhanian, P.; Baranzini, S. Systematic integration of biomedical knowledge prioritizes drugs for repurposing. eLife 2017, 6, e26726. [Google Scholar] [CrossRef]

- Li, Y.; Shi, C.; Yu, P.S.; Chen, Q. HRank: A Path based Ranking Framework in Heterogeneous Information Network. In Web-Age Information Management; Springer International Publishing: New York, NY, USA, 2014; pp. 553–565. [Google Scholar]

- Ng, M.K.; Li, X.; Ye, Y. MultiRank: Co-ranking for objects and relations in multi-relational data. In Proceedings of the Proceedings of the 17th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Diego, CA, USA, 21–24 August 2011; pp. 1217–1225. [Google Scholar]

- Shi, C.; Kong, X.; Huang, Y.; Yu, P.S.; Wu, B. HeteSim: A General Framework for Relevance Measure in Heterogeneous Networks. IEEE Trans. Knowl. Data Eng. 2014, 26, 2479–2492. [Google Scholar] [CrossRef] [Green Version]

- Sedler, A.R.; Mitchell, C.S. SemNet: Using Local Features to Navigate the Biomedical Concept Graph. Front. Bioeng. Biotechnol. 2019, 7, 156. [Google Scholar] [CrossRef] [Green Version]

- Klementiev, A.; Roth, D.; Small, K. An Unsupervised Learning Algorithm for Rank Aggregation. In Machine Learning: ECML 2007; Kok, J.N., Koronacki, J., Mantaras, R.L.D., Matwin, S., Mladenič, D., Skowron, A., Eds.; Springer: Berlin/Heidelberg, Germany, 2007; pp. 616–623. [Google Scholar]

- Zeng, X.; Liao, Y.; Liu, Y.; Zou, Q. Prediction and Validation of Disease Genes Using HeteSim Scores. IEEE/ACM Trans. Comput. Biol. Bioinform. 2017, 14, 687–695. [Google Scholar] [CrossRef] [PubMed]

- Xiao, Y.; Zhang, J.; Deng, L. Prediction of lncRNA-protein interactions using HeteSim scores based on heterogeneous networks. Sci. Rep. 2017, 7, 3664. [Google Scholar] [CrossRef] [PubMed]

- Qu, J.; Chen, X.; Sun, Y.Z.; Zhao, Y.; Cai, S.B.; Ming, Z.; You, Z.H.; Li, J.Q. In Silico Prediction of Small Molecule-miRNA Associations Based on the HeteSim Algorithm. Mol. Ther. Nucleic Acids 2019, 14, 274–286. [Google Scholar] [CrossRef] [Green Version]

- Chen, X.; Shi, W.; Deng, L. Prediction of Disease Comorbidity Using HeteSim Scores Based on Multiple Heterogeneous Networks. Curr. Gene Ther. 2019, 19, 232–241. [Google Scholar] [CrossRef] [PubMed]

- Fan, C.; Lei, X.; Guo, L.; Zhang, A. Predicting the Associations Between Microbes and Diseases by Integrating Multiple Data Sources and Path-based HeteSim Scores. Neurocomputing 2019, 323, 76–85. [Google Scholar] [CrossRef]

- Wang, J.; Kuang, Z.; Ma, Z.; Han, G. GBDTL2E: Predicting lncRNA-EF Associations Using Diffusion and HeteSim Features Based on a Heterogeneous Network. Front. Genet. 2020, 11, 272. [Google Scholar] [CrossRef] [PubMed]

- Garey, M.R.; Graham, R.L.; Ullman, J.D. An Analysis of Some Packing Algorithms. Available online: https://mathweb.ucsd.edu/~ronspubs/73_08_packing.pdf (accessed on 10 January 2022).

- Johnson, D.S. Approximation algorithms for combinatorial problems. J. Comput. Syst. Sci. 1974, 9, 256–278. [Google Scholar] [CrossRef] [Green Version]

- Du, D.Z.; Ko, K.I.; Hu, X. Design and Analysis of Approximation Algorithms; Springer Science & Business Media: New York, NY, USA, 2011; Volume 62. [Google Scholar]

- Vazirani, V.V. Approximation Algorithms; Springer Science & Business Media: New York, NY, USA, 2013. [Google Scholar]

- Williamson, D.P.; Shmoys, D.B. The Design of Approximation Algorithms; Cambridge University Press: Cambridge, UK, 2011. [Google Scholar]

- What is a Graph Database? Available online: https://neo4j.com/developer/graph-database/#:~:text=Neo4j%20is%20an%20open%2Dsource,been%20publicly%20available%20since%202007 (accessed on 10 January 2022).

- Weller, J.; Budson, A. Current understanding of Alzheimer’s disease diagnosis and treatment. F1000Research 2018, 7, 1–9. [Google Scholar] [CrossRef] [Green Version]

- Thakur, N.; Han, C.Y. An Ambient Intelligence-Based Human Behavior Monitoring Framework for Ubiquitous Environments. Information 2021, 12, 81. [Google Scholar] [CrossRef]

- Hakansson, K.; Rovio, S.; Helkala, E.L.; Vilska, A.R.; Winblad, B.; Soininen, H.; Nissinen, A.; Mohammed, A.H.; Kivipelto, M. Association between mid-life marital status and cognitive function in later life: Population based cohort study. BMJ 2009, 339. [Google Scholar] [CrossRef] [Green Version]

- Silva, M.V.F.; Loures, C.D.M.G.; Alves, L.C.V.; de Souza, L.C.; Borges, K.B.G.; Carvalho, M.D.G. Alzheimer’s disease: Risk factors and potentially protective measures. J. Biomed. Sci. 2019, 26, 33. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Prakash, J.; Wang, V.; Quinn, R.E.; Mitchell, C.S. Unsupervised Machine Learning to Identify Separable Clinical Alzheimer’s Disease Sub-Populations. Brain Sci. 2021, 11, 977. [Google Scholar] [CrossRef]

- Huber, C.M.; Yee, C.; May, T.; Dhanala, A.; Mitchell, C.S. Cognitive decline in preclinical Alzheimer’s disease: Amyloid-beta versus tauopathy. J. Alzheimer’s Dis. 2018, 61, 265–281. [Google Scholar] [CrossRef] [Green Version]

- Johnson, E.; Dammer, E.; Duong, D.; Ping, L.; Zhou, M.; Yin, L.; Higginbotham, L.; Guajardo, A.; White, B.; Troncoso, J.; et al. Large-scale proteomic analysis of Alzheimer’s disease brain and cerebrospinal fluid reveals early changes in energy metabolism associated with microglia and astrocyte activation. Nat. Med. 2020, 26, 769–780. [Google Scholar] [CrossRef]

- O’Barr, S.A.; Oh, J.S.; Ma, C.; Brent, G.A.; Schultz, J.J. Thyroid hormone regulates endogenous amyloid-beta precursor protein gene expression and processing in both in vitro and in vivo models. Thyroid 2006, 16, 1207–1213. [Google Scholar] [CrossRef] [PubMed]

- Matsuzaki, T.; Sasaki, K.; Tanizaki, Y.; Hata, J.; Fujimi, K.; Matsui, Y.; Sekita, A.; Suzuki, S.; Kanba, S.; Kiyohara, Y.; et al. Insulin resistance is associated with the pathology of Alzheimer disease. Neurology 2010, 75, 764–770. [Google Scholar] [CrossRef] [PubMed]

- TPS Foundation Time. Available online: https://docs.python.org/3/library/time.html (accessed on 10 January 2022).

- Gorelick, M.; Ozsvald, I. High Performance Python: Practical Performant Programming for Humans; O’Reilly Media: Sebastopol, CA, USA, 2020. [Google Scholar]

- Jupyter, P. Jupyter Notebook. Available online: https://jupyter.org/ (accessed on 10 January 2022).

- TPS Foundation Python. Available online: https://www.python.org/ (accessed on 10 January 2022).

- Alon, N.; Spencer, J.H. The Probabilistic Method; John Wiley & Sons: Hoboken, NJ, USA, 2004. [Google Scholar]

- McDiarmid, C. On the method of bounded differences. Surv. Comb. 1989, 141, 148–188. [Google Scholar]

- Liu, Q.; Zhang, J. Lipid metabolism in Alzheimer’s disease. Neurosci. Bull. 2014, 30, 331–345. [Google Scholar] [CrossRef] [Green Version]

- Chen, Z.; Zhong, C. Decoding Alzheimer’s disease from perturbed cerebral glucose metabolism: Implications for diagnostic and therapeutic strategies. Prog. Neurobiol. 2013, 108, 21–43. [Google Scholar] [CrossRef] [Green Version]

- Alford, S.; Patel, D.; Perakakis, N.; Mantzoros, C.S. Obesity as a risk factor for Alzheimer’s disease: Weighing the evidence. Obes. Rev. 2018, 19, 269–280. [Google Scholar] [CrossRef]

- Li, J.; Deng, J.; Sheng, W.; Zuo, Z. Metformin attenuates Alzheimer’s disease-like neuropathology in obese, leptin-resistant mice. Pharmacol. Biochem. Behav. 2012, 101, 564–574. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Hui, Z.; Zhijun, Y.; Yushan, Y.; Liping, C.; Yiying, Z.; Difan, Z.; Chunglit, C.T.; Wei, C. The combination of acyclovir and dexamethasone protects against Alzheimer’s disease-related cognitive impairments in mice. Psychopharmacology 2020, 237, 1851–1860. [Google Scholar] [CrossRef] [PubMed]

- Sun, M.K.; Alkon, D.L. Carbonic anhydrase gating of attention: Memory therapy and enhancement. Trends Pharmacol. Sci. 2002, 23, 83–89. [Google Scholar] [CrossRef]

- Liu, S.; Zeng, F.; Wang, C.; Chen, Z.; Zhao, B.; Li, K. Carbonic anhydrase gating of attention: Memory therapy and enhancement. Sci. Rep. 2015, 5. [Google Scholar] [CrossRef] [Green Version]

- Valiant, L.G. The Complexity of Enumeration and Reliability Problems. SIAM J. Comput. 1979, 8, 410–421. [Google Scholar] [CrossRef]

- Saha, T.K.; Hasan, M.A. Finding Network Motifs Using MCMC Sampling. In Complex Networks VI; Springer International Publishing: New York, NY, USA, 2015; pp. 13–24. [Google Scholar]

- Himmelstein, D.; Baranzini, S. Heterogeneous Network Edge Prediction: A Data Integration Approach to Prioritize Disease-Associated Genes. PLoS Comput. Biol. 2015, 11, e1004259. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Hu, W.; Fey, M.; Zitnik, M.; Dong, Y.; Ren, H.; Liu, B.; Catasta, M.; Leskovec, J. Open Graph Benchmark: Datasets for Machine Learning on Graphs. arXiv 2020, arXiv:2005.00687. [Google Scholar]

- Ioannidis, V.N.; Song, X.; Manchanda, S.; Li, M.; Pan, X.; Zheng, D.; Ning, X.; Zeng, X.; Karypis, G. DRKG—Drug Repurposing Knowledge Graph for COVID-19. 2020. Available online: https://github.com/gnn4dr/DRKG/ (accessed on 10 January 2022).

- Xu, J.; Kim, S.; Song, M.; Jeong, M.; Kim, D.; Kang, J.; Rousseau, J.F.; Li, X.; Xu, W.; Torvik, V.I.; et al. Building a PubMed knowledge graph. Sci. Data 2020, 7, 205. [Google Scholar] [CrossRef]

- Yang, B.; tau Yih, W.; He, X.; Gao, J.; Deng, L. Embedding Entities and Relations for Learning and Inference in Knowledge Bases. arXiv 2014, arXiv:1412.6575. [Google Scholar]

- Sun, Z.; Deng, Z.H.; Nie, J.Y.; Tang, J. RotatE: Knowledge Graph Embedding by Relational Rotation in Complex Space. In Proceedings of the International Conference on Learning Representations, New Orleans, LA, USA, 6–9 May 2019. [Google Scholar]

- Zhang, S.; Tay, Y.; Yao, L.; Liu, Q. Quaternion Knowledge Graph Embeddings. In Proceedings of the 33rd International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019. [Google Scholar]

- Chami, I.; Wolf, A.; Juan, D.C.; Sala, F.; Ravi, S.; Ré, C. Low-Dimensional Hyperbolic Knowledge Graph Embeddings. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 6901–6914. [Google Scholar]

- Das, R.; Godbole, A.; Monath, N.; Zaheer, M.; McCallum, A. Probabilistic Case-based Reasoning for Open-World Knowledge Graph Completion. In Findings of the Association for Computational Linguistics: EMNLP 2020; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020; pp. 4752–4765. [Google Scholar] [CrossRef]

- Wang, H.; Ren, H.; Leskovec, J. Relational Message Passing for Knowledge Graph Completion. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining, Singapore, 14–18 August 2021; pp. 1697–1707. [Google Scholar]

- Hu, Z.; Dong, Y.; Wang, K.; Sun, Y. Heterogeneous Graph Transformer. In Proceedings of the Web Conference 2020, Taipei, Taiwan, 20–24 April 2020; pp. 2704–2710. [Google Scholar]

| Source Node | Insulin | Hypothyroidism | Amyloid |

|---|---|---|---|

| Number of metapaths | 4873 | 2148 | 3095 |

| Total computation time (min) | |||

| Computation time per metapath (s) (±std) | |||

| Neo4j query time, per metapath (s) (±std) | |||

| Time per metapath, excluding query time (s) (±std) |

| Source Node | Insulin | Hypothyroidism | Amyloid |

|---|---|---|---|

| Num metapaths (SemNet 1) | 4873 | 2148 | 3095 |

| SemNet 1: Step 1 (s) | |||

| SemNet 1: Step 2 (s) | 220,000 ± 2300 | 96,000 ± 270 | 220,000 ± 2700 |

| SemNet 1: Step 3 (s) | |||

| Num metapaths (SemNet 2) | 4521 | 2130 | 3060 |

| SemNet 2: Step 1 (s) | |||

| SemNet 2: Step 2 (s) | |||

| SemNet 2: Step 3 (s) | |||

| Runtime ratio: Step 1 | 68 | 184 | 200 |

| Runtime ratio: Step 2 | |||

| Runtime ratio: Step 3 | 9600 | 470 | 9600 |

| Algorithm | Runtime (s) |

|---|---|

| Mean exact HeteSim | |

| Approximate mean HeteSim |

| Source Node | Deterministic HeteSim | Randomized Pruned HeteSim |

|---|---|---|

| Insulin | ||

| Hypothyroidism | ||

| Amyloid |

| Source Node | Insulin | Hypothyroidism | Amyloid |

|---|---|---|---|

| Max iterations (N) | 28,019,926 | 8,547,987 | 12,790,378 |

| Min iterations (N) | 5,308,942 | 1,666,564 | 3,229,242 |

| Mean iterations (N) | 10,068,473 | 2,632,969 | 5,206,723 |

| Max runtime (s) | 14,588 | 3138 | 5052 |

| Min runtime (s) | 420 | 99 | 247 |

| Mean runtime (s) | 3491 | 438 | 1193 |

| Max metapath instances | 488 | 167 | 240 |

| Min metapath instances | 109 | 39 | 70 |

| Source Node | Insulin | Hypothyroidism | Amyloid |

|---|---|---|---|

| Max runtime (s) | |||

| Min runtime (s) | |||

| Mean runtime (s) (±std) |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kirkpatrick, A.; Onyeze, C.; Kartchner, D.; Allegri, S.; Nakajima An, D.; McCoy, K.; Davalbhakta, E.; Mitchell, C.S. Optimizations for Computing Relatedness in Biomedical Heterogeneous Information Networks: SemNet 2.0. Big Data Cogn. Comput. 2022, 6, 27. https://doi.org/10.3390/bdcc6010027

Kirkpatrick A, Onyeze C, Kartchner D, Allegri S, Nakajima An D, McCoy K, Davalbhakta E, Mitchell CS. Optimizations for Computing Relatedness in Biomedical Heterogeneous Information Networks: SemNet 2.0. Big Data and Cognitive Computing. 2022; 6(1):27. https://doi.org/10.3390/bdcc6010027

Chicago/Turabian StyleKirkpatrick, Anna, Chidozie Onyeze, David Kartchner, Stephen Allegri, Davi Nakajima An, Kevin McCoy, Evie Davalbhakta, and Cassie S. Mitchell. 2022. "Optimizations for Computing Relatedness in Biomedical Heterogeneous Information Networks: SemNet 2.0" Big Data and Cognitive Computing 6, no. 1: 27. https://doi.org/10.3390/bdcc6010027

APA StyleKirkpatrick, A., Onyeze, C., Kartchner, D., Allegri, S., Nakajima An, D., McCoy, K., Davalbhakta, E., & Mitchell, C. S. (2022). Optimizations for Computing Relatedness in Biomedical Heterogeneous Information Networks: SemNet 2.0. Big Data and Cognitive Computing, 6(1), 27. https://doi.org/10.3390/bdcc6010027