Position Measurement Based on Fisheye Imaging

Abstract

1. Introduction

2. Theoretical Model and Image Acquisition System

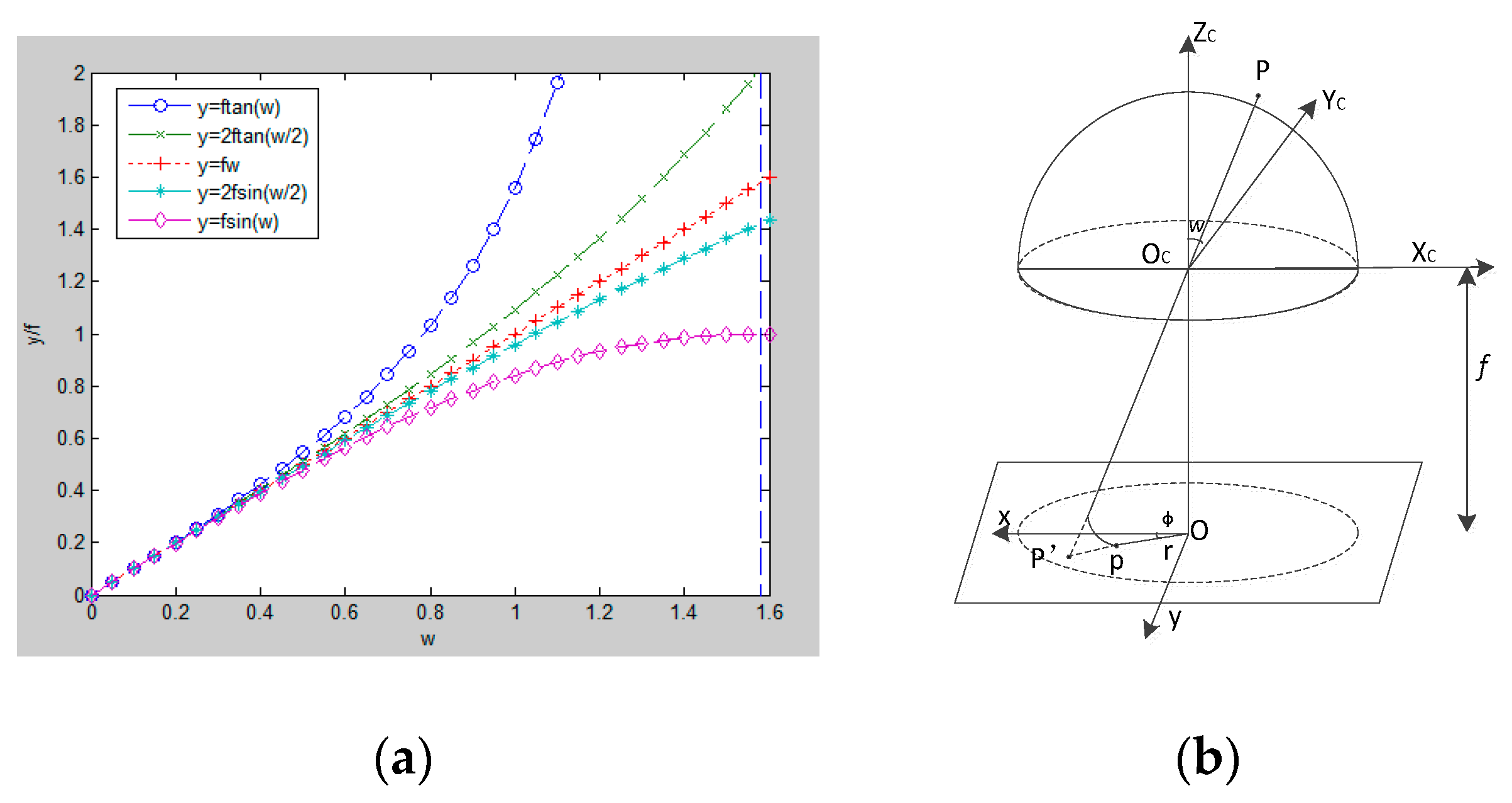

2.1. Fisheye Lens Imaging Model

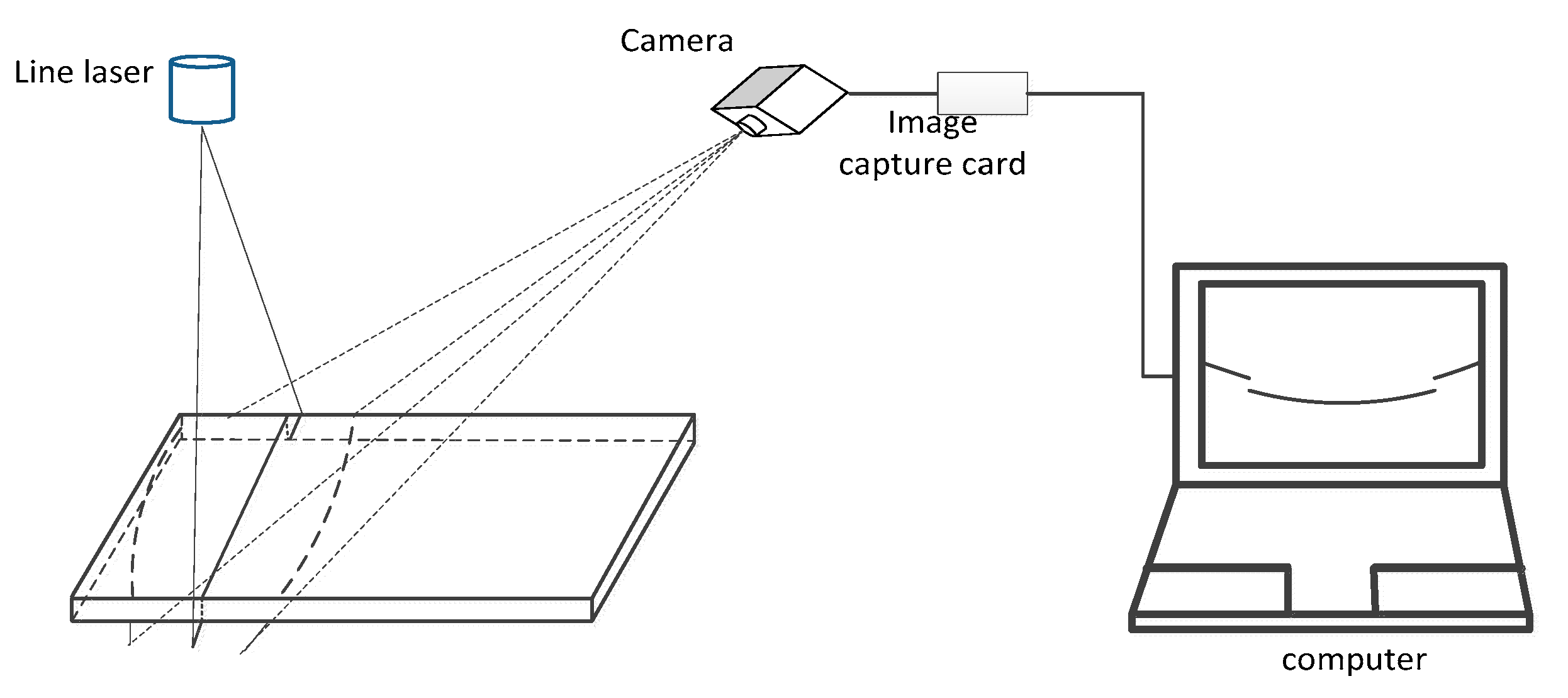

2.2. Image Acquisition System

3. Establishing a Network Model

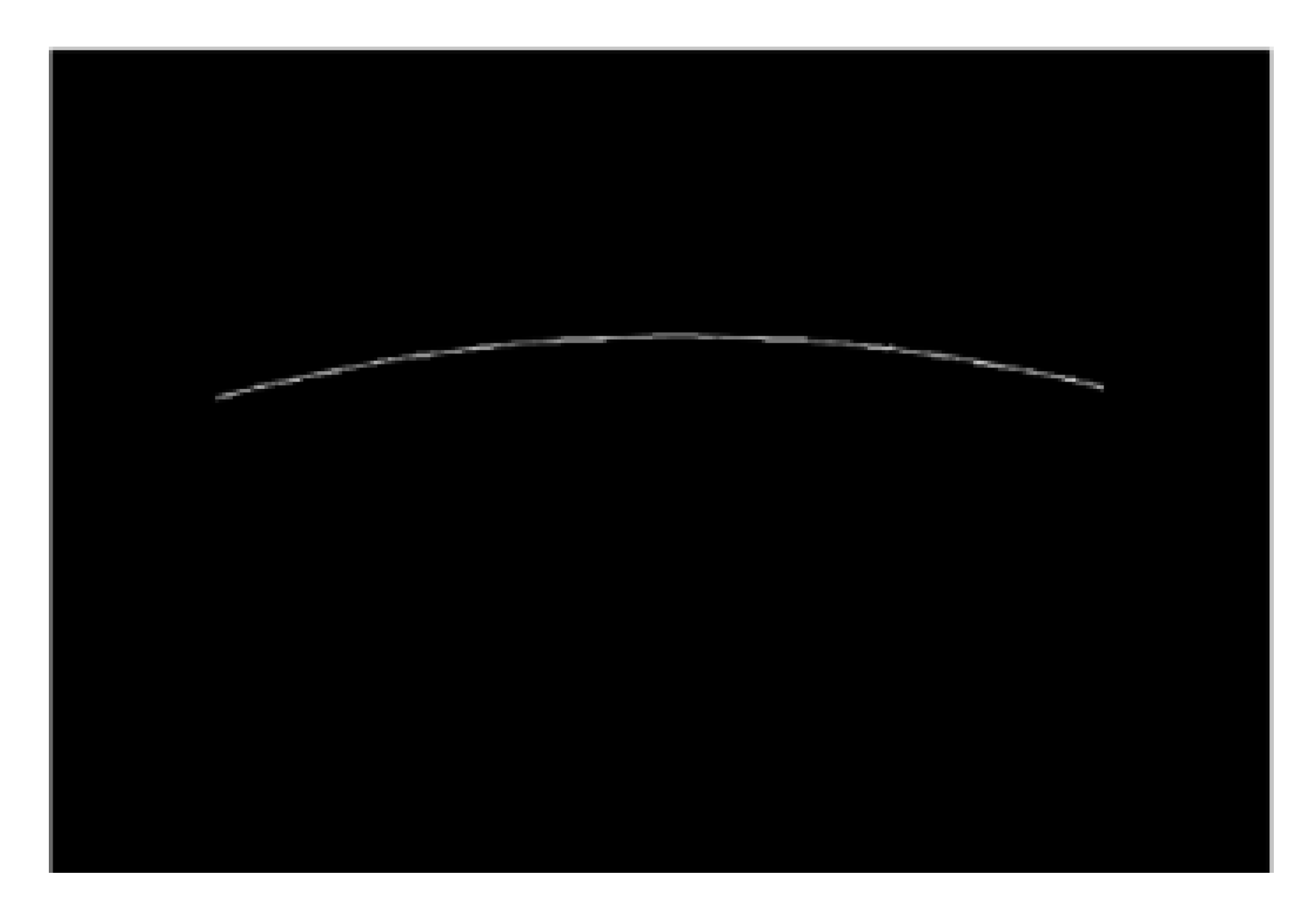

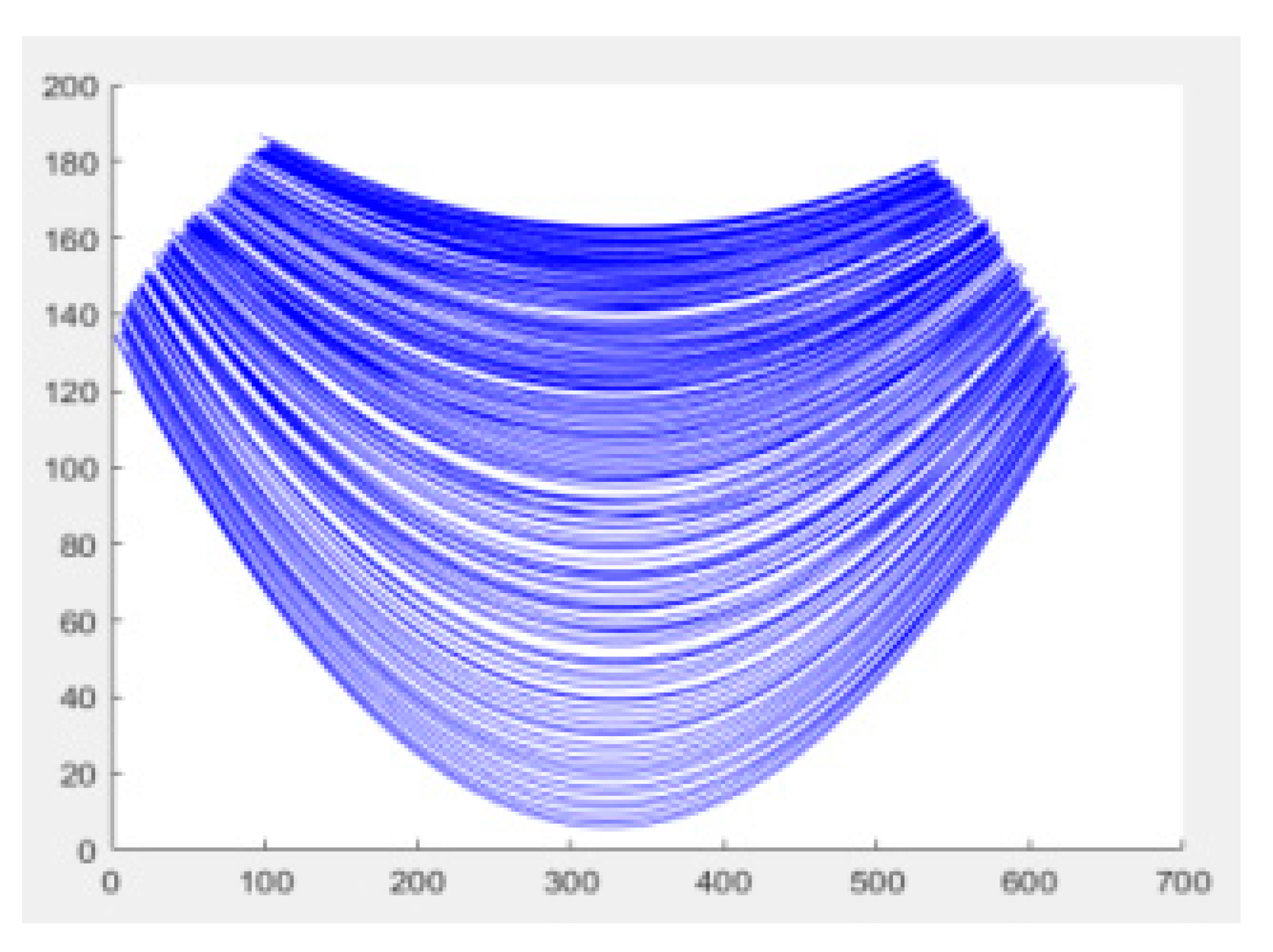

3.1. Building a Data Set

3.2. Establishing a Network Model

4. Results and Discussion

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| No. | Image File | Point 1 | Point 2 | … | Point 9 | Point 10 | Distance (mm) |
|---|---|---|---|---|---|---|---|
| 1 | Ima_300.jpg | (36,78) | (49,67) | … | (143,57) | (155,63) | 300 |
| 2 | Ima_305.jpg | (35,77) | (49,65) | … | (143,56) | (156,62) | 305 |
| 3 | Ima_310.jpg | (34,76) | (46,66) | … | (142,53) | (153,59) | 310 |
| … | … | … | … | … | … | … | … |
| 340 | Ima_1995.jpg | (9,48) | (21,37) | … | (114,9) | (127,11) | 1995 |
| 341 | Ima_2000.jpg | (8,47) | (20,37) | … | (113,8) | (127,9) | 2000 |
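The rows of the table above suggest a regular layout: 341 images captured from 300 mm to 2000 mm in 5 mm steps, each annotated with the coordinates of ten points. The following is a minimal sketch, assuming the ten (u, v) coordinates serve as the network inputs and the distance as the regression target. The names `Sample`, `to_feature_vector`, `distance_grid`, and `image_name` are hypothetical illustrations, not taken from the paper, and the file-name pattern is only inferred from the rows shown.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Sample:
    """One table row (hypothetical structure): file name, ten points, distance."""
    image_file: str
    points: List[Tuple[int, int]]  # (u, v) coordinates of Point 1 .. Point 10
    distance_mm: float


def to_feature_vector(sample: Sample) -> List[float]:
    """Flatten the ten (u, v) points into a 20-element input vector (assumed encoding)."""
    if len(sample.points) != 10:
        raise ValueError(f"expected 10 points, got {len(sample.points)}")
    features: List[float] = []
    for u, v in sample.points:
        features.extend([float(u), float(v)])
    return features


def distance_grid(start_mm: int = 300, stop_mm: int = 2000, step_mm: int = 5) -> List[int]:
    """Capture distances, inclusive of both ends; row n corresponds to 300 + 5(n - 1) mm."""
    return list(range(start_mm, stop_mm + 1, step_mm))


def image_name(distance_mm: int) -> str:
    """File-name pattern inferred from the table rows, e.g. Ima_300.jpg."""
    return f"Ima_{distance_mm}.jpg"


if __name__ == "__main__":
    grid = distance_grid()
    print(len(grid))  # 341, matching the last row number in the table
    print(image_name(grid[0]), image_name(grid[-1]))
```

The grid check is a quick consistency test on the data set: (2000 − 300) / 5 + 1 = 341, which agrees with the number of rows. Points 3 to 8 are elided in the table, so any real feature vectors must come from the full data set rather than from the rows shown here.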

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, X.; Li, K.; Chen, Y.; Li, Z.; Han, Y. Position Measurement Based on Fisheye Imaging. Proceedings 2019, 15, 38. https://doi.org/10.3390/proceedings2019015038