Monitoring Food Intake in an Aging Population: A Survey on Technological Solutions †

Abstract

1. Introduction

2. Motivation

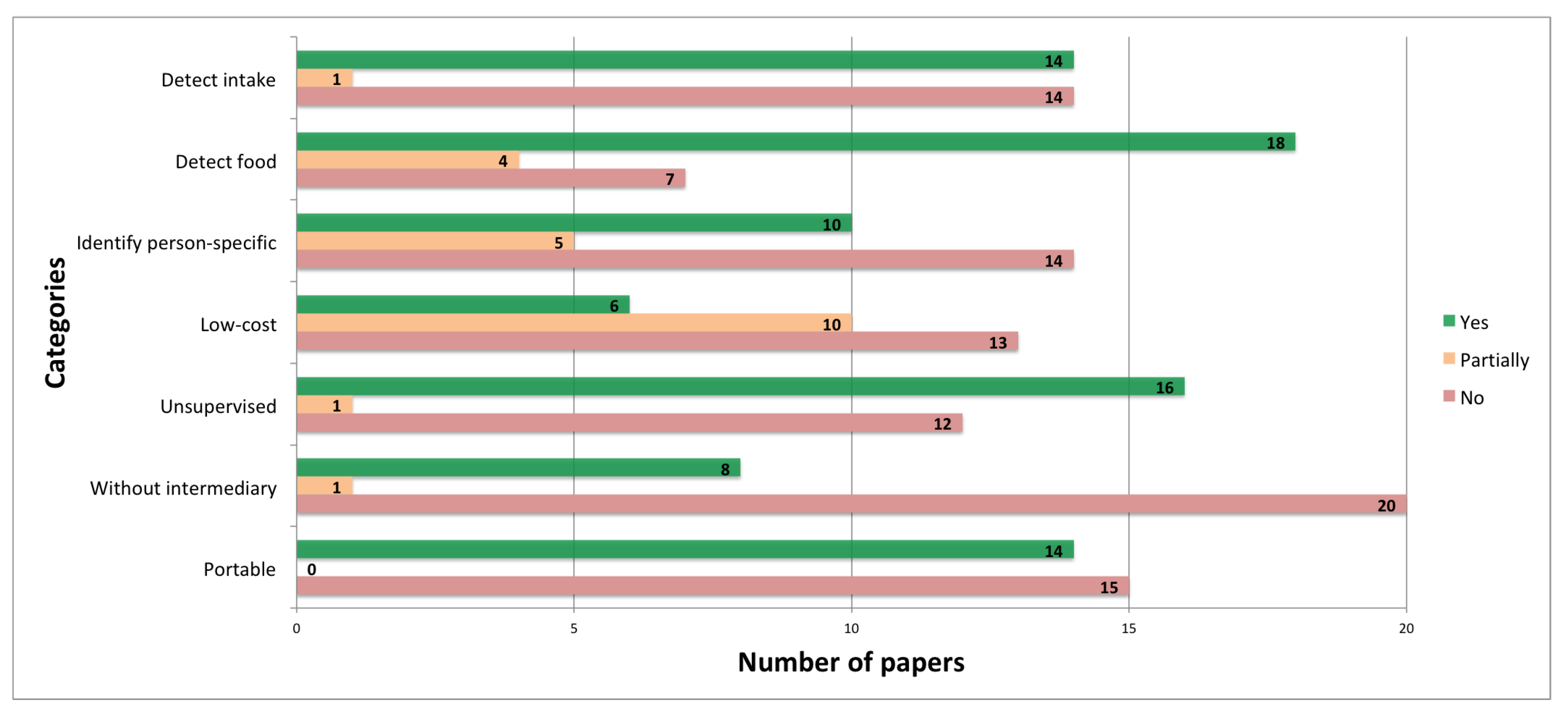

- Detect intake. The system must detect that the person is eating and the moment at which the intake takes place.

- Detect food. The system must also detect the type of food and its quantity so that it can evaluate the nutrient intake of the elderly person.

- Identify specific person. The system must be able to identify who is eating at each moment.

- Low-cost. The system must be inexpensive to implement, because large investments usually cannot be undertaken in these environments.

- Unsupervised. The system must be usable without supervision: the greatest possible independence is sought, so the elderly person should not need any assistance or supervision to use it.

- Without intermediary. The system must run without intermediaries, since in many cases rural environments lack the infrastructure to support them.

- Portable. Finally, the system must be portable so that it can be deployed in the different places where the elderly live.
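The seven requirements above can be read as a checklist against which any candidate solution is evaluated. The following is a minimal sketch of that idea in Python, not part of the surveyed works: the class name `RequirementCheck`, its field names and the example values are assumptions made purely for illustration.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class RequirementCheck:
    """One boolean per requirement in the list above (field names are our own)."""
    detect_intake: bool
    detect_food: bool
    identify_person: bool
    low_cost: bool
    unsupervised: bool
    without_intermediary: bool
    portable: bool

    def unmet(self) -> list:
        """Return the names of the requirements that are not satisfied."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]

    def meets_all(self) -> bool:
        """True only when every requirement is satisfied."""
        return not self.unmet()


# Hypothetical solution: the values are invented for illustration only
# and do not describe any work cited in this survey.
example = RequirementCheck(
    detect_intake=True,
    detect_food=True,
    identify_person=False,
    low_cost=True,
    unsupervised=True,
    without_intermediary=False,
    portable=True,
)
print(example.unmet())
```

A boolean per requirement is the simplest possible encoding; a richer model could add a third state for requirements that a work meets only partially or does not report.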

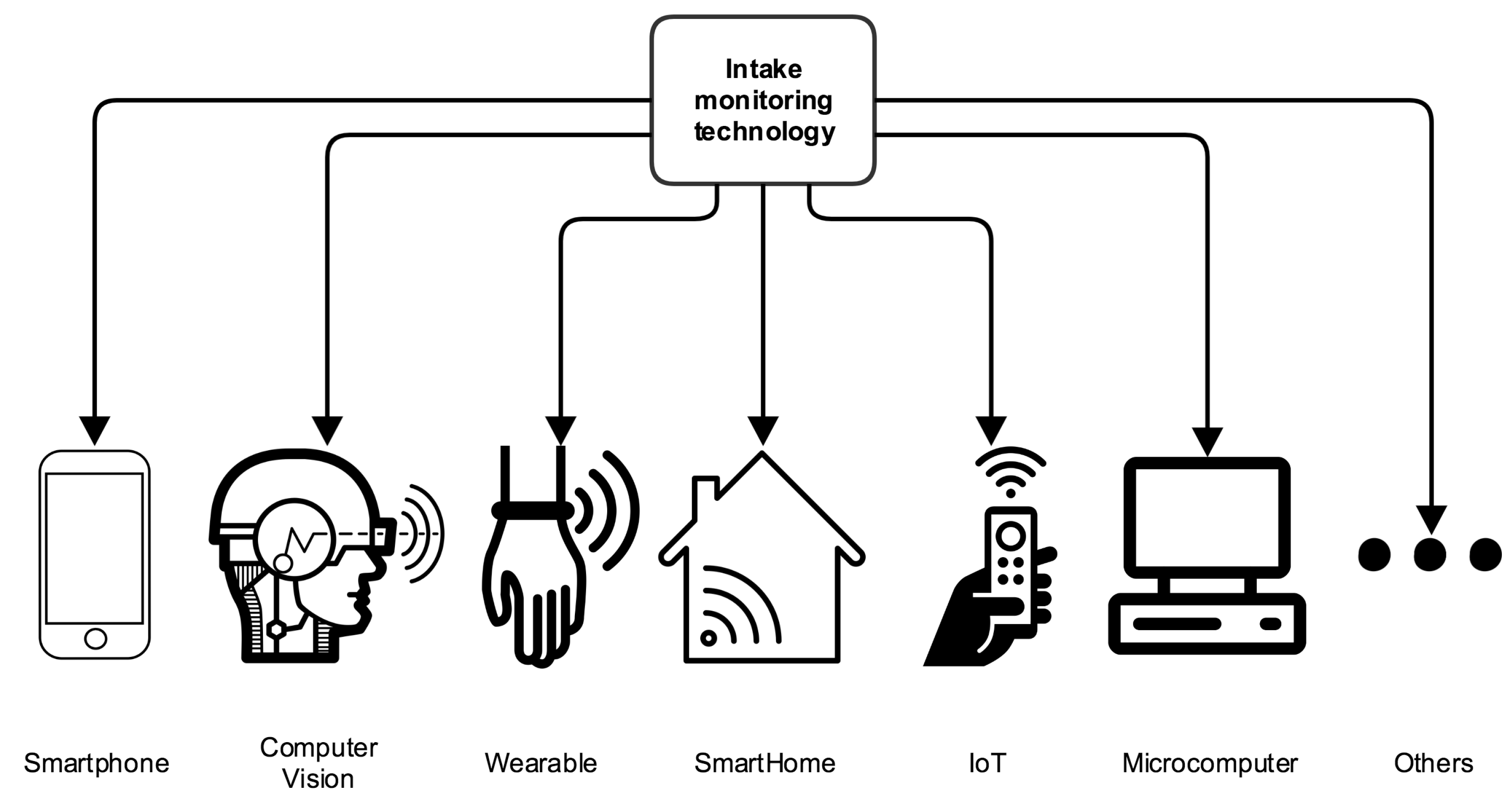

3. Food Intake Monitoring Techniques

- Smartphone. This category covers smartphone technologies, such as proposals based on specific devices or sensors of the smartphone and proposals based on smartphone applications (Apps).

- Computer Vision. Applications, techniques and/or algorithms that obtain a high-level understanding from digital images or videos.

- Wearables. Solutions based on electronic devices that can be worn on the body, either as an accessory or as part of the material used in clothing.

- Smart Home. This category covers advanced automation systems that give people sophisticated monitoring of and control over the home’s functions.

- IoT. Approaches based on Internet of Things technology, such as devices with embedded sensors that are connected to the Internet and send the data they collect. When an IoT device has been designed to be used at home, we regard it as Smart Home technology.

- Microcomputers. Technologies based on a small computer, used especially for writing documents or running small processing programs.

- Others. Solutions that cannot be included in the preceding categories.
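The taxonomy above, including the rule that an IoT device designed for home use is treated as Smart Home technology, could be encoded as follows. This is only an illustrative sketch: the enum `Category`, the function `categorize` and the `designed_for_home` flag are hypothetical names introduced here, not something the survey defines.

```python
from enum import Enum


class Category(Enum):
    """The seven technology categories used in this survey."""
    SMARTPHONE = "Smartphone"
    COMPUTER_VISION = "Computer Vision"
    WEARABLE = "Wearables"
    SMART_HOME = "Smart Home"
    IOT = "IoT"
    MICROCOMPUTER = "Microcomputers"
    OTHERS = "Others"


def categorize(base: Category, designed_for_home: bool = False) -> Category:
    """Apply the tie-breaking rule: home-oriented IoT counts as Smart Home."""
    if base is Category.IOT and designed_for_home:
        return Category.SMART_HOME
    return base


# An IoT device built for the home is reclassified; any other device keeps its category.
print(categorize(Category.IOT, designed_for_home=True).value)
print(categorize(Category.IOT).value)
```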

3.1. Smartphone

3.2. Computer Vision

3.3. Wearable

3.4. Smart Home

3.5. IoT

3.6. Microcomputer

3.7. Others

4. Discussion

( ) or not ( ) with the features described above. It should be noted that, in some cases, a feature is met only partially or is not specified in the article itself. This situation is indicated by the symbol ( ).

( ) because they do not indicate whether the identification is carried out by the system itself or by an assistant. Some works assume that the person is identified but do not perform the identification in a reliable manner.

5. Conclusions

- In the smart home category, the work [36] is noteworthy because it makes use of an ordinary appliance, the fridge. This device monitors the food purchased and the food taken out of it, but it can neither detect the moment of intake nor identify the person. Nor is this solution portable, which complicates its deployment.

- The best options are found in the smartphone category. The works [18] and [19] have developed a mobile application (App) that sends a photo taken with the smartphone to an external web service and then receives the data extracted from that photo. These solutions detect the intake and the food without supervision, but they cannot identify the person who is eating. The project [16], on the other hand, is another App, but it does not perform online processing; instead, the data are entered by dietitians and other health professionals (that is, in a supervised manner and with intermediaries).

Acknowledgments

References

1. Bloom, D.E.; Chatterji, S.; Kowal, P.; Lloyd-Sherlock, P.; McKee, M.; Rechel, B.; Rosenberg, L.; Smith, J.P. Macroeconomic implications of population ageing and selected policy responses. Lancet 2015, 385, 649–657.

2. Morley, J.E.; Silver, A.J. Nutritional Issues in Nursing Home Care. Ann. Internal Med. 1995, 123, 850.

3. Sayer, A.A.; Cooper, C. Early diet and growth: Impact on ageing. Proc. Nutr. Soc. 2002, 61, 79–85.

4. Darnton-Hill, I.; Nishida, C.; James, W.P.T. A life course approach to diet, nutrition and the prevention of chronic diseases. Public Health Nutr. 2004, 7, 101–121.

5. Gariballa, S. Malnutrition in hospitalized elderly patients: When does it matter? Clin. Nutr. 2001, 20, 487–491.

6. Evans, C. Malnutrition in the elderly: A multifactorial failure to thrive. Perm. J. 2005, 9, 38–41.

7. Ledikwe, J.H.; Smiciklas-Wright, H.; Mitchell, D.C.; Jensen, G.L.; Friedmann, J.M.; Still, C.D. Nutritional risk assessment and obesity in rural older adults: A sex difference. Am. J. Clin. Nutr. 2003, 77, 551–558.

8. Droogsma, E.; Van Asselt, D.Z.B.; Scholzel-Dorenbos, C.J.M.; Van Steijn, J.H.M.; Van Walderveen, P.E.; Van Der Hooft, C.S. Nutritional status of community-dwelling elderly with newly diagnosed Alzheimer’s disease: Prevalence of malnutrition and the relation of various factors to nutritional status. J. Nutr. Health Aging 2013, 17, 606–610.

9. Boulos, C.; Salameh, P.; Barberger-Gateau, P. Malnutrition and frailty in community dwelling older adults living in a rural setting. Clin. Nutr. 2016, 35, 138–143.

10. Khanam, M.A.; Qiu, C.; Lindeboom, W.; Streatfield, P.K.; Kabir, Z.N.; Wahlin, A.A. The metabolic syndrome: Prevalence, associated factors, and impact on survival among older persons in rural Bangladesh. PLoS ONE 2011, 6, e20259.

11. Crockett, S.J.; Tobelmann, R.C.; Albertson, A.M.; Jacob, B.L. Nutrition Monitoring Application in the Food Industry. Nutr. Today 2002, 37, 130–135.

12. Kalantarian, H.; Alshurafa, N.; Sarrafzadeh, M. A Survey of Diet Monitoring Technology. IEEE Pervasive Comput. 2017, 16, 57–65.

13. Research2guidance. mHealth Economics 2016–Current Status and Trends of the mHealth App Market. Technical report. 2016. Available online: http://research2guidance.com/product/mhealth-app-developer-economics-2016/ (accessed on 7 May 2018).

14. Krebs, P.; Duncan, D.T. Health App Use among US Mobile Phone Owners: A National Survey. JMIR mHealth uHealth 2015, 3, e101.

15. Aitken, M. Patient Adoption of mHealth; IMS Institute for Healthcare Informatics: New York, NY, USA, 2015.

16. Chen, J.; Gemming, L.; Hanning, R.; Allman-Farinelli, M. Smartphone apps and the nutrition care process: Current perspectives and future considerations. Patient Educ. Counsel. 2018, 101, 750–757.

17. Ahmad, Z.; Khanna, N.; Kerr, D.A.; Boushey, C.J.; Delp, E.J. A Mobile Phone User Interface for Image-Based Dietary Assessment. Proc. SPIE Int. Soc. Opt. Eng. 2014, 9030.

18. Ocay, A.B.; Fernandez, J.M.; Palaoag, T.D. NutriTrack: Android-based food recognition app for nutrition awareness. In Proceedings of the 2017 3rd IEEE International Conference on Computer and Communications (ICCC), Chengdu, China, 13–16 December 2017; pp. 2099–2104.

19. Kohila, R.; Meenakumari, R. Predicting calorific value for mixed food using image processing. In Proceedings of the 2017 International Conference on Innovations in Information, Embedded and Communication Systems (ICIIECS), Coimbatore, India, 17–18 March 2017; pp. 1–4.

20. Kitamura, K.; Yamasaki, T.; Aizawa, K. FoodLog: Capture, analysis and retrieval of personal food images via web. In Proceedings of the ACM multimedia 2009 workshop on Multimedia for cooking and eating activities (CEA ’09); ACM Press: New York, NY, USA, 2009; p. 23.

21. Noronha, J.; Hysen, E.; Zhang, H.; Gajos, K.Z. Platemate. In Proceedings of the 24th annual ACM symposium on User interface software and technology (UIST ’11); ACM Press: New York, NY, USA, 2011; p. 1.

22. Jiang, H.; Starkman, J.; Liu, M.; Huang, M.C. Food Nutrition Visualization on Google Glass: Design Tradeoff and Field Evaluation. IEEE Consum. Electron. Mag. 2018, 7, 21–31.

23. Amft, O. A wearable earpad sensor for chewing monitoring. In Proceedings of the 2010 IEEE Sensors, Kona, HI, USA, 1–4 November 2010; pp. 222–227.

24. Amft, O.; Stäger, M.; Lukowicz, P.; Tröster, G. Analysis of Chewing Sounds for Dietary Monitoring; Springer: Berlin/Heidelberg, Germany, 2005; pp. 56–72.

25. Amft, O.; Troster, G. On-Body Sensing Solutions for Automatic Dietary Monitoring. IEEE Pervasive Comput. 2009, 8, 62–70.

26. Paßler, S.; Fischer, W.J. Food Intake Activity Detection Using a Wearable Microphone System. In Proceedings of the 2011 Seventh International Conference on Intelligent Environments, Nottingham, UK, 25–28 July 2011; pp. 298–301.

27. Kalantarian, H.; Alshurafa, N.; Sarrafzadeh, M. A Wearable Nutrition Monitoring System. In Proceedings of the 2014 11th International Conference on Wearable and Implantable Body Sensor Networks, Zurich, Switzerland, 16–19 June 2014; pp. 75–80.

28. Bo, D.; Biswas, S. Wearable diet monitoring through breathing signal analysis. Conf. Proc. IEEE Eng. Med. Biol. Soc. 2013, 1186–1189.

29. Kim, H.J.; Kim, M.; Lee, S.J.; Choi, Y.S. An analysis of eating activities for automatic food type recognition. In Proceedings of the 2012 Asia Pacific Signal and Information Processing Association Annual Summit and Conference, Hollywood, CA, USA, 3–6 December 2012.

30. Sen, S.; Subbaraju, V.; Misra, A.; Balan, R.K.; Lee, Y. The case for smartwatch-based diet monitoring. In Proceedings of the 2015 IEEE International Conference on Pervasive Computing and Communication Workshops (PerCom Workshops), St. Louis, MO, USA, 23–27 March 2015; pp. 585–590.

31. Moschetti, A.; Fiorini, L.; Esposito, D.; Dario, P.; Cavallo, F. Toward an Unsupervised Approach for Daily Gesture Recognition in Assisted Living Applications. IEEE Sens. J. 2017, 17, 8395–8403.

32. Fontana, J.M.; Farooq, M.; Sazonov, E. Automatic Ingestion Monitor: A Novel Wearable Device for Monitoring of Ingestive Behavior. IEEE Trans. Biomed. Eng. 2014, 61, 1772–1779.

33. Lazaro, J.P.; Fides, A.; Navarro, A.; Guillen, S. Ambient Assisted Nutritional Advisor for elderly people living at home. Conf. Proc. IEEE Eng. Med. Biol. Soc. 2010, 198–203.

34. Aarts, E.; Marzano, S. The New Everyday: Views on Ambient Intelligence; 010 Publishers: Tilburg, The Netherlands, 2003; p. 352.

35. Luo, S.; Xia, H.; Gao, Y.; Jin, J.S.; Athauda, R. Smart Fridges with Multimedia Capability for Better Nutrition and Health. In Proceedings of the 2008 International Symposium on Ubiquitous Multimedia Computing, Hobart, Australia, 13–15 October 2008; pp. 39–44.

36. Lee, Y.; Huang, M.C.; Zhang, X.; Xu, W. FridgeNet: A Nutrition and Social Activity Promotion Platform for Aging Populations. IEEE Intell. Syst. 2015, 30, 23–30.

37. Thong, Y.J.; Nguyen, T.; Zhang, Q.; Karunanithi, M.; Yu, L. Predicting food nutrition facts using pocket-size near-infrared sensor. In Proceedings of the 2017 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Seogwipo, South Korea, 11–15 July 2017; pp. 742–745.

38. Cozzolino, D. Near Infrared Spectroscopy and Food Authenticity. In Advances in Food Traceability Techniques and Technologies; Elsevier: Amsterdam, The Netherlands, 2016; pp. 119–136.

39. Bidlack, W.R. Interrelationships of food, nutrition, diet and health: The National Association of State Universities and Land Grant Colleges White Paper. J. Am. Coll. Nutr. 1996, 15, 422–433.

40. Pedram, M.; Rokni, S.A.; Fallahzadeh, R.; Ghasemzadeh, H. A beverage intake tracking system based on machine learning algorithms, and ultrasonic and color sensors. In Proceedings of the 16th ACM/IEEE International Conference on Information Processing in Sensor Networks (IPSN ’17); ACM Press: New York, NY, USA, 2017; pp. 313–314.

41. Badia-Melis, R.; Ruiz-Garcia, L. Real-Time Tracking and Remote Monitoring in Food Traceability. In Advances in Food Traceability Techniques and Technologies; Elsevier: Amsterdam, The Netherlands, 2016; pp. 209–224.

42. Lee, R.D.; Nieman, D.C.; Rainwater, M. Comparison of Eight Microcomputer Dietary Analysis Programs with the USDA Nutrient Data Base for Standard Reference. J. Am. Diet. Assoc. 1995, 95, 858–867.

43. Bassham, S.; Fletcher, L.; Stanton, R. Dietary analysis with the aid of a microcomputer. J. Microcomput. Appl. 1984, 7, 279–289.

44. Dare, D.; Al-Bander, S.Y. A computerized diet analysis system for the research nutritionist. J. Am. Diet. Assoc. 1987, 87, 629–632.

45. Adelman, M.O.; Dwyer, J.T.; Woods, M.; Bohn, E.; Otradovec, C.L. Computerized dietary analysis systems: A comparative view. J. Am. Diet. Assoc. 1983, 83, 421–429.

46. Hezarjaribi, N.; Mazrouee, S.; Ghasemzadeh, H. Speech2Health: A Mobile Framework for Monitoring Dietary Composition From Spoken Data. IEEE J. Biomed. Health Inform. 2018, 22, 252–264.

47. Hezarjaribi, N.; Reynolds, C.A.; Miller, D.T.; Chaytor, N.; Ghasemzadeh, H. S2NI: A mobile platform for nutrition monitoring from spoken data. Conf. Proc. IEEE Eng. Med. Biol. Soc. 2016, 1991–1994.

48. Mankoff, J.; Hsieh, G.; Hung, H.C.; Lee, S.; Nitao, E. Using Low-Cost Sensing to Support Nutritional Awareness; Springer: Berlin/Heidelberg, Germany, 2002; pp. 371–378.

| Type | References | Detect Intake | Detect Food | Identify Specific Person | Low-Cost | Unsupervised | Without Intermediary | Portable |
|---|---|---|---|---|---|---|---|---|
| Smartphone | [16] |  |  |  |  |  |  |  |
| Smartphone | [17] |  |  |  |  |  |  |  |
| Smartphone | [18] |  |  |  |  |  |  |  |
| Smartphone | [19] |  |  |  |  |  |  |  |
| Computer Vision | [20] |  |  |  |  |  |  |  |
| Computer Vision | [21] |  |  |  |  |  |  |  |
| Computer Vision | [22] |  |  |  |  |  |  |  |
| Wearable | [23] |  |  |  |  |  |  |  |
| Wearable | [24] |  |  |  |  |  |  |  |
| Wearable | [25] |  |  |  |  |  |  |  |
| Wearable | [26] |  |  |  |  |  |  |  |
| Wearable | [27] |  |  |  |  |  |  |  |
| Wearable | [28] |  |  |  |  |  |  |  |
| Wearable | [29] |  |  |  |  |  |  |  |
| Wearable | [30] |  |  |  |  |  |  |  |
| Wearable | [31] |  |  |  |  |  |  |  |
| Wearable | [32] |  |  |  |  |  |  |  |
| Smart Home | [33] |  |  |  |  |  |  |  |
| Smart Home | [35] |  |  |  |  |  |  |  |
| Smart Home | [36] |  |  |  |  |  |  |  |
| IoT | [37] |  |  |  |  |  |  |  |
| IoT | [38] |  |  |  |  |  |  |  |
| IoT | [40] |  |  |  |  |  |  |  |
| Microcomputer | [43] |  |  |  |  |  |  |  |
| Microcomputer | [44] |  |  |  |  |  |  |  |
| Microcomputer | [45] |  |  |  |  |  |  |  |
| Others | [46] |  |  |  |  |  |  |  |
| Others | [47] |  |  |  |  |  |  |  |
| Others | [48] |  |  |  |  |  |  |  |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Moguel, E.; Berrocal, J.; Murillo, J.M.; Garcia-Alonso, J.; Mendes, D.; Fonseca, C.; Lopes, M. Monitoring Food Intake in an Aging Population: A Survey on Technological Solutions. Proceedings 2018, 2, 445. https://doi.org/10.3390/proceedings2190445
