Steps towards Industrial Validation Experiments

Abstract

1. Introduction

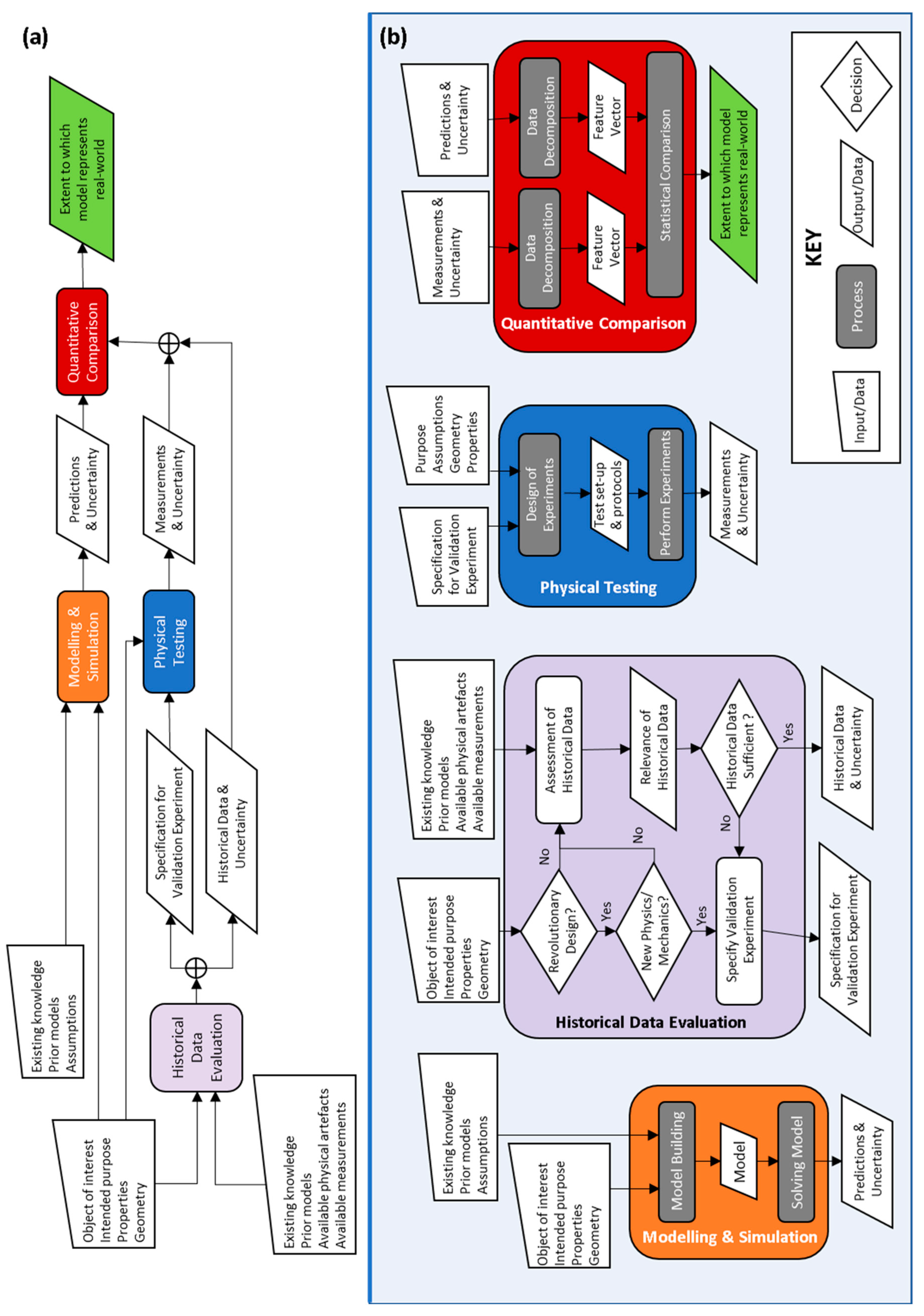

2. Validation in an Industrial Context

2.1. Prerequisites for a Significant Validation Outcome

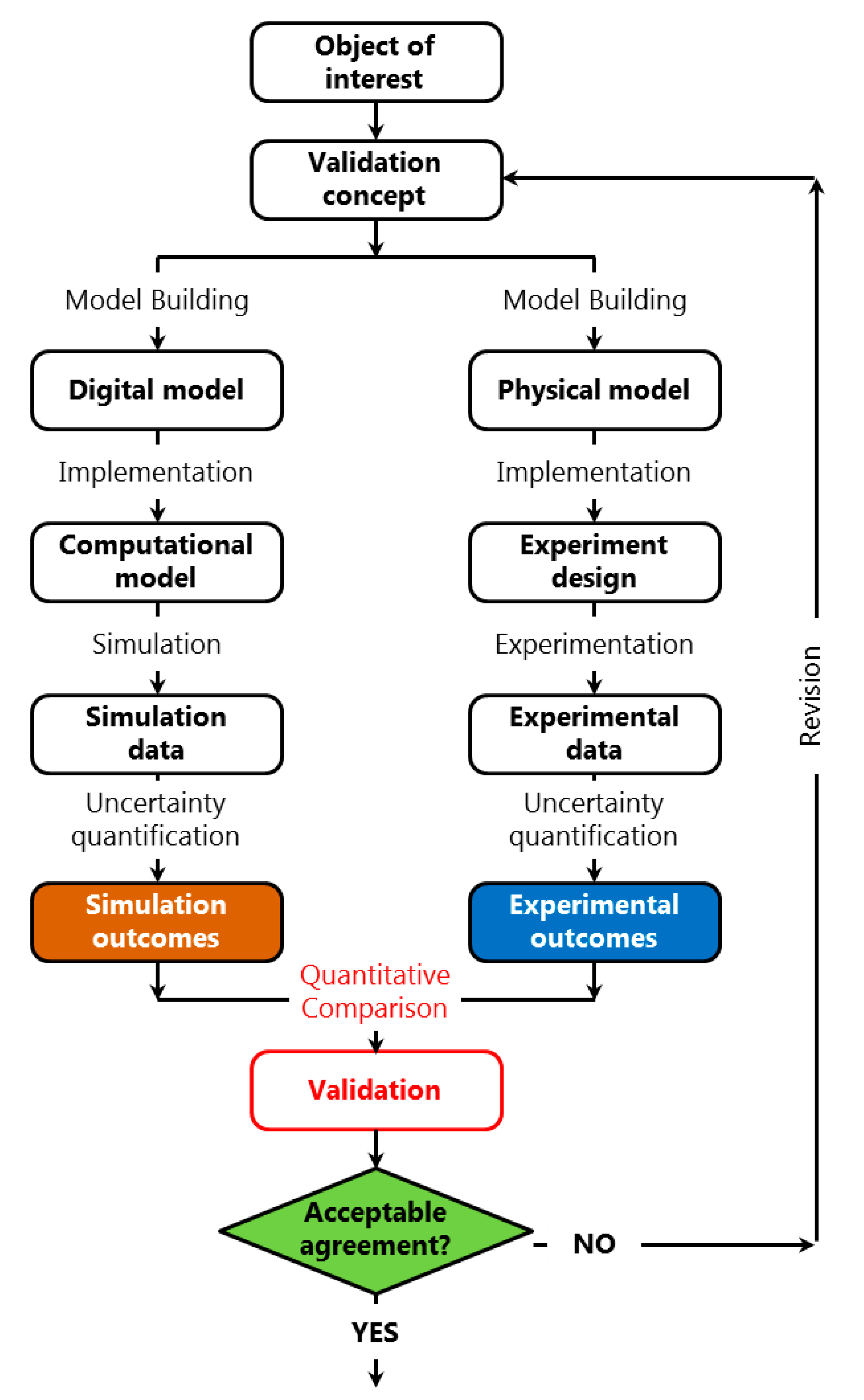

2.2. Quantitative Comparison Using a Validation Metric

2.3. Incorporation of Historical Data

3. Proposed Validation Flowchart

4. Conclusions

Author Contributions

Acknowledgments

Conflicts of Interest

References

- ASME. Guide for Verification and Validation in Computational Solid Mechanics; American Society of Mechanical Engineers ASME V&V 10-2006: New York, NY, USA, 2006.

- ASME. An Illustration of the Concepts of Verification and Validation in Computational Solid Mechanics; American Society of Mechanical Engineers ASME V&V 10.1-2012: New York, NY, USA, 2012.

- European Committee for Standardisation (CEN). Validation of computational solid mechanics models. In CEN Workshop Agreement, CWA 16799:2014 E. Available online: https://www.cen.eu/work/areas/Materials/Pages/WS-71.aspx (accessed on 30 April 2018).

- EU framework programme 7 project VANESSA: VAlidation of Numerical Engineering Simulations—Standardisation Action (Grant Agreement No. 319116). Available online: http://www.engineeringvalidation.org/vanessa (accessed on 30 April 2018).

- Hack, E.; Lampeas, G.; Patterson, E.A. An evaluation of a protocol for the validation of computational solid mechanics models. J. Strain Anal. Eng. Des. 2016, 51, 5–13.

- MOTIVATE, Matrix Optimization for Testing by Interaction of Virtual and Test Environments, H2020 Clean Sky 2 Project (Grant Agreement No. 754660). Available online: www.engineeringvalidation.org (accessed on 30 April 2018).

- Sutton, M.A.; Orteu, J.-J.; Schreier, H. Image Correlation for Shape, Motion and Deformation Measurements; Springer: New York, NY, USA, 2009.

- Reu, P.L. A study of the influence of calibration uncertainty on the global uncertainty for digital image correlation using a Monte Carlo approach. Exp. Mech. 2013, 53, 1661–1680.

- Hack, E.; Lin, X.; Patterson, E.A.; Sebastian, C.M. A reference material for establishing uncertainties in full-field displacement measurements. Meas. Sci. Technol. 2015, 26, 075004.

- Lampeas, G.; Pasialis, V.P.; Lin, X.; Patterson, E.A. On the validation of solid mechanics models using optical measurements and data decomposition. Simul. Model. Pract. Theory 2015, 52, 92–107.

- Sargent, R.G. An introduction to verification and validation of simulation models. In Proceedings of the 2013 Winter Simulation Conference, Washington DC, USA, 8–11 December 2013; pp. 321–327.

- Oberkampf, W.L.; Barone, M.F. Measures of agreement between computation and experiment: Validation metrics. J. Comput. Phys. 2006, 217, 5–36.

- Dvurecenska, K.; Patelli, E.; Patterson, E.A. What’s the probability that a simulation agrees with your experiment? In Proceedings of the Photomechanics 2018 conference, Toulouse, France, 19–22 March 2018; pp. 65–67.

- Chair of CEN Workshop WS71.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hack, E.; Burguete, R.; Dvurecenska, K.; Lampeas, G.; Patterson, E.; Siebert, T.; Szigeti, E. Steps towards Industrial Validation Experiments. Proceedings 2018, 2, 391. https://doi.org/10.3390/ICEM18-05216