An Efficient Algorithm for Cleaning Robots Using Vision Sensors

Abstract

1. Introduction

2. Related Works

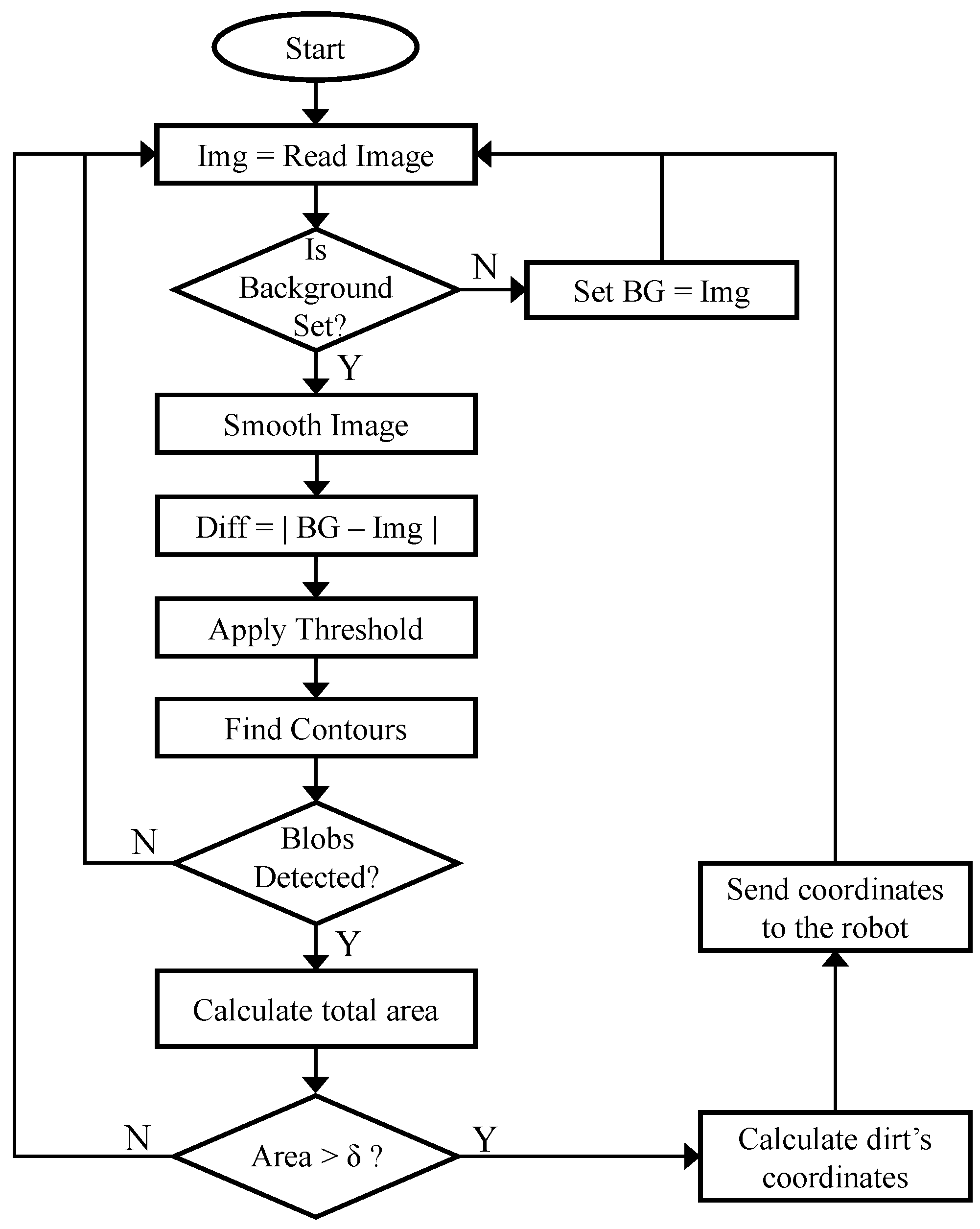

3. Dirt Detection and Robot Notification Algorithm

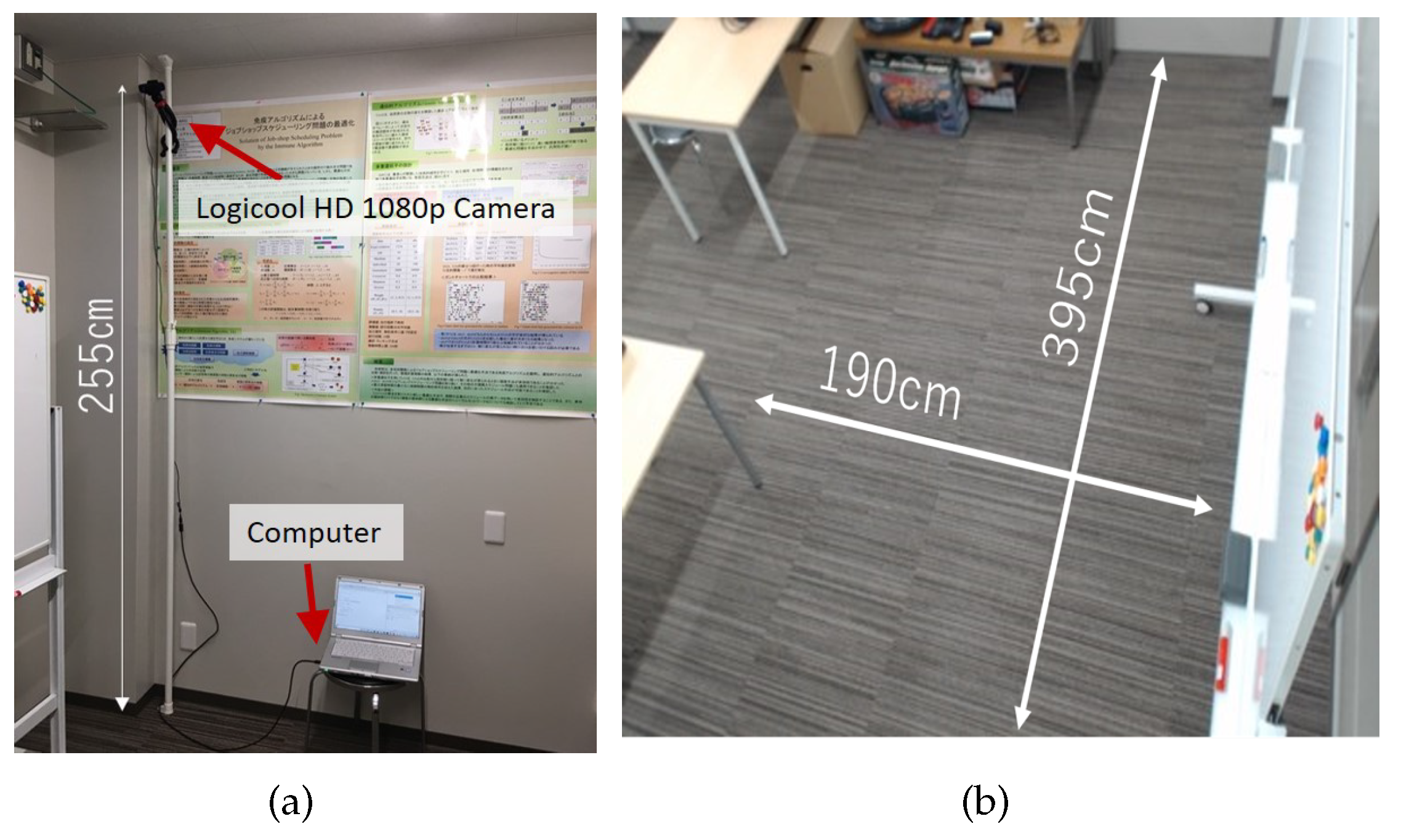

4. Experiment and Results

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ravankar, A.; Ravankar, A.A.; Watanabe, M.; Hoshino, Y. An Efficient Algorithm for Cleaning Robots Using Vision Sensors. Proceedings 2020, 42, 45. https://doi.org/10.3390/ecsa-6-06578
