1. Introduction

Human-robot collaboration is becoming increasingly useful for assembly operations. Some assembly tasks, such as snap-joining, induce high accelerations during the engagement or disengagement of two parts [1]. A combined motion tracking approach for both humans and robots is necessary to develop a concept of safe physical human-robot collaboration [2]. In assembly tasks such as snap-joining, the typical activities are basic motions such as pick, reach, join, apply force, and move. Such motions require a control mechanism to ensure the position and orientation of the joint variables. Similarly, the safety of the human worker has to be ensured, particularly with respect to motion outliers caused by the high accelerations. If real-time motion tracking is assumed, the robot motion is prescribed by the motion planning algorithm. In the same way, joint state motion tracking can be used to monitor and control the robot. This approach is considered for developing a closed-loop control that controls the robot in real time [3]. Therefore, real-time motion tracking, path planning, assembly task description and system configuration are crucial to derive a concept for real-time assembly operation that creates a safe physical collaboration between humans and robots.

Real-time motion tracking for human-robot collaboration can be considered from the perspective of tracking systems and system capabilities. Different tracking systems, such as optical systems or inertial measurement units, have been employed to capture motions [4,5,6,7]. Path planning in assembly tasks considers the modality of human motion behavior, task descriptions and robot motions. Planning assembly tasks, in turn, defines the sequence of operations and the component attributes. For tasks that involve applied force, parameters such as the magnitude of the applied force, the orientation of action, control mechanisms and retraction methods are considered. In snap-joining, force is usually applied along a specific orientation. The work in [8] considered assembly tasks for elastic parts using dual robot arms and a human. The assembly process involved the insertion of O-rings into a cylinder. The human hand movement was captured to derive a concept and a strategy for generating robot motion. In this case, a Leap sensor was used to capture finger movement.

Monitoring and visualization for hybrid systems have become simpler because middleware systems can communicate via the user datagram protocol (UDP) or the transmission control protocol (TCP). In the robotics field, some industrial robots are supported by the Robot Operating System Industrial (ROS-I). The ROS-I community actively shares developments and concepts, which makes the system advantageous for implementing user-oriented control techniques. ROS-I is a meta-operating system that is mainly developed for industrial systems [9]. Tools such as MoveIt! and Gazebo are commonly used to generate motion trajectories and to model the geometry simultaneously. However, the geometric visualization and rendering quality of Gazebo is limited. In this regard, gaming software such as Unity3D can be considered as a potential alternative to obtain good graphical rendering, particularly for virtual commissioning or process monitoring. Recent works show that Unity3D can be coupled with a robot operating system such as ROS-I to enable real-time streaming or offline simulation. In particular, the authors in [10] presented a framework to simulate and monitor industrial processes using Unity3D. However, that work does not address how real-time motion tracking can be applied to human-robot collaboration. Regarding the application of Unity3D for human-robot interaction, initial work is presented in [11], which considers a Unity3D-based robot engine to control robots. Recently, ROS# [12] was developed by Siemens to simplify the interfacing of ROS-I and Unity3D systems through WebSockets.

In this work, we investigate a method to implement simplified and decentralized real-time motion tracking with a consistent file exchange format for both human and robot models, using low-cost motion capture systems. In this context, we implement digital models of both the human and the robot that apply a kinematic model to control the movement of the human and robot skeletons. We combine robot joint motions and human motion in a single intuitive graphical interface so that motions can be tracked easily using sensor systems. A low-cost motion tracking system that is primarily commercialized for gaming is used to develop the concept of motion tracking in human-robot collaborative environments. A generic model controller drives both the robot and the human joints in the Unity3D environment. ROS-I and SteamVR are employed to facilitate the communication and data flow between the graphical controllers. In the model, kinematic trees are used to prescribe joint motions during each motion step. Finally, the motion data is visualized and analyzed in terms of distribution and position.

2. Materials and Methods

As pointed out in the introduction, the Robot Operating System (ROS) is widely used for robot control, allowing users to implement their desired motion control strategies programmatically [9,13,14]. This approach is essential for integrating sensors and high-level control strategies whenever necessary. However, the ROS-I community commonly uses the Python and C++ programming languages to develop drivers and controllers, whereas gaming software such as Unity3D natively uses C#, which makes ROS-I integration not straightforward. The ROS# asset developed by Siemens, released under the Apache License 2.0, was recently used to simplify the system configuration. It provides a simple interface between ROS-I and Unity3D systems based on WebSockets. We applied this framework to integrate the robot system, the HTC Vive system and the ROS-I system on a single machine running the Linux operating system. ROS-I was the master that controlled the communication data from each system, and Unity3D was used to control the digital models for virtual process verification. As a gaming engine, the Unity3D environment has a better graphical rendering capability and can simulate a virtual scene at a high frame rate. Therefore, it was easy to observe the details of motion behaviors during real-time tracking.
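As an illustration of the kind of message exchange a WebSocket bridge between ROS and Unity3D relies on, the following minimal sketch builds and parses JSON messages in the style of the rosbridge protocol. It assumes, beyond what is stated above, that the bridge uses rosbridge-style `subscribe` and `publish` operations and that the robot joint states arrive as `sensor_msgs/JointState` on a topic named `/joint_states`; the joint names and values are made up for illustration, and no network connection is opened.

```python
import json


def make_subscribe(topic, msg_type):
    """Build a rosbridge-style subscribe request as a JSON string."""
    return json.dumps({"op": "subscribe", "topic": topic, "type": msg_type})


def parse_joint_state(raw):
    """Return {joint name: position in rad} from a rosbridge-style publish message."""
    data = json.loads(raw)
    if data.get("op") != "publish":
        raise ValueError("not a publish message")
    msg = data["msg"]
    return dict(zip(msg["name"], msg["position"]))


# Request that would be sent over the WebSocket (topic name is an assumption).
request = make_subscribe("/joint_states", "sensor_msgs/JointState")

# Example incoming frame; joint names and values are hypothetical.
incoming = json.dumps({
    "op": "publish",
    "topic": "/joint_states",
    "msg": {"name": ["shoulder_pan_joint", "elbow_joint"],
            "position": [0.0, 1.57]},
})
joints = parse_joint_state(incoming)
```

On the Unity3D side, a dictionary like `joints` would be what a model controller consumes to set the angles of the digital robot.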

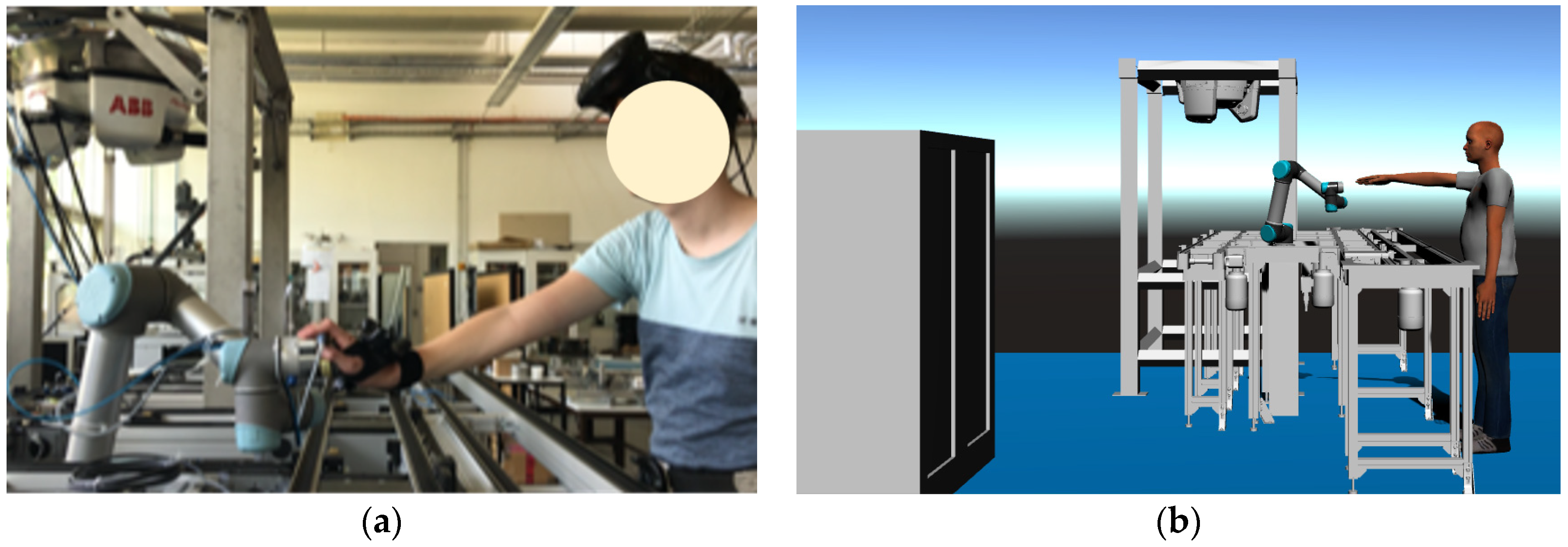

In general, the human-robot collaborative assembly task involves a method to represent the digital human model, which is used to map the tracking data onto joint variables. In this context, we created a human avatar using the MakeHuman package [15]. The digital human model was configured according to the worker's size before it was exported as an FBX file. The model has 53 joints. However, only 9 trackers were attached to the human body, and the remaining body joints were neglected. The HTC Vive trackers were attached to the body using straps of different sizes (see Figure 1).
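The idea of driving a skeleton from a small number of trackers can be sketched with a toy kinematic tree. The example below is a planar simplification with three hypothetical joints (`shoulder`, `elbow`, `wrist`), not the 53-joint model described above: each joint stores an offset from its parent and one rotation angle, tracked joints receive an angle, and untracked joints stay at their rest angle.

```python
import math


class Joint:
    """Node of a planar kinematic tree: fixed offset from the parent plus one angle."""

    def __init__(self, name, offset, parent=None):
        self.name = name
        self.offset = offset  # (x, y) in the parent's frame, metres
        self.angle = 0.0      # rotation about z relative to the parent, radians
        self.parent = parent

    def world_pose(self):
        """Return (x, y, theta) in the world frame by walking up to the root."""
        if self.parent is None:
            return (self.offset[0], self.offset[1], self.angle)
        px, py, pt = self.parent.world_pose()
        x = px + math.cos(pt) * self.offset[0] - math.sin(pt) * self.offset[1]
        y = py + math.sin(pt) * self.offset[0] + math.cos(pt) * self.offset[1]
        return (x, y, pt + self.angle)


def apply_tracking(joints, tracked_angles):
    """Set angles for tracked joints only; untracked joints keep their rest angle."""
    for name, angle in tracked_angles.items():
        joints[name].angle = angle


# Hypothetical arm chain; segment lengths are illustrative values.
shoulder = Joint("shoulder", (0.0, 0.0))
elbow = Joint("elbow", (0.30, 0.0), parent=shoulder)
wrist = Joint("wrist", (0.25, 0.0), parent=elbow)
skeleton = {j.name: j for j in (shoulder, elbow, wrist)}

# Only the shoulder is "tracked" here; the elbow and wrist are neglected.
apply_tracking(skeleton, {"shoulder": math.pi / 2})
wrist_x, wrist_y, _ = wrist.world_pose()
```

With the shoulder rotated by 90 degrees and the other joints at rest, the wrist ends up 0.55 m along the Y-axis, which shows how a single tracked joint moves every joint below it in the tree.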

At the same time, the geometric model of the collaborative robot, e.g., a Universal Robots manipulator, was imported into the Unity3D environment. The Unified Robot Description Format (URDF) importer in ROS# made it easy to configure the digital robot model in Unity3D. Finally, by launching the file server, we enabled ROS-I communication through WebSocket on a single computer running a Linux operating system (see Figure 2).

In general, developing the tracking system allows a trajectory to be planned for the robot motion, which the robot then follows. The assembly task is a sequence of motions such as pick, reach, join, apply force, and move. The concept is that the human worker pushes the second object to be assembled onto the first object, which is held by the robot gripper. The human worker then applies pressure to fit both objects together. The concept of the experimental design is shown in Figure 3. The human operator was tracked by the HTC Vive trackers, and the robot system was tracked through the ROS-I system.

3. Results

Human-robot motion collaboration using a low-cost tracking system was applied to derive a concept of how a human worker behaves during an assembly process. The motion was parameterized into different levels for both the human and the robot. For the human, the task was defined as a sequence of motions such as reach, join, apply force, and release. The robot held its position during the assembly process by means of a wait function. Once the assembly process had been performed, the robot started to move to the target position. The task execution was therefore monitored in terms of time and space (see Table 1).
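The interplay between the human motion sequence and the robot's wait behavior can be sketched as a small state tracker. The text does not specify how the wait function is implemented, so the class and method names below are hypothetical and the logic is only one plausible reading: the robot is told to wait until every human phase has been reported in order, and only then to move.

```python
HUMAN_PHASES = ["reach", "join", "apply force", "release"]


class AssemblyMonitor:
    """Tracks the human's progress through an ordered list of motion phases."""

    def __init__(self, phases):
        self.phases = list(phases)
        self.index = 0  # index of the next expected phase

    def report(self, phase):
        """Advance only if the reported phase is the next expected one."""
        if self.index < len(self.phases) and phase == self.phases[self.index]:
            self.index += 1

    def robot_command(self):
        """Return 'move' once all phases are complete, otherwise 'wait'."""
        return "move" if self.index == len(self.phases) else "wait"


monitor = AssemblyMonitor(HUMAN_PHASES)
monitor.report("reach")
monitor.report("join")
command_midway = monitor.robot_command()   # human still assembling

monitor.report("release")                  # out of order, ignored
monitor.report("apply force")
monitor.report("release")
command_done = monitor.robot_command()     # assembly finished
```

In a real setup, the `report` calls would come from classifying the tracked human motion, which is outside the scope of this sketch.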

An overview of the motion is given in Figure 4, which shows the motion of the right-hand wrist and the robot tool center point. This motion was captured during real-time execution.

In this work, the motion was captured for human joints and robot joints. The results were visualized in two-dimensional space to describe how the X-components and Y-components behaved. Figure 5a shows the projection onto the XY-plane, in which the arm moves toward the robot tool center point. In the same way, the distribution of the X-components is shown in Figure 5b. The labels A and B mark the motion toward the robot tool center point and the position of the unconstrained object, respectively.
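The two views described above, an XY projection and a distribution of X-components, can be reproduced from raw position samples with a few lines of code. The sketch below uses made-up wrist positions, not the measured data, and the function names and bin width are illustrative choices.

```python
import math


def project_xy(points):
    """Drop the Z-component of each (x, y, z) sample."""
    return [(x, y) for x, y, _ in points]


def histogram(values, bin_width):
    """Count values per bin; keys are bin indices floor(value / bin_width)."""
    counts = {}
    for v in values:
        b = math.floor(v / bin_width)
        counts[b] = counts.get(b, 0) + 1
    return counts


# Hypothetical wrist samples in metres: two clusters along the X-axis.
samples = [
    (0.11, 0.50, 1.00),
    (0.12, 0.48, 1.00),
    (0.31, 0.20, 0.90),
    (0.33, 0.18, 0.90),
]

xy = project_xy(samples)
x_hist = histogram([x for x, _ in xy], bin_width=0.1)
```

Two separate clusters in such a histogram would correspond to two distinct regions of motion, in the same way that the labels A and B distinguish two regions in the figure.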

This work is part of ongoing research; further analysis of time-space behavior and positioning accuracy will therefore be considered in future work.

4. Discussion

In this work, we presented a concept of real-time motion tracking for assembly processes in human-robot collaborative environments using a configuration of different systems such as ROS-I and Unity3D. The key achievements of this work were generic model controllers for human and robot motion tracking based on a kinematic tree structure. In addition, consistent motion capture formats and exchanges were investigated, and the capability of low-cost, commercially available gaming equipment such as the HTC Vive was tested. Throughout the process, a single operating system (Linux, Ubuntu 18.04) was used to run both the ROS-I and Unity3D systems. In this configuration, the assembly process was described in terms of the motion types reach, join, apply force, release, and move, which were defined to be executed by the human and the robot. Based on the preliminary evaluation, a wait function was defined to classify the tasks and their descriptions. This approach does not optimize the assembly process or the cycle time. Likewise, real-time robot control to avoid a possible collision, should the human operator move into the robot path before the robot reaches the assembly position, was not implemented. In this regard, this work serves as an initial step toward addressing safety parameters.

From the results of this work, it can be concluded that real-time tracking in a human-robot collaborative assembly environment can be considered as a means to maximize human worker safety. In this regard, the reliability and robustness of the system with respect to disturbances still need to be considered.