Matlab Framework for Image Processing and Feature Extraction Flexible Algorithm Design

Abstract

1. Introduction

2. Materials and Methods

- Tag: unique identifier of a step, typically the name of the function associated with this step, followed by a numeric index accounting for possible multiple uses of the same function;

- Active: a Boolean (true/false) value indicating whether this step is executed when the algorithm runs, so that individual steps can be switched on and off while testing algorithms;

- InParamLinks: a list of strings linking the parameters of this step's function to values returned by functions in previous steps, where applicable (not a typical situation, but implemented for flexibility).
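The three step fields above can be pictured as a small record. The following is a minimal sketch in Python, not the framework's MATLAB code. The `Name_N` tag layout, the `in_X=Tag.out_Y` link syntax, and the helper names `make_tag`, `parse_link` and `active_steps` are assumptions made for illustration only; the text does not specify them.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Step:
    """One algorithm step, mirroring the Tag, Active and InParamLinks fields."""
    tag: str                      # unique identifier, e.g. "Threshold_1"
    active: bool = True           # inactive steps are skipped at run time
    in_param_links: List[str] = field(default_factory=list)


def make_tag(function_name: str, existing_tags: List[str]) -> str:
    """Build a tag from the function name plus the next free numeric index.

    The "Name_N" layout is an assumption; the text only states that the
    function name is followed by a numeric index.
    """
    index = 1
    while f"{function_name}_{index}" in existing_tags:
        index += 1
    return f"{function_name}_{index}"


def parse_link(link: str) -> Dict[str, str]:
    """Split a hypothetical link string "in_X=Tag.out_Y" into its parts."""
    target, source = link.split("=", 1)
    source_tag, source_param = source.rsplit(".", 1)
    return {"target": target, "source_tag": source_tag, "source_param": source_param}


def active_steps(steps: List[Step]) -> List[Step]:
    """Return only the steps whose Active flag is set."""
    return [s for s in steps if s.active]
```

Using the same function twice yields two distinct tags, and clearing `active` removes a step from the run without deleting it from the algorithm.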

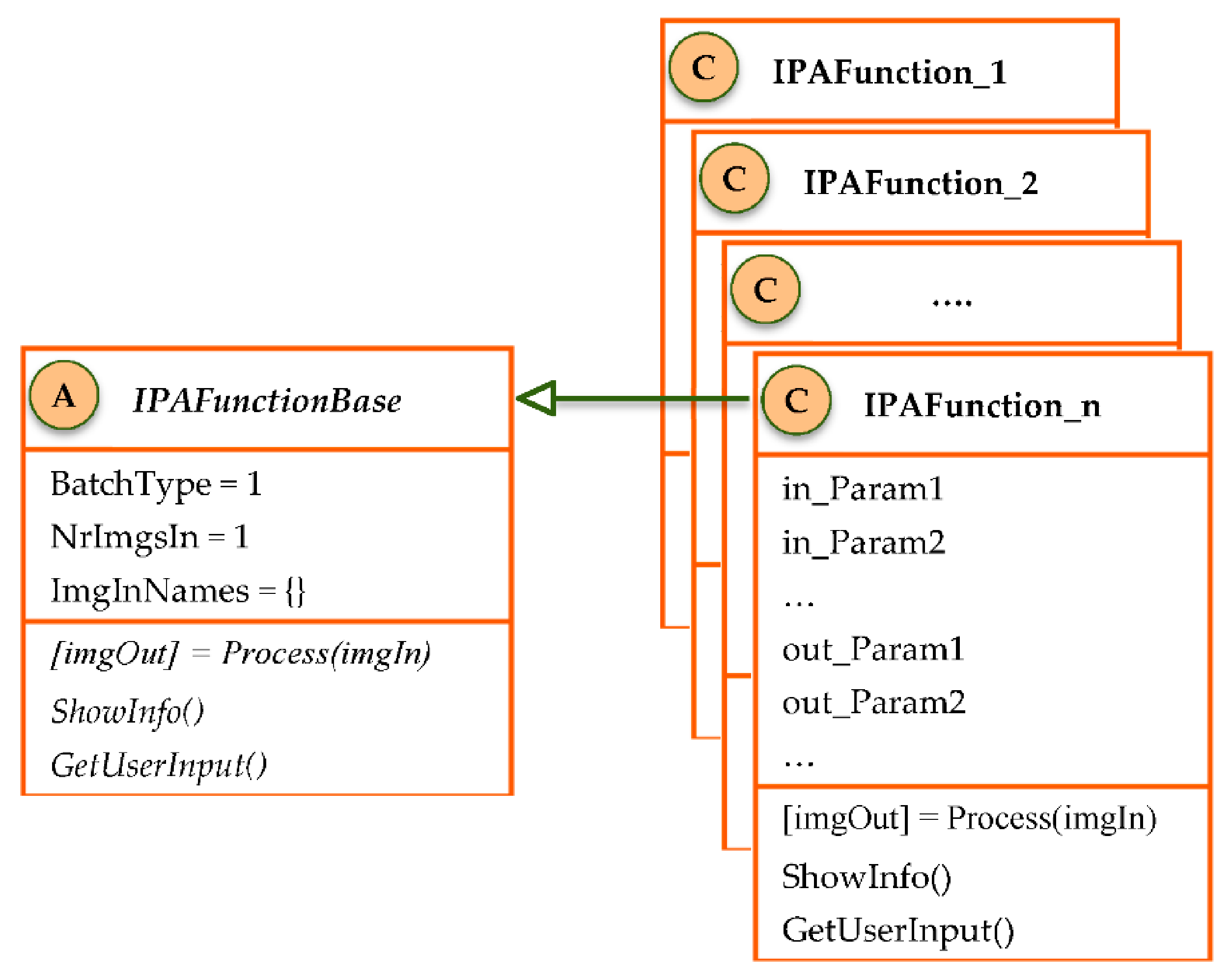

- BatchType (ReadOnly): the default value (1) indicates that the function treats the input images as a batch, processing each of them separately. A value of 2 should be set in the derived classes for functions that aggregate all input images and return a single output.

- NrImgsIn (ReadOnly): number of image inputs. The default value of 1 means the function processes images produced by only 1 of the previous steps (or the original images), while greater values can be used where the input images originate from multiple previous steps.

- ImgInNames: a list of strings containing the tag(s) of the previous step(s) providing the input images. It should have a number of elements equal to NrImgsIn or be empty. If empty, the engine assumes only 1 input image, the one provided by the previous step (most common scenario).

- Process: abstract method that needs to be overridden in the derived classes, implementing the logic of the image processing function. It has only 1 argument, the input image(s), and 1 output, the processed image(s). Possible additional arguments and results are implemented in the derived classes as public properties.

- ShowInfo: implemented in derived classes only in the case of functions returning information other than images. It typically outputs feature extraction data in a visual form (GUI, graphs). It can optionally be called by the Process method to show the information when the algorithm runs; however, it is a separate method and can be accessed from the application GUI at any time.

- GetUserInput: used in the case of functions requiring the user to provide coordinates of points from the original image(s). Useful for functions extracting scale data from images providing such information and in some other fringe scenarios.

- in_ParamName parameters: properties whose names begin with the “in_” prefix are considered input arguments for the image processing function. These can be set in the application GUI at algorithm construction time.

- out_ParamName parameters: properties whose names begin with the “out_” prefix are results returned by the function other than images. They can be end results (feature extraction information) or intermediate data used by the “in_” parameters of subsequent steps.
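The base class members listed above can be illustrated with a compact sketch. It is written in Python rather than MATLAB and is not the framework's implementation. The class names `ImageFunction`, `Threshold` and `MeanImage`, the `run` engine, the `"original"` key, and the choice to let the engine (rather than the function) loop over a batch are all assumptions. Images are plain nested lists to keep the example self-contained, and only the single-source case (NrImgsIn = 1) is handled.

```python
from abc import ABC, abstractmethod

BATCH_EACH = 1   # default: every input image is processed separately
BATCH_ALL = 2    # all input images are aggregated into a single output


class ImageFunction(ABC):
    """Hypothetical base class mirroring the members described above."""
    batch_type = BATCH_EACH   # overridden (not assigned) in derived classes
    nr_imgs_in = 1            # number of previous steps supplying images

    def __init__(self):
        self.img_in_names = []   # empty: take images from the previous step

    @abstractmethod
    def process(self, images):
        """Image processing logic; must be overridden."""

    def show_info(self):
        """Optional: present non-image results (no-op by default)."""

    def get_user_input(self, images):
        """Optional: collect point coordinates from the user (no-op here)."""

    def params(self, prefix):
        """Collect properties by name prefix, e.g. "in_" or "out_"."""
        return {k: v for k, v in vars(self).items() if k.startswith(prefix)}


class Threshold(ImageFunction):
    """Per-image step with one input parameter and one non-image result."""
    def __init__(self, level=128):
        super().__init__()
        self.in_level = level
        self.out_fraction = None   # holds the value for the last image processed

    def process(self, image):
        binary = [[1 if px >= self.in_level else 0 for px in row] for row in image]
        total = sum(len(row) for row in image)
        self.out_fraction = sum(map(sum, binary)) / total
        return binary


class MeanImage(ImageFunction):
    """Aggregating step: averages all input images into one."""
    batch_type = BATCH_ALL

    def process(self, images):
        n, rows, cols = len(images), len(images[0]), len(images[0][0])
        return [[sum(img[r][c] for img in images) / n for c in range(cols)]
                for r in range(rows)]


def run(steps, originals):
    """Run (tag, function) pairs in order; returns {tag: list of images}."""
    results = {"original": originals}
    previous = "original"
    for tag, func in steps:
        source = func.img_in_names[0] if func.img_in_names else previous
        inputs = results[source]
        if func.batch_type == BATCH_EACH:
            results[tag] = [func.process(img) for img in inputs]
        else:
            results[tag] = [func.process(inputs)]
        previous = tag
    return results
```

Calling `run([("Threshold_1", Threshold()), ("MeanImage_1", MeanImage())], images)` keeps the output of every step under its tag, so a later step could name any earlier tag in `img_in_names`. The prefix lookup in `params` shows one way a GUI could discover editable inputs and reportable results without a separate registry.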

3. Results and Discussion

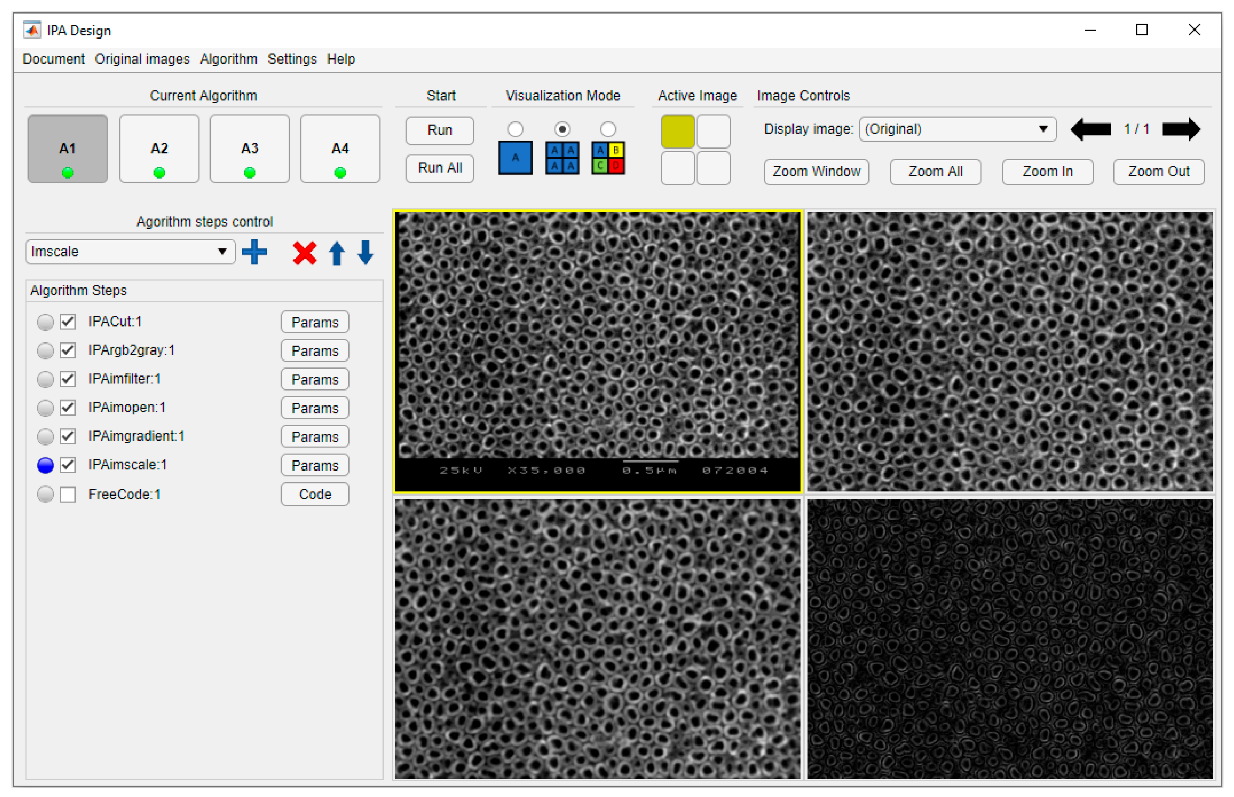

- Tools area. Fixed interface area with menus and controls for all operations except algorithm creation: saving and loading documents and algorithms, managing function paths, running algorithms, and controlling and navigating the image display area.

- Algorithm area. Dynamically generated and managed part of the interface, consisting of a list of controls associated with algorithm steps. Each line contains a control for the selection of the step, a checkbox associated with the Active field, a static text specifying the tag of the step (containing the name of the associated class), and a button opening the parameters window. Steps can be added, deleted, or reordered using the controls in the upper area.

- Image display area. Main panel for displaying the original and transformed images. It can show a single image belonging to the active algorithm, four images from different steps of the active algorithm, or four images from specified steps of each of the four algorithms in a document.

4. Conclusions and Further Research

Funding

Acknowledgments

Conflicts of Interest

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Cazacu, R. Matlab Framework for Image Processing and Feature Extraction Flexible Algorithm Design. Proceedings 2020, 63, 72. https://doi.org/10.3390/proceedings2020063072
