Optimizing the Scale of Observation for Intertidal Habitat Classification through Multiscale Analysis

Abstract

1. Introduction

2. Materials and Methods

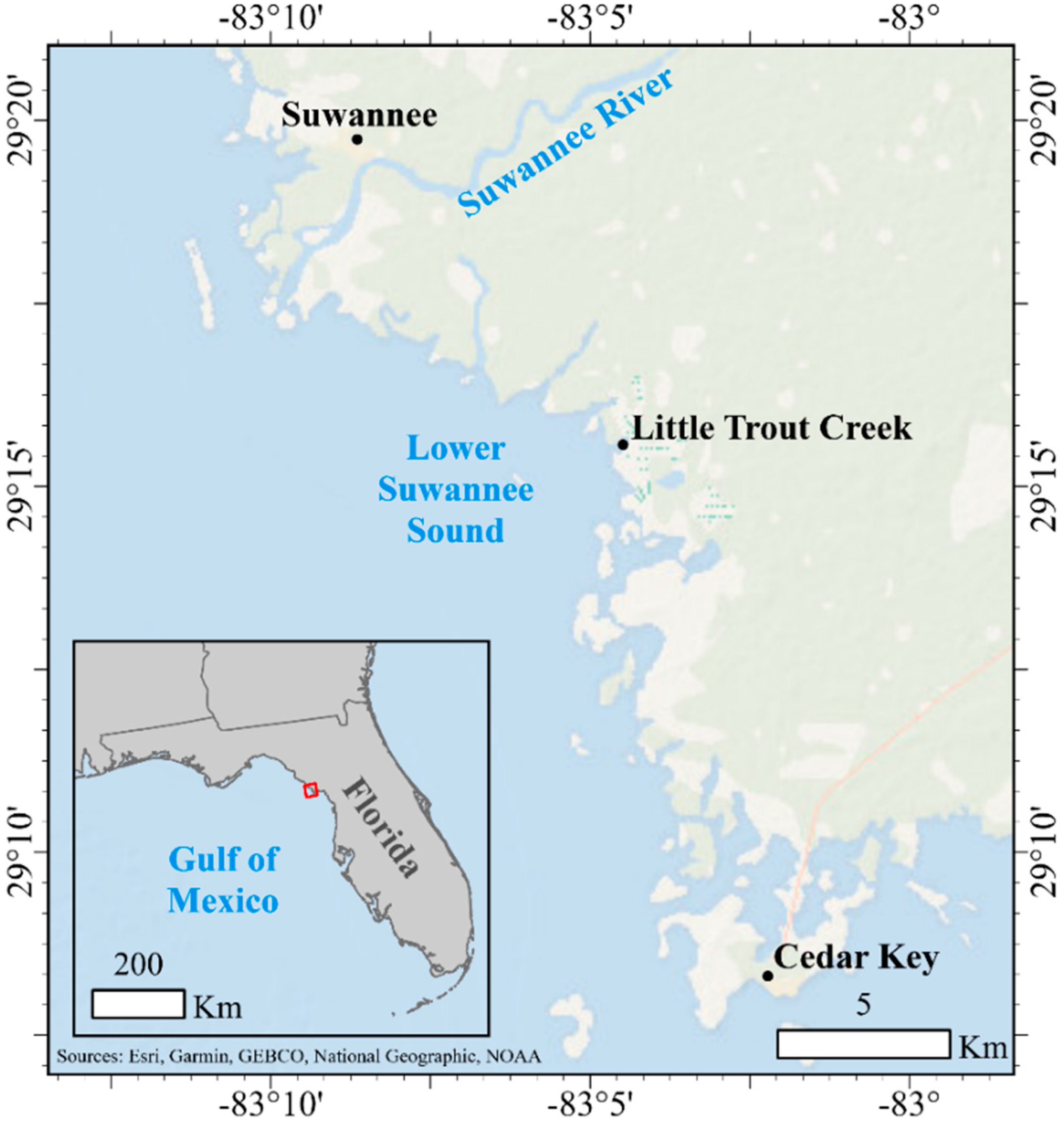

2.1. Study Site and UAS Survey

2.2. Imagery Processing

2.3. GEOBIA—Segmentation Optimization on Representative Subset Area

2.4. GEOBIA-Segmentation and Classification of the Entire Scene

2.5. Accuracy Assessment

2.6. Multiscale Analysis

3. Results

3.1. Imagery Processing

3.2. Segmentation Optimization

3.3. Segmentation and Classification

3.4. Accuracy Assessment

3.5. Variable Importance

3.6. Mode of Classifications

3.7. Multiscale Classification

4. Discussion

4.1. Classification Performance

4.2. Considerations, Challenges, and Future Directions

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Parameter | Value Used |
|---|---|
| Training threshold | 0.75 |
| Balance method | “ubOver” (over-sampling) |
| Evaluation metric | “Kappa” (kappa coefficient) |
| Evaluation method | “10FCV” (10-fold cross-validation) |
| Min train cases | 20 |
| Min cases by class train | 5 |
| Min cases by class test | 5 |
| Population size | 20 |
| Probability of crossover | 0.8 |
| Probability of mutation | 0.2 |
| Max number of iterations | 5 |
| Run | 20 |
| Keep best | TRUE |


| Resolution | Spectral Radius | Spatial Radius | Min Size (Pixels) | Min Size (m²) |
|---|---|---|---|---|
| 3 cm | 56 | 56 | 8286 | 7.46 |
| 5 cm | 63 | 61 | 2932 | 7.33 |
| 7 cm | 65 | 71 | 1626 | 7.97 |
| 9 cm | 71 | 39 | 931 | 7.54 |
| 11 cm | 62 | 70 | 740 | 8.96 |
| 13 cm | 69 | 55 | 455 | 7.69 |
| 15 cm | 63 | 45 | 329 | 7.40 |
| 17 cm | 67 | 55 | 261 | 7.54 |
| 19 cm | 86 | 59 | 207 | 7.47 |
| 21 cm | 76 | 77 | 161 | 7.10 |
| 23 cm | 86 | 73 | 133 | 7.04 |
| 25 cm | 74 | 39 | 113 | 7.06 |
| 27 cm | 66 | 60 | 107 | 7.80 |
| 29 cm | 89 | 72 | 92 | 7.73 |
| 31 cm | 71 | 45 | 77 | 7.40 |
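The two minimum-size columns in the table above are linked by the pixel footprint: the area in square meters is the pixel count multiplied by the squared pixel edge length in meters. The following is a minimal Python sketch of that conversion. The function name `min_size_m2` is hypothetical and not part of any tool used in the study, and computed values may differ from the published ones in the last digit because of rounding.

```python
def min_size_m2(resolution_cm: float, min_size_pixels: int) -> float:
    """Convert a minimum segment size from pixels to square meters.

    resolution_cm is the pixel edge length in centimeters.
    """
    pixel_edge_m = resolution_cm / 100.0
    return min_size_pixels * pixel_edge_m ** 2


# Three rows taken from the table above: (resolution in cm, min size in pixels)
rows = [(3, 8286), (5, 2932), (31, 77)]
for res_cm, n_pixels in rows:
    print(f"{res_cm} cm: {min_size_m2(res_cm, n_pixels):.2f} m2")
```

Read this way, the minimum segment size stays within roughly 7 to 9 m² across all resolutions, even though the pixel count drops by two orders of magnitude.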

| Resolution | Marsh PA | Marsh UA | Mud PA | Mud UA | Oyster PA | Oyster UA | Water PA | Water UA | Overall | Kappa |
|---|---|---|---|---|---|---|---|---|---|---|
| 3 cm | 92% | 90% | 49% | 86% | 87% | 74% | 96% | 75% | 80% | 0.736 |
| 5 cm | 92% | 86% | 73% | 77% | 78% | 80% | 88% | 86% | 82% | 0.764 |
| 7 cm | 88% | 89% | 73% | 71% | 72% | 84% | 87% | 76% | 79% | 0.727 |
| 9 cm | 91% | 92% | 69% | 83% | 75% | 77% | 90% | 74% | 81% | 0.743 |
| 11 cm | 93% | 92% | 80% | 74% | 75% | 78% | 77% | 83% | 81% | 0.749 |
| 13 cm | 89% | 93% | 72% | 74% | 74% | 76% | 83% | 77% | 79% | 0.723 |
| 15 cm | 88% | 95% | 74% | 74% | 81% | 71% | 77% | 82% | 80% | 0.728 |
| 17 cm | 91% | 89% | 78% | 75% | 73% | 84% | 86% | 80% | 82% | 0.757 |
| 19 cm | 85% | 95% | 56% | 80% | 80% | 76% | 93% | 69% | 78% | 0.713 |
| 21 cm | 93% | 91% | 72% | 78% | 78% | 82% | 87% | 78% | 82% | 0.759 |
| 23 cm | 88% | 96% | 67% | 74% | 78% | 81% | 87% | 72% | 80% | 0.732 |
| 25 cm | 88% | 90% | 72% | 78% | 78% | 81% | 89% | 77% | 81% | 0.751 |
| 27 cm | 95% | 89% | 67% | 83% | 82% | 71% | 82% | 83% | 81% | 0.745 |
| 29 cm | 85% | 90% | 60% | 82% | 73% | 84% | 97% | 64% | 78% | 0.708 |
| 31 cm | 87% | 91% | 75% | 79% | 79% | 83% | 89% | 77% | 82% | 0.763 |
| Mode | 95% | 93% | 76% | 87% | 86% | 84% | 91% | 83% | 87% | 0.821 |
| Multiscale | 92% | 89% | 58% | 88% | 86% | 86% | 96% | 75% | 83% | 0.778 |
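The columns in the table above follow the standard confusion-matrix definitions: producer's accuracy (PA) divides the correctly classified samples of a class by the reference total for that class, user's accuracy (UA) divides them by the mapped total, and the kappa coefficient corrects overall accuracy for chance agreement. The sketch below shows those calculations in plain Python. The function name `accuracy_metrics` and the example matrix are illustrative assumptions only; the matrix is invented and is not data from the study.

```python
def accuracy_metrics(matrix, labels):
    """Compute overall accuracy, kappa, and per-class PA and UA.

    matrix[i][j] is the number of samples mapped as class i whose
    reference (ground truth) class is j. Every class is assumed to
    have at least one mapped and one reference sample.
    """
    n = len(labels)
    total = sum(sum(row) for row in matrix)
    mapped_totals = [sum(matrix[i]) for i in range(n)]
    reference_totals = [sum(matrix[i][j] for i in range(n)) for j in range(n)]
    correct = [matrix[k][k] for k in range(n)]

    overall = sum(correct) / total
    producers = {labels[k]: correct[k] / reference_totals[k] for k in range(n)}
    users = {labels[k]: correct[k] / mapped_totals[k] for k in range(n)}

    # Agreement expected by chance, from the row and column marginals
    expected = sum(m * r for m, r in zip(mapped_totals, reference_totals)) / total ** 2
    kappa = (overall - expected) / (1 - expected)
    return overall, kappa, producers, users


# Hypothetical confusion matrix, for illustration only
labels = ["Marsh", "Mud", "Oyster", "Water"]
example = [
    [45, 2, 2, 1],
    [3, 35, 8, 4],
    [1, 9, 38, 2],
    [1, 4, 2, 43],
]
overall, kappa, producers, users = accuracy_metrics(example, labels)
print(f"Overall: {overall:.1%}, Kappa: {kappa:.3f}")
for name in labels:
    print(f"{name}: PA {producers[name]:.0%}, UA {users[name]:.0%}")
```

A class with high PA but low UA is mapped wherever it truly occurs but is also overpredicted elsewhere; the reverse pattern indicates underprediction.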

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Espriella, M.C.; Lecours, V. Optimizing the Scale of Observation for Intertidal Habitat Classification through Multiscale Analysis. Drones 2022, 6, 140. https://doi.org/10.3390/drones6060140
