1. Introduction

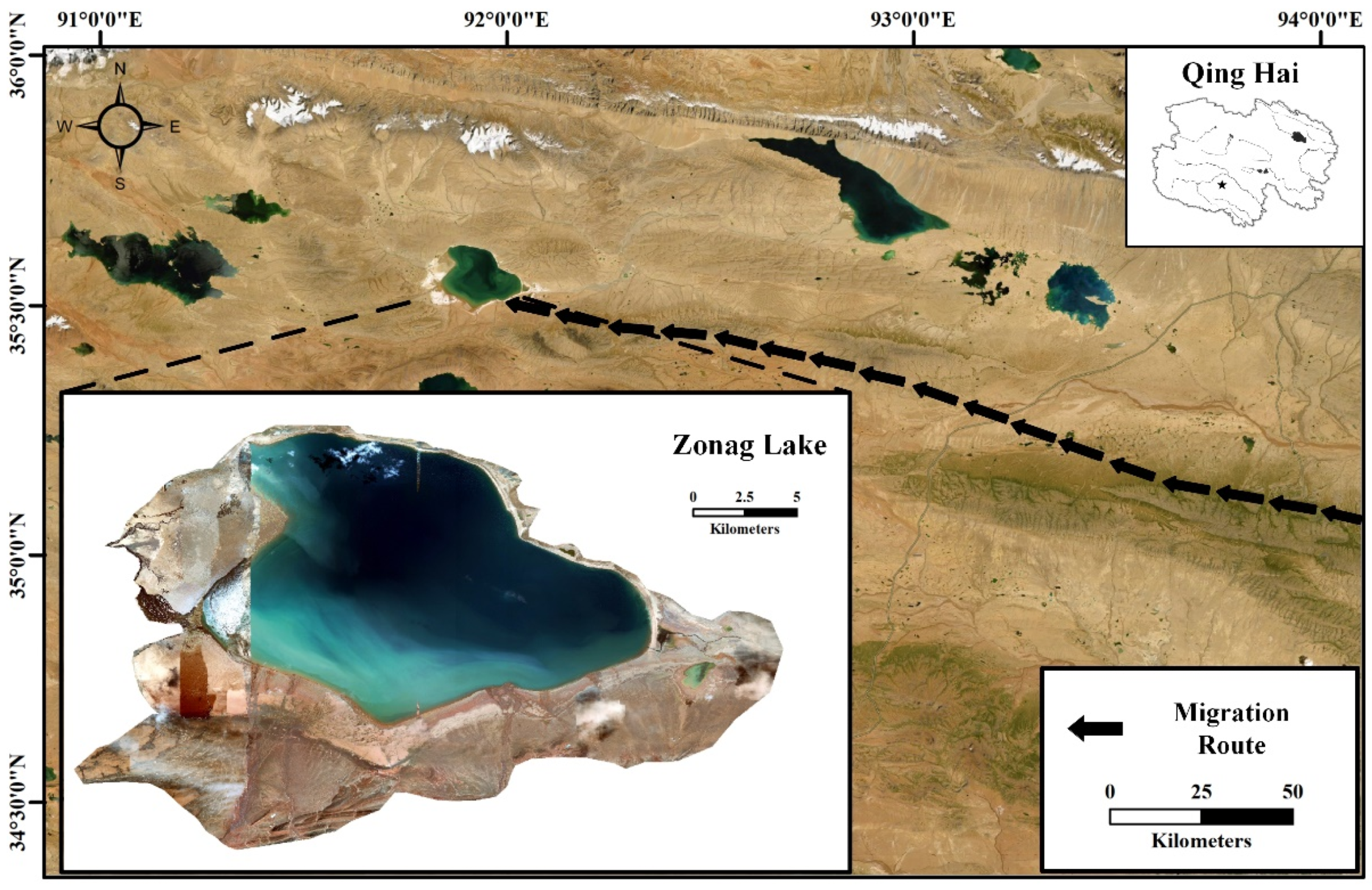

Located between the Tanggula Mountains and the Kunlun Mountains, Hoh Xil is one of the main water sources of the Yangtze River and the Yellow River [1]. It is a significant habitat for wildlife such as Tibetan antelopes, black-necked cranes, lynxes, and wild yaks. Hoh Xil National Nature Reserve (HXNNR) was established in 1995. The wider Hoh Xil region is mainly divided among four national nature reserves: the Qiangtang, the Arjinshan, the Sanjiangyuan, and the Hoh Xil; HXNNR itself is located in Qinghai province. Furthermore, HXNNR joined the World Heritage List in 2017 for its unique biodiversity and environmental conditions [2], and Tibetan antelopes are a highly representative population of this biodiversity.

However, wild Tibetan antelopes have been listed as endangered by the International Union for Conservation of Nature (IUCN) [3]. In response, they are protected by the Wildlife Protection Law of China and the Convention on International Trade in Endangered Species of Wild Fauna and Flora (CITES). Over the past century, hunting and grazing have been the main threats to the survival of the Tibetan antelope population. Worse, poachers killed large numbers of antelopes for their hides and skins because of the enormous financial profits. From 1900 to 1998, the Tibetan antelope population declined from a million to fewer than 70,000, with a mortality rate of nearly 85%. In the past two decades, the Chinese government and wildlife conservation organizations have acted to curb poaching and have made progress. Still, the deterioration of the antelopes’ natural habitat and environmental degradation continue to threaten their survival [4]. Owing to shortages of funds and labor, reserve staff can monitor only limited areas rather than the entire reserve. Moreover, manual ground surveys can themselves damage the fragile regions under protection [5].

Traditional survey methods mainly include field surveys by vehicle or on foot. However, Tibetan antelopes, especially pregnant females, are sensitive to the presence of humans and vehicles, so surveys are usually conducted from a distance. This approach can be appropriate during the non-migratory season, when small groups are evenly distributed across the plateau. Still, it may produce biased data where terrain features block sight lines or where animals gather in herds [6]. Some population surveys have been conducted with remotely piloted land-based rovers within survey sites [7], but this method is not suitable for larger areas or for uneven, rocky, and steeply sloping terrain. It is difficult to determine population size with ground survey methods, as animal movements can cause large fluctuations in density estimates. In addition, severe weather at high altitudes in unpopulated areas creates considerable uncertainty and makes it difficult for observers to reach survey areas [8]. Some zoologists have also tracked individuals with global positioning system (GPS) collars to identify calving grounds, migration corridors, and suitable habitats [1,9]. However, these methods cannot accurately and quickly estimate the number of animals or their distribution range, so new observation methods are needed.

In recent years, aerial surveys by unmanned aerial vehicles (UAVs) have become a promising option in unpopulated areas with poorly developed transportation and fragile environments [5,6]. Compared to full-size helicopters, small UAVs produce less noise while flying at altitudes from which ground information can still be obtained effectively, which significantly reduces the disturbance to wildlife [10]. Fixed-wing UAVs have also been applied to wildlife surveys [11]. However, they are not suitable for the large-area survey missions in this study, considering the take-off and landing environments and portability. Moreover, fixed-wing UAVs may also disturb wildlife because they may be perceived as avian predators [12]. In contrast, rotary-wing UAVs are more controllable and can obtain higher-resolution images, as they can fly at lower speeds and altitudes without disturbing Tibetan antelopes [6]. In addition, using commercial rotary-wing UAVs reduces the difficulty of flight operations in scientific investigations and allows researchers to focus on data analysis [13]. UAV airports have also recently been applied successfully in multiple fields [14], making regular unmanned aerial surveys possible. Using multiple UAVs in an autonomous system can reduce manual labor and allows surveys to be conducted with higher frequency and efficiency within the short windows imposed by weather, environmental conditions, and animal migration.

The efficiency of UAVs in conducting aerial surveys depends largely on how the area coverage paths are planned. Generally, the complete coverage path-planning (CCPP) problem for a single UAV can be solved by sweep-style patterns, which guide the UAV back and forth over a rectangular space [15]. Similar spatial patterns include spiral patterns [16] and Hilbert curves [17]. Robots that must follow cyclic paths may encounter many tracebacks in sweep-style patterns [18]. Tracebacks also occur in graph search methods, such as wavefront methods [19,20]. Approaches that focus on minimizing the number of turns are often built on the minimum energy method [21,22], which is more suitable for fixed-wing or fast-moving aircraft. In contrast, we focus on rotary-wing UAVs, which consume less energy during low-speed turns. In these path planning methods, the distance between the start and end points of a trajectory is often long, and unnecessary tracebacks may arise if the take-off and landing positions are far from the survey area. Moreover, it is time-consuming and inefficient for a single UAV to cover a large area in multiple sorties. It is therefore becoming common for formations of multiple UAVs to accomplish tasks jointly [23].
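
As a minimal sketch of the sweep-style pattern discussed above, the following Python snippet generates back-and-forth waypoints over an axis-aligned rectangle. The function names, the rectangle dimensions, and the line spacing are illustrative assumptions, not parameters taken from this paper.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def sweep_waypoints(width: float, height: float, spacing: float) -> List[Point]:
    """Back-and-forth waypoints covering a width x height rectangle.

    Flight lines run parallel to the x axis, at most `spacing` apart in y.
    All names and units here are hypothetical, for illustration only.
    """
    if width <= 0 or height <= 0 or spacing <= 0:
        raise ValueError("width, height and spacing must be positive")
    n_lines = math.ceil(height / spacing) + 1
    waypoints: List[Point] = []
    for i in range(n_lines):
        # Clamp the last line to the far edge so the rectangle is fully covered.
        y = min(i * spacing, height)
        if i % 2 == 0:
            waypoints += [(0.0, y), (width, y)]   # fly left to right
        else:
            waypoints += [(width, y), (0.0, y)]   # fly right to left
    return waypoints


def path_length(points: List[Point]) -> float:
    """Total Euclidean length of the polyline through `points`."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


if __name__ == "__main__":
    wps = sweep_waypoints(width=100.0, height=40.0, spacing=20.0)
    print(path_length(wps))             # length of the sweep itself
    print(math.dist(wps[-1], wps[0]))   # extra distance to return to the start
```

The second printed value illustrates the drawback noted above: a sweep usually ends far from where it started, so the return leg adds distance that collects no new imagery.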

Most multi-UAV CCPP algorithms extend single-UAV area coverage research [24]. Multi-UAV CCPP methods can be divided into two groups according to whether task assignment is performed. In multi-UAV CCPP without task assignment, each UAV avoids collisions with the others by communicating with them or by treating them as moving obstacles, and the UAVs then complete the area coverage task jointly. For instance, random coverage methods generate the path of each UAV randomly [25] and have a high traceback rate. An improved spanning tree coverage algorithm employs multiple UAVs for complete coverage of a task area whose environmental information is known [26]. However, this method has poor robustness, and the quality of a planned trajectory depends on the robot’s initial position. In contrast, task assignment-based multi-UAV CCPP methods divide the task area in some way and assign the resulting sub-regions to the UAVs. Each UAV then plans a coverage trajectory within its sub-region using a single-UAV CCPP algorithm. For instance, task assignments based on the sweep-style pattern [18] and polygonal area coverage [27] have been widely studied, but they have high backtracking rates. Moreover, many of these methods are used to find UAVs that meet given endurance requirements. In contrast, this paper emphasizes generating optimal paths that satisfy a maximum path length constraint determined by the UAV’s endurance.

Therefore, we formulate the multi-robot CCPP problem using satisfiability modulo theories (SMT), a generalization of Boolean satisfiability, because it allows us to encode all the specific survey-related constraints. Compared to the traditional traveling salesman problem (TSP), our method focuses on finding feasible paths that satisfy a maximum path length constraint and allows vertex revisitation. Because the UAV’s battery constraints dictate the maximum path length, we can find solutions tailored to the limitations of the chosen UAV rather than finding a vehicle that meets some endurance requirement. Our planning problem is, however, inherently non-deterministic polynomial-time (NP) complete. The coverage problem can also be encoded as a mixed-integer linear program (MILP) [28,29], but solving problems for the same area then takes 4 to 6 days, even using the most advanced solver on a powerful workstation. In contrast, the SMT method can generate usable solutions in a few hours on a laptop [30].
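
To make the feasibility question concrete, the sketch below asks whether a single UAV can visit every cell of a small grid within a maximum number of moves, with revisits allowed. It is a brute-force breadth-first search written for illustration, not the SMT encoding used in this paper; the grid model, unit edge lengths, and function names are all assumptions.

```python
from collections import deque
from typing import Dict, FrozenSet, List, Optional, Tuple

Cell = Tuple[int, int]
State = Tuple[Cell, FrozenSet[Cell]]


def grid_graph(rows: int, cols: int) -> Dict[Cell, List[Cell]]:
    """4-connected grid; every edge is assumed to have unit length."""
    graph: Dict[Cell, List[Cell]] = {}
    for r in range(rows):
        for c in range(cols):
            nbrs = [(r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)]
            graph[(r, c)] = [(a, b) for a, b in nbrs
                             if 0 <= a < rows and 0 <= b < cols]
    return graph


def covering_walk(graph: Dict[Cell, List[Cell]], start: Cell,
                  max_steps: int) -> Optional[List[Cell]]:
    """Return a walk from `start` that visits every vertex using at most
    `max_steps` edges (vertices may be revisited), or None if none exists."""
    everything = frozenset(graph)
    first: State = (start, frozenset([start]))
    parent: Dict[State, Optional[State]] = {first: None}
    queue = deque([(first, 0)])
    while queue:
        state, steps = queue.popleft()
        cell, seen = state
        if seen == everything:
            # Reconstruct the walk by following parent links back to the start.
            walk: List[Cell] = []
            cursor: Optional[State] = state
            while cursor is not None:
                walk.append(cursor[0])
                cursor = parent[cursor]
            return walk[::-1]
        if steps == max_steps:
            continue  # length budget exhausted on this branch
        for nxt in graph[cell]:
            new_state: State = (nxt, seen | {nxt})
            if new_state not in parent:
                parent[new_state] = state
                queue.append((new_state, steps + 1))
    return None


if __name__ == "__main__":
    g = grid_graph(3, 3)
    print(covering_walk(g, (0, 1), 8))  # budget too small: None
    print(covering_walk(g, (0, 1), 9))  # feasible once one revisit is allowed
```

On the 3 × 3 grid, a take-off cell in the middle of an edge admits no path that visits each cell exactly once, so the 8-move budget is infeasible, while a 9-move budget succeeds by revisiting one cell. This is the kind of solution that a strict TSP tour excludes but a maximum path length constraint permits. The search is exponential in the number of cells and is only practical for toy instances.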

The raw images captured by UAVs are assembled into a large mosaic, and this larger image is then used for analysis. For example, ecologists use it to count flora and fauna for population analysis [31] or to assess wildfire risk in forests and grasslands [32]. However, most current counting methods can be applied only to specific types of objects, such as people [33], plants [34], and animals [31]. They also usually require images with tens of thousands or even millions of annotated object instances for training. Some works tackle the high cost of annotation by adapting a counting network trained on a source domain to any target domain using labels for only a few informative samples from the target domain [35]. However, even these approaches require a large amount of labeled data in the source domain. Annotating millions of objects on thousands of training images is costly and arduous, especially when photos of endangered animals are scarce. Few-shot image classification, which only requires learning from known or similar categories and can classify test images without further training, is a promising solution [36,37]. Most existing works on few-shot object counting convolve the dot annotation map with a Gaussian window of a fixed size, typically 15 × 15, to generate a smoothed target density map for training the density estimation network. However, the sizes of different target objects vary widely, and a fixed-size Gaussian window leads to significant errors in the density map. To solve this problem, this paper uses Gaussian smoothing with an adaptive window size to generate target density maps suitable for objects of different sizes. Once UAV aerial survey data have been collected and the images analyzed, the resulting survey data provide information on species distribution and population size and enable a deeper understanding of animals’ seasonal migrations and habitat changes. This is critical for preparing sound wildlife conservation policies and provides rich data for ecologists. The focus of this paper is on the development of a multi-UAV system for conducting aerial population counting.
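
The idea of adaptive-window Gaussian smoothing can be sketched as follows. The rule used here, a standard deviation proportional to an approximate object size, is an assumption chosen for illustration and is not necessarily the rule used in this paper; the names `density_map`, `Dot`, and `sigma_ratio` are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple

# (row, col, approximate object size in pixels); the size field is hypothetical.
Dot = Tuple[int, int, float]


def density_map(height: int, width: int, dots: Sequence[Dot],
                sigma_ratio: float = 0.25) -> List[List[float]]:
    """Build a density map in which each annotated object contributes a
    Gaussian whose spread scales with the object's size.

    Each kernel is normalized over the pixels that fall inside the image,
    so the map sums to the number of annotated objects.
    """
    density = [[0.0] * width for _ in range(height)]
    for row, col, size in dots:
        if not (0 <= row < height and 0 <= col < width):
            raise ValueError("dot annotation lies outside the image")
        sigma = max(sigma_ratio * size, 0.5)   # assumed size-to-sigma rule
        radius = math.ceil(3 * sigma)          # window grows with the object
        weights: Dict[Tuple[int, int], float] = {}
        for r in range(max(0, row - radius), min(height, row + radius + 1)):
            for c in range(max(0, col - radius), min(width, col + radius + 1)):
                d2 = (r - row) ** 2 + (c - col) ** 2
                weights[(r, c)] = math.exp(-d2 / (2 * sigma ** 2))
        total = sum(weights.values())
        for (r, c), w in weights.items():
            density[r][c] += w / total
    return density


if __name__ == "__main__":
    dots = [(10, 10, 4.0), (30, 40, 16.0), (0, 0, 8.0)]
    dmap = density_map(48, 64, dots)
    # The integral of the density map recovers the object count.
    print(round(sum(map(sum, dmap)), 6))
```

Because every kernel is normalized, summing the map recovers the count regardless of object size, while a larger object spreads its unit mass over a wider window instead of being squeezed into a fixed 15 × 15 patch.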

In this paper, we consider a path planning method for a set of aerial robots performing population counting, which plans the robots’ trajectories for aerial photogrammetry. The UAVs acquire a set of images covering the entire area with overhead cameras. The images must have high resolution and sufficient overlap to be stitched together. In addition, aerial surveys should be completed as quickly as possible because weather, light, and ground conditions change. It is therefore necessary for all UAVs to perform their tasks simultaneously. The collected UAV data and analyzed images will support the development of population ecology. This paper focuses on developing a multi-UAV system for aerial surveys that provides an autonomous multi-robot solution for UAV survey planning suitable for most survey areas.
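
The overlap requirement translates into a spacing between adjacent flight lines. The following sketch uses the standard geometry of a downward-pointing camera over flat ground; the parameter names and the numbers in the example are illustrative assumptions, not the flight settings used in this survey.

```python
import math


def ground_footprint(altitude_m: float, fov_deg: float) -> float:
    """Width on the ground seen by a nadir-pointing camera.

    Assumes flat terrain and a camera looking straight down; `fov_deg` is
    the field of view across the flight direction.
    """
    if altitude_m <= 0 or not 0.0 < fov_deg < 180.0:
        raise ValueError("altitude must be positive and 0 < fov_deg < 180")
    return 2.0 * altitude_m * math.tan(math.radians(fov_deg) / 2.0)


def line_spacing(altitude_m: float, fov_deg: float, side_overlap: float) -> float:
    """Distance between adjacent flight lines for a given side overlap,
    where side_overlap is a fraction in [0, 1)."""
    if not 0.0 <= side_overlap < 1.0:
        raise ValueError("side_overlap must be in [0, 1)")
    return ground_footprint(altitude_m, fov_deg) * (1.0 - side_overlap)


if __name__ == "__main__":
    # Hypothetical values: 100 m altitude, 90 degree field of view, 70% overlap.
    print(ground_footprint(100.0, 90.0))
    print(line_spacing(100.0, 90.0, 0.7))
```

Flying lower improves image resolution but shrinks the footprint, so more flight lines are needed for the same overlap. This trade-off is one reason why path length and endurance constraints matter in coverage planning.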

This research aims to: (1) develop and test a multi-UAV CCPP algorithm for fast and repeatable unmanned aerial surveys of large areas that optimizes mission time and distance, and that compensates for the short battery life of small UAVs by minimizing tracebacks when surveying large areas; and (2) develop and test a population counting method that is applicable to various species and does not require pre-training or large data sets.

The method in this paper is demonstrated in an extensive field survey of Tibetan antelope populations at the HXNNR, China. In this task, rapid and repeatable aerial surveys were conducted under extreme conditions over a large area, demonstrating the effectiveness of our system in facilitating the development of population ecology. A survey system of four UAVs observed a herd of approximately 20,000 Tibetan antelopes distributed over an area of more than 6 km², reducing the survey time from 4 to 6 days to about 2 h. The proposed method completes aerial surveys with a shorter distance and less mission time: it reduced the total distance and mission time by 2.5% and 26.3%, respectively, and increased the effectiveness by 5.3% compared to the sweep-style pattern over an area of the same size for the Tibetan antelope herd survey described in this paper.

4. Discussion

The study demonstrates that wildlife surveys conducted over large areas by multiple small, low-cost rotary-wing UAVs can greatly complement daily monitoring in protected areas. However, the influence of UAV noise and appearance on wildlife should be considered during surveys. As we surveyed and analyzed only Tibetan antelopes, the effects of UAV noise and movement should be tested in detail before this method is applied to other animals. Moreover, our method extends the field of UAV applications and provides an autonomous multi-robot solution for UAV path planning that is suitable for survey areas of any size. It can also select appropriate paths according to the parameters of the available UAVs and optimize the take-off point of each flight.

The flexible deployment of multiple UAVs can significantly improve path efficiency in area coverage missions and reduce manual survey time and manpower, especially in remote and unpopulated areas. The results of multi-UAV trajectory planning (Table 2 and Table 3) are visualized in Figure 11, Figure 12 and Figure 13. In terms of total distance and mission time, our method has an edge over current multi-UAV CCPP methods. On the one hand, a shorter total distance means less energy consumption, so a group of recharged UAVs can complete more flights when deployed at a UAV airport. On the other hand, a shorter mission time means a quicker survey, allowing more surveys to be completed within a window period to reduce data errors and to study timing-related issues. This gives ecologists more options for their survey methods. Compared to optimizing the total distance alone, both the total distance and the mission time decline when the two indicators are optimized simultaneously. This is because the planner divides the entire survey route evenly among the UAVs, each with a different take-off point, while finding the optimal solution for the area each UAV surveys.
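
The two indicators discussed above can be computed from the per-UAV path lengths, as in the sketch below. It assumes that all UAVs take off at the same time and fly at a common constant speed, so the mission time is set by the longest individual path; the path lengths and speed in the example are made-up numbers, not results from Table 2 or Table 3.

```python
from typing import Sequence


def total_distance(path_lengths_m: Sequence[float]) -> float:
    """Sum of all UAV path lengths, a proxy for total energy consumption."""
    return sum(path_lengths_m)


def mission_time(path_lengths_m: Sequence[float], speed_m_s: float) -> float:
    """Time until the last UAV finishes, assuming simultaneous take-off
    and the same constant speed for every UAV."""
    if not path_lengths_m or speed_m_s <= 0:
        raise ValueError("need at least one path and a positive speed")
    return max(path_lengths_m) / speed_m_s


if __name__ == "__main__":
    # Hypothetical plans for four UAVs with the same total distance.
    unbalanced = [9000.0, 3000.0, 3000.0, 3000.0]
    balanced = [4500.0, 4500.0, 4500.0, 4500.0]
    for plan in (unbalanced, balanced):
        print(total_distance(plan), mission_time(plan, speed_m_s=10.0))
```

Both hypothetical plans cover the same total distance, but the balanced plan halves the mission time. This is consistent with the observation above that dividing the route evenly among the UAVs shortens the survey.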

The few-shot object counting method can be used without pre-training the model on images of the target population. This improves the generality of the counting method to a certain extent, so it can be applied in many different fields. When applied to photos of Tibetan antelopes taken by UAVs, the method works well on images of different densities and perspectives and meets the requirements of population counting. Because animals may move during a multi-UAV survey, a particular animal can be photographed repeatedly and appear more than once when images are stitched together. Ecologists need to correct the survey results to ensure their accuracy, which has become a necessary research subject [48]. Therefore, it is essential to reduce the mission time of such surveys. The information obtained directly from traditional ground surveys is often one-dimensional: when observed from the ground, the distribution of all animals may appear visually linear (Figure 14) [49]. These factors often lead to significant errors in the results, which hinders animal counting. Compared with traditional field surveys by vehicle or on foot, UAV surveys have a better field of view (Figure 7, Figure 8 and Figure 9) and largely reduce the impact of animal movements on the data, which can improve survey accuracy.

With the current development of commercial multi-rotor UAVs and UAV airports, an untrained layperson can quickly acquire the skills to control a rotary-wing UAV. The multi-UAV aerial animal counting system we developed aims to enable scientists to focus on experimental design and data analysis rather than flight control skills. The survey can be set up before the survey window through a simple configuration that specifies the survey areas, the take-off areas, and the UAVs’ parameters. Current artificial intelligence methods can quickly assist humans in completing many tedious counting tasks. The approach proposed in this paper can replace humans in performing uninterrupted unmanned counting surveys, provided that the UAVs do not disturb the ecology of the survey areas.

However, because of UAV endurance limitations, more UAV sorties are often required to survey large areas simultaneously. In addition, flying at excessively high altitudes to reduce the impact of UAV noise on animals may hinder feature identification for animal counting, because camera resolution is limited, which reduces the applicability of the multi-UAV survey method. In the future, UAV aerial surveys will also integrate more features by combining visible-light, multispectral, and thermal infrared cameras to further improve information accuracy.

5. Conclusions

This paper presented a general and flexible CCPP method for a group of UAVs to count animals. The planning algorithm enables scientists to employ multiple drones to conduct regular unmanned surveys in uninhabited regions with fragile environments and poor access. Moreover, multiple UAVs allow faster surveys with higher frequency, lower cost, and greater resolution than helicopters, and they make better use of rare observation opportunities by reducing wildlife disturbance from human activities. We implemented our algorithms on a set of UAVs and conducted field experiments. The rapid and repeatable aerial surveys of large-scale environments demonstrated the utility of our system in facilitating population ecology. A survey system of four UAVs observed a herd of approximately 20,000 Tibetan antelopes distributed over an area of more than 6 km², reducing the survey time from 4 to 6 days to about 2 h. Our method completes aerial surveys with shorter trajectories and less mission time: trajectory length and mission time were reduced by 2.5% and 26.3%, respectively, and the effectiveness increased by 5.3% compared to sweep-style paths over an area of the same size.

Unlike the object detector-based approaches commonly used in current counting methods, which mainly focus on one specific category at a time, such as people, cars, or plants, this paper uses a general few-shot adaptive matching counting method that can count effectively without pre-training on the objects to be counted. We tested it on photos of Tibetan antelopes taken from the UAVs, and the accuracy can reach more than 97%. The survey data can contribute to the development of animal protection policies, which is conducive to protecting and promoting ecological sustainability. However, animals may move during multi-drone surveys, so the same animal may appear more than once when the photos are stitched together. In the future, reasonable corrections to the survey results can further improve the accuracy of the survey. Other investigations that require fast aerial surveys in unpopulated areas can also benefit from our algorithms, such as surveys of other wildlife populations, forests, and shrublands.