Abstract

This article presents a novel autonomous navigation approach that increases map exploration and accuracy while minimizing the distance traveled for autonomous drone landings. For terrain mapping, a probabilistic sparse elevation map is proposed to represent measurement accuracy and to improve map quality by continuously applying new measurements with Bayes inference. For exploration, the Quality-Aware Best View (QABV) planner is proposed for autonomous navigation with a dual focus: map exploration and quality. Generated paths visit viewpoints that provide new measurements for exploring the proposed map and increasing its quality. To reduce the distance traveled, we handle the path-cost information in the framework of control theory to dynamically adjust the path cost of visiting a viewpoint. The proposed methods treat the QABV planner as a system to be controlled and regulate the information contribution of the generated paths. As a result, the path cost is increased to reduce the distance traveled or decreased to escape from a low-information area and avoid getting stuck. The usefulness of the proposed mapping and exploration approach is evaluated in detailed simulation studies, including a real-world scenario for a package delivery drone.

1. Introduction

Academic research on drones is increasing as drones have been successfully used in search and rescue missions [1,2], warehouse management [3,4], surveillance and inspection [5,6], agriculture [7], and package delivery [8]. Although most drone autopilots have autonomous take-off and cruise functions [9,10], landing in an unknown environment is still one of the challenges to be tackled. To accomplish a safe landing, the drone must be aware of the area underneath to pick a safe landing area autonomously. In this context, large unmanned aerial vehicles use lidar-based active scanners to generate elevation maps [11,12], yet these scanners increase both the weight and the energy consumption of the vehicle. Alternatively, drones can be equipped with camera systems that are lightweight and energy efficient. In developing a camera-based method for autonomous landing, the main challenges are (1) terrain mapping and (2) exploration.

For terrain mapping, a single-camera setup can be used for distance measurements of the terrain. In one of the earliest works [13], a method based on the recursive multi-frame planar parallax algorithm [14] is presented to map the environment via a single camera. In [15], a dense motion stereo algorithm processing a single camera is presented to concurrently assess feasible rooftop landing sites for a drone. In [16], a drone equipped with a single camera explores a previously unknown environment and generates a 3D point cloud map with a monocular visual simultaneous localization and mapping (SLAM) system. Then, a grid map is generated from the point cloud data and appropriate landing zones are selected. In [17], fast semi-direct monocular visual odometry (SVO) [18] with a single camera is used for generating a sparse elevation map with Bayes inference to select a safe landing area away from obstacles. SVO has the highest computational efficiency among monocular state estimation and mapping techniques according to [19]. On the other hand, a stereo-camera setup is useful for depth measurements based on [20,21,22]. Compared to a single camera, a stereo camera (comprising a depth sensor) provides more accurate measurements, but it is heavier and more expensive than a single camera. Additionally, the resulting measurement error is a challenge. For instance, in [23], a stereo-camera system is used for the depth map generation of catastrophe-struck environments. In the depth map, measurements from higher altitudes have higher costs due to lower accuracy, and landing area selection is performed by considering the cost.

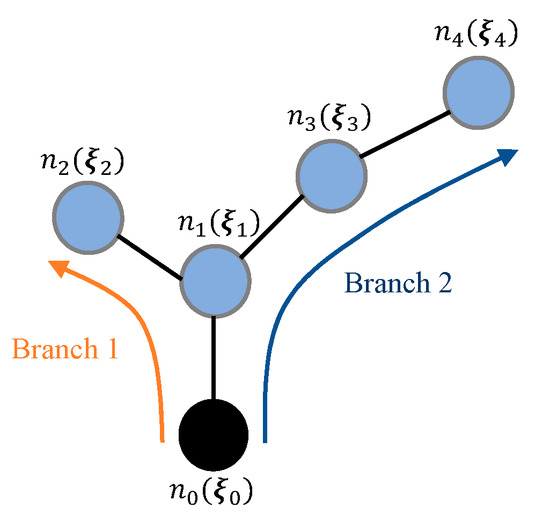

For exploration, there are many path planning methods in the literature. In [13], a box-search method serves as a global plan, combined with a predictive controller that acts as a local path planner using online sensor information. In [15], to improve the landing site assessment, a path planner calculates optimal polynomial trajectories while ensuring the requirement for providing visual monitoring of a point of interest. In [24], the use of SVO is extended to a perception-aware receding horizon approach for drones to solve the active SLAM [25] problem and reach a target location safely and accurately. The authors generate arc-shaped candidate trajectories with [26] by using the information from SVO and then select the best trajectory with respect to the following metrics: collision probability, perception quality, and distance to the target location. To calculate the perception quality of each candidate trajectory, position samples are taken from each trajectory using a constant time interval. Then, for each sample, the position estimation error is calculated by using the visible landmarks from SVO. The intuition is that more visible landmarks result in less position estimation error, and accurate pose estimation along the trajectory can help better triangulate new landmarks, which is beneficial for the pose estimation afterward. In [20], the receding horizon “Next-Best-View” (NBV) planner is employed. The NBV planner makes use of a rapidly-exploring random tree (RRT) [27] and suggests a receding horizon planning strategy. In every planning iteration, an RRT is generated and the best branch of the tree (i.e., a sequence of waypoints) is determined for map exploration. The intuition is that when a drone visits a waypoint in an RRT branch that provides a better viewpoint for map exploration, the exploration will be faster. The NBV planner selects the best branch with this intuition, but only the first step is executed, in the receding horizon fashion.
This process continues until the environment is explored except for residual locations such as occluded places and narrow pockets between objects. To improve the NBV planner regarding global map exploration, Schmit et al. [21] proposed using RRT* [28]; it obtains global exploration by continuously expanding a single trajectory tree, allowing the algorithm to maximize a single objective function. This enables the method to reason about the global utility of a path and refine trajectories to maximize their value. For the same purpose, Selin et al. [22] suggest using frontier exploration planning [29] as a global planner while using the NBV planner as a local planner.

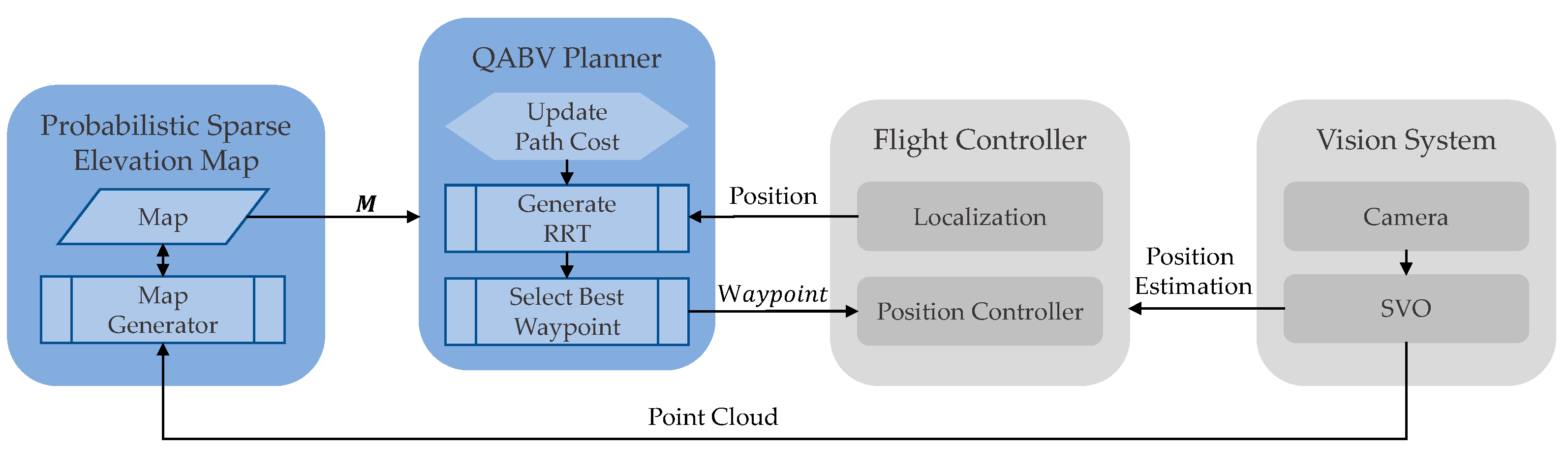

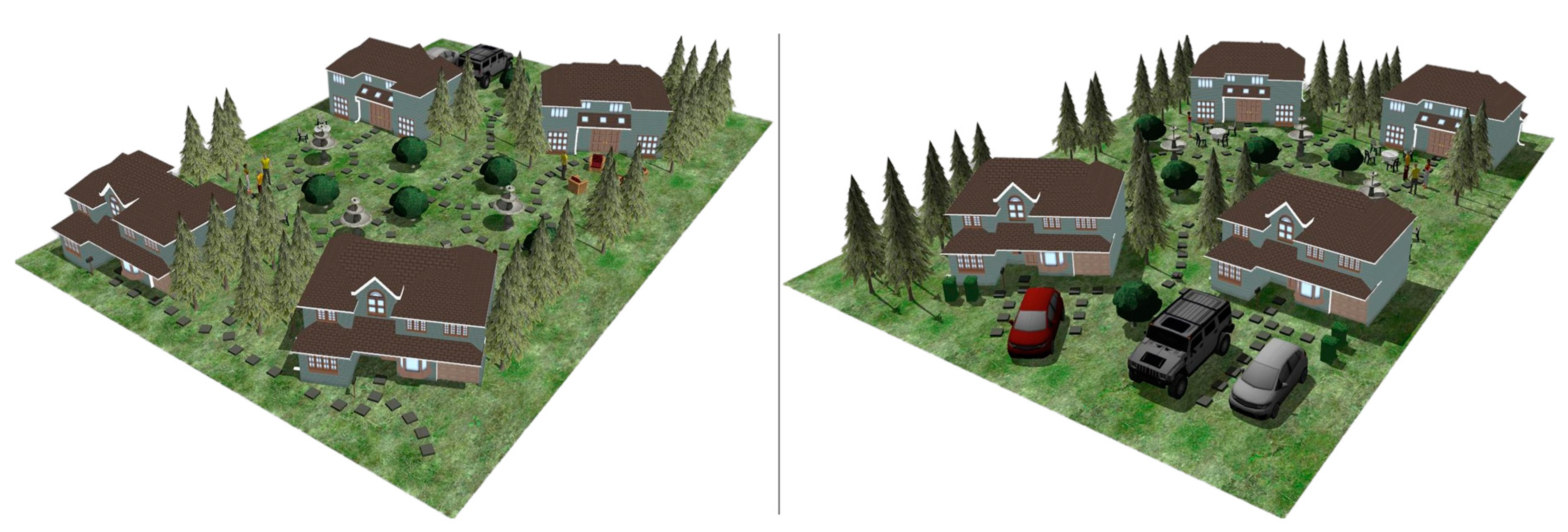

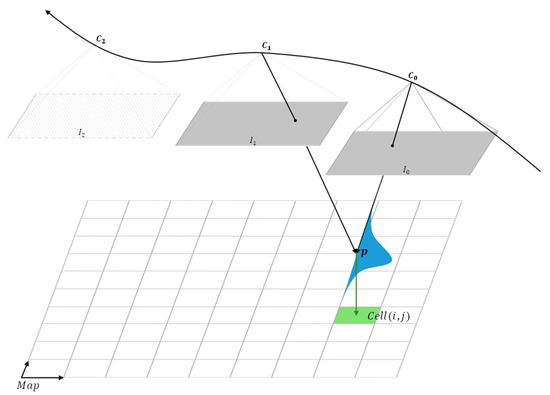

In this study, we propose a novel autonomous navigation approach by considering map exploration and accuracy while minimizing the distance traveled for autonomous drone landings. The proposed overall approach is illustrated in Figure 1.

Figure 1.

Probabilistic sparse elevation map generation and the QABV planner, marked in blue, are the main components of the proposed autonomous navigation approach for map exploration and accuracy toward drone landing. The flight controller and the vision system are used by the proposed approach during the map generation process.

For terrain mapping, we propose a “Probabilistic Sparse Elevation Map” to represent the area underneath a drone. As illustrated in Figure 1, the SVO approach takes image frames from a down-looking single camera attached to the drone, estimates the drone position, and generates point cloud data where each point is represented with a normal distribution. To generate the proposed map, we use point cloud data as height measurements and apply new measurements continuously with Bayes inference to increase the accuracy of the measurements. During this process, a path planner is also required to process map information and guide the drone to take the necessary measurements for map exploration and quality. When the map generation is completed, the final map can be used to identify safe landing areas for the drone.

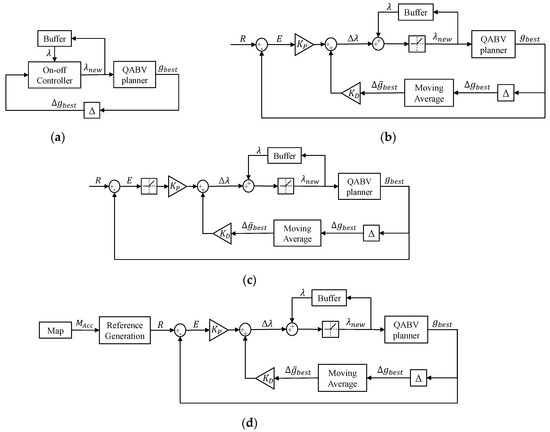

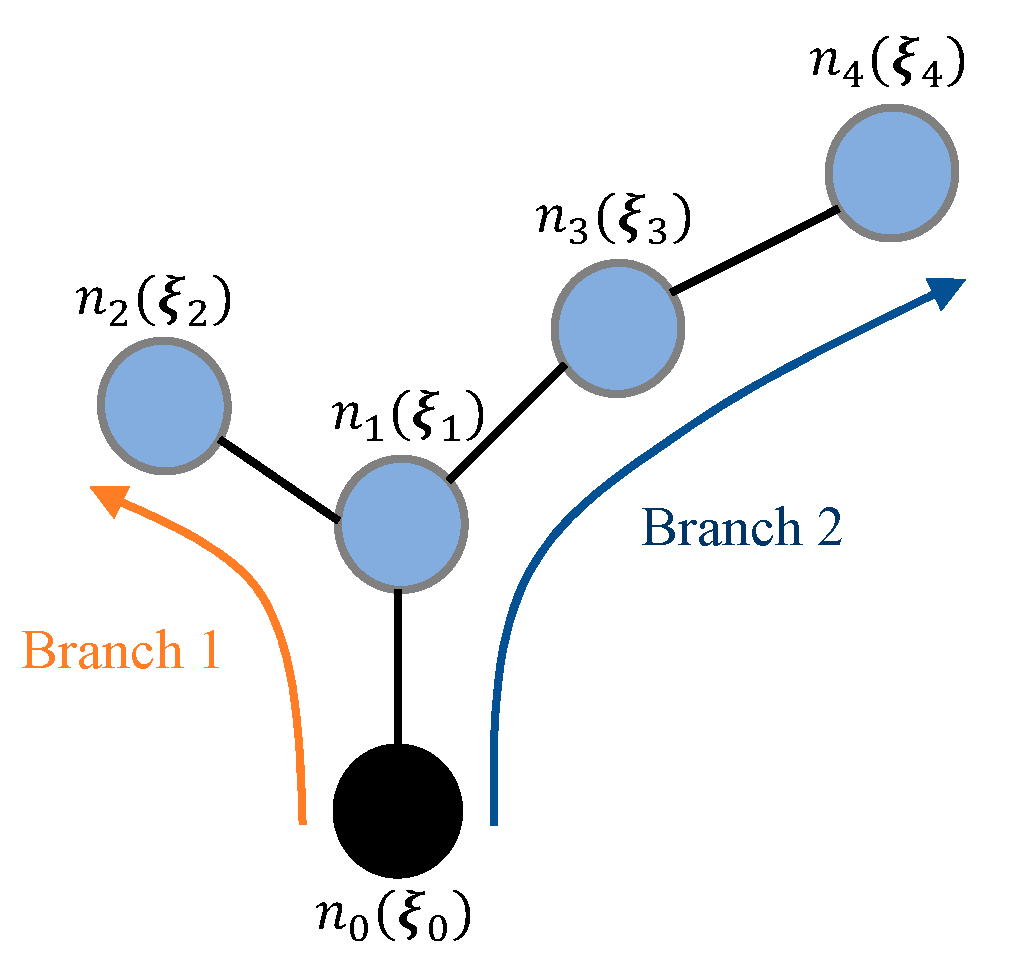

For exploration, we propose the “Quality-Aware Best View (QABV) planner” to increase the accuracy of the map while decreasing the traveled distance. To accomplish this goal, we develop a novel information gain and a dynamic path cost that allow the QABV planner to generate local waypoints considering map accuracy and exploration together with traveled distance. In every planning iteration of QABV, an RRT rooted at the drone position is built, and the best branch of the RRT is determined according to the information gain, which is the sum of the information contributions of the map cells visible from the subject branch. The intuition is that the more information we obtain from the visible area when we follow the RRT branch, the more the map quality and exploration will increase. In this context, we calculate the information contribution of a visible map cell using its variance from the proposed probabilistic sparse elevation map if the cell has been updated before. If it has not, we estimate its information contribution by calculating the height deviation of the visible area. After determining the best branch, the first node of the branch is sent to the position controller as the best waypoint. Through the proposed dynamic path cost approach, the QABV planner increases map exploration and accuracy without increasing the traveled distance, thus ensuring traveling efficiency. We propose using the node count between the subject node and the root node as the path cost and handling the dynamic path cost problem within the framework of control theory. We develop four novel approaches that increase efficiency by treating the QABV planner as the system to be controlled in order to regulate the information contribution of the generated paths. As a result, the path cost is increased to reduce the distance traveled or decreased to escape from a low-information area and avoid getting stuck.
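The branch selection described above can be illustrated with a short sketch. This is only a minimal illustration under stated assumptions: the exponential discount `exp(-lam * node_count)` is borrowed from the gain formulation of the NBV planner [20] and is not necessarily the exact QABV gain function, and the names `branch_gain`, `best_waypoint`, and the toy branch data are hypothetical.

```python
import math


def branch_gain(contributions, lam):
    """Discounted information gain of one RRT branch.

    contributions[k] is the summed information contribution of the map
    cells visible from the k-th node of the branch (k = 0 is the first
    node after the root). The path cost of a node is its node count from
    the root, i.e. k + 1. The exponential discount is an ASSUMPTION
    borrowed from the NBV planner, not the article's exact formula.
    """
    return sum(c * math.exp(-lam * (k + 1)) for k, c in enumerate(contributions))


def best_waypoint(branches, lam):
    """Return the first node of the branch with the highest gain."""
    best = max(branches, key=lambda b: branch_gain(b["contributions"], lam))
    return best["nodes"][0]


# Hypothetical toy tree: branch A reaches a high-information area three
# nodes away; branch B offers moderate information one node away.
branches = [
    {"nodes": ["a1", "a2", "a3"], "contributions": [1.0, 1.0, 8.0]},
    {"nodes": ["b1"], "contributions": [3.0]},
]

print(best_waypoint(branches, lam=0.1))  # low path cost favors the far branch
print(best_waypoint(branches, lam=1.5))  # high path cost favors the near branch
```

In this toy case, a small path-cost coefficient lets the planner reach for distant information, while a large one keeps the drone close to its current position, which mirrors the trade-off the dynamic path cost is meant to regulate.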

The main contributions and outcomes of the study are summarized as follows:

- A probabilistic sparse elevation map generation algorithm with Bayes inference using SVO point cloud data and a down-looking single-camera setup. The generated map enables the representation of the sensor measurement accuracy to increase map quality by cooperating with the planner.

- A novel QABV planner for autonomous navigation with a dual focus: map exploration and quality. Generated paths via the novel information gain allow for visiting viewpoints that provide new measurements that increase the performance of the probabilistic sparse elevation map concerning exploration and accuracy.

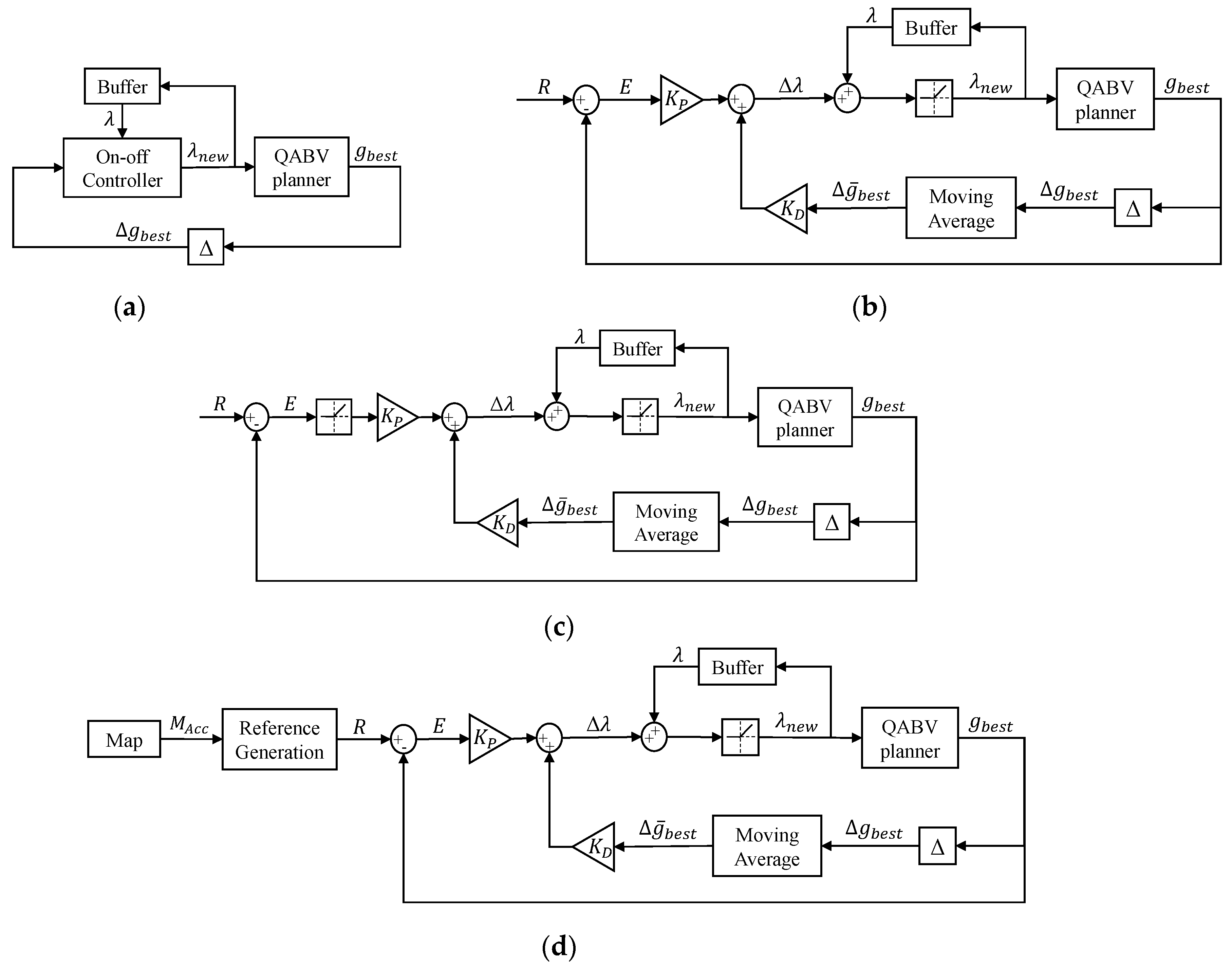

- A novel dynamic path cost representation for the QABV planner to reduce the distance traveled and escape from a low-information area. To dynamically adjust the path cost, we propose four control methods: method 1 offers a simple approach with an on–off controller while method 2 employs a PD controller to adjust the path cost, and method 3 switches its control action depending on the reference while method 4 generates its reference depending on the map information.

- To show the performance improvements of the proposed approach, we present comparative simulation results. We demonstrate that the developed QABV planners have significantly better performances compared to the NBV planner regarding measurement accuracy and distance traveled.

- To verify the performance, we also solve the safe landing problem of a delivery drone within a realistic simulation environment. The results verify the usefulness of the proposed mapping and exploration approach.

2. Probabilistic Sparse Elevation Map Generation with SVO Point Cloud Data

In this section, we present the generation of the proposed probabilistic sparse elevation map that enhances the SVO point cloud data through the deployment of Bayes inference. The generated map provides measurement accuracy alongside the height of the terrain, which is processed by the proposed QABV planner to increase the accuracy of a location through Bayes inference.

2.1. SVO Point Cloud Data Generation

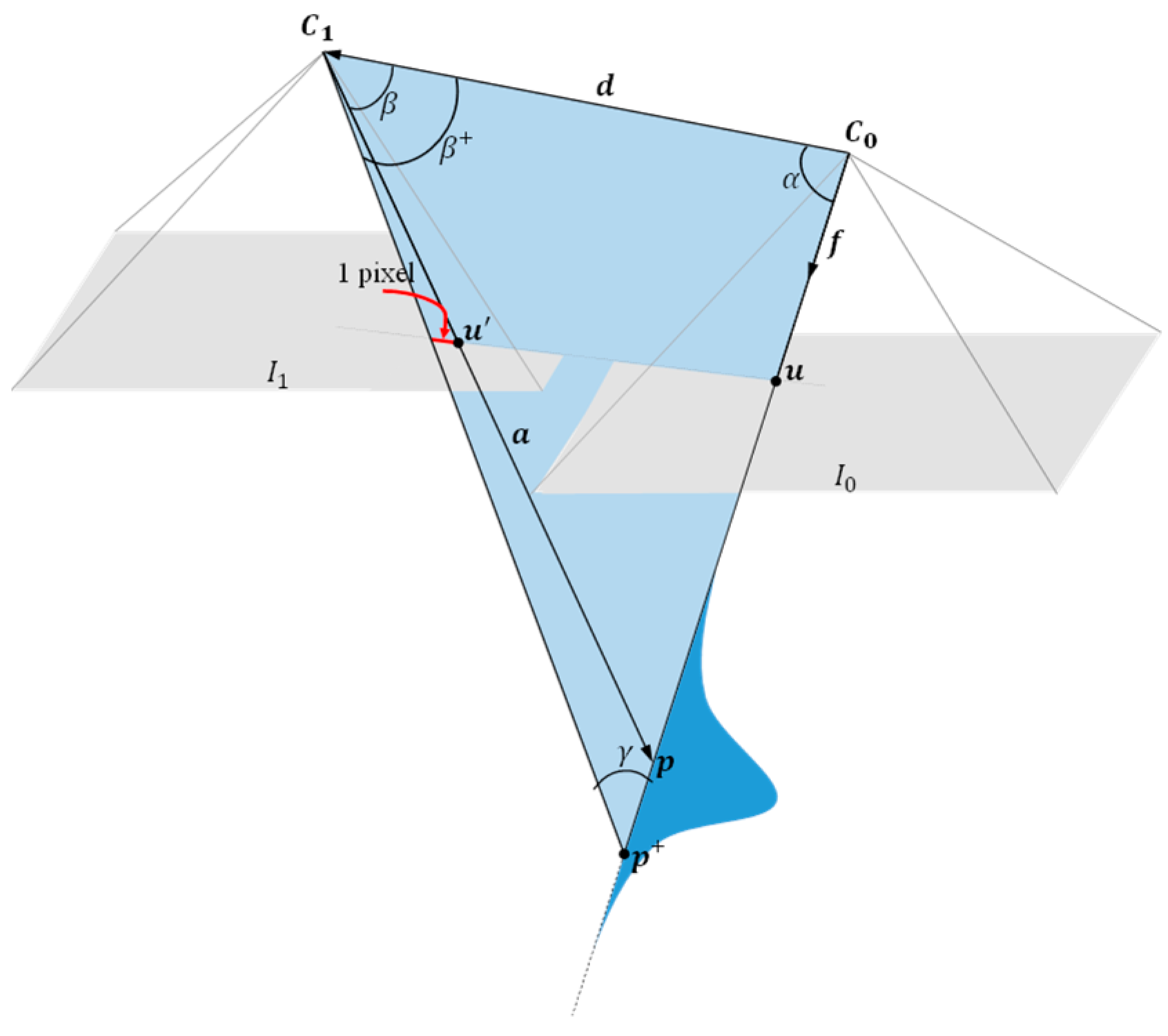

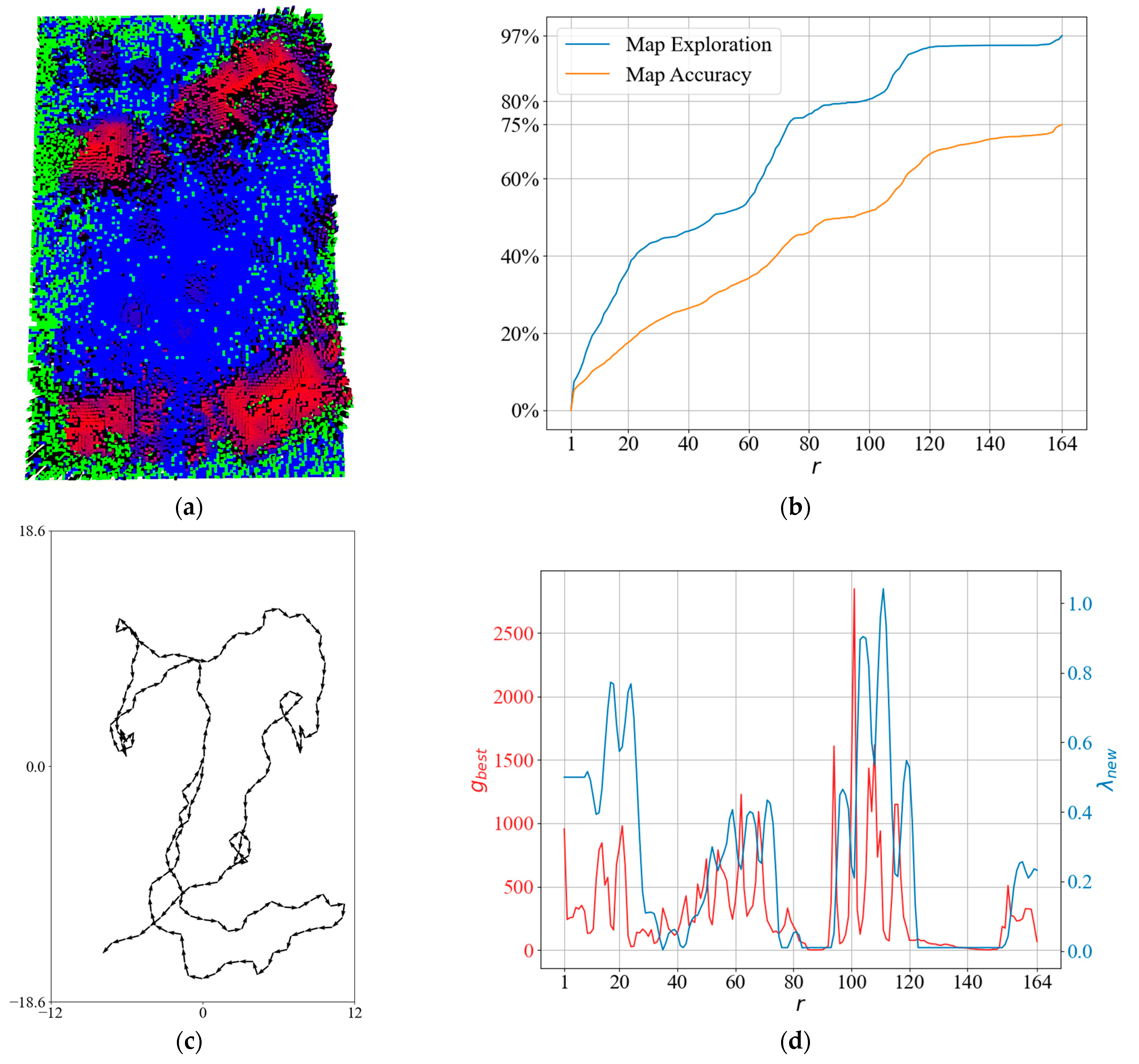

SVO estimates the position of image features (e.g., edges, points, and lines), and the estimation accuracy is defined by its uncertainty model [17,30]. In a single down-looking camera scenario, as illustrated in Figure 2, SVO first finds the transition vector between camera positions, and then solves the feature correspondence problem between consecutive images and . Once an image feature correspondence is found between two images as and , the position of the feature is estimated with triangulation.

Figure 2.

Illustration of the position estimation approach with SVO through a single down-looking camera.

As shown in Figure 2, the accuracy of this estimation is modeled as and corresponds to the assumption that a pixel error has occurred along the epipolar line passing through and while solving the feature correspondence problem. In this case, the position of the feature occurs in , and the distance between them determines . To provide a clear explanation of the calculation of and , let us define the unit vector and the vector as follows:

By using vector algebra, we can define the angle between vectors and as:

and the angle between vectors and as follows:

We can add the angle spanning a pixel to to find when is the camera focal length as [30]:

Now, we can calculate the angle illustrated in Figure 2 by using the triangle sum theorem as follows:

Then, the norm of is defined via the law of sines:

Therefore, when there is a pixel error while solving the feature correspondence problem for , the measurement uncertainty is [30]:
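The steps of this derivation (two angles from dot products, one pixel of angular error, the triangle sum, and the law of sines) can be sketched numerically. The following is a minimal sketch, assuming the standard one-pixel-error depth uncertainty model used with SVO-style depth filters; the symbol names (`t`, `f`, `a`, `alpha`, `beta`, `gamma`, `tau`) and the function name are assumptions chosen for illustration and may differ from the article's notation.

```python
import math


def _dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def _norm(u):
    return math.sqrt(_dot(u, u))


def depth_uncertainty(t, f, depth, focal_length_px):
    """One-pixel-error depth uncertainty (illustrative sketch).

    t               : translation between the two camera positions
    f               : unit bearing vector of the feature (first camera)
    depth           : estimated distance of the feature along f
    focal_length_px : camera focal length in pixels
    Returns (tau, tau**2): the depth deviation and its square.
    """
    # Vector from the second camera position to the estimated feature.
    a = [fi * depth - ti for fi, ti in zip(f, t)]
    t_norm, a_norm = _norm(t), _norm(a)

    alpha = math.acos(_dot(f, t) / t_norm)              # angle between f and t
    beta = math.acos(-_dot(a, t) / (a_norm * t_norm))   # angle between a and -t
    # Add the angle spanned by one pixel.
    beta_plus = beta + 2.0 * math.atan(1.0 / (2.0 * focal_length_px))
    gamma = math.pi - alpha - beta_plus                 # triangle sum theorem
    depth_plus = t_norm * math.sin(beta_plus) / math.sin(gamma)  # law of sines

    tau = depth_plus - depth
    return tau, tau * tau


# Hypothetical numbers: 1 m baseline, feature 2 m straight below the camera.
tau, variance = depth_uncertainty((1.0, 0.0, 0.0), (0.0, 0.0, 1.0), 2.0, 500.0)
print(tau, variance)
```

With a fixed baseline, the deviation grows with depth, which is consistent with measurements from higher altitudes being less accurate.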

2.2. Probabilistic Sparse Elevation Map Generation via Bayesian Inference

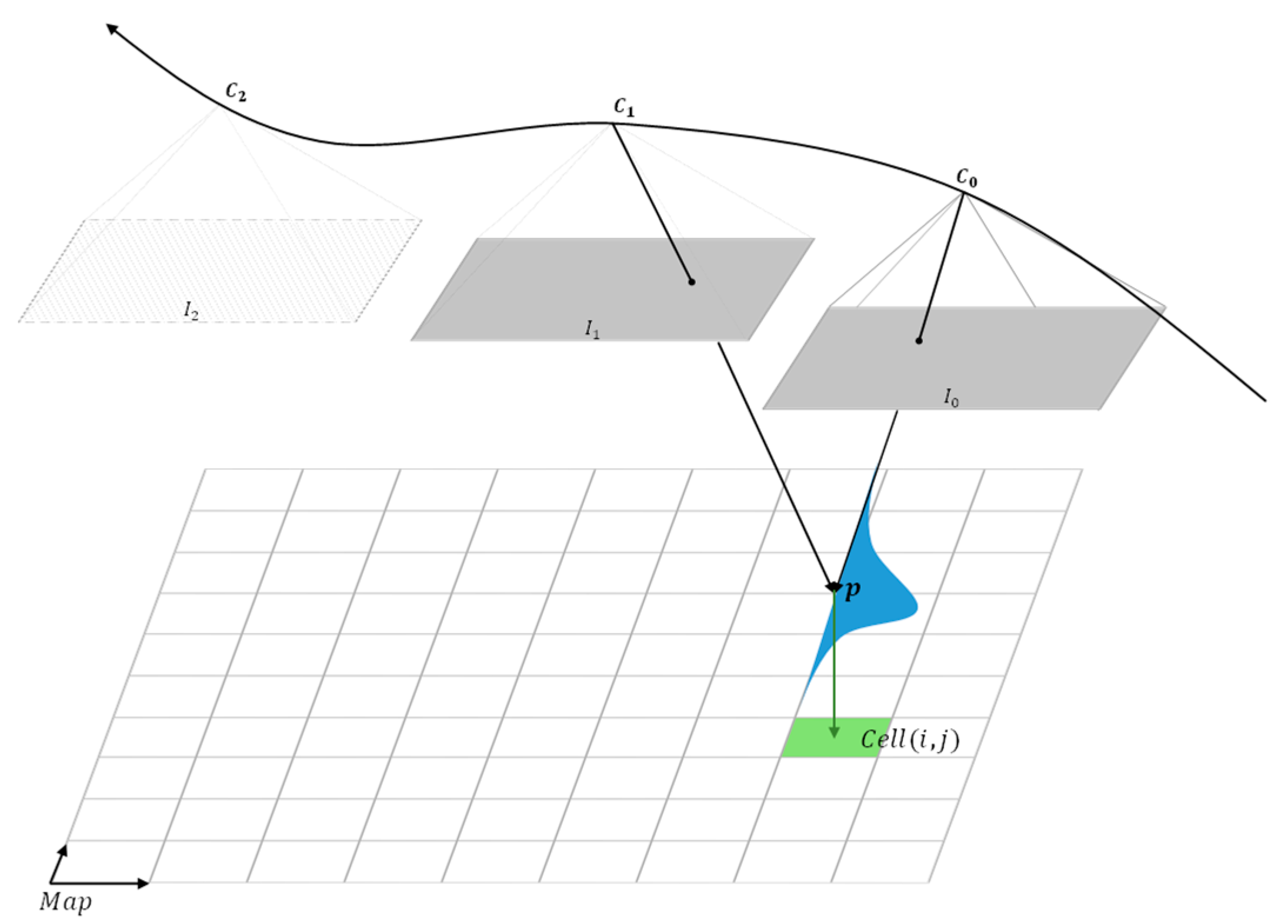

The proposed probabilistic sparse elevation map uses a 2D matrix to represent the area underneath the drone on a grid, with each element () corresponding to a point on the grid and representing a height measurement. During the generation of the map, each point in the SVO point cloud, along with its corresponding uncertainty (), is continuously processed using Bayesian inference to update the corresponding in . This process takes place as the drone flies over an area, as shown in Figure 3.

Figure 3.

Update of a map cell with a point from SVO point cloud. By analyzing consecutive images from a single down-looking camera, the SVO algorithm does a probabilistic measurement of a point represented as normal distribution . is updated with and after matching in the 2D map .

In Algorithm 1, the pseudo-code of the proposed map generation approach is presented. Here, each of is initialized with an unmapped cell defined as follows:

Then, parses the SVO data stream and extracts every together with its . For each in the point cloud, retrieves the corresponding according to the and positions of so that it can be updated. In updating :

- If it is an unmapped cell, then its height and variance are updated via .

- If it is updated before but has more than height error (i.e., a threshold value), then it is defined as an inaccurate cell as follows:

The overall computational complexity of Algorithm 1 cannot be stated since satisfying the stop criterion depends on external processes such as the QABV planner and SVO; however, for a single run inside the while loop, the complexity of the is where is the size of the point cloud data. The for loop runs for every point in the cloud, and inside the loop, every operation costs the same amount of time, so its complexity is . Lastly, the complexity of checking either of the stop criteria defined in (13) and (14) is , where is the size of . In this case, total complexity is .

| Algorithm 1. Sparse Elevation Map Generation via Bayes Inference |

| 1: Initialize with unmapped cells 2: while the stop criterion is not satisfied ( or ) 3: 4: for each in do 5: 6: if is 7: 8: 9: else if is 10: 11: 12: end if 13: end for 14: end while |
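The per-cell update in Algorithm 1 can be sketched as follows. This is a minimal sketch under stated assumptions rather than the article's implementation: the fusion rule is the standard Bayesian update of a Gaussian estimate with a Gaussian measurement, the threshold value `max_height_error` is a hypothetical placeholder, and the handling of an inaccurate cell (flag it and keep the stored estimate until a consistent measurement arrives) is an assumption. The 10 cm cell size in `cell_index` follows the map description in Figure 4.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class Cell:
    height: Optional[float] = None   # None marks an unmapped cell
    variance: float = math.inf
    inaccurate: bool = False


def cell_index(x, y, cell_size=0.1):
    """Map a point's x and y position (meters) to grid indices."""
    return int(math.floor(x / cell_size)), int(math.floor(y / cell_size))


def update_cell(cell, z, var_z, max_height_error=0.5):
    """Update one map cell with a height measurement z of variance var_z."""
    if cell.height is None:
        # Unmapped cell: take the measurement as the first estimate.
        return Cell(z, var_z, False)
    if abs(z - cell.height) > max_height_error:
        # ASSUMPTION: conflicting measurement, flag the cell as inaccurate
        # and keep the stored estimate unchanged.
        return Cell(cell.height, cell.variance, True)
    # Bayesian fusion of two Gaussian estimates (product of Gaussians).
    total = cell.variance + var_z
    fused_height = (var_z * cell.height + cell.variance * z) / total
    fused_variance = cell.variance * var_z / total
    return Cell(fused_height, fused_variance, False)


cell = update_cell(Cell(), 1.0, 0.04)   # first measurement
cell = update_cell(cell, 1.2, 0.04)     # consistent measurement, variance shrinks
print(cell_index(1.25, 0.07), cell)
```

Each consistent measurement reduces the cell variance, which is what allows a planner to raise map accuracy by revisiting viewpoints.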

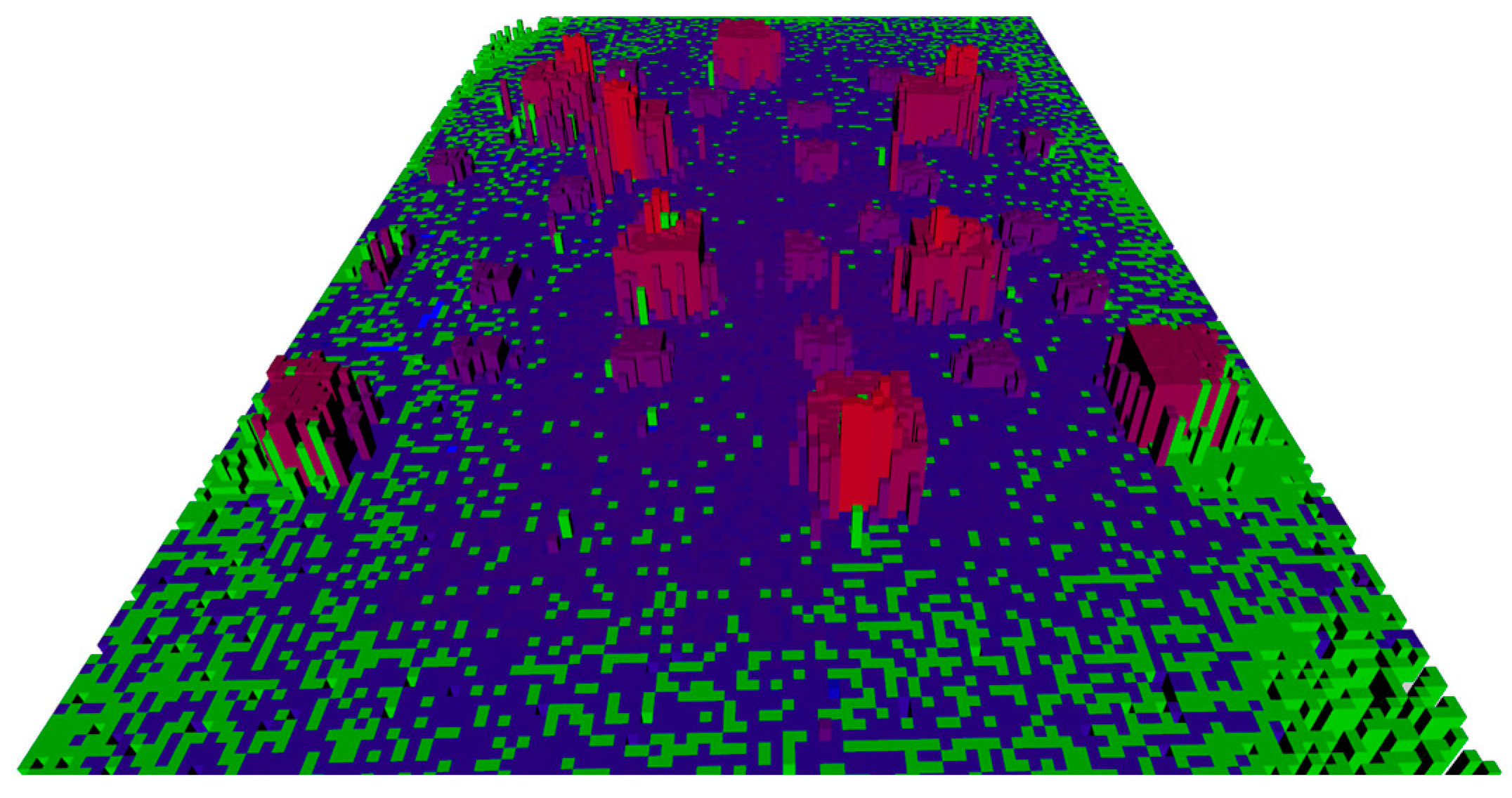

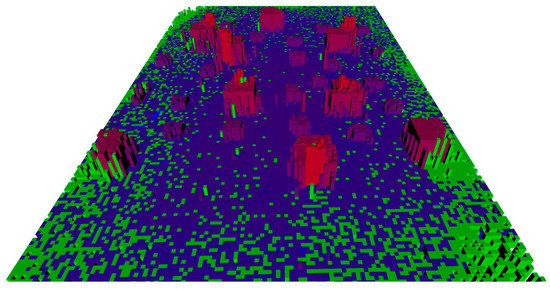

Figure 4 is an example 3D illustration of the proposed probabilistic sparse elevation map constructed from . Unlike maps that show only height information, such as in [17], our map keeps the variance information of the SVO point cloud and presents height information in a probabilistic way.

Figure 4.

An example of a 3D sparse elevation map constructed with SVO point cloud data. Here, the ground area is 12 m × 18.6 m and it consists of 10 cm × 10 cm map cells. Color codes indicate the status of the cells; green indicates inaccurate cells, and color tones between blue and red indicate cell height: blue for low, and red for high height. Void sections indicate unmapped cells.

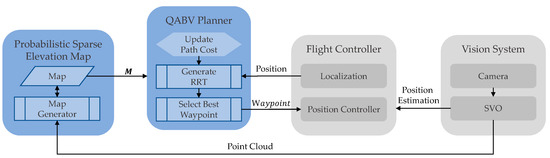

4. Comparative Performance Analysis

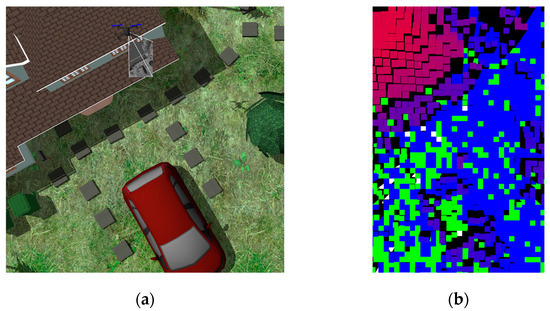

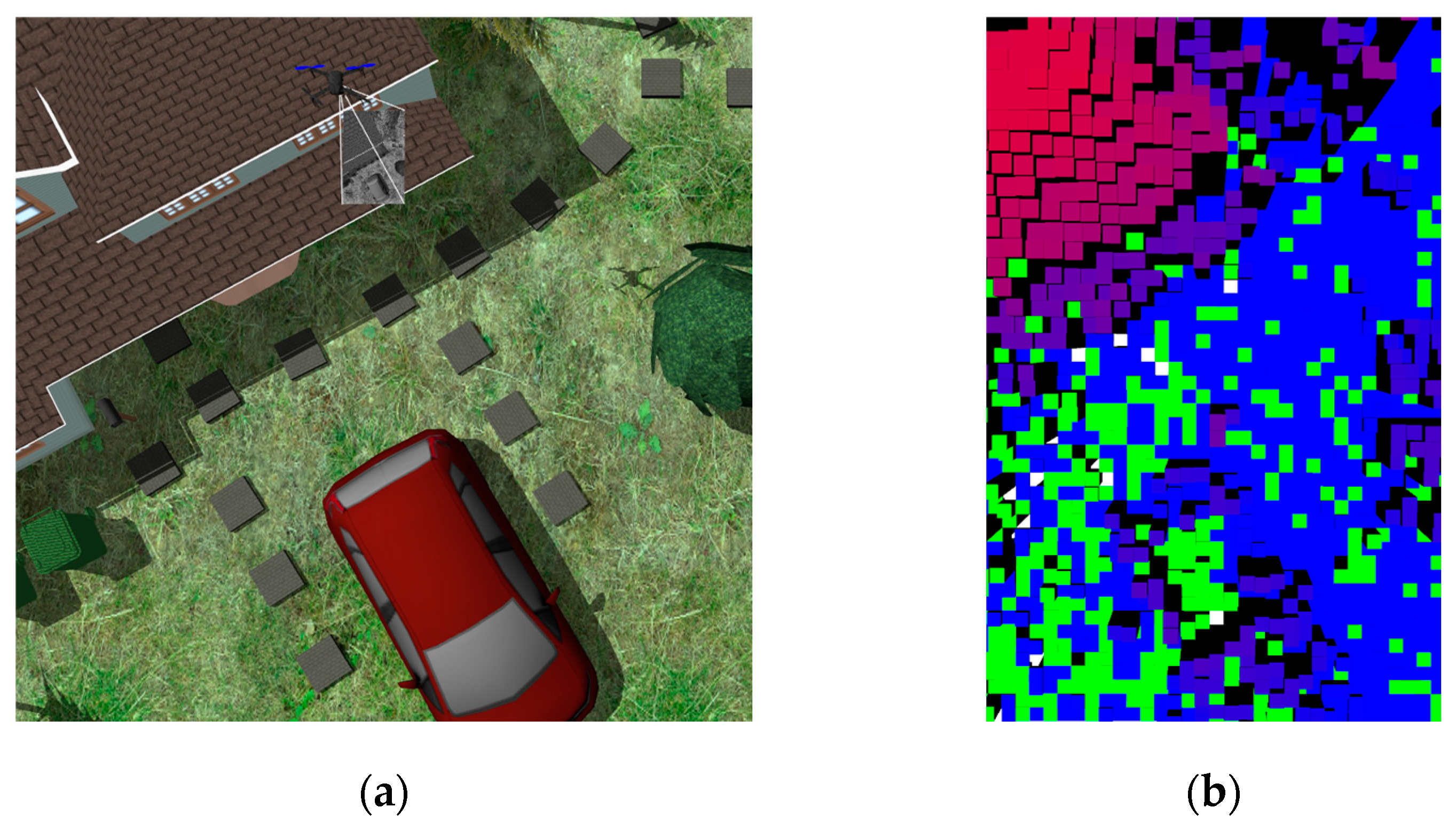

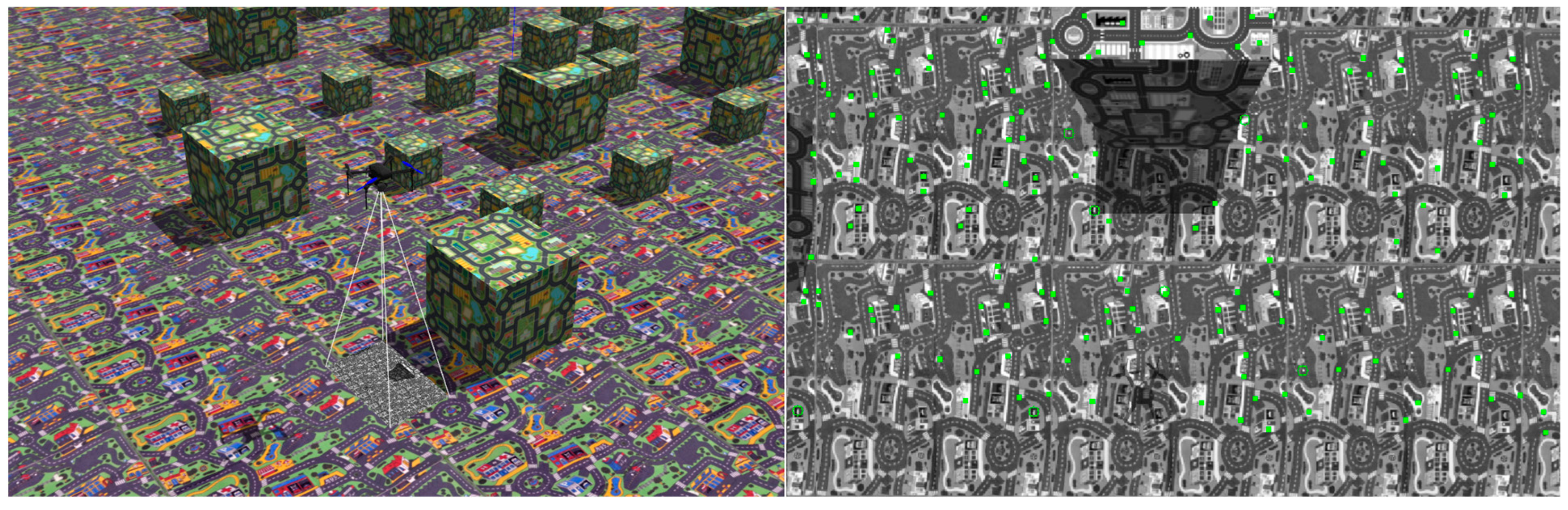

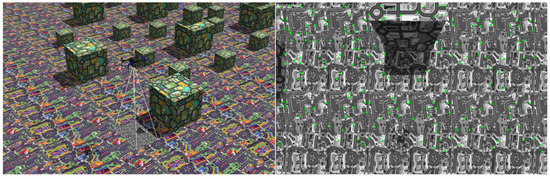

In this section, to validate our proposed approach and show its superiority, we present comparative experimental results. All experiments were conducted in the Gazebo [34] simulation environment, and the test drone used in the environment is specified in Table 1. The test drone has a down-looking camera that is used by SVO for position estimation and the creation of the point cloud. Figure 9 shows the simulation environment alongside a sample snapshot from the down-looking camera.

Table 1.

Specifications of the simulation environment.

Figure 9.

The test drone flies at a 2 m altitude in the simulation environment on the left side. On the right side, a sample picture captured by the down-looking camera is shown. The camera can see a 4 m × 6.2 m ground area at a 2 m altitude. Green points in the picture are corner features that are measured by SVO for position estimation and point cloud data.

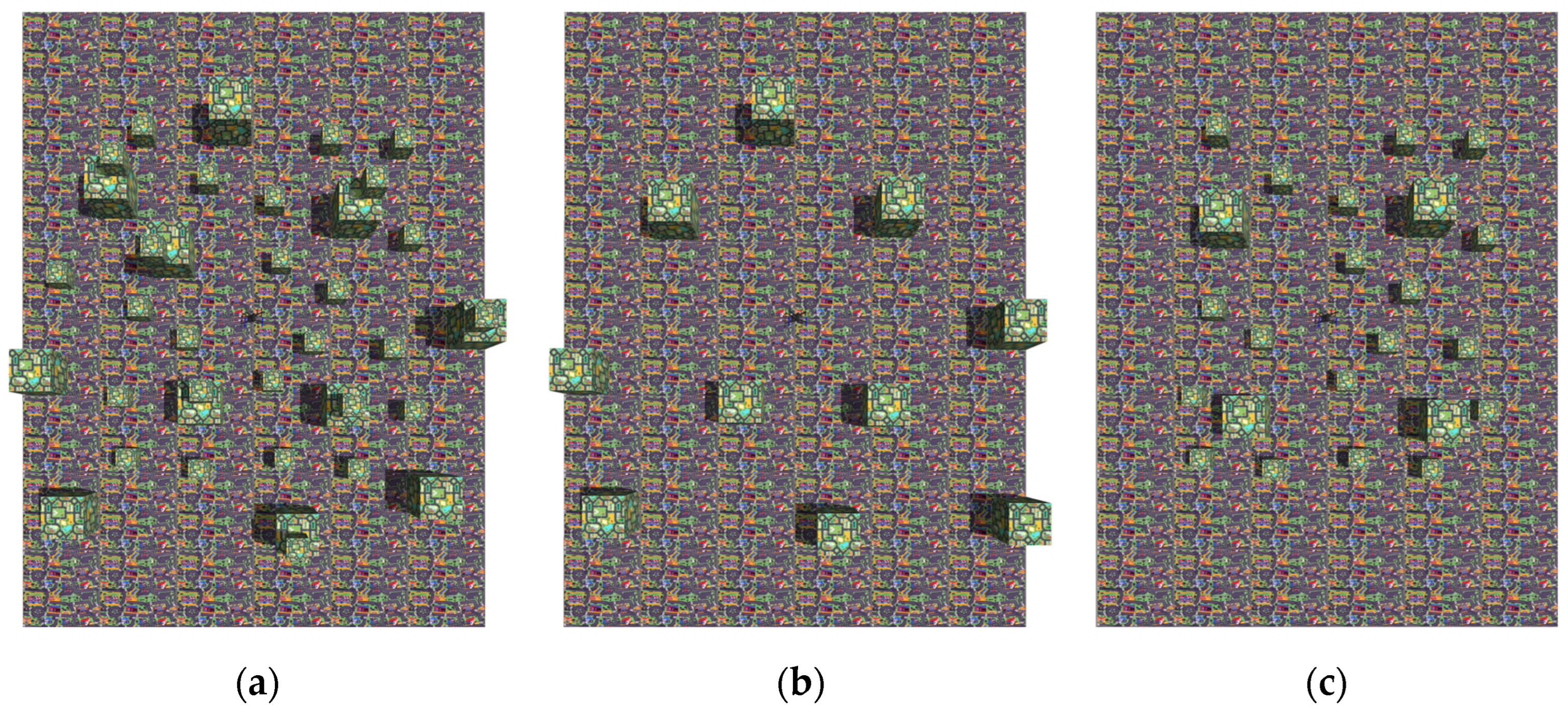

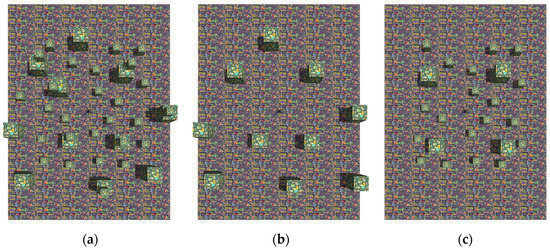

The performance of the NBV planner and the proposed QABV planner is tested on the three different maps shown in Figure 10. To compare the performance of the planners, the following criteria are used:

Figure 10.

Test maps in Gazebo simulation environment: (a) map 1; (b) map 2; and (c) map 3. In the maps, cubes with side lengths of 1 m and 0.5 m are put on a flat surface with different positions, and each map is 18.6 m × 12 m in size.

- Map exploration as defined in (13);

- Map accuracy as defined in (14);

- Distance traveled to indicate the path length followed by the drone during the execution of Algorithm 1.
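The first two criteria can be computed from the cell statuses of the map. The sketch below is only illustrative: the definitions (13) and (14) are not reproduced here, so it assumes that map exploration is the fraction of all cells that have been mapped and that map accuracy is the fraction of explored cells that are not flagged as inaccurate. The status labels and the function name are hypothetical.

```python
def map_statistics(statuses):
    """Return (exploration, accuracy) for an iterable of cell statuses.

    Each status is one of 'unmapped', 'accurate', or 'inaccurate'.
    ASSUMED definitions:
      exploration = explored cells / all cells
      accuracy    = accurate cells / explored cells
    """
    total = explored = accurate = 0
    for status in statuses:
        total += 1
        if status != "unmapped":
            explored += 1
            if status == "accurate":
                accurate += 1
    exploration = explored / total if total else 0.0
    accuracy = accurate / explored if explored else 0.0
    return exploration, accuracy


# Hypothetical 10-cell map: 6 accurate, 2 inaccurate, 2 unmapped cells.
cells = ["accurate"] * 6 + ["inaccurate"] * 2 + ["unmapped"] * 2
print(map_statistics(cells))
```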

To consider the random behavior of RRT and to have a general understanding of the performance of the planners, all tests are repeated three times for each map.

In the test scenarios, the drone flies at a constant height of 2 m on the XY plane in the map. The ArduPilot flight controller [10] is used as the position controller of the drone, and position estimations are supported by SVO. In our simulation studies, a closed-loop system model was needed since both planners require a system model of the drone to generate a feasible node tree considering the dynamic and kinematic constraints on the XY plane. In this context, we performed a naïve system identification experiment with a sampling (i.e., planning) period and fixed the linear velocity . The XY dynamics are obtained via the system identification toolbox of Matlab® [35] and are as follows:

where is the yaw angle .

4.1. NBV Planner Results

The NBV planner focuses on map exploration and does not consider map accuracy, as given in (16). Thus, we defined the stop criterion as 95% map exploration. Additionally, as an enhancement to the NBV planner and for a fair comparison, we used the map representation defined in Section 2 instead of the occupancy map in the original study [20].

In Table 2, the averaged test results are shown for each map when in (16) is set as 0.5. On average, the drone travels 79.29 m to explore 95% of the map and 74.2% of the explored map is accurate according to Bayes inference defined in (10) in Section 2 with SVO point cloud measurements.

Table 2.

Experiment results for the NBV planner.

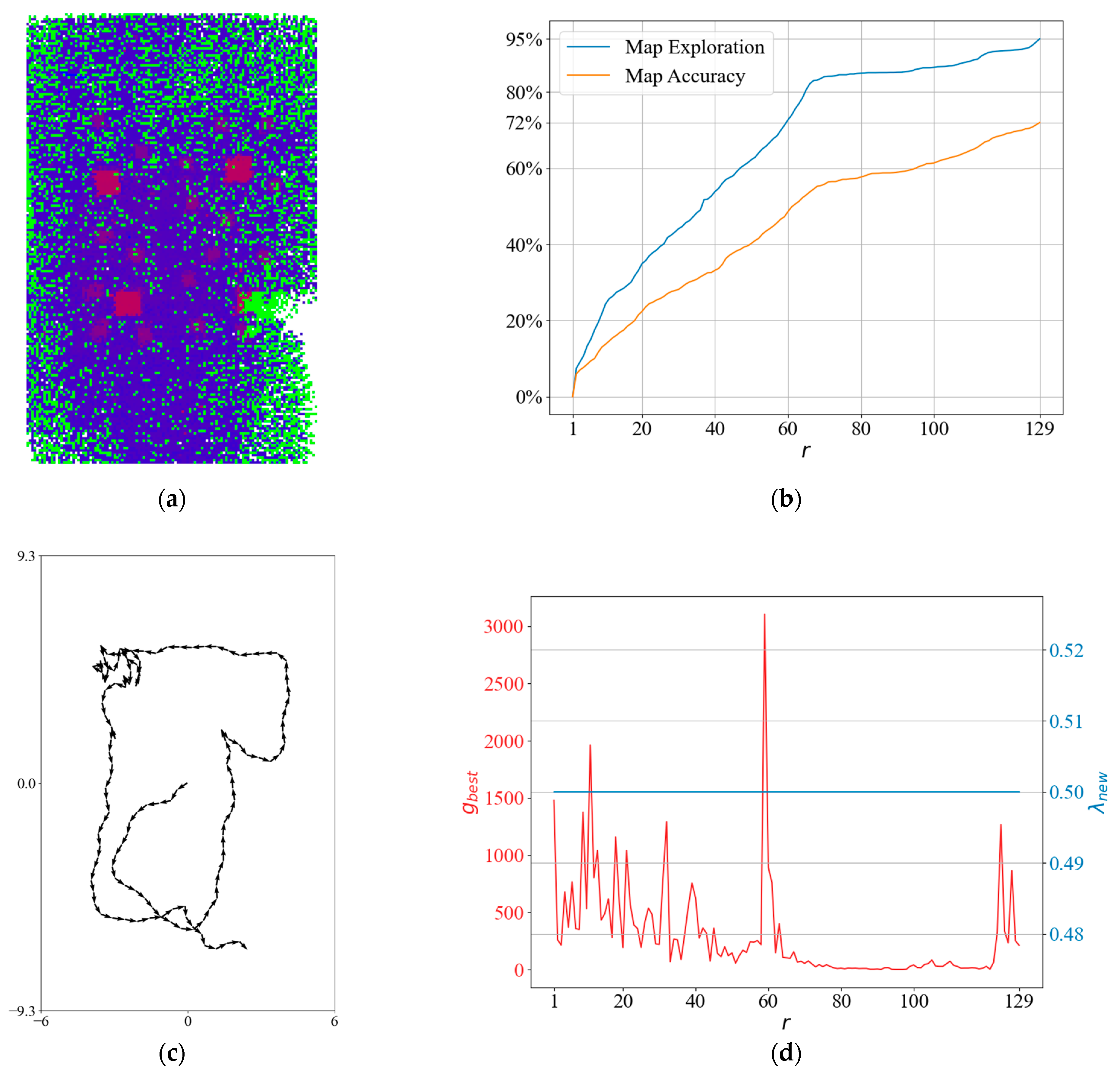

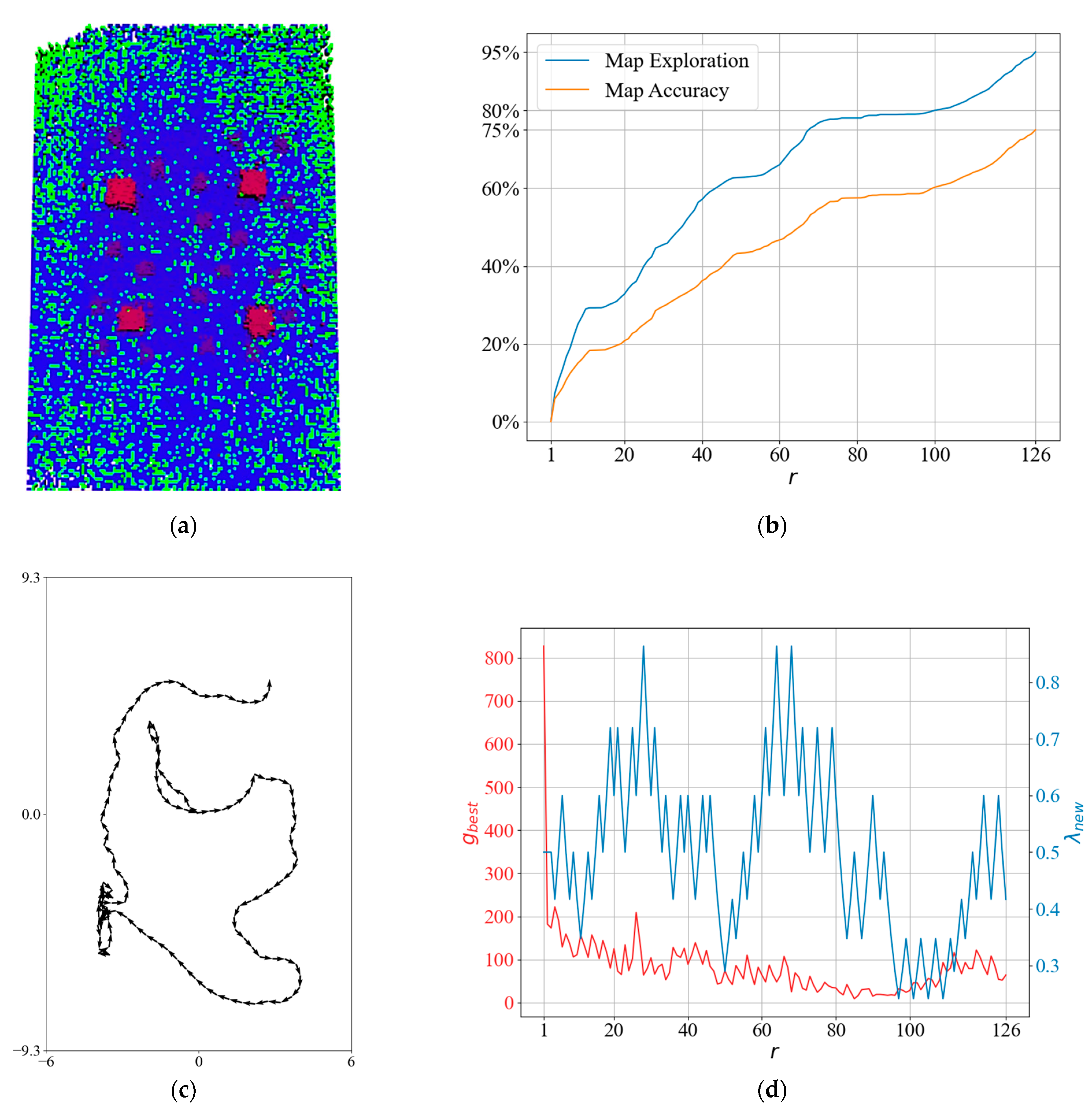

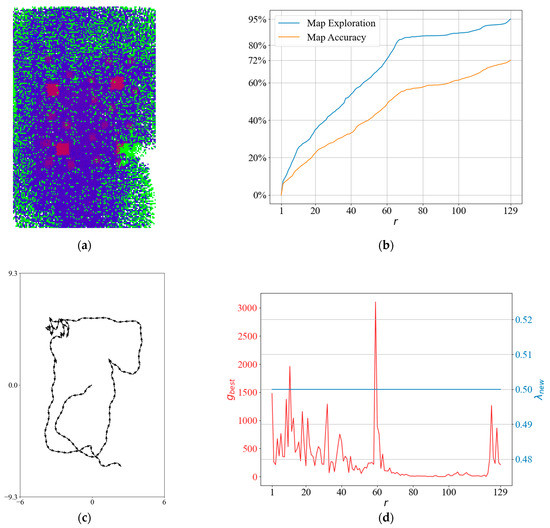

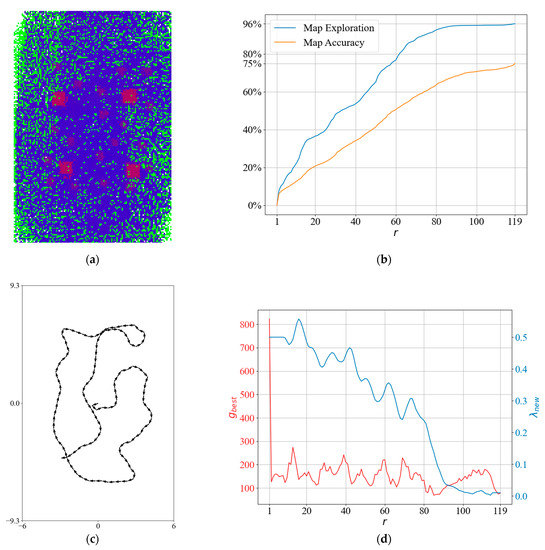

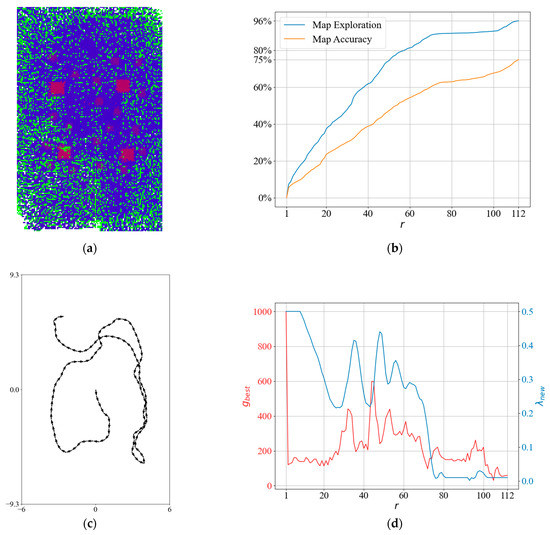

To obtain more insight into the NBV planner, we can look at the test result in Figure 11. The resultant elevation map from the top view is shown in Figure 11a. Although inaccurate cells also need to be visited again to become accurate with Bayes inference, the path created by the planner focuses only on exploring empty cells. Furthermore, the drone got stuck, as mentioned in (P-iii): in Figure 11b, map exploration and accuracy remain stable between iterations 70 and 100, and a tangle occurs in Figure 11c. This results in low , as we can see from Figure 11d, and the drone stays at the local minimum until the local minimum gain drops off.

Figure 11.

A detailed result for the NBV planner: (a) generated sparse elevation map (top view); (b) map exploration and map accuracy; (c) the planned path; and (d) and .

4.2. QABV-1 Planner Results

The QABV-1 planner focuses on map accuracy and exploration at the same time as given in (22) and (26) in Section 3, so the stop criteria are chosen as 75%, 80%, and 85% map accuracy as defined in (14). We set in Algorithm 3 to the same value of NBV as .

In the QABV-1 planner tests, we examined how is affecting the performance by analyzing the results for . Table 3, Table 4, and Table 5 show the averaged test results for each test map and each value. As can be seen from the results, the drone generally travels more in Map 1 to achieve results similar to those in the other maps. This behavior can be explained by the number and positions of the cubes. Because of the nature of the camera view angle, cubes occlude the area behind them if they intercept the line of sight of the camera. The planner finds alternative routes for those occluded areas, which naturally causes a longer distance traveled.

Table 3.

QABV-1 planner test results for .

Table 4.

QABV-1 planner test results for .

Table 5.

QABV-1 planner test results for .

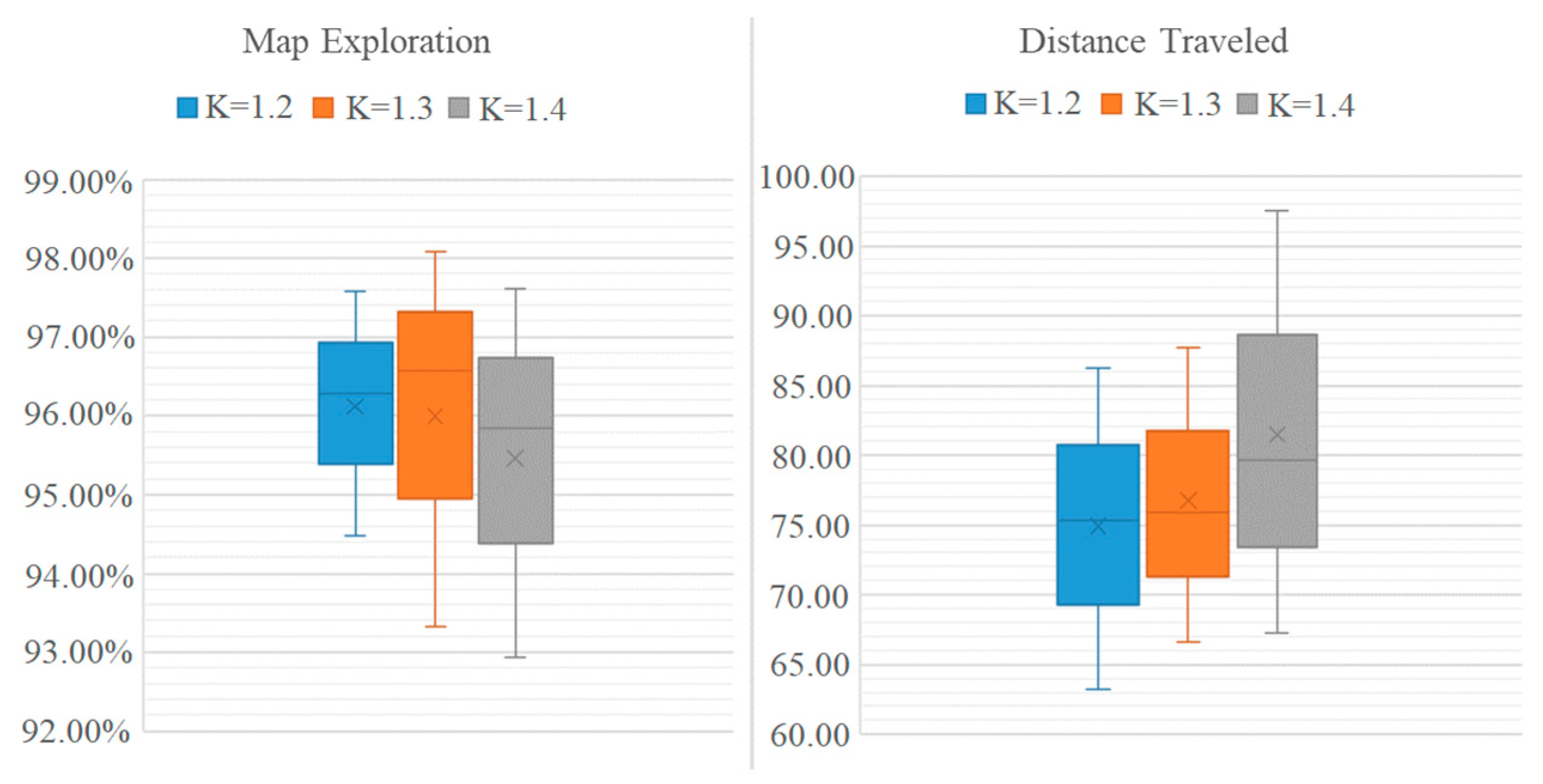

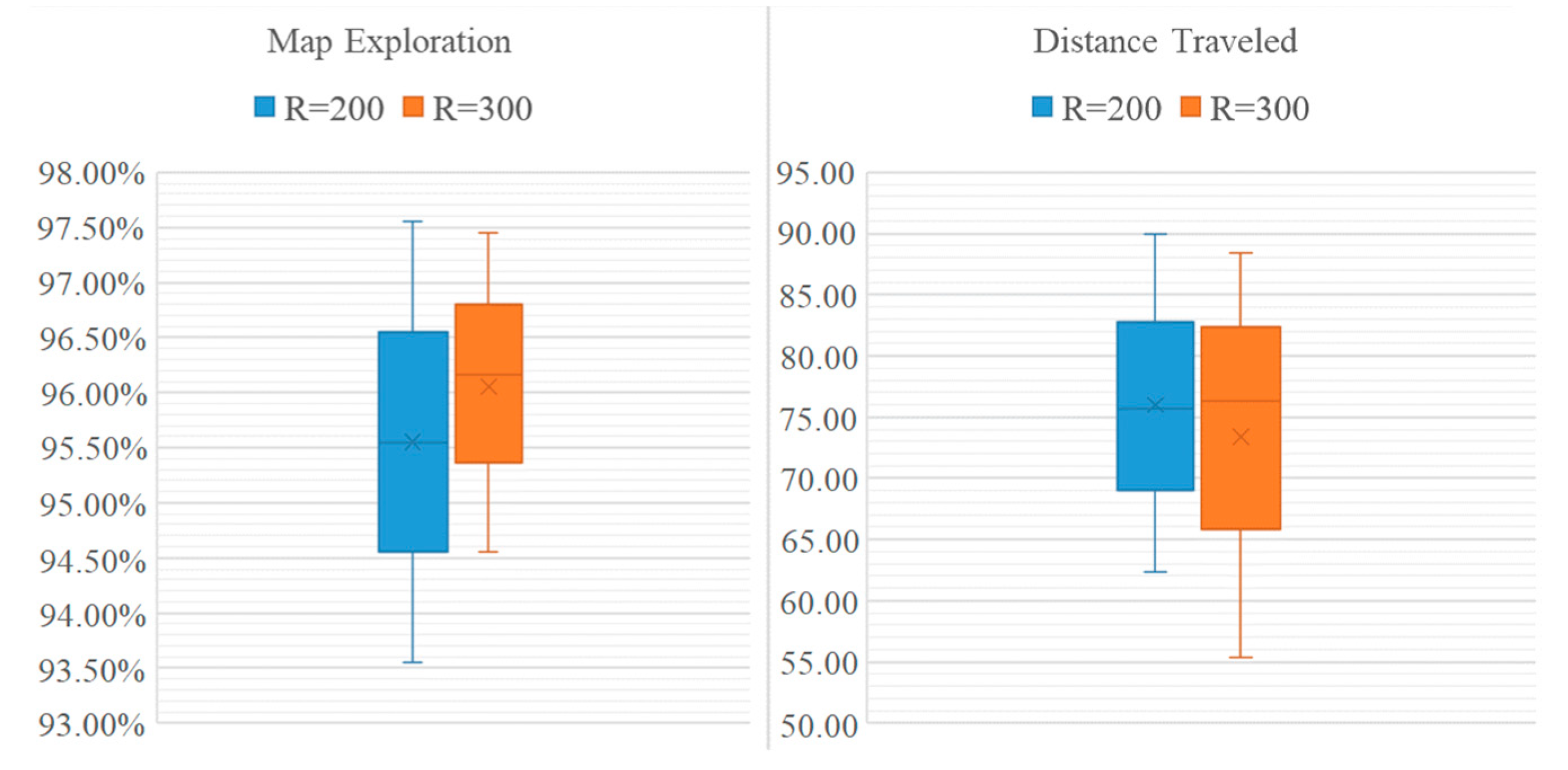

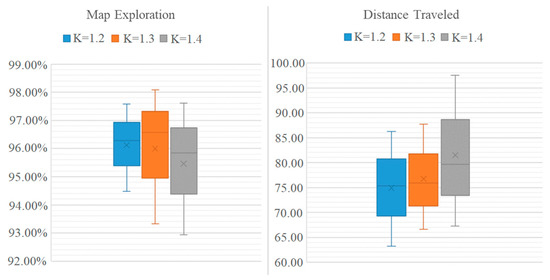

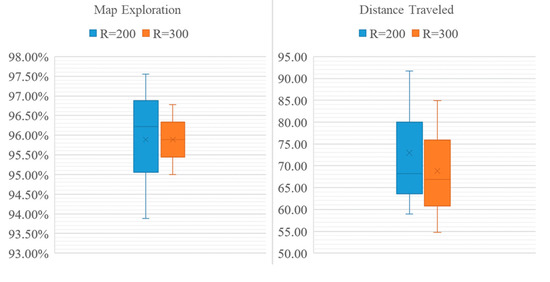

To compare the performance of the QABV-1 planner against different values, we can look at the average performance of the method on the test maps. In Figure 12, the average performances of map exploration and distance traveled are presented. Map accuracy is the stop criterion, which is why it is the same for all test cases. As can be seen from the figure, QABV-1 performs better with because the drone travels less while having a better map exploration on average compared to and .

Figure 12.

Analyzing test results for different hyperparameters for QABV-1 planner. Average map exploration and distance traveled from 75%, 80%, and 85% map accuracy test results are shown.

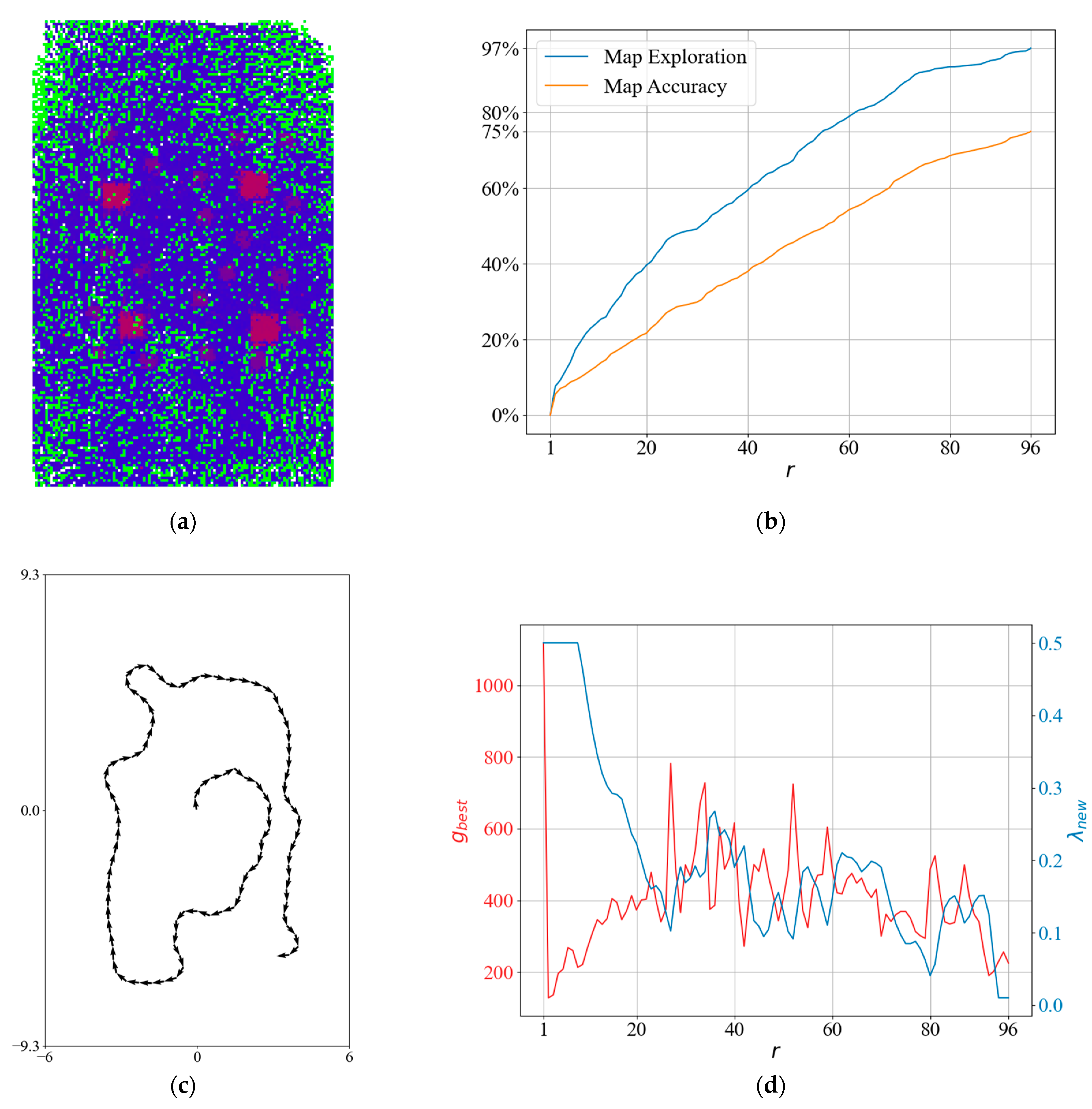

To obtain more insight into the QABV-1 planner, we can look at the test result in Figure 13 when and the stop criterion is 75% map accuracy. The resultant elevation map is shown in Figure 13a. Notice that, unlike NBV, QABV-1 updates λ during map exploration. The drone stays in a low-gain area between iterations 70 and 100: map statistics remain stable in Figure 13b, and a tangle occurs in the followed path in Figure 13c. In response, QABV-1 decreases λ to escape from the low-gain area and reach high-gain areas at a longer distance. After that, QABV-1 starts to increase λ to reduce the distance traveled. After iteration 70, λ is decreased according to the decreasing value and is increased again after iteration 100 with the increasing value (see Figure 13d).

Figure 13.

A detailed result of the QABV-1 planner: (a) generated sparse elevation map (top view); (b) map exploration and map accuracy; (c) the planned path when ; and (d) and .
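The on–off behavior seen in Figure 13d can be sketched as a simple rule on the trend of the gain. This is a hedged illustration, not the article's controller: it assumes that λ is lowered by a fixed step when the gain of the selected path is falling and raised by the same step when it is rising, and the names `on_off_update`, `step`, and `lam_min` are hypothetical.

```python
def on_off_update(lam, gain, prev_gain, step=0.05, lam_min=0.0):
    """One on-off update of the path-cost coefficient lam.

    ASSUMED rule: a falling gain signals a low-information area, so lam is
    decreased to let the planner reach farther nodes; a rising gain lets
    lam increase again to shorten the traveled distance.
    """
    if prev_gain is None or gain == prev_gain:
        return lam                      # no trend available yet
    if gain < prev_gain:
        return max(lam_min, lam - step)
    return lam + step


# Hypothetical gain sequence: falling, then recovering.
lam, prev = 0.5, None
for gain in [300.0, 250.0, 200.0, 260.0]:
    lam = on_off_update(lam, gain, prev)
    prev = gain
    print(round(lam, 2))
```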

4.3. QABV-2 Planner Results

In the QABV-2 planner tests, we set the moving average filter size as , , and and to scale with the magnitude of and in (35). Note that we set as there is an inverse relation between and . We examined the performance for . Table 6 and Table 7 show the averaged test results for test maps.

Table 6.

QABV-2 planner test results for .

Table 7.

QABV-2 planner test results for .

To compare the performance of the QABV-2 planner against different values, we can look at the average performance of the method on the test maps. In Figure 14, the average map exploration and distance traveled are shown. As can be seen from the figure, QABV-2 performs better with because the drone travels less while having more map exploration on average compared to .

Figure 14.

Analyzing test results for different hyperparameters for QABV-2 planner. Average map exploration and distance traveled from 75%, 80%, and 85% map accuracy test results are shown.

To obtain more insight into the QABV-2 planner, we can look at the test result in Figure 15 when and the stop criterion is 75% map accuracy. The resultant elevation map from the top view is shown in Figure 15a. Notice that, by using a PD controller, QABV-2 updates λ more smoothly than QABV-1 in Figure 13. On the other hand, QABV-2 waits longer than QABV-1 before updating λ, until enough information is gathered, because of the moving average filter that is defined in (36) in Section 3.2.2; QABV-1 only waits for the calculation of and starts updating in the third iteration.

Figure 15.

A detailed result of the QABV-2 planner: (a) generated sparse elevation map (top view); (b) map exploration and map accuracy; (c) the planned path when ; and (d) and .
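The combination of a moving average filter and a PD controller can be sketched as below. This is a minimal sketch under stated assumptions, not the article's controller: the error is taken as the reference minus the filtered gain, the correction is subtracted from λ because of the inverse relation between λ and the gain, no update is made until the filter window is full, and λ is clamped at zero. The class name, parameter names, and all numeric values are hypothetical.

```python
from collections import deque


class PDPathCostController:
    """PD regulation of the path-cost coefficient lam (illustrative)."""

    def __init__(self, reference, kp, kd, window, lam=0.5, lam_min=0.0):
        self.reference = reference
        self.kp, self.kd = kp, kd
        self.lam, self.lam_min = lam, lam_min
        self.samples = deque(maxlen=window)   # moving average window
        self.prev_error = None

    def update(self, gain):
        self.samples.append(gain)
        if len(self.samples) < self.samples.maxlen:
            return self.lam                   # wait until the window is full
        filtered = sum(self.samples) / len(self.samples)
        error = self.reference - filtered
        d_error = 0.0 if self.prev_error is None else error - self.prev_error
        self.prev_error = error
        # Inverse relation: gain above the reference raises lam, and
        # gain below the reference lowers it.
        correction = self.kp * error + self.kd * d_error
        self.lam = max(self.lam_min, self.lam - correction)
        return self.lam


controller = PDPathCostController(reference=300.0, kp=0.001, kd=0.0005, window=3)
for gain in [400.0, 400.0, 400.0, 100.0, 100.0]:
    print(round(controller.update(gain), 4))
```

The window length trades responsiveness for smoothness: a longer window delays the first update but suppresses iteration-to-iteration jitter in λ.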

4.4. QABV-3 Planner Results

In the QABV-3 planner tests, we used the same values as the QABV-2 planner tests. Table 8 and Table 9 show the averaged test results for each test map and each value.

Table 8.

QABV-3 planner test results for .

Table 9.

QABV-3 planner test results for .

To compare the performance of the QABV-3 planner against different values, we can look at the average performance of the method on the test maps. In Figure 16, the average map exploration and distance traveled are shown. As can be seen from the figure, QABV-3 performs better with because the drone travels less while having more map exploration on average compared to .

Figure 16.

Analyzing test results for different hyperparameters for QABV-3 planner. Average map exploration and distance traveled from 75%, 80%, and 85% map accuracy test results are shown.

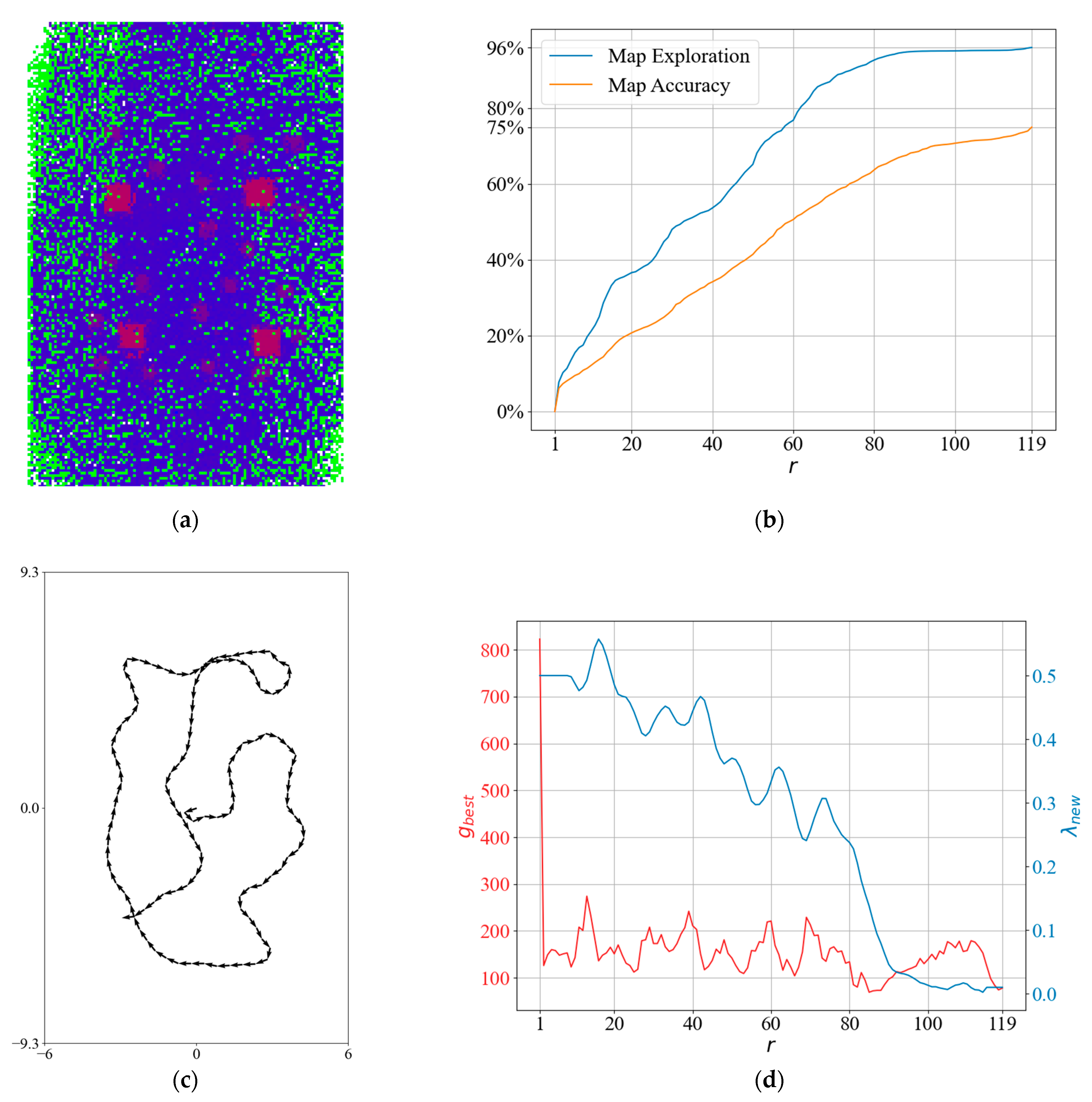

To obtain more insight into the QABV-3 planner, we can look at the test result in Figure 17 when and the stop criterion is 75% map accuracy. The resultant elevation map from the top view is shown in Figure 17a. Notice that QABV-3 allows for with its switching controller compared to QABV-2 in Figure 15.

Figure 17.

A detailed result of the QABV-3 planner: (a) generated sparse elevation map (top view); (b) map exploration and map accuracy; (c) the planned path when ; and (d) and λ.

4.5. QABV-4 Planner Results

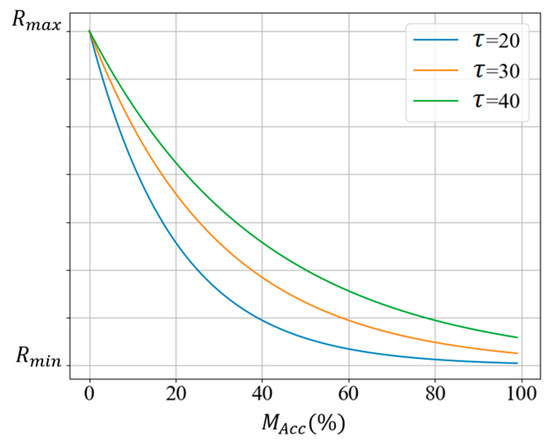

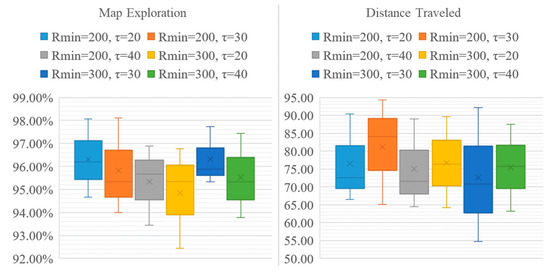

In the QABV-4 tests, we set and examined the performance for and . The remaining parameters are set to the same values as QABV-3. Table 10 and Table 11 show the results for and , respectively.

Table 10.

QABV-4 planner test results for .

Table 11.

QABV-4 planner test results for .

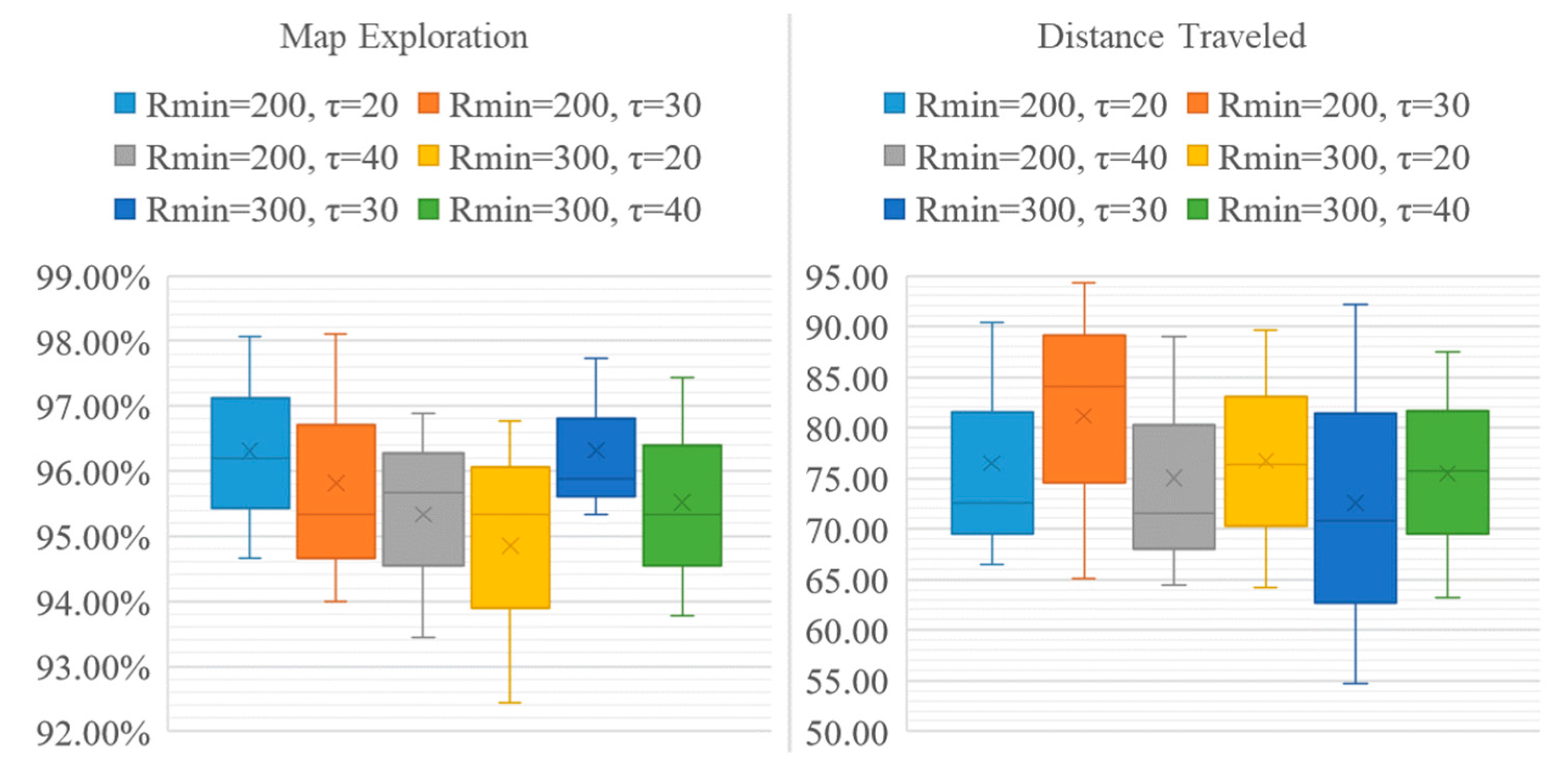

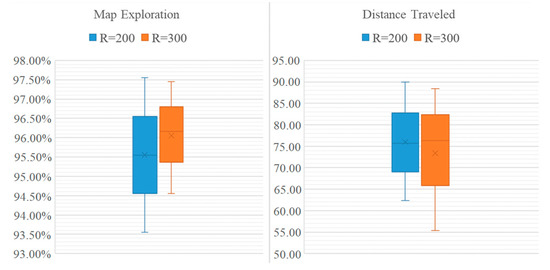

To compare the performance of the QABV-4 planner with different and settings, we can examine the average performances presented in Figure 18. As can be seen, QABV-4 performs better with and since the drone travels less while having more map exploration on average than other combinations.

Figure 18.

Analyzing test results for different hyperparameters for QABV-4 planner. Average map exploration and distance traveled for 75%, 80%, and 85% map accuracy test results are shown.

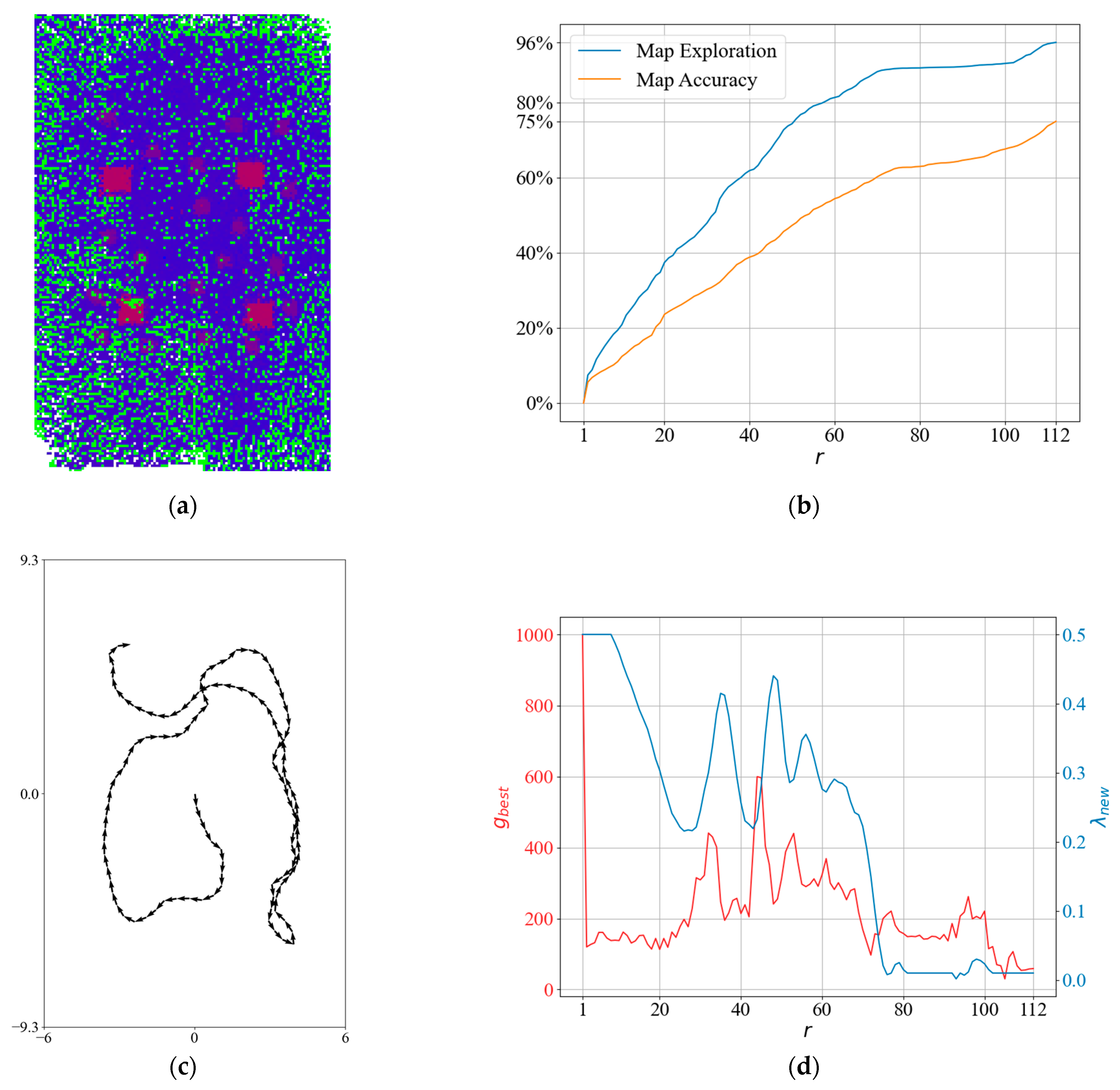

To obtain more insight into the QABV-4 planner, we can look at the test result in Figure 19 when , and the stop criterion is 75% map accuracy. The resultant elevation map from the top view is shown in Figure 19a. In Figure 19b, notice that map exploration and accuracy remain stable at iterations between 70 and 100 because in Figure 19c, we can see that the planned path is overlapping. In Figure 19d, notice that λ is decreased at the same iterations to keep at . Also notice that λ is not increased at the first iterations as is in QABV-2 in Figure 15d because is set to at the first iteration according to (39) in Section 3.2.4, and λ is decreased to elevate . On the other hand, in the QABV-2 test, is set to 300, and λ is increased to reduce .

Figure 19.

A detailed result of the QABV-4 planner: (a) generated sparse elevation map (top view); (b) map exploration and map accuracy; (c) the planned path when ; and (d) and λ.

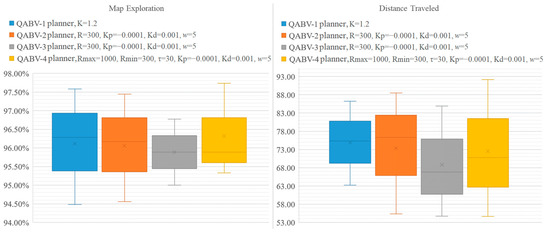

4.6. Overall Performance Comparison

To make an overall performance comparison, we tabulate the baseline NBV planner results against the best resulting QABV planners which are:

- QABV-1 when ;

- QABV-2 when ;

- QABV-3 when ;

- QABV-4 when .

As tabulated in Table 12, when the test stop criterion is 75% map accuracy, the QABV planner significantly reduces the distance traveled compared to the NBV planner:

- 20.28% less distance traveled using QABV-1;

- 30.19% less distance traveled using QABV-2;

- 31% less distance traveled using QABV-3;

- 31.02% less distance traveled using QABV-4;

while having negligible differences in map exploration and accuracy.

Table 12.

Baseline NBV planner performance results against the best resulting QABV planners.

From the results presented in Table 13, when the test stop criterion is 80% map accuracy, the QABV planner continues to reduce the distance traveled even though it is asked for 7.8% more map accuracy than the NBV planner achieves:

- 5% less distance traveled using QABV-1;

- 3.72% less distance traveled using QABV-2;

- 15.69% less distance traveled using QABV-3;

- 10.76% less distance traveled using QABV-4;

while having negligible differences in map exploration; however, the distance traveled increases when the stop criterion is 85% map accuracy.

Table 13.

The best resulting QABV planners with stop criteria of 80% and 85% map accuracy.

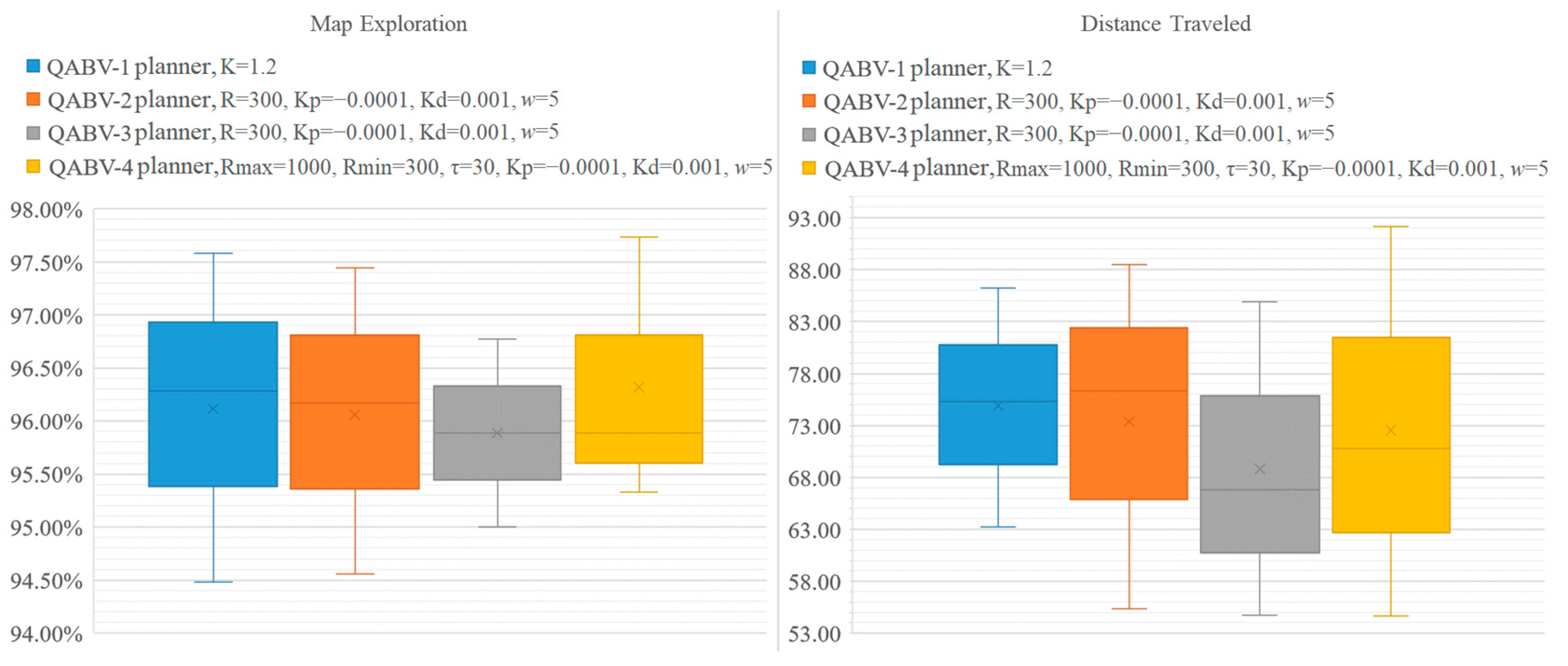

Lastly, to compare the performance of the dynamic λ update methods, Figure 20 visualizes the test results in Table 12 and Table 13 for average map exploration and distance traveled. Referring to the figure, the average map exploration is around 96% for each method, and QABV-4 performs slightly better than the others; however, the average distance traveled differs between the methods, and QABV-3 performs significantly better than the others with 68.82 m. Although the map exploration performance of QABV-4 is slightly better, the performance of QABV-3 in reducing the distance traveled makes it the first choice for implementation. On the other hand, QABV-1 is the least performant method but also the easiest to implement.

Figure 20.

Analyzing test results for different QABV planner dynamic λ update methods. Average map exploration and distance traveled for 75%, 80%, and 85% map accuracy test results are shown.

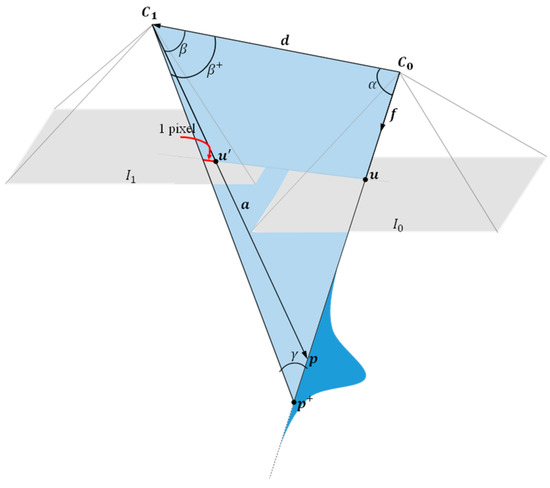

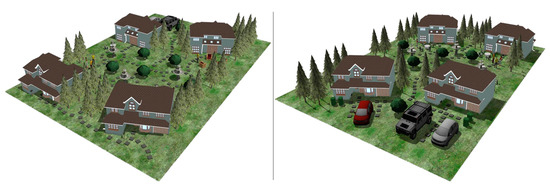

5. A Real-World Scenario

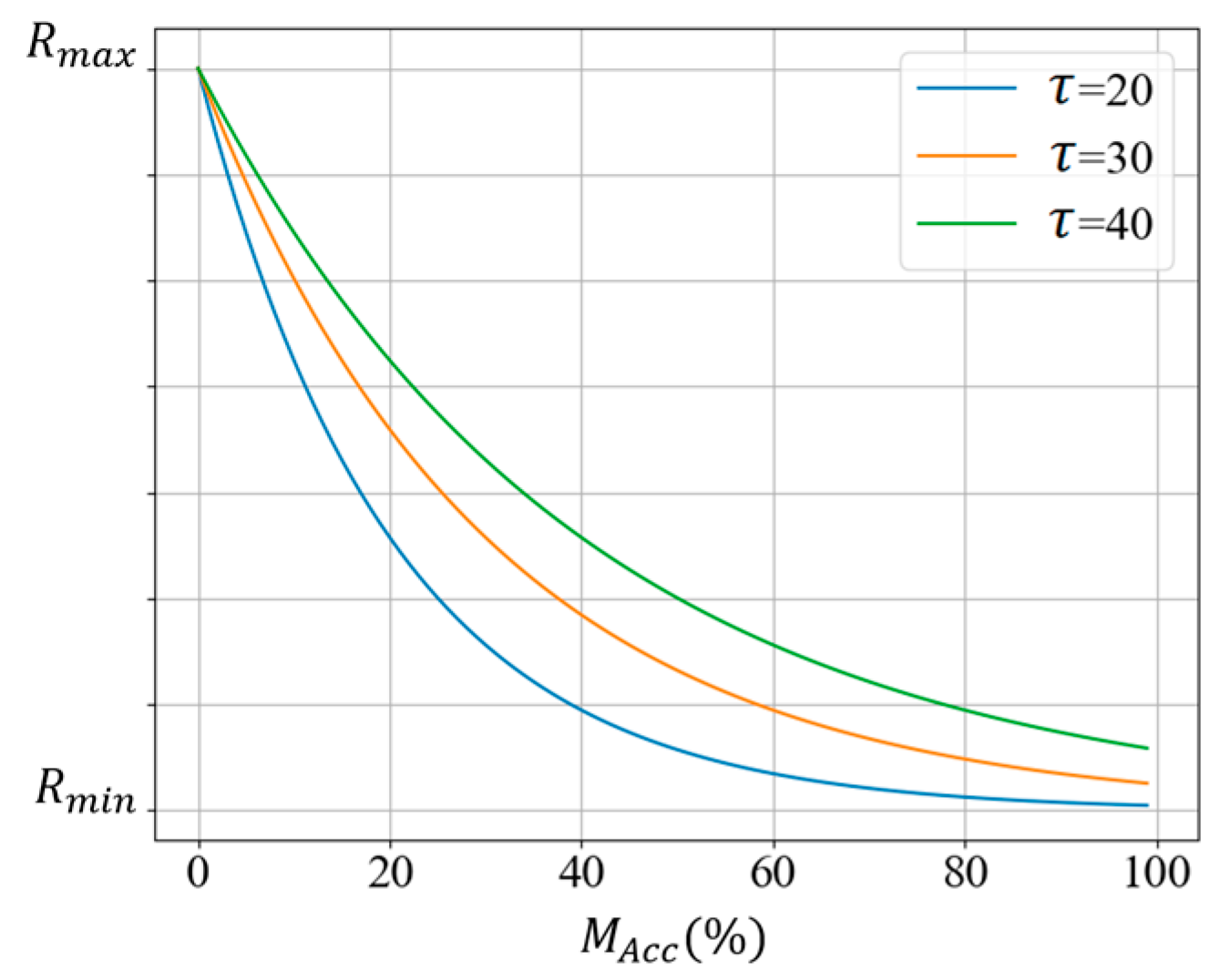

To see the performance of the proposed planner in a real-world scenario such as delivering a package to a residential area, the map in Figure 21 is generated. In the scenario, the drone flies at a constant height of 5 m on the XY plane. Thus, the down-looking camera of the drone can see a broader area than before since the flight altitude is increased. Additionally, the distance between adjacent nodes in the RRT is increased in response to the broader visible area of the camera.
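As a rough worked example of how much broader the view becomes, the ground footprint of a down-looking pinhole camera scales linearly with altitude over flat ground. The sketch below assumes the same camera as in Section 4, which sees 4 m × 6.2 m at a 2 m altitude, and ignores occlusions; the function name is hypothetical.

```python
def ground_footprint(altitude_m, ref_altitude_m=2.0, ref_footprint_m=(4.0, 6.2)):
    """Scale the reference ground footprint linearly with altitude.

    ASSUMES a down-looking pinhole camera over flat, unoccluded ground,
    so each side of the footprint is proportional to the altitude.
    """
    scale = altitude_m / ref_altitude_m
    return tuple(side * scale for side in ref_footprint_m)


width, length = ground_footprint(5.0)
print(width, length)
```

Under these assumptions, the footprint at a 5 m altitude is about 10 m × 15.5 m, which is 6.25 times the area visible at 2 m.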

Figure 21.

Test map for real-world scenario tests. The map is 37.2 m × 24 m in size, and it represents the backyard of the houses.

Table 14 shows test results when the stop criterion is 95% map exploration for the NBV planner and 75% map accuracy for the QABV planner. Tests are repeated for each dynamic λ update method with the same parameters as given in Section 4.6. Referring to Table 14, there is a noticeable increase in the average distance traveled, for two main reasons. First, the map area is doubled. Second, large objects cause occlusions for the camera: the houses in the environment reduce the visibility of the area behind them because their height brings them closer to the camera.
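The occlusion effect can be quantified with similar triangles. The following sketch assumes a point camera and a vertical obstacle edge on flat ground. The obstacle height and its horizontal distance from the camera are hypothetical values; only the 5 m altitude comes from the scenario.

```python
def occluded_length(camera_alt_m: float, obstacle_height_m: float,
                    edge_distance_m: float) -> float:
    """Length (m) of the ground strip hidden behind an obstacle edge.

    The ray from the camera through the top of the edge reaches the ground
    at edge_distance * camera_alt / (camera_alt - obstacle_height); the
    hidden strip lies between the edge and that point.
    """
    if obstacle_height_m >= camera_alt_m:
        raise ValueError("obstacle must be lower than the camera")
    if edge_distance_m < 0:
        raise ValueError("edge distance must be non-negative")
    return edge_distance_m * obstacle_height_m / (camera_alt_m - obstacle_height_m)


# Hypothetical 3 m high roof edge, 2 m away horizontally, camera at 5 m.
print(occluded_length(5.0, 3.0, 2.0))  # hidden strip length in meters
```

The hidden strip grows quickly as the obstacle height approaches the flight altitude, which is consistent with the houses affecting visibility far more than low objects in the garden.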

Table 14.

Performance measures of NBV and QABV planners in real-world scenario test.

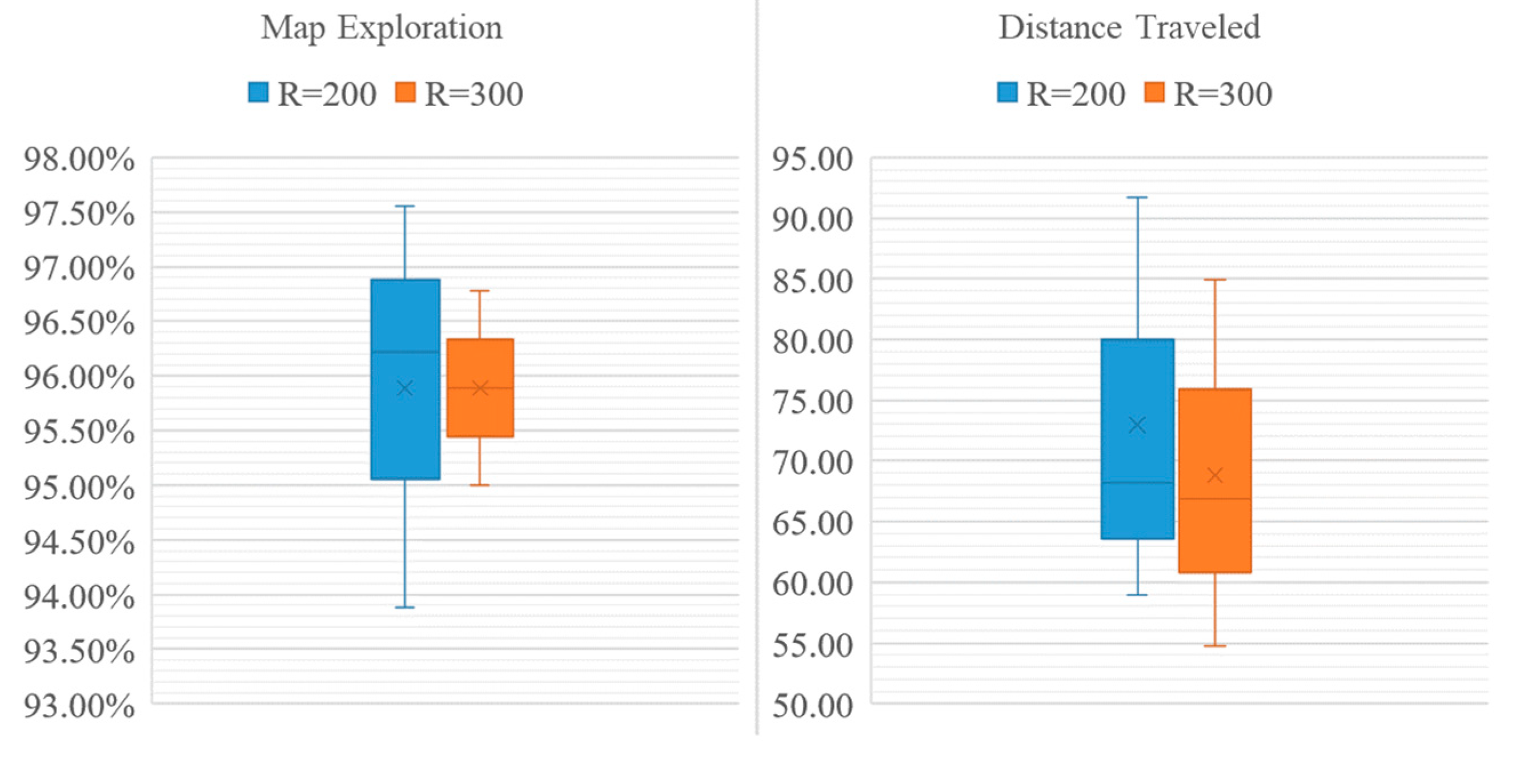

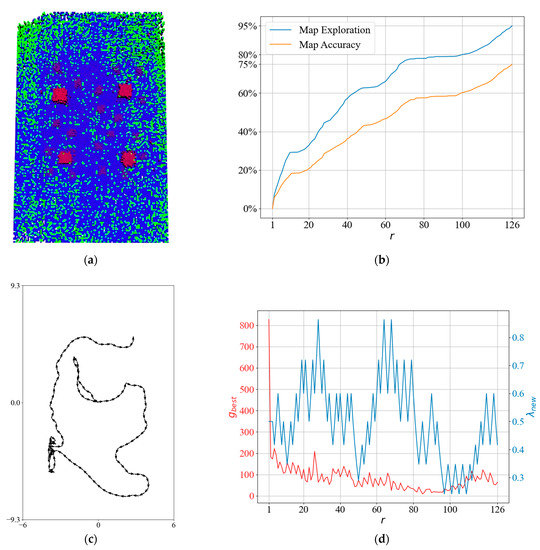

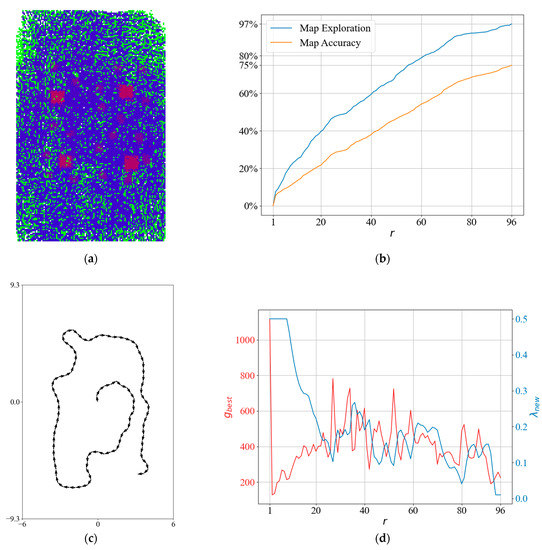

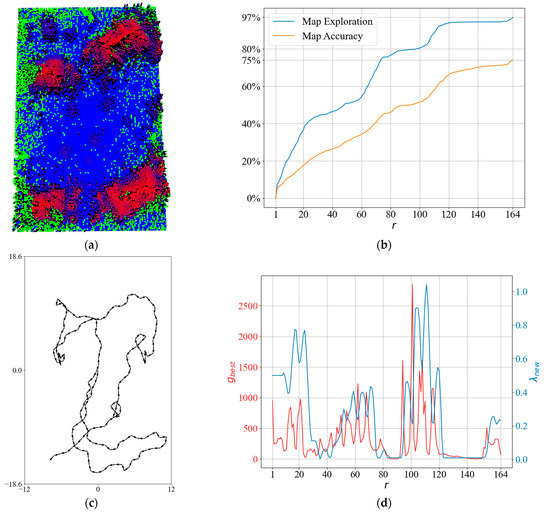

When the planners calculate the gain of the RRT nodes, the nodes near the houses obtain low gains because of the occlusion. That is why, in Figure 22, the drone prefers to travel in the non-occluded area until the information depletes. In Figure 22a, the middle of the map is marked with blue cells, indicating that the garden is relatively lower than other objects, and the drone prefers to stay in this region, as the tangles in the followed path in Figure 22c show. However, when the drone finds itself in a low-information-gain area, the dynamic cost function decreases λ and the QABV planner selects the nodes near the houses as the best viewpoints.
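The effect of λ on viewpoint selection can be sketched as follows. This is a simplified illustration, not the planner's actual gain function: it assumes that a node's score is its information gain discounted by an exponential path-cost penalty, exp(-λ · cost), with the node count along the path used as the cost. The `Node` class, the node names, and all numbers are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    gain: float      # information gain expected at this viewpoint
    node_count: int  # number of RRT nodes on the path from the root


def score(node: Node, lam: float) -> float:
    """Gain discounted by an assumed exponential path-cost penalty."""
    return node.gain * math.exp(-lam * node.node_count)


def best_viewpoint(nodes, lam: float) -> Node:
    """Return the node with the highest discounted gain."""
    return max(nodes, key=lambda n: score(n, lam))


# A nearly depleted nearby node versus a richer but more distant node.
candidates = [
    Node("garden", gain=2.0, node_count=1),
    Node("near_house", gain=6.0, node_count=5),
]
print(best_viewpoint(candidates, lam=0.5).name)  # high lambda favors short paths
print(best_viewpoint(candidates, lam=0.1).name)  # low lambda allows longer paths
```

With these illustrative numbers, the larger λ keeps the drone at the nearby garden node, while the smaller λ lets the more distant node near the house win.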

Figure 22.

A detailed result of the QABV-3 planner in a real-world scenario: (a) generated sparse elevation map (top view); (b) map exploration and map accuracy; (c) the planned path when ; and (d) and λ.

6. Conclusions and Future Work

In this study, we proposed a new terrain mapping and exploration system to be used for autonomous drone landings. For mapping, we presented the probabilistic sparse elevation map to enable the representation of sensor measurement error. For exploration, we presented the QABV planner for autonomous drone navigation with a dual focus: map exploration and quality. We showed that by exploiting the probabilistic nature of the proposed map with the novel information gain function, the QABV planner increases the measurement accuracy while exploring the map.
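As an illustration of how repeated measurements can raise map quality through Bayes inference, the sketch below fuses a new elevation measurement into a single map cell. It assumes that each cell stores a Gaussian elevation estimate (mean and variance) and that measurement noise is Gaussian. The function name and the numbers are hypothetical and do not reproduce the exact update used for the proposed map.

```python
def fuse_measurement(mean: float, var: float, z: float, z_var: float):
    """Bayesian fusion of a Gaussian prior (mean, var) with a Gaussian
    measurement (z, z_var); returns the posterior mean and variance."""
    if var <= 0 or z_var <= 0:
        raise ValueError("variances must be positive")
    gain = var / (var + z_var)           # weight given to the new measurement
    new_mean = mean + gain * (z - mean)  # estimate moves toward the measurement
    new_var = (1.0 - gain) * var         # posterior variance never increases
    return new_mean, new_var


# Hypothetical cell: prior elevation 1.0 m, then two noisy measurements.
cell_mean, cell_var = 1.0, 0.04
for z, z_var in [(1.2, 0.04), (1.1, 0.02)]:
    cell_mean, cell_var = fuse_measurement(cell_mean, cell_var, z, z_var)
    print(f"mean={cell_mean:.3f} m, variance={cell_var:.4f} m^2")
```

Because the variance shrinks with every fused measurement, revisiting a cell from a better viewpoint improves the estimate, which is the property a quality-aware planner can exploit.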

To reduce the distance traveled, we showed that using the node count as the path cost, rather than the distance between adjacent nodes as in the NBV planner, is very effective in our scenario. Furthermore, we presented four different control methods to dynamically adjust the path cost. We showed that the QABV planner can be seen as a system to be controlled via λ such that shorter paths are generated when the drone is in an information-rich area, or longer paths are allowed to exit from an information-poor area and to avoid getting stuck. In simulation studies, we tested our proposals against the NBV planner and verified their usefulness. In particular, QABV-3 and QABV-4 achieved superior results regarding the distance traveled and map quality. Although QABV-4 has slightly better map exploration performance, the performance of QABV-3 in reducing the distance traveled makes it the first option for implementation.
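The idea of regulating λ can be illustrated with a two-position (on-off) rule. This is a generic sketch and not a reproduction of any of the four QABV update methods: the threshold, the two λ levels, and the gain sequence are hypothetical values.

```python
def update_lambda(recent_gain: float, gain_threshold: float,
                  lam_low: float, lam_high: float) -> float:
    """Two-position rule: penalize path cost strongly while information is
    plentiful, and relax the penalty once the recent gain drops."""
    if lam_low > lam_high:
        raise ValueError("lam_low must not exceed lam_high")
    return lam_high if recent_gain >= gain_threshold else lam_low


def simulate(gains, gain_threshold=1.0, lam_low=0.1, lam_high=0.5):
    """Return the lambda chosen after each observed information gain."""
    return [update_lambda(g, gain_threshold, lam_low, lam_high) for g in gains]


# Hypothetical gains: rich area, then depletion, then a fresh area.
print(simulate([3.0, 2.0, 0.4, 0.2, 1.5]))
```

In this sketch λ drops to its low level as soon as the gain falls below the threshold, allowing longer paths out of the depleted area, and returns to the high level once informative viewpoints are reached again.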

One limitation of our proposed system is that the drone flies at a constant altitude while the probabilistic sparse elevation map is generated. This may limit the accuracy and resolution of the map, especially in areas with varying terrain or elevation. Another limitation is the use of hyperparameters in the λ update methods such as K, R, and which must be determined in a heuristic manner. In the test results section, our analysis shows that the performance of the methods is sensitive to these parameters, and better-determined values can result in better performances.

As for our future work, we will focus on addressing these limitations by exploring adaptive control methods for generating elevation maps and automating the determination of hyperparameters. Furthermore, we plan to work on the proposed approach and deploy it in a real-world environment to examine how it performs in the presence of real-world uncertainties.

Author Contributions

Conceptualization, O.S. and T.K.; methodology, O.S. and T.K.; software, O.S.; investigation, O.S.; data curation, O.S.; writing—original draft preparation, O.S.; writing—review and editing, O.S. and T.K.; visualization, O.S.; supervision, T.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).