Measurement of Cracks in Concrete Bridges by Using Unmanned Aerial Vehicles and Image Registration

Abstract

1. Introduction

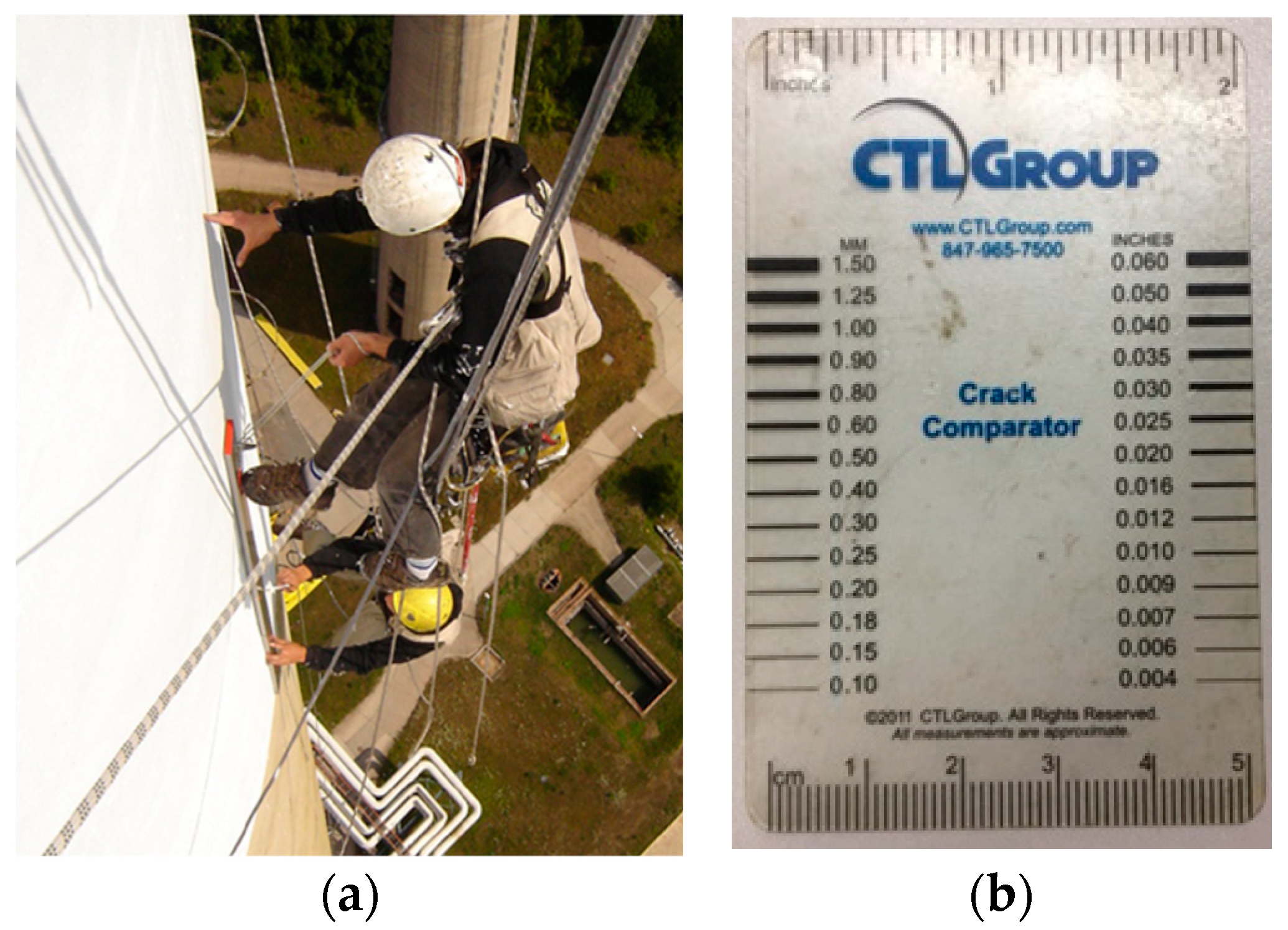

1.1. Background

1.2. Concrete Bridge Crack Inspection with UAV

1.3. Research Objective

2. Methodology

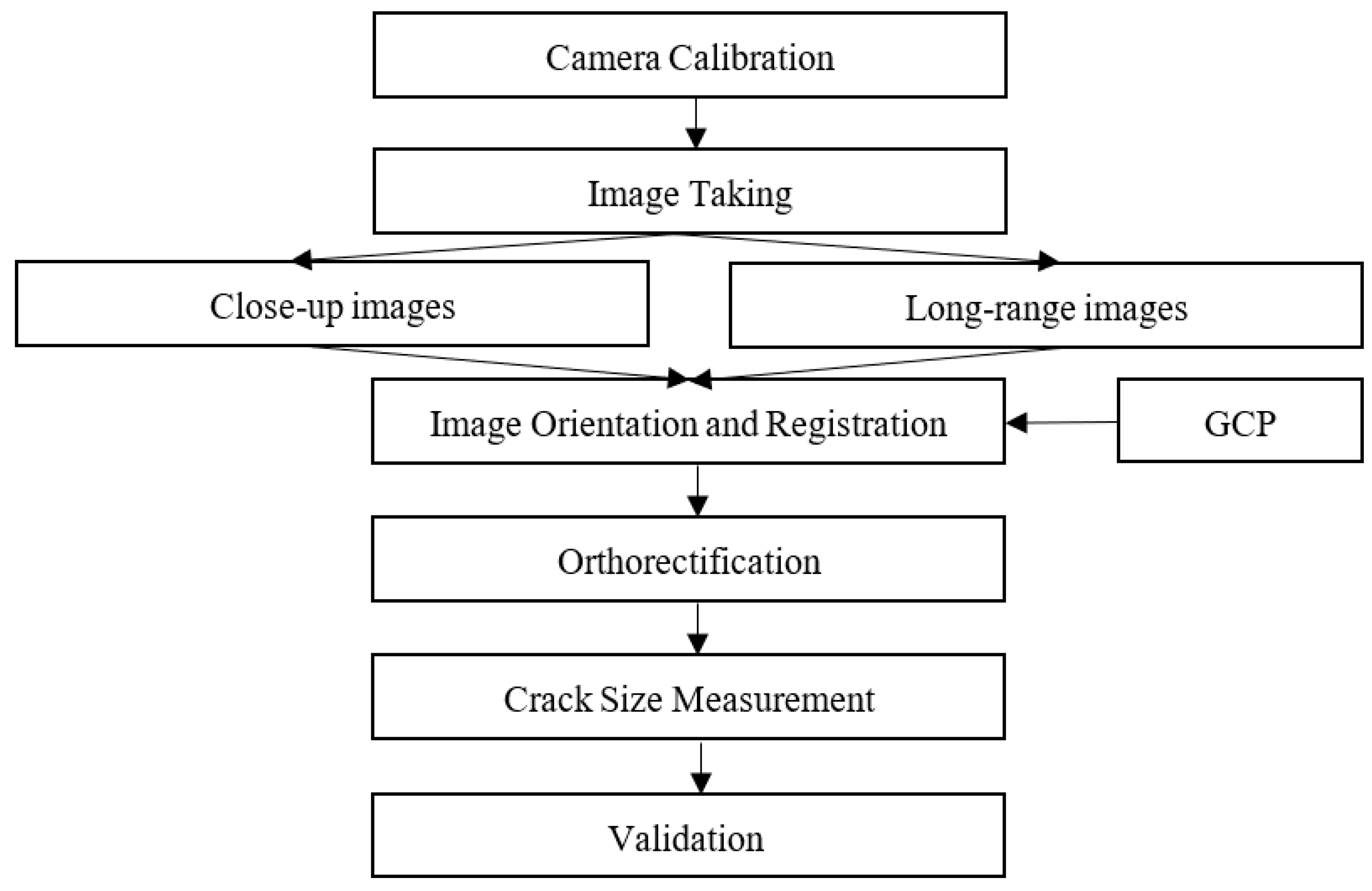

2.1. Workflow

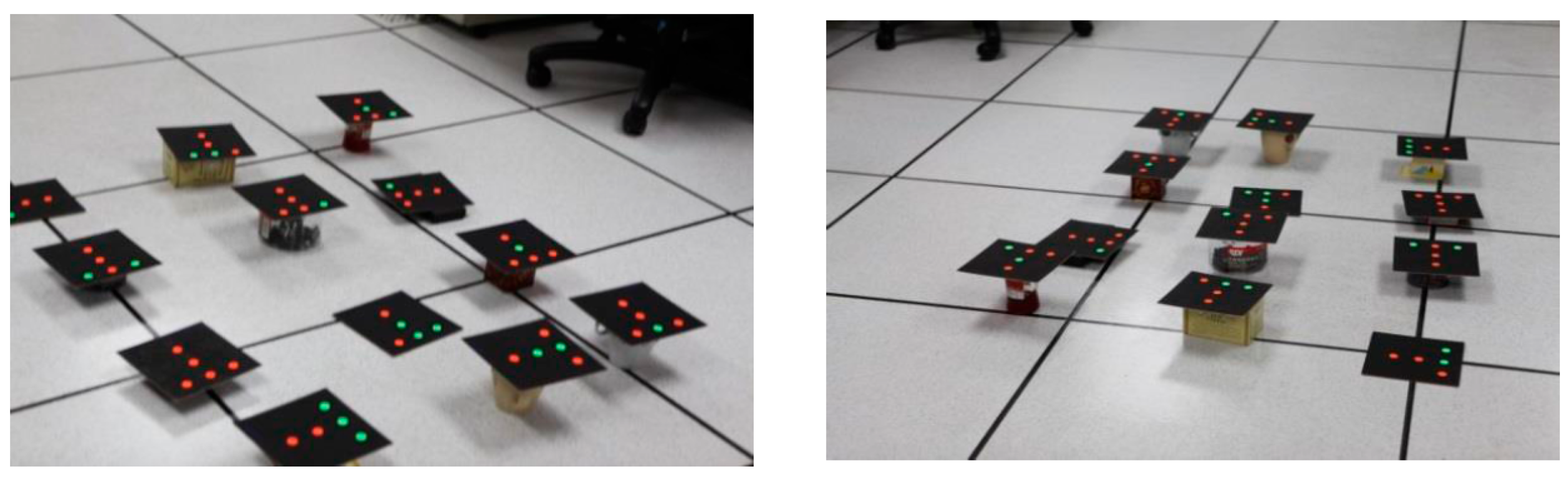

2.2. Camera Calibration

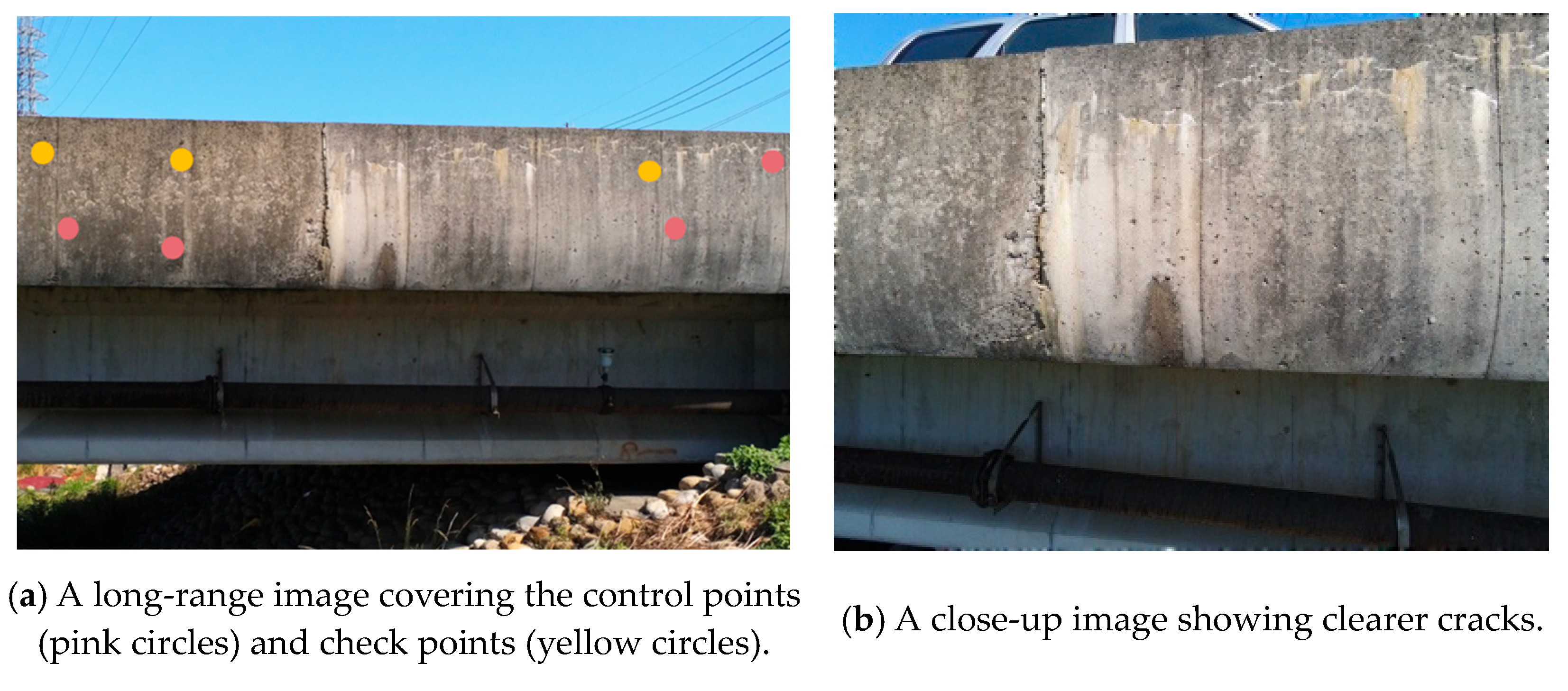

2.3. Image Taking

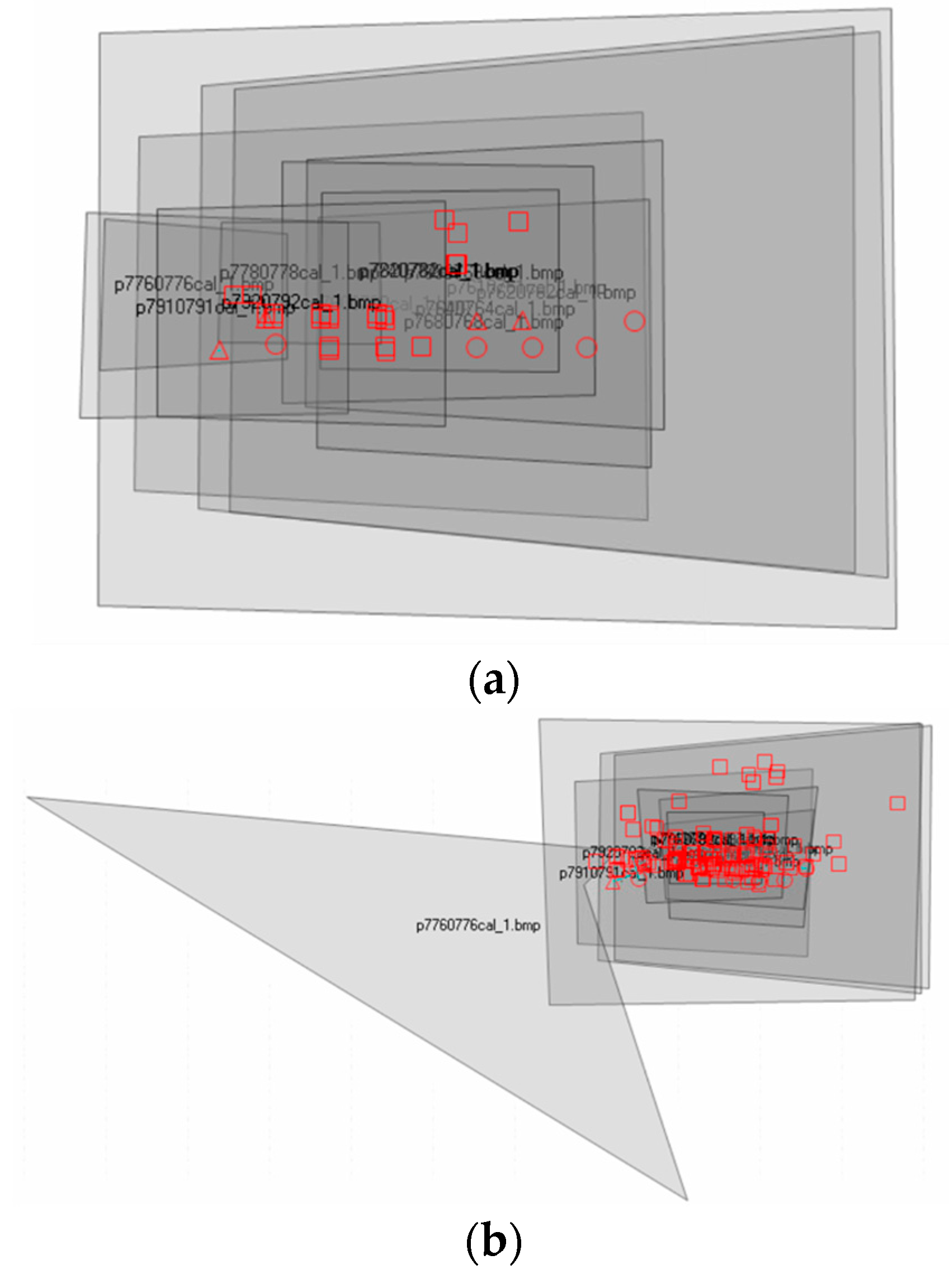

- (1) For long-range images, at least three control points should be included to establish the absolute coordinate system, as shown in Figure 5a.

- (2) Close-up images are taken so that cracks can be clearly identified and crack widths measured precisely, as shown in Figure 5b.

- (3) Close-up images are registered to the absolute coordinate system through the long-range images, so the overlap between close-up and long-range images must be sufficient.

- (4) For flight safety, a distance of at least 1 m between the UAV and the bridge is recommended while taking images. To avoid collisions with obstacles, images are taken from different orientations.
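The need for at least three control points can be illustrated with a simplified planar analogue. A 2D affine transform from image to object coordinates has six unknowns, and each control point contributes two equations, so three non-collinear points are the minimum and additional points give a least-squares fit with residuals. The sketch below assumes the bridge face is approximately planar and uses made-up coordinates; `fit_affine_2d` and `apply_affine_2d` are hypothetical helpers, and the study itself relies on 3D block triangulation in LPS rather than this transform.

```python
import numpy as np


def fit_affine_2d(image_xy, ground_xy):
    """Least-squares 2D affine transform from image to ground coordinates.

    X = a0 + a1*x + a2*y and Y = b0 + b1*x + b2*y (six unknowns), so at
    least three non-collinear control points are required.
    """
    image_xy = np.asarray(image_xy, dtype=float)
    ground_xy = np.asarray(ground_xy, dtype=float)
    n = len(image_xy)
    if n < 3:
        raise ValueError("at least three control points are required")
    # Design matrix [1, x, y], shared by the X and Y equations.
    A = np.column_stack([np.ones(n), image_xy[:, 0], image_xy[:, 1]])
    if np.linalg.matrix_rank(A) < 3:
        raise ValueError("control points must not be collinear")
    # Solve for X and Y coefficients at once; coeffs has shape (3, 2).
    coeffs, *_ = np.linalg.lstsq(A, ground_xy, rcond=None)
    residuals = ground_xy - A @ coeffs
    rmse = float(np.sqrt(np.mean(np.sum(residuals**2, axis=1))))
    return coeffs, rmse


def apply_affine_2d(coeffs, image_xy):
    """Map one or more image points to ground coordinates."""
    image_xy = np.atleast_2d(np.asarray(image_xy, dtype=float))
    A = np.column_stack([np.ones(len(image_xy)), image_xy[:, 0], image_xy[:, 1]])
    return A @ coeffs


if __name__ == "__main__":
    # Hypothetical example: 0.5 mm per pixel plus an offset, four control points.
    img = [(100, 200), (1500, 220), (800, 1300), (1400, 1200)]
    gnd = [(1000 + 0.5 * x, 2000 + 0.5 * y) for x, y in img]
    coeffs, rmse = fit_affine_2d(img, gnd)
    print(apply_affine_2d(coeffs, (600, 600)))  # approximately [[1300. 2300.]]
```

With exactly three points the fit is exact and the RMSE is zero, so it says nothing about accuracy; only redundant points (or separate check points) reveal the residual error.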

2.4. Image Orientation and Registration

2.4.1. Scale-Invariant Feature Transform (SIFT)

2.4.2. Automatic Tie Point Generation in LPS

2.5. Orthorectification

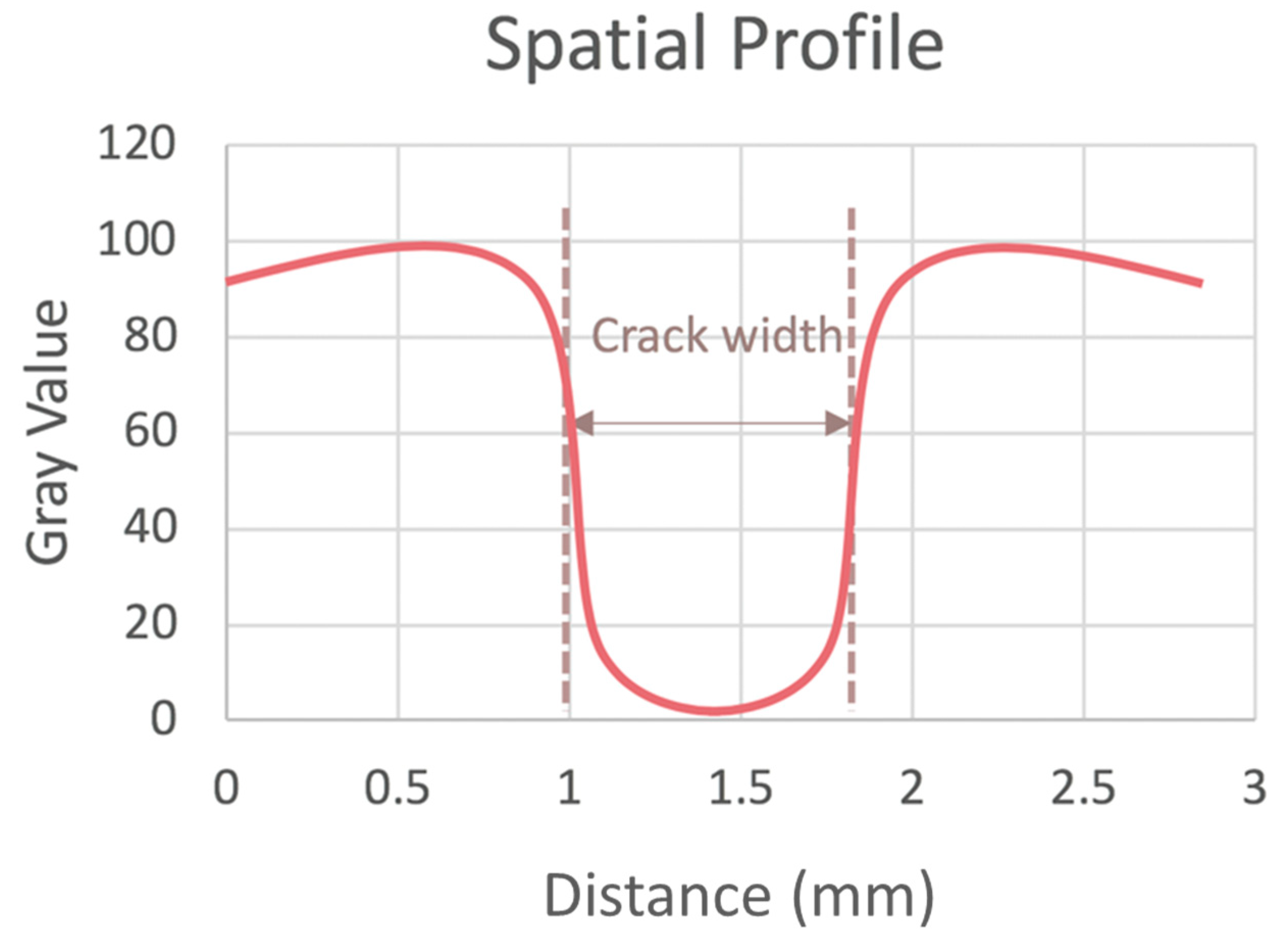

2.6. Crack Width Measurement

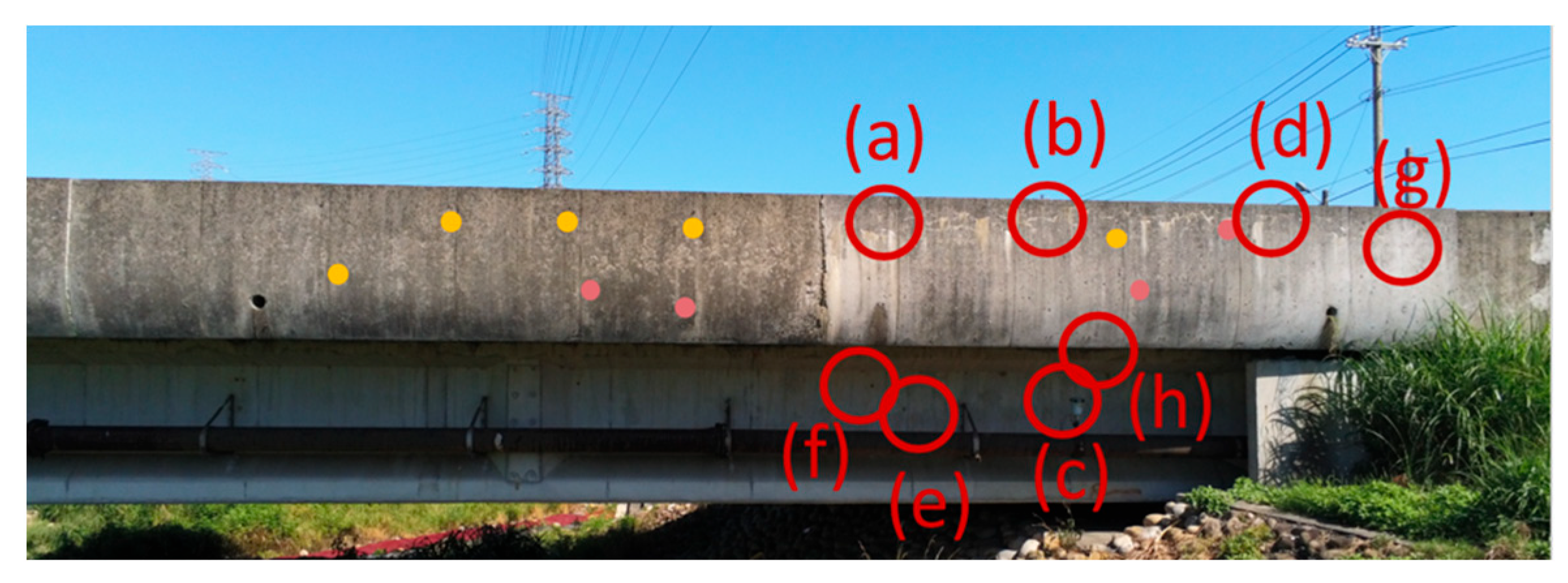

3. Results

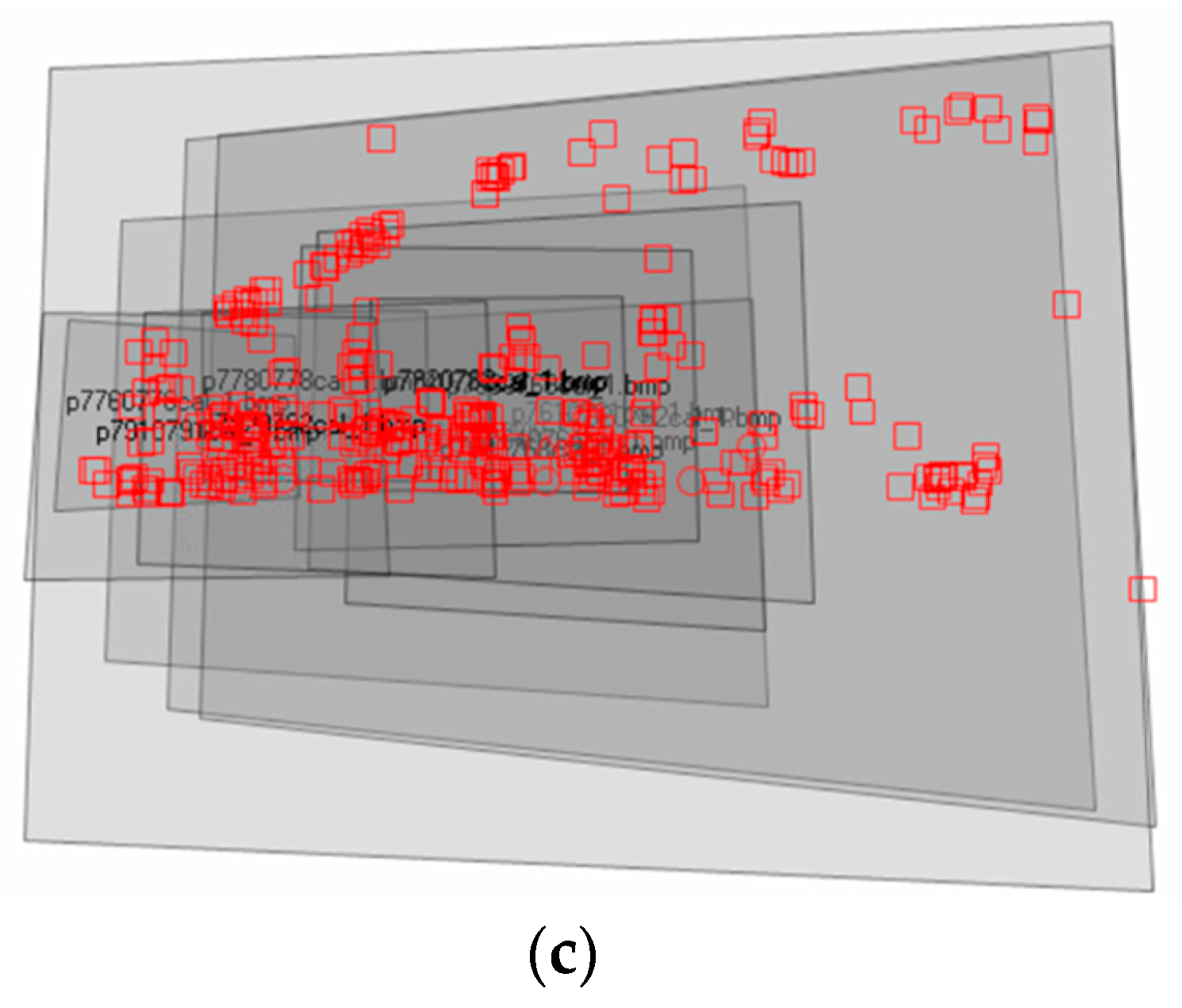

3.1. Image Orientation and Registration

- Method (1): Manually select tie points on features such as nails and dots on the bridge.

- Method (2): Randomly select 250 tie points generated by SIFT as input.

- Method (3): Randomly select 110 tie points generated by SIFT as the initial tie points, then run automatic tie point generation in LPS until 350 tie points are reached.
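SIFT tie points are usually obtained by matching descriptors between overlapping images and discarding ambiguous matches with Lowe's ratio test. The sketch below covers only that matching step and a random subset selection similar to Methods (2) and (3). It assumes descriptors have already been extracted (for example, 128-dimensional SIFT vectors from a library such as OpenCV); the 0.8 ratio threshold and the helper names `ratio_test_matches` and `random_tie_points` are illustrative assumptions, not the settings used in the study.

```python
import numpy as np


def ratio_test_matches(desc_a, desc_b, ratio=0.8):
    """Return (i, j) pairs where desc_a[i] matches desc_b[j].

    A match is kept only if the nearest neighbour in desc_b is clearly
    closer than the second nearest (Lowe's ratio test).
    """
    desc_a = np.asarray(desc_a, dtype=float)
    desc_b = np.asarray(desc_b, dtype=float)
    if len(desc_b) < 2:
        raise ValueError("need at least two candidate descriptors")
    # Pairwise Euclidean distances, shape (len(desc_a), len(desc_b)).
    dists = np.linalg.norm(desc_a[:, None, :] - desc_b[None, :, :], axis=2)
    order = np.argsort(dists, axis=1)
    matches = []
    for i in range(len(desc_a)):
        best, second = order[i, 0], order[i, 1]
        if dists[i, best] < ratio * dists[i, second]:
            matches.append((i, int(best)))
    return matches


def random_tie_points(matches, n, seed=0):
    """Randomly pick up to n matches to use as tie points."""
    n = min(n, len(matches))
    if n == 0:
        return []
    rng = np.random.default_rng(seed)
    picked = rng.choice(len(matches), size=n, replace=False)
    return [matches[k] for k in sorted(picked)]
```

Random subsampling controls how many tie points are used but not where they fall in the image, which is relevant to the observation in Section 3.3 that tie point distribution matters more than tie point count.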

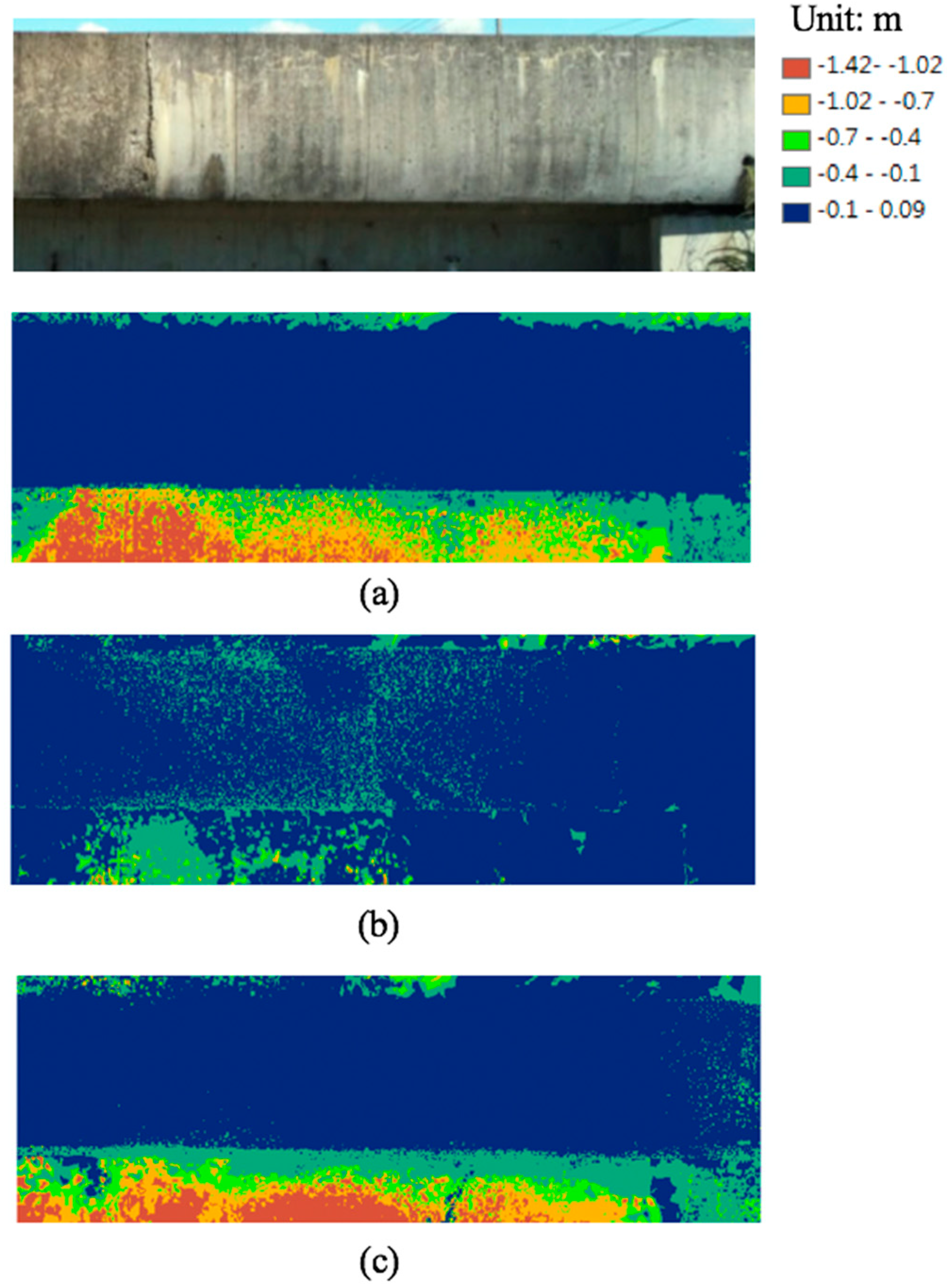

3.2. Orthorectification

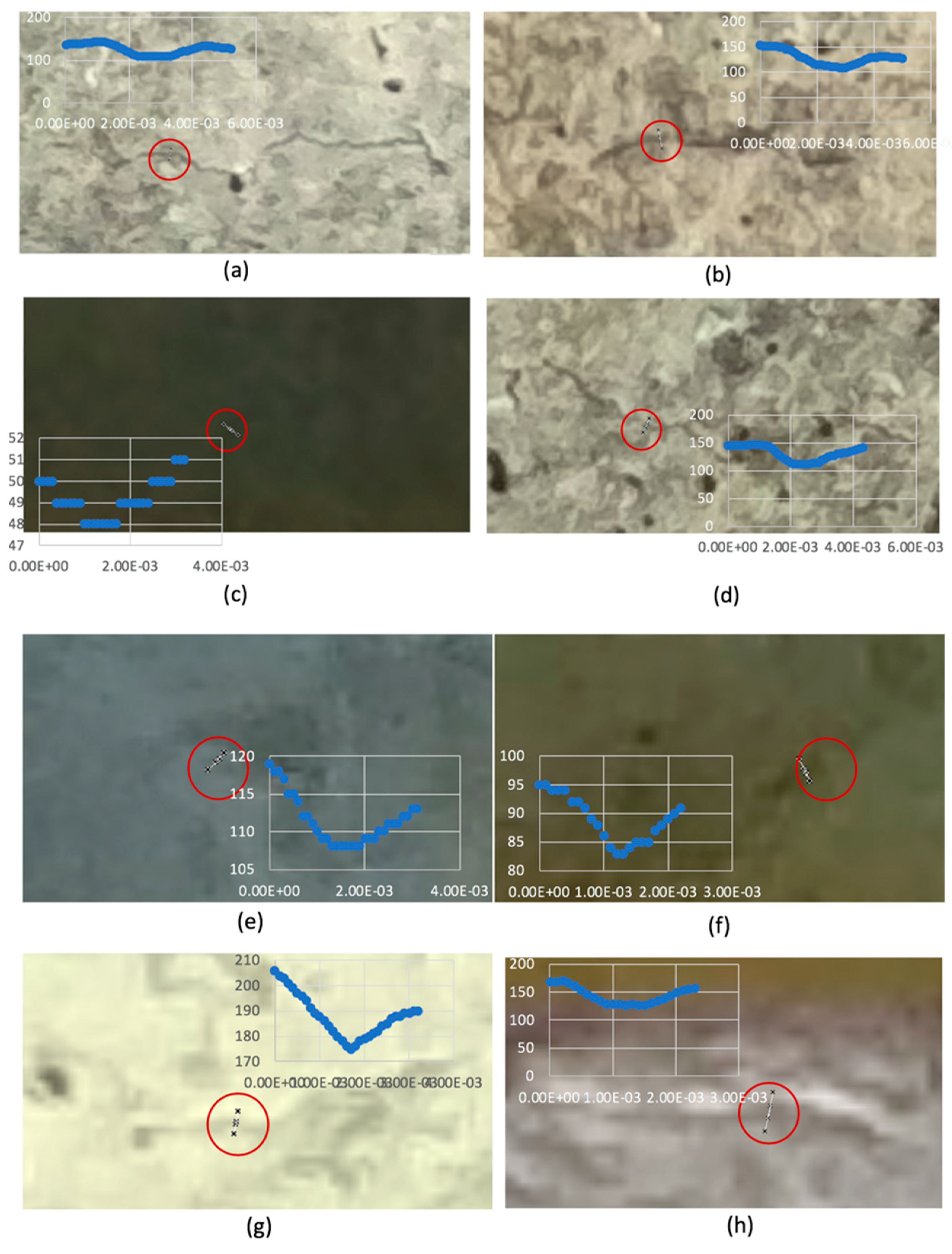

3.3. Crack Size Measurement

- (1) M1: Measure cracks manually in the ortho-images generated from manually selected tie points.

- (2) M2: Measure cracks with inflection points in the ortho-images generated from manually selected tie points.

- (3) M3: Measure cracks manually in the ortho-images generated from tie points obtained only from SIFT.

- (4) M4: Measure cracks with inflection points in the ortho-images generated from tie points obtained only from SIFT.

- (5) M5: Measure cracks manually in the ortho-images generated from tie points obtained from SIFT and LPS.

- (6) M6: Measure cracks with inflection points in the ortho-images generated from tie points obtained from SIFT and LPS.
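This section does not spell out how crack edges are located for the inflection-point methods (M2, M4, M6). One plausible reading, assumed here and not confirmed by the text, is that a gray-value profile is sampled perpendicular to the crack and each edge is placed at an inflection point of that profile, where the gradient is steepest. The hypothetical helper `crack_width_from_profile` implements that reading at whole-sample precision.

```python
import numpy as np


def crack_width_from_profile(profile, pixel_size_mm):
    """Estimate crack width from a gray-value profile sampled across a crack.

    Assumes the crack is darker than the surrounding concrete and that its
    two edges lie at the profile's inflection points: the steepest descent
    (entering the crack) and the steepest ascent (leaving it).
    """
    profile = np.asarray(profile, dtype=float)
    grad = np.gradient(profile)      # first derivative along the profile
    left = int(np.argmin(grad))      # steepest darkening: first edge
    right = int(np.argmax(grad))     # steepest brightening: second edge
    if right <= left:
        raise ValueError("profile does not show a dark crack between two edges")
    return (right - left) * pixel_size_mm


def sigmoid(t):
    return 1.0 / (1.0 + np.exp(-t))


if __name__ == "__main__":
    # Synthetic profile: concrete at 200 with a dark crack between samples 20 and 30.
    x = np.arange(50)
    profile = 200 - 150 * (sigmoid(x - 20) - sigmoid(x - 30))
    print(crack_width_from_profile(profile, pixel_size_mm=0.1))  # 1.0
```

Because edges are located to the nearest sample, the width resolution equals the ortho-image pixel size; refining each gradient extremum to sub-sample precision (for instance with a local parabola fit) would be a possible extension.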

- First, as mentioned above, M3 and M4 have large errors in block triangulation, so the three ortho-images required for cracks (b), (c), (d), (g), and (h) cannot be generated. Hence, as shown in Table 9, there are no M3 or M4 measurements for these cracks. With only three measurements available, we excluded these two methods from Table 8. These unavailable measurements are also omitted from Figure 12.

- Apart from M3 and M4, M6 (i.e., the most automated solution) has the worst results, as shown in Figure 12. As shown in Table 8, M6 yields a 1.13 mm RMSD, a 1.04 mm MD, and a 187.1% MRD, which suggests that the distribution of tie points matters more than their number. Moreover, tie points selected with SIFT are not accurate enough to establish an accurate 3D absolute coordinate system. Errors from tie point selection and DEM generation propagate into the final crack size measurements.

- In the experiment, the ortho-images are resampled to a 0.1 mm resolution, which is finer than the original pixel size of the images (about 0.5 mm). During interpolation, non-crack pixels near crack edges receive values from crack pixels and can then be mistaken for cracks. As a result, the crack widths measured from the ortho-images are consistently larger than the in situ measurements, and the same effect displaces the inflection points used for crack size measurement.

- In addition, as shown in Table 9, for cracks (c), (d), (e), (f), (g), and (h), the crack sizes measured from inflection points (M2, M4, and M6) differ noticeably more from the in situ measurements than those measured manually.

- Because the cracks were very thin, the three surveyors who collected the reference data had difficulty identifying the precise locations of crack edges. Their measurements differed by 0.05 mm to 0.3 mm, implying that it is hard to obtain highly accurate ground truth data for validating crack size measurements.

- Overall, these observations in Table 9 indicate that manual work may still be more reliable than automatic processes when measuring very small targets (i.e., cracks) from UAV images.

- Some more detailed observations are discussed below.

- As shown in Figure 11, cracks (a) and (b) are both wide and clearly visible in the images. Because their edges are relatively easy to identify, these two cracks show smaller differences from the in situ measurements. This also shows that crack depth and the illumination of the crack area strongly influence image clarity and, in turn, the accuracy of crack measurements.

- As shown in Figure 10, cracks (c), (e), and (f) are located on the lower part of the bridge, where low illumination results in low gray values and low contrast, making crack edges difficult to identify. Furthermore, the DEMs generated for the lower part of the bridge have large errors. As a result, these three cracks show larger differences from the in situ measurements than cracks on the well-lit upper part of the bridge.

- Furthermore, thin and shallow cracks such as (c), (e), and (f) appear blurry in the images, so determining their edges is difficult and introduces larger uncertainty. Thus, as shown in Table 9, these cracks are measured less accurately than the others with both the manual and automatic methods.

- Moreover, because crack (e) lies in an area of concrete erosion, its edges cannot be identified precisely, which also leads to a large difference, as shown in Figure 12. Eroded areas and concrete texture can cause large errors in crack size measurements.

- In general, based on our experience, high-resolution images are always preferable. Multiple images taken from different orientations to measure the same cracks would also be helpful and more reliable.
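The widening effect of resampling described above can be reproduced on a single line of pixels. The sketch assumes linear interpolation (the interpolation method used for the ortho-images is not stated here) and counts every resampled sample darker than the background as potentially crack-like; `darkened_extent_mm` is a hypothetical helper.

```python
import numpy as np


def darkened_extent_mm(values, pixel_mm, new_pixel_mm, background):
    """Length (mm) of the region darker than background, before and after resampling.

    values: gray values of the original pixels along a line crossing a crack.
    Each sample below `background` counts as one pixel of its own size.
    """
    values = np.asarray(values, dtype=float)
    x_old = np.arange(len(values)) * pixel_mm
    x_new = np.arange(0.0, x_old[-1] + 1e-9, new_pixel_mm)
    resampled = np.interp(x_new, x_old, values)  # linear interpolation
    before = np.count_nonzero(values < background) * pixel_mm
    after = np.count_nonzero(resampled < background) * new_pixel_mm
    return before, after


if __name__ == "__main__":
    # One dark 0.5 mm pixel (gray value 80) on a uniform background of 200,
    # resampled to 0.1 mm as in the experiment.
    before, after = darkened_extent_mm([200, 200, 200, 80, 200, 200, 200], 0.5, 0.1, 200)
    print(f"{before:.1f} mm before, {after:.1f} mm after resampling")
    # 0.5 mm before, 0.9 mm after resampling
```

Whether the widened band is actually classified as crack depends on the threshold or edge criterion, but every interpolated sample between a crack pixel and its neighbors is pulled toward the crack value, which is consistent with the systematically larger widths noted above.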

4. Suggestions

- In our experiment, the cracks were very thin, and some were close to the image resolution, so identifying crack edges was very difficult for both manual measurement and image processing. Higher-resolution cameras are therefore needed to capture clearer images for crack size measurement.

- Images of cracks taken under low illumination have low contrast, which increases the difficulty and uncertainty of identifying crack edges. Lighting equipment should therefore be included.

- Identifying the edges of cracks located in concrete erosion areas is also difficult; multiple images taken from different orientations can help reduce the uncertainty.

- Because images taken at closer range have higher resolution, the distance between the UAV and the bridge could be shortened if additional safety equipment were installed.
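The link between flying distance and resolution follows from the pinhole camera model: the ground sampling distance (GSD), the object-space size of one pixel, equals the camera-to-surface distance multiplied by the sensor pixel pitch and divided by the focal length. The sketch assumes the camera faces the bridge surface squarely; the 8.8 mm focal length and 2.4 μm pixel pitch in the example are hypothetical values, not the specifications of the camera used in the study.

```python
def ground_sampling_distance_mm(distance_m, focal_length_mm, pixel_pitch_um):
    """Object-space footprint of one pixel (mm) for a camera viewing the surface head-on.

    Pinhole approximation: GSD = distance * pixel_pitch / focal_length.
    """
    distance_mm = distance_m * 1000.0
    pixel_pitch_mm = pixel_pitch_um / 1000.0
    return distance_mm * pixel_pitch_mm / focal_length_mm


def pixels_across_crack(crack_width_mm, gsd_mm):
    """Number of pixels spanning a crack of the given width."""
    return crack_width_mm / gsd_mm


if __name__ == "__main__":
    # Hypothetical camera: 8.8 mm lens, 2.4 um pixels (not the study's camera).
    for d in (0.5, 1.0, 2.0, 3.0):
        gsd = ground_sampling_distance_mm(d, 8.8, 2.4)
        print(f"{d:.1f} m: GSD {gsd:.3f} mm, "
              f"{pixels_across_crack(0.35, gsd):.1f} px across a 0.35 mm crack")
```

Halving the distance halves the GSD. With a pixel footprint of about 0.5 mm, as reported for the original images, a 0.35 mm crack such as crack (e) spans less than one pixel, which helps explain why such cracks are hard to delineate.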

5. Conclusions

- (1) We propose a safe and efficient concrete crack inspection method using UAVs.

- (2) We register close-up images to long-range images to establish the absolute coordinates of the cracks.

- (3) Compared with the in situ measurements from three surveyors, the best proposed approach measures crack widths with a 0.18 mm root mean square difference (RMSD), a 0.15 mm mean difference, and a 25.41% mean relative difference.

- (4) Manual crack size measurement on ortho-images generated with automatic tie points from SIFT combined with LPS gives an acceptable result, with a 0.46 mm RMSD, a 0.40 mm mean difference, and a 72.42% mean relative difference.

- (5) Even for surveyors collecting in situ measurements, identifying crack edges remains challenging, so the results may still be affected by subjective judgment.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Exposure Condition | Crack Width (in.) |
|---|---|
| Dry air or protective membrane | 0.016 |
| Humidity, moist air, soil | 0.012 |
| Deicing chemicals | 0.007 |
| Seawater, seawater spray, wetting, and drying | 0.006 |
| Water-retaining structures | 0.004 |

| Crack Width x (in.) | Method of Maintenance |
|---|---|
| 0.012 < x < 0.025 | The beam should be repaired by filling the cracks and coating the end 4 ft of the beam with an approved sealant. |
| 0.025 < x < 0.05 | The cracks should be filled by epoxy injection, and the end 4 ft of the beam web should be coated with an approved sealant. |
| x > 0.05 | The beam should be rejected unless its structural capacity and long-term durability are shown to be sufficient. |
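The thresholds in the two tables above are given in inches, whereas crack widths in this study are reported in mm. A small lookup can convert a measured width (1 in. = 25.4 mm) and check it against both tables. The helper names and the handling of boundary values are assumptions, and the actions are paraphrased from the maintenance table.

```python
MM_PER_INCH = 25.4

# Crack widths (in.) by exposure condition, from the first table above.
TOLERABLE_WIDTH_IN = {
    "dry air or protective membrane": 0.016,
    "humidity, moist air, soil": 0.012,
    "deicing chemicals": 0.007,
    "seawater, seawater spray, wetting, and drying": 0.006,
    "water-retaining structures": 0.004,
}

# Width bands (in.) and paraphrased actions from the maintenance table above.
# Assumption: a width exactly on a boundary is assigned to the lower band,
# because the table uses strict inequalities and leaves boundaries open.
MAINTENANCE_BANDS = [
    (0.012, 0.025, "fill cracks; coat beam end (4 ft) with sealant"),
    (0.025, 0.05, "epoxy injection; coat beam-web end (4 ft) with sealant"),
    (0.05, float("inf"), "reject unless capacity and durability are demonstrated"),
]


def mm_to_in(width_mm):
    return width_mm / MM_PER_INCH


def exceeds_tolerable(width_mm, exposure):
    """True if a measured width (mm) exceeds the listed width for the exposure."""
    return mm_to_in(width_mm) > TOLERABLE_WIDTH_IN[exposure]


def maintenance_action(width_mm):
    """Return the maintenance action for a width (mm), or None below 0.012 in."""
    width_in = mm_to_in(width_mm)
    for low, high, action in MAINTENANCE_BANDS:
        if low < width_in <= high:
            return action
    return None


if __name__ == "__main__":
    for w in (0.2, 0.5, 1.0, 1.5):
        print(f"{w} mm = {mm_to_in(w):.4f} in -> {maintenance_action(w)}")
```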

Total image unit-weight RMSE: 1.2 μm

| Component | Control Point RMSE | Check Point RMSE |
|---|---|---|
| Ground X (mm) | - | 6.4 |
| Ground Y (mm) | - | 6.6 |
| Ground Z (mm) | - | 39.1 |
| Image X (μm) | 0.9 | 2.4 |
| Image Y (μm) | 1.3 | 1.9 |

Total image unit-weight RMSE: 68.1 μm

| Component | Control Point RMSE | Check Point RMSE |
|---|---|---|
| Ground X (mm) | - | 152 |
| Ground Y (mm) | - | 387.8 |
| Ground Z (mm) | - | 290.9 |
| Image X (μm) | 5448.6 | 178.6 |
| Image Y (μm) | 1222.7 | 744.5 |

Total image unit-weight RMSE: 11 μm

| Component | Control Point RMSE | Check Point RMSE |
|---|---|---|
| Ground X (mm) | - | 19.9 |
| Ground Y (mm) | - | 4.3 |
| Ground Z (mm) | - | 11 |
| Image X (μm) | 8.5 | 1.6 |
| Image Y (μm) | 5 | 1.6 |

| Tie Point Generation \ Crack Size Measurement | Manual Measurement | Measurement with Inflection Point |
|---|---|---|
| Manual tie point selection | M1 | M2 |
| Tie point generation with SIFT | M3 | M4 |
| Tie point generation with SIFT-LPS | M5 | M6 |

| Crack width (mm) | (a) | (b) | (c) | (d) | (e) | (f) | (g) | (h) |
|---|---|---|---|---|---|---|---|---|
| Surveyor 1 | 1.4 | 1.4 | 0.55 | 0.9 | 0.35 | 0.45 | 0.55 | 0.6 |
| Surveyor 2 | 1.3 | 1.2 | 0.5 | 0.95 | 0.35 | 0.45 | 0.45 | 0.65 |
| Surveyor 3 | 1.4 | 1.1 | 0.6 | 1 | 0.35 | 0.45 | 0.4 | 0.65 |
| Average | 1.37 | 1.23 | 0.55 | 0.95 | 0.35 | 0.45 | 0.47 | 0.63 |

| Method | Mean Difference (mm) | Mean Relative Difference | Root Mean Square Difference (mm) |
|---|---|---|---|
| M1 | 0.15 | 25.41% | 0.18 |
| M2 | 0.80 | 147.37% | 0.90 |
| M5 | 0.40 | 72.42% | 0.46 |
| M6 | 1.04 | 187.1% | 1.13 |

| Crack | (a) | (b) | (c) | (d) | (e) | (f) | (g) | (h) |
|---|---|---|---|---|---|---|---|---|
| M1 (mm) | 1.5 | 1.4 | 0.6 | 1.2 | 0.7 | 0.5 | 0.5 | 0.8 |
| AD (mm) | 0.13 | 0.17 | 0.05 | 0.25 | 0.35 | 0.05 | 0.03 | 0.17 |
| RD | 9.76% | 13.51% | 9.09% | 26.32% | 100% | 11.11% | 7.14% | 26.32% |
| M2 (mm) | 1.5 | 1.67 | 1.67 | 2.22 | 1.64 | 0.9 | 1.55 | 1.24 |
| AD (mm) | 0.13 | 0.44 | 1.12 | 1.27 | 1.29 | 0.45 | 1.08 | 0.61 |
| RD | 9.76% | 35.41% | 203.64% | 133.68% | 368.57% | 100% | 232.14% | 95.79% |
| M3 (mm) | 1.5 | - | - | - | 1.3 | 0.9 | - | - |
| AD (mm) | 0.13 | - | - | - | 0.95 | 0.45 | - | - |
| RD | 9.73% | - | - | - | 271.14% | 100% | - | - |
| M4 (mm) | 2.08 | - | - | - | 2.38 | 1 | - | - |
| AD (mm) | 0.71 | - | - | - | 2.03 | 0.55 | - | - |
| RD | 51.82% | - | - | - | 580% | 122.22% | - | - |
| M5 (mm) | 1.4 | 1.7 | 1.4 | 1.3 | 0.9 | 0.7 | 0.9 | 0.9 |
| AD (mm) | 0.03 | 0.47 | 0.85 | 0.35 | 0.55 | 0.25 | 0.43 | 0.27 |
| RD | 2.44% | 37.84% | 154.55% | 36.84% | 157.14% | 55.56% | 92.86% | 42.11% |
| M6 (mm) | 1.42 | 2.33 | 1.67 | 2.33 | 1.67 | 1.39 | 2.14 | 1.33 |
| AD (mm) | 0.05 | 1.10 | 1.12 | 1.38 | 1.32 | 0.94 | 1.67 | 0.70 |
| RD | 3.90% | 88.92% | 203.64% | 145.26% | 377.14% | 208.89% | 358.57% | 110.47% |
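The summary statistics can be recomputed from the per-crack values. The sketch assumes that each crack's reference width is the average of the three surveyors, that "mean difference" is the mean absolute difference, and that the relative difference is the absolute difference divided by the reference width; under these assumptions it reproduces the M1 and M2 rows of the summary table to two decimals. The helper names are hypothetical.

```python
from math import sqrt

# In situ widths (mm) from the three surveyors for cracks (a) to (h).
SURVEYORS = [
    [1.4, 1.4, 0.55, 0.9, 0.35, 0.45, 0.55, 0.6],
    [1.3, 1.2, 0.5, 0.95, 0.35, 0.45, 0.45, 0.65],
    [1.4, 1.1, 0.6, 1.0, 0.35, 0.45, 0.4, 0.65],
]
# Widths (mm) measured with methods M1 and M2, same crack order.
MEASURED = {
    "M1": [1.5, 1.4, 0.6, 1.2, 0.7, 0.5, 0.5, 0.8],
    "M2": [1.5, 1.67, 1.67, 2.22, 1.64, 0.9, 1.55, 1.24],
}


def reference_widths(surveyors):
    """Average the surveyors' readings crack by crack."""
    return [sum(col) / len(col) for col in zip(*surveyors)]


def surveyor_spread(surveyors):
    """Max minus min reading per crack, a rough indicator of ground-truth uncertainty."""
    return [max(col) - min(col) for col in zip(*surveyors)]


def summary_stats(measured, reference):
    """Mean absolute difference (mm), mean relative difference (%), RMSD (mm)."""
    abs_diff = [abs(m - r) for m, r in zip(measured, reference)]
    rel_diff = [d / r for d, r in zip(abs_diff, reference)]
    n = len(abs_diff)
    md = sum(abs_diff) / n
    mrd = 100.0 * sum(rel_diff) / n
    rmsd = sqrt(sum(d * d for d in abs_diff) / n)
    return md, mrd, rmsd


if __name__ == "__main__":
    ref = reference_widths(SURVEYORS)
    for name, values in MEASURED.items():
        md, mrd, rmsd = summary_stats(values, ref)
        print(f"{name}: MD {md:.2f} mm, MRD {mrd:.2f}%, RMSD {rmsd:.2f} mm")
    # M1: MD 0.15 mm, MRD 25.41%, RMSD 0.18 mm
    # M2: MD 0.80 mm, MRD 147.37%, RMSD 0.90 mm
```

Because the relative difference divides by the reference width, thin cracks such as (e) at 0.35 mm dominate the mean relative difference even when their absolute errors are modest.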

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, H.-Y.; Huang, C.-Y.; Wang, C.-Y. Measurement of Cracks in Concrete Bridges by Using Unmanned Aerial Vehicles and Image Registration. Drones 2023, 7, 342. https://doi.org/10.3390/drones7060342
