1. Introduction

Recently, unmanned aerial vehicles (UAVs) have been extensively utilized in both civilian and military applications [1]. While performing a task, a UAV must continually verify its position through its navigation system in order to make adjustments. There are currently three main categories of navigation methods: inertial navigation [2], satellite navigation, and vision-based navigation [3,4]. Inertial navigation accumulates positioning errors over time, resulting in poor long-term accuracy [5]. Satellite navigation is susceptible to interference because it relies on radio communication. Therefore, selecting a navigation method appropriate to the specific task is crucial. At present, navigation in GNSS (global navigation satellite system)-denied environments relies heavily on vision sensors, lidar, and inertial units [6]. In recent years, with the rapid advancement of computer vision, vision-based navigation has emerged as a promising research direction, owing to its strong anti-interference ability and its capability to capture richer environmental information. UAV visual navigation matches images of the ground acquired by an on-board image sensor against stored reference images to obtain the flight position and other data. Image matching is therefore critical in UAV visual navigation, as it directly influences the performance of the navigation system.

UAV aerial images and satellite imagery are typically acquired with different sensors and often in different seasons. The non-linear differences arising from variations in imaging time and imaging mechanism pose challenges for image matching. UAV aerial images are digital images captured by continuously photographing the ground with a camera mounted on a UAV during flight; the camera's optical lens and sensor convert the light from the scene into digital signals. Satellite imagery, on the other hand, is generated by remote sensing sensors on satellites that continuously scan the ground and convert the light into digital signals using optical lenses and sensors. These distinct imaging mechanisms result in differences in data quality, resolution, and remote sensing information. Seasonal changes further contribute to disparities between UAV aerial images and satellite images: lighting, tone, texture, and shape may differ owing to changes in vegetation growth and color, ground object coverage, and weather conditions. For instance, in summer, dense vegetation may fully or partially occlude ground objects, whereas in winter, snow and ice cover may significantly alter their texture and shape. Such seasonal variations can obscure or alter keypoints that would otherwise correspond between the two images, rendering many feature-based image matching methods ineffective.

With the rapid advancement of deep learning, particularly the widespread adoption of convolutional neural networks (CNNs) [7,8], CNN-based image matching methods have become commonplace, including for UAV aerial image matching [9]. To cope with illumination changes, scale transformations, complex backgrounds, and similar difficulties, Ref. [10] presented a UAV visual positioning algorithm based on A-YOLOX that incorporates deep learning methods. However, there is currently a dearth of publicly available, realistic UAV aerial image datasets that capture seasonal changes, especially datasets containing winter scenes with snow. This scarcity of data severely hampers research on matching UAV aerial images with satellite imagery across seasons.

Research on matching UAV aerial images with satellite imagery across seasons enables UAV position estimation in complex seasonal environments. This improves UAV navigation in such environments and provides technical support for practical applications in a variety of challenging conditions. The objective of this paper is to address the limitations of previous studies, particularly under complex seasonal changes such as snow-covered UAV aerial images. To this end, we propose a block matching method for UAV aerial images and satellite imagery in different seasons, based on a deep learning similarity measurement network.

The contributions of this paper are summarized below:

This paper is one of the few works dedicated to the challenging task of matching UAV aerial images with satellite imagery under seasonal changes. To the best of our knowledge, limited research has been conducted in this area, making our work a valuable contribution to the field;

A method is proposed for estimating the scaling factor of UAV aerial images, with the aim of mitigating the influence of scale differences between UAV aerial images and satellite images on the matching results;

A two-channel deep convolutional neural network combined with a convolutional block attention module is proposed to learn season-specific feature representations of heterogeneous images, particularly snowy UAV aerial images and satellite imagery.

The rest of this paper is organized as follows:

Section 2 reviews the related literature, with a particular focus on image matching methods. In Section 3, we elaborate on the design of our proposed method, 2chADCNN, including the scale transformation of UAV aerial images, the network model structure, and the parameter settings. Section 4 presents the experimental details and reports the experimental results. In Section 5, we evaluate the performance of our method through comparative experiments. Finally, Section 6 concludes the study and discusses the experimental findings.

2. Related Works

Image matching refers to establishing reliable feature correspondences between two or more images; it is widely used in tasks such as image alignment, 3D reconstruction, and target localization and recognition [11]. As a pre-processing step, a correct image matching result is crucial for 3D reconstruction and other computer vision tasks.

Existing image matching methods can be classified into three categories: area-based, feature-based, and learning-based methods [12]. Area-based methods [13,14] typically slide a small template image over a larger target image, compute their similarity at each position, and select the most similar position. Traditional area-based methods operating on grayscale information usually employ pixel-by-pixel comparisons, such as SAD (sum of absolute differences) [15], SSD (sum of squared differences) [16], and NCC (normalized cross-correlation) [17]. These methods are simple and fast to compute; however, their accuracy drops significantly in the presence of image distortion, illumination differences, or different sensors. They also require the template and the target image to have the same scale. Because the similarity measure is the core of template matching, it is crucial in practice to choose one suited to the specific application scenario and requirements.
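As an illustration of the three classical measures named above, the following minimal Python sketch computes SAD, SSD, and NCC between two equally sized grayscale patches given as flat lists of intensities. The function names are our own, and the code is not taken from any of the cited works.

```python
import math


def sad(a, b):
    """Sum of absolute differences: 0 for identical patches, larger is worse."""
    return sum(abs(x - y) for x, y in zip(a, b))


def ssd(a, b):
    """Sum of squared differences: penalizes large deviations more than SAD."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def ncc(a, b):
    """Normalized cross-correlation in [-1, 1]; 1 means a perfect linear match."""
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    da = [x - mean_a for x in a]
    db = [y - mean_b for y in b]
    num = sum(x * y for x, y in zip(da, db))
    den = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    return num / den if den else 0.0  # flat patches have no defined correlation


patch = [10, 20, 30, 40, 50, 60]
brighter = [x + 50 for x in patch]  # same content with a global brightness offset
print(sad(patch, brighter), ssd(patch, brighter), round(ncc(patch, brighter), 6))
# 300 15000 1.0
```

The brightness offset inflates SAD and SSD while NCC stays at 1, which is why NCC tolerates linear gain and offset changes better; none of the three measures, however, models the non-linear seasonal differences discussed in Section 1.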

Feature-based matching methods [18] extract certain features from two or more images, describe the extracted features in a specific way, and compare the descriptions with a specific metric function to determine whether they match. Common image features include point, line, and surface features. Feature-based matching methods can be further divided into traditional and learning-based methods. The traditional matching process based on feature points generally includes three steps: feature point detection, feature description, and feature matching. In 2004, David Lowe [19] developed the SIFT (scale-invariant feature transform) algorithm, which addresses the lack of scale invariance of the Harris [20] corner detector. SIFT can detect feature points other than corners while remaining invariant to translation, rotation, and scaling. Subsequently, the authors of [21,22] proposed solutions to the high time complexity of SIFT. More recently, Li Jiayuan [23] proposed the RIFT algorithm, which improves the repeatability and accuracy of feature point detection through a feature description method based on local orientation statistics and a feature matching method based on rotation invariance. Although these traditional methods perform well, they still struggle with large viewing angles, temporal differences, and scale and illumination variations between images [24].
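The feature matching step of this traditional pipeline is commonly implemented as a nearest-neighbour search over descriptors. The sketch below adds the distance-ratio test that Lowe proposed together with SIFT [19]: a match is kept only if its nearest neighbour is clearly closer than the second nearest. The 0.8 threshold and the toy 2-D descriptors are illustrative assumptions; real SIFT descriptors are 128-dimensional.

```python
import math


def match_descriptors(desc_a, desc_b, ratio=0.8):
    """Match each descriptor in desc_a to desc_b using a nearest-neighbour ratio test.

    Returns a list of (index_in_a, index_in_b) pairs.
    """
    matches = []
    for i, d in enumerate(desc_a):
        # Euclidean distance to every candidate, sorted from closest to farthest
        dists = sorted((math.dist(d, e), j) for j, e in enumerate(desc_b))
        if len(dists) < 2:
            continue
        (best, j_best), (second, _) = dists[0], dists[1]
        if best < ratio * second:  # reject ambiguous matches
            matches.append((i, j_best))
    return matches


a = [(0.0, 0.0), (5.0, 5.0), (2.5, 2.5)]
b = [(0.1, 0.0), (5.0, 5.1), (10.0, 10.0)]
print(match_descriptors(a, b))  # [(0, 0), (1, 1)]
```

The third descriptor lies almost halfway between two candidates, so the ratio test discards it instead of producing an unreliable correspondence.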

In recent years, motivated by the rapid advancement of deep learning, researchers have proposed various learning-based methods to overcome the limitations of traditional feature matching techniques. These approaches fall into three categories.

The first type comprises learnable feature point detection approaches, such as Key.Net [12] and TILDE [25]. Among these, TILDE designs a repeatable keypoint detection framework that can extract feature points robust to changes in illumination and weather conditions. However, the training of candidate feature points in this framework relies on repeatable keypoints extracted by the SIFT algorithm and on prior knowledge to identify possible feature points.

The second type comprises learnable local descriptor extraction approaches, in which the key to feature description is measuring the similarity between image patches. Depending on whether a metric layer is present, these methods can be divided into two categories: metric learning and descriptor learning. Metric learning typically employs a sigmoid function in the last layer to convert the continuous-valued output into a matching probability between 0 and 1. In 2015, Han [26] proposed MatchNet, a similarity measurement network that uses two branch networks for feature extraction and then merges the two feature vectors through a metric layer to produce a similarity score. MatchNet is trained with a cross-entropy loss on matching and non-matching pairs, which drives matching pairs toward high similarity scores and non-matching pairs toward low ones, enabling effective image similarity measurement. The study in [27] improved on the two-channel model of DeepCompare [28] and proposed BBS-2chDCNN, which realizes satellite image matching with a deep convolutional neural network by learning the matching patterns between satellite images. This method is suitable for matching heterogeneous, multi-temporal satellite images with different resolutions. Descriptor learning methods without a metric layer include PN-Net [29], DeepCD [30], L2-Net [31], and HardNet [32]. Such models output feature descriptors that can directly replace traditional descriptors in some applications, while similarity is still measured in the traditional way, by computing the distance between descriptors. Because the quality of the extracted descriptors directly determines matching performance, the design of the loss function and the choice of the training-data input format are critical.

The third type comprises end-to-end deep learning-based image matching approaches. End-to-end methods such as LIFT [33], D2-Net [34], and LF-Net [35] jointly train the feature point detection and feature description networks, automating both steps. This effectively improves the stability of keypoints and the accuracy of features. SuperPoint [36] performs feature point detection using an encoder–decoder network with two decoders, one for feature localization and one for feature description. It is first trained on synthetic images and then adapted to real images in a self-supervised manner, avoiding the need for large numbers of manual annotations. Because it extracts the positions and descriptors of feature points simultaneously, it achieves improved feature extraction performance. SuperGlue [37] employs a graph neural network (GNN) to learn correspondences between image pairs and can be used to match the feature points and descriptors extracted by SuperPoint. Instead of sequentially performing feature detection, description, and matching, LoFTR [38] first establishes pixel-level dense matches at a coarse level and then refines good matches at a fine level. Considering that CNN-based methods may lose much contextual information, Ref. [39] proposed a transformer-based network that extracts more contextual information, which effectively improved matching accuracy.

In recent years, an increasing number of methods have been proposed for UAV aerial image matching. Ref. [40] matched UAV aerial images with Google satellite imagery using SURF feature points and RANSAC-based feature matching. To address redundant points in traditional matching algorithms based on local invariant features, Ref. [41] proposed a UAV aerial image matching algorithm based on CenSurE-star, in which the CenSurE-star filter extracts the feature points. Ref. [42] searched for the matching positions of UAV aerial images on satellite imagery using mutual information as the similarity measure. Meanwhile, Ref. [43] used normalized cross-correlation to calculate similarity, whereas [44] improved upon mutual information by using the normalized information distance as the similarity measure, achieving more accurate matching results. Additionally, Ref. [45] proposed an image matching framework based on region division, using an improved SIFT algorithm that merges detected Harris corners into the keypoint sets. Notably, Ref. [45] addressed the matching of UAV aerial images across seasons but ultimately used remote sensing images to simulate UAV aerial images. To cope with changes in weather, lighting conditions, and season, Ref. [46] proposed an image matching framework based on a CNN Siamese network trained with contrastive learning, but it did not effectively handle ice and snow cover in winter. Consequently, research on snow-covered winter UAV aerial images remains limited, making further investigation in this area highly relevant.

Achieving absolute visual positioning of UAVs requires overcoming the differences in image features that seasonal changes cause between heterogeneous images. Traditional methods cannot adequately handle the nonlinear differences introduced by seasonal changes. Deep learning-based methods, by contrast, learn feature representations at different levels through convolutional layers at different depths, making them more robust to seasonal changes. However, the choice of training samples significantly affects the matching performance of deep learning-based methods, and publicly available, authentic UAV aerial image datasets with seasonal changes are currently lacking in UAV absolute visual localization research, particularly datasets that include snowy winter images. To advance research in this field, this paper focuses on matching UAV aerial images with satellite images (including snowy winter images) across seasons.

3. Proposed Methodology

Seasonal changes pose significant challenges for matching UAV aerial images with satellite imagery, particularly in snow-covered scenes, where UAV aerial images often lack the detail present in images from other seasons (see Figure 1).

Based on the two-channel deep convolutional neural network in [27], this paper proposes a patch matching method, dubbed 2chADCNN, for UAV aerial images and satellite imagery in different seasons, to address the challenge of UAV visual positioning under seasonal changes. A flowchart of the proposed algorithm is illustrated in Figure 2.

First, the scaling factor of UAV aerial images is estimated from the GSD (ground sampling distance) [47] of both the UAV aerial images and the satellite imagery, and the UAV aerial images are scaled accordingly so that matching is performed at a consistent scale. Next, attention modules are integrated into the two-channel deep convolutional neural network to place additional emphasis on the low-level and high-level features of the images. The resulting 2chADCNN similarity measurement network learns similarity measures between UAV aerial image patches and satellite sub-image patches in different seasonal scenes. Finally, a template matching scheme with a traversal search strategy computes and compares the similarity between the UAV aerial image patch and each satellite sub-image patch to complete the image matching task.

3.1. Problem Formulation

In this study, we aim to solve the problem of UAV visual positioning in GNSS-denied environments via image matching. Below, we formalize the problem and outline the general processing flow.

Let $I_U$ be a UAV aerial image with dimensions $w \times h$, and let $I_S$ be a satellite image. To eliminate the scale disparity between UAV aerial images and satellite imagery, a scaling factor $r$ is used to resize $I_U$ to $I_U'$ (with dimensions $w' \times h'$), which serves as a template for searching for the corresponding area in the larger satellite image $I_S$ (with dimensions of 256 × 256, where 256 is greater than both $w'$ and $h'$). $I_U'$ is combined with a sub-image $I_S^{(x,y)}$ of $I_S$ to form a pair of image patches $(I_U', I_S^{(x,y)})$, where $I_S^{(x,y)}$ is obtained by sliding a window over $I_S$ with a fixed step size of $s$ pixels and $(x, y)$ denotes the top-left corner of the window. The window size is set to match the size of the resized UAV aerial image $I_U'$.

Next, each two-channel pair of image patches $(I_U', I_S^{(x,y)})$ is fed into the trained 2chADCNN network described in Section 3.3 to obtain a similarity score $S(I_U', I_S^{(x,y)})$. Finally, the position of the sub-image patch with the highest similarity is found by comparing the scores:

$$(x^*, y^*) = \arg\max_{x,\,y \,\in\, \{0,\, s,\, 2s,\, \dots\}} S\left(I_U', I_S^{(x,y)}\right), \qquad 0 \le x \le 256 - w', \quad 0 \le y \le 256 - h'$$

where $s$ is the step size (5 pixels, chosen empirically). The position $(x^*, y^*)$ is the final location of $I_U'$ in the satellite image $I_S$.
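The traversal search above can be sketched as follows. The `score` argument stands in for the trained 2chADCNN; here a negative SSD is used purely as a hypothetical placeholder so that the example runs without a trained model. Images are nested lists of grayscale values, `(x, y)` is the top-left corner of the window, and the step defaults to the 5 pixels used in this paper.

```python
def neg_ssd(template, window):
    """Placeholder similarity (higher is better); NOT the 2chADCNN score."""
    return -sum((t - w) ** 2
                for row_t, row_w in zip(template, window)
                for t, w in zip(row_t, row_w))


def locate(template, satellite, score=neg_ssd, step=5):
    """Slide a template-sized window over `satellite` with stride `step`
    and return the top-left corner (x, y) with the highest score."""
    th, tw = len(template), len(template[0])
    sh, sw = len(satellite), len(satellite[0])
    best_score, best_pos = float("-inf"), None
    for y in range(0, sh - th + 1, step):
        for x in range(0, sw - tw + 1, step):
            window = [row[x:x + tw] for row in satellite[y:y + th]]
            s = score(template, window)
            if s > best_score:
                best_score, best_pos = s, (x, y)
    return best_pos, best_score


# Toy 20 x 20 "satellite image" and a 5 x 5 template cut out at (x=10, y=5)
sat = [[(7 * x + 13 * y) % 17 for x in range(20)] for y in range(20)]
tpl = [row[10:15] for row in sat[5:10]]
print(locate(tpl, sat))  # ((10, 5), 0)
```

Swapping `neg_ssd` for a function that stacks the two patches and runs the trained network turns this into the search described above; a larger step trades localization precision for fewer network evaluations.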

The following sections present the scaling procedure and the 2chADCNN architecture.

3.2. Scaling

The scale disparity between UAV aerial images and satellite images is a common challenge in UAV absolute visual positioning. To address this issue, this paper proposes a scaling transformation method for UAV aerial images.

GSD is a metric that is very useful for photogrammetry and measurement, especially in UAV mapping and surveying. It is the distance on the ground represented by one pixel, i.e., the ground distance between the centers of two adjacent pixels in an image. GSD depends on the camera focal length, the pixel size of the camera sensor, and the distance between the camera and the object. The larger the GSD, the lower the spatial resolution of the image and the fewer details are visible. In this paper, we calculate the scaling factor of the UAV aerial image from the ratio of the GSDs of the UAV aerial image and the satellite imagery.

The scaling factor $r$ of the UAV aerial image $I_U$ is calculated as

$$r = \frac{GSD_U}{GSD_S}$$

where $GSD_U$ [48] is the GSD of the UAV aerial images captured by the on-board camera of the drone and $GSD_S$ [49] is the GSD of the satellite imagery:

$$GSD_U = \frac{H \cdot d}{f}, \qquad GSD_S = \frac{L_e \cos\varphi}{256 \cdot 2^{l}}$$

where $f$ is the focal length of the lens, $H$ is the distance from the center of the camera to the ground, and $d$ is the pixel size of the camera. $\varphi$ is the latitude of the geographic center of the area where the UAV collects data, $L_e$ is the Earth's equatorial length, 256 is the width of a satellite map tile in pixels, and $l$ is the satellite imagery level (in this paper, $l = 15$).

The UAV aerial image is scaled by $r$ so that its scale is consistent with that of the satellite imagery, which improves the accuracy of matching between these two heterogeneous modalities. Let the resized UAV aerial image be $I_U'$ with size $w' \times h'$, obtained by

$$w' = r \cdot w, \qquad h' = r \cdot h$$

where $w$ and $h$ are the width and height of the image $I_U$ before resizing, and $w'$ and $h'$ are the width and height of the image $I_U'$ after resizing.
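The scale normalization can be sketched in a few lines of Python, computing GSD_U = H·d/f, GSD_S = L_e·cos φ/(256·2^l), and r = GSD_U/GSD_S. The sketch assumes the common web-map tiling, in which a tile at level l is 256 pixels wide, and takes the Earth's equatorial length as the WGS 84 circumference. The camera parameters in the usage example (300 m height, 2.4 µm pixels, 8.8 mm focal length, 4000 × 3000 image) are hypothetical values chosen only for illustration, not those of the DJI cameras used in this study.

```python
import math

EARTH_EQUATORIAL_LENGTH_M = 40_075_016.686  # 2 * pi * 6378137 m (WGS 84)
TILE_SIZE_PX = 256  # assumed pixels per web-map tile


def gsd_uav(height_m, pixel_size_m, focal_length_m):
    """GSD of the UAV image (m/pixel): height * pixel size / focal length."""
    return height_m * pixel_size_m / focal_length_m


def gsd_satellite(lat_deg, level):
    """GSD (m/pixel) of a web-map satellite tile at the given level."""
    return (EARTH_EQUATORIAL_LENGTH_M * math.cos(math.radians(lat_deg))
            / (TILE_SIZE_PX * 2 ** level))


def rescale_size(w, h, gsd_u, gsd_s):
    """Scaling factor r = GSD_U / GSD_S and the resized size (w', h')."""
    r = gsd_u / gsd_s
    return r, max(1, round(w * r)), max(1, round(h * r))


# Hypothetical camera and flight parameters; latitude roughly that of Shenyang
r, w_new, h_new = rescale_size(4000, 3000,
                               gsd_uav(300.0, 2.4e-6, 8.8e-3),
                               gsd_satellite(41.8, 15))
print(f"r = {r:.4f}, resized UAV image: {w_new} x {h_new} px")
```

With these illustrative values the UAV image shrinks to roughly 92 × 69 pixels, small enough to slide inside a 256 × 256 satellite image as the search in Section 3.1 requires.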

3.3. Network Architecture

Feature points are key to many computer vision tasks because they provide dense and powerful representations. However, the world consists of higher-level geometric structures that are semantically more meaningful than points, so it is worth exploring how to extract better, more natural geometric cues about the structure of a scene. Under challenging conditions with significant variations in image radiometric content (extreme illumination changes, historical vs. modern sensors, light vs. shadow, etc.), deep learning approaches perform considerably better. In this paper, we therefore propose a deep learning approach with attention modules for place recognition in UAV navigation under challenging conditions. It focuses on multi-temporal and multi-season image matching and, in particular, on the impact of snow cover.

The two-channel architecture is a fast and accurate convolutional neural network (CNN) that requires less training and has a shorter response time than many other deep learning networks; as a result, it is often employed for image matching. 2chADCNN deepens the two-channel architecture and incorporates attention modules to enhance its nonlinear expressiveness and feature learning ability.

The proposed 2chADCNN similarity measurement network consists of five types of layers commonly used in computer vision networks: convolutional layers (conv), fully connected layers (dense), a pooling layer, activation function layers, and a flattening layer (flatten), together with convolutional block attention modules [50]. Each attention module includes a channel attention module and a spatial attention module, as detailed in Section 3.3.1. The input of 2chADCNN is a two-channel image synthesized from the preprocessed UAV template image patch and satellite sub-image patch, and the output is a scalar similarity score. The network structure is depicted in Figure 3.
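The two-channel input can be formed by stacking the two grayscale patches along a channel axis. The sketch below assumes 8-bit grayscale patches of equal size and normalization by dividing by 255; the exact preprocessing is not specified here, so both choices are assumptions.

```python
def to_two_channel(uav_patch, sat_patch, max_value=255.0):
    """Stack two equally sized grayscale patches into an H x W x 2 nested list.

    Channel 0 holds the UAV template patch and channel 1 the satellite
    sub-image patch, both scaled to [0, 1].
    """
    if len(uav_patch) != len(sat_patch) or any(
            len(ru) != len(rs) for ru, rs in zip(uav_patch, sat_patch)):
        raise ValueError("patches must have the same size")
    return [[(u / max_value, s / max_value) for u, s in zip(ru, rs)]
            for ru, rs in zip(uav_patch, sat_patch)]


pair = to_two_channel([[0, 255], [51, 102]], [[255, 0], [204, 153]])
print(pair[1][0])  # (0.2, 0.8)
```

Unlike a two-branch (Siamese) design such as MatchNet, this layout lets the first convolution see both patches jointly, so the network can compare them from the lowest layer onward.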

The image first passes through the first convolutional layer and the first attention module, which extract basic local features such as edges and corners in the lower layers and assign weights to them. Features are then further extracted by three convolutional layers. Next, a max-pooling layer downsamples the feature map to reduce its dimensions, which speeds up computation and provides a degree of invariance to small local shifts, thereby improving the model's robustness. Global features are then extracted by three additional convolutional layers, and a second attention module after the last convolutional layer assigns weights to the high-level semantic features. Finally, a flattening layer and two fully connected layers map the feature map to a single scalar similarity score between 0 and 1. This score indicates the probability that the two patches match, thus providing a binary classification result of similar or dissimilar (a score close to 1 denotes similarity, while a score close to 0 implies dissimilarity).

The second to fourth convolutional layers of 2chADCNN use "same" padding, which pads the borders of the feature map with zeros so that the output keeps the spatial size of the input. The ReLU activation function is used in all convolutional layers to enhance the nonlinear capability of the network. The first fully connected layer uses the tanh activation function to scale the extracted features to [−1, 1], enabling the last fully connected layer to better capture relationships between features and improving classification accuracy. The last fully connected layer uses the sigmoid activation function to map the output to [0, 1] and produce a classification probability. The sigmoid activation function is defined as

$$\sigma(x) = \frac{1}{1 + e^{-x}}$$

where the input $x$ is the value of the single output node of the network. $\sigma(x)$ ranges from 0 to 1 and represents the output similarity probability.
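The output head described above (a tanh fully connected layer followed by a single sigmoid node) can be written out explicitly. In this sketch the weights, biases, and hidden-layer width are hypothetical; in 2chADCNN they are learned, and the input would be the flattened output of the second attention module.

```python
import math


def sigmoid(x):
    """Logistic function 1 / (1 + e^{-x}), written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def dense(inputs, weights, biases, activation):
    """Fully connected layer: one output per row of `weights`."""
    return [activation(sum(w * v for w, v in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


def similarity_head(features, w1, b1, w2, b2):
    """tanh hidden layer (values in [-1, 1]) followed by one sigmoid output node."""
    hidden = dense(features, w1, b1, math.tanh)
    return dense(hidden, w2, b2, sigmoid)[0]


# Hypothetical weights: 3 flattened features -> 2 hidden units -> 1 score
w1 = [[0.5, -0.2, 0.1], [0.3, 0.3, -0.4]]
b1 = [0.0, 0.1]
w2 = [[2.0, -1.0]]
b2 = [0.0]
print(similarity_head([1.0, 0.5, -0.5], w1, b1, w2, b2))
```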

3.3.1. Attention Module

The convolutional block attention module (CBAM) [50] fuses channel attention and spatial attention; refer to Figure 3.

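Channel attention focuses on “what” is meaningful in the feature map. Each channel of the input feature map $F \in \mathbb{R}^{C \times H \times W}$ is regarded as a feature (e.g., texture or style). First, the $H \times W$ values in each channel are compressed into a single value by max pooling and by average pooling. The two pooled descriptors are then fed into a shared multi-layer perceptron (MLP), and the sum of its outputs passes through the sigmoid function to produce the channel weight matrix $M_c \in \mathbb{R}^{C \times 1 \times 1}$. Finally, $M_c$ is applied as a mask to the original input feature map $F$ by element-wise multiplication. The channel attention part is expressed as

$$M_c(F) = \sigma\left(\mathrm{MLP}(\mathrm{AvgPool}(F)) + \mathrm{MLP}(\mathrm{MaxPool}(F))\right), \qquad F' = M_c(F) \otimes F$$

where $\sigma$ represents the sigmoid function, $\mathrm{AvgPool}$ is the average pooling function, $\mathrm{MaxPool}$ is the max pooling function, $\mathrm{MLP}$ is the multi-layer perceptron, $F$ is the input feature map, and $\otimes$ denotes element-wise multiplication.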

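Spatial attention focuses on “where” is meaningful in the feature map. In the spatial layer, the feature map $F' \in \mathbb{R}^{C \times H \times W}$ produced by the channel layer is first subjected to max pooling and average pooling along the channel axis, compressing the multiple channels into two single-channel maps. These are concatenated and fed into a two-dimensional convolution with a $7 \times 7$ kernel, whose output passes through the sigmoid function to produce the weight matrix $M_s \in \mathbb{R}^{1 \times H \times W}$. Finally, $M_s$ is applied as a mask to its input feature map $F'$ by element-wise multiplication. The spatial attention part is expressed as

$$M_s(F') = \sigma\left(f^{7 \times 7}\left(\left[\mathrm{AvgPool}(F');\, \mathrm{MaxPool}(F')\right]\right)\right), \qquad F'' = M_s(F') \otimes F'$$

where $\sigma$ represents the sigmoid function, $\mathrm{AvgPool}$ is the average pooling function, $\mathrm{MaxPool}$ is the max pooling function, $f^{7 \times 7}$ is the convolution function with a $7 \times 7$ kernel, $[\cdot\,;\,\cdot]$ denotes channel-wise concatenation, and $F'$ is the input feature map.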

Because the output feature map of the attention module has exactly the same size as its input, the module can be inserted between any two convolutional layers. In this paper, an attention module is added after the first convolutional layer and after the last convolutional layer to emphasize meaningful low-level and high-level features along the two main dimensions of channel and space.
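A dependency-free sketch of the CBAM forward pass (channel attention followed by spatial attention) may help make the two equations concrete. Feature maps are nested lists indexed `[channel][row][col]`; the shared MLP omits biases, its reduction ratio is implied by the shape of `w1`, and the kernel size is read from `kernel` (7 × 7 in the original CBAM design). All weights here are hypothetical placeholders; in 2chADCNN they are learned.

```python
import math


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def shared_mlp(v, w1, w2):
    """Two-layer MLP (ReLU hidden layer, no biases) shared by both pooled vectors."""
    hidden = [max(0.0, sum(w * x for w, x in zip(row, v))) for row in w1]
    return [sum(w * h for w, h in zip(row, hidden)) for row in w2]


def channel_attention(f, w1, w2):
    """F' = M_c(F) * F, with M_c = sigmoid(MLP(AvgPool(F)) + MLP(MaxPool(F)))."""
    avg = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in f]
    mx = [max(map(max, ch)) for ch in f]
    mc = [sigmoid(a + m)
          for a, m in zip(shared_mlp(avg, w1, w2), shared_mlp(mx, w1, w2))]
    return [[[mc[c] * v for v in row] for row in ch] for c, ch in enumerate(f)]


def spatial_attention(f, kernel):
    """F'' = M_s(F') * F', with M_s = sigmoid(conv([AvgPool(F'); MaxPool(F')]))."""
    n_c, h, w = len(f), len(f[0]), len(f[0][0])
    avg = [[sum(f[c][i][j] for c in range(n_c)) / n_c for j in range(w)] for i in range(h)]
    mx = [[max(f[c][i][j] for c in range(n_c)) for j in range(w)] for i in range(h)]
    k = len(kernel[0])
    pad = k // 2  # zero padding keeps the H x W size
    ms = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for m, pooled in enumerate((avg, mx)):
                for di in range(k):
                    for dj in range(k):
                        y, x = i + di - pad, j + dj - pad
                        if 0 <= y < h and 0 <= x < w:
                            acc += kernel[m][di][dj] * pooled[y][x]
            ms[i][j] = sigmoid(acc)
    return [[[ms[i][j] * ch[i][j] for j in range(w)] for i in range(h)] for ch in f]


def cbam(f, w1, w2, kernel):
    """Channel attention followed by spatial attention; output has the input's shape."""
    return spatial_attention(channel_attention(f, w1, w2), kernel)


feat = [[[1.0, 2.0], [3.0, 4.0]], [[0.5, 0.5], [0.5, 0.5]]]  # C=2, H=W=2
w1 = [[0.5, 0.5]]       # C -> C/r with reduction ratio r = 2
w2 = [[1.0], [-1.0]]    # C/r -> C
kernel = [[[0.1] * 7 for _ in range(7)] for _ in range(2)]  # 2 x 7 x 7
out = cbam(feat, w1, w2, kernel)
print(len(out), len(out[0]), len(out[0][0]))  # 2 2 2
```

The unchanged output shape is what allows the module to sit after any convolutional layer, as described above.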

3.3.2. Training

2chADCNN is straightforward to construct and simple to train, and it learns the image matching patterns automatically from training pairs. In this paper, the model is trained in a supervised manner using the binary cross-entropy loss:

$$L = -\frac{1}{N}\sum_{i=1}^{N}\left[y_i \log p_i + (1 - y_i)\log(1 - p_i)\right]$$

where $N$ is the number of network output nodes, $y_i$ is the label, and $p_i$ is the predicted probability that node $i$ is labeled 1. In this experiment, there is a single output node ($N = 1$), and the labels are 1 and 0.
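The loss above can be checked numerically with a small Python function. The clipping constant `eps` is our own assumption to keep the logarithms finite and is not a parameter given in this paper.

```python
import math


def binary_cross_entropy(labels, probs, eps=1e-7):
    """Mean binary cross-entropy over N outputs; probabilities are clipped to
    [eps, 1 - eps] so that log(0) is never evaluated."""
    total = 0.0
    for y, p in zip(labels, probs):
        p = min(max(p, eps), 1.0 - eps)
        total += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return -total / len(labels)


print(binary_cross_entropy([1], [0.9]))  # confident, correct score on a positive pair: low loss
print(binary_cross_entropy([1], [0.1]))  # confident, wrong score: high loss
```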

The experiment was conducted on a machine with 8 GB of RAM and an Intel i5 quad-core processor. The 2chADCNN network was implemented using TensorFlow. Input images were resized, stacked into a two-channel input, and normalized before being fed into the network. A mini-batch size of 20 was used. Several learning rates and optimizers were compared: the Adam optimizer with the chosen, smaller initial learning rate yielded better validation accuracy than larger learning rates, although the larger rates converged faster. To mitigate forgetting in the neural network, the loading order of the samples was randomly shuffled. The network was trained with the Adam optimizer for 10 epochs.
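The data-loading scheme described above (mini-batches of 20, a fresh random loading order in every epoch, 10 epochs) can be sketched independently of any framework. The fixed seed and keeping a final, smaller batch are our own assumptions; in TensorFlow a similar effect is usually obtained with `tf.data.Dataset.shuffle` followed by `batch`.

```python
import random


def minibatches(samples, batch_size=20, epochs=10, seed=0):
    """Yield (epoch, batch) pairs, reshuffling the loading order every epoch."""
    rng = random.Random(seed)
    order = list(range(len(samples)))
    for epoch in range(epochs):
        rng.shuffle(order)  # new random order so the network never sees a fixed sequence
        for start in range(0, len(order), batch_size):
            yield epoch, [samples[i] for i in order[start:start + batch_size]]


batches = list(minibatches(list(range(45)), batch_size=20, epochs=2))
print([len(b) for _, b in batches])  # [20, 20, 5, 20, 20, 5]
```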

To account for the significant variations in image features caused by seasonal changes, the training dataset must contain images of the same scene captured in different seasons. We therefore used historical Google satellite imagery of selected areas in Shenyang, China, spanning all four seasons. The training and validation sets were created by cropping these images into fixed-size patches, yielding 20,000 pairs for each set. Pairs consisting of two images of the same scene captured in different seasons served as positive samples, while pairs of images from different scenes served as negative samples. The labels were set to 1 for positive samples and 0 for negative samples, with equal numbers of positive and negative samples.
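The balanced positive/negative pair construction can be sketched as follows. The `scenes` dictionary layout (scene identifier → season → patch), the fixed seed, and the random sampling strategy are illustrative assumptions; how pairs were actually sampled is not specified here.

```python
import random


def make_pairs(scenes, n_pairs, seed=0):
    """Build n_pairs (patch_a, patch_b, label) tuples, half positive, half negative.

    scenes: dict mapping a scene id to {season_name: patch}; every scene needs
    at least two seasons, and there must be at least two scenes.
    """
    rng = random.Random(seed)
    ids = list(scenes)
    pairs = []
    for _ in range(n_pairs // 2):
        # Positive sample: the same scene captured in two different seasons
        sid = rng.choice(ids)
        s1, s2 = rng.sample(list(scenes[sid]), 2)
        pairs.append((scenes[sid][s1], scenes[sid][s2], 1))
        # Negative sample: two different scenes, each in a random season
        a, b = rng.sample(ids, 2)
        patch_a = scenes[a][rng.choice(list(scenes[a]))]
        patch_b = scenes[b][rng.choice(list(scenes[b]))]
        pairs.append((patch_a, patch_b, 0))
    rng.shuffle(pairs)
    return pairs


seasons = ("spring", "summer", "autumn", "winter")
# Stand-in "patches" are (scene_id, season) tuples so the pairing is easy to inspect
scenes = {i: {s: (i, s) for s in seasons} for i in range(5)}
pairs = make_pairs(scenes, 20)
print(sum(label for _, _, label in pairs), "positives out of", len(pairs))
```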

4. Results

Datasets are the cornerstone of data-driven deep learning approaches. Over the past decades, various research institutions have released numerous high-quality UAV aerial image datasets, which have provided impartial platforms for validating UAV vision research and have significantly accelerated the development of related fields. However, benchmarks designed specifically for UAV visual localization across seasons, particularly in winter, are scarce. To address this limitation, we propose a method that synthesizes winter UAV aerial images using an image enhancement technique based on brightness thresholding, which raises the brightness of local pixels to 255 in the HLS color space. Our synthetic dataset, totaling 210 images, was generated from a captured summer image dataset and the Village dataset [51]. Some examples of the synthetic images are presented in Figure 4.
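A minimal sketch of the brightness-thresholding idea uses Python's standard `colorsys` module: pixels whose HLS lightness exceeds a threshold are pushed to full lightness (255 after conversion back to RGB), which turns bright ground surfaces white. The per-pixel rule and the 0.6 threshold are illustrative assumptions; how the local regions and the threshold are chosen is not specified here.

```python
import colorsys


def synthesize_snow(pixels, threshold=0.6):
    """Whiten RGB pixels (0-255 tuples) whose HLS lightness exceeds `threshold`."""
    out = []
    for r, g, b in pixels:
        h, l, s = colorsys.rgb_to_hls(r / 255, g / 255, b / 255)
        if l > threshold:
            # Full lightness maps to white regardless of hue and saturation
            r2, g2, b2 = colorsys.hls_to_rgb(h, 1.0, s)
            out.append((round(r2 * 255), round(g2 * 255), round(b2 * 255)))
        else:
            out.append((r, g, b))  # darker pixels are left untouched
    return out


pixels = [(200, 200, 200), (50, 60, 70), (220, 210, 160)]
print(synthesize_snow(pixels))  # [(255, 255, 255), (50, 60, 70), (255, 255, 255)]
```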

Real UAV aerial images were collected with DJI UAVs flying over the northern region of Shenyang, China, at flight heights ranging from 100 to 500 m. Aerial images were collected in both summer and snowy winter, as these two seasons exhibit significant differences in image features compared with other seasons, as depicted in Figure 5.

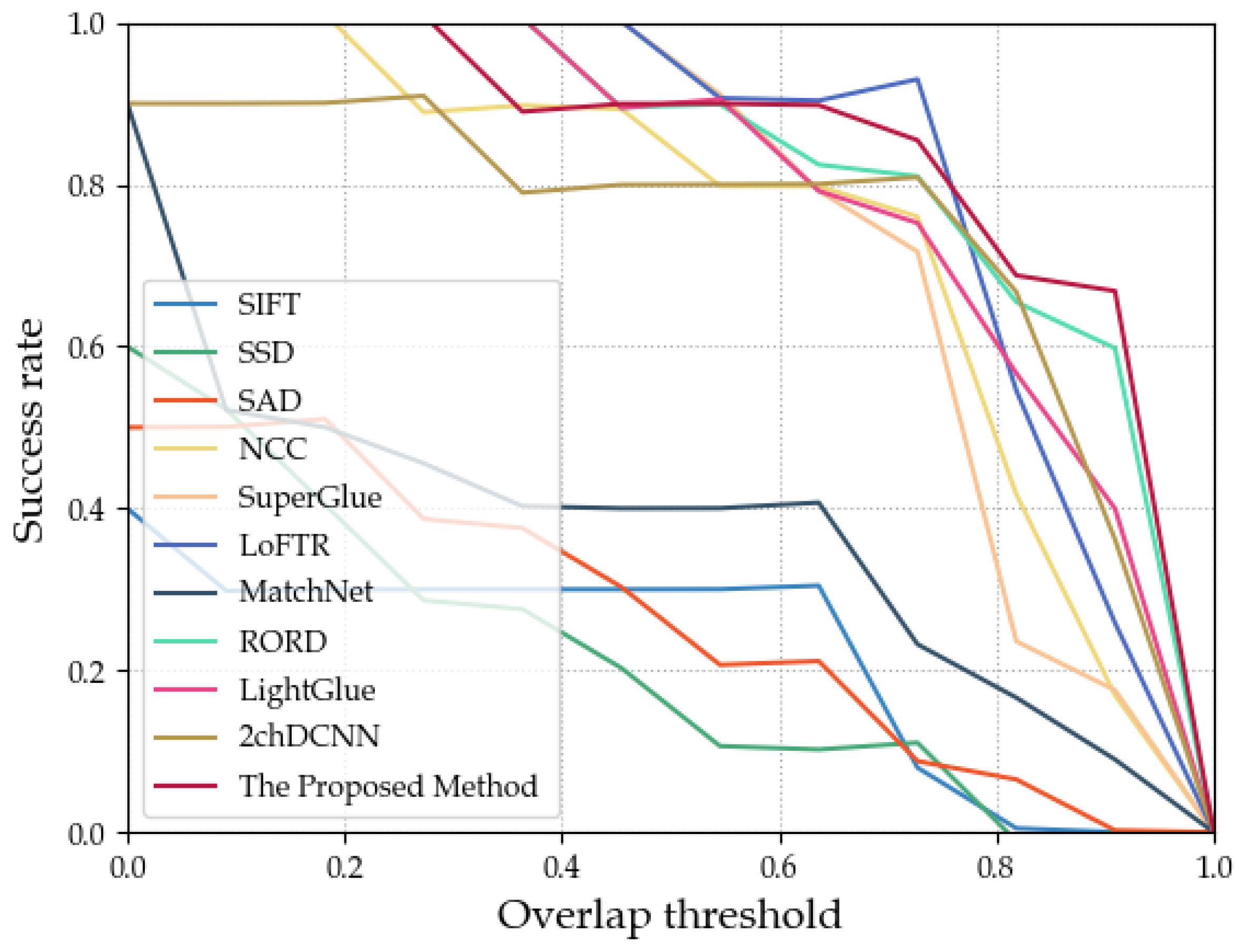

We tested the trained model on both algorithmically synthesized and real UAV aerial image datasets paired with satellite imagery. These cover three seasonal pairings: synthetic snowy winter UAV aerial images paired with real spring satellite imagery (Syn-Winter), real summer UAV aerial images paired with real spring satellite imagery (Summer), and real snowy winter UAV aerial images paired with real spring satellite imagery (Winter).

In this paper, we use the overlap rate between the predicted area of the UAV aerial image on the satellite image and its real area on the ground as the measure of matching accuracy. The overlap rate is computed from the area of the predicted region $A_p$, the area of the real region $A_r$, and the area of their overlap $A_p \cap A_r$. The larger the overlapping area, the higher the overlap rate, and the better the matching result. In this section, the predicted area is shown as a purple box and the real area as a green box.
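The sketch below computes an overlap rate under the assumption that it takes the common intersection-over-union form, area(P ∩ R) / area(P ∪ R), for axis-aligned boxes given as (x, y, width, height); this form is an assumption for illustration. Because the predicted window has approximately the same size as the real footprint, the usual overlap ratios (intersection over union, or intersection over the real area) rank results in the same order.

```python
def box_area(box):
    """Area of an axis-aligned box (x, y, width, height)."""
    _, _, w, h = box
    return w * h


def intersection_area(p, r):
    """Area of overlap between two axis-aligned boxes (x, y, width, height)."""
    px, py, pw, ph = p
    rx, ry, rw, rh = r
    iw = max(0, min(px + pw, rx + rw) - max(px, rx))
    ih = max(0, min(py + ph, ry + rh) - max(py, ry))
    return iw * ih


def overlap_rate(pred, real):
    """Intersection over union of the predicted and real areas (assumed form)."""
    inter = intersection_area(pred, real)
    union = box_area(pred) + box_area(real) - inter
    return inter / union if union else 0.0


print(overlap_rate((100, 40, 92, 69), (103, 42, 92, 69)))
```

For example, a prediction offset by 3 pixels horizontally and 2 pixels vertically from a 92 × 69 real area still yields an overlap rate of about 0.89.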

First, Figure 6 presents the positioning results for four summer aerial images captured by UAVs at various flight heights. The proposed method accurately determined the position of the UAV, with overlap rates exceeding 0.9 for all four pairs of test images.

Second, we conducted experiments on the synthetic dataset. The overlap rates of all test images exceeded 0.8, which verifies the effectiveness of the proposed method for matching simulated winter snow-scene images captured at different flight heights. The matching results for four pairs of Syn-Winter images are shown in Figure 7.

Finally, we tested real winter snow-scene UAV aerial images against real spring satellite images. The matching results for four pairs of real winter images are shown in Figure 8. As Figure 8 shows, the overlap rates for matching real snowy winter UAV aerial images captured at different heights with spring satellite imagery all exceeded 0.8. This result confirms the efficacy of the proposed method for matching real snowy winter UAV aerial images. Next, we further validate the performance of the proposed method through a comparative experimental analysis.