Fast Opium Poppy Detection in Unmanned Aerial Vehicle (UAV) Imagery Based on Deep Neural Network

Abstract

1. Introduction

- (1) Opium poppies vary in size across growth stages, which degrades detection performance. To address this, we introduce a novel attention model that integrates high-resolution and low-resolution features to strengthen the model's localization capability.

- (2) Existing models lose accuracy under occlusion and in the presence of easily confused vegetation. To address this, we propose a new training strategy inspired by human learning: repetitive learning, which revisits hard examples to uncover their hidden features.

- (3) We design a lightweight opium poppy detection model (YOLOHLA-tiny) based on structured model pruning, which achieves fast inference on embedded device platforms.

2. Related Works

2.1. UAV Remote Sensing

2.2. Opium Poppy Detection Based on CNN

2.3. Model Pruning

3. Materials and Methods

3.1. Image Acquisition and Processing

3.2. HLA Module
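The introduction describes the attention model only as integrating high-resolution and low-resolution features. As a rough illustration of that general idea, and not the authors' HLA module, the sketch below upsamples a low-resolution map and uses it as a sigmoid gate on a high-resolution map. The function names, the nearest-neighbour upsampling, and the gating rule are all assumptions made for this example.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic function, used here as a soft attention gate in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def upsample_nearest(low, factor_r, factor_c):
    """Nearest-neighbour upsampling of a 2D list by integer factors."""
    rows, cols = len(low) * factor_r, len(low[0]) * factor_c
    return [[low[r // factor_r][c // factor_c] for c in range(cols)]
            for r in range(rows)]


def fuse_high_low(high, low):
    """Hypothetical fusion: gate each high-resolution value with the
    sigmoid of the upsampled low-resolution value at the same position."""
    if len(high) % len(low) or len(high[0]) % len(low[0]):
        raise ValueError("high-resolution size must be a multiple of low-resolution size")
    up = upsample_nearest(low, len(high) // len(low), len(high[0]) // len(low[0]))
    return [[h * sigmoid(u) for h, u in zip(h_row, u_row)]
            for h_row, u_row in zip(high, up)]


# Toy single-channel maps: a 4x4 high-resolution map and a 2x2 low-resolution map.
high_map = [[1.0] * 4 for _ in range(4)]
low_map = [[0.0, 100.0], [-100.0, 0.0]]
fused = fuse_high_low(high_map, low_map)
```

In this toy case, regions where the low-resolution map is strongly positive pass the high-resolution values almost unchanged, and strongly negative regions are suppressed. A real module would operate on multi-channel tensors with learned weights.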

3.3. YOLOHLA Network

3.4. Repetitive Learning

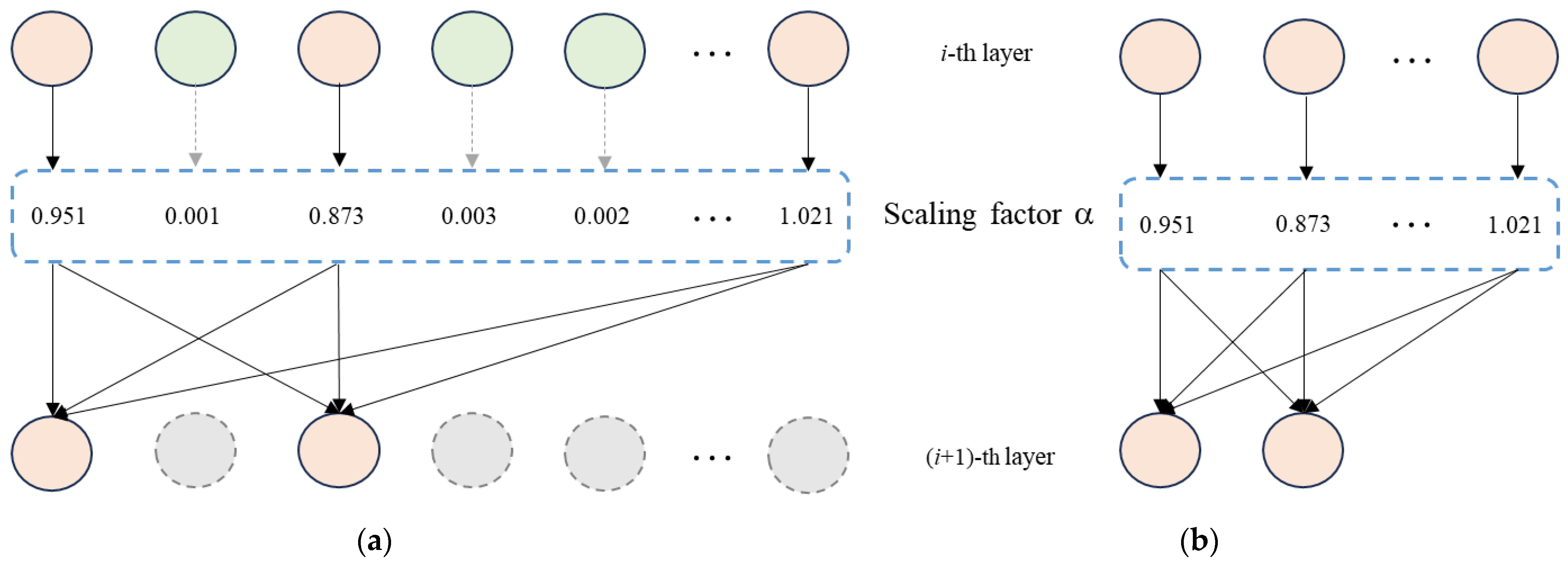
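The introduction characterizes repetitive learning as revisiting hard examples, in the way a human learner repeats difficult material. One plausible reading, sketched below under that assumption, ranks training samples by their current loss and schedules the hardest fraction for extra passes in the next round. The function name `build_next_round`, the `hard_fraction` and `extra_repeats` parameters, and the loss-ranking criterion are hypothetical and are not taken from the paper.

```python
def build_next_round(losses, hard_fraction=0.25, extra_repeats=2):
    """Return (hard_indices, schedule) for the next training round.

    losses        -- per-sample loss from the previous round
    hard_fraction -- share of samples treated as hard (assumed criterion)
    extra_repeats -- how many additional times hard samples are revisited
    """
    n = len(losses)
    if n == 0:
        return [], []
    n_hard = max(1, int(n * hard_fraction))
    # Rank sample indices from highest to lowest loss.
    ranked = sorted(range(n), key=lambda i: losses[i], reverse=True)
    hard = sorted(ranked[:n_hard])
    # Every sample appears once; hard samples are appended again.
    schedule = list(range(n))
    for _ in range(extra_repeats):
        schedule.extend(hard)
    return hard, schedule


# Toy per-sample losses for eight training images.
example_losses = [0.2, 1.5, 0.1, 0.9, 0.3, 2.0, 0.25, 0.4]
hard_ids, next_schedule = build_next_round(example_losses)
print(hard_ids)            # [1, 5]
print(len(next_schedule))  # 12
```

In practice the schedule would be shuffled before use, and the hard set would be recomputed after each round as the model improves.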

3.5. Structured Pruning of YOLOHLA
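Structured pruning removes whole channels rather than individual weights, so the pruned network is smaller and faster without needing sparse kernels. The sketch below shows one common selection rule, assumed here for illustration only: rank channels by the magnitude of a per-channel scale factor and drop the smallest ones at a global pruning ratio. The helper `select_channels_to_keep` and the layer names are hypothetical, and the paper's actual criterion may differ.

```python
def select_channels_to_keep(scales, prune_ratio):
    """Pick channel indices to keep in each layer.

    scales      -- dict mapping layer name to a list of per-channel scale factors
    prune_ratio -- global fraction of channels to remove (0.0 to 1.0)
    """
    magnitudes = sorted(abs(s) for layer in scales.values() for s in layer)
    n_prune = int(len(magnitudes) * prune_ratio)
    # Channels at or below this magnitude are removed (ties are all pruned).
    threshold = magnitudes[n_prune - 1] if n_prune > 0 else float("-inf")
    keep = {}
    for name, layer in scales.items():
        kept = [i for i, s in enumerate(layer) if abs(s) > threshold]
        if not kept:
            # Never delete a whole layer: keep its strongest channel.
            kept = [max(range(len(layer)), key=lambda i: abs(layer[i]))]
        keep[name] = kept
    return keep


# Toy scale factors for two layers with four channels each.
example_scales = {
    "conv1": [0.9, 0.05, 0.4, 0.01],
    "conv2": [0.02, 0.7, 0.3, 0.6],
}
kept_channels = select_channels_to_keep(example_scales, prune_ratio=0.5)
print(kept_channels)  # {'conv1': [0, 2], 'conv2': [1, 3]}
```

After the selected channels are removed, the network is normally fine-tuned. The pruning-ratio table in this paper shows why: at a 50% ratio, mAP falls to 0.427 before fine-tuning and recovers to 0.834 afterwards.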

4. Experimental Results and Analysis

4.1. Implementation Details

- (1) Intel Corporation, Santa Clara, California, USA, CPU, Intel i7-12700F @ 48G;

- (2) NVIDIA Corporation, Santa Clara, California, USA, graphics card, GeForce RTX 3090 @ 24GB GPU;

- (3) operating system, 64-bit Ubuntu 20.04.2 LTS;

- (4) CUDA version 11.6;

- (5) PyTorch version 1.8.2.

4.2. Metrics
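The result tables report precision (P), recall (R), F1, and mAP. As a reminder of how the first three relate, the sketch below uses the standard definitions: P = TP / (TP + FP), R = TP / (TP + FN), and F1 as the harmonic mean of P and R. The function names are chosen for this example, and the detection counts in `precision_recall` are hypothetical inputs, not values from the paper.

```python
def precision_recall(tp: int, fp: int, fn: int):
    """Precision and recall from true-positive, false-positive, and false-negative counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# P and R taken from the YOLOHLA row of the detector comparison table.
yolohla_f1 = f1_score(0.839, 0.770)
print(round(yolohla_f1, 3))  # 0.803
```

The computed value matches the F1 reported for YOLOHLA in the tables.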

4.3. Comparison of Different Detectors

4.4. Comparison of Model Pruning

4.5. Results on Embedded Device

5. Ablation Studies

5.1. Impact of Attention Mechanism

5.2. Impact of Repetitive Learning

5.3. Impact of Pruning Ratio

6. Discussions

6.1. Comparisons on VisDrone2019 Dataset

6.2. Limitations

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Model | FLOPs | Param. | FPS | P | R | F1 | mAP |
|---|---|---|---|---|---|---|---|
| YOLOV4-tiny | 20.6 G | 8.69 M | 217 | 0.840 | 0.749 | 0.792 | 0.837 |
| YOLOV5s | 15.9 G | 6.70 M | 294 | 0.785 | 0.779 | 0.782 | 0.818 |
| YOLOV6-tiny | 36.5 G | 14.94 M | 169 | 0.882 | 0.861 | 0.871 | 0.873 |
| YOLOV7-tiny | 13.2 G | 5.74 M | 370 | 0.740 | 0.699 | 0.720 | 0.755 |
| YOLOV8s | 28.6 G | 10.65 M | 145 | 0.825 | 0.752 | 0.772 | 0.831 |
| PP-PicoDet | 8.3 G | 5.76 M | 251 | 0.808 | 0.734 | 0.769 | 0.792 |
| NanoDet | 3.4 G | 7.5 M | 196 | 0.784 | 0.744 | 0.763 | 0.714 |
| DETR | 100.9 G | 35.04 M | 117 | 0.812 | 0.763 | 0.787 | 0.852 |
| Faster R-CNN | 81.9 G | 36.13 M | 40 | 0.824 | 0.792 | 0.808 | 0.842 |
| RetinaNet | 91.0 G | 41.13 M | 41 | 0.813 | 0.828 | 0.820 | 0.795 |
| YOLOHLA | 13.8 G | 5.72 M | 323 | 0.839 | 0.770 | 0.803 | 0.842 |
| YOLOHLA + RL | 13.8 G | 5.72 M | 323 | 0.891 | 0.822 | 0.855 | 0.882 |

| Methods | P | R | F1 | mAP | FPS | Model Size |
|---|---|---|---|---|---|---|
| YOLOHLA | 0.839 | 0.770 | 0.803 | 0.842 | 323 | 20.8 MB |
| Torch pruning | 0.785 | 0.715 | 0.748 | 0.803 | 333 | 15.9 MB |
| DepGraph | 0.768 | 0.692 | 0.728 | 0.766 | 384 | 10.2 MB |
| YOLOHLA-tiny | 0.843 | 0.731 | 0.783 | 0.834 | 456 | 7.8 MB |

| Methods | P | R | F1 | mAP | FPS |
|---|---|---|---|---|---|
| YOLOHLA | 0.839 | 0.770 | 0.803 | 0.842 | 128 |
| Torch pruning | 0.785 | 0.715 | 0.748 | 0.803 | 78 |
| DepGraph | 0.768 | 0.692 | 0.728 | 0.766 | 154 |
| YOLOHLA-tiny | 0.843 | 0.731 | 0.783 | 0.834 | 172 |

| Model | P | R | F1 | mAP | FPS |
|---|---|---|---|---|---|
| YOLOV5s + SE | 0.795 | 0.730 | 0.761 | 0.795 | 278 |
| YOLOV5s + CA | 0.851 | 0.766 | 0.806 | 0.842 | 133 |
| YOLOV5s + ECA | 0.843 | 0.719 | 0.776 | 0.822 | 286 |
| YOLOV5s + CBAM | 0.840 | 0.720 | 0.775 | 0.819 | 294 |
| YOLOV5s + HLA | 0.839 | 0.770 | 0.803 | 0.842 | 323 |

| Model | P | R | F1 | mAP | FPS |
|---|---|---|---|---|---|
| YOLOV6-tiny + SE | 0.901 | 0.829 | 0.864 | 0.870 | 169 |
| YOLOV6-tiny + CA | 0.885 | 0.811 | 0.846 | 0.877 | 159 |
| YOLOV6-tiny + ECA | 0.901 | 0.840 | 0.869 | 0.868 | 167 |
| YOLOV6-tiny + CBAM | 0.906 | 0.829 | 0.866 | 0.866 | 159 |
| YOLOV6-tiny + HLA | 0.908 | 0.851 | 0.878 | 0.882 | 154 |

| Pruning Ratios | P | R | F1 | mAP | FPS | Model Size |
|---|---|---|---|---|---|---|
| YOLOHLA | 0.839 | 0.770 | 0.803 | 0.842 | 323 | 20.8 MB |
| pr = 10% | 0.786 | 0.759 | 0.772 | 0.818 | 370 | 17.7 MB |
| pr = 20% | 0.782 | 0.568 | 0.658 | 0.720 | 385 | 14.5 MB |
| pr = 30% | 0.764 | 0.576 | 0.657 | 0.650 | 401 | 12.1 MB |
| pr = 40% | 0.670 | 0.502 | 0.574 | 0.544 | 417 | 9.8 MB |
| pr = 50% | 0.489 | 0.481 | 0.485 | 0.427 | 456 | 7.8 MB |
| Finetuning (pr = 50%) | 0.843 | 0.731 | 0.783 | 0.834 | 456 | 7.8 MB |
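The trade-off in the pruning-ratio rows can be checked with simple arithmetic. The sketch below compares the unpruned YOLOHLA row with the fine-tuned 50% row, using only values from the table. The helper `relative_change` is a name introduced for this example.

```python
def relative_change(before: float, after: float) -> float:
    """Signed percentage change from `before` to `after`."""
    return (after - before) / before * 100.0


# Values from the YOLOHLA and "Finetuning (pr = 50%)" rows.
size_change = relative_change(20.8, 7.8)   # model size in MB
fps_change = relative_change(323, 456)     # frames per second
map_drop = 0.842 - 0.834                   # absolute mAP difference

print(f"model size: {size_change:.1f}%")   # model size: -62.5%
print(f"FPS: {fps_change:+.1f}%")          # FPS: +41.2%
print(f"mAP drop: {map_drop:.3f}")         # mAP drop: 0.008
```

Read this way, pruning half the channels and fine-tuning cuts the model size by about 62.5% and raises FPS by about 41%, at a cost of 0.008 mAP.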

| Methods | P | R | F1 | mAP |
|---|---|---|---|---|
| YOLOV5s | 0.432 | 0.342 | 0.382 | 0.328 |
| YOLOV6-tiny | 0.476 | 0.400 | 0.435 | 0.371 |
| YOLOV7-tiny | 0.489 | 0.371 | 0.422 | 0.360 |
| YOLOHLA | 0.487 | 0.410 | 0.439 | 0.375 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, Z.; Xia, W.; Xie, G.; Xiang, S. Fast Opium Poppy Detection in Unmanned Aerial Vehicle (UAV) Imagery Based on Deep Neural Network. Drones 2023, 7, 559. https://doi.org/10.3390/drones7090559
