Real-Time Registration of Unmanned Aerial Vehicle Hyperspectral Remote Sensing Images Using an Acousto-Optic Tunable Filter Spectrometer

Abstract

1. Introduction

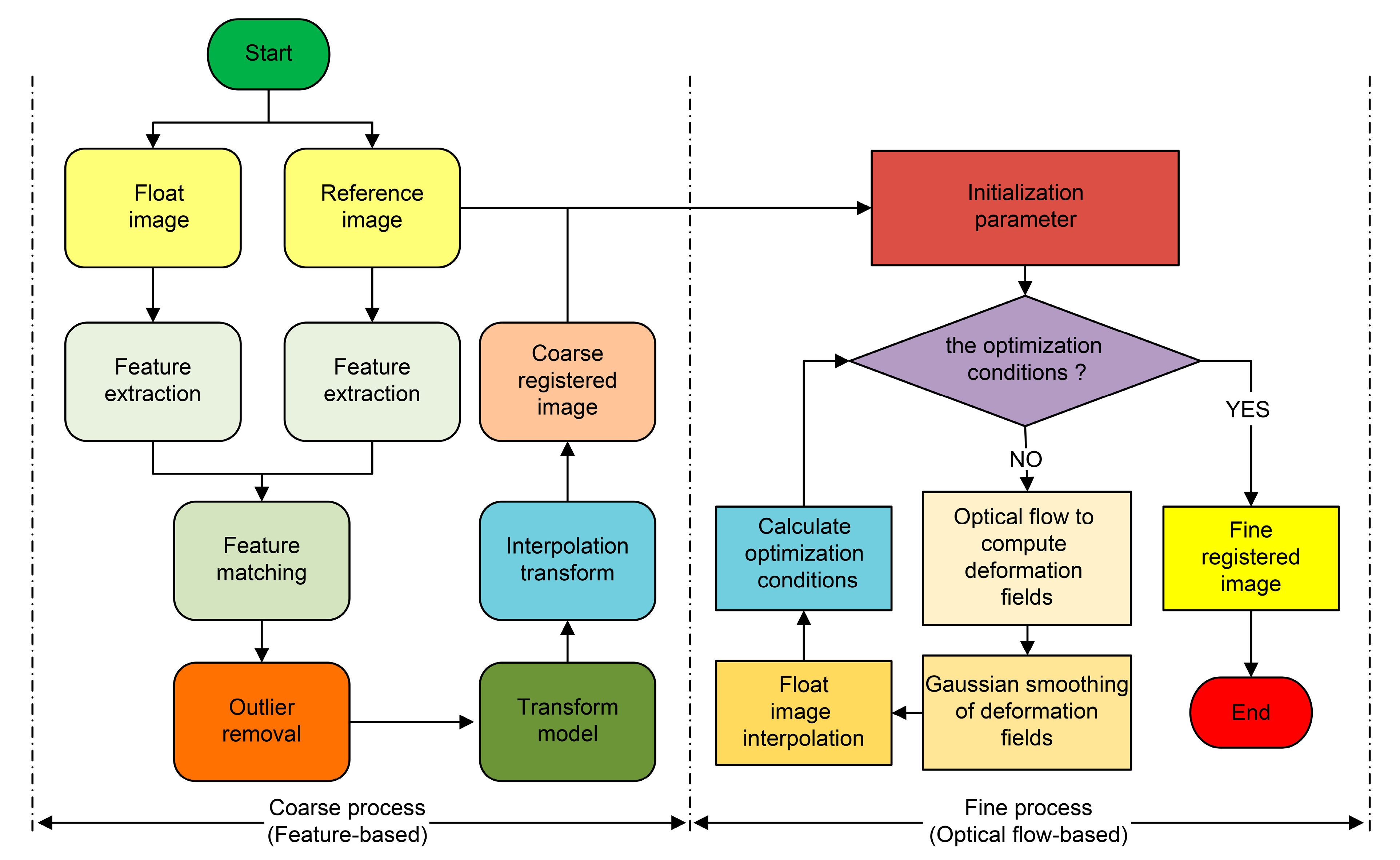

- To propose a new coarse-to-fine remote sensing image registration framework based on feature matching and optical flow theory. After the image blurring and spectral segment drift of the AOTF spectrometer are resolved using a fast zoom lens, the spectral segments that make up each collected data cube are registered to one another (registration between adjacent spectral segments). The performance of the proposed method was compared with that of other advanced algorithms.

- To construct a VS2023+CUDA+OpenCV development environment for the improved demons registration algorithm. Because this algorithm, which is based on optical flow theory, is highly suitable for distributed and parallel processing, it was parallelized using the compute unified device architecture (CUDA) to achieve rapid registration and enable real-time processing.

- To verify the proposed method on the existing AOTF unmanned airborne spectrometer platform. The data cubes at each shooting waypoint were registered online, meeting the requirement of real-time onboard registration on unmanned aerial vehicles (UAVs).

2. Related Work

2.1. Airborne AOTF Spectrometer for UAVs Based on the Zoom Lens

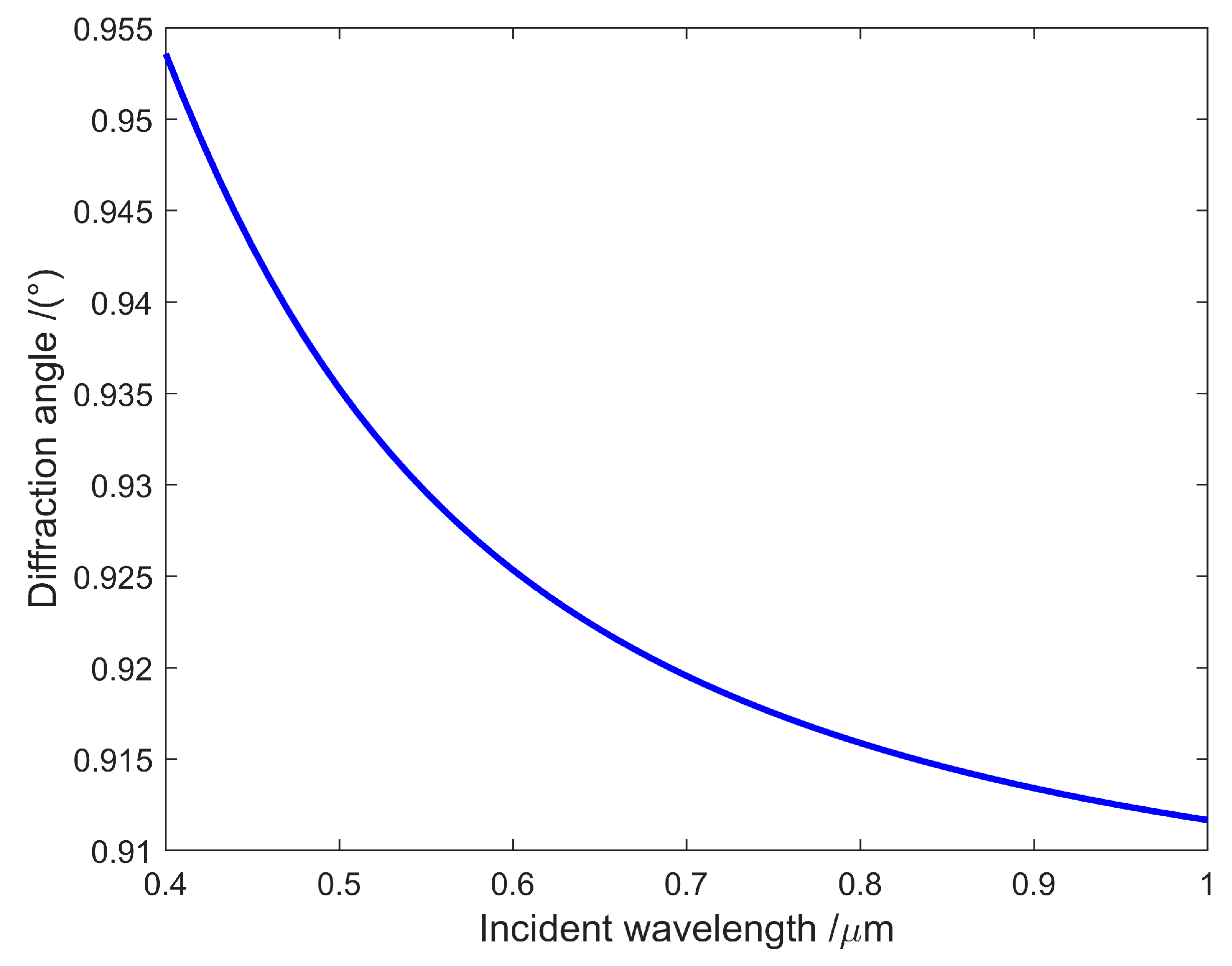

2.2. AOTF Spectral Characteristics

2.3. Image Registration Algorithm Based on Optical Flow Theory

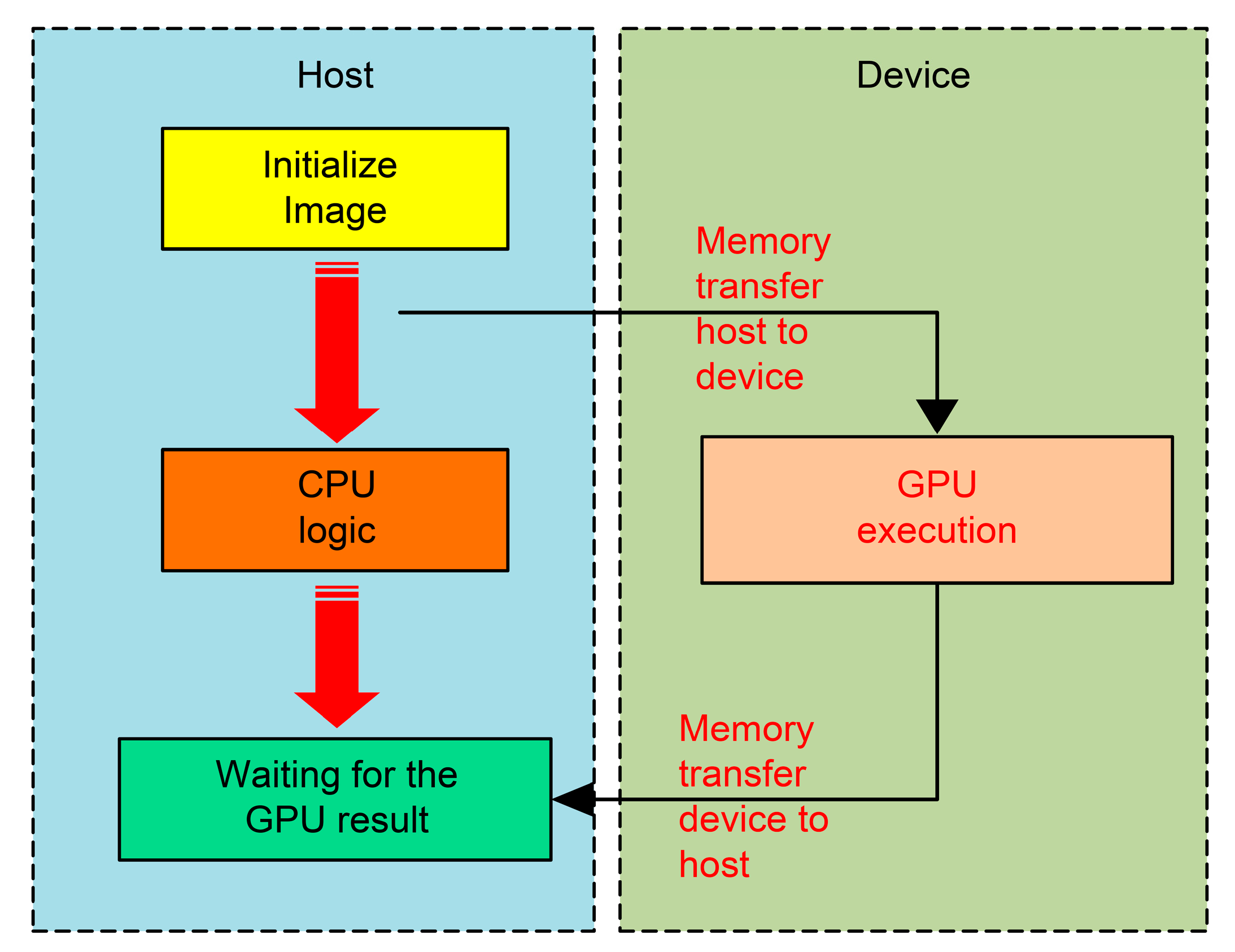

2.4. Basic Steps of GPU-Based Image Processing-Accelerated CUDA Program

3. Methodology

3.1. Algorithm Description

- At the beginning of registration, features are extracted from the floating and reference images. The features can be one or a combination of Harris, Moravec, Haar-like, HOG, LBP, SIFT, SURF, BRIEF, SUSAN, FAST, CENSUS, FREAK, BRISK, ORB, etc.

- The extracted features are matched to obtain feature pairs between the floating and reference images. This can be done by brute-force matching, which calculates the distance between a feature point descriptor and all candidate descriptors in the other image, ranks the obtained distances, and selects the descriptor at the smallest distance as the match.

- Mismatched feature pairs (outliers) are removed using an outlier elimination algorithm. Common methods include keeping only matches whose Hamming distance is less than twice the minimum distance, cross-matching, k-nearest neighbor matching, and random sample consensus (RANSAC).

- The transformation model from the floating image to the reference image is calculated from the matched feature pairs that remain after outlier removal.

- The floating image is mapped onto the reference image by applying the transformation model with an appropriate interpolation method, thus obtaining the coarse registration result.

- The registration parameters are initialized. These may include the number of registration iterations, the similarity between the registered and reference images, or other parameters.

- It is determined whether the registration optimization conditions are met.

- If yes, the finely registered image is output, and the process is complete.

- If not, the method based on optical flow theory is used to calculate the deformation displacement field.

- The deformation displacement field is subjected to Gaussian filtering.

- The filtered deformation displacement field is used to interpolate the floating image.

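- The registration optimization conditions are evaluated using measures such as normalized cross-correlation, mutual information, the structural similarity index measure (SSIM), and the change in root-mean-square error (RMSE).

- The process returns to the step that checks whether the registration optimization conditions are met, and the loop continues.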
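The matching and outlier-elimination steps in the list above can be illustrated with a minimal sketch in plain Python. It assumes binary descriptors stored as integers, and the function names (`brute_force_match`, `cross_check`, `distance_filter`) and the `floor` parameter are hypothetical helpers introduced only for this illustration; they are not taken from the paper's implementation.

```python
def hamming(a: int, b: int) -> int:
    """Hamming distance between two binary descriptors stored as ints."""
    return bin(a ^ b).count("1")


def brute_force_match(query, train):
    """For each query descriptor, return (query_idx, train_idx, distance)
    of its nearest train descriptor under the Hamming distance."""
    matches = []
    for i, d in enumerate(query):
        j = min(range(len(train)), key=lambda k: hamming(d, train[k]))
        matches.append((i, j, hamming(d, train[j])))
    return matches


def cross_check(forward, backward):
    """Cross-matching: keep i -> j only if j -> i is also the best match
    in the reverse direction."""
    back = {i: j for i, j, _ in backward}
    return [m for m in forward if back.get(m[1]) == m[0]]


def distance_filter(matches, factor=2.0, floor=1):
    """Keep matches whose distance is below factor * minimum distance.
    `floor` (an assumption of this sketch) avoids a zero threshold when
    the best match has distance 0."""
    if not matches:
        return []
    min_d = min(m[2] for m in matches)
    threshold = factor * max(min_d, floor)
    return [m for m in matches if m[2] < threshold]


# Toy 8-bit descriptors: the third floating descriptor has no true partner.
float_desc = [0b10110010, 0b01001101, 0b11110000]
ref_desc = [0b10110011, 0b01001100, 0b00111100]

forward = brute_force_match(float_desc, ref_desc)
backward = brute_force_match(ref_desc, float_desc)
print(forward)                          # [(0, 0, 1), (1, 1, 1), (2, 0, 3)]
print(cross_check(forward, backward))   # [(0, 0, 1), (1, 1, 1)]
print(distance_filter(forward))         # [(0, 0, 1), (1, 1, 1)]
```

Both filters discard the spurious third match in this toy case. In practice they are often combined, and RANSAC is then applied while fitting the transformation model.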

| Algorithm 1: Coarse-to-fine remote sensing image registration based on feature and optical flow theory |
|---|
| Input: floating image “image01”, reference image “image02” |
| Output: image after registration |
| 1: Detect the ORB feature point positions |
| 2: Calculate the descriptors at the ORB feature point positions |
| 3: Perform feature point matching |
| 4: Filter the matching points to keep only the good matches |
| 5: Using the matching points, calculate the projection mapping matrix from the floating image to the reference image |
| 6: Use the projection mapping matrix to complete the coarse image registration |
| 7: Normalize the floating image and reference image after coarse registration and resize them to a square |
| 8: Find the gradient of the reference image |
| 9: Perform the following iteration (steps 10 to 13) |
| 10: Calculate the coordinate offset |
| 11: Determine the gradient of the floating image and apply the improved demons algorithm using this gradient |
| 12: Perform Gaussian smoothing of the coordinate offset to reduce spikes |
| 13: Apply the pixel resampling |
| 14: Repeat until convergence |
| 15: Complete fine image registration |
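The fine-registration loop in steps 8 to 14 of Algorithm 1 can be illustrated with a minimal one-dimensional sketch in plain Python. This is a simplification under stated assumptions: it uses the classic demons force driven only by the reference gradient (not the paper's improved variant, which also uses the floating-image gradient), works on 1D signals rather than images, and runs on the CPU rather than CUDA. All function names and the parameters `sigma`, `max_iter`, and `tol` are hypothetical.

```python
import math


def gaussian_kernel(sigma):
    radius = max(1, int(3 * sigma))
    w = [math.exp(-0.5 * (k / sigma) ** 2) for k in range(-radius, radius + 1)]
    total = sum(w)
    return [v / total for v in w]


def smooth(field, kernel):
    """Gaussian smoothing with edge clamping."""
    n, r = len(field), len(kernel) // 2
    return [sum(kernel[k + r] * field[min(max(i + k, 0), n - 1)]
                for k in range(-r, r + 1)) for i in range(n)]


def gradient(f):
    """Central differences, one-sided at the two ends."""
    n = len(f)
    return [(f[min(i + 1, n - 1)] - f[max(i - 1, 0)])
            / (min(i + 1, n - 1) - max(i - 1, 0)) for i in range(n)]


def warp(moving, disp):
    """Resample moving at x + disp(x) with linear interpolation."""
    n, out = len(moving), []
    for i, s in enumerate(disp):
        x = min(max(i + s, 0.0), n - 1.0)
        x0 = min(int(x), n - 2)
        t = x - x0
        out.append((1 - t) * moving[x0] + t * moving[x0 + 1])
    return out


def rmse(a, b):
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(a, b)) / len(a))


def demons_1d(fixed, moving, sigma=1.5, max_iter=100, tol=1e-6):
    kernel = gaussian_kernel(sigma)
    grad = gradient(fixed)                 # step 8: reference gradient
    disp = [0.0] * len(fixed)
    prev = float("inf")
    for _ in range(max_iter):              # step 9: iterate
        warped = warp(moving, disp)        # step 13: pixel resampling
        err = rmse(warped, fixed)
        if prev - err < tol:               # step 14: convergence check
            break
        prev = err
        update = []
        for f, m, g in zip(fixed, warped, grad):   # step 10: offset
            diff = f - m
            denom = g * g + diff * diff
            update.append(diff * g / denom if denom > 1e-12 else 0.0)
        # step 12: Gaussian smoothing of the accumulated offset
        disp = smooth([d + u for d, u in zip(disp, update)], kernel)
    return disp


# Toy data: the moving signal is the reference bump shifted by two samples.
fixed = [math.exp(-((i - 30) / 6) ** 2) for i in range(64)]
moving = [math.exp(-((i - 32) / 6) ** 2) for i in range(64)]
disp = demons_1d(fixed, moving)
before = rmse(moving, fixed)
after = rmse(warp(moving, disp), fixed)
print(f"RMSE before: {before:.4f}, after: {after:.4f}")
```

Every per-sample operation in the loop (force computation, smoothing, resampling) depends only on a small neighborhood, which is why this family of algorithms maps naturally onto one GPU thread per pixel.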

3.2. ORB Feature Point Extraction and Descriptor Construction

3.3. Demons Correlation Algorithm

4. Experiments and Discussion

4.1. Datasets

4.2. Evaluation Criteria

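- 1. SSIM (structural similarity index measure)

- 2. RMSE (root-mean-square error)

- 3. MI (mutual information)

- 4. UIQI (universal image quality index)

- 5. SAM (spectral angle mapper)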
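As a rough illustration of four of these criteria, the following plain-Python sketch computes RMSE, SAM, a global (single-window) UIQI, and MI on flattened pixel lists. The formulas are the standard textbook definitions; the paper's exact windowing, histogram binning, and logarithm base are not given here, so the choices below (global statistics, integer gray levels, base-2 logarithm) are assumptions. SSIM is omitted because it is normally computed over sliding windows.

```python
import math
from collections import Counter


def rmse(a, b):
    """Root-mean-square error between two equally sized pixel lists."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


def spectral_angle(a, b):
    """Spectral angle (radians) between two spectra treated as vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return math.acos(max(-1.0, min(1.0, dot / norm)))


def uiqi(a, b):
    """Universal image quality index computed over the whole signal."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    var_a = sum((x - mean_a) ** 2 for x in a) / (n - 1)
    var_b = sum((y - mean_b) ** 2 for y in b) / (n - 1)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b)) / (n - 1)
    return 4 * cov * mean_a * mean_b / ((var_a + var_b) * (mean_a ** 2 + mean_b ** 2))


def mutual_information(a, b):
    """Mutual information in bits for discrete (integer) gray levels."""
    n = len(a)
    pa, pb, pab = Counter(a), Counter(b), Counter(zip(a, b))
    return sum((c / n) * math.log2(c * n / (pa[x] * pb[y]))
               for (x, y), c in pab.items())


ref = [1, 2, 3, 4]
print(rmse(ref, [2, 3, 4, 5]))                         # 1.0
print(spectral_angle([1, 0], [0, 1]))                  # pi / 2
print(uiqi(ref, ref))                                  # 1.0 up to rounding
print(mutual_information([0, 0, 1, 1], [0, 0, 1, 1]))  # 1.0
print(mutual_information([0, 0, 1, 1], [0, 1, 0, 1]))  # 0.0
```

Higher SSIM, MI, and UIQI and lower RMSE and SAM indicate better agreement between the registered and reference images.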

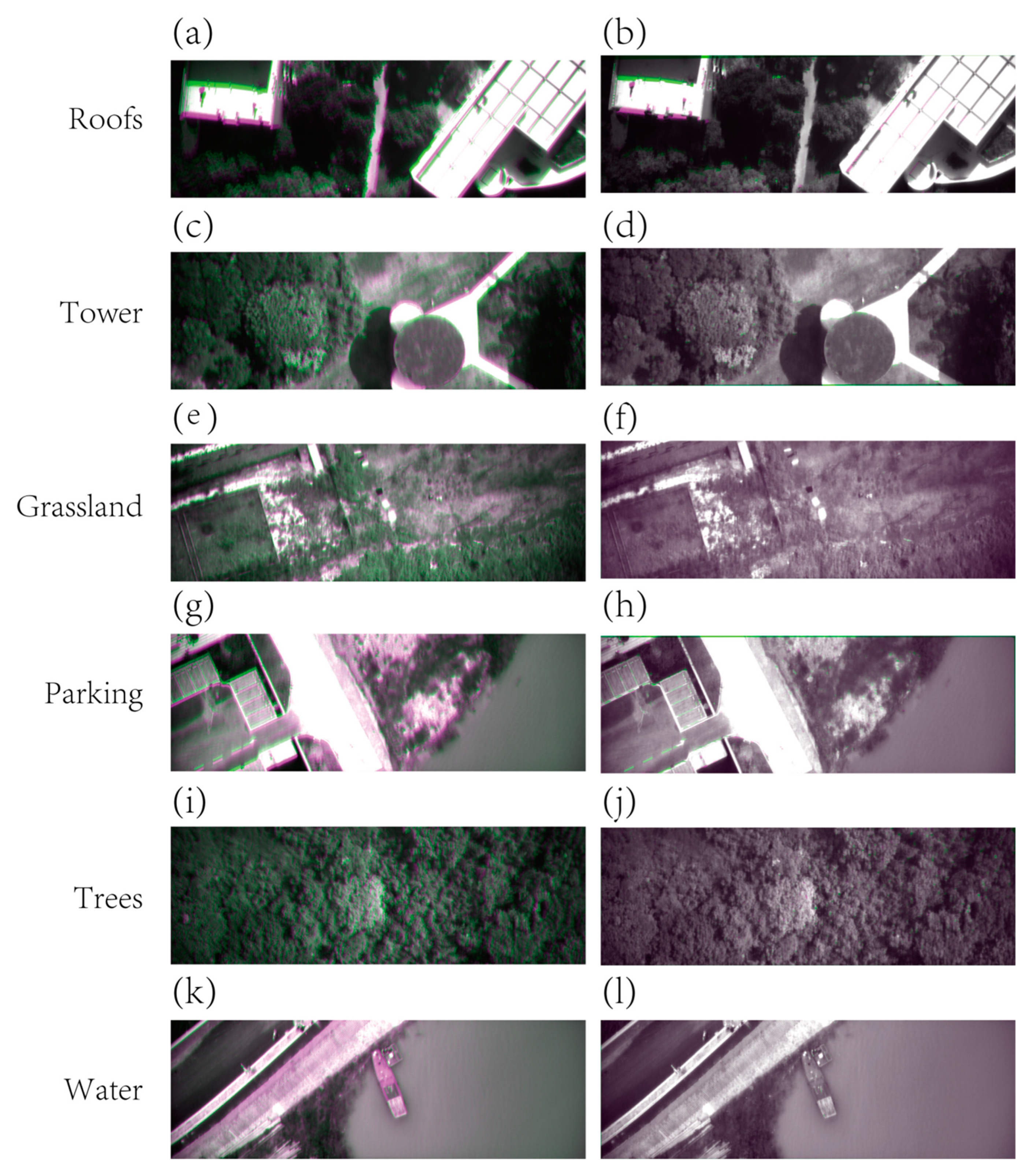

4.3. Ground Experiment Results and Analysis

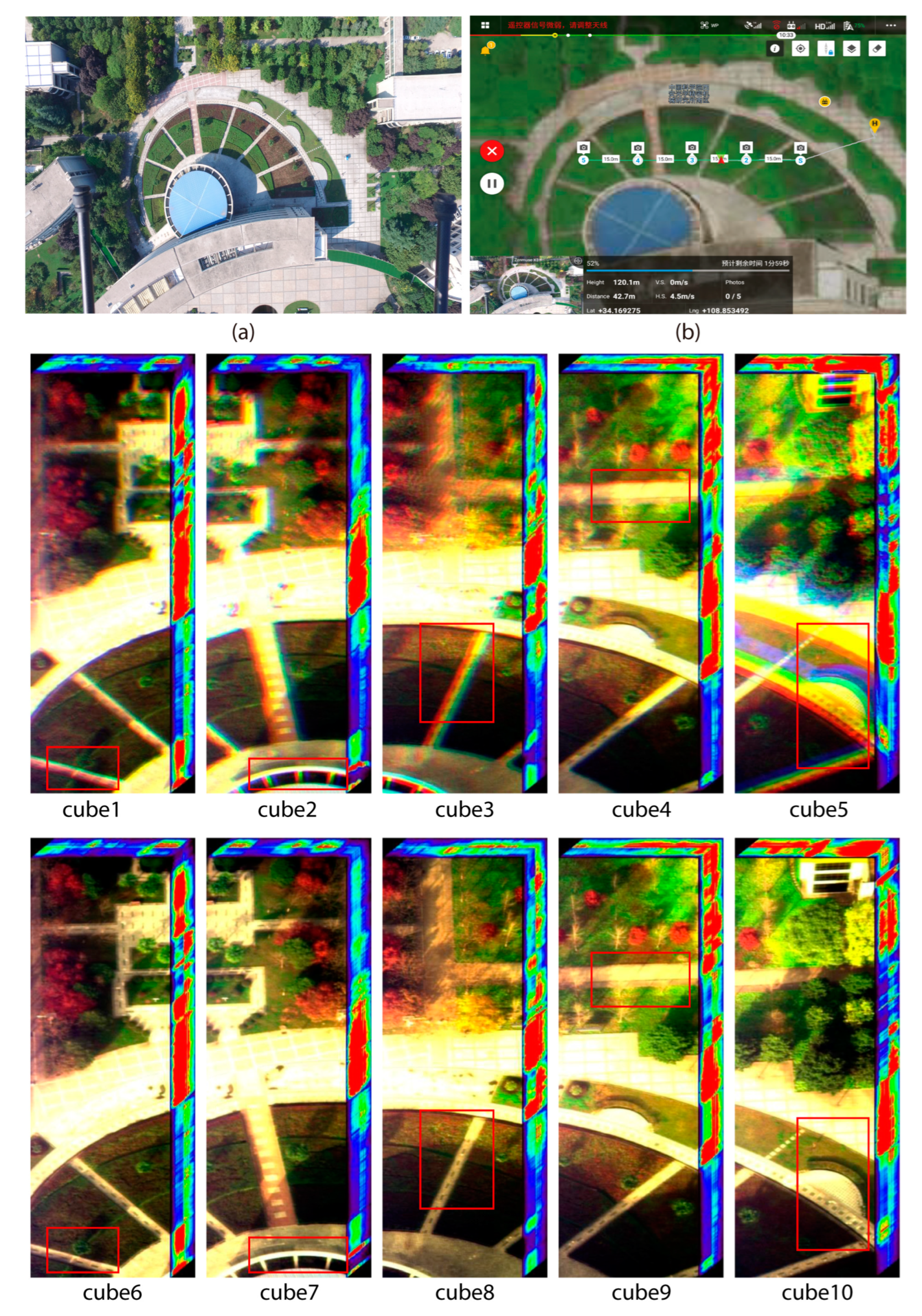

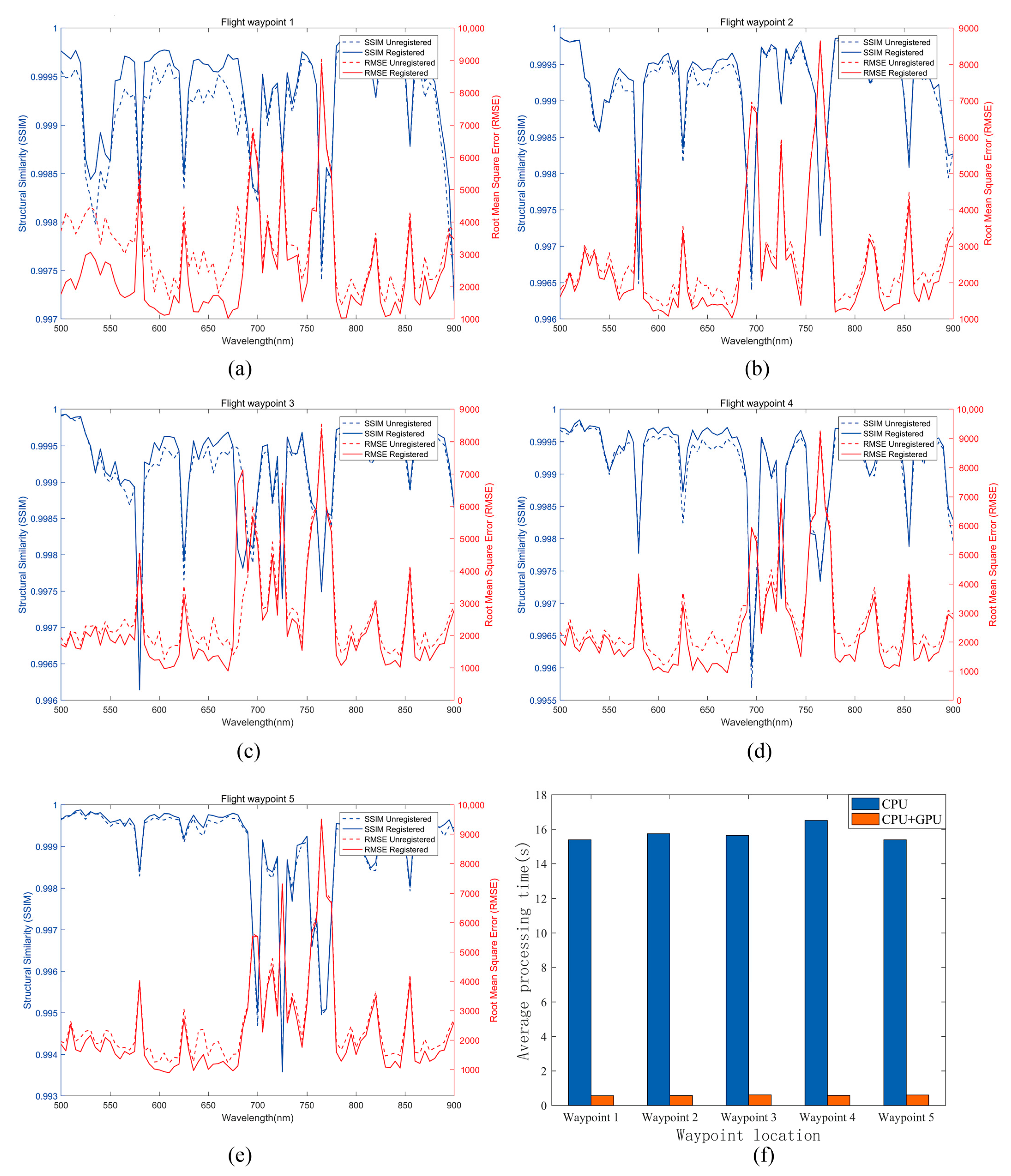

4.4. Real-Time Processing of the Remote Sensing Imaging Registration Experiment for Unmanned Aerial Vehicles

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Li, X.; Ai, W.; Feng, R.; Luo, S. Survey of remote sensing image registration based on deep learning. Natl. Remote Sens. Bull. 2023, 27, 267–284.
2. Jiang, J.; Shi, X. A robust point-matching algorithm based on integrated spatial structure constraint for remote sensing image registration. IEEE Geosci. Remote Sens. Lett. 2016, 13, 1716–1720.
3. Yang, Z.; Dan, T.; Yang, Y. Multi-temporal remote sensing image registration using deep convolutional features. IEEE Access 2018, 6, 38544–38555.
4. Mo, Y.; Kang, X.; Duan, P.; Li, S. A robust UAV hyperspectral image stitching method based on deep feature matching. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–14.
5. Ordóñez, A.; Acción, Á.; Argüello, F.; Heras, D.B. HSI-MSER: Hyperspectral image registration algorithm based on MSER and SIFT. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 12061–12072.
6. Chen, H.; Zhang, H.; Du, J.; Luo, B. Unified framework for the joint super-resolution and registration of multiangle multi/hyperspectral remote sensing images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 2369–2384.
7. Lu, B.; Dao, P.D.; Liu, J.; He, Y.; Shang, J. Recent advances of hyperspectral imaging technology and applications in agriculture. Remote Sens. 2020, 12, 2659.
8. Syed Taimoor Hussain Shah, S.A.Q.; ul Rehman, A. Classification and Segmentation Models for Hyperspectral Imaging: An Overview. In Intelligent Technologies and Applications; Springer: Cham, Switzerland, 2021; Volume 1382.
9. Wang, J.; Ding, N.; Zheng, Y.; Zhao, Y.; Gao, F.; Li, J.; Wang, J.; Gao, M.; Wu, J. Overall design technology of hyperspectral imaging system based on AOTF. In Proceedings of the International Symposium on Optoelectronic Technology and Application 2014: Imaging Spectroscopy; and Telescopes and Large Optics, Beijing, China, 18 November 2014.
10. Nag, S.; Hewagama, T.; Georgiev, G.T.; Pasquale, B.; Aslam, S.; Gatebe, C.K. Multispectral snapshot imagers onboard small satellite formations for multi-angular remote sensing. IEEE Sens. J. 2017, 17, 5252–5268.
11. Jaiswal, B.; Singh, S.; Jain, A.; Sankarasubramanian, K.; Nandi, A. AOTF based spectro-polarimeter for observing Earth as an Exoplanet. arXiv 2023, arXiv:2302.10712.
12. Sharikova, M.O.; Balandin, I.A.; Batshev, V.I.; Kozlov, A.B. Spatial and spectral correction of an acousto-optical imaging spectrometer. J. Opt. Technol. 2023, 90, 684–690.
13. Zhang, H.; Zhao, H. Accurate design of a TeO2 noncollinear acousto-optic tunable filter with refractive index correction. Opt. Lett. 2023, 48, 3395–3398.
14. Zhao, H.; Cheng, C.; Guo, Q.; Ma, R.; Yang, Y. Analysis of phase mismatch for mercurous bromide-based non-collinear AOTF design in spectral imaging applications. Materials 2024, 17, 1703.
15. Yu, K.; Guo, Q.; Li, N.; Cheng, C.; Zhao, H. Spectral calibration method for mid-infrared AOTF imagers. Infrared Laser Eng. 2023, 52, 20230291.
16. Liu, H.; Hou, X.; Hu, B.; Yu, T.; Zhang, Z.; Liu, X.; Liu, J.; Wang, X.; Zhong, J.; Tan, Z. Image blurring and spectral drift in imaging spectrometer system with an acousto-optic tunable filter and its application in UAV remote sensing. Int. J. Remote Sens. 2022, 43, 6957–6978.
17. Tondewad, P.S.; Dale, M.P. Remote sensing image registration methodology: Review and discussion. Procedia Comput. Sci. 2020, 171, 2390–2399.
18. Li, L.; Han, L.; Ding, M.; Cao, H.; Hu, H. A deep learning semantic template matching framework for remote sensing image registration. ISPRS J. Photogramm. Remote Sens. 2021, 181, 205–217.
19. Ruiqi, L.; Bowu, Y.; Dou, Q.; Yi, L.; Baorui, D.; Shuang, W.; Huarong, J.; Biao, H.; Licheng, J. Deep Global Feature-Based Template Matching for Fast Multi-Modal Image Registration. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; pp. 5457–5460.
20. Ye, Y.; Wang, M.; Hao, S.; Zhu, Q. A novel keypoint detector combining corners and blobs for remote sensing image registration. IEEE Geosci. Remote Sens. Lett. 2021, 18, 451–455.
21. Wu, G.-L.; Chang, H.-H. An accurate feature point matching algorithm for automatic remote sensing image registration. In Proceedings of the International Conference on Digital Image Computing: Techniques and Applications (DICTA), Sydney, Australia, 29 November–1 December 2017.
22. Zhang, T.; Zhao, R.; Chen, Z. Application of migration image registration algorithm based on improved SURF in remote sensing image mosaic. IEEE Access 2020, 8, 163637–163645.
23. Chen, S.; Zhong, S.; Xue, B.; Li, X.; Zhao, L.; Chang, C.-I. Iterative scale-invariant feature transform for remote sensing image registration. IEEE Trans. Geosci. Remote Sens. 2021, 59, 3244–3265.
24. Jhan, J.-P.; Rau, J.-Y. A generalized tool for accurate and efficient image registration of UAV multi-lens multispectral cameras by N-SURF matching. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 6353–6362.
25. Wu, Y.; Xiao, Z.; Liu, S.; Miao, Q.; Ma, W.; Gong, M.; Xie, F.; Zhang, Y. A two-step method for remote sensing images registration based on local and global constraints. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 5194–5206.
26. Ordóñez, Á.; Argüello, F.; Heras, D.B. GPU accelerated FFT-based registration of hyperspectral scenes. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 4869–4878.
27. Liu, Y.; Cao, H.; Zhao, Y.; He, Q.; Yang, Y.; Wang, L.; Lin, G.; Zhou, J. A remote sensing image registration algorithm based on multiple constraints and a variational Bayesian framework. Remote Sens. Lett. 2021, 12, 296–305.
28. Zhou, C.; Zhang, G.; Yang, Z.; Zhou, J. A novel image registration algorithm using wavelet transform and matrix-multiply discrete Fourier transform. IEEE Geosci. Remote Sens. Lett. 2022, 19, 8002605.
29. Lee, W.; Sim, D.; Oh, S.-J. A CNN-based high-accuracy registration for remote sensing images. Remote Sens. 2021, 13, 1482.
30. Zeng, Y.; Ning, Z.; Liu, P.; Luo, P.; Zhang, Y.; He, G. A mosaic method for multi-temporal data registration by using convolutional neural networks for forestry remote sensing applications. Computing 2020, 102, 795–811.
31. Ye, Y.; Tang, T.; Zhu, B.; Yang, C.; Li, B.; Hao, S. A multiscale framework with unsupervised learning for remote sensing image registration. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5622215.
32. Ying, C.; Lei, C.; Qi, Z.; Wei, W.; Jiahao, W. Improved remote sensing image registration of residual block densely connected network based on reinforcement learning. In Proceedings of the 6th International Conference on Intelligent Informatics and Biomedical Sciences (ICIIBMS), Oita, Japan, 25–27 November 2021.
33. Fernández-Fabeiro, J.; Gonzalez-Escribano, A.; Llanos, D.R. Distributed programming of a hyperspectral image registration algorithm for heterogeneous GPU clusters. J. Parallel Distrib. Comput. 2021, 151, 86–93.
34. Liu, Y.; Zhou, Y.; Zhou, Y.; Ma, L.; Wang, B.; Zhang, F. Accelerating SAR image registration using swarm-intelligent GPU parallelization. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 5694–5703.
35. Ordóñez, Á.; Heras, D.B.; Argüello, F. Multi-GPU registration of high-resolution multispectral images using HSI-KAZE in a cluster system. In Proceedings of the 2022 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Kuala Lumpur, Malaysia, 17–22 July 2022.
36. Zhang, X.; Zhao, X. High-precision registration algorithm and parallel design method for high-resolution optical remote sensing images. Int. J. Pattern Recognit. Artif. Intell. 2021, 35, 2154020.
37. Jiang, Q.; Qiu, Y.; Wen, Y.; Wang, H.; Xu, W. Design of data acquisition system for AOTF polarization spectral imaging instrument. Infrared Laser Eng. 2012, 41, 218–222.
38. Liu, H.; Yu, T.; Hu, B.; Hou, X.; Zhang, Z.; Liu, X.; Liu, J.; Wang, X.; Zhong, J.; Tan, Z.; et al. UAV-borne hyperspectral imaging remote sensing system based on acousto-optic tunable filter for water quality monitoring. Remote Sens. 2021, 13, 4069.
39. Jiachun, W.; Dapeng, Z.; Xianghua, D.; Qichao, W.; Zhigang, L. Design and experiment of hyper-spectral polarization imaging system based on AOTF. Infrared Laser Eng. 2017, 46, 1136002.
40. Ryu, S.Y.; You, J.-W.; Kwak, Y.; Kim, S. Design of a prism to compensate the image-shifting error of the acousto-optic tunable filter. Opt. Express 2008, 16, 17138–17147.
41. Ren, Y.; Cai, H.; Tan, J.; Tan, Y.; Zhang, X.; Zheng, F.; Ma, W. Imaging drift of acousto-optic modulator spectral camera. Chin. Opt. 2013, 6, 179–186.
42. Suhre, D.R.; Theodore, J.G. White-light imaging by use of a multiple passband acousto-optic tunable filter. Appl. Opt. 1996, 35, 4494–4501.
43. Feng, R.; Du, Q.; Luo, H.; Shen, H.; Li, X.; Liu, B. A registration algorithm based on optical flow modification for multi-temporal remote sensing images covering the complex-terrain region. Natl. Remote Sens. Bull. 2021, 25, 630–640.
44. Horn, B.K.P.; Schunck, B.G. Determining optical flow. Artif. Intell. 1981, 17, 185–203.
45. Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011.
46. Tang, M.; Liang, K.; Qiu, J. Small insulator target detection based on multi-feature fusion. IET Image Proc. 2023, 17, 1520–1533.
47. Lei, S.; Zhu, F. UAV remote sensing image registration algorithm based on ORB and improved RANSAC. J. Nat. Sci. Heilongjiang Univ. 2020, 37, 8.
48. Zhang, G.; Guo, L.; Xiong, B.; Chu, J. Active demons algorithm based on multi-resolution and adaptive fractional differential. J. Comput. Res. Dev. 2018, 55, 2753–2763.
49. Chen, Q.; Liu, J.; Tang, Z.-H.; Li, J.-Q.; Wu, M. Detection and extraction of image edge curves and detailed features using fractional differentiation. Acta Electron. Sin. 2013, 41, 1873–1880.
50. Thirion, J.P. Image matching as a diffusion process: An analogy with Maxwell’s demons. Med. Image Anal. 1998, 2, 243–260.
51. Wang, H.; Dong, L.; O’Daniel, J.; Mohan, R.; Garden, A.S.; Ang, K.K.; Kuban, D.A.; Bonnen, M.; Chang, J.Y.; Cheung, R. Validation of an accelerated ‘demons’ algorithm for deformable image registration in radiation therapy. Phys. Med. Biol. 2005, 50, 2887.
52. Santos-Ribeiro, A.; Nutt, D.J.; McGonigle, J. Inertial demons: A momentum-based diffeomorphic registration framework. In Medical Image Computing and Computer-Assisted Intervention-MICCAI 2016; Ourselin, S., Joskowicz, L., Sabuncu, M., Unal, G., Wells, W., Eds.; Springer Nature: Cham, Switzerland, 2016; pp. 37–45.
53. Peng, M.; Li, G.; Zhou, X.; Ma, C.; Zhang, L.; Zhang, X.; Shang, K. A registration-error-resistant swath reconstruction method of ZY1-02D satellite hyperspectral data using SRE-ResNet. Remote Sens. 2022, 14, 5890.
54. Gu, Y.; Wang, C.; Li, X. An intensity-independent stereo registration method of push-broom hyperspectral scanner and LiDAR on UAV platforms. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5540014.
55. Bin, J.; Zhang, H.; Bahrami, Z.; Zhang, R.; Liu, H.; Blasch, E.; Liu, Z. The registration of visible and thermal images through multi-objective optimization. Inf. Fusion 2023, 95, 186–198.
56. Wang, S.; Fan, F. Thangka hyperspectral image super-resolution based on a spatial-spectral integration network. Remote Sens. 2023, 15, 3603.
57. Guo, A.; Dian, R.; Li, S. A deep framework for hyperspectral image fusion between different satellites. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 7939–7954.
58. Fan, Y.G.; Chai, J.; Xu, M.; Wang, B.; Hou, Q. Improved fast Image registration algorithm based on ORB and RANSAC fusion. Opt. Precis. Eng. 2019, 27, 702–717.
59. Ordóñez, Á.; Argüello, F.; Heras, D.B.; Demir, B. GPU-accelerated registration of hyperspectral images using KAZE features. J. Supercomput. 2020, 76, 9478–9492.
60. Yan, Q.; Li, Q.; Zhang, T. Research on UAV Image Mosaic Based on Improved AKAZE Feature and VFC Algorithm. In Proceedings of the 2021 6th International Conference on Multimedia and Image Processing, Zhuhai, China, 8–10 January 2021.
61. He, L.; Liu, J.; Li, G. Fast image registration approach based on improved BRISK. Infrared Laser Eng. 2014, 43, 2722–2727.
62. Zhang, D.; Huang, H.; Shang, Z. Nonrigid image registration algorithm based on mutual information active demons. Laser Optoelectron. Prog. 2020, 57, 161009.
63. Sun, W.; Niessen, W.J.; Klein, S. Randomly perturbed B-splines for nonrigid image registration. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1401–1413.
64. Zhang, Y.; Liu, Y. Study of image registration system based on FAST feature. Comput. Eng. Appl. 2016, 52, 167–170.
65. Hwooi, S.K.W.; Sabri, A.Q.M. Enhanced correlation coefficient as a refinement of image registration. In Proceedings of the IEEE International Conference on Signal and Image Processing Applications (ICSIPA), Kuching, Malaysia, 12–14 September 2017.
66. Wu, Y.; Ma, W.; Gong, M.; Su, L.; Jiao, L. A novel point-matching algorithm based on fast sample consensus for image registration. IEEE Geosci. Remote Sens. Lett. 2015, 12, 43–47.
67. Sedaghat, A.; Mohammadi, N. High-resolution image registration based on improved SURF detector and localized GTM. Int. J. Remote Sens. 2019, 40, 2576–2601.
68. Chang, H.-H.; Wu, G.-L.; Chiang, M.-H. Remote sensing image registration based on modified SIFT and feature slope grouping. IEEE Geosci. Remote Sens. Lett. 2019, 16, 1363–1367.
69. Ordóñez, Á.; Heras, D.B.; Argüello, F. SURF-based registration for hyperspectral images. In Proceedings of the IGARSS 2019-2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019.

| Component | Parameter | Specification | Component | Parameter | Specification |
|---|---|---|---|---|---|
| AOTF filter (SGL30-V-12LE) | Wavelength | 400–1000 nm | Objective lens (M112FM16) | Focal length | 16 mm |
| | FWHM | ≤8 nm | | Image plane | 1/1.2″ |
| | Diffraction efficiency | ≥75% | | Aperture | F2.0–F16.0 |
| | Separation angle | ≥4° | Collimating lens (V5014-MP) | Focal length | 50 mm |
| | Aperture angle | ≥3.6° | | Image plane | 1″ |
| | Primary deflection angle | ≥2.17° | | Aperture | F1.4–F16.0 |
| | Optical aperture | 12 × 12 mm | Linear polarizer (R5000490667) | Wavelength range | 300–2700 nm |
| AOTF driver | Frequency range | 43–156 MHz | | Extinction ratio | >800:1 |
| | Stability frequency | 10 Hz | | Size | 25.4 mm |
| | Frequency resolution | 0.1 MHz | CMOS camera (MV-CA050-20UM) | Detector | PYTHON5000 |
| Motorized zoom lens (EL-16-40-TC-VIS-5D) | Aperture | 16 mm | | Pixel size | 4.8 × 4.8 μm |
| | Response time | 5 ms | | Resolution | 2592 × 2048 |
| | Focal range | −10 to +10 diopters | | Interface | USB 3.0 |

| Algorithms | Image Datasets | | | | | |
|---|---|---|---|---|---|---|
| | Roofs | Tower | Grassland | Parking | Trees | Water |
| VGG16 [3] | 0.1969 | 0.1058 | 0.1478 | 0.1925 | 0.0185 | 0.0967 |
| ORB [58] | 0.1740 | 0.1116 | 0.1093 | 0.1531 | 0.0550 | 0.0819 |
| KAZE [59] | 0.1741 | 0.0766 | 0.1787 | 0.1511 | 0.0064 | 0.0861 |
| AKAZE [60] | 0.1743 | 0.0772 | 0.1914 | 0.1505 | 0.0360 | 0.0851 |
| BRISK [61] | 0.1747 | 0.1053 | 0.1823 | 0.1502 | 0.0036 | 0.0797 |
| Demons [62] | 0.4500 | 0.4172 | 0.4868 | 0.4005 | 0.4006 | 0.3485 |
| FFD [63] | 0.1353 | 0.1319 | 0.3013 | 0.1279 | 0.1032 | 0.1510 |
| FAST [64] | 0.1742 | 0.0913 | 0.1825 | 0.1528 | 0.0453 | 0.0874 |
| ECC [65] | 0.5759 | 0.4210 | 0.5515 | 0.3294 | 0.3855 | 0.2110 |
| SIFT-FSC [66] | 0.1660 | 0.1556 | 0.3295 | 0.2227 | 0.1518 | 0.1921 |
| SURF-GTM [67] | 0.1662 | 0.1514 | 0.3343 | 0.2180 | 0.0716 | 0.1390 |
| SIFT [68] | 0.1724 | 0.0488 | 0.0620 | 0.1429 | 0.0637 | 0.0916 |
| SURF [69] | 0.1719 | 0.0635 | 0.1915 | 0.1507 | 0.0029 | 0.0869 |
| Proposed | 0.6661 | 0.7046 | 0.7281 | 0.6379 | 0.7557 | 0.5587 |

| Algorithms | Image Datasets | | | | | |
|---|---|---|---|---|---|---|
| | Roofs | Tower | Grassland | Parking | Trees | Water |
| VGG16 [3] | 32.6225 | 22.0038 | 21.2094 | 26.4478 | 20.0595 | 20.3647 |
| ORB [58] | 39.8232 | 24.1672 | 21.2804 | 31.5082 | 18.5401 | 13.0100 |
| KAZE [59] | 39.7697 | 24.6450 | 17.0694 | 31.7053 | 18.5665 | 14.4373 |
| AKAZE [60] | 39.7677 | 24.5997 | 16.9560 | 31.6814 | 18.8502 | 13.6026 |
| BRISK [61] | 39.6348 | 24.2775 | 17.2148 | 31.4204 | 19.2785 | 14.5604 |
| Demons [62] | 24.3949 | 12.1627 | 8.2701 | 16.5976 | 11.6533 | 10.4814 |
| FFD [63] | 26.6226 | 19.9050 | 18.3493 | 26.7028 | 19.4471 | 19.8807 |
| FAST [64] | 39.6481 | 24.6021 | 17.0724 | 31.5625 | 16.9122 | 14.3712 |
| ECC [65] | 14.9568 | 18.9901 | 17.0026 | 25.2498 | 15.7109 | 20.8675 |
| SIFT-FSC [66] | 43.5612 | 23.7221 | 19.7786 | 33.0675 | 18.8597 | 22.7754 |
| SURF-GTM [67] | 43.8527 | 23.7213 | 20.0389 | 33.6706 | 20.7168 | 23.0848 |
| SIFT [68] | 39.3893 | 29.7595 | 22.6991 | 30.6268 | 17.0580 | 13.8813 |
| SURF [69] | 39.6625 | 25.3289 | 17.1490 | 31.5230 | 19.0615 | 13.7371 |
| Proposed | 15.6390 | 6.6643 | 4.4905 | 11.7514 | 5.2154 | 4.2349 |

| Algorithms | Image Datasets | | | | | |
|---|---|---|---|---|---|---|
| | Roofs | Tower | Grassland | Parking | Trees | Water |
| VGG16 [3] | 1.1146 | 1.1160 | 0.9283 | 1.2110 | 0.3776 | 1.4651 |
| ORB [58] | 0.9307 | 1.1555 | 1.2037 | 1.1815 | 0.2629 | 1.6509 |
| KAZE [59] | 0.9566 | 1.1506 | 1.2057 | 1.1816 | 0.4022 | 1.7132 |
| AKAZE [60] | 0.9561 | 1.1509 | 1.2234 | 1.1816 | 0.3923 | 1.7153 |
| BRISK [61] | 0.9555 | 1.1923 | 1.2131 | 1.1801 | 0.3954 | 1.6833 |
| Demons [62] | 2.2066 | 2.5214 | 2.3533 | 2.2706 | 1.9019 | 2.4924 |
| FFD [63] | 1.1860 | 1.1899 | 1.1016 | 1.1602 | 0.4298 | 1.5313 |
| FAST [64] | 0.9548 | 0.7090 | 1.1472 | 1.2116 | 0.4442 | 1.6514 |
| ECC [65] | 1.9540 | 1.6585 | 1.4887 | 1.4983 | 0.8823 | 1.6697 |
| SIFT-FSC [66] | 0.9963 | 1.2388 | 1.1978 | 1.2059 | 0.5592 | 1.6172 |
| SURF-GTM [67] | 0.9886 | 1.2687 | 1.1945 | 1.2030 | 0.4320 | 1.5586 |
| SIFT [68] | 0.9643 | 0.7810 | 0.7534 | 1.1097 | 0.4581 | 1.7272 |
| SURF [69] | 0.9581 | 1.1029 | 1.2240 | 1.1853 | 0.3679 | 1.7154 |
| Proposed | 2.2073 | 2.5468 | 2.4975 | 2.3240 | 2.0256 | 2.5974 |

| Algorithm | Platform | Image Datasets | | | | | |
|---|---|---|---|---|---|---|---|
| | | Roofs | Tower | Grassland | Parking | Trees | Water |
| FFD | CPU | 2430.85 | 2442.26 | 2434.59 | 2439.99 | 2455.39 | 2447.01 |
| | CPU+GPU | 133.12 | 132.93 | 132.93 | 132.81 | 132.70 | 133.03 |
| Demons | CPU | 19.84 | 21.31 | 21.79 | 20.55 | 21.80 | 21.52 |
| | CPU+GPU | 0.43 | 0.43 | 0.44 | 0.43 | 0.44 | 0.44 |
| FAST | CPU | 1.59 | 2.45 | 7.03 | 3.64 | 2.73 | 3.01 |
| | CPU+GPU | 0.45 | 0.48 | 0.43 | 0.58 | 0.50 | 0.43 |
| ORB | CPU | 0.41 | 0.39 | 0.42 | 0.41 | 0.39 | 0.41 |
| | CPU+GPU | 0.34 | 0.34 | 0.34 | 0.35 | 0.34 | 0.34 |
| SURF | CPU | 2.02 | 2.70 | 3.37 | 2.02 | 4.27 | 1.57 |
| | CPU+GPU | 0.22 | 0.26 | 0.29 | 0.23 | 0.77 | 0.22 |
| Proposed | CPU | 15.53 | 16.47 | 16.89 | 15.95 | 16.24 | 16.54 |
| | CPU+GPU | 0.52 | 0.49 | 0.50 | 0.49 | 0.50 | 0.49 |
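To make the CPU versus CPU+GPU comparison in the table above easier to read, the short Python sketch below derives the speedup factor (CPU time divided by CPU+GPU time) for the Roofs dataset. The timings are copied from the table; the variable names are hypothetical, and the ratio is unitless, so no assumption about the time unit is needed.

```python
# (CPU time, CPU+GPU time) for the Roofs dataset, copied from the table above.
roofs_times = {
    "FFD": (2430.85, 133.12),
    "Demons": (19.84, 0.43),
    "FAST": (1.59, 0.45),
    "ORB": (0.41, 0.34),
    "SURF": (2.02, 0.22),
    "Proposed": (15.53, 0.52),
}

# Speedup = CPU time / CPU+GPU time.
speedup = {name: cpu / gpu for name, (cpu, gpu) in roofs_times.items()}

for name, ratio in speedup.items():
    print(f"{name:8s} {ratio:6.2f}x")
```

On these numbers the proposed method runs roughly 30 times faster with the GPU (15.53 / 0.52 ≈ 29.9), whereas ORB, which is already fast on the CPU, gains only a factor of about 1.2.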

| Algorithm | Flight Point 3 (Cube 3) | | | | |
|---|---|---|---|---|---|
| | SSIM | RMSE | MI | UIQI | SAM |
| VGG16 [3] | 0.0529 | 124.2055 | 0.3930 | 0.0580 | 0.6589 |
| ORB [58] | 0.2773 | 98.8223 | 1.3868 | 0.3415 | 0.5026 |
| KAZE [59] | 0.2146 | 108.3649 | 1.0637 | 0.2664 | 0.5507 |
| AKAZE [60] | 0.1898 | 110.5435 | 1.1167 | 0.2341 | 0.6031 |
| BRISK [61] | 0.1734 | 122.5900 | 0.6924 | 0.1950 | 0.5437 |
| Demons [62] | 0.3085 | 98.7734 | 1.4259 | 0.3802 | 0.5015 |
| FFD [63] | 0.0971 | 128.3170 | 0.3605 | 0.1071 | 0.5960 |
| FAST [64] | 0.1598 | 103.8626 | 1.0778 | 0.1955 | 0.5517 |
| ECC [65] | 0.1449 | 101.7744 | 1.2016 | 0.1806 | 0.5264 |
| SIFT-FSC [66] | 0.0581 | 119.1905 | 0.5133 | 0.0653 | 0.6890 |
| SURF-GTM [67] | 0.0376 | 124.9966 | 0.3564 | 0.0425 | 0.6706 |
| SIFT [68] | 0.1118 | 140.5192 | 0.1166 | 0.1154 | 0.6221 |
| SURF [69] | 0.0566 | 121.0245 | 0.4621 | 0.0632 | 0.6706 |
| Proposed | 0.3325 | 89.3380 | 1.5146 | 0.3998 | 0.4719 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, H.; Hu, B.; Hou, X.; Yu, T.; Zhang, Z.; Liu, X.; Liu, J.; Wang, X. Real-Time Registration of Unmanned Aerial Vehicle Hyperspectral Remote Sensing Images Using an Acousto-Optic Tunable Filter Spectrometer. Drones 2024, 8, 329. https://doi.org/10.3390/drones8070329
