Abstract

Research on unmanned aerial vehicles (UAVs) for search and rescue (SAR) missions is widespread because UAVs are cost-effective and enhance the security and flexibility of operations. However, a significant challenge arises from the quality of sensors, terrain variability, noise, and the small size of targets in the images and videos the vehicles capture. Generative adversarial networks (GANs), introduced by Ian Goodfellow, and their variations can offer excellent solutions for improving the quality of sensor output through super-resolution, noise removal, and other image processing tasks. To identify new insights and guidance on how to apply GANs to detect living beings in SAR operations, a PRISMA-oriented systematic literature review was conducted to analyze primary studies that explore the usage of GANs for edge or object detection in images captured by drones. The results demonstrate the utilization of GAN algorithms for image enhancement in object detection, along with the metrics employed to validate them. These findings provide insights on how to apply or modify these algorithms to aid in target identification during search stages.

1. Introduction

Search and rescue (SAR) is the process of finding and helping people injured, in danger, or lost, especially in hard-to-reach sites, such as deserts, forests, and crash debris. It is a highly significant field in saving lives in hazardous environments, environmental disasters, and accidents involving both people and animals worldwide [1,2,3,4,5,6,7,8,9]. The use of unmanned aerial vehicles (UAVs) proves to be particularly valuable in these SAR operations [10,11], especially in those specific areas, as this technology offers conveniences such as reduced operation costs and vehicle sizes, agility, safety, remote operation, and the use of sensors calibrated in various light spectra.

Regarding these sensors, one of the primary challenges to address is the quality of the camera, as the steps of mapping, remote sensing, target class identification, visual odometry [12], and UAV positioning rely on the analysis and interpretation of data captured by these sensors. Thus, processing the images generated by UAVs through computer vision algorithms aided by deep learning techniques is a highly important area of investigation in the deployment of these small vehicles, as some images generated by them may suffer from various quality problems and must be corrected to be used later to detect edges, objects, people, and animals in search and rescue scenarios.

The issues presented by sensors include motion blur, generated by the discrepancy between the velocity and instability of the UAV during image capture; the quality of the terrain where the image was captured; the distance from the targets (the farther away, the more difficult the identification process); video noise; and artifacts generated by camera quality, among others.

Several studies have proposed algorithms to address these quality issues in UAV sensors. Ref. [13] proposed a method for removing non-uniform motion blur from multiple blurry images by addressing images blurred by unknown, spatially varying motion blur kernels, caused by different relative motions between the camera and the scene. Ref. [14] proposed a novel motion deblurring framework that addresses challenges in image deblurring, particularly in handling complex real-world blur scenarios and avoiding the over- and under-estimation of blur, which can leave restored images blurred or introduce unwanted distortion. They used BSDNet to disentangle blur features from blurry images, model motion blur, synthesize blurry images based on extracted blur features, and demonstrate generalization and adaptability in handling different blur types. Regarding noise, Ref. [15] reviewed several techniques, using the PSNR and SSIM among other metrics to evaluate the studies under analysis. To tackle brightness and contrast in images, Ref. [16] introduced a method called Brightness Preserving Dynamic Histogram Equalization (BPDHE), which enhances image contrast while keeping the original brightness levels. By dividing the image’s histogram and adjusting different parts separately, BPDHE improves image quality without making some areas too bright or too dark. Ref. [17] handled super-resolution enhancement without using artificial intelligence. That work covers many methods used to reconstruct high-resolution images from low-resolution inputs, such as iterative algorithms, image registration, adaptive filtering, and image interpolation. It addresses the Stochastic SR approach, the adaptive filtering approach, the Iterative Back-Projection (IBP) method, and other signal processing techniques.
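Since the PSNR recurs as an evaluation metric in the works cited above, a minimal sketch of its standard definition may help. The function name `psnr`, the 8-bit peak value of 255, and the toy images are assumptions made for illustration; none of the cited studies' code is reproduced here.

```python
import numpy as np

def psnr(reference: np.ndarray, restored: np.ndarray, max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio, in dB, between two images of equal shape."""
    reference = reference.astype(np.float64)
    restored = restored.astype(np.float64)
    mse = np.mean((reference - restored) ** 2)  # mean squared error
    if mse == 0.0:
        return float("inf")  # identical images: no noise at all
    return float(10.0 * np.log10(max_value ** 2 / mse))

# Toy example (assumed data): a flat grey image and a copy offset by 5 levels.
clean = np.full((4, 4), 100, dtype=np.uint8)
noisy = np.full((4, 4), 105, dtype=np.uint8)
print(f"PSNR: {psnr(clean, noisy):.2f} dB")
```

Higher values indicate that the restored image is closer to the reference. SSIM is omitted here because it involves local windowed statistics and is better taken from an established library.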

Generative adversarial network (GAN) [18] solutions have shown interesting results in addressing image enhancement, as some variations of this algorithm can generate new high-quality images from degraded ones [18,19,20,21,22,23,24,25,26]. Ref. [27] conducted a review of these GAN-based techniques addressing super-resolution, also using the PSNR and SSIM to evaluate and compare results among important datasets, including people and animal data, but they did not address the SAR context or target detection in their study. GAN algorithms can create new images from a latent space [28], based on a specific training dataset. This approach differs from algorithms that do not rely on artificial intelligence, since the latter use filters to improve the image and do not necessarily create new data in the process. With this ability to generate new data, GANs have great potential to replace some of these filters, generating new and improved images from low-resolution ones. Finally, with these improved images, one can use other algorithms, such as YOLO and Faster R-CNN, to detect objects, edges, people, and animals, which can be very useful in search and rescue operations.
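For reference, the adversarial training described above is usually written as the minimax objective of the original GAN formulation. The symbols are the conventional ones and do not appear elsewhere in this review: $G$ is the generator, $D$ the discriminator, $z$ a latent vector drawn from a prior $p_z$, and $p_{\text{data}}$ the distribution of the training images.

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]
$$

The variants discussed in this review typically add task-specific terms (for example, reconstruction or perceptual losses) to this base objective.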

Considering the applicability of GAN networks for image enhancement, we conducted a systematic literature review focused on the utilization of GAN algorithms for improvements in target detection in images captured by UAVs, aiming to gain insights into the techniques and metrics employed in this task and potential adaptations for search and rescue applications.

2. Research Method

2.1. Research Definition

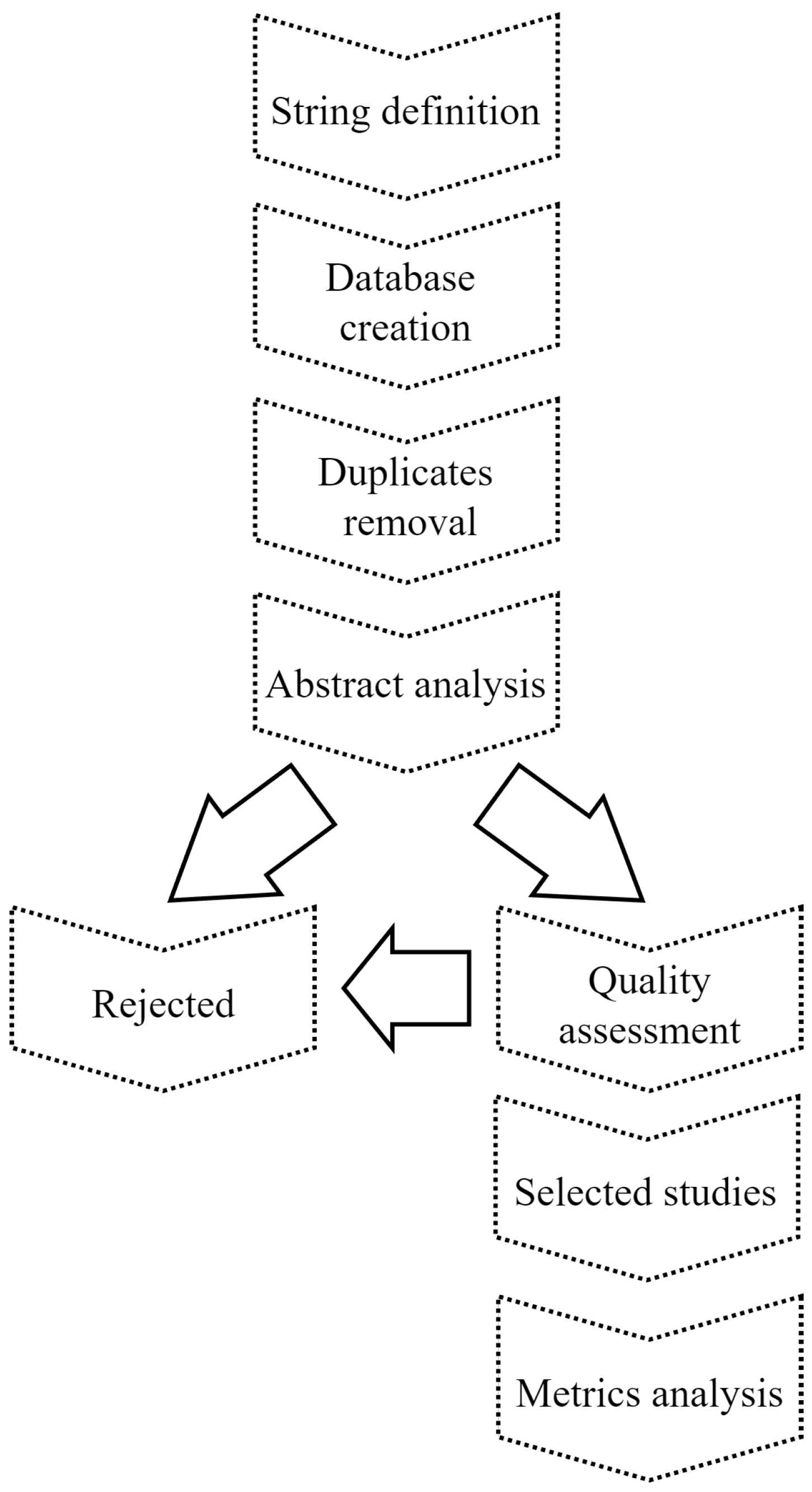

Our research was divided into three parts, as depicted in Figure 1. To begin, we formulated the following research question.

- How can GAN algorithms help detect edges or objects in images generated by UAVs?

Figure 1.

Research overview for this systematic review.

To offer additional guidance for our analysis, particularly concerning metrics and the utilization of pre-trained models within the studies under scrutiny, we put forth the following supplementary questions:

- How can GANs be applied in search and rescue (SAR) operations?

- What benefits are gained from using a pre-trained model rather than training one from scratch?

- Which metrics are most suitable for validating these algorithms?

These questions served as guiding beacons, assisting in delineating the scope of our study analysis.

To study the role of generative adversarial networks (GANs) in aiding the detection of individuals and wildlife in search and rescue operations, we formulated a generic search string for edge and object detection in images generated by unmanned aerial vehicles (UAVs), intending to capture the broadest possible range of results in the application area of GANs for target detection in UAV-generated images. Below is the chosen search string tailored for this purpose.

- (“edge detection” OR “object detection”) AND (uav OR drones) AND (gan OR “generative adversarial networks”)
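To make the logic of the search string explicit, the sketch below evaluates the same boolean expression against a title or abstract. The function name `matches_search_string` and the sample titles are hypothetical, and real databases such as Scopus and IEEE Xplore apply their own tokenization and stemming, so this only approximates their behavior.

```python
import re

def matches_search_string(text: str) -> bool:
    """Return True if `text` satisfies the review's boolean search string."""
    lowered = text.lower()
    words = set(re.findall(r"[a-z0-9]+", lowered))  # whole-word tokens
    task = "edge detection" in lowered or "object detection" in lowered
    platform = "uav" in words or "drones" in words
    method = "gan" in words or "generative adversarial networks" in lowered
    return task and platform and method

# Hypothetical titles, for illustration only.
print(matches_search_string("Object detection in UAV images using a GAN"))
print(matches_search_string("Object detection in UAV images using YOLO"))
```

Matching `uav` and `gan` as whole words avoids false hits inside unrelated words such as "organ".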

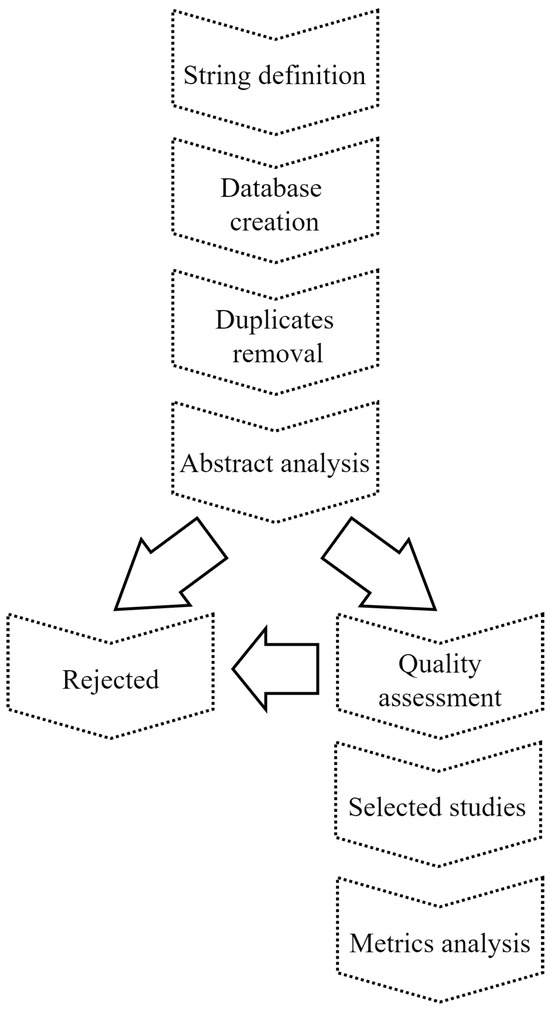

The subsequent phase followed the systematic methodology supported by Parsifal [29]. Figure 2 depicts the sequential progression followed during this stage.

Figure 2.

Flowchart illustrating the step-by-step process followed in the study analysis. Studies were scrutinized.

Table 1 summarizes the implementation of the PICOC framework in this review.

Table 1.

PICOC framework.

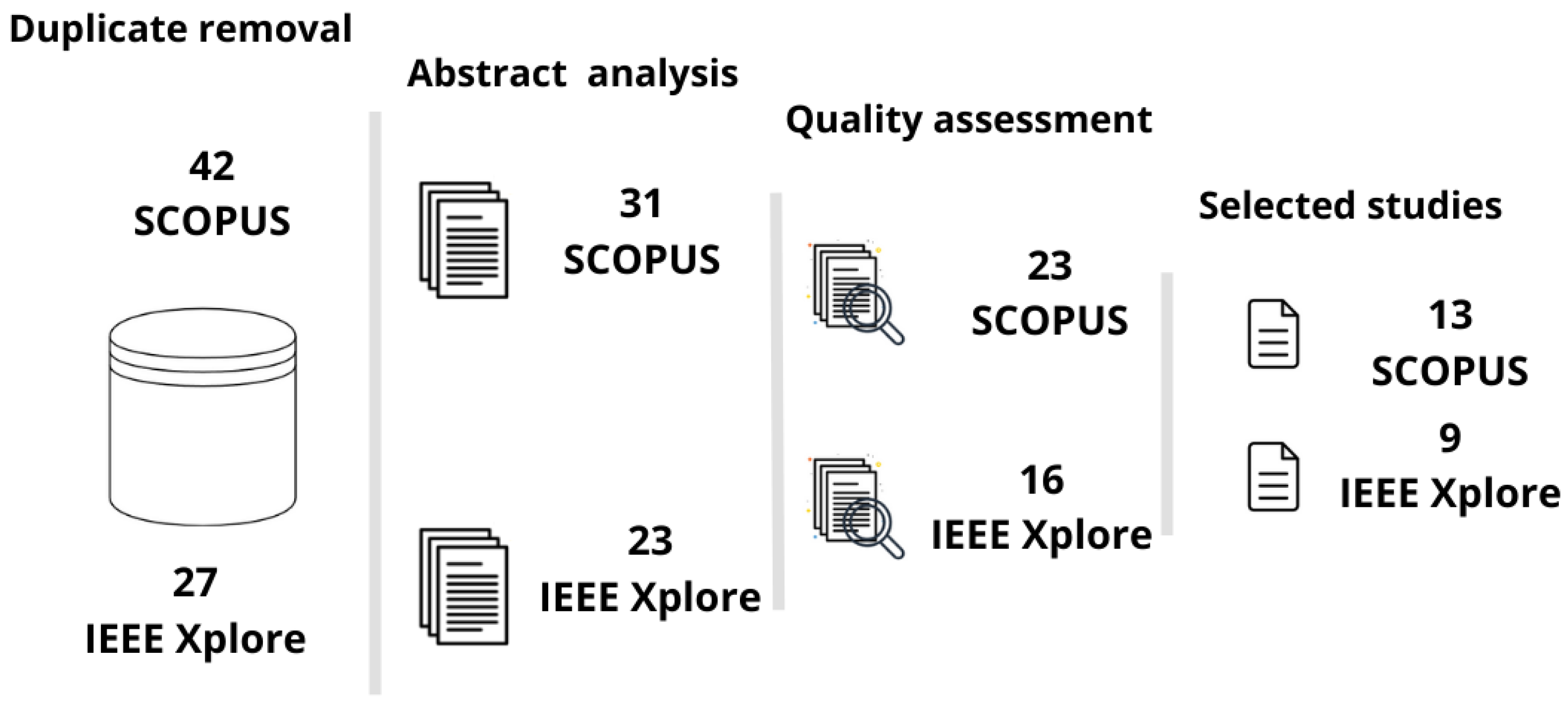

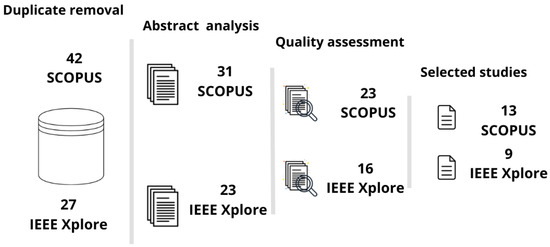

The search string yielded 42 results from Scopus and 27 from the IEEE Xplore databases. An outline of the study selection and quality assessment process is illustrated in Figure 3.

Figure 3.

Study selection process. After removing duplicates and secondary studies, 31 articles from the Scopus database and 23 from IEEE Xplore were subjected to abstract analysis. Subsequently, 23 from Scopus and 16 from IEEE Xplore were selected for quality assessment, while the remainder were rejected. Finally, 13 studies from Scopus and 9 from IEEE Xplore were selected for the evaluation of the metrics presented in validating the tools used for enhancing and detecting objects in images generated by UAVs.
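The per-stage counts reported above can be tallied with a few lines of arithmetic. The dictionary layout and stage labels are chosen for illustration; the numbers are those stated in the text and in the caption of Figure 3.

```python
# Papers remaining at each stage, per database, as reported in the text.
stages = {
    "retrieved": {"Scopus": 42, "IEEE Xplore": 27},
    "abstract analysis": {"Scopus": 31, "IEEE Xplore": 23},
    "quality assessment": {"Scopus": 23, "IEEE Xplore": 16},
    "metric evaluation": {"Scopus": 13, "IEEE Xplore": 9},
}

# Combined totals per stage: 69, 54, 39, and 22 papers.
totals = {stage: sum(counts.values()) for stage, counts in stages.items()}
for stage, total in totals.items():
    print(f"{stage}: {total}")
```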

2.2. Abstract Analysis

After removing duplicate entries and secondary works during the initial screening, we assessed the abstracts of the remaining papers to make preliminary selections, concentrating on the posed research questions. Table 2 outlines the criteria utilized for rejecting papers at this stage.

Table 2.

Exclusion criteria.

Five documents obtained from the Scopus database were excluded because they only contained informational notes from conference guides and did not present any significant studies. The following three articles were removed under criterion EX1: Ref. [30] addresses the application of GANs in protecting private images, given the growing volume of images collected on IoT devices; Ref. [31] addresses concerns regarding security and reliability in deep learning models, aimed at industrial cyber-physical systems (ICPSs); Ref. [32] addresses a two-stage insulator defect detection method based on Mask R-CNN (mask region-based convolutional neural network), focusing on the use of unmanned aerial vehicles (UAVs) in the inspection of electrical power systems. Ref. [33] was removed according to EX2, given that it is not a primary study but a secondary one: it reviews different techniques (including GANs) used to identify vehicles in images generated by UAVs.

The following studies were removed regarding EX3. Ref. [34] discusses the automatic landing of UAVs in unknown environments based on the perception of 3D environments. However, the algorithm uses Random Forest and not a GAN. Ref. [35] augmented the GAN training dataset by adding transformed images with increased realism, using the PTL technique to deal with the degradation difference between the real and virtual training images. Edge or object detection is not mentioned in the article, just the improvement of the dataset. Ref. [36] details investigations into the detection of electromagnetic interference through spoofing in UAV GPSs.

2.3. Quality Assessment

The incorporation of a GAN is a requisite in our dataset; studies lacking its integration were excluded during the quality assessment process. Our evaluation of quality was conducted under the inclusion criteria outlined in Table 3.

Table 3.

Inclusion criteria.

In IC1, we evaluate whether the primary emphasis of the GAN solution proposed in the study is on detecting edges or objects, recognizing that numerous works may target alternative applications such as UAV landing or autonomous navigation. Given that our benchmark relies on pre-trained GAN models, IC2 examines whether the study utilizes a pre-trained model or if the authors train one from scratch using a specific dataset. A pivotal aspect of evaluating the study involves scrutinizing the metrics employed to assess the algorithm; thus, IC3 analyzes if the paper provides these metrics for the GAN. IC4 and IC5 enable us to ascertain if the GAN solution presented in the study is trained and/or applied to images in the visible light or infrared spectra, aligning with our benchmark’s focus on these two spectra. In IC6, we verify if the object or edge detection aims to identify people or animals, given our benchmark’s concentration on search and rescue operations. As YOLO stands out as one of the most commonly used tools for real-time object detection, in IC7, we verify if the paper employs any version of YOLO or a complementary tool. Each inclusion criterion (IC) is assigned a score of 0 if the paper fails to meet the criteria or does not mention it in the study, 0.5 if it partially meets the criteria, and 1 if it fully meets the criteria.
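The scoring rule described above amounts to summing seven values, each restricted to 0, 0.5, or 1. The sketch below encodes that rule; the function name `total_score` is hypothetical, and the example scores are those listed for study [54] in Table 4.

```python
ALLOWED_SCORES = {0.0, 0.5, 1.0}  # not met, partially met, fully met

def total_score(ic_scores: list[float]) -> float:
    """Sum the seven inclusion-criterion scores (IC1 to IC7) of one study."""
    if len(ic_scores) != 7:
        raise ValueError("expected exactly seven scores, IC1 to IC7")
    for score in ic_scores:
        if score not in ALLOWED_SCORES:
            raise ValueError(f"invalid score {score}; allowed: 0, 0.5, 1")
    return sum(ic_scores)

# IC1..IC7 for study [54] as listed in Table 4.
print(total_score([1.0, 0.0, 1.0, 1.0, 0.0, 1.0, 0.0]))  # 4.0
```

Validating each value against the allowed set also catches transcription slips, such as a score entered as 5.0 instead of 0.5.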

3. Results

Using the quality assessment framework, our objective was to meticulously review the studies to determine if they achieve the stated objectives of employing GANs for target detection to aid SAR operations. Table 4 presents the scoring outcomes for each study. Initially, our analysis was directed toward verifying the inclusion criteria (IC). Subsequently, we scrutinized studies with a focus on detecting people or animals, followed by those targeting other objects, then those addressing images in the infrared spectrum, then studies utilizing some version of YOLO, and finally those dealing with pre-trained models.

The following studies were excluded during the quality assessment stage, and are, therefore, not included in the results. Ref. [37] was inaccessible via our institutional tools and network; consequently, it was excluded from our review. Studies [38,39] were deemed false positives as they did not mention the utilization of GANs in their paper. Additionally, it was observed that they were authored by the same individuals and focused on similar research themes. Refs. [40,41] were rejected because the primary focus of the research was on creating datasets using GANs, which lies outside the scope of our systematic review.

Table 4.

Results of the quality assessment. “Total” represents the cumulative points assigned to each study based on inclusion criteria. “Base” denotes the source database where the papers were indexed, while “Pub. type” indicates the publication format, distinguishing between journal papers (a) and conference papers (b). “Target” is the kind of object that the study aimed to detect.

| Study | Year | IC1 | IC2 | IC3 | IC4 | IC5 | IC6 | IC7 | Total | Citations | Base | Pub. Type | Target |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| [42] | 2017 | 0.5 | 1.0 | 0.5 | 1.0 | 0.0 | 0.0 | 0.0 | 3.0 | - | IEEE Xplore | b | Roads |

| [43] | 2017 | 0.5 | 0.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 2.5 | - | IEEE Xplore | b | Transmission Lines |

| [44] | 2018 | 0.0 | 0.0 | 1.0 | 1.0 | 0.0 | 1.0 | 0.0 | 3.0 | - | IEEE Xplore | b | Stingrays |

| [45] | 2019 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | - | IEEE Xplore | a | Diverse entities |

| [46] | 2019 | 1.0 | 0.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 3.0 | - | IEEE Xplore | b | Cars |

| [47] | 2019 | 0.0 | 0.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 2.0 | 33 | SCOPUS | a | Diverse entities |

| [48] | 2019 | 1.0 | 0.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 3.0 | 8 | SCOPUS | b | Vehicles |

| [49] | 2019 | 1.0 | 0.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 3.0 | - | IEEE Xplore | b | Vehicles |

| [50] | 2020 | 0.0 | 0.0 | 1.0 | 0.5 | 0.0 | 0.0 | 1.0 | 2.5 | 12 | SCOPUS | a | Markers |

| [51] | 2020 | 0.5 | 1.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 3.5 | 15 | SCOPUS | a | Diverse entities |

| [52] | 2020 | 1.0 | 0.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 3.0 | 7 | SCOPUS | a | Insulators |

| [53] | 2020 | 0.5 | 1.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 3.5 | - | IEEE Xplore | b | Vehicles |

| [54] | 2021 | 1.0 | 0.0 | 1.0 | 1.0 | 0.0 | 1.0 | 0.0 | 4.0 | 5 | SCOPUS | a | Pedestrians |

| [55] | 2021 | 1.0 | 0.0 | 1.0 | 1.0 | 1.0 | 0.0 | 1.0 | 5.0 | 1 | SCOPUS | a | Diverse entities |

| [56] | 2021 | 0.0 | 0.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 2.0 | 23 | SCOPUS | a | Plants |

| [57] | 2021 | 1.0 | 0.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 3.0 | 0 | SCOPUS | a | Diverse entities |

| [58] | 2021 | 0.0 | 0.0 | 1.0 | 1.0 | 0.0 | 0.0 | 1.0 | 3.0 | 26 | SCOPUS | a | Small Entities |

| [59] | 2021 | 0.5 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 1.0 | 2.5 | 10 | SCOPUS | a | Insulators |

| [60] | 2021 | 0.0 | 0.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 2.0 | 6 | SCOPUS | a | Diverse entities |

| [61] | 2021 | 1.0 | 0.0 | 0.0 | 1.0 | 0.0 | 1.0 | 0.0 | 3.0 | - | IEEE Xplore | b | Pedestrians |

| [62] | 2021 | 0.0 | 0.0 | 0.0 | 0.5 | 0.0 | 1.0 | 1.0 | 2.5 | - | IEEE Xplore | b | Living beings |

| [63] | 2022 | 1.0 | 0.0 | 1.0 | 1.0 | 1.0 | 0.0 | 1.0 | 5.0 | 1 | SCOPUS | b | Debris |

| [64] | 2022 | 1.0 | 0.0 | 1.0 | 1.0 | 0.0 | 0.0 | 1.0 | 4.0 | 23 | SCOPUS | a | Pavement cracks |

| [65] | 2022 | 0.0 | 0.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 2.0 | 0 | SCOPUS | b | Transm. Lines defects |

| [66] | 2022 | 1.0 | 0.0 | 1.0 | 1.0 | 0.0 | 0.0 | 1.0 | 4.0 | 8 | SCOPUS | a | Wildfire |

| [67] | 2022 | 0.0 | 0.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 2.0 | 8 | SCOPUS | a | Anomaly Entities |

| [68] | 2022 | 1.0 | 0.0 | 1.0 | 1.0 | 1.0 | 0.0 | 0.0 | 3.0 | 8 | SCOPUS | a | Small drones |

| [69] | 2022 | 1.0 | 0.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 3.0 | 1 | SCOPUS | a | Peach tree crowns |

| [70] | 2022 | 1.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 2.0 | - | IEEE Xplore | b | UAVs |

| [71] | 2022 | 1.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 3.0 | - | IEEE Xplore | b | Human faces |

| [72] | 2022 | 0.0 | 0.0 | 1.0 | 0.0 | 1.0 | 0.0 | 0.0 | 2.0 | - | IEEE Xplore | b | Object distances from UAV |

| [73] | 2022 | 0.0 | 0.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 2.0 | - | IEEE Xplore | b | Diverse entities |

| [74] | 2023 | 1.0 | 0.0 | 1.0 | 1.0 | 0.0 | 0.0 | 1.0 | 4.0 | 1 | SCOPUS | b | Vehicles |

| [75] | 2023 | 0.0 | 0.0 | 1.0 | 1.0 | 0.0 | 0.0 | 1.0 | 3.0 | 2 | SCOPUS | a | Diverse entities |

| [76] | 2023 | 0.5 | 0.0 | 1.0 | 0.0 | 1.0 | 0.0 | 0.0 | 2.5 | - | IEEE Xplore | b | Small entities |

| [77] | 2023 | 1.0 | 0.0 | 1.0 | 1.0 | 1.0 | 0.0 | 1.0 | 5.0 | - | IEEE Xplore | b | Drones |

| [78] | 2023 | 1.0 | 0.0 | 1.0 | 0.5 | 0.0 | 0.0 | 1.0 | 3.5 | - | IEEE Xplore | b | Small objects |

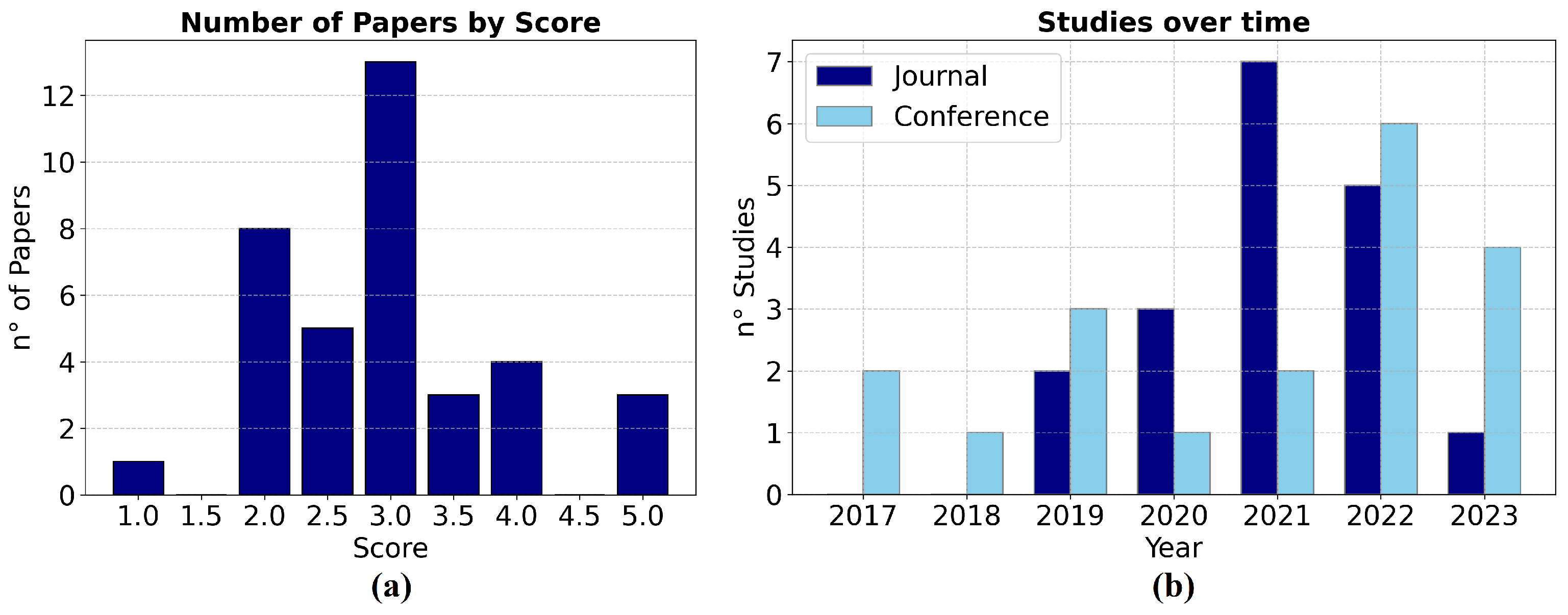

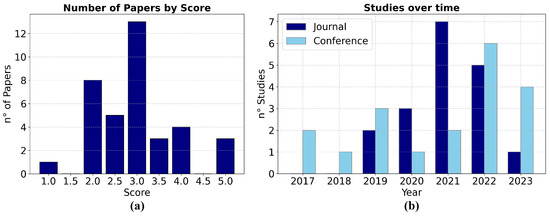

Figure 4a shows a bar chart relating the number of papers per score rate, and Figure 4b relates the number of studies per year and type of publication.

Figure 4.

(a) Studies divided by quality assessment score. (b) Number of studies under analysis over the publication years, classified by journal or conference publication.

3.1. Human and Animal Detection

From the articles focused on the detection of humans and animals, we obtained the following outcomes.

Ref. [54] proposes a weight GAN sub-network to enhance the local features of small targets and introduces sample balance strategies to address the imbalance among training samples, especially between positive and negative samples and between easy and hard samples. The technique targets problems in drone-captured images that can hinder identification, such as drone movement instability, tiny object size, lighting problems, rain, and fog. The study reported improvements in detection performance compared to other methods: 5.46% over Large Scale Images, 3.91% over SRGAN, 3.59% over ESRGAN, and 1.23% over Perceptual GANs. This work would be an excellent reference for addressing issues related to images taken from medium or high altitudes in SAR operations. The authors used accuracy as a metric, comparing its value with those of the SRGAN, ESRGAN, and Perceptual GAN models. Other metrics presented for evaluating the work include the AP (average precision) and AR (average recall).
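As a reminder of what the AP metric measures, the sketch below computes average precision as the area under an interpolated precision-recall curve, in the style of the PASCAL VOC all-point method. It is not taken from Ref. [54], which may use a different protocol (for instance, averaging over several IoU thresholds). The function name `average_precision` and the toy detections are assumptions, and matching each detection to a ground-truth object is assumed to have been done beforehand.

```python
import numpy as np

def average_precision(scores, is_true_positive, num_ground_truth):
    """Area under the interpolated precision-recall curve for one class."""
    order = np.argsort(scores)[::-1]  # rank detections by confidence
    hits = np.asarray(is_true_positive, dtype=np.float64)[order]
    tp = np.cumsum(hits)              # true positives so far
    fp = np.cumsum(1.0 - hits)        # false positives so far
    recall = tp / num_ground_truth
    precision = tp / (tp + fp)
    # Add sentinels, then make precision non-increasing from right to left.
    recall = np.concatenate(([0.0], recall, [1.0]))
    precision = np.concatenate(([0.0], precision, [0.0]))
    for i in range(len(precision) - 2, -1, -1):
        precision[i] = max(precision[i], precision[i + 1])
    # Sum rectangle areas wherever recall increases.
    steps = np.where(recall[1:] != recall[:-1])[0]
    return float(np.sum((recall[steps + 1] - recall[steps]) * precision[steps + 1]))

# Toy example (assumed data): three detections, two ground-truth objects.
ap = average_precision([0.9, 0.8, 0.7], [1, 0, 1], num_ground_truth=2)
print(f"AP = {ap:.4f}")
```

Recall at a given confidence cut-off is simply the `tp / num_ground_truth` term above; average recall summarizes it over several cut-offs or thresholds, depending on the benchmark.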

Ref. [44] used a Faster-RCNN, but for detecting stingrays. The work proposes the application of a GLO model (a variation of a GAN where the discriminator is removed and the model learns to map images to noise vectors by minimizing the reconstruction loss) to enlarge the dataset and thereby improve object detection algorithms. The model used (C-GLO) learns to generate synthetic foreground objects (stingrays) given background patches using a single network, without relying on a pre-trained model for this specific task. In other words, the study utilized a modified GAN network to expand the dataset of stingray images in oceans, considering the scarcity of such images, which complicates the training of classification algorithms. Thus, the dataset was augmented through C-GLO, and the data were analyzed by Faster-RCNN. They utilized the AP metric to assess the performance of the Faster-RCNN applied to the images of various latent code dimensions.

Ref. [61] proposes a model for generating pedestrian silhouette maps, used for their recognition; however, the application of GANs is not addressed. It was rejected because it lacked the use of GANs in the development.

Ref. [62] was also rejected due to its failure to incorporate GAN usage in development, despite utilizing YOLOv3 for target detection in UAV images.

3.2. Object Detection

Among the studies oriented to object detection, Ref. [47] aimed to address object detection challenges in aerial images captured by UAVs in the visible light spectrum. To enhance object detection in these images, the study proposes a GAN-based super-resolution method. This GAN solution is specifically designed to up-sample low-resolution images in which objects are hard to detect, improving the overall detection accuracy in aerial imagery.

Ref. [74] utilized a GAN-based real-time data augmentation algorithm to enhance the training data for UAV vehicle detection tasks, specifically focusing on improving the accuracy of detecting vehicles, pedestrians, and bicycles in UAV images. By incorporating the GAN approach, along with enhancements such as using focal loss and redesigning the target detection head combination, the study achieved a 4% increase in detection accuracy over the original YOLOv5 model.

Ref. [67] introduces a novel two-branch generative adversarial network architecture designed for detecting and localizing anomalies in RGB aerial video streams captured by UAVs at low altitudes. The primary purpose of the GAN-based method is to enhance anomaly detection and localization in challenging operational scenarios, such as identifying small dangerous objects like improvised explosive devices (IEDs) or traps in various environments. The GAN architecture consists of two branches: a detector branch and a localizer branch. The detector branch focuses on determining whether a given video frame depicts a normal scene or contains anomalies, while the localizer branch is responsible for producing attention maps that highlight abnormal elements within the frames when anomalies are detected. In the context of search and rescue operations, the GAN-based method can be instrumental in identifying and localizing anomalies or potential threats in real-time aerial video streams. For example, in search and rescue missions, the system could help in detecting hazardous objects, locating missing persons, or identifying obstacles in disaster-affected areas. By leveraging the GAN’s capabilities for anomaly detection and localization, search and rescue teams can enhance their situational awareness and response effectiveness in critical scenarios.

Ref. [57] proposes a novel end-to-end multi-task GAN architecture to address the challenge of small-object detection in aerial images. The GAN framework combines super-resolution (SR) and object detection tasks to generate super-resolved versions of input images, enhancing the discriminative detection of small objects. The generator in the architecture consists of an SR network with additional components such as a gradient guidance network (GGN) and an edge-enhancement network (EEN) to mitigate structural distortions and improve image quality. In the discriminator part of the GAN, a faster region-based convolutional neural network (FRCNN) is integrated for object detection. Unlike traditional GANs that estimate the realness of super-resolved samples using a single scalar, realness distribution is used as a measure of realness. This distribution provides more insights for the generator by considering multiple criteria rather than a single perspective, leading to an improved detection accuracy.

Ref. [68] introduces a novel detection network, the Region Super-Resolution Generative Adversarial Network (RSRGAN), to enhance the detection of small infrared targets. The GAN component of the RSRGAN focuses on the super-resolution enhancement of infrared images, improving the clarity and resolution of small targets like birds and leaves. This enhancement aids in accurate target detection, particularly in challenging scenarios. In the context of search and rescue operations, the application of the RSRGAN could be beneficial for identifying small targets in infrared imagery with greater precision. By enhancing the resolution of images containing potential targets, such as individuals in distress or objects in need of rescue, the RSRGAN could assist search and rescue teams in quickly and accurately locating targets in various environmental conditions. The improved detection capabilities offered by the RSRGAN could enhance the efficiency and effectiveness of search and rescue missions, ultimately contributing to saving lives and optimizing rescue efforts.

Ref. [48] concentrates on enhancing small-object detection in aerial imagery captured by optical cameras mounted on unmanned aerial vehicles (UAVs). The proposed GAN solution, known as a classification-oriented super-resolution generative adversarial network (CSRGAN), aims to improve the classification results of tiny objects and enhance the detection performance by recovering discriminative features from the original small objects. In the context of search and rescue operations, the application of CSRGAN could be beneficial for identifying and locating small targets, such as individuals, in aerial images. By enhancing the resolution and classification-oriented features of these small objects, CSRGAN could assist in improving the efficiency and accuracy of search and rescue missions conducted using UAVs. This technology could aid in quickly identifying and pinpointing targets in large areas, ultimately enhancing the effectiveness of search and rescue operations.

Ref. [60] focuses on LighterGAN, an unsupervised illumination enhancement GAN model designed to improve the quality of images captured in low-illumination conditions using urban UAV aerial photography. The primary goal of LighterGAN is to enhance image visibility and quality in urban environments affected by low illumination and light pollution, making them more suitable for various applications in urban remote sensing and computer vision algorithms. In the context of search and rescue operations, the application of LighterGAN could be highly beneficial. When conducting search and rescue missions, especially in low-light or nighttime conditions, having clear and enhanced images from UAV aerial photography can significantly aid in locating individuals or objects in need of assistance. By using LighterGAN to enhance images captured by UAVs in low illumination scenarios, search and rescue teams can improve their visibility, identify potential targets more effectively, and enhance their overall situational awareness during critical operations.

Ref. [43] explores the use of GANs to enhance image quality through a super-resolution deblurring algorithm. The GAN-based approach aims to improve the clarity of images affected by motion blur, particularly in scenarios like UAV image acquisition. By incorporating defocus blur kernels and multi-directional motion blur kernels into the training samples, the algorithm effectively mitigates blur and enhances image data captured by UAVs.
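To illustrate how blurred training samples of the kind described above can be synthesized, the sketch below builds a linear motion-blur kernel at a chosen angle and convolves it with an image. This is a generic construction, not the procedure of Ref. [43]; the function names `motion_blur_kernel` and `blur`, the kernel size, and the test image are assumptions.

```python
import numpy as np

def motion_blur_kernel(size: int, angle_deg: float) -> np.ndarray:
    """Normalized linear motion-blur kernel of odd `size` along `angle_deg`."""
    if size % 2 == 0:
        raise ValueError("size must be odd so the kernel has a centre pixel")
    kernel = np.zeros((size, size), dtype=np.float64)
    centre = size // 2
    theta = np.deg2rad(angle_deg)
    # Rasterize a straight line through the kernel centre.
    for t in np.linspace(-centre, centre, 4 * size):
        row = int(round(centre - t * np.sin(theta)))
        col = int(round(centre + t * np.cos(theta)))
        kernel[row, col] = 1.0
    return kernel / kernel.sum()  # weights sum to 1, preserving brightness

def blur(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Convolve a 2-D greyscale image with a square kernel (edge padding)."""
    k = kernel.shape[0]
    pad = k // 2
    padded = np.pad(image.astype(np.float64), pad, mode="edge")
    flipped = kernel[::-1, ::-1]  # flip so this is a true convolution
    out = np.zeros(image.shape, dtype=np.float64)
    rows, cols = image.shape
    for dr in range(k):
        for dc in range(k):
            out += flipped[dr, dc] * padded[dr:dr + rows, dc:dc + cols]
    return out

# Horizontal blur of a single bright pixel spreads it along its row.
image = np.zeros((7, 7))
image[3, 3] = 1.0
blurred = blur(image, motion_blur_kernel(5, 0.0))
print(blurred[3])
```

Varying `angle_deg` across samples gives the multi-directional blur mentioned in the text; a defocus kernel would instead be a normalized disc.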

Ref. [49] introduces a novel approach utilizing a GAN to address the challenge of small-object detection in aerial images captured by drones or unmanned aerial vehicles (UAVs). By leveraging the capabilities of GAN technology, the research focused on enhancing the resolution of low-quality images depicting small objects, thereby facilitating more accurate object detection algorithms.

Ref. [45] utilized a generative adversarial network (GAN) solution to augment typical, easily confused negative samples in the pretraining stage of a saliency-enhanced multi-domain convolutional neural network (SEMD) for remote sensing target tracking in UAV aerial videos. The GAN’s purpose is to enhance the network’s ability to distinguish between targets and the background in challenging scenarios by generating additional training samples. In SAR operations, the study can assist in distinguishing between targets and the background.

Ref. [46] introduces a generative adversarial network named VeGAN, trained to generate synthetic images of vehicles from a top-down aerial perspective for semantic segmentation tasks. By leveraging the GAN for content-based augmentation of training data, the study aimed to enhance the accuracy of a semantic segmentation network in detecting cars in aerial images. We can take the study as a basis for training for the identification of other targets.

Ref. [56] aimed to enhance maize plant detection and counting using deep learning algorithms applied to high-resolution RGB images captured by UAVs. To address the challenge of low-quality images affecting detection accuracy, the study proposes a GAN-based super-resolution method. This method aims to improve results on native low-resolution datasets compared to traditional up-sampling techniques. This study was rejected because it focused on agricultural purposes rather than SAR.

Ref. [58] utilized a generative adversarial network (GAN), specifically the CycleGAN model, for domain adaptation in bale detection for precision agriculture. The primary objective was to enhance the performance of the YOLOv3 object detection model in accurately identifying bales of biomass in various environmental conditions. The GAN is employed to transfer styles between images with diverse illuminations, hues, and styles, enabling the YOLOv3 model to effectively detect bales under different scenarios. We also rejected it because of its non-SAR purpose.

Ref. [69] utilized a conditional generative adversarial network (cGAN) for the automated extraction and clustering of peach tree crowns based on UAV images in a peach orchard. The primary focus was on monitoring and quantitatively characterizing peach tree crowns using remote sensing imagery. It was also rejected because it focuses on agriculture.

Ref. [70] proposes a novel approach using the Pix2Pix GAN architecture for unmanned aerial vehicle (UAV) detection. The GAN was applied to detect UAVs in images captured by optical sensors, aiming to enhance the efficiency of UAV detection systems. By utilizing the GAN framework, the study focused on improving the accuracy and effectiveness of identifying UAVs in various scenarios, including adverse weather conditions. We rejected this study because it was aimed at air defense, identifying airborne UAVs using ground-based sensors.

Ref. [65] employed GANs to enhance transmission line images. The article does not mention YOLO, and the model was trained from scratch on the study's own dataset. From its description, we conclude that the GAN was used for super-resolution. Since we cannot classify it as a study focused on SAR, we rejected it at this stage.

3.3. Infrared Spectrum

Considering studies with images in the infrared spectrum, Ref. [55] employed GANs to facilitate the translation of color images to thermal images, specifically aiming to enhance the performance of color-thermal ReID (re-identification). This translation process involves converting probe images captured in the visible range to the infrared range. By utilizing the GAN framework for color-to-thermal image translation, the study aimed to improve the effectiveness of object recognition and re-identification tasks in cross-modality scenarios, such as detecting objects in thermal images and matching them with corresponding objects in color images. YOLO and another object detector were mentioned, and the study utilized various metrics for evaluating the ThermalReID framework and modern baselines. For the object detection task, they used the Intersection over Union (IoU) and mean average precision (mAP) metrics. In the ReID task, they employed Cumulative Matching Characteristic (CMC) curves and normalized Area Under the Curve (nAUC) for evaluation purposes.
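To make the CMC metric mentioned above concrete, the following is a minimal sketch of how a CMC curve can be computed. It assumes a single-shot setting in which each probe has exactly one correct identity in the gallery and the gallery has already been sorted by decreasing similarity for each probe; the function name `cmc_curve` and its arguments are hypothetical and are not taken from Ref. [55].

```python
def cmc_curve(rankings, probe_ids, max_rank):
    """Return CMC values for ranks 1..max_rank.

    rankings[i] is the list of gallery identities for probe i, sorted from
    most to least similar (assumption: produced by some ReID model).
    probe_ids[i] is the true identity of probe i.
    """
    if not probe_ids or len(rankings) != len(probe_ids):
        raise ValueError("rankings and probe_ids must be non-empty and aligned")
    hits = [0] * max_rank
    for ranking, probe_id in zip(rankings, probe_ids):
        for rank, gallery_id in enumerate(ranking[:max_rank]):
            if gallery_id == probe_id:
                # A match at this rank counts for this rank and all later ones.
                for k in range(rank, max_rank):
                    hits[k] += 1
                break
    return [h / len(probe_ids) for h in hits]
```

The first value of the returned list is the rank-1 accuracy, which is the figure most often quoted from a CMC curve.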

In [63], the primary objective of utilizing GANs was to address the challenge posed by the differing characteristics of thermal and RGB images, such as varying dimensions and pixel representations. By employing GANs, the study aimed to generate thermal images that are compatible with RGB images, ensuring a harmonious fusion of data from both modalities.

The StawGAN in [76] was used to enhance the translation of night-time thermal infrared images into daytime color images. The StawGAN model was specifically designed to improve the quality of target generation in the daytime color domain based on the input thermal infrared images. By leveraging the GAN architecture, which comprises a generator and a discriminator network, the StawGAN model aims to produce more realistic and well-shaped objects in the target domain, thereby enhancing the overall image translation process.

Ref. [77] employed a GAN as an image fusion technique to enhance the quality of input images for UAV target detection. The GAN fuses images captured from different modalities, namely the infrared and visible light spectra, which enriches the visual information available for identifying and locating UAV targets. The fused images are generated by adapting and refining the structures of both the generator and the discriminator. Through this approach, the study aimed to improve the precision and robustness of target detection with the YOLOv5 model, particularly in complex visual environments.

Ref. [72] focuses on utilizing a conditional generative adversarial network (CGAN), specifically the Pix2Pix model, to generate depth images from monocular infrared images captured by a camera. This application of CGAN aims to enhance collision avoidance during drone flights at night by providing crucial depth information for safe navigation. The research emphasizes the use of CGAN for converting infrared images into depth images, enabling the drone to determine distances to surrounding objects and make informed decisions to avoid collisions during autonomous flight operations in low-light conditions. This study can be leveraged in drone group operations, but in terms of ground object identification, it is not applicable. Therefore, we rejected the study.

Ref. [52] proposes a novel approach for insulator object detection in aerial images captured by drones utilizing a Wasserstein generative adversarial network (WGAN) for image deblurring. The primary purpose of the GAN solution was to enhance the clarity of insulator images that may be affected by factors such as weather conditions, data processing, camera quality, and environmental surroundings, leading to blurry images. By training the GAN on visible light spectrum images, the study aimed to improve the detection rate of insulators in aerial images, particularly in scenarios where traditional object detection algorithms may struggle due to image blurriness. It was rejected because it was not oriented toward search and rescue.

3.4. YOLO Versions

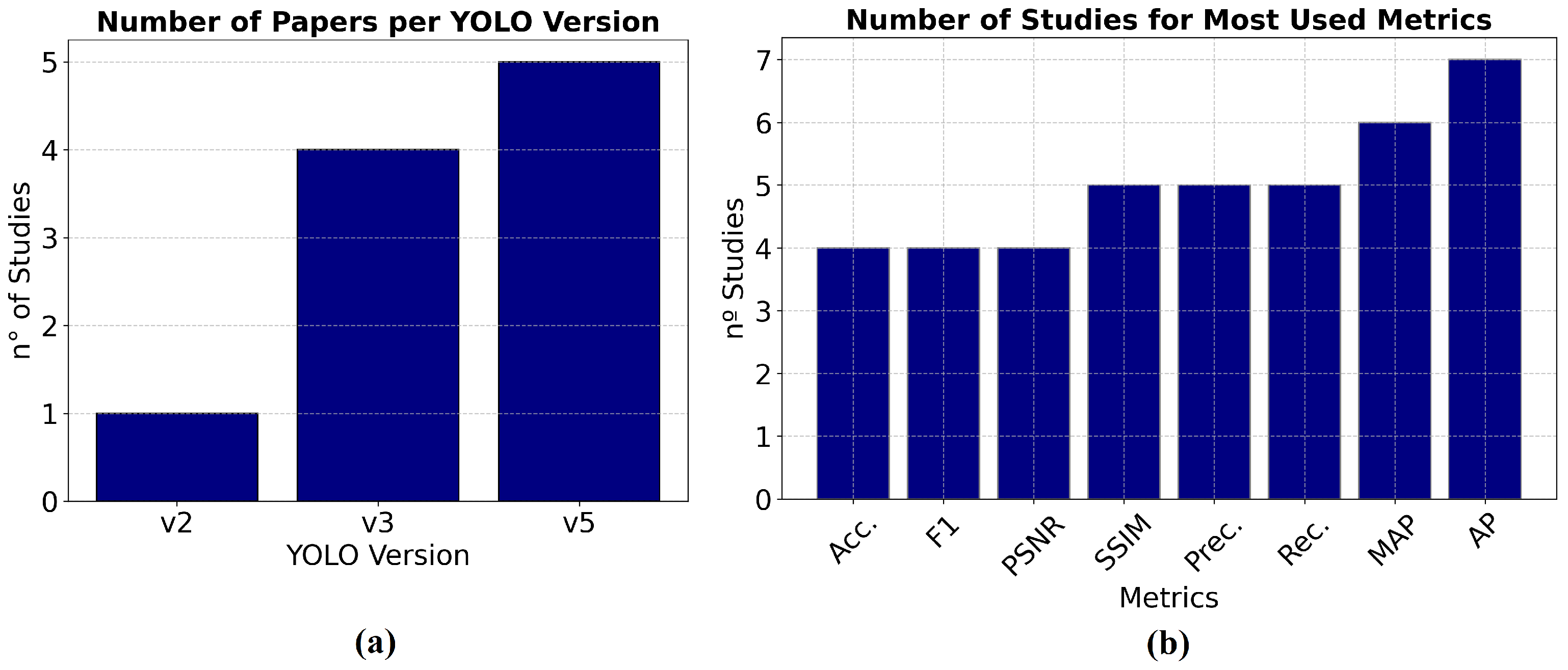

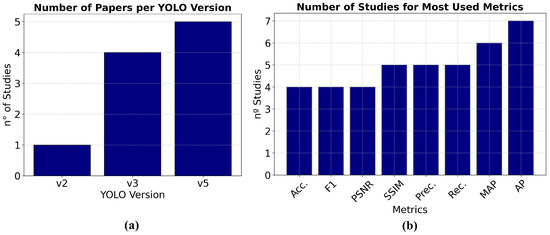

While some studies utilized Faster-RCNN [44,49,51] or custom object detection solutions [68], the majority of the selected ones employed some version of YOLO, with the most common being versions 3 and 5, as depicted in Figure 5a.

Figure 5.

(a) Number of studies regarding the YOLO version used. (b) Studies by the most used metrics.

Ref. [66] aimed to enhance wildfire detection using GANs to produce synthetic wildfire images. These synthetic images were utilized to address data scarcity issues and enhance the model’s detection capabilities. Additionally, Weakly Supervised Object Localization (WSOL) was applied for object localization and annotation, automating the labeling task and mitigating data shortage issues. The annotated data generated through WSOL were then used to train an improved YOLOv5-based detection network, enhancing the accuracy of the wildfire detection model. This study could also aid in search and rescue operations, as the presence of fire in an area may indicate potential areas of interest during search efforts.

Ref. [75] is centered on image deblurring in the context of aerial remote sensing to enhance the object detection performance. It introduces the adaptive multi-scale fusion blind deblurred generative adversarial network (AMD-GAN) to address image blurring challenges in aerial imagery. The AMD-GAN leverages multi-scale fusion guided by image blurring levels to improve the deblurring accuracy and preserve texture details. In the study, the AMD-GAN was applied to deblur aerial remote sensing images, particularly in the visible light spectrum, to enhance object detection tasks. The YOLOv5 model was utilized for object detection experiments on both blurred and deblurred images. The results demonstrated that deblurring with the AMD-GAN significantly improves object detection indices, as evidenced by increased mean average precision (MAP) values and an enhanced detection performance compared to using blurred images directly with YOLOv5.

Ref. [78] focuses on enhancing small-object detection in drone imagery through the use of a Collaborative Filtering Mechanism (CFM) based on a cycle generative adversarial network (CycleGAN). The purpose of the GAN in the study was to improve the object-detection performance by enhancing small-object features in drone imagery. The CFM, integrated into the YOLO-V5s model, filters out irrelevant features during the feature extraction process to enhance object detection. By applying the CFM module to YOLO-V5s and evaluating its performance on the VisDrone dataset, the study demonstrated significant improvements in the detection performance, highlighting the effectiveness of the GAN-based approach in enhancing object detection capabilities in drone imagery.

Ref. [64] aimed to develop a portable and high-accuracy system for detecting and tracking pavement cracks to ensure road integrity. To address the limited availability of pavement crack images for training, a GAN called PCGAN is introduced. PCGAN generates realistic crack images to augment the dataset for an improved detection accuracy using an improved YOLO v3 algorithm. The YOLO-MF model, a modified version of YOLO v3 with acceleration and median flow algorithms, was employed for crack detection and tracking. This integrated system enhances the efficiency and accuracy of pavement crack detection and monitoring for infrastructure maintenance. We rejected this study because it lacks relation to SAR operations.

Ref. [50] focuses on addressing the challenges of motion deblurring and marker detection for autonomous drone landing using a deep learning-based approach. To achieve this, the study proposes a two-phase framework that combines a slimmed version of the DeblurGAN model for motion deblurring with the YOLOv2 detector for object detection. The purpose of the DeblurGAN model is to enhance the quality of images affected by motion blur, making it easier for the YOLOv2 detector to accurately detect markers in drone landing scenarios. By training a variant of the YOLO detector on synthesized datasets, the study aimed to improve the marker detection performance in the context of autonomous drone landing. Overall, the study leveraged the DeblurGAN model for motion deblurring and the YOLOv2 detector for object detection to enhance the accuracy and robustness of marker detection in autonomous drone landing applications. We rejected it as its focus is on landing assistance rather than search and rescue.

Ref. [58] utilized a GAN solution, specifically the CycleGAN model, for domain adaptation in the context of bale detection in precision agriculture. The primary objective was to enhance the performance of the YOLOv3 object detection model for accurately detecting bales of biomass in various environmental conditions. The GAN was employed to transfer styles between images with diverse illuminations, hues, and styles, enabling the YOLOv3 model to be more robust and effective in detecting bales under different scenarios. By training the YOLOv3 model with images processed through the CycleGAN for domain adaptation, the study aimed to improve the accuracy and efficiency of bale detection, ultimately contributing to advancements in agricultural automation and efficiency. The study was rejected because its focus is more aligned with the application of UAVs in agriculture.

Ref. [59] introduces InsulatorGAN, a novel model based on conditional generative adversarial networks (cGANs), designed for insulator detection in high-voltage transmission line inspection using unmanned aerial vehicles (UAVs). The primary purpose of InsulatorGAN is to generate high-resolution and realistic insulator detection images from aerial images captured by drones, addressing limitations in existing object detection models due to dataset scale and parameters. In the study, the authors leveraged the YOLOv3 neural network model for real-time insulator detection under varying image resolutions and lighting conditions, focusing on identifying ice, water, and snow on insulators. While the study does not explicitly mention the use of pre-trained models or training from scratch for InsulatorGAN, the emphasis is on enhancing the quality and resolution of generated insulator images through the proposed GAN framework. By combining GAN technology with YOLOv3, the study aimed to advance the precision and efficiency of detecting insulators in transmission lines using UAV inspection. We rejected it due to its lack of emphasis on search and rescue operations.

Figure 5a compiles the YOLO versions and the number of studies that utilize each one of them.

3.5. Pre-Trained Models

Considering the use of pre-trained models and weights, Ref. [51] aimed to predict individual motion and view changes in objects in UAV videos for multiple-object tracking. To achieve this, the study proposes a novel network architecture that includes a social LSTM network for individual motion prediction and a Siamese network for global motion analysis. Additionally, a GAN is introduced to generate more accurate motion predictions by incorporating global motion information and objects’ positions from the last frame. The GAN was specifically utilized to enhance the final motion prediction by leveraging the individual motion predictions and view changes extracted by the Siamese network. It plays a crucial role in generating refined motion predictions based on the combined information from the individual and global motion analysis components of the network. Furthermore, the Siamese network is initialized with parameters pre-trained on ImageNet and fine-tuned for the task at hand. This pre-training step helps the Siamese network learn relevant features from a large dataset like ImageNet, which can then be fine-tuned to extract changing information in the scene related to the movement of UAVs in the context of the study.

Ref. [73] focuses on using generative adversarial networks (GANs) to enhance object detection performance under adverse weather conditions by restoring images affected by weather corruption. Specifically, the Weather-RainGAN and Weather-NightGAN models were developed to address challenges related to weather-corrupted images, such as rain streaks and night scenes, to improve the object detection accuracy for various classes like cars, buses, trucks, motorcycles, persons, and bicycles in driving scenes captured in adverse weather conditions. The study can provide valuable insights into SAR scenarios in snow-covered regions or other severe weather conditions.

Ref. [71] introduces a GAN focused on human face recognition and incorporates a pre-trained model to improve the performance of the proposed solution. We deemed this study unnecessary for SAR operations, as the UAV is anticipated to operate at high altitudes where facial images of potential individuals would not be readily identifiable. Therefore, we rejected this study.

Ref. [42] introduces a dual-hop generative adversarial network (DH-GAN) to recognize roads and intersections from aerial images automatically. The DH-GAN was designed to segment roads and intersections at the pixel level from RGB imagery. The first level of the DH-GAN focuses on detecting roads, while the second level is dedicated to identifying intersections. This two-level approach allows the end-to-end training of the network, with two discriminators ensuring accurate segmentation results. Additionally, the study utilized a pre-trained model within the DH-GAN architecture to enhance the intersection detection process. By incorporating the pre-trained model, the DH-GAN can effectively extract intersection locations from the road segmentation output. This integration of the pre-trained model enhances the overall performance of the DH-GAN in accurately identifying intersections within the aerial images. We declined it because it was not closely aligned with the target topic, as it focuses on road detection rather than the detection of objects, animals, or people.

Ref. [53] aimed to enhance the tracking performance in UAV videos by transferring contextual relations across views. To achieve this, a dual GAN learning mechanism is proposed. The tracking-guided CycleGAN (T-GAN) transfers contextual relations between ground-view and drone-view images, bridging appearance gaps. This process helps adapt to drone views by transferring contextual stable ties. Additionally, an attention GAN (A-GAN) refines these relations from local to global scales using attention maps. The pre-trained model, a ResNet50 model, is fine-tuned to output context operations for the actor–critic agent, which dynamically decides on contextual relations for vehicle tracking under changing appearances across views. Typically, SAR operations are conducted in remote or hard-to-reach areas, making ground-based image capture impractical. Therefore, we rejected this study.

This systematic literature review yielded highly detailed and diverse results, considering that drone images can serve various purposes such as agriculture, automatic landing, face recognition, the identification of objects on power lines, and so on. Since our focus was primarily on SAR operations, we rejected papers that were unrelated to this topic. Table 5 and Table 6 display the selected results along with the metrics used in their work. Here, AP stands for average precision; AR, average recall; AUC, area under the receiver operating characteristic curve; ROC, receiver operating characteristic curve; MAP, mean average precision; NIQE, natural image quality evaluator; AG, average gradient; PIQE, perception-based image quality evaluator; PSNR, Peak Signal-to-Noise Ratio; SSIM, Structural Similarity Index; FID, Fréchet Inception Distance; DSC, Dice Similarity Coefficient; S-Score, Segmentation Score; MAE, Mean Absolute Error; IS, Inception Score; SMD, Standard Mean Difference; EAV, Edge-Adaptive Variance; PI, Perceptual Index; IOU, Intersection over Union; and and refer to the center position errors in the longitudinal and lateral driving direction. Figure 5b shows the most used metrics, along with the number of studies that use them.

Table 5.

Classic metrics from selected studies. The checkmarks indicate which study uses each metric listed in the table column.

Table 6.

Other metrics from studies.

Ref. [77] conducted a comprehensive comparison of the precision, recall, and mean average precision (MAP) metrics between their proposed improved versions of YOLOv5 and the following models: original YOLOv5, YOLOv5 + CBAM, and YOLOv5 + Image Fusion. The results demonstrated the superior performance of their proposed models in terms of the object detection accuracy. This comparative analysis not only highlights the advancements achieved with the enhanced YOLOv5 variants but also underscores the importance of employing classical evaluation metrics in assessing the efficacy of GANs and YOLOv5 in practical applications.

Ref. [78] also used YOLOv5 and compared different object detection techniques using the mAP at an IoU threshold of 0.5 (mAP@0.5), the mAP averaged over IoU thresholds from 0.5 to 0.95 (mAP@0.5:0.95), precision, and recall.

Ref. [51] presented a comprehensive array of metrics, including Identification Precision (IDP), Identification Recall (IDR), IDF1 score (F1 score), Multiple-Object Tracking Accuracy (MOTA), Multiple-Object Tracking Precision (MOTP), Mostly Tracked targets (MT), Mostly Lost targets (ML), number of False Positives (FPs), number of False Negatives (FNs), number of ID Switches (IDSs), and the number of times a trajectory is Fragmented (FMs). The authors utilized diverse datasets and compared object monitoring performances across various techniques, namely, Faster-RCNN, R-FCN, SSD, and RDN. This extensive metric evaluation renders this reference an excellent resource for validating metrics applied in target identification in SAR applications.
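Several of the tracking metrics listed above are simple functions of error counts. The sketch below shows the standard definitions of MOTA and of the identity-based scores (IDP, IDR, IDF1); the function names and argument names are hypothetical, and the counts are assumed to have already been accumulated over all frames by some matching procedure that is not shown here.

```python
def mota(false_negatives, false_positives, id_switches, num_gt_objects):
    """Multiple-Object Tracking Accuracy: 1 - (FN + FP + IDS) / GT."""
    if num_gt_objects <= 0:
        raise ValueError("num_gt_objects must be positive")
    errors = false_negatives + false_positives + id_switches
    return 1.0 - errors / num_gt_objects


def identity_scores(idtp, idfp, idfn):
    """Return (IDP, IDR, IDF1) from identity-level TP, FP, and FN counts."""
    idp = idtp / (idtp + idfp) if (idtp + idfp) else 0.0
    idr = idtp / (idtp + idfn) if (idtp + idfn) else 0.0
    denom = 2 * idtp + idfp + idfn
    idf1 = 2 * idtp / denom if denom else 0.0
    return idp, idr, idf1
```

Note that MOTA can become negative when the number of errors exceeds the number of ground-truth objects, so it is not bounded below by zero.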

Ref. [75] conducted a comparison of the mean average precision (MAP), precision, and recall in object identification using the methods GT + YOLOV5, Blur + YOLOV5, and AMD-GAN + YOLOV5. It serves as an excellent resource for detection comparisons with YOLOV5. Regarding the GAN utilized, the study employed metrics such as the Peak Signal-to-Noise Ratio (PSNR) and Structural Similarity Index (SSIM), comparing these metrics with various methods, including AMD-GAN without NAS MFPN, AMD-GAN with NAS MFPN, AMD-GAN without AMS Fusion, and AMD-GAN with AMS Fusion.
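As an illustration of the image quality side of this comparison, the following is a minimal sketch of the PSNR computation, assuming 8-bit images flattened into equally sized sequences of pixel values. The function name `psnr` and the `max_value` parameter are illustrative choices and are not taken from Ref. [75]; SSIM is omitted because it requires local window statistics.

```python
import math


def psnr(reference, restored, max_value=255.0):
    """Peak Signal-to-Noise Ratio in dB between two flattened images."""
    if len(reference) != len(restored) or len(reference) == 0:
        raise ValueError("images must be non-empty and have the same size")
    # Mean squared error over all pixels.
    mse = sum((r - s) ** 2 for r, s in zip(reference, restored)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)
```

A higher PSNR means the restored image is closer to the reference, which is why a reference (ground truth) image is needed to use this metric.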

Ref. [60] compared the performances of several algorithms with respect to the PIQE metric: LighterGAN, EnlightenGAN, CycleGAN, Retinex, LIME (Low-Light Image Enhancement via Illumination Map Estimation), and DUAL (Dual Illumination Estimation for Robust Exposure Correction). Among them, LIME showed the highest PIQE score.

Ref. [73] employed the average precision (AP) and mean average precision (mAP) metrics to assess the performances of various restoration methods, including Gaussian Gray Denoising Restormer, Gaussian Colour Denoising Restormer, Weather-RainGAN, and Weather-NightGAN. It evaluated these metrics across different object classes such as car, bus, person, and motorcycle. This comparison serves as an excellent resource for applying these metrics in the context of object detection in works involving the application of GANs.
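For readers less familiar with these metrics, the sketch below shows one common way to compute AP for a single class and mAP across classes. It assumes that each detection has already been marked as a true or false positive at a fixed IoU threshold, and it uses all-point interpolation of the precision-recall curve; other benchmarks use different interpolation schemes. The function names are hypothetical and do not come from Ref. [73].

```python
def average_precision(scores, is_true_positive, num_gt):
    """Area under the interpolated precision-recall curve for one class."""
    if num_gt <= 0:
        raise ValueError("num_gt must be positive")
    # Rank detections by decreasing confidence.
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for i in order:
        if is_true_positive[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Make precision non-increasing as recall grows (interpolation step).
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    ap, prev_recall = 0.0, 0.0
    for recall, precision in zip(recalls, precisions):
        ap += (recall - prev_recall) * precision
        prev_recall = recall
    return ap


def mean_average_precision(ap_by_class):
    """Mean of per-class AP values, given a dict such as {'car': 0.7}."""
    return sum(ap_by_class.values()) / len(ap_by_class)
```

Reporting AP per class, as in the comparison above, shows which object classes benefit most from restoration, while mAP summarizes the result in a single number.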

Ref. [76] utilized the PSNR and SSIM, in addition to the FID and IS, to compare image modality translation among the methods pix2pixHD, StarGAN v2, PearlGAN, TarGAN, and StawGAN. Furthermore, they compared the segmentation performances using metrics such as the Dice Similarity Coefficient (DSC), S-Score, and Mean Absolute Error (MAE), specifically for TarGAN and StawGAN. This comprehensive evaluation provides insights into the effectiveness of these methods for image translation and segmentation tasks.
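Two of the segmentation metrics named above have short closed-form definitions. The following is a minimal sketch of the DSC and MAE, assuming masks and maps are flattened into equally sized sequences; the function names are hypothetical, and returning 1.0 when both masks are empty is a convention chosen here, not something stated in Ref. [76].

```python
def dice_coefficient(pred_mask, true_mask):
    """Dice Similarity Coefficient 2|A ∩ B| / (|A| + |B|) for binary masks."""
    if len(pred_mask) != len(true_mask):
        raise ValueError("masks must have the same size")
    pred = [bool(p) for p in pred_mask]
    true = [bool(t) for t in true_mask]
    intersection = sum(1 for p, t in zip(pred, true) if p and t)
    total = sum(pred) + sum(true)
    # Assumption: two empty masks are treated as a perfect match.
    return 2.0 * intersection / total if total else 1.0


def mean_absolute_error(prediction, target):
    """Mean absolute difference between two equally sized value sequences."""
    if len(prediction) != len(target) or len(prediction) == 0:
        raise ValueError("inputs must be non-empty and have the same size")
    return sum(abs(p - t) for p, t in zip(prediction, target)) / len(prediction)
```

The DSC rewards overlap between the predicted and reference regions, whereas the MAE penalizes per-pixel differences, so the two are complementary.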

Ref. [49] compared the PSNR, SSIM, and average PI with SRGAN, ESRGAN, and their proposed model. They also used the MAP metric to evaluate different methods for object detection such as SSD and Faster R-CNN.

Ref. [44] used the average precision to compare augmentation methods to Faster R-CNN without augmentation, in the context of the detection of stingrays.

Ref. [46] employed the IOU metric to quantify the degree of overlap between predicted car regions and ground truth car regions in the images. Through the analysis of and values, the study shows how accurately the segmentation network was able to localize and position the detected cars within the images.
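The IOU metric can be illustrated with a minimal sketch for axis-aligned bounding boxes. This is a simplification: Ref. [46] evaluates segmented regions, for which the same ratio is computed over pixel sets rather than rectangles. The function name `box_iou` and the `(x_min, y_min, x_max, y_max)` box format are assumptions made for this example.

```python
def box_iou(box_a, box_b):
    """Intersection over Union of two boxes (x_min, y_min, x_max, y_max)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap, clamped to zero when boxes are disjoint.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h
    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0
```

A prediction is usually counted as correct when its IOU with a ground-truth region exceeds a chosen threshold, which is how this overlap measure feeds into precision, recall, and AP.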

Table 7 and Table 8 present the selected studies divided into clusters, where each cluster represents a trend of application in the grouped studies. The columns indicate the method applied to UAV-generated images, the main advantage of its application, the main disadvantage, and an example of how the study can be applied in search and rescue scenarios. Cluster A includes studies focusing on GANs for data augmentation, super-resolution, and motion prediction. Cluster B comprises studies utilizing GANs for deblurring, augmentation, and super-resolution. Cluster C consists of studies employing GANs for small-object detection, fusion, and anomaly detection. Cluster D encompasses studies utilizing GANs for image translation and fusion. Cluster E includes studies focusing on GANs for weather correction, adverse condition handling, and deblurring.

Table 7.

Methods used in the selected studies, main advantages and disadvantages, and applicability to search and rescue (SAR)—part I. Cluster A: data augmentation and enhancement; Cluster B: super-resolution and deblurring.

Table 8.

Methods used in the selected studies, main advantages and disadvantages, and applicability to search and rescue (SAR) part II. Cluster C: anomaly and small-object detection; Cluster D: image translation and fusion; Cluster E: adverse condition handling.

4. Benchmark

To finish our analysis, we conducted the third part of this research as a benchmark of a state-of-the-art GAN model, evaluating the super-resolution capability of a pre-trained model applied to sample images from our data. Considering the second research sub-question (what benefits are gained from using a pre-trained model rather than training one from scratch?), we selected the Real-ESRGAN algorithm [26], as it provides a simple approach with pre-trained models. This allowed us to evaluate its performance on our images, which differ from the data used to pre-train the model, as some of them are in the infrared spectrum.

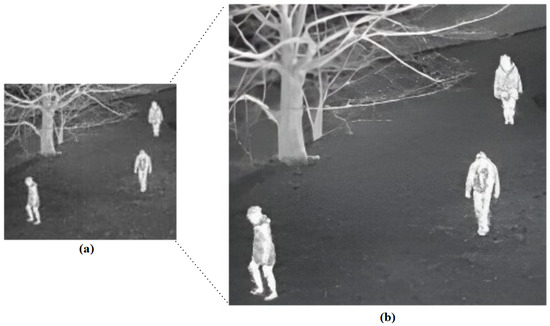

We chose an image that was extracted from a video recorded by a sensor in the infrared spectrum, where it is possible to observe the heat signature of three people walking on a lawn near a university. The original image, as shown in Figure 6a, contained 640 × 512 pixels, and after processing through the algorithm, as depicted in Figure 6b, its size was increased to 2560 × 2040. We cropped important parts of the image for comparison and observed a significant improvement in the contour features of the people, grass, and trees. This suggests that a pre-trained model can produce satisfactory super-resolution results even when it was trained on data different from our dataset.
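The crop comparison described above requires cutting the same scene region from both the original and the super-resolved image. A minimal sketch of that coordinate mapping follows, assuming axis-aligned crop rectangles in pixel coordinates and image sizes given as (width, height); the function name `map_crop_box` is hypothetical and the helper is not part of Real-ESRGAN.

```python
def map_crop_box(box, original_size, upscaled_size):
    """Map a crop box (x0, y0, x1, y1) from the original to the upscaled image.

    Scale factors are computed separately per axis, so the same scene region
    is selected even if the two axes were not scaled by exactly the same ratio.
    """
    orig_w, orig_h = original_size
    up_w, up_h = upscaled_size
    if orig_w <= 0 or orig_h <= 0:
        raise ValueError("original size must be positive")
    scale_x = up_w / orig_w
    scale_y = up_h / orig_h
    x0, y0, x1, y1 = box
    return (round(x0 * scale_x), round(y0 * scale_y),
            round(x1 * scale_x), round(y1 * scale_y))
```

The resulting box can then be passed to any image library's crop routine so that the two crops can be viewed side by side.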

Figure 6.

Real-ESRGAN on infrared camera. (a) Original image. (b) After super-resolution.

5. Discussion

The search string was devised to retrieve results related to edge and object detection in general, considering these tasks as the initial steps of image preprocessing. The identification of whether the object is an animal, a person, a car, or something else would fall under subsequent algorithmic steps, which may be executed using detection algorithms such as YOLO, Faster-RCNN, and others, as presented by some studies in the analysis.

Out of the 38 articles analyzed during the quality assessment phase, 19 were conference papers. Additionally, the majority of the articles found were less than 5 years old, indicating that research on the use of GANs for edge or object detection in UAV-generated images is recent. The results show that GANs are applied in the image enhancement stage, before edge or target detection is performed by other algorithms such as YOLO or similar ones. Furthermore, this study highlights various types of detection targets in the remote sensing literature, in addition to people and animals, such as smoke, insulators, and fire, among other targets related to agriculture. From the score analysis, we observe that the results are quite dispersed, with the majority of articles falling within the score interval of 2 to 4, indicating considerable variability and different perspectives on the outcomes.

In light of the article analyses, Ref. [68] served as a valuable reference in this review. It utilized RSRGAN for image super-resolution and also introduced its own detection approach. This dual functionality makes it a good benchmark for future research as one can employ super-resolution methods, like those discussed in this review, to enhance the quality of UAV images, and subsequently apply other algorithms such as YOLO versions, Faster-RCNN, and others for target detection.

Regarding the detection algorithms, YOLO predominated, particularly versions 3 and 5, with Faster-RCNN also being used for the same purpose.

In our analysis of the studies, we encountered a diverse array of metrics being employed, prompting us to adopt a qualitative approach in Table 5 and Table 6, where we merely acknowledge their usage with a checkmark, without delving into detailed quantitative analysis. We observed the application of conventional metrics across multiple datasets, indicating their widespread use for evaluating study outcomes. Additionally, metrics such as the PSNR and SSIM have been utilized to assess noise and image degradation in datasets, serving as quality indicators for super-resolution outcomes. The diverse applications of these metrics offer valuable insights and suggest potential avenues for their utilization in gauging the effectiveness of generation algorithms in future research endeavors.

The categorization of studies into clusters in this systematic literature review provides valuable guidance for selecting suitable references and methodologies to advance search and rescue (SAR) research and foster future investigations. Within the realm of data augmentation and enhancement presented in Cluster A, researchers can utilize this group of studies to augment target classes during the detection stages. In Cluster B, GANs can be employed to generate new data from degraded sources, essentially acting as an artificial filter by leveraging new data from either trained or pre-trained latent spaces. Concerning small-object detection, the primary targets in SAR operations are people and animals, which occupy a very small portion of the images generated by UAVs. The studies related to small-object detection, presented in Cluster C, are therefore the most important to consider for an approach focused on the application of GANs in SAR, as they provide significant insights into addressing small targets in drone-generated images. Studies within Cluster D proposed methods for transforming images across different spectra, such as converting images from infrared to visible light; in other words, one can create daytime images from night-time ones. Given the uncontrollable nature of weather and adverse conditions, studies within Cluster E offer valuable insights into the application of GANs to mitigate challenges associated with severe weather conditions.

Thus, we can address the primary research question as follows: Overall, studies have developed or modified GANs for super-resolution, with the majority employing models trained from scratch using specific datasets. Following this training, the algorithm is applied to the target images, with object identification conducted before and after, aiming to evaluate comparisons between the GAN algorithms used and their similarities, as well as the subsequent detection stage, where some version of YOLO is predominantly applied. Consequently, this entire process can be adapted for image enhancement with targets relevant to SAR, aiming to improve UAV sensors for the rapid and real-time identification of these targets.

In addressing the first sub-question, from the studies examined, it is evident that the application of GANs in SAR operations may focus on enhancing sensors for subsequent target detection. Regarding the second sub-question, a few studies have shown great results regarding pre-trained weights and models. Our framework, developed with Real-ESRGAN using default parameters and a pre-trained model, demonstrates that this approach yields satisfactory results in both the visible light and infrared spectra, irrespective of the dataset used for pre-training. However, further investigation with new and related pre-trained models is needed to evaluate its impact in practice more accurately. Regarding the third sub-question, our observations revealed the utilization of the PSNR and SSIM metrics to validate the results of GAN models. In addition to these traditional metrics, classic CNN metrics were also employed, alongside various other metrics, to validate the detection algorithms and defect analyses, with average precision being the metric used by the highest number of studies. This gives us some reference for comparing results and suggests a need for further studies aimed at reviewing and developing a framework capable of offering deeper insights into the most effective metrics to guide conclusions regarding the model under analysis.

Regarding the Parsif.al tool, during the quality assessment phase, it was observed that a duplicated article escaped the automatic removal filter of the tool. Additionally, the data extraction step could automatically retrieve data from the article detail tab, allowing for export in CSV format.

6. Conclusions

This systematic literature review reveals that the application of GANs in SAR contexts is currently under development, with a focus on super-resolution for subsequent object detection applications. Other identified areas of application include the enhancement of training datasets for networks and drone navigation purposes. The results indicate that studies concerning search and rescue operations might be primarily oriented toward image enhancement, dataset expansion, and object identification models for subsequent target identification using classical algorithms such as YOLO, Faster-RCNN, and others, with versions 3 and 5 of YOLO being the most prevalent in the evaluated studies. Potential areas for further investigation include real-time applications, target distance in photography, types of search targets, search region quality, and their impacts on photography. Although few of the resulting studies utilized pre-trained models, our benchmark demonstrated that the utilization of a pre-trained model, regardless of the dataset used, has shown interesting results regarding super-resolution in both the visible light and infrared spectra. Furthermore, Real-ESRGAN is a strong candidate to be applied to the first stage of image processing for SAR. The validation limitations of this work include the selection of only two research databases (SCOPUS and IEEE), which may overlook other relevant articles not indexed by these databases. Additionally, our benchmark employs pre-trained models on images with targets close to the ground, lacking a comparison with a potential dataset tailored to search and rescue situations where the UAV is scanning at high altitudes. Lastly, the search string focuses on edge and object detection, which could be reconsidered to include animals and people as targets, along with incorporating the term “Search and Rescue” into the search.
As future work, we propose studying the behaviors of GAN algorithms in real time, utilizing embedded hardware in UAVs, for images captured at medium or high altitudes, to explore possibilities for target detection during real-time rescue operations.

Author Contributions

Conceptualization, V.C. and A.R.; methodology, V.C.; validation, P.F., N.S. and R.S.; investigation, V.C. and A.R.; resources, P.F., N.S. and R.S.; data curation, V.C.; writing—original draft preparation, V.C.; writing—review and editing, A.R. and V.C.; visualization, A.R.; supervision, P.F., N.S. and R.S. All authors have read and agreed to the published version of the manuscript.

Funding

The authors thank the Fundação de Amparo à Pesquisa do Estado de Minas Gerais (FAPEMIG), project APQ00234-18, for the financial support that made this research possible.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Dataset available on request from the authors.

Conflicts of Interest

The authors declare no conflict of interest.

Correction Statement

This article has been republished with a minor correction to the Funding statement. This change does not affect the scientific content of the article.

References

- McIntosh, S.; Brillhart, A.; Dow, J.; Grissom, C. Search and Rescue Activity on Denali, 1990 to 2008. Wilderness Environ. Med. 2010, 21, 103–108.

- Curran-Sills, G.; Karahalios, A.; Dow, J.; Grissom, C. Epidemiological Trends in Search and Rescue Incidents Documented by the Alpine Club of Canada From 1970 to 2005. Wilderness Environ. Med. 2015, 26, 536–543.

- Heggie, T.; Heggie, T.; Dow, J.; Grissom, C. Search and Rescue Trends and the Emergency Medical Service Workload in Utah’s National Parks. Wilderness Environ. Med. 2015, 19, 164–171.

- Ciesa, M.; Grigolato, S.; Cavalli, R. Retrospective Study on Search and Rescue Operations in Two Prealps Areas of Italy. Wilderness Environ. Med. 2015, 26, 150–158.

- Freitas, C.; Barcellos, C.; Asmus, C.; Silva, M.; Xavier, D. From Samarco in Mariana to Vale in Brumadinho: Mining dam disasters and Public Health. Cad. Saúde Pública 2019, 35, e00052519.

- Sássi, C.; Carvalho, G.; De Castro, L.; Junior, C.; Nunes, V.; Do Nascimento, A. Gonçalves, One decade of environmental disasters in Brazil: The action of veterinary rescue teams. Front. Public Health 2021, 9, 624975.

- Stoddard, M.; Pelot, R. Historical maritime search and rescue incident data analysis. In Governance of Arctic Shipping: Rethinking Risk, Human Impacts and Regulation; Chircop, A., Goerlandt, F., Aporta, C., Pelot, R., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 43–62.

- Wajeeha, N.; Torres, R.; Gundersen, O.; Karlsen, A. The Use of Decision Support in Search and Rescue: A Systematic Literature Review. ISPRS Int. J. Geo-Inf. 2023, 12, 182.

- Levine, A.; Feinn, R.; Foggle, J.; Karlsen, A. Search and Rescue in California: The Need for a Centralized Reporting System. Wilderness Environ. Med. 2023, 34, 164–171.

- Prata, I.; Almeida, A.; de Souza, F.; Rosa, P.; dos Santos, A. Developing a UAV platform for victim localization on search and rescue operations. In Proceedings of the 2022 IEEE 31st International Symposium on Industrial Electronics (ISIE), Anchorage, AK, USA, 1–3 June 2022; pp. 721–726.

- Lyu, M.; Zhao, Y.; Huang, C.; Huang, H. Unmanned aerial vehicles for search and rescue: A survey. Remote Sens. 2023, 15, 3266.

- Braga, J.; Shiguemori, E.; Velho, H. Odometria Visual para a Navegação Autônoma de VANT. Rev. Cereus 2019, 11, 184–194.

- Cho, S.; Matsushita, Y.; Lee, S. Removing non-uniform motion blur from images. In Proceedings of the 2007 IEEE 11th International Conference on Computer Vision, Rio de Janeiro, Brazil, 14–21 October 2007; pp. 1–8.

- Li, Z.; Gao, Z.; Yi, H.; Fu, Y.; Chen, B. Image Deblurring with Image Blurring. IEEE Trans. Image Process. 2023, 32, 5595–5609.

- Fan, L.; Zhang, F.; Fan, H.; Zhang, C. Brief review of image denoising techniques. Vis. Comput. Ind. Biomed. Art 2019, 2, 7.

- Ibrahim, H.; Kong, N. Brightness preserving dynamic histogram equalization for image contrast enhancement. IEEE Trans. Consum. Electron. 2007, 53, 1752–1758.

- Park, S.; Park, M.; Kang, M. Super-resolution image reconstruction: A technical overview. IEEE Signal Process. Mag. 2003, 20, 21–36.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Bengio, Y. Generative adversarial networks. Commun. ACM 2020, 63, 139–144.

- Ledig, C.; Theis, L.; Huszár, F.; Caballero, J.; Cunningham, A.; Acosta, A.; Shi, W. Photo-realistic single image super-resolution using a generative adversarial network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4681–4690.

- Yuan, Y.; Liu, S.; Zhang, J.; Zhang, Y.; Dong, C.; Lin, L. Unsupervised image super-resolution using cycle-in-cycle generative adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 701–710.

- Wang, X.; Yu, K.; Wu, S.; Gu, J.; Liu, Y.; Dong, C.; Qiao, Y.; Loy, C. Esrgan: Enhanced super-resolution generative adversarial networks. Lect. Notes Comput. Sci. 2019, 11133, 63–79.

- Bell-Kligler, S.; Shocher, A.; Irani, M. Blind super-resolution kernel estimation using an internal-gan. Adv. Neural Inf. Process. Syst. 2019, 32.

- Zhang, K.; Gool, L.; Timofte, R. Deep unfolding network for image super-resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 3217–3226.

- Zhang, M.; Ling, Q. Supervised pixel-wise GAN for face super-resolution. IEEE Trans. Multimed. 2020, 23, 1938–1950.

- Zhang, K.; Liang, J.; Van Gool, L.; Timofte, R. Designing a practical degradation model for deep blind image super-resolution. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 4791–4800.

- Wang, X.; Xie, L.; Dong, C.; Shan, Y. Real-esrgan: Training real-world blind super-resolution with pure synthetic data. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 1905–1914.

- Wang, X.; Sun, L.; Chehri, A.; Song, Y. A Review of GAN-Based Super-Resolution Reconstruction for Optical Remote Sensing Images. Remote Sens. 2023, 15, 5062.

- Bok, V.; Langr, J. GANs in Action: Deep Learning with Generative Adversarial Networks; Manning Publishing: New York, NY, USA, 2019.

- Parsif.al. Available online: https://parsif.al (accessed on 25 April 2024).

- Yu, J.; Xue, H.; Liu, B.; Wang, Y.; Zhu, S.; Ding, M. GAN-Based Differential Private Image Privacy Protection Framework for the Internet of Multimedia Things. Sensors 2021, 21, 58.

- Gipiškis, R.; Chiaro, D.; Preziosi, M.; Prezioso, E.; Piccialli, F. The impact of adversarial attacks on interpretable semantic segmentation in cyber–physical systems. IEEE Syst. J. 2023, 17, 5327–5334.

- Hu, M.; Ju, X. Two-stage insulator self-explosion defect detection method based on Mask R-CNN. In Proceedings of the 2nd IEEE International Conference on Intelligent Computing and Human-Computer Interaction (ICHCI), Shenyang, China, 17–19 November 2021; pp. 13–18.

- Bouguettaya, A.; Zarzour, H.; Kechida, A.; Taberkit, A. Vehicle detection from UAV imagery with deep learning: A review. IEEE Trans. Neural Netw. Learn. Syst. 2021, 33, 6047–6067.

- Gan, Z.; Xu, H.; He, Y.; Cao, W.; Chen, G. Autonomous landing point retrieval algorithm for uavs based on 3d environment perception. In Proceedings of the 2021 IEEE 7th International Conference on Virtual Reality (ICVR), Foshan, China, 20–22 May 2021; pp. 104–108.

- Shen, Y.; Lee, H.; Kwon, H.; Bhattacharyya, S. Progressive transformation learning for leveraging virtual images in training. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 835–844.

- Chen, X.; Li, T.; Liu, H.; Huang, Q.; Gan, X. A GPS Spoofing Detection Algorithm for UAVs Based on Trust Evaluation. In Proceedings of the IEEE 13th International Conference on CYBER Technology in Automation Control and Intelligent Systems (CYBER), Qinhuangdao, China, 11–14 July 2023; pp. 315–319.

- More, D.; Acharya, S.; Aryan, S. SRGAN-TQT, an Improved Motion Tracking Technique for UAVs with Super-Resolution Generative Adversarial Network (SRGAN) and Temporal Quad-Tree (TQT); SAE Technical Paper; SAE: London, UK, 2022.

- Gong, Y.; Liu, Q.; Que, L.; Jia, C.; Huang, J.; Liu, Y.; Zhou, J. Raodat: An energy-efficient reconfigurable AI-based object detection and tracking processor with online learning. In Proceedings of the 2021 IEEE Asian Solid-State Circuits Conference (A-SSCC), Busan, Republic of Korea, 7–10 November 2021; pp. 1–3.

- Gong, Y.; Zhang, T.; Guo, H.; Liu, Q.; Que, L.; Jia, C.; Zhou, J. An energy-efficient reconfigurable AI-based object detection and tracking processor supporting online object learning. IEEE Solid-State Circuits Lett. 2022, 5, 78–81.

- Kostin, A.; Gorbachev, V. Dataset Expansion by Generative Adversarial Networks for Detectors Quality Improvement. In Proceedings of the CEUR Workshop Proceedings, Saint Petersburg, Russia, 22–25 September 2020.

- Shu, X.; Cheng, X.; Xu, S.; Chen, Y.; Ma, T.; Zhang, W. How to construct low-altitude aerial image datasets for deep learning. Math. Biosci. Eng. 2021, 18, 986–999.

- Costea, D.; Marcu, A.; Slusanschi, E.; Leordeanu, M. Creating roadmaps in aerial images with generative adversarial networks and smoothing-based optimization. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Venice, Italy, 22–29 October 2017; pp. 2100–2109.

- Tian, B.; Yan, W.; Wang, W.; Su, Q.; Liu, Y.; Liu, G.; Wang, W. Super-Resolution Deblurring Algorithm for Generative Adversarial Networks. In Proceedings of the 2017 Second International Conference on Mechanical, Control and Computer Engineering (ICMCCE), Harbin, China, 8–10 December 2017; pp. 135–140.

- Chou, Y.; Chen, C.; Liu, K.; Chen, C. Stingray detection of aerial images using augmented training images generated by a conditional generative model. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 1403–1409.

- Bi, F.; Lei, M.; Wang, Y.; Huang, D. Remote sensing target tracking in UAV aerial video based on saliency enhanced MDnet. IEEE Access 2019, 7, 76731–76740.

- Krajewski, R.; Moers, T.; Eckstein, L. VeGAN: Using GANs for augmentation in latent space to improve the semantic segmentation of vehicles in images from an aerial perspective. In Proceedings of the 2019 IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 7–11 January 2019; pp. 1440–1448.

- Zhou, J.; Vong, C.; Liu, Q.; Wang, Z. Scale adaptive image cropping for UAV object detection. Neurocomputing 2019, 366, 305–313.

- Chen, Y.; Li, J.; Niu, Y.; He, J. Small object detection networks based on classification-oriented super-resolution GAN for UAV aerial imagery. In Proceedings of the 2019 Chinese Control and Decision Conference (CCDC), Nanchang, China, 3–5 June 2019; pp. 4610–4615.

- Xing, C.; Liang, X.; Bao, Z. A small object detection solution by using super-resolution recovery. In Proceedings of the 2019 IEEE 7th International Conference on Computer Science and Network Technology (ICCSNT), Dalian, China, 19–20 October 2019; pp. 313–316.