Abstract

Compared to images captured from ground-level perspectives, objects in UAV images are often more challenging to track due to factors such as long-distance shooting, occlusion, and motion blur. Traditional multi-object trackers are not well-suited for UAV multi-object tracking tasks. To address these challenges, we propose an online multi-object tracking network, OMCTrack. To better handle object occlusion and re-identification, we designed an occlusion perception module that re-identifies lost objects and manages occlusion without increasing computational complexity. By employing a simple yet effective hierarchical association method, this module enhances tracking accuracy and robustness under occlusion conditions. Additionally, we developed an adaptive motion compensation module that leverages prior information to dynamically detect image distortion, enabling the system to handle the UAV’s complex movements. The results from the experiments on the VisDrone2019 and UAVDT datasets demonstrate that OMCTrack significantly outperforms existing UAV video tracking methods.

1. Introduction

Multi-object tracking (MOT) is one of the core tasks in computer vision and is widely used in video surveillance, human–computer interaction, traffic management, behavior analysis, and other fields [1,2]. MOT is typically built on a tracking-by-detection framework with two main stages: object detection and data association. In the detection stage, the system locates the bounding boxes of potential objects in each video frame. In the data association stage, objects are matched across frames based on their appearance and motion characteristics [3,4,5,6]. Owing to their high maneuverability and flexibility, UAVs can overcome the limited field of view and short tracking distance of fixed surveillance cameras; UAVs equipped with visual MOT systems have therefore shown great potential in fields such as military reconnaissance, ground object strikes, public safety monitoring, and disaster rescue [7,8].

UAV-based visual object tracking benefits from a broad aerial perspective, which allows it to capture more extensive information and significantly enhances data collection. This can provide valuable insights for object intent recognition and support situational assessments such as object threat evaluation and attack intent analysis [9]. However, UAV visual object tracking faces considerable challenges compared with ground-based tracking [10,11]. As illustrated in Figure 1, in the detection stage, the wide aerial field of view introduces numerous interfering objects, and the blending of objects into the background reduces their distinguishability, making it difficult to construct an accurate object model. In the data association stage, when the UAV operates at high altitude, larger shadows, lower resolution and clarity, and the smaller scale of tracked objects leave few usable features and textures, which complicates feature extraction. In addition, frequent occlusions between objects, and between objects and the background [12,13], can lead to tracking drift or to lost objects being mistakenly initialized as new ones. The rapid movement of the UAV platform also introduces viewpoint changes, motion blur [14], and frame rotation or translation, all of which distort the image. These factors enlarge the deviation between the predicted and actual trajectories, and this error accumulates over time. Meanwhile, the Euclidean distance between objects in the image shrinks, especially in crowded scenes, which further complicates object differentiation [15]. Together, these effects increase the likelihood of tracking drift and trajectory loss, making robust, stable, long-term UAV object tracking more difficult to achieve.

Figure 1.

Illustrations of challenging scenes in videos captured by different drones.

To address these challenges and achieve robust UAV multi-object tracking, we propose a new multi-object tracker named OMCTrack. First, to tackle short-term occlusion, we introduce an occlusion perception module that leverages both temporal and spatial cues to assess an object's occlusion confidence and determines the degree of occlusion using object occlusion coefficients. We also use an extended IoU to resolve short-term occlusion without increasing computational complexity. Second, to mitigate association confusion and ID switching in dense scenes, we propose a simple yet effective hierarchical association method that handles ambiguity from a finer-grained perspective. For non-occluded objects, we use important geometric properties to supplement motion information and reduce incorrect matches. For occluded objects, the extended IoU expands the object's bounding box by combining the prediction box with a time-dependent expansion coefficient, thereby improving tracking accuracy and robustness under occlusion. Third, to address the perspective changes and motion blur caused by rapid UAV movement, we design an adaptive motion compensation module. This module adaptively measures the degree of image distortion during tracking and, when needed, compensates for motion using the affine transformation matrix between adjacent frames. This reduces the deviation between predicted and actual positions while preserving the tracker's real-time performance.

We conducted experiments on two public UAV benchmark datasets, VisDrone2019 and UAVDT, to evaluate the proposed algorithm. The results show that the proposed method accurately tracks multiple objects from a UAV perspective, predicts occlusions, and re-identifies objects lost during short-term occlusions. It also substantially reduces the number of ID switches and trajectory fragments. By accounting for the characteristics of objects as seen from a UAV, the approach improves overall multi-object tracking performance in UAV scenarios. The main contributions of this paper are summarized as follows:

- (1) We propose an occlusion perception module to address short-term occlusion in MOT; it re-identifies lost objects and reduces ID switches and trajectory fragments without increasing computational cost.

- (2) To resolve association confusion in dense scenes, we propose a simple and effective hierarchical association method that handles ambiguity from a finer-grained perspective, uses the geometric properties of objects to supplement motion cues, and reduces incorrect matches.

- (3) To counter platform motion interference in UAV tracking, we design an adaptive motion compensation module that autonomously perceives image distortion, alleviates the real-time cost of camera motion compensation, and reduces the influence of platform motion during UAV tracking.

2. Related Work

In this section, we review recent research on multi-object tracking within the tracking-by-detection paradigm, covering data association, occlusion handling, and motion compensation.

2.1. Tracking-by-Detection

In multi-object tracking (MOT), the objective is to accurately extract and maintain the trajectory of each object throughout a video sequence. The tracking-by-detection (TBD) framework is particularly effective for this purpose. It decomposes the MOT task into three key subtasks: object detection, feature extraction, and data association. TBD treats tracking as a data association problem: objects are first detected in each frame, and these detections are then linked across frames. This approach handles newly appearing objects well, but its performance depends heavily on detection accuracy. To improve detection, many studies employ advanced detectors. For instance, the MOT17 benchmark provides public detections from DPM, Faster R-CNN, and SDP, while YOLOX is widely adopted in MOT tasks because of its strong detection performance.

In addition, to improve the accuracy of cross-frame target association, some methods focus on better prediction of target motion states. The Kalman filter is a widely used tool for this purpose, and some studies add camera motion compensation to aid prediction. For instance, SORT [16] is a real-time multi-target tracking method within the TBD framework. It uses Faster R-CNN for object detection and combines the Kalman filter with the Hungarian algorithm for data association. Although SORT handles short-term occlusions by computing the IoU distance between bounding boxes in adjacent frames, it struggles with long-term occlusions and interference from similar-looking targets, which leads to frequent identity switches. To address these limitations, DeepSORT [17] extends SORT with a ReID network that extracts appearance features for a deep association metric. By considering both motion and appearance information, it reduces identity switches by 45% compared with SORT. MF-SORT [18] improves tracking efficiency by integrating target motion characteristics into the data association process, improving performance with static cameras. BoT-SORT [19] counters camera shake with global motion compensation and fuses IoU distance and cosine distance matrices for matching. Finally, ByteTrack [20] tackles the tracking of low-score targets by splitting detection boxes into high-score and low-score sets and matching them in two successive stages.
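The SORT-style association step described above (IoU between predicted track boxes and detections, one-to-one assignment, then rejection of low-overlap pairs) can be sketched as follows. This is a minimal illustration, not any tracker's actual code: boxes are assumed to be `(x1, y1, x2, y2)`, the `iou_min=0.3` gate is a hypothetical value, and the assignment is solved by brute force over permutations, which finds the same optimum as the Hungarian algorithm but is only practical for a handful of boxes.

```python
from itertools import permutations


def iou(a, b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def associate(tracks, dets, iou_min=0.3):
    """Maximize total IoU with a one-to-one assignment, then drop weak pairs.

    Brute force over permutations: same optimum as the Hungarian algorithm,
    but exponential, so only suitable for illustration.
    """
    n_t, n_d = len(tracks), len(dets)
    if n_t <= n_d:
        candidates = (list(zip(range(n_t), p)) for p in permutations(range(n_d), n_t))
    else:
        candidates = (list(zip(p, range(n_d))) for p in permutations(range(n_t), n_d))
    best = max(candidates, key=lambda pairs: sum(iou(tracks[t], dets[d]) for t, d in pairs))
    # Gate after assignment: pairs with too little overlap are not accepted.
    matches = sorted((t, d) for t, d in best if iou(tracks[t], dets[d]) >= iou_min)
    matched_t = {t for t, _ in matches}
    matched_d = {d for _, d in matches}
    unmatched_tracks = [t for t in range(n_t) if t not in matched_t]
    unmatched_dets = [d for d in range(n_d) if d not in matched_d]
    return matches, unmatched_tracks, unmatched_dets


tracks = [(0, 0, 10, 10), (20, 20, 30, 30)]
dets = [(21, 21, 31, 31), (1, 1, 11, 11), (100, 100, 110, 110)]
print(associate(tracks, dets))  # ([(0, 1), (1, 0)], [], [2])
```

In practice, a library solver such as SciPy's `linear_sum_assignment` on a `1 - IoU` cost matrix would replace the brute-force search.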

2.2. Occlusion Handling

With the wide field of view provided by UAVs, complex aerial environments present numerous challenges, particularly frequent occlusions, which significantly affect target detection and tracking. When an object is occluded, its visible appearance and motion characteristics are greatly diminished, which increases the risk of detection failure and can interrupt its trajectory. Effectively handling occlusion has therefore become a key focus of multi-object tracking research. Proposed solutions include a hierarchical association algorithm based on online discriminative appearance learning [21] and a multi-pedestrian detection and tracking method designed specifically for partial occlusion [22]. These methods mitigate partial occlusion by improving detection, but in real-world scenarios even the most advanced detectors still produce false alarms, missed detections, and localization errors. To reduce the impact of occlusion on detection, the STAM algorithm [23] uses the non-occluded parts of the target for data association through an occlusion-aware spatiotemporal attention mechanism, which makes feature extraction more robust to some extent; however, it increases computational complexity and can compromise real-time performance. PermaTrack [24] addresses occlusion by combining simulated and real data, while TADAM [25] integrates position prediction and data association into a unified model to improve tracking stability under occlusion. MeMOT [26] stores the appearance features of track fragments in a memory bank for later retrieval and matching.
Additionally, multi-view multi-object tracking technology has gained attention for its ability to overcome occlusion by leveraging the geometric consistency of targets from different viewing angles [27], a method that can compensate for the limitations of single-drone tracking by utilizing multiple UAVs working in tandem.

In the UAV multi-object tracking experiments, it was observed that occlusion often causes the object’s appearance characteristics to drift due to factors such as long-distance shooting and lighting changes. In such cases, kinematic features prove to be more robust and reliable compared to appearance-based features. To address this issue, this paper proposes an occlusion perception module that analyzes both temporal and spatial cues of the target. This module effectively re-identifies lost targets and reduces identity switching and trajectory fragmentation, all without adding significant computational overhead.

2.3. Motion Models

Many existing object detection and tracking algorithms rely on motion models to predict the trajectory of objects over time and space. The Kalman filter (KF) [28], a classic motion model, predicts and updates the target’s motion state periodically using recursive Bayesian estimation. To enhance prediction accuracy, researchers have explored advanced KF variants aimed at improving the model’s ability to predict target motion. However, in complex real-world scenarios, targets often exhibit non-linear motion due to camera movement, which can cause discrepancies between KF-based predictions and the actual target position. Addressing the impact of camera movement has become a key challenge in improving the robustness of tracking algorithms. To tackle this, researchers have introduced camera motion compensation (CMC) [29,30,31,32,33] techniques, which correct image distortion caused by camera movement through image registration methods, such as enhanced correlation coefficient (ECC) [34] maximization or ORB [35] feature matching.
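To make the recursive predict/update cycle of the Kalman filter concrete, here is a minimal sketch for a one-dimensional constant-velocity model with state `[pos, vel]`. It is an illustrative assumption, not the motion model used by any specific tracker: the process noise is simplified to `Q = q * I`, the measurement observes position only (`H = [1, 0]`, `R = r`), and the 2×2 matrix algebra is expanded by hand so that no external libraries are needed.

```python
def kf_predict(x, P, dt=1.0, q=1e-2):
    """Predict step for a constant-velocity model with state x = [pos, vel].

    Transition F = [[1, dt], [0, 1]]; process noise simplified to Q = q * I.
    """
    pos, vel = x
    x_pred = [pos + dt * vel, vel]
    (p00, p01), (p10, p11) = P
    # Covariance propagation P' = F P F^T + Q, expanded for the 2x2 case.
    P_pred = [
        [p00 + dt * (p01 + p10) + dt * dt * p11 + q, p01 + dt * p11],
        [p10 + dt * p11, p11 + q],
    ]
    return x_pred, P_pred


def kf_update(x, P, z, r=1.0):
    """Update step with a position-only measurement z (H = [1, 0], R = r)."""
    (p00, p01), (p10, p11) = P
    s = p00 + r                 # innovation covariance H P H^T + R
    k0, k1 = p00 / s, p10 / s   # Kalman gain K = P H^T / s
    y = z - x[0]                # innovation (measurement residual)
    x_new = [x[0] + k0 * y, x[1] + k1 * y]
    # P_new = (I - K H) P
    P_new = [
        [(1 - k0) * p00, (1 - k0) * p01],
        [p10 - k1 * p00, p11 - k1 * p01],
    ]
    return x_new, P_new


x, P = kf_predict([0.0, 1.0], [[1.0, 0.0], [0.0, 1.0]])
x, P = kf_update(x, P, z=1.5)
print(x)  # position pulled from the prediction (1.0) toward the measurement (1.5)
```

Camera motion breaks the constant-velocity assumption encoded in `F`, which is exactly the mismatch that camera motion compensation tries to correct before this predict step.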

Despite these advances, existing solutions have not fully overcome these challenges. A common approach is to correct image distortion using global motion compensation strategies, which can be categorized into two main types: offline and online methods. Offline methods, although faster, often require the pre-computation of affine transformation matrices between video frames, limiting their applicability to specific scenarios. Online methods, while more adaptable, tend to significantly impact the real-time performance of tracking algorithms due to their high computational complexity. Additionally, although ReID features can improve the discriminatory power of tracking algorithms, their performance is limited, particularly when handling small-sample, low-resolution, or visually similar targets. Furthermore, ReID’s high computational demands make it less suitable for resource-constrained UAV tracking tasks.

2.4. Datasets

This study focuses on two key datasets in UAV multi-target tracking, VisDrone2019 and UAVDT, and analyzes related algorithms on them. Given the maneuverability of UAVs, particularly first-person view (FPV) UAVs that can fly close to the ground, it is also important to assess target tracking performance from dynamic, near-horizontal perspectives. In this context, data from traffic speed cameras and car dashcams are especially relevant. Ref. [36] introduced CADP, a dataset dedicated to traffic accident analysis, and observed a significant performance degradation in pedestrian detection. Similarly, an analysis of the KITTI Vision Benchmark Suite in Ref. [37] shows that algorithm performance in real-world scenarios often falls short of results achieved in controlled laboratory settings. Ref. [38] details the construction of a traffic accident dataset, while Ref. [39] presents BDD100K, a large-scale driving video dataset of 100K videos annotated for 10 tasks, aimed at evaluating progress in image recognition for autonomous driving. Moreover, Ref. [40] introduces a city-scale traffic surveillance dataset that publishes trajectory data covering almost all vehicles in a city. Together, these datasets broaden the diversity and complexity of scenarios relevant to UAV multi-target tracking and offer useful references for related research.

3. Proposed Method

3.1. Overall Framework

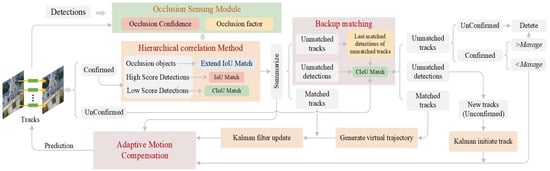

For a given video sequence or real-time video stream captured by a drone, the goal of OMCTrack is to obtain the category, bounding box, and track ID of each of the N objects in every video frame. Figure 2 shows the overall framework of OMCTrack. We use YOLOv8 as the detector to process each video frame and obtain the detection results, where frame t contains N detections. Each detection is represented by the upper-left coordinates of its bounding box together with the box width and height. During tracking, we maintain a set of track fragments; each fragment is identified by its ID and records the image position of the object in each frame t along with the fragment's initialization time.

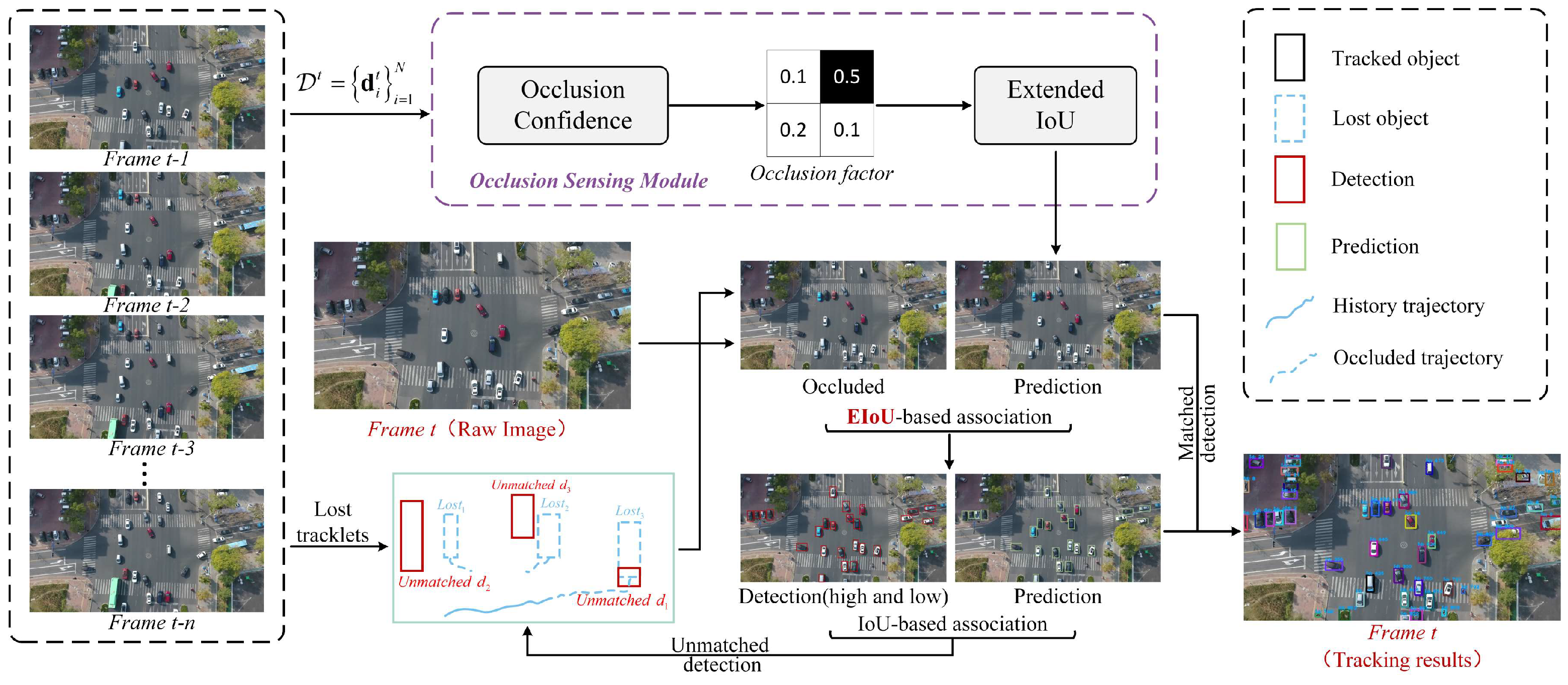

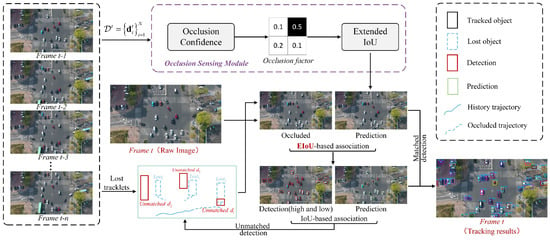

Figure 2.

OMCTrack algorithm diagram.

3.2. Hierarchical Correlation of Fused Occlusion Perception

When an object becomes occluded and then reappears, its detection confidence score typically drops from high to low and then rises again. We therefore adopt a multi-stage matching strategy and propose a simple and effective hierarchical association method, whose overall framework is shown in Figure 3. Associating clearly visible objects first and ambiguous ones afterward mirrors the way humans tend to track objects.

Figure 3.

The overall framework of the hierarchical association method with fused occlusion perception.

For each video frame, the detector outputs object bounding boxes and detection scores, and object trajectories are initialized from the detection boxes in the first frame. Our hierarchical matching strategy first divides all detection boxes into high-score and low-score sets according to a detection score threshold. In the first matching stage, we compute the IoU similarity matrix between the high-score boxes and the predicted track boxes and pass it to the Hungarian algorithm to complete the matching. In the second stage, we use CIoU [41] as the similarity measure to match the low-score detection boxes with the tracks left unmatched in the first stage; low-score boxes usually involve motion blur or significant occlusion, so their appearance representation is unreliable. For tracks that remain unmatched after the first two stages, we retain their historical trajectory information and pass them to the occlusion sensing module (OSM), which identifies occluded objects according to their occlusion probability and rematches them using the extended IoU.
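The second matching stage above uses CIoU, which subtracts a center-distance penalty and an aspect-ratio penalty from IoU, so it can still rank candidates when overlap is small. Below is a minimal sketch following the standard CIoU definition; it assumes boxes in `(x1, y1, x2, y2)` format with positive width and height, details the paper itself does not specify.

```python
import math


def ciou(a, b):
    """Complete IoU between boxes (x1, y1, x2, y2).

    CIoU = IoU - rho^2 / c^2 - alpha * v, where rho is the distance between
    box centers, c is the diagonal of the smallest enclosing box, and v
    penalizes aspect-ratio mismatch.
    """
    # Plain IoU
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    wa, ha = a[2] - a[0], a[3] - a[1]
    wb, hb = b[2] - b[0], b[3] - b[1]
    union = wa * ha + wb * hb - inter
    iou = inter / union if union > 0 else 0.0
    # Squared distance between box centers
    rho2 = ((a[0] + a[2]) / 2 - (b[0] + b[2]) / 2) ** 2 + ((a[1] + a[3]) / 2 - (b[1] + b[3]) / 2) ** 2
    # Squared diagonal of the smallest box enclosing both boxes
    c2 = (max(a[2], b[2]) - min(a[0], b[0])) ** 2 + (max(a[3], b[3]) - min(a[1], b[1])) ** 2
    # Aspect-ratio consistency term and its trade-off weight
    v = (4 / math.pi ** 2) * (math.atan(wb / hb) - math.atan(wa / ha)) ** 2
    alpha = v / ((1 - iou) + v) if (1 - iou) + v > 0 else 0.0
    return iou - (rho2 / c2 if c2 > 0 else 0.0) - alpha * v


print(ciou((0, 0, 10, 10), (20, 0, 30, 10)))  # negative: no overlap, centers far apart
```

Unlike plain IoU, which is zero for every non-overlapping pair, CIoU still prefers the closer of two non-overlapping candidates, which is useful for blurred or partially occluded low-score boxes.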

High-score detections that do not match any track are used to initialize new tracks. Tracks without a matching detection in the current frame are retained for up to 30 frames so that they can be matched again if the object reappears. This retention-and-rematching mechanism for unmatched trajectories improves the robustness of the tracker, and the hierarchical strategy as a whole yields a systematic, stage-by-stage association process.
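The control flow of the hierarchical strategy described above (score split, two matching stages, retention of lost tracks for 30 frames, and creation of new tracks from unmatched high-score detections) can be sketched as follows. This is a simplified illustration under several labeled assumptions: it matches against each track's last box instead of a Kalman prediction, uses plain IoU in stage 2 instead of CIoU, uses greedy highest-IoU-first matching instead of the Hungarian algorithm, and omits the third OSM/extended-IoU stage. The thresholds `high=0.6` and `iou_thr=0.3` are hypothetical; `max_lost=30` follows the text.

```python
from dataclasses import dataclass


def iou(a, b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def greedy_match(track_boxes, det_boxes, thr):
    """Match highest-IoU pairs first (a simplification of Hungarian matching)."""
    pairs = sorted(
        ((iou(t, d), i, j) for i, t in enumerate(track_boxes) for j, d in enumerate(det_boxes)),
        reverse=True,
    )
    used_t, used_d, matches = set(), set(), []
    for score, i, j in pairs:
        if score < thr:
            break
        if i not in used_t and j not in used_d:
            used_t.add(i)
            used_d.add(j)
            matches.append((i, j))
    return matches


@dataclass
class Track:
    tid: int
    box: tuple
    lost: int = 0  # consecutive frames without a matched detection


def step(tracks, dets, next_id, high=0.6, iou_thr=0.3, max_lost=30):
    """One frame of two-stage association; dets is a list of (box, score)."""
    high_dets = [box for box, s in dets if s >= high]
    low_dets = [box for box, s in dets if s < high]

    # Stage 1: high-score detections against all tracks.
    m1 = greedy_match([t.box for t in tracks], high_dets, iou_thr)
    for i, j in m1:
        tracks[i].box, tracks[i].lost = high_dets[j], 0
    stage1 = {i for i, _ in m1}
    left = [i for i in range(len(tracks)) if i not in stage1]

    # Stage 2: low-score detections against tracks left over from stage 1.
    m2 = greedy_match([tracks[i].box for i in left], low_dets, iou_thr)
    for a, j in m2:
        tracks[left[a]].box, tracks[left[a]].lost = low_dets[j], 0
    matched = stage1 | {left[a] for a, _ in m2}

    # Unmatched tracks are kept as "lost" for up to max_lost frames.
    for i, t in enumerate(tracks):
        if i not in matched:
            t.lost += 1
    tracks = [t for t in tracks if t.lost <= max_lost]

    # Unmatched high-score detections start new tracks; low-score ones do not.
    used_high = {j for _, j in m1}
    for j, box in enumerate(high_dets):
        if j not in used_high:
            tracks.append(Track(next_id, box))
            next_id += 1
    return tracks, next_id
```

For example, a track created from a high-score box can be recovered in stage 2 by a low-score detection in the next frame, while a low-score detection on its own never spawns a new track.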

3.2.1. Occlusion Sensing Module

Occlusion Confidence. From a UAV's broad aerial field of view, object tracking models often face increased noise interference and mutual occlusion between objects and the background. This can lead to tracking drift and loss, particularly in dense environments where short-term occlusions are common. Such occlusions can cause mismatches between objects, resulting in identity switches and tracking failures. In online tracking, a track whose prediction is matched to a detection is marked as active, while unmatched tracks are either retained or discarded. Occluded objects are frequently discarded, which leads to ID switches. The proposed occlusion confidence determines whether an object should be classified as occluded. Temporal cues assess the object's presence: if it is detected in consecutive video frames, its likelihood of being present increases; conversely, if it is not detected in several consecutive frames, this likelihood decreases, which may indicate a false detection. Spatial cues are also considered: a larger bounding box means that the object covers a greater portion of the image, and, among objects of similar physical size, a larger bounding box suggests that the object is closer to the camera and therefore less likely to be occluded. By integrating both temporal and spatial cues, we define the occlusion confidence of the object as follows:

where the weighting coefficients are hyperparameters. The temporal term is computed from the number of frames elapsed since the object's ID was initialized and the number of consecutive frames in which the object has gone unmatched; objects that are successfully matched in the current frame are not occlusion candidates and are therefore excluded from this computation. The spatial term uses the object's bounding-box area normalized by the average bounding-box area of all objects in the current frame; this normalization removes the effect of camera position on apparent object size.

Global Occlusion Factor. In addition to assessing the occlusion state of an object based on occlusion confidence, we also introduce an object occlusion coefficient. The first type of occlusion occurs when other objects are situated between the camera and the target object, causing significant overlap between the bounding box of the occluding objects and the bounding box of the occluded object. In such cases, the Intersection over Union (IoU) decreases due to the large overlap with the occluding objects, leading to matching failures. To address this issue, we propose a global occlusion coefficient to evaluate the extent of mutual coverage between objects in the current frame. We define the global occlusion coefficient () for the object as follows:

where the numerator is the intersection of the bounding boxes of objects i and j, and the denominator is the area of the bounding box of object i.
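The following sketch illustrates the kind of quantity the global occlusion coefficient measures: how much of object i's box is covered by other objects' boxes in the same frame, normalized by object i's own area. The aggregation over other objects j is an assumption here (pairwise overlaps are summed), since the paper's equation is not reproduced; boxes are assumed to be `(x1, y1, x2, y2)`.

```python
def area(b):
    """Area of a box (x1, y1, x2, y2); degenerate boxes get zero area."""
    return max(0.0, b[2] - b[0]) * max(0.0, b[3] - b[1])


def intersection(a, b):
    """Area of the overlap between two boxes."""
    w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    return w * h


def global_occlusion(boxes):
    """Per-object fraction of its own box area covered by other boxes.

    ASSUMPTION: pairwise overlaps are aggregated by summation; the paper's
    exact aggregation may differ.
    """
    coeffs = []
    for i, bi in enumerate(boxes):
        a = area(bi)
        covered = sum(intersection(bi, bj) for j, bj in enumerate(boxes) if j != i)
        coeffs.append(covered / a if a > 0 else 0.0)
    return coeffs


print(global_occlusion([(0, 0, 10, 10), (5, 0, 15, 10), (100, 100, 110, 110)]))
# [0.5, 0.5, 0.0]
```

With summation, heavily crowded objects can exceed 1, so a real implementation might clip or use a maximum instead; which choice OMCTrack makes is not stated here.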

Local Occlusion Factor. In drone imagery, objects are often occluded by the background because of long-distance shooting. We therefore compute a local occlusion coefficient for each object from the bounding box information detected in the current frame, specifically,

where the area term is that of the object's detected box in the current frame. The local occlusion coefficient measures the decay rate of the occluded object's bounding-box area; we combine this indicator with the occlusion confidence to determine the occlusion state between the object and the background.

3.2.2. Extended IoU

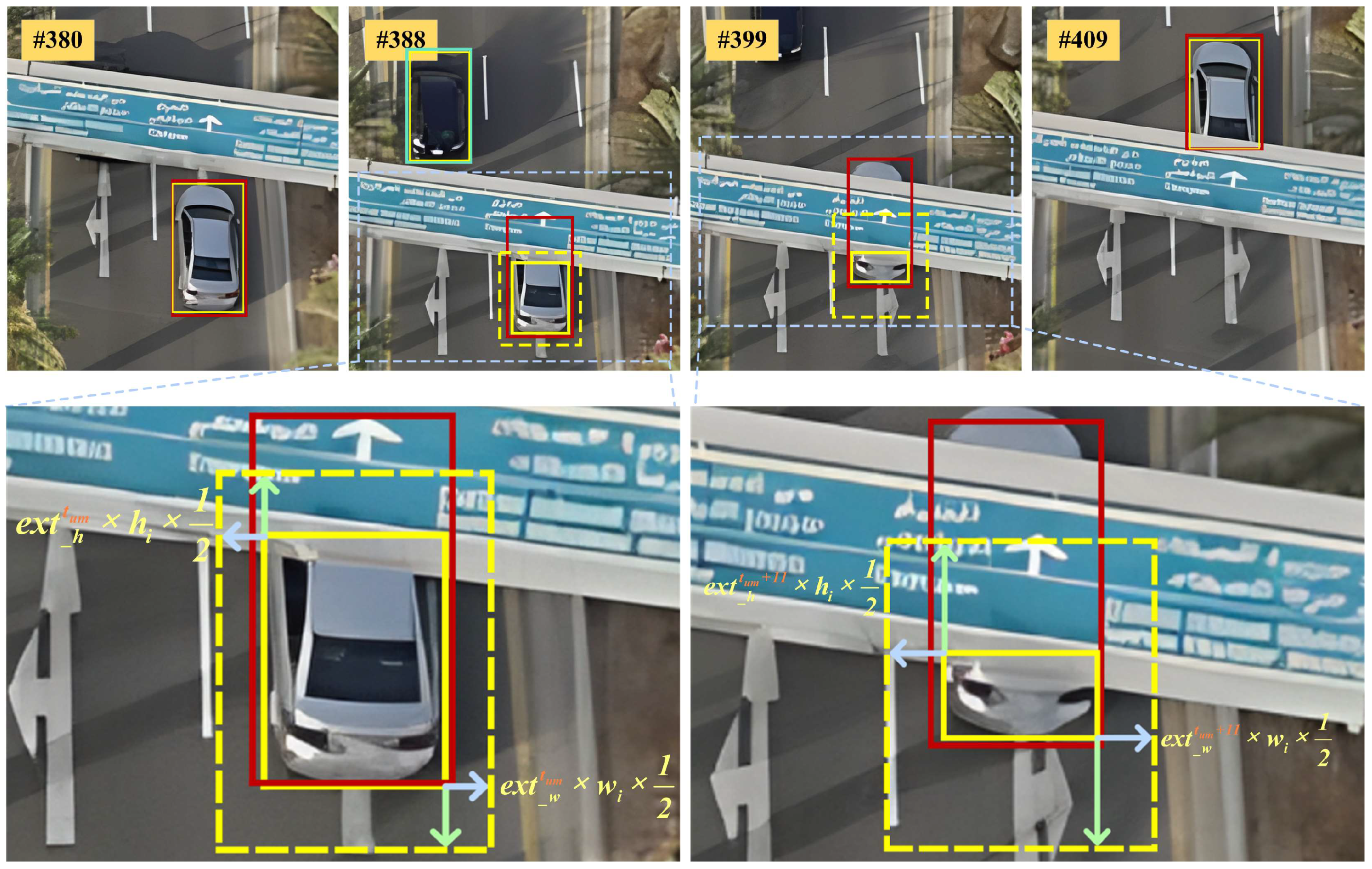

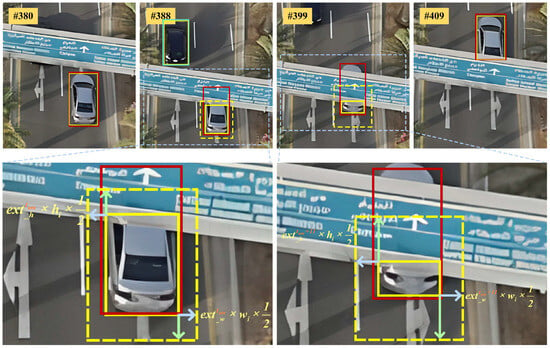

For occluded objects, we use an extended IoU for re-identification and matching, as shown in Figure 4. The bounding box of the occluded object is expanded according to its uncertainty, which grows with each additional occluded frame. The IoU is then computed between the expanded bounding box and the remaining unmatched prediction boxes, and the Hungarian algorithm is applied to match the occluded object. Note that the expansion only models the increased positional uncertainty; the actual size of the occluded object is unchanged. The extended IoU is defined as follows:

where the first operand is the predicted bounding box and the second is the extended bounding box, which is defined as

where the box parameters are those output by the detector, and the width and height expansion coefficients of the extended bounding box are defined as

where the frame count is the number of consecutive unmatched frames for the object, so the expansion coefficients grow frame by frame while the object remains occluded. Moreover, we do not rely solely on expanding the detection box of the occluded object. Because the direction of occlusion is uncertain, the aspect ratio of an occluded object's detection box may differ significantly from its true aspect ratio, so accurate rematching cannot be achieved by expanding the detection box alone. We consider the predicted bounding box more reliable because its aspect ratio is closer to the object's true proportions. By combining the prediction box with the time-based expansion coefficient, we expand the bounding box of the occluded object and thereby improve the tracker's accuracy and robustness under occlusion.
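A minimal sketch of the extended-IoU idea: the predicted box of an occluded track keeps its center and aspect ratio but is scaled up as the number of consecutive unmatched frames `k` grows, so a detection that reappears slightly off the prediction can still overlap it. The linear growth law `1 + beta * k`, the shared width/height coefficient, and `beta=0.1` are hypothetical choices for illustration; the paper defines its own expansion coefficients.

```python
def iou(a, b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def expand_box(box, k, beta=0.1):
    """Scale a predicted box about its center by 1 + beta * k.

    ASSUMPTION: linear growth with the number of consecutive unmatched
    frames k and one coefficient for both width and height (hypothetical).
    """
    x1, y1, x2, y2 = box
    cx, cy = (x1 + x2) / 2, (y1 + y2) / 2
    s = 1.0 + beta * k
    half_w, half_h = s * (x2 - x1) / 2, s * (y2 - y1) / 2
    return (cx - half_w, cy - half_h, cx + half_w, cy + half_h)


def extended_iou(pred_box, det_box, k, beta=0.1):
    """IoU between the expanded prediction of an occluded track and a box."""
    return iou(expand_box(pred_box, k, beta), det_box)


pred, det = (0, 0, 10, 10), (12, 0, 22, 10)
print(extended_iou(pred, det, k=0), extended_iou(pred, det, k=5))  # 0.0, then > 0
```

Because the expanded box is derived from the Kalman prediction rather than from the (possibly truncated) detection, it keeps a plausible aspect ratio, which is the motivation given in the text.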

Figure 4.

Example of extended IoU application. The bounding box output by the detector is depicted with a yellow solid rectangle, the prediction box output by the Kalman filter is depicted with a green or red solid rectangle, and the extended bounding box is depicted with a yellow dashed rectangle. The expansion factor increases frame by frame while the object remains occluded.

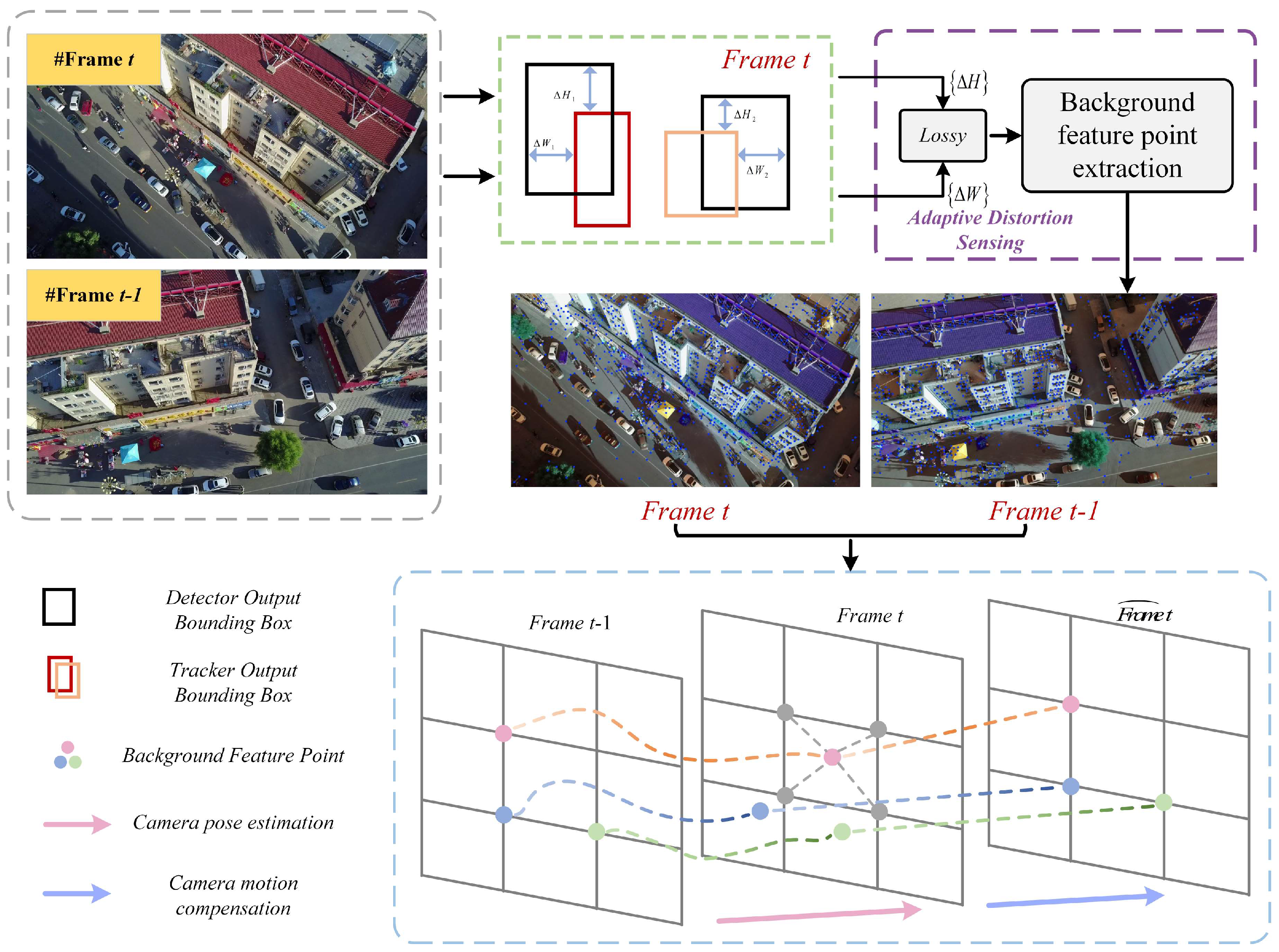

3.3. Adaptive Motion Compensation

3.3.1. Adaptive Distortion Sensing

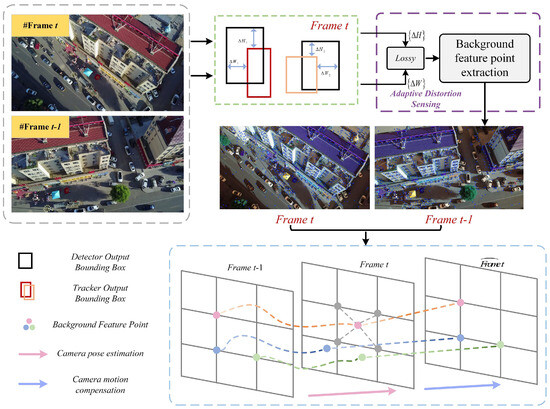

In UAV object tracking, UAV movement, unavoidable camera shake, and similar factors introduce camera motion interference, producing high noise levels and image distortion in both detection and prediction results. At any moment t, the cumulative estimation error of the Kalman filter is proportional to the number of objects being tracked in the image; this cumulative error is computed as a spatiotemporal product, as illustrated in Figure 5. UAV movement causes significant changes in object bounding boxes, widening the gap between the Kalman filter's predicted position and the actual position in the image and leading to object loss and ID switches. A common and effective remedy is camera motion compensation, which estimates the rotation and translation between adjacent frames, models the image motion, and corrects for it. The larger the image distortion, the greater the benefit of this compensation. However, offline trackers that rely on pre-computed affine transformation matrices between adjacent frames are limited to specific scenarios, while online compensation methods often involve high computational complexity that can severely degrade the tracker's real-time performance.

Figure 5.

The overall framework of the adaptive motion compensation.

To address this, we have developed an adaptive motion compensation module that autonomously detects the need for image distortion compensation in unknown scenes. This module dynamically assesses the degree of image distortion, mitigating the impact of camera motion compensation methods on real-time performance, and corrects online for dynamic background distortions. We introduce an image distortion metric that quantifies the difference between the object position predicted by the Kalman filter and the actual object position detected in the image. This metric provides a quantitative method for assessing the extent of image distortion, defined as follows:

Here, one term is the Kalman filter's predicted bounding box for trajectory i at time t, and the other is the corresponding detector output. By computing the image distortion value during tracking, the tracker can autonomously detect camera motion in unknown scenes and compensate for it in a targeted manner, only when needed.
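The trigger logic described above (measure how far Kalman predictions drift from their matched detections, and run the expensive compensation only when that drift is large) can be sketched as follows. The specific distortion measure used here, the mean of `1 - IoU` over matched prediction/detection pairs, and the `threshold=0.5` value are illustrative assumptions, not the paper's metric; boxes are `(x1, y1, x2, y2)` and pairs are assumed to be already matched by index.

```python
def iou(a, b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def distortion(pred_boxes, det_boxes):
    """Mean (1 - IoU) between Kalman predictions and their matched detections.

    ASSUMPTION: an illustrative stand-in for the paper's distortion metric.
    """
    pairs = list(zip(pred_boxes, det_boxes))
    if not pairs:
        return 0.0
    return sum(1.0 - iou(p, d) for p, d in pairs) / len(pairs)


def needs_compensation(pred_boxes, det_boxes, threshold=0.5):
    """Run camera motion compensation only when distortion is large."""
    return distortion(pred_boxes, det_boxes) > threshold


preds = [(0, 0, 10, 10), (20, 20, 30, 30)]
print(needs_compensation(preds, preds))                                        # False
print(needs_compensation(preds, [(50, 50, 60, 60), (80, 80, 90, 90)]))         # True
```

Gating compensation this way means the costly feature matching and affine estimation are skipped on frames where the camera is effectively static.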

3.3.2. Background Motion Estimation

During tracking, when the image distortion exceeds a predefined threshold, we infer that the drone is moving or the camera is shaking. To align adjacent frames under these conditions, we employ the LightGlue [42] architecture. LightGlue is based on the Transformer and uses self-attention and cross-attention layers to match features between frames for background motion estimation; it prunes feature points at each layer and adaptively decides whether to continue inference, which significantly reduces computational cost. Because most objects of interest are moving, we concentrate on local features of the image background. We then apply the RANSAC algorithm to reject false matches and derive the affine transformation matrix between adjacent frames. Disregarding dynamic objects in this way improves the accuracy of inter-frame background motion estimation. The affine transformation matrix is defined as follows:

where the first component is the rotation part of the transformation between adjacent frames and the second is the translation vector. Based on these, we define two auxiliary parameters:

From these, we obtain the Kalman filter's predicted state vectors at time t before and after camera motion compensation:

The Kalman filter's prediction covariance matrices before and after correction are defined as follows:
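The correction of the predicted state and covariance by a 2×3 affine matrix `A = [M | T]` can be sketched as below. This follows the formulation popularized by BoT-SORT [19]: an 8-D state `[x, y, w, h, vx, vy, vw, vh]`, the 2×2 block `M` repeated along the diagonal, the translation added to the position only, and covariance transformed as `M~ P M~^T`. Whether OMCTrack uses exactly this state layout and these two parameters is an assumption; the noise adjustment mentioned in the text is not shown.

```python
def matmul(A, B):
    """Multiply two matrices stored as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def transpose(A):
    return [list(row) for row in zip(*A)]


def block_diag(M, blocks=4):
    """Place the 2x2 matrix M on the diagonal `blocks` times (8x8 for 4 blocks)."""
    n = 2 * blocks
    out = [[0.0] * n for _ in range(n)]
    for b in range(blocks):
        for r in range(2):
            for c in range(2):
                out[2 * b + r][2 * b + c] = M[r][c]
    return out


def compensate(x, P, A):
    """Warp an 8-D state [x, y, w, h, vx, vy, vw, vh] and its 8x8 covariance
    by a 2x3 affine matrix A = [M | T] (BoT-SORT-style formulation)."""
    M = [row[:2] for row in A]
    Mt = block_diag(M)
    x_new = [row[0] for row in matmul(Mt, [[v] for v in x])]
    x_new[0] += A[0][2]  # translation shifts only the position
    x_new[1] += A[1][2]
    P_new = matmul(matmul(Mt, P), transpose(Mt))
    return x_new, P_new


I8 = [[1.0 if i == j else 0.0 for j in range(8)] for i in range(8)]
x_c, P_c = compensate([10.0, 20.0, 4.0, 8.0, 1.0, 1.0, 0.0, 0.0], I8,
                      [[1.0, 0.0, 5.0], [0.0, 1.0, -3.0]])
print(x_c[:2])  # [15.0, 17.0]
```

In a real pipeline, `A` would come from the RANSAC-filtered background matches; here it is supplied by hand to keep the example self-contained.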

The Kalman filter then updates the state and covariance as follows:

These steps compute the optimal Kalman gain, the updated state estimate, and the updated covariance estimate. When image distortion exceeds a predefined threshold, the tracker adjusts the motion state, noise vector, and covariance matrix during the Kalman filter's prediction phase to correct positional deviations.
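For reference, the standard linear Kalman filter update is written below with generic symbols: predicted state $\hat{x}_{t|t-1}$, predicted covariance $P_{t|t-1}$, measurement $z_t$, observation matrix $H$, and measurement noise covariance $R_t$. When compensation is triggered, the motion-compensated prediction and covariance take the place of the uncorrected ones. These symbols are generic and may differ from the notation in the paper's own equations.

```latex
\begin{aligned}
K_t &= P_{t|t-1} H^{\top} \left( H P_{t|t-1} H^{\top} + R_t \right)^{-1} \\
\hat{x}_{t|t} &= \hat{x}_{t|t-1} + K_t \left( z_t - H \hat{x}_{t|t-1} \right) \\
P_{t|t} &= \left( I - K_t H \right) P_{t|t-1}
\end{aligned}
```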

To address the common issue of distortion in UAV image sequences, we propose an Adaptive Motion Compensation Module (AMC). This module leverages image distortion metrics and inter-frame affine transformation matrices to automatically assess the level of image distortion and activate compensation mechanisms only when significant distortion is detected. When triggered, the tracker corrects positional bias by adjusting key parameters, including the Kalman filter’s state vector, noise estimation, and covariance matrix. This approach minimizes unnecessary computations and optimizes resource allocation, thereby significantly reducing the computational load associated with camera motion compensation. Our method was experimentally validated to strike a balance between performance and processing speed, maintaining tracking accuracy while enhancing processing efficiency. The introduction of AMC notably improves the robustness of UAV target tracking in dynamic environments, ensuring high accuracy and stability even amid rapid movement and complex background changes.

4. Experiments

4.1. Dataset and Metrics

Dataset. To assess the effectiveness of our proposed OMCTrack method in UAV multi-object tracking scenarios, we conducted a series of experiments using the VisDrone2019 and UAVDT datasets.

The VisDrone2019 dataset was collected with various UAVs and covers a range of scenes, weather, and lighting conditions, with the goal of advancing research on target detection and tracking from the UAV perspective. It includes ten target categories: pedestrians, people, bicycles, cars, vans, trucks, tricycles, awning-tricycles, buses, and motorcycles. The VisDrone2019-MOT subset is designed for multi-target tracking and is divided into three parts: a training set with 56 video sequences, a validation set with 7 video sequences, and a test set with 33 video sequences (16 for challenge testing and 17 for development testing). Each target is labeled with a unique tracking identifier, a category label, and a precise bounding box, and attributes such as scene visibility and occlusion are also recorded. For multi-object tracking evaluation, we follow the official guidelines and focus on five primary target categories: pedestrians, cars, vans, buses, and trucks. The dataset features numerous challenging scenarios, including camera motion, motion blur, dense target groups, and occlusion, which makes it a demanding testbed for UAV multi-target tracking.

The UAVDT dataset is a challenging large-scale benchmark designed for vehicle detection and tracking from the UAV perspective. It encompasses three core visual tasks: object detection, single-target tracking, and multi-target tracking. The dataset specifically targets vehicles and is categorized into three subtypes: cars, trucks, and buses. For multi-target tracking, the UAVDT dataset is divided into a training set with 30 video sequences and a test set with 20 video sequences. All videos are recorded at 30 frames per second and have a uniform resolution of 1080 × 540 pixels. The dataset includes a diverse range of everyday environments, such as squares, major roads, toll booths, highways, intersections, and T-junctions.

Metrics. To thoroughly evaluate the performance of OMCTrack and compare it with other state-of-the-art models, this study follows the standard evaluation protocol for Multi-Object Tracking (MOT). We use a range of performance indicators, including IDF1, Multi-Object Tracking Accuracy (MOTA), Higher Order Tracking Accuracy (HOTA), Association Accuracy (AssA), Detection Accuracy (DetA), False Positives (FP), False Negatives (FN, i.e., missed detections), Identity Switches (IDs), and Frames Per Second (FPS), to provide a comprehensive assessment of the algorithm's tracking performance.

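The widely used MOTA metric is defined as

$$\mathrm{MOTA} = 1 - \frac{\sum_t \left(\mathrm{FN}_t + \mathrm{FP}_t + \mathrm{ID}_{sw,t}\right)}{\sum_t \mathrm{GT}_t}$$

where FN is the number of missed detections (false negatives), FP is the number of false positives, ID_sw is the number of identity switches, and GT is the number of ground-truth objects, each counted in frame t and summed over all frames.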
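As a quick illustration of MOTA = 1 − (FN + FP + ID_sw) / GT, the helper below accumulates per-frame error counts and applies the formula once over the whole sequence. The function names `mota` and `mota_from_frames` are hypothetical and are not part of any evaluation toolkit.

```python
def mota(fn, fp, id_switches, num_gt):
    """MOTA = 1 - (FN + FP + ID_sw) / GT, with every count summed over all frames."""
    if num_gt <= 0:
        raise ValueError("num_gt must be positive")
    return 1.0 - (fn + fp + id_switches) / num_gt

def mota_from_frames(per_frame):
    """Accumulate (fn, fp, id_sw, gt) tuples frame by frame, then apply the formula once."""
    fn = fp = idsw = gt = 0
    for f_fn, f_fp, f_idsw, f_gt in per_frame:
        fn, fp, idsw, gt = fn + f_fn, fp + f_fp, idsw + f_idsw, gt + f_gt
    return mota(fn, fp, idsw, gt)
```

For example, 200 misses, 100 false positives, and 20 identity switches over 1000 ground-truth boxes give a MOTA of 0.68. Because errors are not capped, MOTA is unbounded below: 30 false positives against 10 ground-truth boxes yields −2.0.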

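The HOTA metric measures higher-order tracking accuracy. It integrates detection, association, and localization into a single measure evaluated at a localization threshold α:

$$\mathrm{HOTA}_\alpha = \sqrt{\frac{\sum_{c \in \mathrm{TP}} \mathcal{A}(c)}{|\mathrm{TP}| + |\mathrm{FN}| + |\mathrm{FP}|}}, \qquad \mathcal{A}(c) = \frac{|\mathrm{TPA}(c)|}{|\mathrm{TPA}(c)| + |\mathrm{FNA}(c)| + |\mathrm{FPA}(c)|}$$

where TPA(c) is the set of true-positive matches whose predicted ID and ground-truth ID are both the same as those of match c; FNA(c) is the set of ground-truth detections with the ground-truth ID of c that are matched to a different predicted ID or not matched at all; and FPA(c) is the set of predicted detections with the predicted ID of c that are matched to a different ground-truth ID or not matched at all. The final HOTA score averages HOTA_α over α ∈ {0.05, 0.10, …, 0.95}.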
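To make the association term concrete, the sketch below computes HOTA at a single threshold α from already-matched detections: HOTA_α is the square root of the sum of A(c) over true positives, divided by |TP| + |FN| + |FP|. It assumes the one-to-one detection matching at α has already been done, and the function name and input format are hypothetical. For a true positive c with IDs (g, p), |FNA(c)| is the number of detections of ground-truth ID g minus |TPA(c)|, and |FPA(c)| is the number of detections of predicted ID p minus |TPA(c)|.

```python
from collections import Counter
from math import sqrt

def hota_alpha(tp_pairs, gt_counts, pred_counts):
    """HOTA at one localization threshold alpha.

    tp_pairs:    one (gt_id, pred_id) tuple per true-positive match at this alpha
    gt_counts:   total detections per ground-truth ID (matched or not)
    pred_counts: total detections per predicted ID (matched or not)
    """
    num_tp = len(tp_pairs)
    num_fn = sum(gt_counts.values()) - num_tp    # ground-truth detections left unmatched
    num_fp = sum(pred_counts.values()) - num_tp  # predicted detections left unmatched
    denom = num_tp + num_fn + num_fp
    if denom == 0:
        return 0.0
    assoc_sum = 0.0
    for (gt_id, pred_id), tpa in Counter(tp_pairs).items():
        fna = gt_counts[gt_id] - tpa      # same GT ID, not matched to pred_id
        fpa = pred_counts[pred_id] - tpa  # same predicted ID, not matched to gt_id
        # every TP sharing this (gt_id, pred_id) pair has the same A(c)
        assoc_sum += tpa * tpa / (tpa + fna + fpa)
    return sqrt(assoc_sum / denom)
```

If one object is detected in all four frames but its predicted ID changes halfway, detection is perfect while each true positive has A(c) = 0.5, so HOTA_α = √0.5 ≈ 0.707.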

In this paper, we use MOTA, HOTA, and IDF1 as the primary evaluation metrics. Because MOTA is dominated by detection errors (FP and FN), it mainly reflects the performance of the detector, whereas HOTA balances detection and association and therefore evaluates the overall effectiveness of the tracker. IDF1, the ratio of correctly identified detections to the average number of ground-truth and predicted detections, measures the model's ability to maintain consistent identities over extended periods.
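For reference, the description of IDF1 above corresponds to the standard identity-based F1 score. Here IDTP, IDFP, and IDFN are identity-level true positives, false positives, and false negatives obtained after a global one-to-one matching between ground-truth and predicted trajectories. The two forms below are equivalent because every ground-truth detection is either an IDTP or an IDFN, and every predicted detection is either an IDTP or an IDFP:

$$\mathrm{IDF1} = \frac{2\,\mathrm{IDTP}}{2\,\mathrm{IDTP} + \mathrm{IDFP} + \mathrm{IDFN}} = \frac{\mathrm{IDTP}}{\tfrac{1}{2}\left(|\mathrm{GT}| + |\mathrm{Pred}|\right)}, \qquad |\mathrm{GT}| = \mathrm{IDTP} + \mathrm{IDFN}, \quad |\mathrm{Pred}| = \mathrm{IDTP} + \mathrm{IDFP}$$

For example, with IDTP = 800, IDFN = 400 (|GT| = 1200), and IDFP = 200 (|Pred| = 1000), IDF1 = 1600 / 2200 = 800 / 1100 ≈ 0.727.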

In addition, we evaluated the processing speed of the tracker in terms of frames per second (FPS), which measures the system’s overall frame rate. The FPS is influenced by the performance of the hardware on which the tracker runs, making it challenging to ensure an entirely fair comparison of frame rates across different methods.
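Because FPS depends on hardware and on what is timed, a reproducible measurement should fix both. The harness below is a minimal sketch with a hypothetical name (`measure_fps`). It excludes a few warm-up frames from timing so that one-off initialization costs do not distort the result. If `process_frame` runs GPU work asynchronously (for example, in PyTorch), the device should be synchronized before each clock read. The text does not state whether detection time is included in the reported FPS, so a harness like this should document that choice.

```python
import time

def measure_fps(process_frame, frames, warmup=5):
    """Return frames per second for process_frame over frames.

    The first `warmup` frames are processed but not timed, so one-off costs
    (lazy initialization, caching, kernel selection) are excluded.
    """
    frames = list(frames)
    for frame in frames[:warmup]:
        process_frame(frame)
    timed = frames[warmup:]
    if not timed:
        raise ValueError("need more frames than the warm-up count")
    start = time.perf_counter()
    for frame in timed:
        process_frame(frame)
    elapsed = time.perf_counter() - start
    return len(timed) / elapsed if elapsed > 0 else float("inf")
```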

4.2. Implementation Details

Training. OMCTrack follows the tracking-by-detection paradigm. It uses YOLOv8 [43] as the detector, with the YOLOv8-s variant as the backbone, initialized from a COCO-pretrained model [44]. The network was trained for 100 epochs with Mosaic and Mixup data augmentation [45]. Training was conducted on a single GeForce RTX 3070 GPU using PyTorch 2.0.1 and Torchvision 0.15.2, with a stochastic gradient descent (SGD) optimizer, a batch size of 16, an initial learning rate of 0.002, and a weight decay of 0.005. For training, images were resized to 640 × 480 pixels before being input into the detection network.

Inference. During the detection phase, we set the low and high detection-score thresholds to 0.3 and 0.7, respectively. In the object association phase, the occlusion confidence threshold was set to 0.3, and both the global and local occlusion factors in the OSM module were set to 0.2. The AMC module threshold was set to 0.1. Lost tracks were retained for up to 30 frames after they vanished, during which they could be recovered.
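The score thresholds and the 30-frame recovery window can be illustrated with a ByteTrack-style two-stage association, which is the scheme used by the baseline tracker. The sketch below uses a low-score threshold of 0.3 and a high-score threshold of 0.7. It is not OMCTrack's OAH module: it omits occlusion handling and Kalman prediction, it keeps each track's last matched box, and it uses greedy IoU matching instead of the Hungarian algorithm. The IoU gate `min_iou=0.3` and all names are hypothetical.

```python
from dataclasses import dataclass

LOW_THRESH = 0.3   # boxes scoring below this are discarded
HIGH_THRESH = 0.7  # boxes at or above this enter the first association stage
MAX_LOST = 30      # frames a lost track is kept before deletion

@dataclass
class Track:
    track_id: int
    box: tuple          # (x1, y1, x2, y2) of the last matched detection
    lost_frames: int = 0

def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def greedy_match(tracks, boxes, min_iou=0.3):
    """Pair tracks with boxes in order of decreasing IoU (stand-in for Hungarian matching)."""
    candidates = sorted(
        ((iou(t.box, b), ti, bi) for ti, t in enumerate(tracks) for bi, b in enumerate(boxes)),
        reverse=True,
    )
    used_t, used_b, matches = set(), set(), []
    for score, ti, bi in candidates:
        if score < min_iou:
            break
        if ti not in used_t and bi not in used_b:
            used_t.add(ti)
            used_b.add(bi)
            matches.append((ti, bi))
    unmatched_t = [i for i in range(len(tracks)) if i not in used_t]
    unmatched_b = [i for i in range(len(boxes)) if i not in used_b]
    return matches, unmatched_t, unmatched_b

def associate(tracks, detections, next_id):
    """One frame of two-stage association; detections are (box, score) pairs."""
    tracks = list(tracks)
    high = [box for box, s in detections if s >= HIGH_THRESH]
    low = [box for box, s in detections if LOW_THRESH <= s < HIGH_THRESH]
    # Stage 1: every existing track against high-score boxes.
    matches, rest_idx, unmatched_high = greedy_match(tracks, high)
    for ti, bi in matches:
        tracks[ti].box, tracks[ti].lost_frames = high[bi], 0
    # Stage 2: tracks left over from stage 1 against low-score boxes.
    rest = [tracks[i] for i in rest_idx]
    matches, still_lost, _ = greedy_match(rest, low)
    for ti, bi in matches:
        rest[ti].box, rest[ti].lost_frames = low[bi], 0
    for i in still_lost:
        rest[i].lost_frames += 1
    # Unmatched high-score boxes start new tracks; unmatched low-score boxes are dropped.
    for bi in unmatched_high:
        tracks.append(Track(next_id, high[bi]))
        next_id += 1
    # Keep a lost track for up to MAX_LOST frames so it can still be recovered.
    return [t for t in tracks if t.lost_frames <= MAX_LOST], next_id
```

The second stage is what lets a partially occluded object, whose detection score drops into the 0.3 to 0.7 band, keep its identity instead of being discarded.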

4.3. Comparison with State-of-the-Art Methods

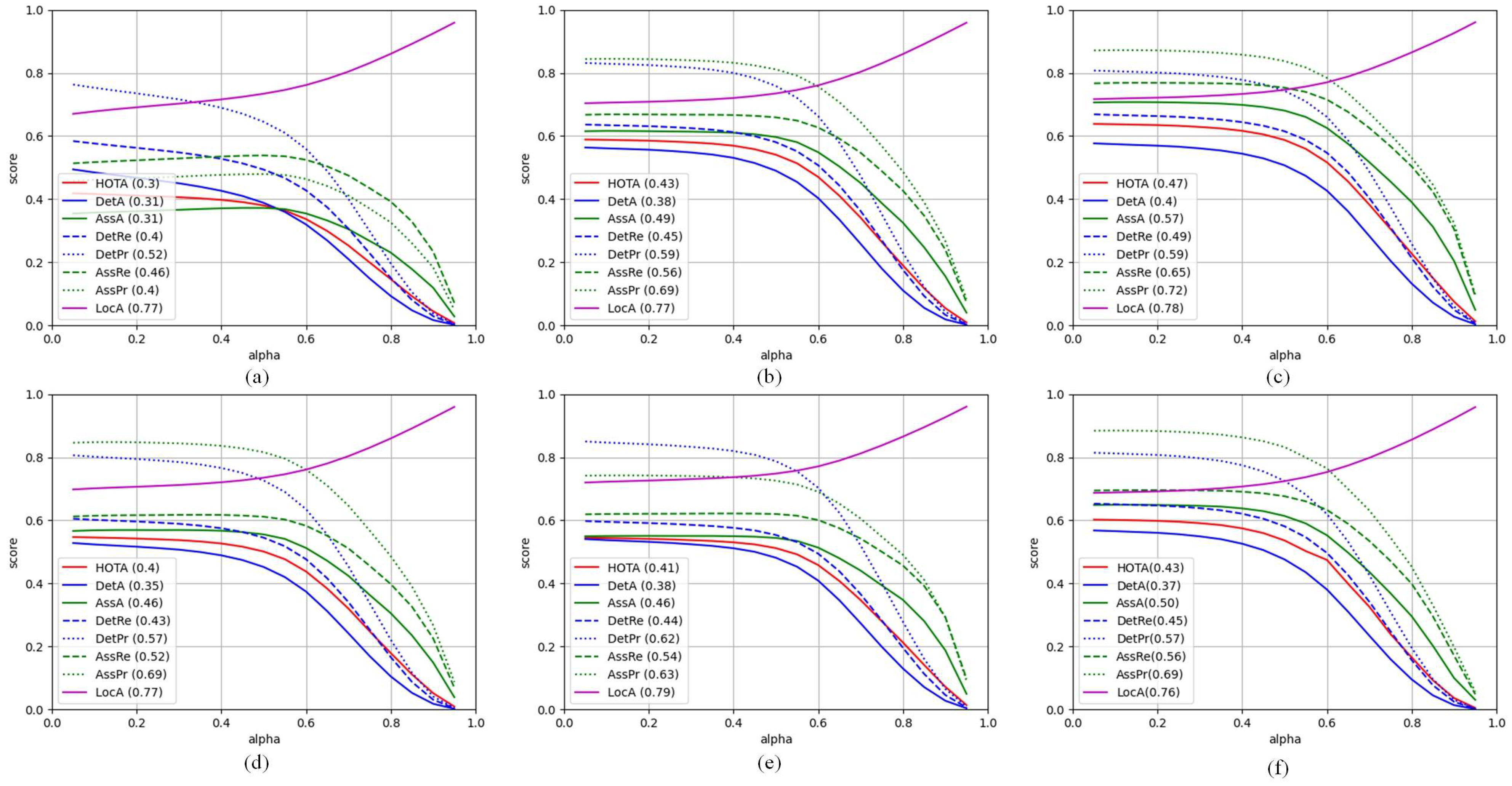

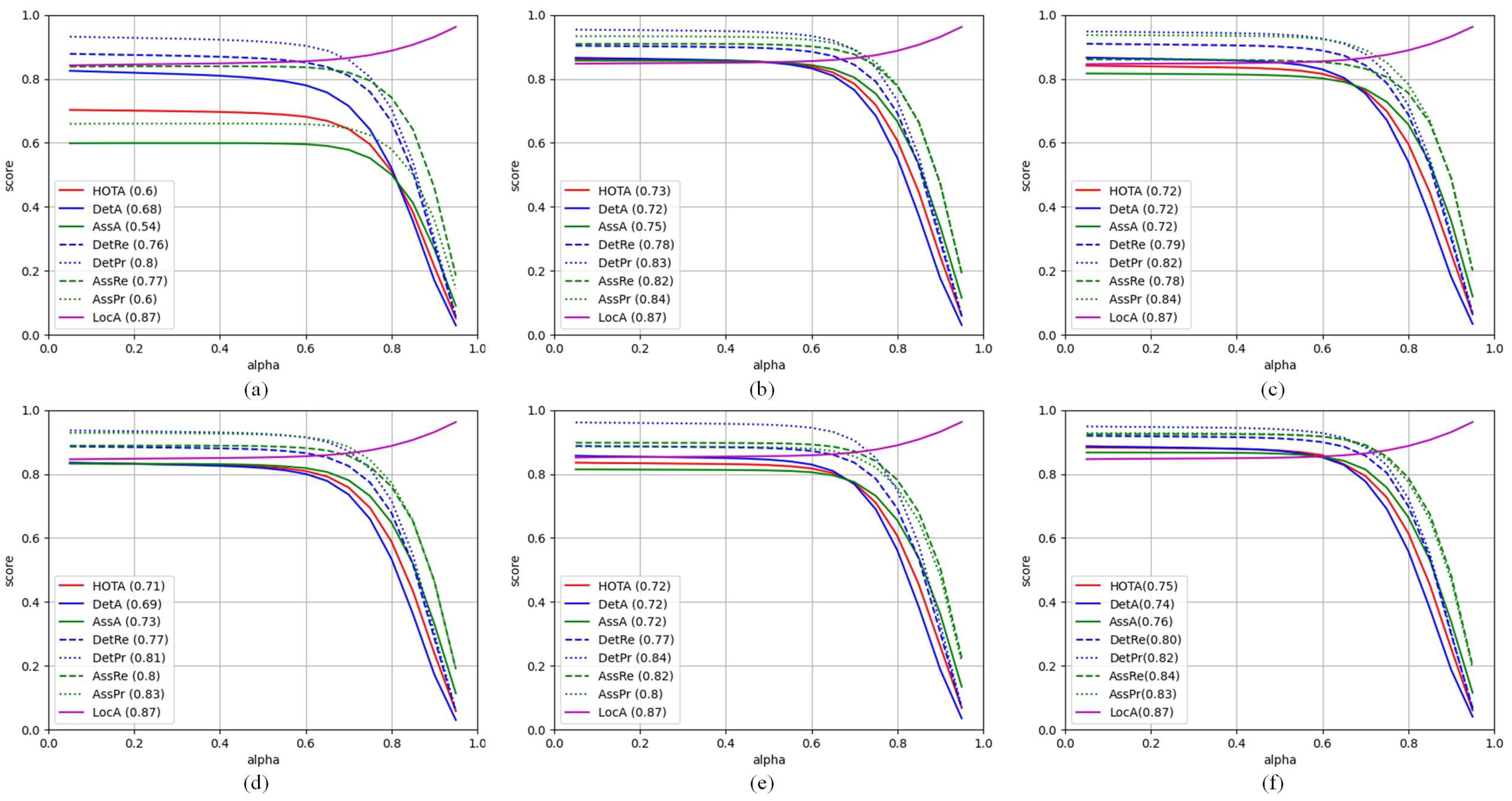

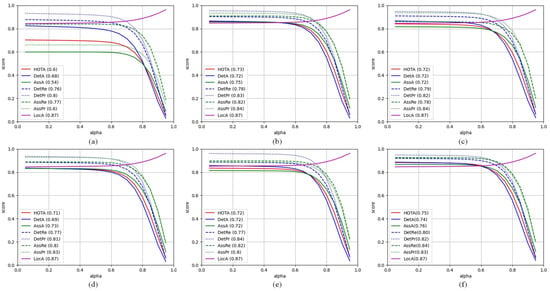

VisDrone2019 Dataset. To verify the effectiveness of OMCTrack in UAV object tracking, we compared it with other advanced multi-object trackers on the VisDrone2019 dataset. We used the VisDrone2019 training set and test set to train the detection network, all multi-object tracking algorithms used the same detector weights, and we used TrackEval's official VisDroneMOT toolkit to evaluate each tracker on the VisDrone2019 test-dev set. As shown in Table 1, OMCTrack achieves 50.6% IDF1, 34.5% MOTA, and 43.3% HOTA on VisDrone2019, outperforming most advanced multi-object trackers and ranking second only to BoTSORT. However, OMCTrack runs at 35.8 FPS, about 2.5 times BoTSORT's 14.3 FPS, showing that the proposed method offers much better real-time performance while maintaining competitive tracking accuracy. Figure 6 compares the metrics of the various methods under different thresholds.

Table 1.

Quantitative comparison of OMCTrack with other multi-object trackers on the VisDrone2019 test development set and the UAVDT test set. The best results are shown in bold, the second best results are shown in red, and the third best results are shown in blue.

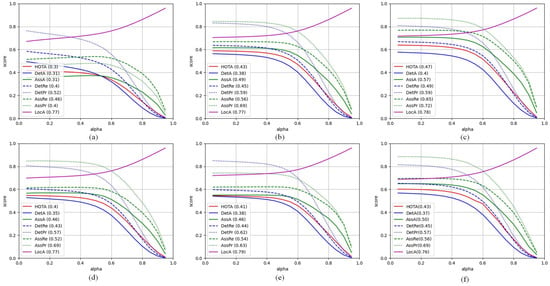

Figure 6.

Comparison of indicators of various methods at different thresholds (VisDrone2019 Dataset). (a) DeepMOT. (b) ByteTrack. (c) BoTSORT. (d) UAVMOT. (e) C_BIoUTracker. (f) Ours.

UAVDT Dataset. In addition, we compared OMCTrack with other MOT methods on the UAVDT dataset. We used the training and test sets of the UAVDT dataset to train the detectors, and all the multi-object tracking algorithms used the same detector weights. We evaluated the performance of each object tracker on the UAVDT test set using the same evaluation metrics as those used on the VisDrone2019 dataset. As shown in Table 1, OMCTrack achieves 88.5% on IDF1, 85.5% on MOTA, and 74.7% on HOTA, and our proposed OMCTrack algorithm outperforms other advanced multi-object trackers on the UAVDT dataset. Figure 7 shows a comparison of the metrics of various methods under different thresholds.

Figure 7.

Comparison of indicators of various methods at different thresholds (UAVDT Dataset). (a) DeepMOT. (b) ByteTrack. (c) BoTSORT. (d) UAVMOT. (e) C_BIoUTracker. (f) Ours.

The results indicate that every tracker performs considerably better on UAVDT than on VisDrone2019. This discrepancy arises primarily from differences in detection performance between the two datasets. As shown in Table 2, on VisDrone2019 our detection weights reach an mAP50 of 0.799 for "cars" but only 0.34 for "people" and 0.149 for "bicycles". UAVDT consists predominantly of vehicle-tracking scenarios, whereas VisDrone2019 contains a large number of pedestrian-tracking scenarios, in which the weaker detection results degrade tracking performance. The quality of the object detector is therefore crucial for tracking performance in the tracking-by-detection paradigm.

Table 2.

Comparison of the detection performances of our detection weights for various types of objects in the VisDrone2019 dataset. The best results are shown in bold.

4.4. Ablation Studies

4.4.1. Module Effectiveness Analysis

In this section, we report a series of ablation experiments on the VisDrone2019 and UAVDT datasets to evaluate the effectiveness of each module in the OMCTrack system. For these experiments, we used ByteTrack as the baseline model and YOLOv8 as the detection network.

As shown in Table 3, occlusion sensing and hierarchical association together form the OAH module, which, along with AMC, is one of the two core modules of the OMCTrack network. We report four key metrics for each module on the validation sets of the two datasets. Taking the VisDrone2019 results as an example, the baseline model achieves 49.1% IDF1, 33.7% MOTA, 42.7% HOTA, and 48.5 FPS. Adding the OAH module to the baseline raises IDF1 to 49.7%, MOTA to 33.8%, and HOTA to 42.9%, at 47.4 FPS. With both modules added, the full OMCTrack model achieves 50.6% IDF1, 34.5% MOTA, and 43.3% HOTA while maintaining a good speed of 35.8 FPS.
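The incremental contribution of each module can be read off as the difference between consecutive ablation rows. The short script below uses only the VisDrone2019 numbers quoted above; the variable and function names are illustrative.

```python
# (IDF1 %, MOTA %, HOTA %, FPS) on VisDrone2019, as reported in the text above
ABLATION = [
    ("Baseline (ByteTrack)", (49.1, 33.7, 42.7, 48.5)),
    ("+ OAH", (49.7, 33.8, 42.9, 47.4)),
    ("+ OAH + AMC (OMCTrack)", (50.6, 34.5, 43.3, 35.8)),
]

def step_gains(rows):
    """Change in each metric relative to the previous row, rounded to 0.1."""
    gains = []
    for (_, prev), (name, cur) in zip(rows, rows[1:]):
        gains.append((name, tuple(round(c - p, 1) for c, p in zip(cur, prev))))
    return gains

for name, delta in step_gains(ABLATION):
    print(name, delta)
```

OAH adds 0.6 IDF1, 0.1 MOTA, and 0.2 HOTA for a 1.1 FPS cost, while AMC adds a further 0.9 IDF1, 0.7 MOTA, and 0.4 HOTA for an 11.6 FPS cost. The full model therefore runs at about 74% of the baseline speed (35.8 / 48.5).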

Table 3.

Ablation study for each core module. The best results are shown in bold.

4.4.2. Comparison of Occlusion Treatment Methods

We conducted comparative experiments on the proposed OAH method using the VisDrone2019 and UAVDT datasets, as shown in Table 4. The ReID method used in our experiments is the same as that employed in BoTSORT. CBIoU handles occluded objects in a way similar to our OAH method, but it does not exploit the object's own temporal and spatial cues, which is why our method performs better. The ReID method yields a larger improvement on VisDrone2019, where UAVs fly at lower altitudes and objects retain more distinctive appearance features. In contrast, UAVDT is captured from higher altitudes, where the appearance features of different objects are harder to distinguish. Considering both tracking performance and speed, our method achieves superior object tracking effectiveness and is better suited to UAV multi-object tracking tasks.

Table 4.

Performance comparison of OAH with other occlusion treatment methods. The best results are shown in bold.

4.4.3. Comparison of Camera Motion Compensation Methods

Additionally, we conducted ablation experiments on several commonly used camera motion compensation (CMC) methods to compare their effectiveness in reducing distortion in real inter-frame images. As shown in Table 5, while all CMC methods reduce tracking speed compared to the baseline tracker, they also enhance key MOT evaluation metrics. Among the methods tested, our proposed approach delivers the best balance of tracking performance and speed, validating its effectiveness.
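Whatever their feature extraction, CMC methods of this kind ultimately estimate an inter-frame transform from point correspondences between consecutive frames. The NumPy sketch below shows only the final fitting step: a least-squares 2 × 3 affine matrix from matched points. The function name is hypothetical. In a real pipeline, the matches would come from, for example, ORB features or sparse optical flow, and a robust estimator such as RANSAC (as in OpenCV's `cv2.estimateAffine2D`) would be needed to reject points on independently moving objects. This sketch does not do that.

```python
import numpy as np

def estimate_affine(src, dst):
    """Least-squares 2x3 affine matrix A such that dst ≈ A @ [x, y, 1]^T.

    src, dst: (N, 2) arrays of matched keypoint coordinates in the previous
    and current frame (N >= 3, not all collinear). No outlier rejection.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    if src.shape != dst.shape or src.shape[0] < 3:
        raise ValueError("need at least 3 matched point pairs of equal shape")
    design = np.hstack([src, np.ones((src.shape[0], 1))])  # (N, 3) homogeneous points
    # Solve design @ X = dst for X (3x2); the affine matrix is X transposed.
    solution, *_ = np.linalg.lstsq(design, dst, rcond=None)
    return solution.T
```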

Table 5.

Performance comparison of AMC with other camera motion compensation methods (VisDrone2019 test-dev). The best results are shown in bold.

4.4.4. Related Parameter Settings

Determining hyperparameters in OAH. Occlusion essentially introduces error into the system, and occlusion variables are introduced to reduce the interference caused by this error and keep the object's features stable. Different occlusion thresholds can lead to markedly different occlusion-variable values, which makes the occlusion threshold a critical factor in determining these variables; it should be chosen so that the similarity of the object's features remains stable. Although an object's physical size remains constant, its size on the image plane changes as it moves: for instance, when the object moves closer to the image sensor, it occupies more pixels and carries more information. To account for this in the occlusion confidence, we normalized the area of the object bounding box, thereby eliminating the influence of camera position. We analyzed the effect of different occlusion threshold parameters on the MOT task in detail, as shown in Table 6. In addition, we introduced an occlusion factor to evaluate the extent to which the target is occluded. Note that the empirical values we used are estimates and may vary across datasets.

Table 6.

Performance comparison of different occlusion confidence thresholds (VisDrone2019 test-dev). The best results are shown in bold.

The occlusion confidence is used to determine whether an object should be marked as occluded. If the confidence value exceeds the occlusion confidence threshold, the object is marked as occluded; otherwise, it is considered visible. A lower threshold therefore makes it more likely that an object is marked as occluded. With the threshold set to 0.3, the proposed method achieves the highest IDF1 (50.6) and HOTA (43.3) scores. As the threshold decreases, the number of missed detections (FN) decreases, but the number of false positives (FP) increases.
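The thresholding rule, combined with the area normalization discussed above, can be sketched as follows. This is not the paper's occlusion-confidence formula. As a hypothetical proxy, the confidence here is the relative shrinkage of the current box area with respect to the mean area of the track's recent visible boxes. Because it is a ratio, the score depends on relative change rather than on absolute pixel size, which varies with camera distance. The class name, the history length of 10, and the proxy itself are assumptions; only the threshold of 0.3 comes from Table 6.

```python
from collections import deque

OCC_CONF_THRESH = 0.3  # occlusion confidence threshold reported as best in Table 6

class OcclusionMonitor:
    """Hypothetical per-track occlusion-confidence proxy based on normalized box area."""

    def __init__(self, history=10):
        self.areas = deque(maxlen=history)  # areas of recent boxes judged visible

    def update(self, width, height):
        """Return (confidence, occluded) for the current box of this track."""
        area = width * height
        if not self.areas:
            self.areas.append(area)
            return 0.0, False
        ratio = area / (sum(self.areas) / len(self.areas))  # area relative to recent size
        confidence = min(max(1.0 - ratio, 0.0), 1.0)        # 0 = no shrinkage, 1 = vanished
        occluded = confidence > OCC_CONF_THRESH
        if not occluded:
            self.areas.append(area)  # only visible boxes update the reference size
        return confidence, occluded
```

Under this proxy, a box that suddenly loses half of its usual area gets a confidence of 0.5 and is flagged as occluded at the 0.3 threshold. Lowering the threshold would flag smaller shrinkages as well, which matches the trend in Table 6 of fewer missed detections and more false positives.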

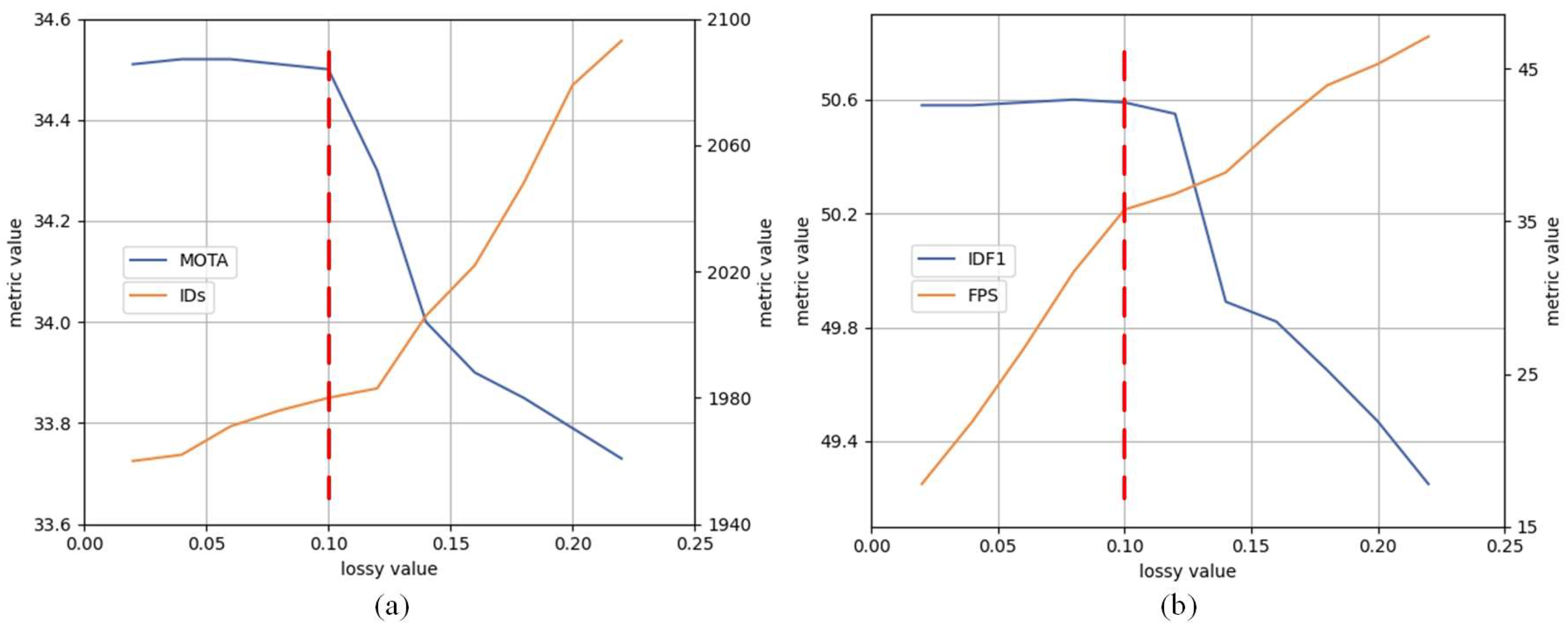

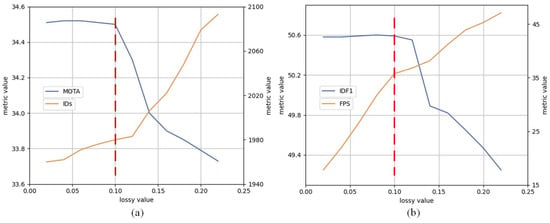

Determining distortion value in AMC. For the AMC module, setting the image distortion threshold appropriately is crucial for balancing tracking performance against processing speed. In this study, we systematically analyzed the impact of different distortion thresholds on the MOT task. As shown in Figure 8, we evaluated how varying the image distortion threshold from 0.02 to 0.22 affects key MOT evaluation metrics, including MOTA, IDF1, IDs, and FPS. The results indicate that tracking performance remains high, with minimal fluctuation, for thresholds between 0.02 and 0.12. A further analysis of time efficiency revealed that setting the distortion threshold above 0.1 improves processing speed but causes a linear decline in the other evaluation metrics. Considering all indicators, a threshold of 0.1 achieves the best trade-off between tracking performance and processing speed. Consequently, we set the distortion threshold in the AMC module to 0.1, so that camera motion compensation is activated only when significant image distortion is detected. This approach improves UAV tracking accuracy during irregular motion while avoiding unnecessary computation in regular tracking scenarios, thus improving the overall efficiency and practicality of the algorithm.

Figure 8.

Determination of image distortion threshold (VisDrone2019 test-dev). (a) The metric values of MOTA and IDs at different thresholds. (b) The metric values of IDF1 and FPS at different thresholds.

4.5. Case Study

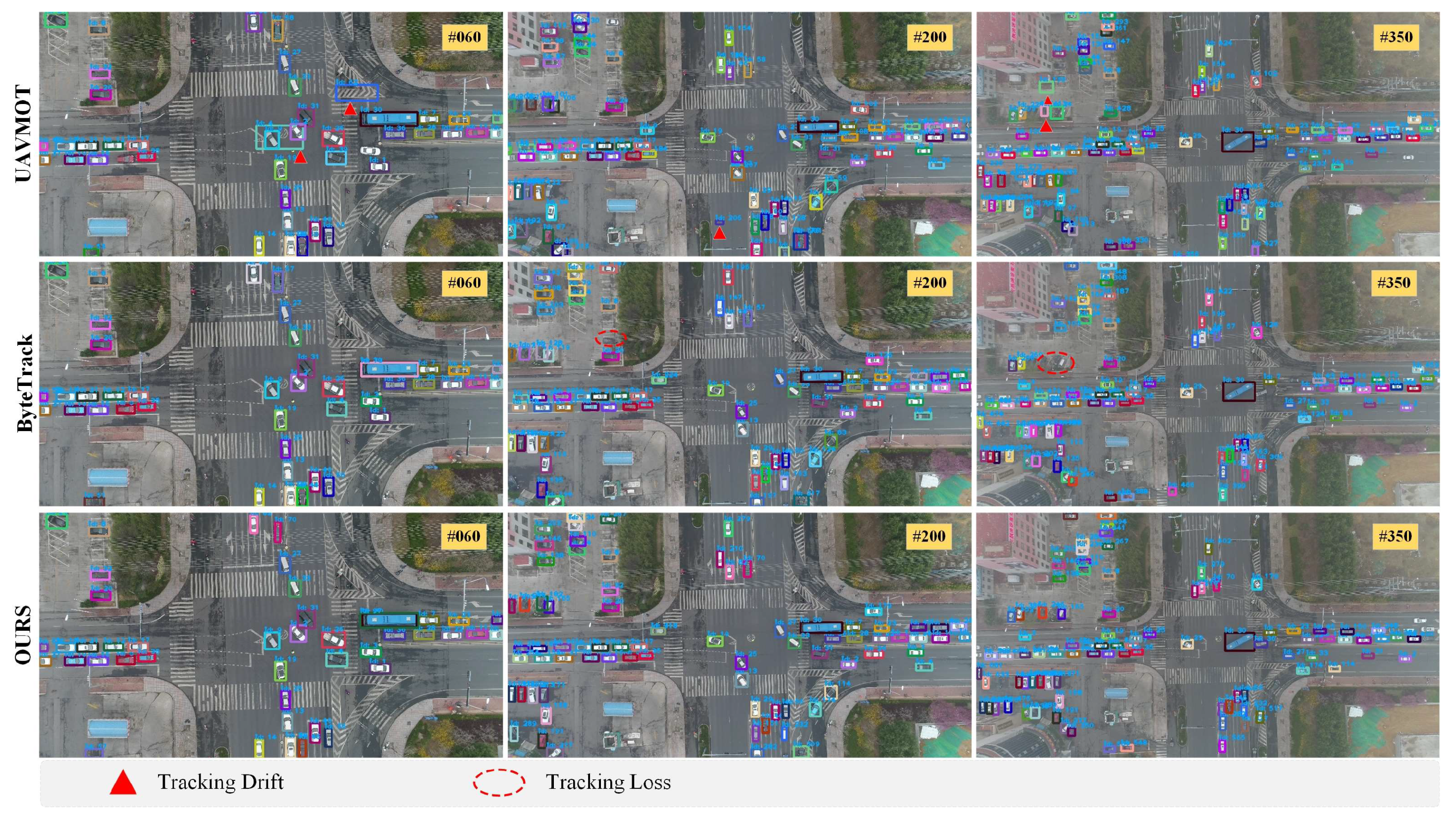

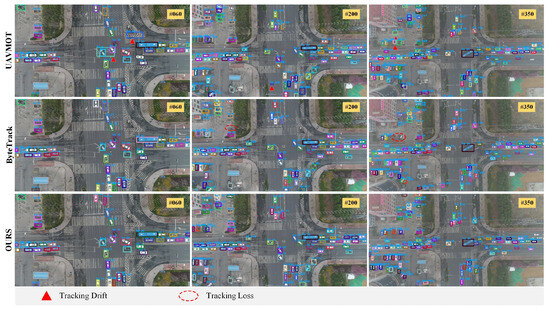

To better showcase the advantages and effectiveness of the OMCTrack method in real UAV object tracking tasks, we analyze specific motion scenarios involving UAVs or objects across three UAV tracking tasks.

Camera Moves Fast. When the drone or its camera shakes or moves rapidly, the position of the object in the image changes dramatically, which easily causes tracking drift or loss. As shown in Figure 9, the camera pitch angle changes across video frames 81, 83, 90, 93, 98, and 103, producing rapid camera motion. Under this motion, both UAVMOT and ByteTrack suffer from ID switches, lost tracks, and bounding-box drift, and fail to track the objects accurately. In contrast, OMCTrack handles the camera motion effectively, compensating for distorted images in time and achieving accurate and robust multi-object tracking.

Figure 9.

Analysis of a special case: Camera moves fast.

Object Motion Occlusion. In multi-object tracking, object occlusion is a common and unavoidable challenge that significantly impacts tracking performance. Frequent occlusion, whether between objects or between objects and the background, can cause the loss of key object features, leading to object drift, where the object’s position and motion trajectory deviate, resulting in tracking loss or misidentification as a new object. As illustrated in Figure 10, after the truck in the scene is occluded, both UAVMOT and ByteTrack algorithms experience issues like tracking loss and ID switching. However, OMCTrack effectively handles object drift caused by occlusion, thanks to its occlusion perception module, enabling stable and long-term multi-object tracking.

Figure 10.

Analysis of a special case: Object motion occlusion.

UAV Pulls Up and Hovers. When the drone suddenly ascends or hovers, its shooting angle shifts, causing objects in the video to appear smaller as the drone rises, or to rotate as the drone circles. This disrupts the original motion pattern of the objects, making it difficult to approximate their movement as linear. As shown in Figure 11, algorithms like UAVMOT and ByteTrack struggle to accurately track small-scale objects due to the irregular motion of the drone. However, OMCTrack can maintain accurate tracking despite these challenges. It is important to note that we used the same object detector and detection weights across all the object tracking algorithms.

Figure 11.

Analysis of a special case: UAV pulls up and hovers.

4.6. Visualization

To demonstrate the effectiveness of the proposed method, a visual performance evaluation was conducted on the VisDrone2019 and UAVDT test sets. As shown in Figure 12 and Figure 13, the OMCTrack algorithm exhibits strong adaptability to the dynamic environments encountered by UAVs, accurately identifying and tracking small objects within the scene while maintaining stable multi-object tracking performance during UAV movement. These results clearly validate the OMCTrack algorithm’s efficiency in handling object scale changes and multi-object tracking in UAV video streams.

Figure 12.

Visualization of tracking results on the VisDrone2019 dataset.

Figure 13.

Visualization of tracking results on the UAVDT dataset.

4.7. Limitations

When motion blur occurs or the object tracking task is performed during camera zoom, our method faces challenges in accurately recovering the object’s ID after it is re-detected. As illustrated in Figure 14, motion blur at low resolution makes it difficult for the object detector to achieve precise detection, complicating the recovery of object IDs even after motion compensation is applied. The limited performance of object detection at low resolutions, combined with the nonlinear motion between the camera and the object, makes it difficult to rely solely on location information to determine identity. Future work should focus on developing more effective object association strategies to address the challenges posed by motion blur or camera zoom, particularly in low-resolution scenarios.

Figure 14.

Visualizing the limitations of our approach.

5. Conclusions

In this study, we developed a real-time multi-object tracking algorithm for UAVs, named OMCTrack, which integrates occlusion perception and motion compensation mechanisms. The algorithm features a specially designed occlusion sensing unit to effectively handle short-term occlusions without adding computational overhead. For the high-density scenarios commonly encountered from UAV perspectives, we propose an efficient hierarchical association strategy to minimize mismatches. Additionally, to address challenges such as perspective changes and motion blur caused by rapid UAV movement, we designed an adaptive motion compensation module. This module dynamically adjusts for deviations between predicted and actual target positions, ensuring both efficiency and real-time performance during tracking. To validate the effectiveness of the algorithm, we conducted a series of experiments on the VisDrone2019 and UAVDT datasets, comparing the results with existing technologies. The experimental data show that our method significantly improves UAV multi-object tracking performance, particularly in handling MOT challenges in UAV video streams, demonstrating excellent tracking accuracy and real-time processing capabilities.

Author Contributions

Z.D. was the main author of the work in this paper; Z.D. conceived the methodology of this paper, designed the experiments, collected and analyzed the data, and wrote the paper; X.S. and B.S. were responsible for directing the writing of the paper and for the design of the experiments; R.G. was responsible for managing the project and providing financial support; C.L. was responsible for assisting in the completion of the experiments. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China under grant 52101377.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Chen, L.; Ai, H.; Zhuang, Z.; Shang, C. Real-Time Multiple People Tracking with Deeply Learned Candidate Selection and Person Re-Identification. In Proceedings of the 2018 IEEE International Conference on Multimedia and Expo (ICME), San Diego, CA, USA, 23–27 July 2018; pp. 1–6.

- Du, Y.; Zhao, Z.; Song, Y.; Zhao, Y.; Su, F.; Gong, T.; Meng, H. StrongSORT: Make DeepSORT great again. arXiv 2022, arXiv:2202.13514.

- Cao, J.; Weng, X.; Khirodkar, R.; Pang, J.; Kitani, K. Observation-Centric SORT: Rethinking SORT for Robust Multi-Object Tracking. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 18–22 June 2023; pp. 9686–9696.

- He, J.W.; Huang, Z.H.; Wang, N.Y. Learnable Graph Matching: Incorporating Graph Partitioning with Deep Feature Learning for Multiple Object Tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 19–25 June 2021; pp. 5299–5309.

- Wang, Y.X.; Kitani, K.; Weng, X. Joint Object Detection and Multi-Object Tracking with Graph Neural Networks. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi'an, China, 30 May–5 June 2021; pp. 13708–13715.

- Cai, J.R.; Xu, M.Z.; Li, W. MeMOT: Multi-Object Tracking with Memory. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 8080–8090.

- Wang, J.; Han, L.; Dong, X. Distributed sliding mode control for time-varying formation tracking of multi-UAV system with a dynamic leader. Aerosp. Sci. Technol. 2021, 111, 106549.

- Sheng, H.; Zhang, Y.; Chen, J.; Xiong, Z.; Zhang, J. Heterogeneous Association Graph Fusion for Target Association in Multiple Object Tracking. IEEE Trans. Circuits Syst. Video Technol. 2018, 29, 3269–3280.

- Zhang, Y.; Liu, M.; Liu, X.; Wu, T. A group target tracking algorithm based on topology. J. Phys. Conf. Ser. 2020, 1544, 012025.

- Zheng, Y.J.; Du, Y.C.; Ling, H.F.; Sheng, W.G.; Chen, S.Y. Evolutionary Collaborative Human-UAV Search for Escaped Criminals. IEEE Trans. Evol. Comput. 2019, 24, 217–231.

- Lee, M.H.; Yeom, S. Multiple target detection and tracking on urban roads with a drone. J. Intell. Fuzzy Syst. 2018, 35, 6071–6078.

- An, N.; Qi Yan, W. Multitarget Tracking Using Siamese Neural Networks. ACM Trans. Multimed. Comput. Commun. Appl. 2021, 17, 75.

- Yoon, K.; Kim, D.; Yoon, Y.C. Data Association for Multi-Object Tracking via Deep Neural Networks. Sensors 2019, 19, 559.

- Pang, H.; Xuan, Q.; Xie, M.; Liu, C.; Li, Z. Research on Target Tracking Algorithm Based on Siamese Neural Network. Mob. Inf. Syst. 2021, 2021, 6645629.

- Jiang, P.; Ergu, D.; Liu, F.; Cai, Y.; Ma, B. A Review of Yolo Algorithm Developments. Procedia Comput. Sci. 2022, 199, 1066–1073.

- Bewley, A.; Ge, Z.; Ott, L. Simple Online and Real-Time Tracking. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 3464–3468.

- Wojke, N.; Bewley, A.; Paulus, D. Simple Online and Real-Time Tracking with a Deep Association Metric. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; pp. 3645–3649.

- Fu, H.; Wu, L.; Jian, M.; Yang, Y.; Wang, X. MF-SORT: Simple Online and Real-Time Tracking with Motion Features. In Proceedings of the 2019 International Conference on Image and Graphics (ICIG), Beijing, China, 23–25 August 2019; pp. 157–168.

- Aharon, N.; Orfaig, R.; Bobrovsky, B. BoT-SORT: Robust Associations Multi-Pedestrian Tracking. arXiv 2022, arXiv:2206.14651.

- Zhang, Y.; Sun, P.; Jiang, Y.; Yu, D.; Weng, F.; Yuan, Z.; Luo, P.; Liu, W.; Wang, X. ByteTrack: Multi Object Tracking by Associating Every Detection Box. In Proceedings of the 2022 European Conference on Computer Vision (ECCV), Tel Aviv, Israel, 23–27 October 2022; pp. 1–21.

- Fang, L.; Yu, F.Q. Multi-object tracking based on adaptive online discriminative appearance learning and hierarchical association. J. Image Graph. 2020, 25, 708–720.

- Shu, G.; Dehghan, A.; Oreifej, O. Part-Based Multiple-Person Tracking with Partial Occlusion Handling. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 16–21 June 2012; pp. 1815–1821.

- Chu, Q.; Ouyang, W.L.; Li, H.S. Online Multi-Object Tracking Using CNN-Based Single Object Tracker with Spatial-Temporal Attention Mechanism. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 4846–4855.

- Tokmakov, P.; Li, J.; Burgard, W. Learning to Track with Object Permanence. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021; pp. 10860–10869.

- Guo, S.; Wang, J.; Wang, X. Online Multiple Object Tracking with Cross-Task Synergy. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 19–25 June 2021; pp. 8132–8141.

- Han, R.Z.; Feng, W.; Zhang, Y.J. Multiple human association and tracking from egocentric and complementary top views. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 5225–5242.

- Yeom, S.; Nam, D.H. Moving Vehicle Tracking with a Moving Drone Based on Track Association. Appl. Sci. 2021, 11, 4046.

- Brown, R.G.; Hwang, P.Y.C. Introduction to Random Signals and Applied Kalman Filtering: With MATLAB Exercises and Solutions, 3rd ed.; Wiley: New York, NY, USA, 1997.

- Bergmann, P.; Meinhardt, T.; Leal-Taixe, L. Tracking without bells and whistles. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 941–951.

- Khurana, T.; Dave, A.; Ramanan, D. Detecting invisible people. arXiv 2020, arXiv:2012.08419.

- Han, S.; Huang, P.; Wang, H.; Yu, E.; Liu, D. MAT: Motion-aware multi-object tracking. Neurocomputing 2022, 476, 75–86.

- Stadler, D.; Beyerer, J. Modelling ambiguous assignments for multi-person tracking in crowds. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 3–8 January 2022; pp. 133–142.

- Du, Y.; Wan, J.; Zhao, Y.; Zhang, B.; Tong, Z.; Dong, I. GIAOTracker: A comprehensive framework for MCMOT with global information and optimizing strategies in VisDrone 2021. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021; pp. 2809–2819.

- Evangelidis, G.D.; Psarakis, E.Z. Parametric image alignment using enhanced correlation coefficient maximization. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 1858–1865.

- Rublee, E.; Rabaud, V.; Konolige, K. ORB: An Efficient Alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision (ICCV), Barcelona, Spain, 6–13 November 2011; pp. 2564–2571.

- Shah, A.P.; Lamare, J.B.; Tuan, N.A. CADP: A Novel Dataset for CCTV Traffic Camera based Accident Analysis. In Proceedings of the 2018 15th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Auckland, New Zealand, 27–30 November 2018.

- Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for Autonomous Driving? The KITTI Vision Benchmark Suite. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 16–21 June 2012.

- Stepanyants, V.; Andzhusheva, M.; Romanov, A. A Pipeline for Traffic Accident Dataset Development. In Proceedings of the 2023 International Russian Smart Industry Conference, Sochi, Russia, 27–31 March 2023.

- Yu, F.; Chen, H.F.; Wang, X. BDD100K: A Diverse Driving Dataset for Heterogeneous Multitask Learning. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020.

- Yu, F.; Yan, H.; Chen, R.; Zhang, G.; Liu, Y.; Chen, M.; Li, Y. City-scale Vehicle Trajectory Data from Traffic Camera Videos. Sci. Data 2023, 10, 711.

- Wu, P.; Su, S.; Zuo, Z.; Sun, B. RISTrack: Robust Infrared Ship Tracking with Modified Appearance Feature Extraction and Matching Strategy. In Proceedings of the IEEE Transactions on Geoscience and Remote Sensing, Changsha, China, 16–21 July 2023; Volume 61.

- Lindenberger, P.; Sarlin, P.; Pollefeys, M. LightGlue: Local Feature Matching at Light Speed. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–3 October 2023.

- Safaldin, M.; Zaghden, N.; Mejdoub, M. An Improved YOLOv8 to Detect Moving Objects. IEEE Access 2024, 12, 59782–59806.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollar, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the European Conference on Computer Vision (ECCV), Zurich, Switzerland, 6–12 September 2014; pp. 740–755.

- Bochkovskiy, A.; Wang, C.Y. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

Disclaimer/Publisher's Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).