Quantitative Assessment of Drone Pilot Performance

Abstract

1. Introduction

Need and Problem Statement

- train an AI-based classifier to recognize ‘good’ and ‘bad’ flight behaviour in real-time, aiding in the development of a virtual AI co-pilot for immediate feedback;

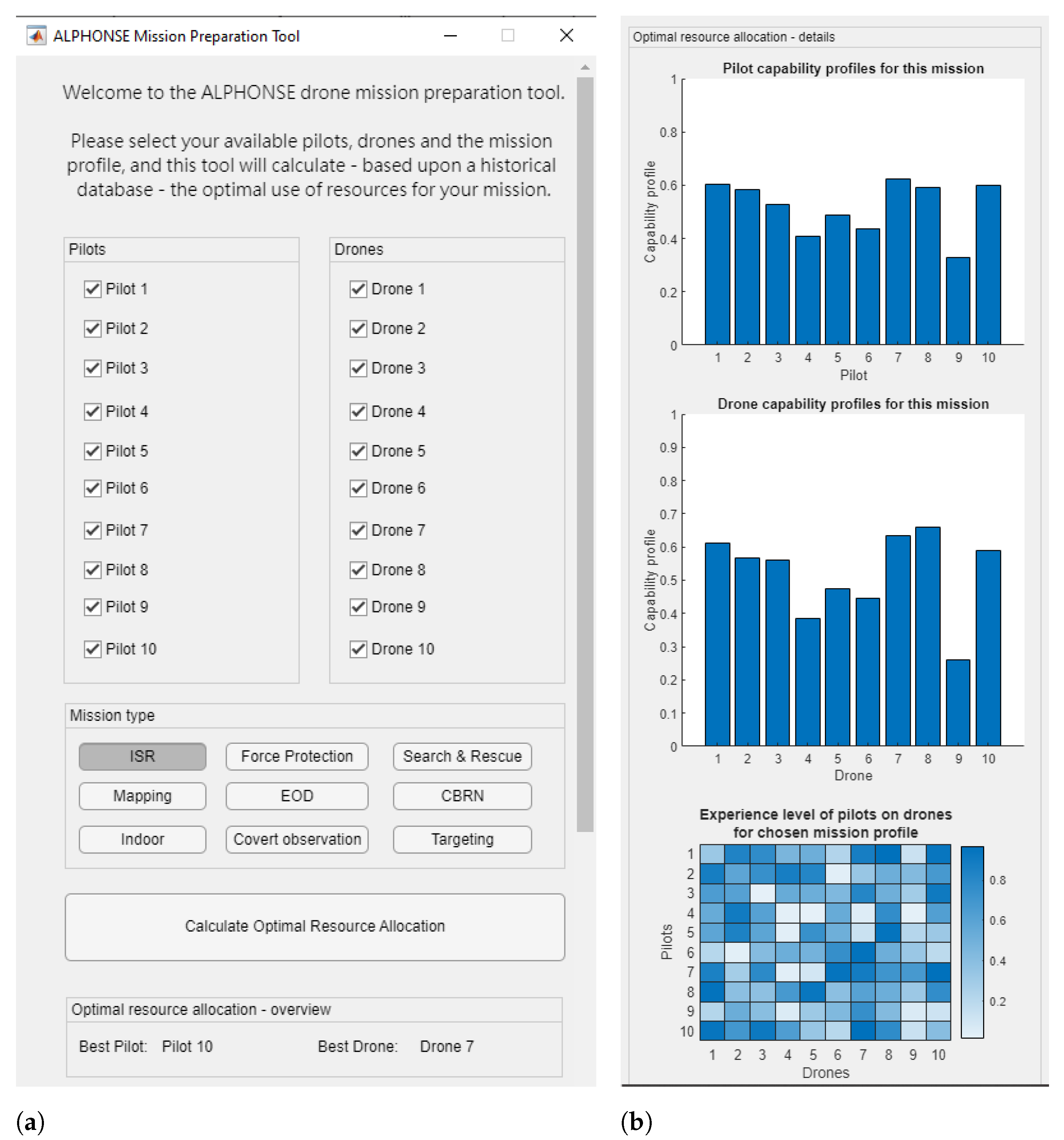

- develop a mission planning tool assigning the best pilot for specific missions based on performance scores and stress models;

- identify and rank human factors impacting flight performance, linking stress factors to performance; and

- update training procedures to account for human factors, improving pilot awareness and performance.

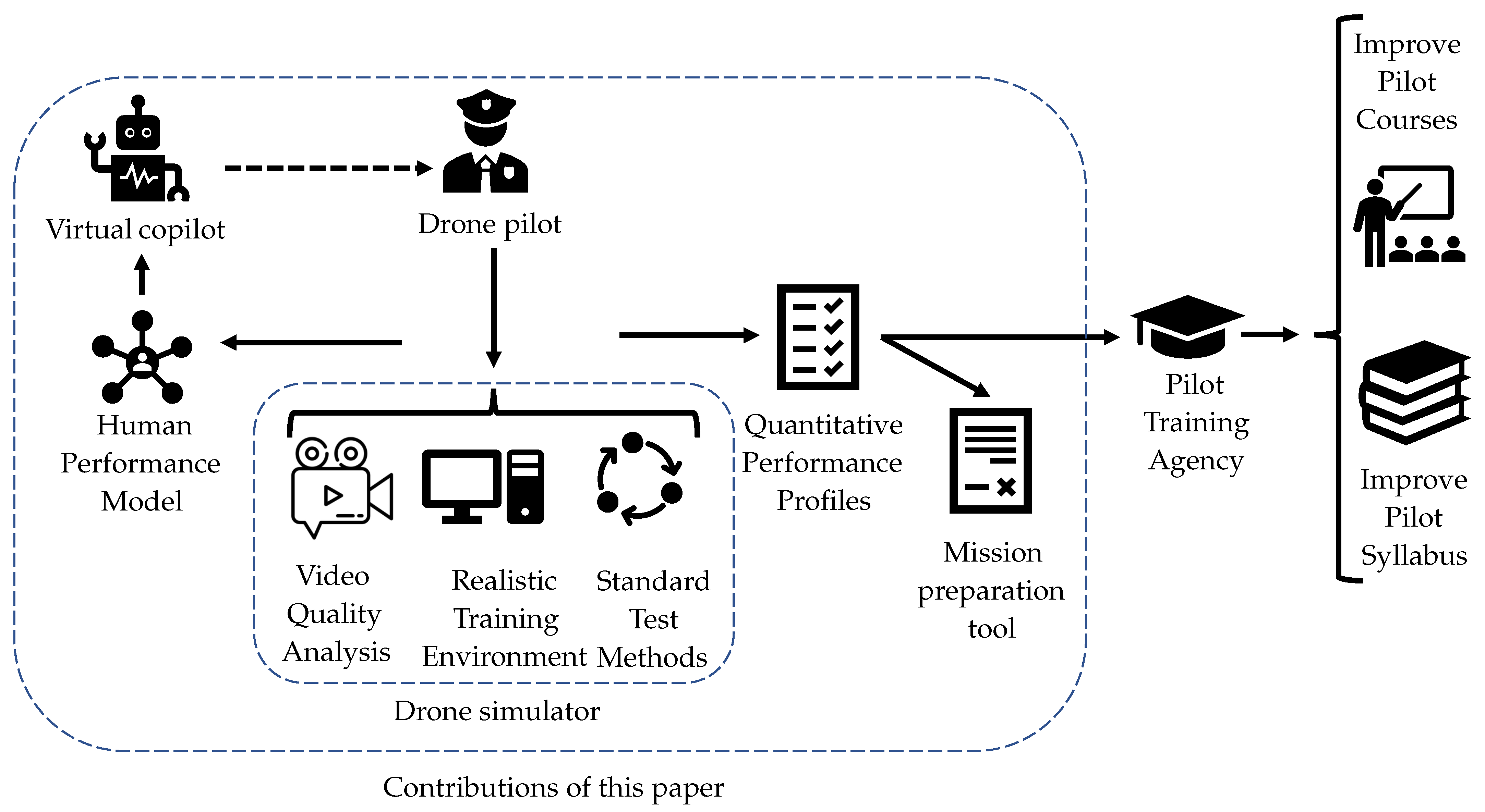

- A drone simulator tracking operator performance under realistic conditions in standardized environments (discussed in Section 4);

- A method for quantitative evaluation of drone-based video quality (discussed in Section 4.2);

- A methodology for modelling human performance in drone operations, relating human factors to operator performance (discussed in Section 5);

- An AI co-pilot offering real-time flight performance guidance (discussed in Section 7.1);

- A flight assistant tool for generating optimal flight trajectories (discussed in Section 7.2);

- A mission planning tool for optimal pilot assignment (discussed in Section 7.3);

- An iterative training improvement methodology based on quantitative input (discussed in Section 7.4).

2. Related Work

2.1. Related Work in the Domain of Drone Simulation

2.2. Related Work in the Domain of Quantitative Evaluation of Drone Pilot Performance

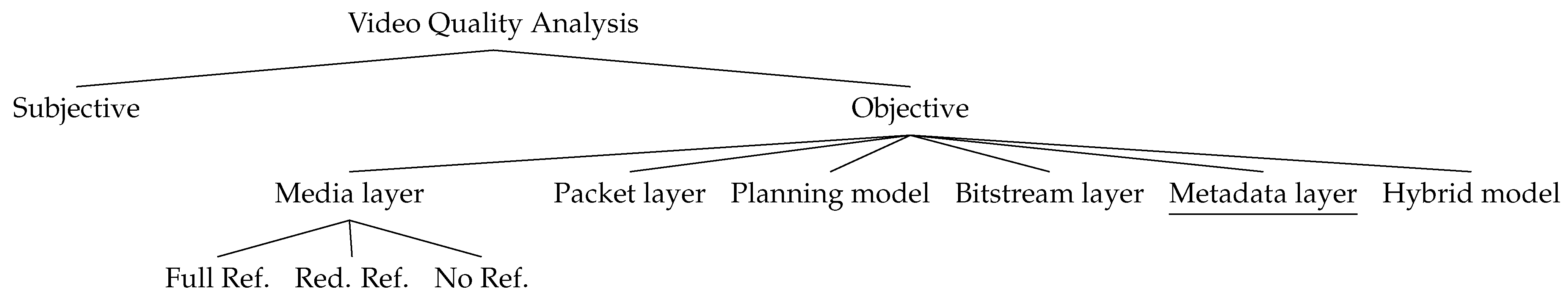

2.3. Related Work in the Domain of Video Quality Analysis

- Full-Reference Methods: These extract data from high-quality, non-degraded source signals and are often derivatives of PSNR [46], commonly used for video codec evaluation;

- Reduced-Reference Methods: These extract data from a side channel containing signal parameter data;

- No-Reference Methods: These evaluate video quality without using any source information.
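As a point of reference for the full-reference family above, the following is a minimal sketch of the standard PSNR computation between a reference frame and a degraded frame. The function name `psnr`, the flat-list frame representation, and the 8-bit default peak value are illustrative assumptions, not part of the methods cited here.

```python
import math


def psnr(reference, degraded, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized frames.

    Frames are given as flat lists of pixel intensities (an assumption made
    to keep the sketch dependency-free).
    """
    if len(reference) != len(degraded) or not reference:
        raise ValueError("frames must be non-empty and of equal size")
    # Mean squared error between the two frames.
    mse = sum((r - d) ** 2 for r, d in zip(reference, degraded)) / len(reference)
    if mse == 0:
        return math.inf  # identical frames: no measurable degradation
    return 10.0 * math.log10(max_value ** 2 / mse)
```

Because the reference frame is required as input, a measure of this kind cannot be applied directly when only the degraded drone video is available, which is the situation the no-reference methods address.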

2.4. Related Work in the Domain of Human-Performance Modelling for Drone Operations

2.5. Related Work in the Domain of AI Assistance for Drone Operations

3. Overview of the Proposed Evaluation Framework and Situation in Comparison to the State of the Art

4. Virtual Environment for Quantitative Assessment

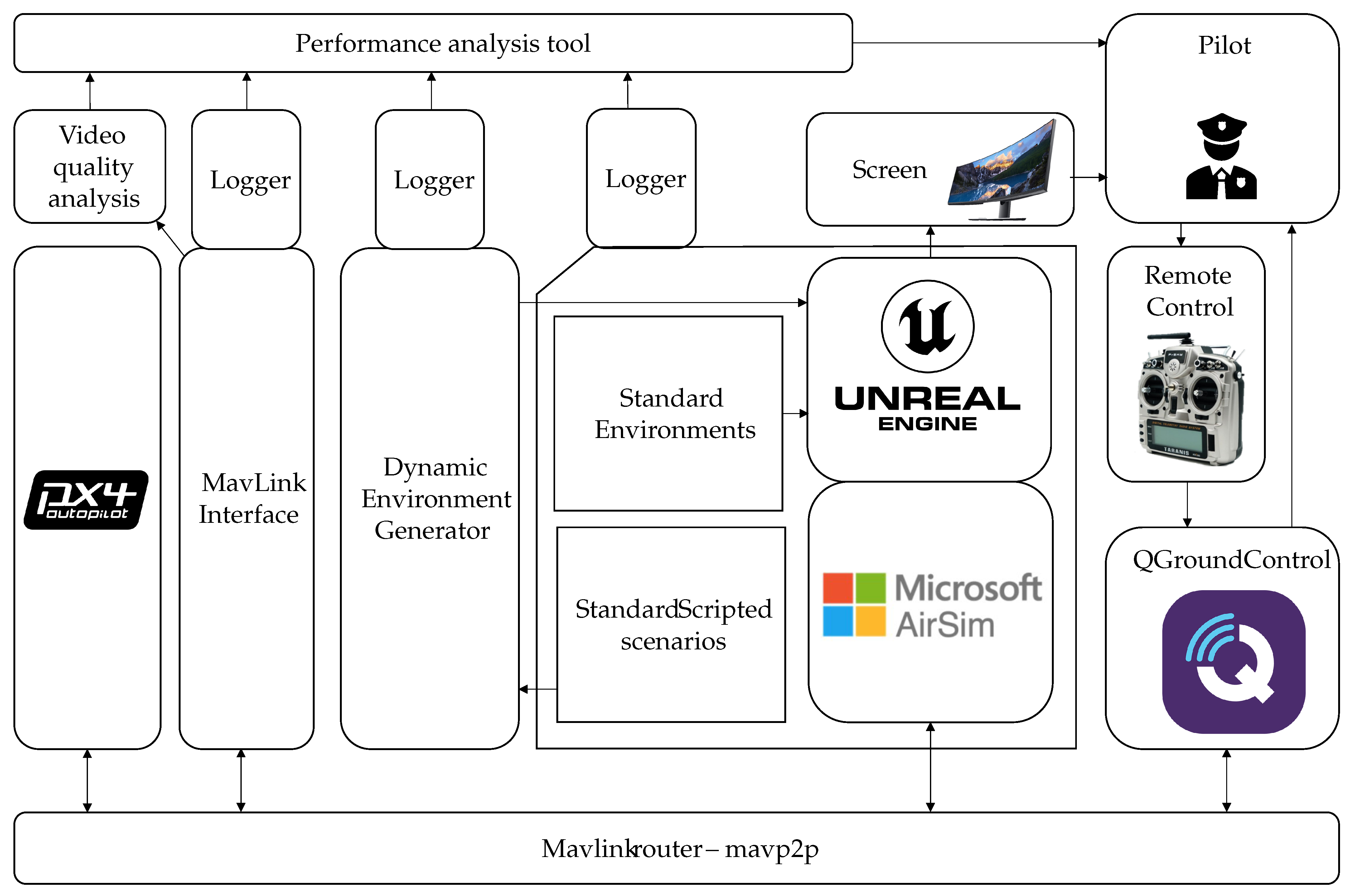

4.1. Software Framework

4.1.1. PX4

4.1.2. Mavlinkrouter

4.1.3. Mavlink Interface

4.1.4. Standard Scripted Scenarios

4.1.5. Unreal Engine

- Next to the standard first-person-view camera, we introduce a ground-based observer viewpoint camera, which renders the environment through the eyes of the pilot. At the start of the operation, the simulator starts in this ground-based observer viewpoint. As the environment is very large and as most operations will take place beyond-visual-line-of-sight (BVLOS), the operator will, after a while, usually switch to first-person-view.

- Measure the number of collisions with the environment, as this is a parameter for the pilot-performance assessment.

- Measure the geo-location of any target (static camp or dynamic enemies).

- Calculate at any moment the minimum distance to any enemy.

- Sound an alarm when the drone is detected.

- Include a battery depletion timer (set at 25 min). The drone crashes if it is not landed within the set time.

- We want to avoid measuring the side-effects of virtual embodiment, which some pilots may be subject to.

- Virtual reality would obstruct the use of exteroceptive sensing tools for measuring the physiological state of the pilot during the test.
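The simulator-side measurements listed above (collision count, minimum enemy distance, detection alarm, and the 25 min battery timer) can be sketched as a small monitor object. This is only an illustration under stated assumptions: the class name `MissionMonitor`, the `update` signature, and the fixed `detection_radius_m` detection model are hypothetical and are not taken from the Unreal Engine implementation.

```python
import math
from dataclasses import dataclass

BATTERY_LIMIT_S = 25 * 60  # battery depletion timer from the text (25 min)


@dataclass
class MissionMonitor:
    detection_radius_m: float  # hypothetical: range at which an enemy detects the drone
    collisions: int = 0
    min_enemy_distance_m: float = math.inf
    detected: bool = False
    crashed: bool = False

    def update(self, t_s, drone_pos, enemy_positions, collided=False, landed=False):
        """Process one simulation tick and return the current minimum enemy distance."""
        if collided:
            self.collisions += 1
        # Distance to the closest enemy at this moment.
        current_min = min(
            (math.dist(drone_pos, enemy) for enemy in enemy_positions),
            default=math.inf,
        )
        self.min_enemy_distance_m = min(self.min_enemy_distance_m, current_min)
        if current_min <= self.detection_radius_m:
            self.detected = True  # the simulator would sound the alarm here
        # The drone crashes if it has not landed when the timer expires.
        if t_s >= BATTERY_LIMIT_S and not landed:
            self.crashed = True
        return current_min
```

Calling `update` once per simulation tick keeps both the instantaneous and the overall minimum enemy distance available for later logging.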

4.1.6. Standard Test Environment

- A standardised visual acuity object. This consists of a mannequin with a letter written on its chest plate, as shown in Figure 4e. The user is asked to read out the letter, as in an eye examination. The letters can be changed dynamically for every simulation, and the mannequins can be spread randomly over the environment. To increase the level of difficulty, some of the mannequins are placed inside enclosures, so that the letter is legible only from certain viewing angles.

- An enemy camp. This consists of a series of tents, military installations, and guarded watchtowers, as shown in Figure 4f.

4.1.7. AirSim

4.1.8. Dynamic Environment Generator

4.1.9. QGroundControl

4.1.10. Logging Systems

- The MavLink interface records a series of interesting parameters related to the drone itself (its position, velocity and acceleration, control parameters, vibrations, etc.) on the MavLink datastream.

- The Dynamic Environment Generator records the environmental conditions (wind direction and speed, density of rain, snow and fog, etc.) and the presence of auditory disruptions (type of audio track, sound intensity, etc.).

- The Unreal Engine-based simulation records the number of collisions with the environment, the geo-location of all targets, the minimum distance to any enemy, and the detectability of the drone.
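One way to picture how the three logging sources above could be combined is a flat record per time step, written to a CSV file. The sketch below assumes CSV as the storage format and uses hypothetical field names (`altitude_m`, `wind_speed_ms`, `collisions`) and source prefixes (`mav`, `env`, `sim`); none of these are specified in the text.

```python
import csv
import io


def merge_log_records(mavlink, environment, simulation):
    """Merge one sample from each logging source into a flat row.

    Keys are prefixed by source so that identically named fields do not clash.
    """
    row = {}
    for prefix, record in (("mav", mavlink), ("env", environment), ("sim", simulation)):
        for key, value in record.items():
            row[f"{prefix}.{key}"] = value
    return row


def write_csv(rows):
    """Serialise merged rows to CSV text, using the first row's keys as the header."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0].keys()))
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()
```

Prefixing by source keeps the origin of each column visible when the merged log is analysed later.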

4.2. Quantitative Evaluation of Drone-Based Video Quality

4.2.1. Concept

4.2.2. Methodology

- We assume that the drone camera is always directed at the target. This assumption simplifies the algorithm by avoiding (dynamic) viewpoint adjustments based on drone movement. This is a realistic scenario, as in actual operations a separate camera gimbal operator typically ensures the camera remains focused on the target. This task can also be automated using visual servoing methodologies [85], which we assume to be implemented in this paper.

- To ensure uniform perception of the target from various viewing angles, we assume the target has a perfect spherical shape. While this is an approximation and may differ for targets with non-spherical shapes, it is the most generic assumption and can be refined if specific target shapes are more applicable to particular uses.

- Since the zoom factor is not dynamically available to the algorithm, we assume a static zoom factor.

- The input parameters for the video quality assessment algorithm are the drone’s position at a given time instance and the target’s position, which is assumed to remain static throughout the video sequence.

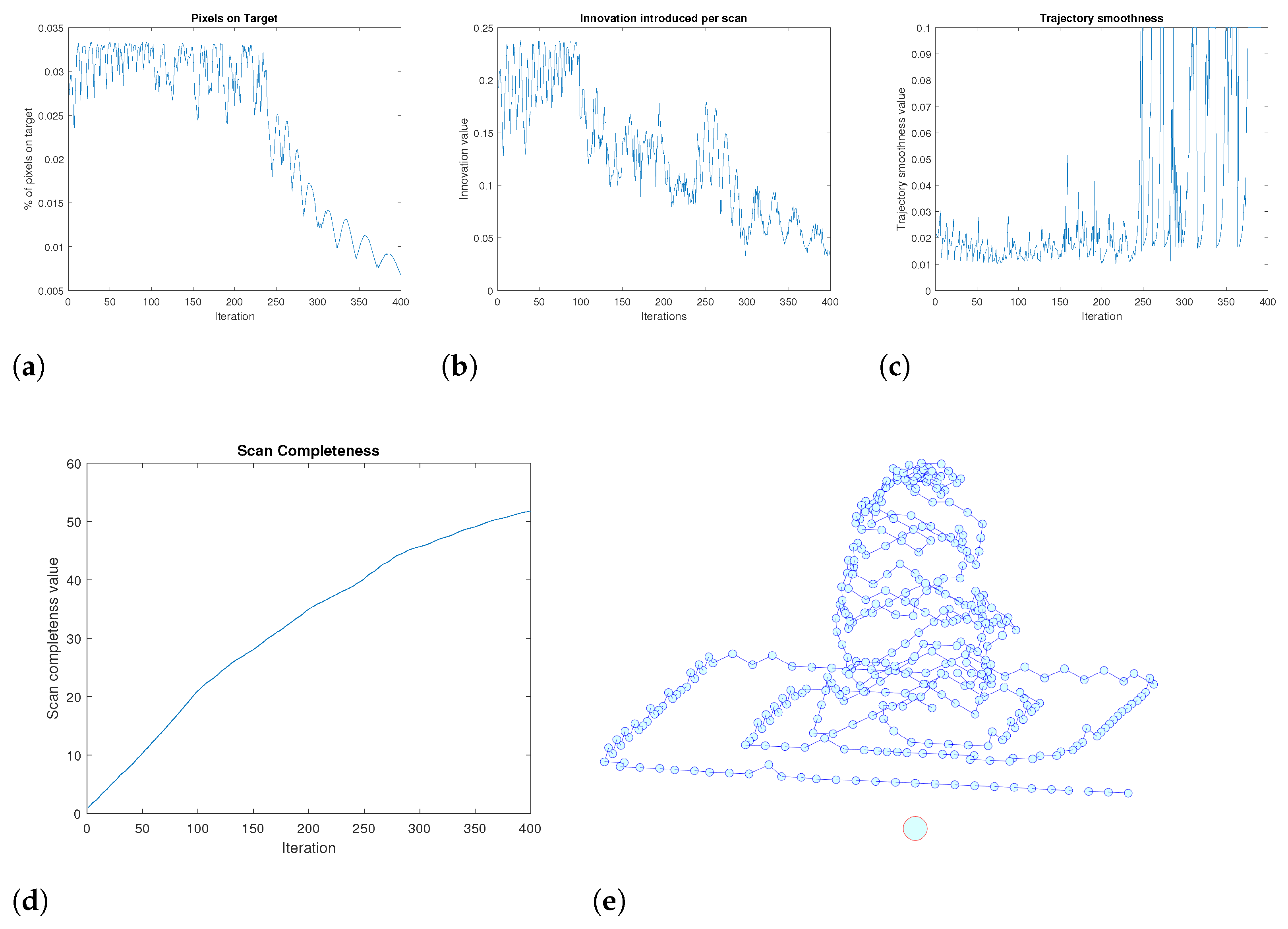

- The number of pixels on target. It is well known that for machine-vision image-interpretation algorithms (e.g., human detection [86], vessel detection [87]), the number of pixels on target is crucial for predicting the success of the image-interpretation algorithm [88]. Similarly, for human image interpretation, Johnson’s criteria [89] indicate that the ability of human observers to perform visual tasks (detection, recognition, identification) depends on the image resolution on the target. Given a constant zoom factor, the number of pixels on target is inversely proportional to the distance between the drone and the target. The constant of proportionality depends on the minimum distance between the drone and the target, the camera resolution, and the focal length.

- The data innovation. As discussed in the introduction, assessing the capability of drone operators to obtain maximum information about a target in minimal time is crucial. The data innovation metric evaluates the quality of new video data. This is achieved by maintaining a viewpoint history memory that stores the normalized incident angles of all previous viewpoints. The current incident angle is compared to this memory by calculating the norm of the difference between the current incident angle and each previous one. The data innovation is the smallest of these norms, representing the distance to the closest previous viewpoint on a unit sphere. New viewpoints should be as distinct as possible from existing ones, as expressed by (2).

- The trajectory smoothness. High-quality video requires a smooth drone trajectory over time. Irregular motion patterns make the video signal difficult for human operators or machine-vision algorithms to interpret. The metric evaluates trajectory smoothness by maintaining a velocity profile that stores all previous velocities. The current velocity is compared to the n most recent velocities: the norm of the difference between the current velocity and each previous velocity is weighted by recency. The weighted sum of the n most recent velocity differences measures changes in the motion profile and is inversely proportional to trajectory smoothness.
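The three components described above can be sketched as follows. This is a minimal illustration, not the paper's implementation: all function names, the `scale` argument standing in for the constant parameter, the `1/i` recency weights, the `1 / (1 + change)` regularisation of the inverse relation, and the value returned for an empty viewpoint memory are assumptions. The combination into the global score of Equation (4) is deliberately left out, since its form is not given here.

```python
import math


def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def _norm(v):
    return math.sqrt(sum(x * x for x in v))


def pixels_on_target(drone_pos, target_pos, scale):
    """Inverse-distance term; `scale` stands in for the constant parameter."""
    return scale / _norm(_sub(drone_pos, target_pos))


def incident_angle(drone_pos, target_pos):
    """Normalized incident angle: unit vector from the target to the drone."""
    v = _sub(drone_pos, target_pos)
    n = _norm(v)
    return tuple(x / n for x in v)


def data_innovation(current_angle, viewpoint_memory):
    """Smallest norm of the difference between the current and stored angles."""
    if not viewpoint_memory:
        return 2.0  # assumption: maximum chord length on a unit sphere
    return min(_norm(_sub(current_angle, a)) for a in viewpoint_memory)


def motion_change(current_velocity, velocity_history, n=5):
    """Recency-weighted sum of velocity differences over the n most recent
    entries (n >= 1); weights 1, 1/2, 1/3, ... are an assumption."""
    recent = velocity_history[-n:][::-1]  # most recent first
    return sum(
        _norm(_sub(current_velocity, v)) / (i + 1) for i, v in enumerate(recent)
    )


def trajectory_smoothness(current_velocity, velocity_history, n=5):
    """Decreases as motion change grows; the +1 avoids division by zero (assumption)."""
    return 1.0 / (1.0 + motion_change(current_velocity, velocity_history, n))
```

For example, a drone hovering 10 m directly above the target has the incident angle `(0, 0, 1)`, and revisiting that same viewpoint yields a data innovation of zero.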

4.2.3. Validation

5. Drone Operator Performance Modelling

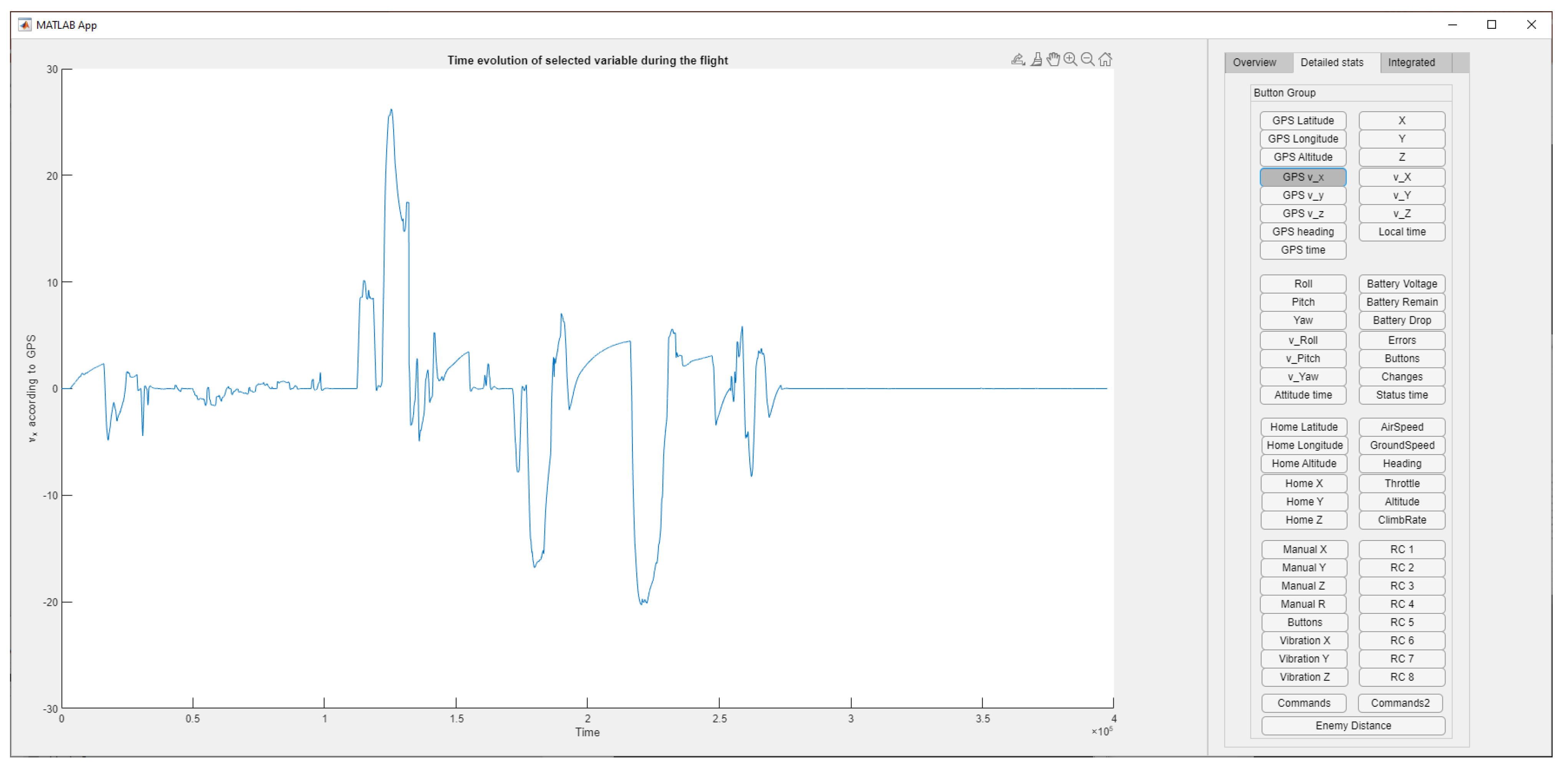

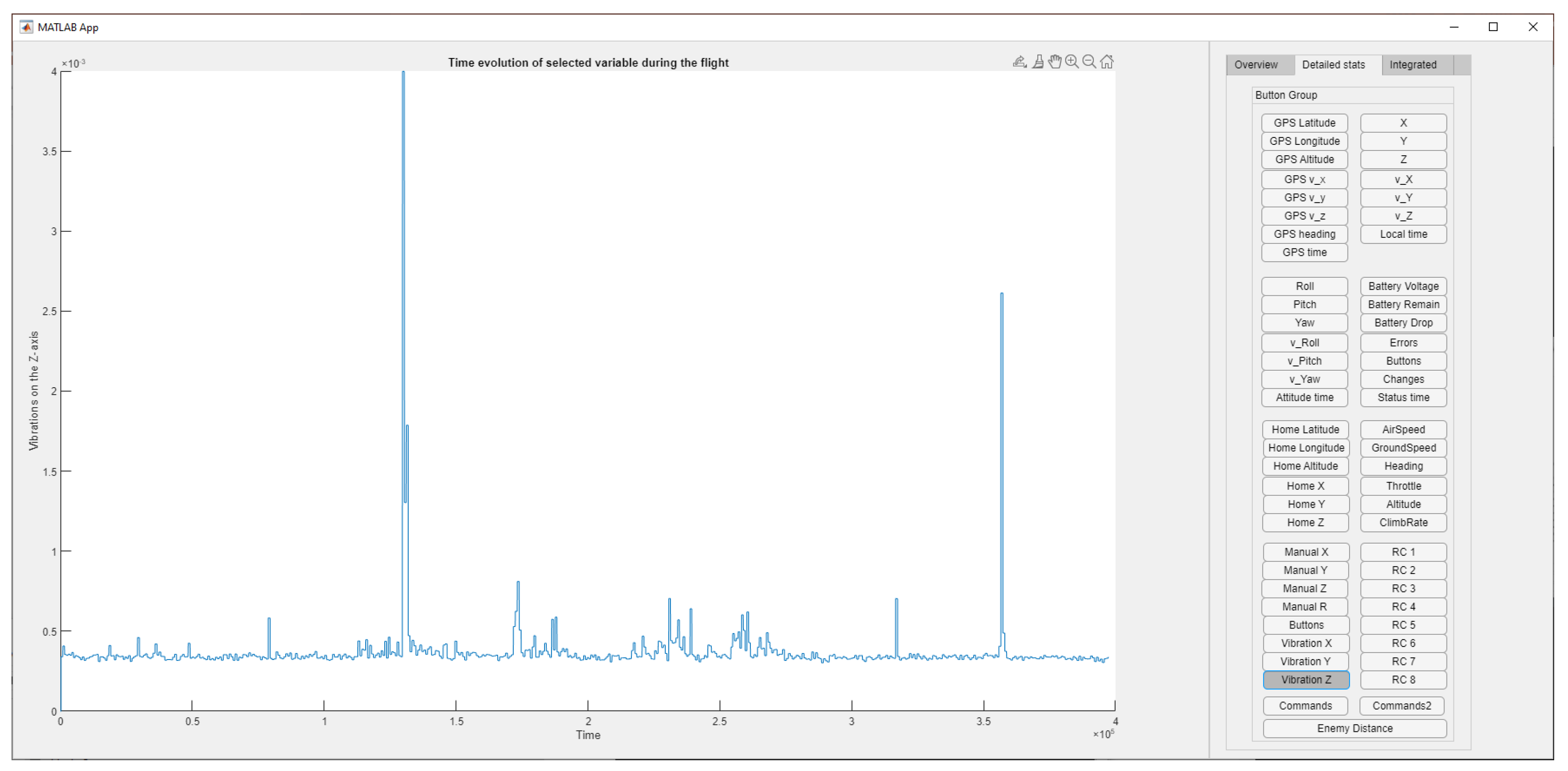

5.1. Metrics Definition

- GPS latitude, altitude, and heading;

- Velocity in the X, Y, Z directions;

- Roll, pitch, and yaw angles;

- Velocity in roll, pitch, and yaw;

- Throttle level;

- Climb rate;

- Vibrations in the X, Y, and Z directions;

- Control stick position in the X, Y, Z, and roll directions.

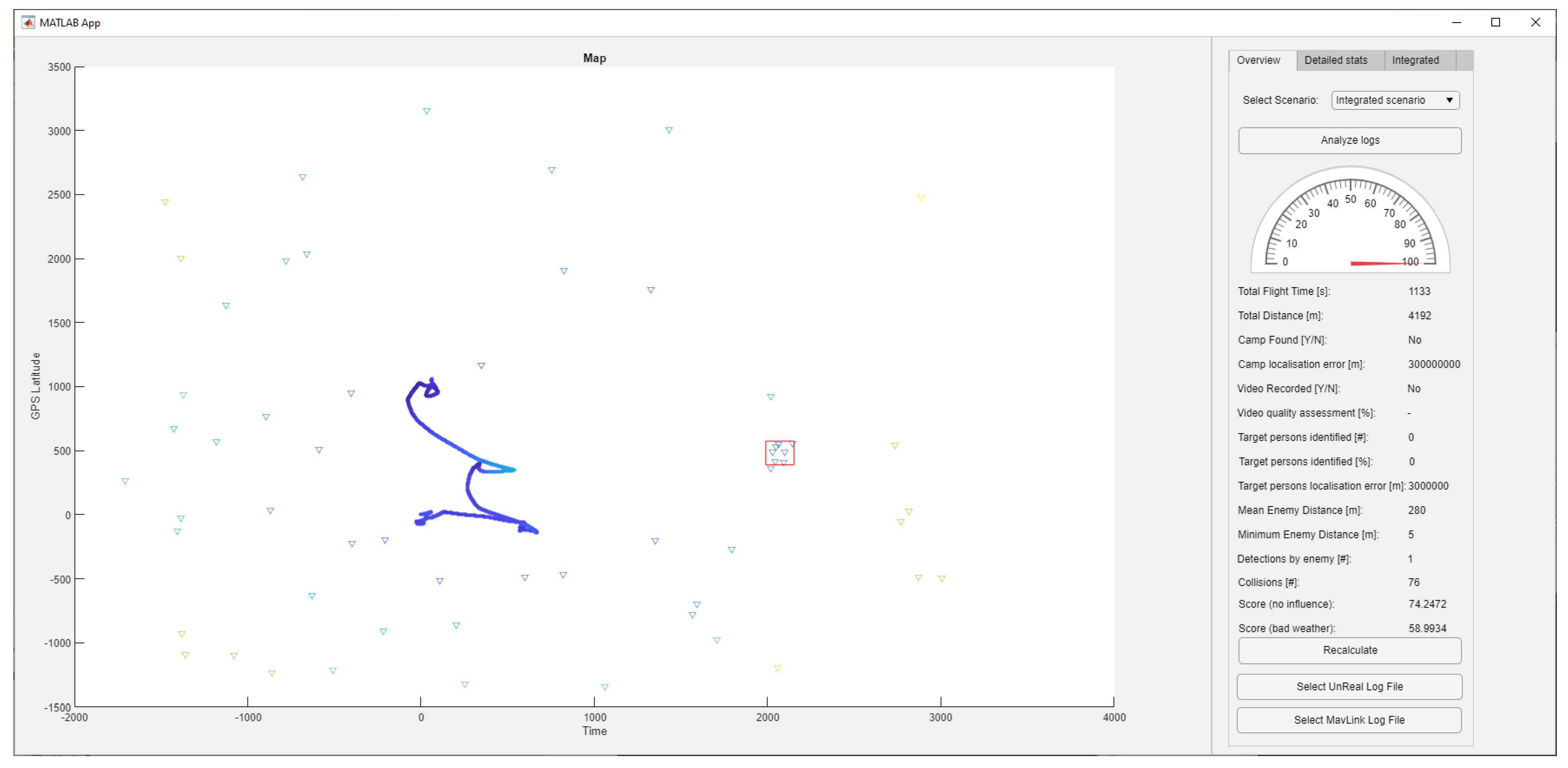

5.2. Performance Analysis Tool Interface Design

- The total flight time in seconds;

- The total distance flown in meters;

- Whether the camp has been found, and—if yes—the error on the distance measurement;

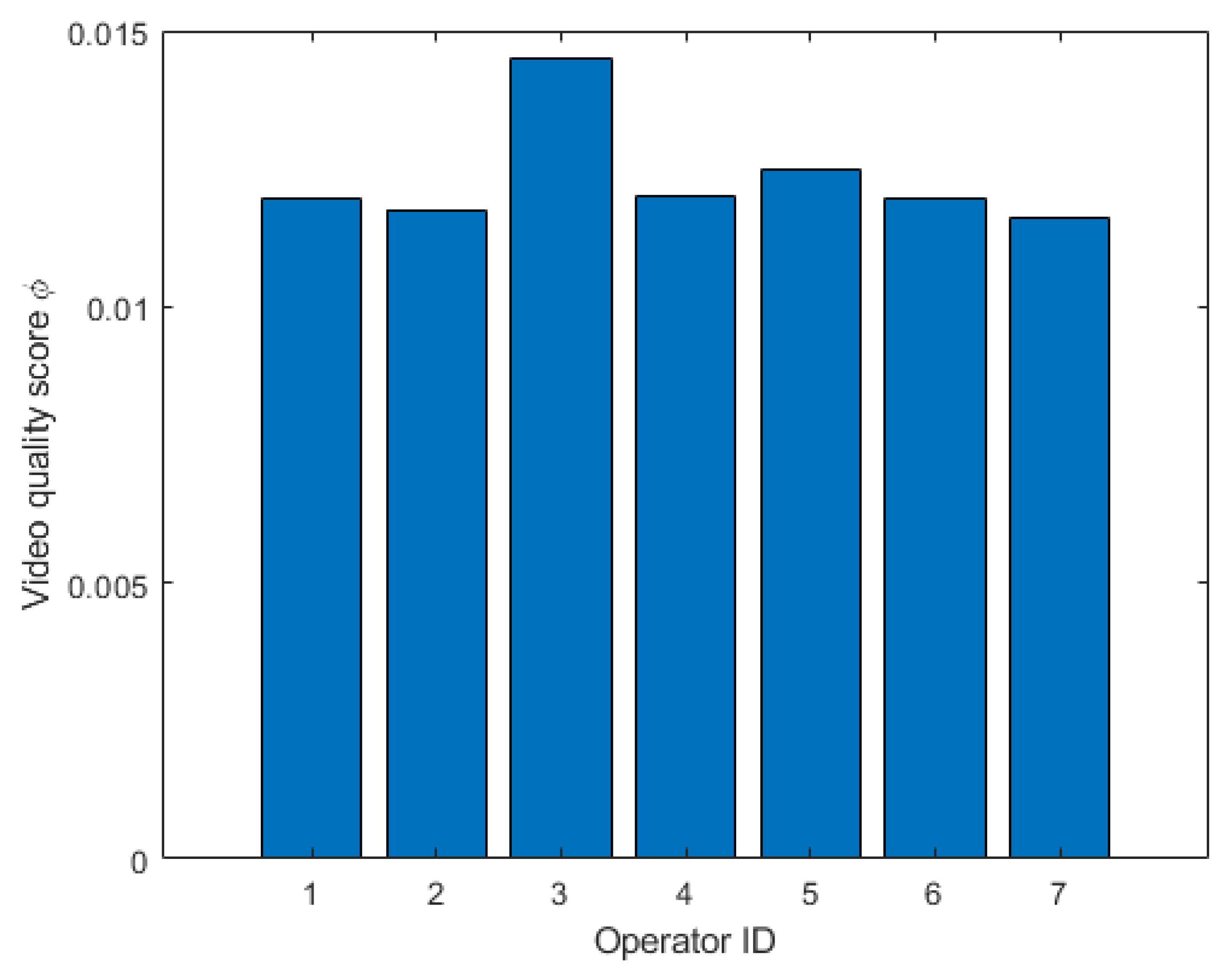

- Whether a video was recorded, and—if yes—the video quality score according to Equation (4);

- The number and percentage of enemies identified and their localisation error;

- The mean and minimum enemy distance in meters;

- Whether or not the drone has been detected by enemies;

- The number of collisions;

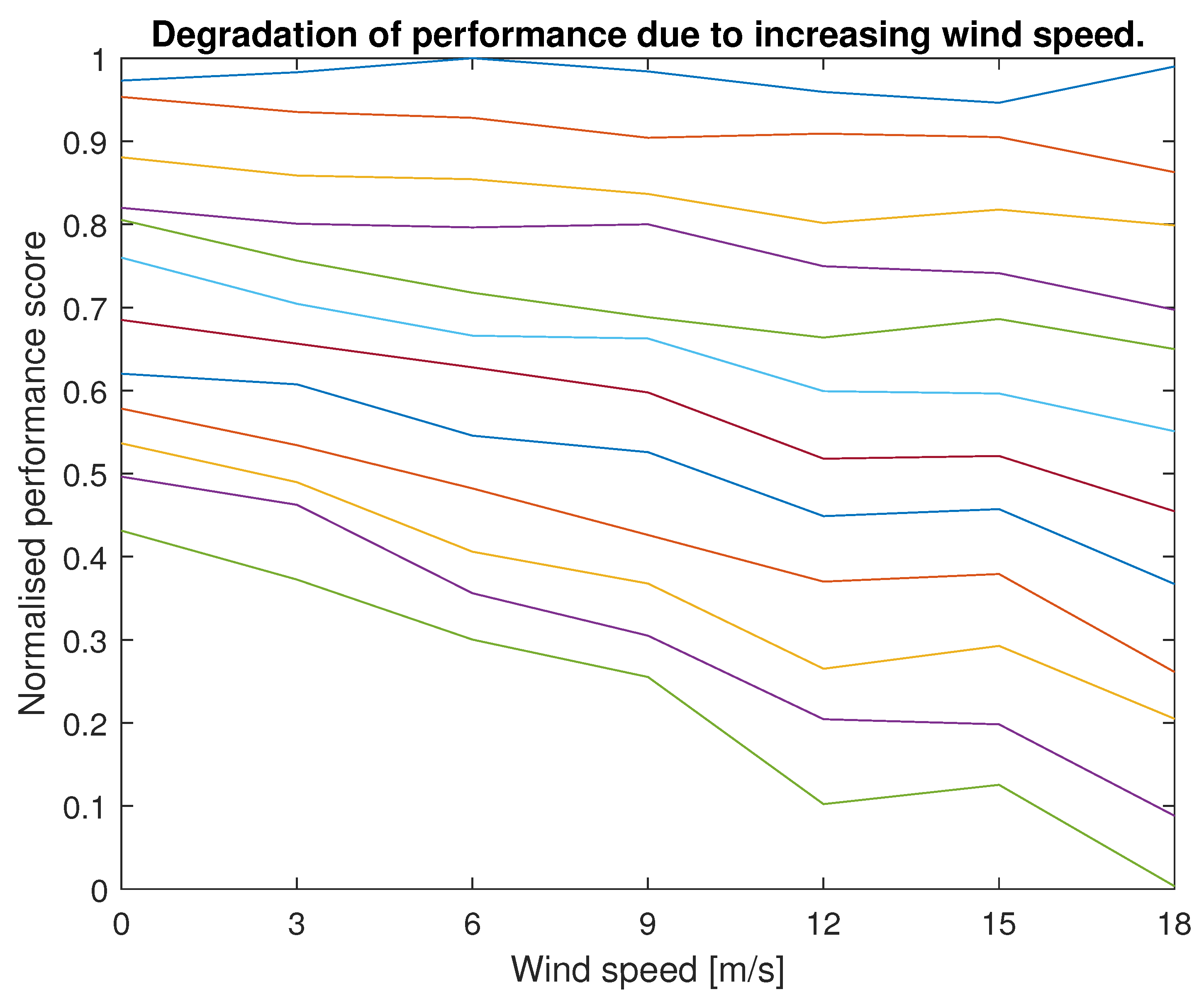

- The performance score under multiple environmental conditions or under multiple human factors. Note that—as can be expected—the performance score in normal weather is better than the performance score in bad weather.
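Several of the summary statistics listed above follow directly from the logged positions. The sketch below shows how the total flight time, the total distance flown, and a localisation error could be derived; the function names and the `(time_s, (x, y, z))` sample layout in metres are assumptions made for illustration.

```python
import math


def flight_summary(samples):
    """Return (total flight time in s, total distance flown in m).

    `samples` is a time-ordered list of (time_s, (x, y, z)) tuples.
    """
    total_time = samples[-1][0] - samples[0][0]
    # Sum the straight-line segments between consecutive logged positions.
    total_distance = sum(
        math.dist(a[1], b[1]) for a, b in zip(samples, samples[1:])
    )
    return total_time, total_distance


def localisation_error(estimated_pos, true_pos):
    """Euclidean distance between a reported and a true target position."""
    return math.dist(estimated_pos, true_pos)
```

The distance is a piecewise-linear approximation, so its accuracy depends on the logging rate.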

5.3. Human-Performance-Modelling Methodology

6. Results and Discussion

6.1. Design and Scope of the Experiments

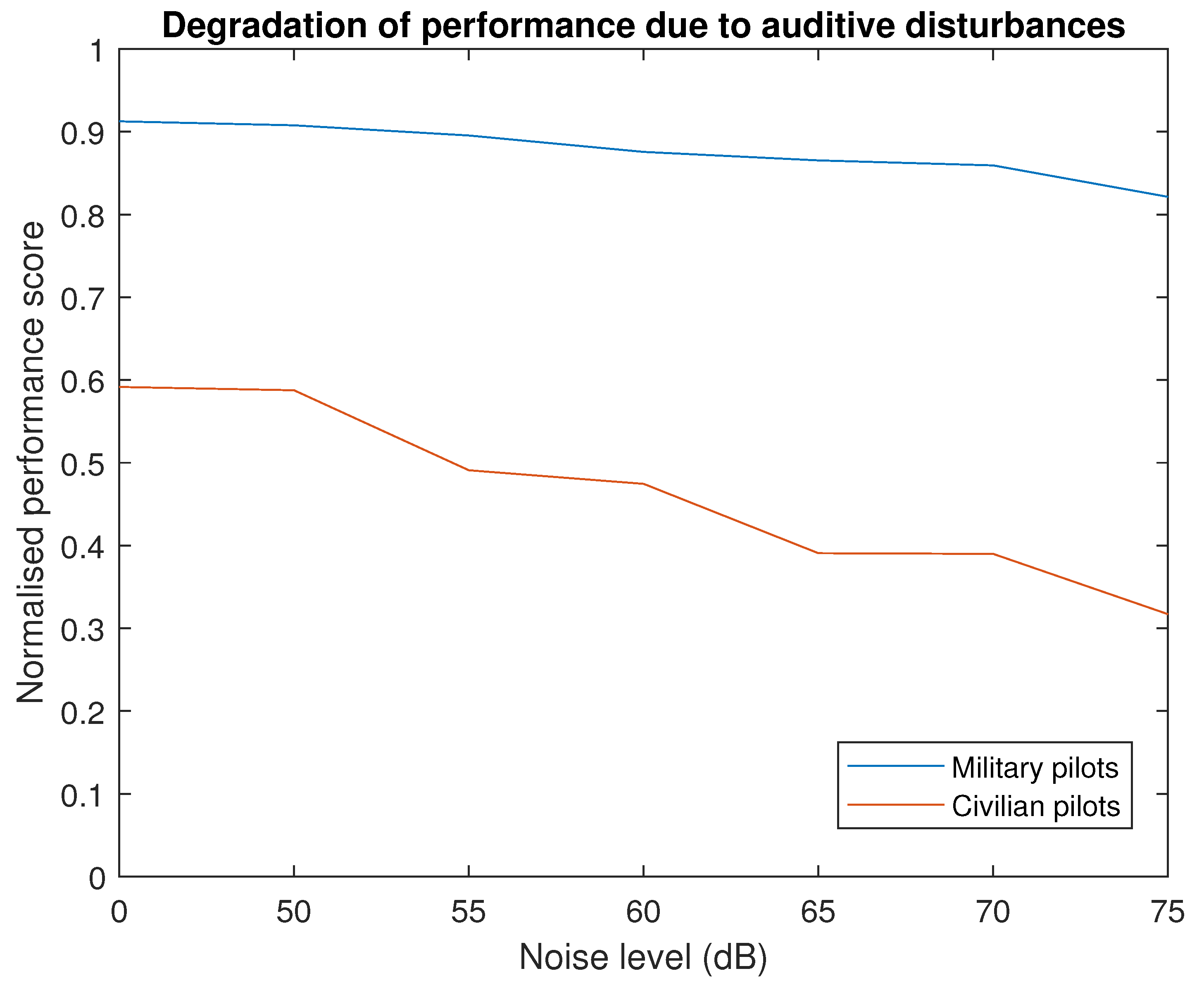

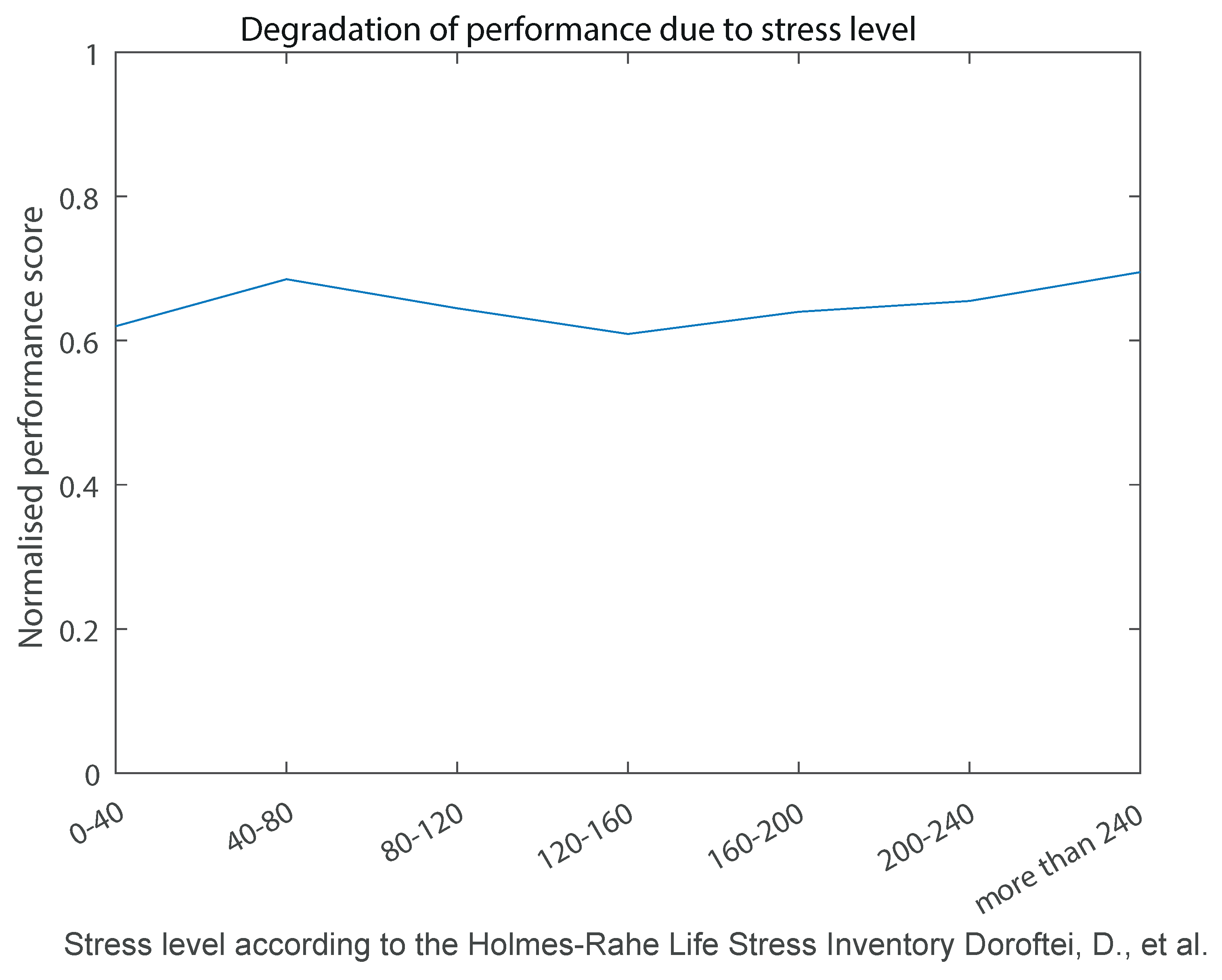

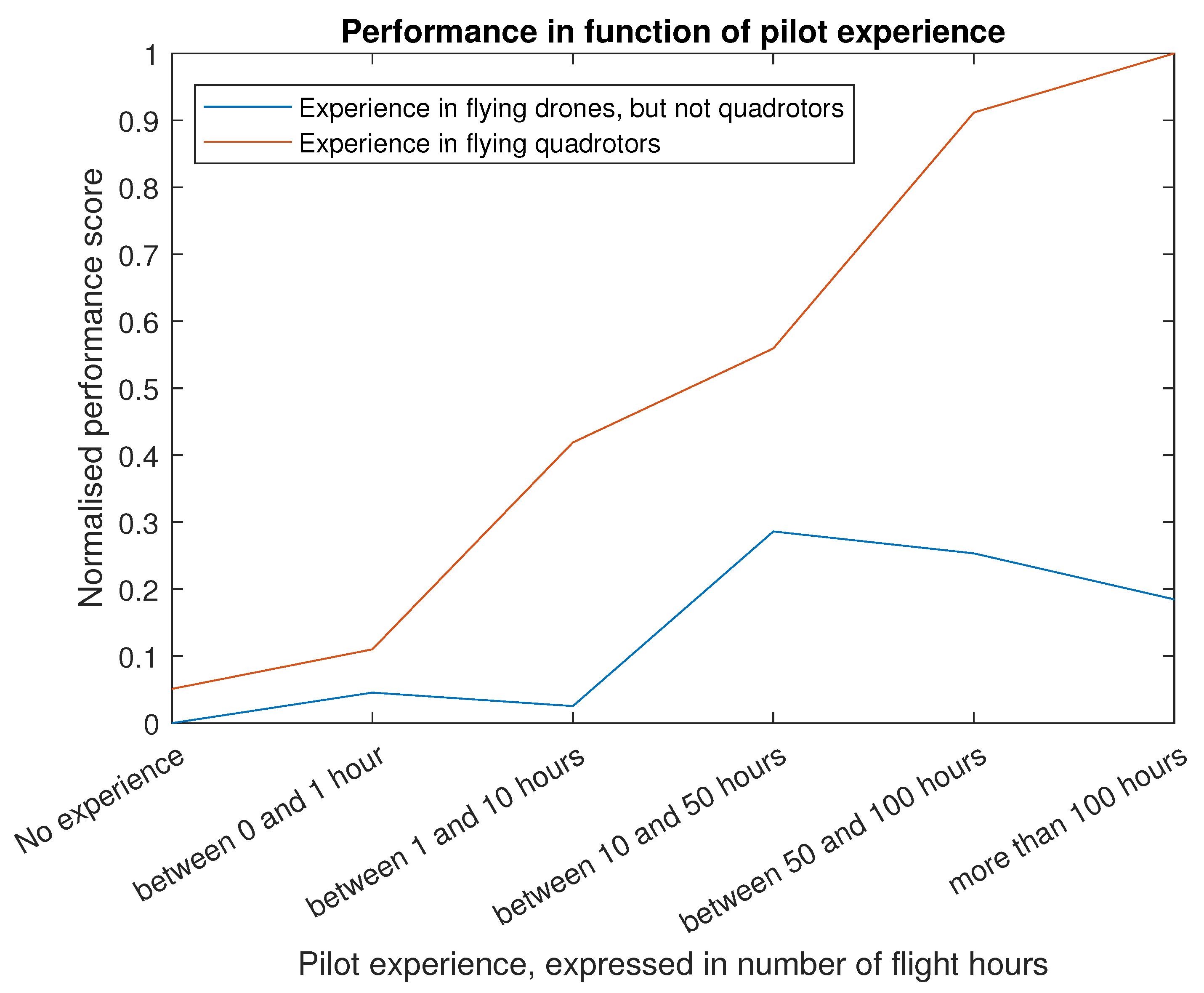

6.2. Flight Performance in Function of Disturbances

- Measuring the stress level only before the test, and not during it, may not yield appropriate measurements of stress levels. As discussed before, we intend to extend the simulator with exteroceptive sensing systems that would enable the measurement of stress levels during flight, but this extension is not yet available.

- The Holmes–Rahe Life Stress Inventory is likely not the optimal tool for quantifying stress levels in this context, as it emphasizes long-term life events rather than short-term stressors.

- The limited sample size of our pilot population may not be sufficient to yield statistically significant results.

7. Application Use Cases

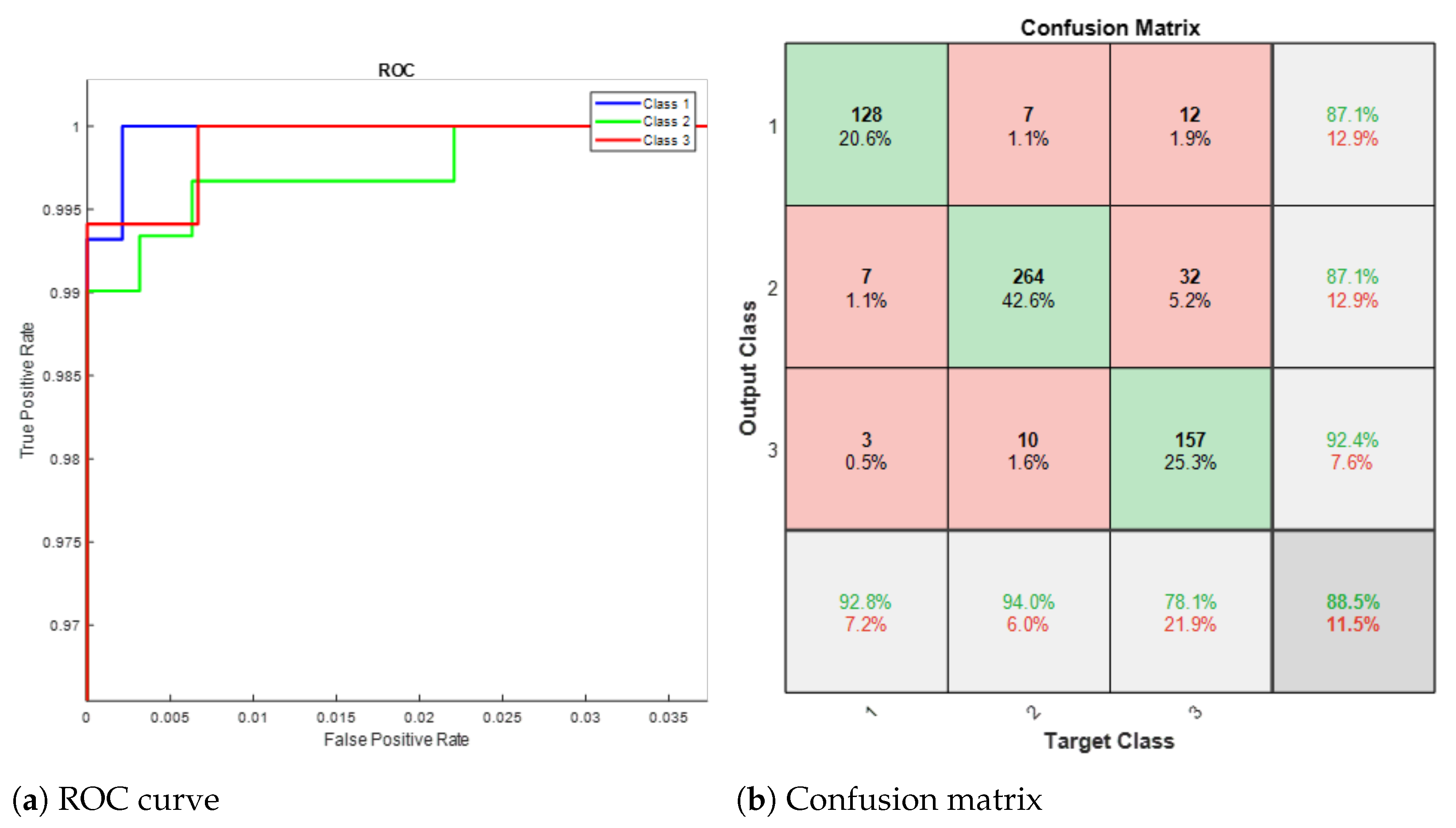

7.1. AI Copilot for Drone Operator Assistance

7.1.1. Motivation and Concept

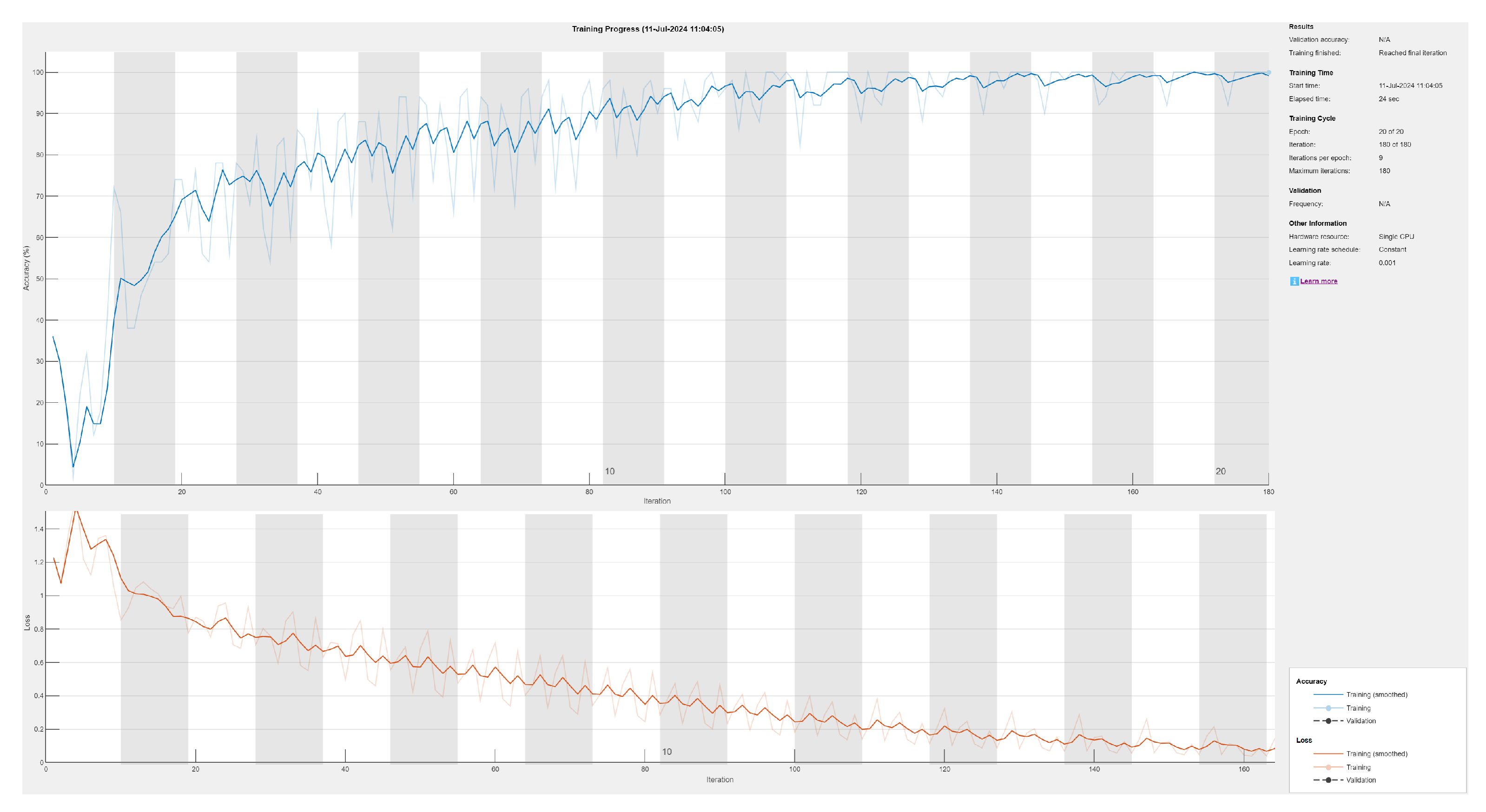

7.1.2. Methodology

- A SequenceInputLayer that handles sequences of the 26 flight parameters.

- A bidirectional long short-term memory layer that learns bidirectional long-term dependencies between time steps of time series.

- A fully connected layer that multiplies the input by a weight matrix and adds a bias vector.

- A softmax layer that applies a softmax function [93] to the input.

- A classification layer that computes the cross-entropy loss for classification tasks with mutually exclusive classes.

- Category 1: Novice pilots, which also includes pilots experienced with fixed-wing drones but not rotary-wing drones, subject to poor skill transfer across drone types, as observed and discussed in Section 5.

- Category 2: Competent pilots with experience flying rotary-wing drones.

- Category 3: Expert pilots, consisting of highly skilled pilots who regularly practice complex flight operations.
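The last two layers of the network described above can be illustrated in isolation. The sketch below applies a softmax to the scores produced by the fully connected layer and computes the cross-entropy loss for mutually exclusive classes. It assumes, for illustration only, that the three pilot categories serve as the class labels; the label strings and function names are hypothetical, and the recurrent layers are omitted.

```python
import math

# Assumption: the three pilot categories are used as class labels.
CATEGORIES = ("novice", "competent", "expert")


def softmax(logits):
    """Numerically stable softmax over a list of scores."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(probabilities, true_index):
    """Cross-entropy loss for one sample with mutually exclusive classes."""
    return -math.log(probabilities[true_index])


def classify(logits):
    """Return the most probable category and the full probability vector."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return CATEGORIES[best], probs
```

With equal scores for all three classes, each probability is one third and the loss equals ln 3, which is the value expected from an untrained classifier.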

7.1.3. Validation and Discussion

7.2. Automated Optimal Drone Trajectories for Target Observation

7.2.1. Methodology

- Line 2: As stated above, the algorithm starts from a simple drone motion model, which proposes a number of possible discrete locations that the drone can move to, taking into account the flight dynamics constraints. In a first step, we perform a brute-force search over all possible new locations to assess which one is the best to move to. This is a simplistic approach, but the number of possible locations is small enough that a more advanced optimization scheme is not required.

- Line 3: In a second step, the safety of the proposed new drone location is assessed. This analysis considers two different aspects:

  - The physical safety of the drone, which is in jeopardy if the drone comes too close to the ground. Therefore, a minimal distance from the ground will be imposed and proposed locations too close to the ground are disregarded.

  - The safety of the (stealth) observation operation, which is in jeopardy if the drone comes too close to the target, which means that the target (in a military context, often an enemy) could hear/perceive the drone and the stealthiness of the operation would thus be violated. Therefore, a minimal distance between the drone and the target will be imposed and proposed locations too close to the target will be disregarded.

- Line 7: The global objective video quality measure at the newly proposed location is calculated, following Equation (4).

- Line 8: The point with the highest video quality score is recorded.

- Lines 9 and 10: At this point, an optimal point for the drone to move to has been selected. The viewpoint history memory and the velocity history memory are updated to include this new point.

- Line 11: The drone is moved to the new point in order to prepare for the next iteration.

- Line 12: The point is appended to the drone trajectory profile.

Algorithm 1: Trajectory generation algorithm.
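The steps described for Algorithm 1 can be sketched as a greedy loop. This is an illustration under stated assumptions, not a reproduction of the algorithm itself: the 26-neighbour grid motion model, flat ground at z = 0, unit time steps, the default thresholds, and all names are hypothetical. The `quality` argument is a caller-supplied function standing in for the global video quality measure of Equation (4).

```python
import math
from itertools import product


def candidate_moves(position, step):
    """Hypothetical motion model: the 26 neighbouring grid points one step away."""
    return [
        tuple(p + step * d for p, d in zip(position, delta))
        for delta in product((-1, 0, 1), repeat=3)
        if delta != (0, 0, 0)
    ]


def is_safe(candidate, target, min_altitude, min_target_distance):
    """Reject points too close to the ground (assumed at z = 0) or to the target."""
    return (
        candidate[2] >= min_altitude
        and math.dist(candidate, target) >= min_target_distance
    )


def plan_trajectory(start, target, quality, steps, step=1.0,
                    min_altitude=2.0, min_target_distance=5.0):
    """Greedy trajectory generation; requires min_target_distance > 0."""
    position, trajectory = start, [start]
    viewpoints, velocities = [], []
    for _ in range(steps):
        best, best_score = None, -math.inf
        # Brute-force search over all proposed locations.
        for candidate in candidate_moves(position, step):
            if not is_safe(candidate, target, min_altitude, min_target_distance):
                continue
            score = quality(candidate, target, viewpoints, velocities)
            if score > best_score:
                best, best_score = candidate, score
        if best is None:
            break  # no safe location reachable from here
        # Update the viewpoint and velocity memories with the selected point.
        offset = tuple(b - t for b, t in zip(best, target))
        length = math.sqrt(sum(o * o for o in offset))
        viewpoints.append(tuple(o / length for o in offset))
        velocities.append(tuple(b - p for b, p in zip(best, position)))
        # Move the drone and extend the trajectory profile.
        position = best
        trajectory.append(best)
    return trajectory
```

Passing a quality function that only rewards proximity, for instance, makes the drone approach the target until the minimum-distance constraint stops it, which is a convenient way to check the safety filtering on its own.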

7.2.2. Validation and Discussion

7.3. Drone Mission Planning Tool

7.4. Incremental Improvement of Drone Operator Training Procedures

7.4.1. Enabling Fine-Grained, Pilot Accreditation

7.4.2. Enabling Iterative Improvement of Training Procedures

7.4.3. Enabling Fine-Grained, Pilot-Performance Follow-Up

8. Conclusions

8.1. Discussion on the Proposed Contributions

8.2. Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

DURC Statement

Acknowledgments

Conflicts of Interest

Correction Statement

Abbreviations

| ADAM | Adaptive Moment Estimation |

| AI | Artificial Intelligence |

| APAS | Advanced Pilot Assistance Systems |

| ATM | Aerial Traffic Management |

| BVLOS | Beyond-Visual-Line-Of-Sight |

| CBRN | Chemical, Biological, Radiological, and Nuclear |

| CSV | Comma-separated values |

| DEG | Dynamic Environment Generator |

| DJI | Da-Jiang Innovations |

| DRL | Drone Racing League |

| GB | Gigabyte |

| GPS | Global Positioning System |

| GUI | Graphical User Interface |

| HITL | Hardware-In-The-Loop |

| HMI | Human Machine Interface |

| ISR | Intelligence, Surveillance, Reconnaissance |

| LiDAR | Light Detection and Ranging |

| MDPI | Multidisciplinary Digital Publishing Institute |

| MTE | Mission Task Element |

| NIST | National Institute of Standards and Technology |

| PSD | Power Spectral Density |

| PSNR | Peak Signal-to-Noise Ratio |

| R-CNN | Region Convolutional Neural Network |

| RC | Remote Control |

| RF | Radio Frequency |

| RMSE | Root Mean Square Error |

| ROC | Receiver Operating Characteristic |

| SITL | Software-In-The-Loop |

| TCP | Transmission Control Protocol |

| TLX | Task Load Index |

| UAS | Unmanned Aircraft System |

| UAV | Unmanned Aerial Vehicle |

| UDP | User Datagram Protocol |

| UTM | Unmanned Traffic Management |

| YOLO | You Only Look Once |

References

- Chow, E.; Cuadra, A.; Whitlock, C. Hazard Above: Drone Crash Database-Fallen from the Skies; The Washington Post: Washington, DC, USA, 2016.

- Buric, M.; De Cubber, G. Counter Remotely Piloted Aircraft Systems. MTA Rev. 2017, 27, 9–18.

- Shively, J. Human Performance Issues in Remotely Piloted Aircraft Systems. In Proceedings of the ICAO Conference on Remotely Piloted or Piloted: Sharing One Aerospace System, Montreal, QC, Canada, 23–25 March 2015.

- Wang, X.; Wang, H.; Zhang, H.; Wang, M.; Wang, L.; Cui, K.; Lu, C.; Ding, Y. A mini review on UAV mission planning. J. Ind. Manag. Optim. 2023, 19, 3362–3382.

- Hendarko, T.; Indriyanto, S.; Maulana, F.A. Determination of UAV pre-flight Checklist for flight test purpose using qualitative failure analysis. IOP Conf. Ser. Mater. Sci. Eng. 2018, 352.

- Cho, A.; Kim, J.; Lee, S.; Kim, B.; Park, N.; Kim, D.; Kee, C. Fully automatic taxiing, takeoff and landing of a UAV based on a single-antenna GNSS receiver. IFAC Proc. Vol. 2008, 41, 4719–4724.

- Hart, S.; Banks, V.; Bullock, S.; Noyes, J. Understanding human decision-making when controlling UAVs in a search and rescue application. In Human Interaction & Emerging Technologies (IHIET): Artificial Intelligence & Future Applications; Ahram, T., Taiar, R., Eds.; AHFE International Conference: New York, NY, USA, 2008; Volume 68.

- Casado Fauli, A.M.; Malizia, M.; Hasselmann, K.; Le Flécher, E.; De Cubber, G.; Lauwens, B. HADRON: Human-friendly Control and Artificial Intelligence for Military Drone Operations. In Proceedings of the 33rd IEEE International Conference on Robot and Human Interactive Communication, IEEE RO-MAN 2024, Los Angeles, CA, USA, 26–30 August 2024.

- Doroftei, D.; De Cubber, G.; Chintamani, K. Towards collaborative human and robotic rescue workers. In Proceedings of the 5th International Workshop on Human-Friendly Robotics (HFR2012), Brussels, Belgium, 18–19 October 2012; pp. 18–19.

- Nguyen, T.T.; Crismer, A.; De Cubber, G.; Janssens, B.; Bruyninckx, H. Landing UAV on Moving Surface Vehicle: Visual Tracking and Motion Prediction of Landing Deck. In Proceedings of the IEEE/SICE International Symposium on System Integration (SII), Ha Long, Vietnam, 8–11 January 2024; pp. 827–833.

- Singh, R.K.; Singh, S.; Kumar, M.; Singh, Y.; Kumar, P. Drone Technology in Perspective of Data Capturing. In Technological Approaches for Climate Smart Agriculture; Kumar, P., Aishwarya, Eds.; Springer: Cham, Switzerland, 2024; pp. 363–374.

- Lee, J.D.; Wickens, C.D.; Liu, Y.; Boyle, L.N. Designing for People: An Introduction to Human Factors Engineering; CreateSpace: Charleston, SC, USA, 2017.

- Barranco Merino, R.; Higuera-Trujillo, J.L.; Llinares Millán, C. The Use of Sense of Presence in Studies on Human Behavior in Virtual Environments: A Systematic Review. Appl. Sci. 2023, 13, 3095.

- Harris, D.J.; Bird, J.M.; Smart, P.A.; Wilson, M.R.; Vine, S.J. A Framework for the Testing and Validation of Simulated Environments in Experimentation and Training. Front. Psychol. 2020, 11, 605.

- Fletcher, G. Pilot Training Review—Interim Report: Literature Review; British Civil Aviation Authority. 2017. Available online: https://www.caa.co.uk/publication/download/16270 (accessed on 10 June 2024).

- Socha, V.; Socha, L.; Szabo, S.; Hana, K.; Gazda, J.; Kimlickova, M.; Vajdova, I.; Madoran, A.; Hanakova, L.; Nemec, V. Training of pilots using flight simulator and its impact on piloting precision. In Proceedings of the 20th International Scientific Conference, Juodkrante, Lithuania, 5–7 October 2016; pp. 374–379.

- Rostáš, J.; Kováčiková, M.; Kandera, B. Use of a simulator for practical training of pilots of unmanned aerial vehicles in the Slovak Republic. In Proceedings of the 19th IEEE International Conference on Emerging eLearning Technologies and Applications (ICETA), Košice, Slovakia, 11–12 November 2021; pp. 313–319.

- Sanbonmatsu, D.M.; Cooley, E.H.; Butner, J.E. The Impact of Complexity on Methods and Findings in Psychological Science. Front. Psychol. 2021, 11, 580111.

- Shah, S.; Dey, D.; Lovett, C.; Kapoor, A. AirSim: High-Fidelity Visual and Physical Simulation for Autonomous Vehicles. In Field and Service Robotics; Hutter, M., Siegwart, R., Eds.; Springer: Cham, Switzerland, 2017; Volume 5.

- Lee, N. Unreal Engine, A 3D Game Engine. In Encyclopedia of Computer Graphics and Games; Lee, N., Ed.; Springer: Cham, Switzerland, 2023.

- Mairaj, A.; Baba, A.I.; Javaid, A.Y. Application specific drone simulators: Recent advances and challenges. Simul. Model. Pract. Theory 2019, 94, 100–117.

- DJI Flight Simulator. Available online: https://www.dji.com/be/downloads/products/simulator (accessed on 16 June 2024).

- The Drone Racing League Simulator. Available online: https://store.steampowered.com/app/641780/The_Drone_Racing_League_Simulator/ (accessed on 16 June 2024).

- Zephyr. Available online: https://zephyr-sim.com/ (accessed on 16 June 2024).

- droneSim Pro. Available online: https://www.dronesimpro.com/ (accessed on 16 June 2024).

- RealFlight. Available online: https://www.realflight.com/product/realflight-9.5s-rc-flight-sim-with-interlink-controller/RFL1200S.html (accessed on 16 June 2024).

- Cotting, M. An initial study to categorize unmanned aerial vehicles for flying qualities evaluation. In Proceedings of the 47th AIAA Aerospace Sciences Meeting including The New Horizons Forum and Aerospace Exposition, Orlando, FL, USA, 5–8 January 2009.

- Holmberg, J.; Leonard, J.; King, D.; Cotting, M. Flying qualities specifications and design standards for unmanned air vehicles. In Proceedings of the AIAA Atmospheric Flight Mechanics Conference and Exhibit, Honolulu, HI, USA, 18–21 August 2008.

- Hall, C.; Southwell, J. Equivalent Safe Response Model for Evaluating the Closed Loop Handling Characteristics of UAS to Contribute to the Safe Integration of UAS into the National Airspace System. In Proceedings of the 11th AIAA Aviation Technology, Integration, and Operations (ATIO) Conference, Including the AIAA Balloon Systems Conference, Virginia Beach, VA, USA, 20–22 September 2011.

- Schulze, P.C.; Miller, J.; Klyde, D.H.; Regan, C.D.; Alexandrov, N. System Identification of a Small UAS in Support of Handling Qualities Evaluations. In Proceedings of the AIAA Scitech 2019 Forum, Orlando, FL, USA, 7–11 January 2019.

- Abdulrahim, M.; Bates, T.; Nilson, T.; Bloch, J.; Nethery, D.; Smith, T. Defining Flight Envelope Requirements and Handling Qualities Criteria for First-Person-View Quadrotor Racing. In Proceedings of the AIAA Scitech 2019 Forum, Orlando, FL, USA, 7–11 January 2019.

- Greene, K.M.; Kunz, D.L.; Cotting, M.C. Toward a Flying Qualities Standard for Unmanned Aircraft. In Proceedings of the AIAA Atmospheric Flight Mechanics Conference, Atlanta, GA, USA, 13–17 January 2014.

- Klyde, D.H.; Schulze, P.C.; Mitchell, D.; Alexandrov, N. Development of a Process to Define Unmanned Aircraft Systems Handling Qualities. In Proceedings of the AIAA Atmospheric Flight Mechanics Conference, Kissimmee, FL, USA, 8–12 January 2018.

- Sanders, F.C.; Tischler, M.; Berger, T.; Berrios, M.G.; Gong, A. System Identification and Multi-Objective Longitudinal Control Law Design for a Small Fixed-Wing UAV. In Proceedings of the AIAA Atmospheric Flight Mechanics Conference, Kissimmee, FL, USA, 8–12 January 2018.

- Abdulrahim, M.; Dee, J.; Thomas, G.; Qualls, G. Handling Qualities and Performance Metrics for First-Person-View Racing Quadrotors. In Proceedings of the AIAA Atmospheric Flight Mechanics Conference, Kissimmee, FL, USA, 8–12 January 2018.

- Herrington, S.M.; Hasan Zahed, M.J.; Fields, T. Pilot Training and Task Based Performance Evaluation of an Unmanned Aerial Vehicle. In Proceedings of the AIAA Scitech 2021 Forum, Online, 11–21 January 2021.

- Ververs, P.M.; Wickens, C.D. Head up displays: Effect of clutter, display intensity and display location of pilot performance. Int. J. Aviat. Psychol. 1998, 8, 377–403.

- Smith, J.K.; Caldwell, J.A. Methodology for Evaluating the Simulator Flight Performance of Pilots; Report No, AFRL-HE-BR-TR-2004-0118; Air Force Research Laboratory: San Antonio, TX, USA, 2004.

- Hanson, C.; Schaefer, J.; Burken, J.J.; Larson, D.; Johnson, M. Complexity and Pilot Workload Metrics for the Evaluation of Adaptive Flight Controls on a Full Scale Piloted Aircraft; Document ID. 20140005730; NASA Dryden Flight Research Center: Edwards, CA, USA, 2014.

- Field, E.J.; Giese, S.E.D. Appraisal of several pilot control activity measures. In Proceedings of the AIAA Atmospheric Flight Mechanics Conference and Exhibit, San Francisco, CA, USA, 15–18 August 2005.

- Zahed, M.J.H.; Fields, T. Evaluation of pilot and quadcopter performance from open-loop mission-oriented flight testing. J. Aerosp. Eng. 2021, 235, 1817–1830.

- Hebbar, P.A.; Pashilkar, A.A. Pilot performance evaluation of simulated flight approach and landing manoeuvres using quantitative assessment tools. Sādhanā Acad. Proc. Eng. Sci. 2017, 42, 405–415.

- Jacoff, A.; Mattson, P. Measuring and Comparing Small Unmanned Aircraft System Capabilities and Remote Pilot Proficiency; National Institute of Standards and Technology, 2020. Available online: https://www.nist.gov/system/files/documents/2020/07/06/NIST%20sUAS%20Test%20Methods%20-%20Introduction%20%282020B1%29.pdf (accessed on 10 June 2024).

- Hoßfeld, T.; Keimel, C.; Hirth, M.; Gardlo, B.; Habigt, J.; Diepold, K.; Tran-Gia, P. Best practices for qoe crowdtesting: Qoe assessment with crowdsourcing. IEEE Trans. Multimed. 2014, 16, 541–558.

- Takahashi, A.; Hands, D.; Barriac, V. Standardization activities in the ITU for a QoE assessment of IPTV. IEEE Commun. Mag. 2008, 46, 78–84.

- Winkler, S.; Mohandas, P. The evolution of video quality measurement: From PSNR to hybrid metrics. IEEE Trans. Broadcast. 2008, 54, 660–668.

- Hulens, D.; Goedeme, T. Autonomous flying cameraman with embedded person detection and tracking while applying cinematographic rules. In Proceedings of the 14th Conference on Computer and Robot Vision (CRV2017), Edmonton, AB, Canada, 16–19 May 2017; pp. 56–63.

- Doroftei, D.; De Cubber, G.; De Smet, H. A quantitative measure for the evaluation of drone-based video quality on a target. In Proceedings of the Eighteenth International Conference on Autonomic and Autonomous Systems (ICAS), Venice, Italy, 22–26 May 2022.

- Deutsch, S. UAV Operator Human Performance Models; BBN Report 8460; Air Force Research Laboratory: Cambridge, MA, USA, 2006.

- Bertuccelli, L.F.; Beckers, N.W.M.; Cummings, M.L. Developing operator models for UAV search scheduling. In Proceedings of the AIAA Guidance, Navigation, and Control Conference, Toronto, ON, Canada, 2–5 August 2010.

- Wu, Y.; Huang, Z.; Li, Y.; Wang, Z. Modeling Multioperator Multi-UAV Operator Attention Allocation Problem Based on Maximizing the Global Reward. IEEE Math. Probl. Eng. 2016, 2016, 1825134.

- Cummings, M.L.; Mitchell, P.J. Predicting Controller Capacity in Supervisory Control of Multiple UAVs. IEEE Trans. Syst. Man Cybern. Part Syst. Hum. 2008, 38, 451–460.

- Golightly, D.; Gamble, C.; Palacin, R.; Pierce, K. Applying ergonomics within the multi-modelling paradigm with an example from multiple UAV control. Ergonomics 2020, 63, 1027–1043.

- Hart, S.G.; Staveland, L.E. Development of NASA-TLX (Task Load Index): Results of Empirical and Theoretical Research. In Human Mental Workload. Advances in Psychology; Hancock, P.A., Meshkati, N., Eds.; North Holland Press: Amsterdam, The Netherlands, 1998; Volume 52, pp. 139–183. Available online: https://ia800504.us.archive.org/28/items/nasa_techdoc_20000004342/20000004342.pdf (accessed on 10 June 2024).

- Andrews, J.M. Human Performance Modeling: Analysis of the Effects of Manned-Unmanned Teaming on Pilot Workload and Mission Performance; Air Force Institute of Technology Theses and Dissertations, 2020. Available online: https://scholar.afit.edu/etd/3225 (accessed on 10 June 2024).

- Wright, J.L.; Lee, J.; Schreck, J.A. Human-autonomy teaming with learning capable agents: Performance and workload outcomes. In Proceedings of the International Conference on Applied Human Factors and Ergonomics, Orlando, FL, USA, 25–29 July 2021.

- Doroftei, D.; De Cubber, G.; De Smet, H. Human factors assessment for drone operations: Towards a virtual drone co-pilot. In Proceedings of the AHFE International Conference on Human Factors in Robots, Drones and Unmanned Systems, Orlando, FL, USA, 26–30 July 2023.

- Sakib, M.N.; Chaspari, T.; Ahn, C.; Behzadan, A. An experimental study of wearable technology and immersive virtual reality for drone operator training. In Proceedings of the 27th International Workshop on Intelligent Computing in Engineering, Vigo, Spain, 3–5 July 2020; pp. 154–163.

- Sakib, M.N. Wearable Technology to Assess the Effectiveness of Virtual Reality Training for Drone Operators. Ph.D. Thesis, Texas A&M University, College Station, TX, USA, 2019.

- Doroftei, D.; De Cubber, G.; De Smet, H. Reducing drone incidents by incorporating human factors in the drone and drone pilot accreditation process. In Proceedings of the AHFE 2020 Virtual Conference on Human Factors in Robots, Drones and Unmanned Systems, Virtual, 16–20 July 2020; pp. 71–77.

- Gupta, S.G.; Ghonge, M.; Jawandhiya, P.M. Review of unmanned aircraft system (UAS). Int. J. Adv. Res. Comput. Eng. Technol. (IJARCET) 2013, 2.

- Hussein, M.; Nouacer, R.; Corradi, F.; Ouhammou, Y.; Villar, E.; Tieri, C.; Castiñeira, R. Key technologies for safe and autonomous drones. Microprocess. Microsyst. 2021, 87, 104348.

- Chandran, N.K.; Sultan, M.T.H.; Łukaszewicz, A.; Shahar, F.S.; Holovatyy, A.; Giernacki, W. Review on Type of Sensors and Detection Method of Anti-Collision System of Unmanned Aerial Vehicle. Sensors 2023, 23, 6810.

- Gupta, A.; Fernando, X. Simultaneous localization and mapping (slam) and data fusion in unmanned aerial vehicles: Recent advances and challenges. Drones 2022, 6, 85.

- Castro, G.G.D.; Berger, G.S.; Cantieri, A.; Teixeira, M.; Lima, J.; Pereira, A.I.; Pinto, M.F. Adaptive path planning for fusing rapidly exploring random trees and deep reinforcement learning in an agriculture dynamic environment UAVs. Agriculture 2023, 13, 354. [Google Scholar] [CrossRef]

- Telli, K.; Kraa, O.; Himeur, Y.; Ouamane, A.; Boumehraz, M.; Atalla, S.; Mansoor, W. A comprehensive review of recent research trends on unmanned aerial vehicles (uavs). Systems 2023, 11, 400. [Google Scholar] [CrossRef]

- Azar, A.T.; Koubaa, A.; Ali Mohamed, N.; Ibrahim, H.A.; Ibrahim, Z.F.; Kazim, M.; Casalino, G. Drone deep reinforcement learning: A review. Electronics 2021, 10, 999. [Google Scholar] [CrossRef]

- Jawaharlalnehru, A.; Sambandham, T.; Sekar, V.; Ravikumar, D.; Loganathan, V.; Kannadasan, R.; Alzamil, Z.S. Target object detection from Unmanned Aerial Vehicle (UAV) images based on improved YOLO algorithm. Electronics 2022, 11, 2343. [Google Scholar] [CrossRef]

- McConville, A.; Bose, L.; Clarke, R.; Mayol-Cuevas, W.; Chen, J.; Greatwood, C.; Richardson, T. Visual odometry using pixel processor arrays for unmanned aerial systems in gps denied environments. Front. Robot. AI 2020, 7, 126. [Google Scholar] [CrossRef]

- van de Merwe, K.; Mallam, S.; Nazir, S. Agent transparency, situation awareness, mental workload, and operator performance: A systematic literature review. Hum. Factors 2024, 66, 180–208.

- Woodward, J.; Ruiz, J. Analytic review of using augmented reality for situational awareness. IEEE Trans. Vis. Comput. Graph. 2022, 29, 2166–2183.

- Van Baelen, D.; Ellerbroek, J.; Van Paassen, M.M.; Mulder, M. Design of a haptic feedback system for flight envelope protection. J. Guid. Control. Dyn. 2020, 43, 700–714.

- Dutrannois, T.; Nguyen, T.-T.; Hamesse, C.; De Cubber, G.; Janssens, B. Visual SLAM for Autonomous Drone Landing on a Maritime Platform. In Proceedings of the International Symposium for Measurement and Control in Robotics (ISMCR)—A Topical Event of Technical Committee on Measurement and Control of Robotics (TC17), International Measurement Confederation (IMEKO), Houston, TX, USA, 2–30 September 2022.

- Papyan, N.; Kulhandjian, M.; Kulhandjian, H.; Aslanyan, L. AI-Based Drone Assisted Human Rescue in Disaster Environments: Challenges and Opportunities. Pattern Recognit. Image Anal. 2024, 34, 169–186.

- Weber, U.; Attinger, S.; Baschek, B.; Boike, J.; Borchardt, D.; Brix, H.; Brüggemann, N.; Bussmann, I.; Dietrich, P.; Fischer, P.; et al. MOSES: A novel observation system to monitor dynamic events across Earth compartments. Bull. Am. Meteorol. Soc. 2022, 103, 339–348.

- Ramos, M.A.; Sankaran, K.; Guarro, S.; Mosleh, A.; Ramezani, R.; Arjounilla, A. The need for and conceptual design of an AI model-based Integrated Flight Advisory System. J. Risk Reliab. 2023, 237, 485–507.

- Doroftei, D.; De Cubber, G.; De Smet, H. Assessing Human Factors for Drone Operations in a Simulation Environment. In Proceedings of the Human Factors in Robots, Drones and Unmanned Systems—AHFE (2022) International Conference, New York, NY, USA, 24–28 July 2022.

- Doroftei, D.; De Smet, H. Evaluating Human Factors for Drone Operations using Simulations and Standardized Tests. In Proceedings of the 10th International Conference on Applied Human Factors and Ergonomics (AHFE 2019), Washington, DC, USA, 24–28 July 2019.

- Meier, L.; Honegger, D.; Pollefeys, M. PX4: A node-based multithreaded open source robotics framework for deeply embedded platforms. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 6235–6240.

- Koubâa, A.; Allouch, A.; Alajlan, M.; Javed, Y.; Belghith, A.; Khalgui, M. Micro Air Vehicle Link (MAVlink) in a Nutshell: A Survey. IEEE Access 2019, 7, 87658–87680.

- mavp2p. Available online: https://github.com/bluenviron/mavp2p (accessed on 3 July 2024).

- Garinther, G.R.; Kalb, J.T.; Hodge, D.C.; Price, G.R. Proposed Aural Non-Detectability Limits for Army Materiel; U.S. Army Human Engineering Laboratory: Adelphi, MD, USA, 1985; Available online: https://apps.dtic.mil/sti/citations/ADA156704 (accessed on 10 June 2024).

- Doroftei, D.; De Cubber, G. Using a qualitative and quantitative validation methodology to evaluate a drone detection system. Acta IMEKO 2019, 8, 20–27.

- Ramirez-Atencia, C.; Camacho, D. Extending QGroundControl for automated mission planning of UAVs. Sensors 2018, 18, 2339.

- De Cubber, G.; Berrabah, S.A.; Sahli, H. Color-based visual servoing under varying illumination conditions. Robot. Auton. Syst. 2004, 47, 225–249.

- De Cubber, G.; Marton, G. Human victim detection. In Proceedings of the Third International Workshop on Robotics for Risky Interventions and Environmental Surveillance-Maintenance, RISE, Brussels, Belgium, 12–14 January 2009.

- Marques, J.S.; Bernardino, A.; Cruz, G.; Bento, M. An algorithm for the detection of vessels in aerial images. In Proceedings of the 11th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Seoul, Republic of Korea, 26–29 August 2014; pp. 295–300.

- De Cubber, G.; Shalom, R.; Coluccia, A.; Borcan, O.; Chamrád, R.; Radulescu, T.; Izquierdo, E.; Gagov, Z. The SafeShore system for the detection of threat agents in a maritime border environment. In IARP Workshop on Risky Interventions and Environmental Surveillance. 2017, Volume 2. Available online: https://www.researchgate.net/profile/Geert-De-Cubber/publication/331258980_The_SafeShore_system_for_the_detection_of_threat_agents_in_a_maritime_border_environment/links/5c6ed38b458515831f650359/The-SafeShore-system-for-the-detection-of-threat-agents-in-a-maritime-border-environment.pdf (accessed on 10 June 2024).

- Johnson, J. Analysis of image forming systems. In Proceedings of the Image Intensifier Symposium, Fort Belvoir, VA, USA, 6–7 October 1958; pp. 244–273.

- Doroftei, D.; De Vleeschauwer, T.; Lo Bue, S.; Dewyn, M.; Vanderstraeten, F.; De Cubber, G. Human-Agent Trust Evaluation in a Digital Twin Context. In Proceedings of the 30th IEEE International Conference on Robot Human Interactive Communication (RO-MAN), Vancouver, BC, Canada, 8–12 August 2021; pp. 203–207.

- Doroftei, D.; De Cubber, G.; Wagemans, R.; Matos, A.; Silva, E.; Lobo, V.; Guerreiro Cardoso, K.C.; Govindaraj, S.; Gancet, J.; Serrano, D. User-centered design. In Search and Rescue Robotics: From Theory to Practice; De Cubber, G., Doroftei, D., Eds.; IntechOpen: London, UK, 2017; pp. 19–36.

- Holmes, T.H.; Rahe, R.H. The Social Readjustment Rating Scale. J. Psychosom. Res. 1967, 11, 213–218.

- Bishop, C.M. Pattern Recognition and Machine Learning; Springer: New York, NY, USA, 2006.

- Kingma, D.; Ba, J. Adam: A method for stochastic optimization. In Proceedings of the 3rd International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015.

- Alexis, K.; Nikolakopoulos, G.; Tzes, A. Model predictive quadrotor control: Attitude, altitude and position experimental studies. IET Control Theory Appl. 2012, 6, 1812–1827.

- Szolc, H.; Kryjak, T. Hardware-in-the-loop simulation of a UAV autonomous landing algorithm implemented in SoC FPGA. In Proceedings of the 2022 Signal Processing: Algorithms, Architectures, Arrangements, and Applications, Poznan, Poland, 21–22 September 2022; pp. 135–140.

- European Commission. Commission Implementing Regulation (EU) 2019/947 of 24 May 2019 on the Rules and Procedures for the Operation of Unmanned Aircraft; European Commission: Brussels, Belgium, 2019.

| Human Factor | Importance Level (0–100%) |
|---|---|
| Task Difficulty | 89% |
| Pilot Position | 83% |
| Pilot Stress | 83% |
| Pilot Fatigue | 83% |
| Pressure | 83% |
| Pilot subjected to water or humidity | 83% |
| Pilot subjected to temperature changes | 78% |
| Information location & organization & formatting of the controller display | 78% |
| Task Complexity | 78% |
| Task Duration | 78% |
| Pilot subjected to low quality breathing air | 72% |
| Pilot subjected to small body clearance | 72% |
| Ease-of-use of the controller | 72% |
| Pilot subjected to noise/dust/vibrations | 67% |
| Task Type | 67% |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Doroftei, D.; De Cubber, G.; Lo Bue, S.; De Smet, H. Quantitative Assessment of Drone Pilot Performance. Drones 2024, 8, 482. https://doi.org/10.3390/drones8090482