Unoccupied-Aerial-Systems-Based Biophysical Analysis of Montmorency Cherry Orchards: A Comparative Study

Abstract

1. Introduction

- How accurately can an RTK-enabled UAS evaluate Montmorency cherry tree height in a sloped cherry orchard?

- Which of the three UAS imagery processing software packages (DroneDeploy, Drone2Map, and Pix4D) produces the most accurate product, and are the differences among them statistically significant?

- How well can RGB-based indices predict Montmorency cherry tree LAI?

2. Materials and Methods

2.1. Study Area

2.2. Field Data Collection

2.3. UAS Data Collection

2.4. Data Processing

2.4.1. Pix4D

2.4.2. Drone2Map

2.4.3. DroneDeploy

2.4.4. Comparison

2.5. Biophysical Characteristics Estimation

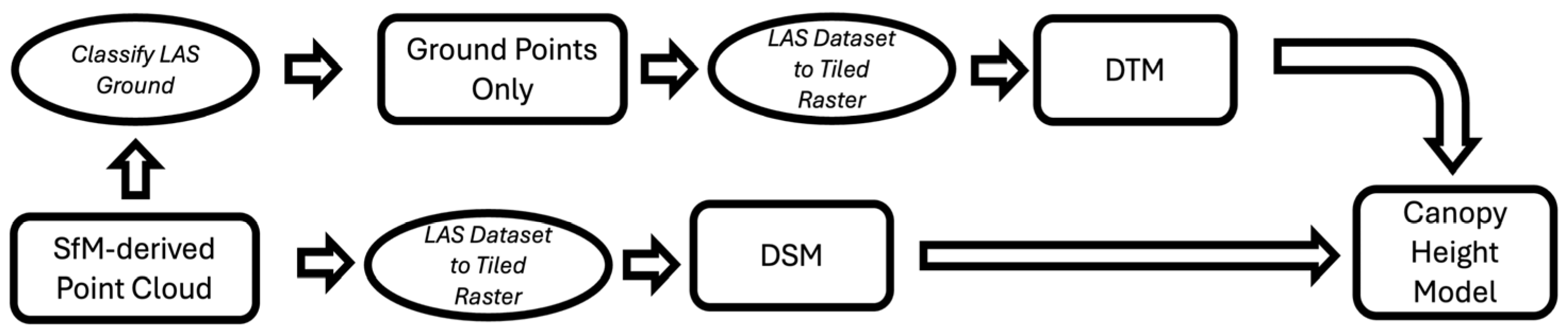

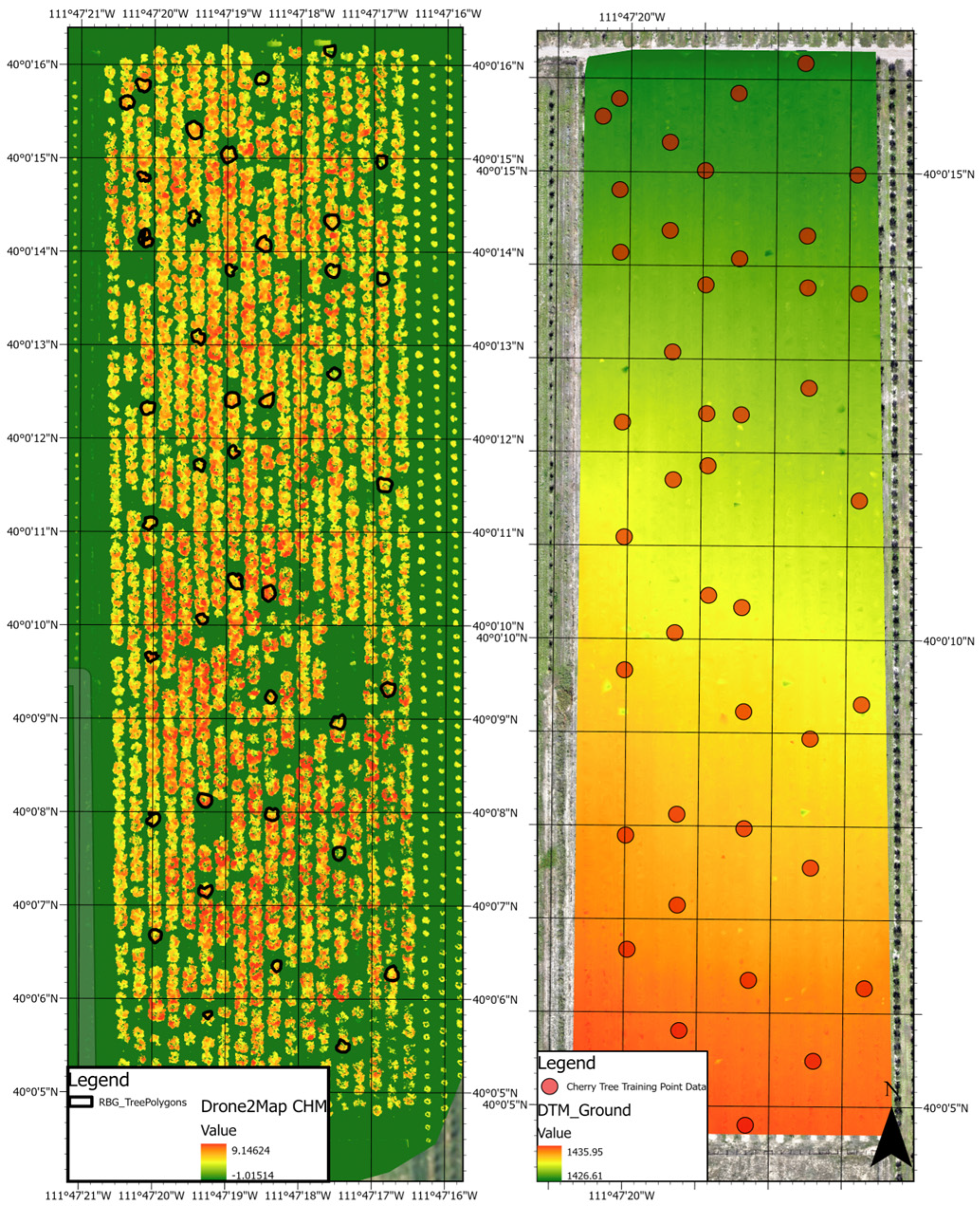

2.5.1. Canopy Height Modeling

2.5.2. Modeling LAI

3. Results

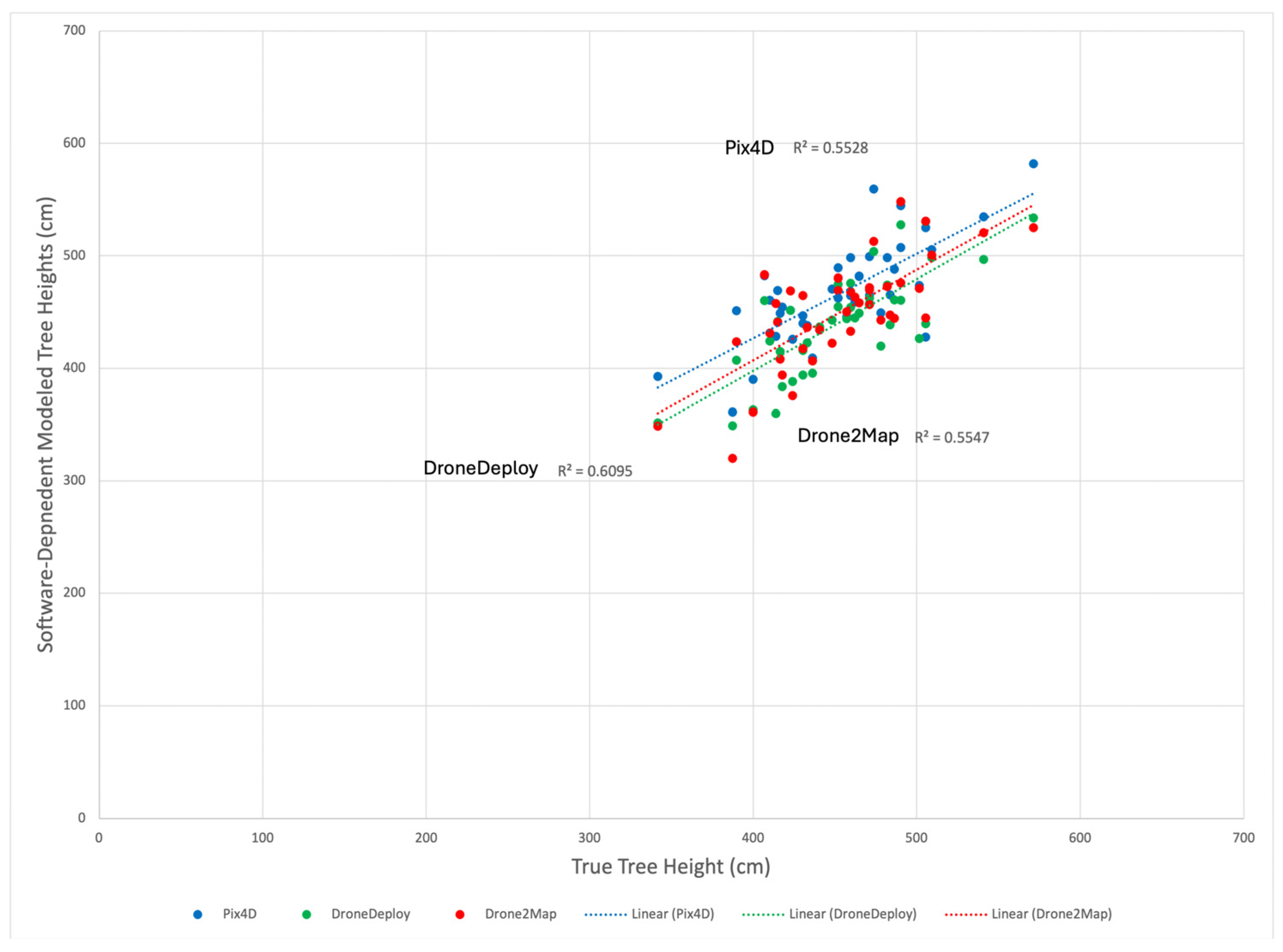

3.1. Modeled Tree Heights

3.2. LAI Modeling with RGB-Based Vegetation Indices

4. Discussion

5. Conclusions


- RTK-enabled UAS showed promising results for estimating Montmorency cherry tree height in a sloped orchard. Modeled heights had RMSE values of approximately 32 to 34 cm across the three processing packages. Tree height information at this level of accuracy is valuable for optimizing yield and managing orchard health.


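- The three UAS imagery processing software packages tested (DroneDeploy, Drone2Map, and Pix4D) achieved similar accuracy, with RMSE values ranging from 31.83 cm (DroneDeploy) to 34.03 cm (Pix4D). Pairwise comparisons showed a statistically significant difference only between DroneDeploy and Pix4D (two-sided p = 0.013); the DroneDeploy–Drone2Map (p = 0.410) and Drone2Map–Pix4D (p = 0.101) differences were not significant. DroneDeploy's cloud-based processing produced the lowest error and emerged as the most reliable option for height mapping in cherry orchards.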


- The study explored how effectively RGB-based vegetation indices predict the leaf area index (LAI) of Montmorency cherry trees. Although NIR-based indices such as NDVI have shown strong correlations with LAI in previous studies, the RGB-based indices tested here did not correlate significantly with measured LAI.


- Improving the accuracy of RGB-based vegetation indices and strengthening their relationship with LAI.


- Comparing on-board RTK receivers with post-flight georeferencing using ground control points (GCPs) in an orchard environment.


- Developing standardized protocols for UAS data collection and processing to produce accurate, consistent tree canopy height data.
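The LAI conclusion rests on testing whether each RGB index is correlated with field-measured LAI. The specific test is not described in this section, so the following is only a minimal Python sketch under stated assumptions: it computes a Pearson correlation between per-tree index values and LAI and estimates a two-sided p-value by permutation, which avoids needing a t-distribution. The function names `pearson_r` and `correlation_permutation_test` and all inputs are hypothetical.

```python
import math
import random
from typing import Sequence, Tuple


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length samples."""
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need two samples of equal length (n >= 3)")
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return sxy / math.sqrt(sxx * syy)


def correlation_permutation_test(
    index_values: Sequence[float],
    lai: Sequence[float],
    n_perm: int = 10_000,
    seed: int = 0,
) -> Tuple[float, float]:
    """Return (r, two-sided permutation p-value) for an index vs. LAI.

    Under the null hypothesis of no association, shuffling LAI across trees
    should often yield correlations as strong as the observed one; a small
    p-value means that rarely happens.
    """
    r_obs = pearson_r(index_values, lai)
    rng = random.Random(seed)  # fixed seed for reproducibility
    shuffled = list(lai)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)
        # Small tolerance so floating-point noise does not hide exact ties.
        if abs(pearson_r(index_values, shuffled)) >= abs(r_obs) - 1e-12:
            hits += 1
    # Add-one correction keeps the estimated p-value strictly positive.
    return r_obs, (hits + 1) / (n_perm + 1)
```

The function would be called once per index, pairing each tree's index value with its field LAI. `scipy.stats.pearsonr` is a standard parametric alternative. Because many indices are screened at once, a correction for multiple comparisons is also worth considering.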

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Weiss, M.; Jacob, F.; Duveiller, G. Remote sensing for agricultural applications: A meta-review. Remote Sens. Environ. 2020, 236, 111402.

2. Wik, M.; Pingali, P.; Broca, S. Global Agricultural Performance: Past Trends and Future Prospects; World Bank: Washington, DC, USA, 2008.

3. Altieri, M.A. Agroecology: The Science of Sustainable Agriculture, 2nd ed.; Westview Press, Inc.: Boulder, CO, USA, 1995.

4. Berger, K.; Machwitz, M.; Kycko, M.; Kefauver, S.C.; Van Wittenberghe, S.; Gerhards, M.; Verrelst, J.; Atzberger, C.; van der Tol, C.; Damm, A.; et al. Multi-sensor spectral synergies for crop stress detection and monitoring in the optical domain: A review. Remote Sens. Environ. 2022, 280, 113198.

5. Maes, W.H.; Steppe, K. Perspectives for remote sensing with unmanned aerial vehicles in precision agriculture. Trends Plant Sci. 2019, 24, 152–164.

6. Omia, E.; Bae, H.; Park, E.; Kim, M.S.; Baek, I.; Kabenge, I.; Cho, B. Remote sensing in field crop monitoring: A comprehensive review of sensor systems, data analyses and recent advances. Remote Sens. 2023, 15, 354.

7. Sishodia, R.P.; Shukla, S.; Graham, W.D.; Wani, S.P.; Jones, J.W.; Heaney, J. Current and future groundwater withdrawals: Effects, management, and energy policy options for a semi-arid Indian watershed. Adv. Water Resour. 2017, 110, 459.

8. Przybilla, H.J.; Wester-Ebbinghaus, W. Aerial photos by means of radio-controlled aircraft. Bildmess. Luftbildwes. 1979, 47, 137–142.

9. Gebbers, R.; Adamchuk, V.I. Precision agriculture and food security. Science 2010, 327, 828–831.

10. Mulla, D.J. Twenty-five years of remote sensing in precision agriculture: Key advances and remaining knowledge gaps. Biosyst. Eng. 2012, 114, 358.

11. Sun, G.; Wang, X.; Ding, Y.; Lu, W.; Sun, Y. Remote measurement of apple orchard canopy information using unmanned aerial vehicle photogrammetry. Agronomy 2019, 9, 774.

12. Shahi, T.B.; Xu, C.; Neupane, A.; Guo, W. Recent advances in crop disease detection using UAV and deep learning techniques. Remote Sens. 2023, 15, 2450.

13. Velten, S.; Leventon, J.; Jager, N.; Newig, J. What is sustainable agriculture? A systematic review. Sustainability 2015, 7, 7833–7865.

14. Tsouros, D.C.; Bibi, S.; Sarigiannidis, P.G. A review on UAV-based applications for Precision Agriculture. Information 2019, 10, 349.

15. Velusamy, P.; Rajendran, S.; Mahendran, R.K.; Naseer, S.; Shafiq, M.; Choi, J.-G. Unmanned Aerial Vehicles (UAV) in precision agriculture: Applications and challenges. Energies 2021, 15, 217.

16. Delavarpour, N.; Koparan, C.; Nowatzki, J.; Bajwa, S.; Sun, X. A technical study on UAV characteristics for precision agriculture applications and associated practical challenges. Remote Sens. 2021, 13, 1204.

17. Singh, K.K.; Frazier, A.E. A meta-analysis and review of Unmanned Aircraft System (UAS) imagery for terrestrial applications. Int. J. Remote Sens. 2018, 39, 5078–5098.

18. Simpson, J.E.; Holman, F.; Nieto, H.; Voelksch, I.; Mauder, M.; Klatt, J.; Fiener, P.; Kaplan, J.O. High spatial and temporal resolution energy flux mapping of different land covers using an off-the-shelf unmanned aerial system. Remote Sens. 2021, 13, 1286.

19. Avneri, A.; Aharon, S.; Brook, A.; Atsmon, G.; Smirnov, E.; Sadeh, R.; Abbo, S.; Peleg, Z.; Herrmann, I.; Bonfil, D.J.; et al. UAS-based imaging for prediction of chickpea crop biophysical parameters and yield. Comput. Electron. Agric. 2023, 205, 107581.

20. Lu, B.; He, Y.; Liu, H.H. Mapping vegetation biophysical and biochemical properties using unmanned aerial vehicles-acquired imagery. Int. J. Remote Sens. 2017, 39, 5265–5287.

21. dos Santos, R.A.; Filgueiras, R.; Mantovani, E.C.; Fernandes-Filho, E.I.; Almeida, T.S.; Venancio, L.P.; da Silva, A.C. Surface reflectance calculation and predictive models of biophysical parameters of maize crop from RG-NIR sensor on board a UAV. Precis. Agric. 2021, 22, 1535–1558.

22. Maimaitijiang, M.; Ghulam, A.; Sidike, P.; Hartling, S.; Maimaitiyiming, M.; Peterson, K.; Shavers, E.; Fishman, J.; Peterson, J.; Kadam, S.; et al. Unmanned Aerial System (UAS)-based phenotyping of soybean using multi-sensor data fusion and Extreme Learning Machine. ISPRS J. Photogramm. Remote Sens. 2017, 134, 43–58.

23. Hassani, K.; Gholizadeh, H.; Taghvaeian, S.; Natalie, V.; Carpenter, J.; Jacob, J. Application of UAS-based remote sensing in estimating winter wheat phenotypic traits and yield during the growing season. PFG–J. Photogramm. Remote Sens. Geoinf. Sci. 2023, 91, 77–90.

24. Pazhanivelan, S.; Kumaraperumal, R.; Shanmugapriya, P.; Sudarmanian, N.S.; Sivamurugan, A.P.; Satheesh, S. Quantification of biophysical parameters and economic yield in cotton and rice using drone technology. Agriculture 2023, 13, 1668.

25. Zhang, C.; Valente, J.; Kooistra, L.; Guo, L.; Wang, W. Orchard management with small unmanned aerial vehicles: A survey of sensing and analysis approaches. Precis. Agric. 2021, 22, 2007–2052.

26. Guimarães, N.; Sousa, J.J.; Pádua, L.; Bento, A.; Couto, P. Remote sensing applications in almond orchards: A comprehensive systematic review of current insights, research gaps, and future prospects. Appl. Sci. 2024, 14, 1749.

27. Liu, Z.; Guo, P.; Liu, H.; Fan, P.; Zeng, P.; Liu, X.; Feng, C.; Wang, W.; Yang, F. Gradient boosting estimation of the leaf area index of apple orchards in UAV remote sensing. Remote Sens. 2021, 13, 3263.

28. Vinci, A.; Brigante, R.; Traini, C.; Farinelli, D. Geometrical characterization of hazelnut trees in an intensive orchard by an unmanned aerial vehicle (UAV) for precision agriculture applications. Remote Sens. 2023, 15, 541.

29. Kovanič, Ľ.; Blistan, P.; Urban, R.; Štroner, M.; Blišťanová, M.; Bartoš, K.; Pukanská, K. Analysis of the suitability of high-resolution DEM obtained using ALS and UAS (SfM) for the identification of changes and monitoring the development of selected Geohazards in the alpine environment—A case study in High Tatras, Slovakia. Remote Sens. 2020, 12, 3901.

30. Sturdivant, E.; Lentz, E.; Thieler, E.R.; Farris, A.; Weber, K.; Remsen, D.; Miner, S.; Henderson, R. UAS-SfM for coastal research: Geomorphic feature extraction and land cover classification from high-resolution elevation and optical imagery. Remote Sens. 2017, 9, 1020.

31. Hobart, M.; Pflanz, M.; Weltzien, C.; Schirrmann, M. Growth height determination of tree walls for precise monitoring in Apple Fruit production using UAV photogrammetry. Remote Sens. 2020, 12, 1656.

32. Tu, Y.-H.; Johansen, K.; Phinn, S.; Robson, A. Measuring canopy structure and condition using multi-spectral UAS imagery in a horticultural environment. Remote Sens. 2019, 11, 269.

33. Hadas, E.; Jozkow, G.; Walicka, A.; Borkowski, A. Apple Orchard Inventory with a LiDAR equipped unmanned aerial system. Int. J. Appl. Earth Obs. Geoinf. 2019, 82, 101911.

34. Jonckheere, I.; Fleck, S.; Nackaerts, K.; Muys, B.; Coppin, P.; Weiss, M.; Baret, F. Review of methods for in situ leaf area index determination: Part I. Theories, sensors and hemispherical photography. Agric. For. Meteorol. 2004, 121, 19–35.

35. Garrigues, S.; Lacaze, R.; Baret, F.; Morisette, J.T.; Weiss, M.; Nickeson, J.E.; Fernandes, R.; Plummer, S.; Shabanov, N.V.; Myneni, R.B.; et al. Validation and intercomparison of global Leaf Area Index products derived from remote sensing data. J. Geophys. Res. Biogeosci. 2008, 113, G02028.

36. Gower, S.T.; Kucharik, C.J.; Norman, J.M. Direct and indirect estimation of leaf area index, fAPAR, and net primary production of terrestrial ecosystems. Remote Sens. Environ. 1999, 70, 29–51.

37. Yamaguchi, T.; Tanaka, Y.; Imachi, Y.; Yamashita, M.; Katsura, K. Feasibility of combining deep learning and RGB images obtained by unmanned aerial vehicle for leaf area index estimation in Rice. Remote Sens. 2020, 13, 84.

38. Bajocco, S.; Ginaldi, F.; Savian, F.; Morelli, D.; Scaglione, M.; Fanchini, D.; Raparelli, E.; Bregaglio, S.U. On the use of NDVI to estimate LAI in field crops: Implementing a conversion equation library. Remote Sens. 2022, 14, 3554.

39. Morgan, G.R.; Wang, C.; Morris, J.T. RGB indices and canopy height modelling for mapping tidal marsh biomass from a small unmanned aerial system. Remote Sens. 2021, 13, 3406.

40. Gracia-Romero, A.; Kefauver, S.C.; Vergara-Díaz, O.; Zaman-Allah, M.A.; Prasanna, B.M.; Cairns, J.E.; Araus, J.L. Comparative performance of ground vs. aerially assessed RGB and multispectral indices for early-growth evaluation of maize performance under phosphorus fertilization. Front. Plant Sci. 2017, 8, 2004.

41. Lussem, U.; Bolten, A.; Gnyp, M.L.; Jasper, J.; Bareth, G. Evaluation of RGB-based vegetation indices from UAV imagery to estimate forage yield in grassland. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, XLII–3, 1215–1219.

42. Mavic 3 Multispectral Edition-See More, Work Smarter–DJI Agricultural Drones. Available online: https://ag.dji.com/mavic-3-m (accessed on 31 August 2024).

43. Ekaso, D.; Nex, F.; Kerle, N. Accuracy assessment of real-time kinematics (RTK) measurements on unmanned aerial vehicles (UAV) for direct geo-referencing. Geo-Spat. Inf. Sci. 2020, 23, 165–181.

44. PIX4Dmapper: Professional Photogrammetry Software for Drone Mapping. Available online: https://www.pix4d.com/product/pix4dmapper-photogrammetry-software/ (accessed on 19 August 2024).

45. GIS Drone Mapping: 2D & 3D Photogrammetry: Arcgis Drone2Map. Available online: https://www.esri.com/en-us/arcgis/products/arcgis-drone2map/overview?srsltid=AfmBOopDU0gPkXGwXiF1wQAM3CmAFwKI4Bmj3mHGGF_RWPudJyYyp5e7#2d-photogrammetry (accessed on 31 August 2024).

46. Reality Capture: Drone Mapping Software: Photo Documentation. Available online: https://www.dronedeploy.com/ (accessed on 31 August 2024).

47. Jing, R.; Gong, Z.; Zhao, W.; Pu, R.; Deng, L. Above-bottom biomass retrieval of aquatic plants with regression models and SfM data acquired by a UAV platform—A case study in Wild Duck Lake Wetland, Beijing, China. ISPRS J. Photogramm. Remote Sens. 2017, 134, 122–134.

48. Yue, J.; Yang, G.; Li, C.; Li, Z.; Wang, Y.; Feng, H.; Xu, B. Estimation of Winter Wheat Above-Ground Biomass Using Unmanned Aerial Vehicle-Based Snapshot Hyperspectral Sensor and Crop Height Improved Models. Remote Sens. 2017, 9, 708.

49. Maimaitijiang, M.; Sagan, V.; Sidike, P.; Maimaitiyiming, M.; Hartling, S.; Peterson, K.T.; Maw, M.J.W.; Shakoor, N.; Mockler, T.; Fritschi, F.B. Vegetation index weighted canopy volume model (CVMVI) for soybean biomass estimation from unmanned aerial system-based RGB imagery. ISPRS J. Photogramm. Remote Sens. 2019, 151, 27–41.

50. Cen, H.; Wan, L.; Zhu, J.; Li, Y.; Li, X.; Zhu, Y.; Weng, H.; Wu, W.; Yin, W.; Xu, C.; et al. Dynamic monitoring of biomass of rice under different nitrogen treatments using a lightweight UAV with dual image-frame snapshot cameras. Plant Methods 2019, 15, 32.

51. Possoch, M.; Bieker, S.; Hoffmeister, D.; Bolten, A.; Schellberg, J.; Bareth, G. Multi-temporal crop surface models combined with the RGB vegetation index from UAV-based images for forage monitoring in grassland. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, XLI-B1, 991–998.

52. Michez, A.; Bauwens, S.; Brostaux, Y.; Hiel, M.-P.; Garré, S.; Lejeune, P.; Dumont, B. How far can consumer-grade UAV RGB imagery describe crop production? A 3D and multitemporal modeling approach applied to Zea Mays. Remote Sens. 2018, 10, 1798.

53. Sunoj, S.; Yeh, B.; Marcaida, M., III; Longchamps, L.; van Aardt, J.; Ketterings, Q.M. Maize grain and silage yield prediction of commercial fields using high-resolution UAS imagery. Biosyst. Eng. 2023, 235, 137–149.

54. Mo, J.; Lan, Y.; Yang, D.; Wen, F.; Qiu, H.; Chen, X.; Deng, X. Deep learning-based instance segmentation method of litchi canopy from UAV-acquired images. Remote Sens. 2021, 13, 3919.

55. Qin, H.; Zhou, W.; Yao, Y.; Wang, W. Individual tree segmentation and tree species classification in subtropical broadleaf forests using UAV-based LiDAR, hyperspectral, and ultrahigh-resolution RGB data. Remote Sens. Environ. 2022, 280, 113143.

56. Zarco-Tejada, P.J.; Diaz-Varela, R.; Angileri, V.; Loudjani, P. Tree height quantification using very high-resolution imagery acquired from an unmanned aerial vehicle (UAV) and automatic 3D photo-reconstruction methods. Eur. J. Agron. 2014, 55, 89–99.

57. Morgan, G.; Hodgson, M.E.; Wang, C. Using sUAS-derived point cloud to supplement lidar returns for improved canopy height model on earthen dams. Pap. Appl. Geogr. 2020, 6, 436–448.

58. Wallace, L.; Lucieer, A.; Malenovský, Z.; Turner, D.; Vopěnka, P. Assessment of forest structure using two UAV techniques: A comparison of airborne laser scanning and structure from motion (SfM) point clouds. Forests 2016, 7, 62.

59. Ganz, S.; Käber, Y.; Adler, P. Measuring tree height with remote sensing—A comparison of photogrammetric and LiDAR data with different field measurements. Forests 2019, 10, 694.

60. Gallardo-Salazar, J.L.; Pompa-García, M. Detecting individual tree attributes and multispectral indices using unmanned aerial vehicles: Applications in a Pine Clonal Orchard. Remote Sens. 2020, 12, 4144.

61. Torres-Sánchez, J.; de Castro, A.I.; Peña, J.M.; Jiménez-Brenes, F.M.; Arquero, O.; Lovera, M.; López-Granados, F. Mapping the 3D structure of almond trees using UAV acquired photogrammetric point clouds and object-based image analysis. Biosyst. Eng. 2018, 176, 172–184.

62. Kaimaris, D.; Patias, P.; Sifnaiou, M. UAV and the comparison of image processing software. Int. J. Intell. Unmanned Syst. 2017, 5, 18–27.

63. Kim, T.H.; Lee, Y.C. Comparison of Open Source based Algorithms and Filtering Methods for UAS Image Processing. J. Cadastre Land InformatiX 2020, 50, 155–168.

64. Alidoost, F.; Arefi, H. Comparison of UAS-based photogrammetry software for 3D point cloud generation: A survey over a historical site. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, IV-4/W4, 55–61.

65. Fraser, B.T.; Congalton, R.G. Issues in unmanned aerial systems (UAS) data collection of Complex Forest Environments. Remote Sens. 2018, 10, 908.

66. Li, H.; Yan, X.; Su, P.; Su, Y.; Li, J.; Xu, Z.; Gao, C.; Zhao, Y.; Feng, M.; Shafiq, F.; et al. Estimation of winter wheat LAI based on color indices and texture features of RGB images taken by UAV. J. Sci. Food Agric. 2024.

67. Raj, R.; Walker, J.P.; Pingale, R.; Nandan, R.; Naik, B.; Jagarlapudi, A. Leaf area index estimation using top-of-canopy airborne RGB images. Int. J. Appl. Earth Obs. Geoinf. 2021, 96, 102282.

68. Triana Martinez, J.; De Swaef, T.; Borra-Serrano, I.; Lootens, P.; Barrero, O.; Fernandez-Gallego, J.A. Comparative leaf area index estimation using multispectral and RGB images from a UAV platform. In Proceedings of the Autonomous Air and Ground Sensing Systems for Agricultural Optimization and Phenotyping VIII, Orlando, FL, USA, 1–2 May 2023.

| RGB-Based Index | Equation | Source |
|---|---|---|
| ExG | 2 × G − R − B | [47] |
| GCC or Green Ratio | G/(R + G + B) | [48] |
| IKAW | (R − B)/(R + B) | [49] |
| MGRVI | (G² − R²)/(G² + R²) | [50] |
| MVARI | (G − B)/(G + R − B) | [50] |
| RGBVI | (G² − B × R)/(G² + B × R) | [51] |
| TGI | G − (0.39 × R) − (0.61 × B) | [52] |
| VARI | (G − R)/(G + R − B) | [50] |
| VDVI or GLA | (2 × G − R − B)/(2 × G + R + B) | [50] |
| Red–Blue Ratio Index (simple ratio) | R/B | [53] |
| Green–Blue Ratio Index (simple ratio) | G/B | [53] |
| Green–Red Ratio Index (simple ratio) | G/R | [53] |
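The index equations in the table translate directly into code. The following Python sketch computes all twelve indices from red, green, and blue values. It assumes the inputs are non-negative values on a common scale (for example, mean band values for one tree crown), and the keys `RBRI`, `GBRI`, and `GRRI` for the three simple ratios are hypothetical labels, not names used in the source.

```python
import math
from typing import Dict


def _safe_div(num: float, den: float) -> float:
    """Divide, returning NaN instead of raising when the denominator is 0."""
    return num / den if den != 0 else math.nan


def rgb_indices(r: float, g: float, b: float) -> Dict[str, float]:
    """Compute the RGB-based vegetation indices listed in the table.

    r, g, b: red, green, and blue values on a common scale (for example
    0-255 digital numbers or 0-1 reflectance). No radiometric calibration
    is performed here.
    """
    return {
        "ExG": 2 * g - r - b,
        "GCC": _safe_div(g, r + g + b),
        "IKAW": _safe_div(r - b, r + b),
        "MGRVI": _safe_div(g**2 - r**2, g**2 + r**2),
        "MVARI": _safe_div(g - b, g + r - b),
        "RGBVI": _safe_div(g**2 - b * r, g**2 + b * r),
        "TGI": g - 0.39 * r - 0.61 * b,
        "VARI": _safe_div(g - r, g + r - b),
        "VDVI": _safe_div(2 * g - r - b, 2 * g + r + b),
        "RBRI": _safe_div(r, b),  # hypothetical key: red-blue simple ratio
        "GBRI": _safe_div(g, b),  # hypothetical key: green-blue simple ratio
        "GRRI": _safe_div(g, r),  # hypothetical key: green-red simple ratio
    }


if __name__ == "__main__":
    for name, value in rgb_indices(120, 160, 80).items():
        print(f"{name}: {value:.4f}")
```

ExG and TGI scale with the input values, whereas the ratio-based indices are unchanged when all three bands are scaled by the same factor, so values from different radiometric scales are only comparable for the latter. Applying the function to crown-averaged bands and averaging per-pixel index values are two different choices, and for the nonlinear indices they generally give different results.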

| | DroneDeploy | Drone2Map | Pix4D |
|---|---|---|---|
| RMSE | 31.83 cm | 32.66 cm | 34.03 cm |
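RMSE summarizes how far UAS-modeled tree heights fall from field-measured heights. A minimal Python sketch follows, assuming paired per-tree heights in meters; the function names and example values are hypothetical, and multiplying by 100 gives centimeters as in the table. A signed mean error is included because RMSE alone does not reveal whether heights are systematically under- or overestimated.

```python
import math
from typing import Sequence


def rmse(modeled: Sequence[float], observed: Sequence[float]) -> float:
    """Root-mean-square error between paired modeled and observed values."""
    if len(modeled) != len(observed) or len(modeled) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(m - o) ** 2 for m, o in zip(modeled, observed)]
    return math.sqrt(sum(squared) / len(squared))


def mean_error(modeled: Sequence[float], observed: Sequence[float]) -> float:
    """Mean signed error (bias); negative values indicate underestimation."""
    if len(modeled) != len(observed) or len(modeled) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(m - o for m, o in zip(modeled, observed)) / len(modeled)


if __name__ == "__main__":
    # Hypothetical per-tree heights in meters (not data from the study).
    field_m = [3.10, 2.85, 3.40, 2.95]
    uas_m = [2.80, 2.60, 3.05, 2.70]
    print(f"RMSE: {rmse(uas_m, field_m) * 100:.1f} cm")
    print(f"Bias: {mean_error(uas_m, field_m) * 100:.1f} cm")
```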

| Comparison | DroneDeploy–Drone2Map | Drone2Map–Pix4D | DroneDeploy–Pix4D |
|---|---|---|---|
| Two-sided p-value | 0.410 | 0.101 | 0.013¹ |
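The table reports two-sided p-values for each pair of packages, but the statistical test is not described in this section. As one illustration only, the sketch below implements a paired sign-flip permutation test on per-tree absolute height errors, assuming every package was evaluated on the same set of trees. It is not necessarily the test the authors used, and the function name and inputs are hypothetical.

```python
import random
from typing import Sequence


def paired_permutation_pvalue(
    errors_a: Sequence[float],
    errors_b: Sequence[float],
    n_perm: int = 10_000,
    seed: int = 0,
) -> float:
    """Two-sided paired sign-flip permutation test.

    errors_a, errors_b: per-tree height errors (modeled minus field) from two
    processing packages, in the same tree order. The statistic is the mean
    difference in absolute error; under the null hypothesis the sign of each
    per-tree difference is arbitrary, so signs are flipped at random.
    """
    if len(errors_a) != len(errors_b) or len(errors_a) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    diffs = [abs(a) - abs(b) for a, b in zip(errors_a, errors_b)]
    n = len(diffs)
    observed = abs(sum(diffs)) / n
    rng = random.Random(seed)  # fixed seed for reproducibility
    hits = 0
    for _ in range(n_perm):
        flipped = sum(d if rng.random() < 0.5 else -d for d in diffs)
        # Small tolerance so exact ties are not lost to floating-point noise.
        if abs(flipped) / n >= observed - 1e-12:
            hits += 1
    # Add-one correction keeps the estimated p-value strictly positive.
    return (hits + 1) / (n_perm + 1)
```

Running it once per pair would mirror the layout of the table. `scipy.stats.ttest_rel` (paired t-test) and `scipy.stats.wilcoxon` (signed-rank test) are established parametric and rank-based alternatives. If a Bonferroni correction for the three comparisons were applied (threshold 0.05/3 ≈ 0.0167), the DroneDeploy–Pix4D value of 0.013 would still fall below it.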

| | DroneDeploy | Drone2Map | Pix4D |
|---|---|---|---|
| Cost (individual/standard pricing) | USD 329/mo | USD 145.83/mo | USD 291.67/mo |
| Audience | Construction/Engineering/Energy/Agriculture | GIS/Geography/Mapping/Engineers | Precision Ag/Surveying/Mapping/Engineering |
| Computational Environment | Cloud-based | Desktop | Desktop |
| Ability to Adjust Parameters | Low | High | High |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Morgan, G.R.; Stevenson, L. Unoccupied-Aerial-Systems-Based Biophysical Analysis of Montmorency Cherry Orchards: A Comparative Study. Drones 2024, 8, 494. https://doi.org/10.3390/drones8090494


Chicago/Turabian Style: Morgan, Grayson R., and Lane Stevenson. 2024. "Unoccupied-Aerial-Systems-Based Biophysical Analysis of Montmorency Cherry Orchards: A Comparative Study." Drones 8, no. 9: 494. https://doi.org/10.3390/drones8090494
