Co-Registration of Multi-Modal UAS Pushbroom Imaging Spectroscopy and RGB Imagery Using Optical Flow

Abstract

1. Introduction

1.1. Background

1.2. Related Works

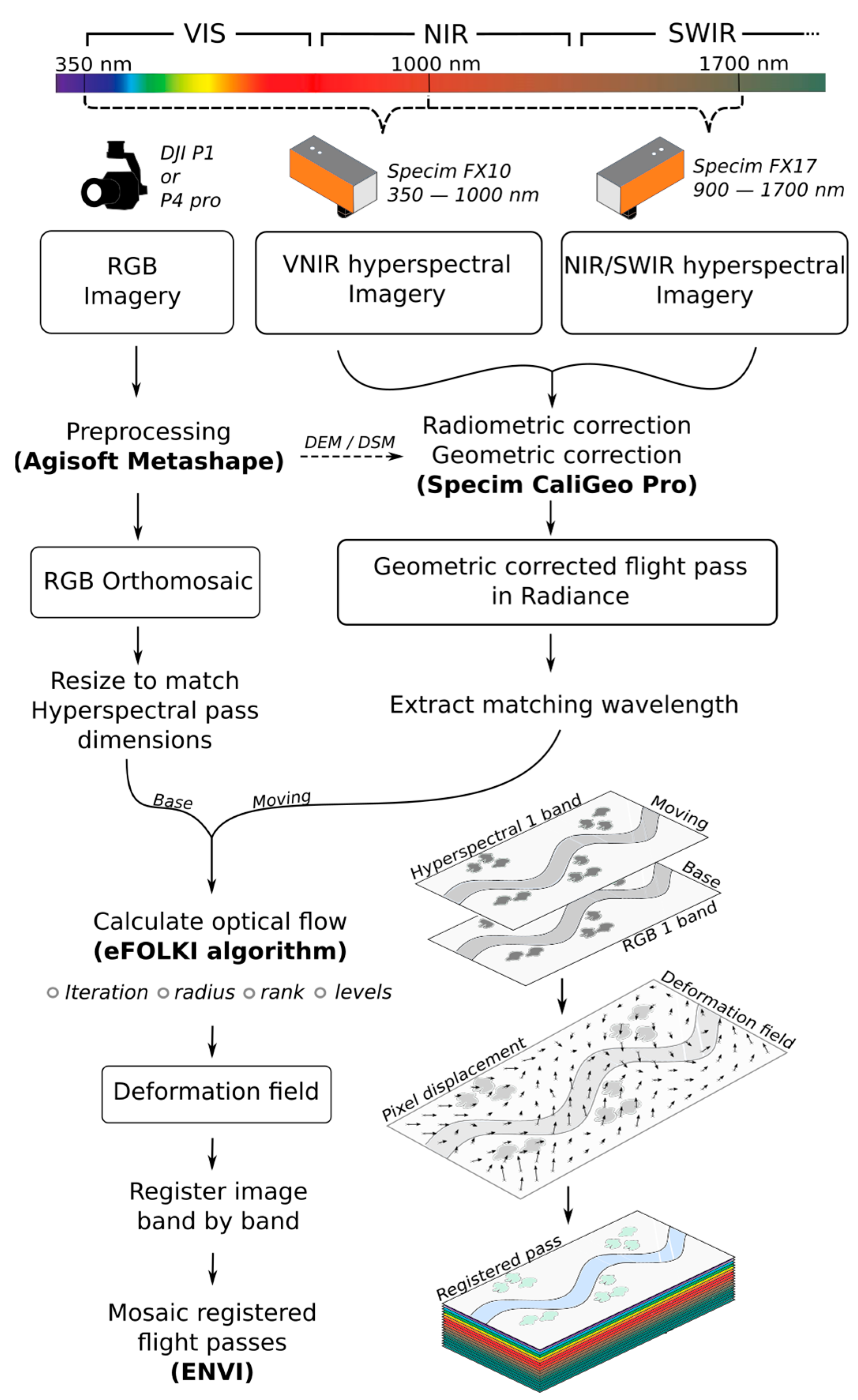

2. Methods

2.1. Study Area

2.2. UAS Platforms and Data Acquisition

2.3. Imagery Pre-Processing

2.3.1. RGB Imagery

2.3.2. Imaging Spectroscopy

2.4. Co-Registration

2.4.1. Algorithm Description
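The algorithm is not described in this version of the text. The sketch below illustrates the dense, window-based optical flow family that eFOLKI belongs to: iterative Lucas-Kanade estimation over local windows, after Le Besnerais and Champagnat. It is a minimal single-scale NumPy sketch that estimates a per-pixel displacement field warping a moving image onto a reference.

It is not eFOLKI or the GeFolki code. It omits the coarse-to-fine pyramid and the rank-based preprocessing that eFOLKI adds for multi-modal image pairs. The function names and parameters (`radius`, `iters`) are illustrative assumptions.

```python
import numpy as np


def box_filter(img, radius):
    """Mean over a (2r+1) x (2r+1) window, computed with an integral image (edge-padded)."""
    k = 2 * radius + 1
    padded = np.pad(img, radius, mode="edge")
    # Integral image with a leading row/column of zeros: c[a, b] = sum(padded[:a, :b]).
    c = np.pad(padded.cumsum(axis=0).cumsum(axis=1), ((1, 0), (1, 0)))
    window_sum = c[k:, k:] - c[:-k, k:] - c[k:, :-k] + c[:-k, :-k]
    return window_sum / (k * k)


def warp_bilinear(img, u, v):
    """Sample img at (x + u, y + v) with bilinear interpolation, clamping to the image border."""
    h, w = img.shape
    yy, xx = np.mgrid[0:h, 0:w].astype(float)
    x = np.clip(xx + u, 0, w - 1)
    y = np.clip(yy + v, 0, h - 1)
    x0, y0 = np.floor(x).astype(int), np.floor(y).astype(int)
    x1, y1 = np.minimum(x0 + 1, w - 1), np.minimum(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0
    top = img[y0, x0] * (1 - fx) + img[y0, x1] * fx
    bottom = img[y1, x0] * (1 - fx) + img[y1, x1] * fx
    return top * (1 - fy) + bottom * fy


def local_window_flow(ref, mov, radius=4, iters=20):
    """Dense flow (u, v) in pixels such that mov(x + u, y + v) ~= ref(x, y).

    Each pixel receives the Lucas-Kanade least-squares update over its local
    window; the reference gradient is computed once and reused every iteration.
    """
    ref = np.asarray(ref, float)
    mov = np.asarray(mov, float)
    gy, gx = np.gradient(ref)  # axis 0 = rows (y), axis 1 = columns (x)
    sxx = box_filter(gx * gx, radius)
    sxy = box_filter(gx * gy, radius)
    syy = box_filter(gy * gy, radius)
    det = sxx * syy - sxy ** 2
    det = np.where(np.abs(det) < 1e-9, np.inf, det)  # textureless window -> zero update
    u = np.zeros_like(ref)
    v = np.zeros_like(ref)
    for _ in range(iters):
        err = ref - warp_bilinear(mov, u, v)  # residual at the current flow estimate
        bx = box_filter(gx * err, radius)
        by = box_filter(gy * err, radius)
        # Solve the 2x2 normal equations [[sxx, sxy], [sxy, syy]] @ [du, dv] = [bx, by].
        u += (syy * bx - sxy * by) / det
        v += (sxx * by - sxy * bx) / det
    return u, v
```

Co-registration then amounts to resampling the moving image with `warp_bilinear(mov, u, v)`. Multiplying `u` and `v` by the ground sampling distance would express the offsets in metres.

Applied directly to RGB and imaging spectroscopy bands, this intensity-based sketch would struggle wherever brightness differs between sensors. That mismatch is the problem eFOLKI's rank-based preprocessing is intended to address.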

2.4.2. Workflow

2.4.3. Parameter Selection

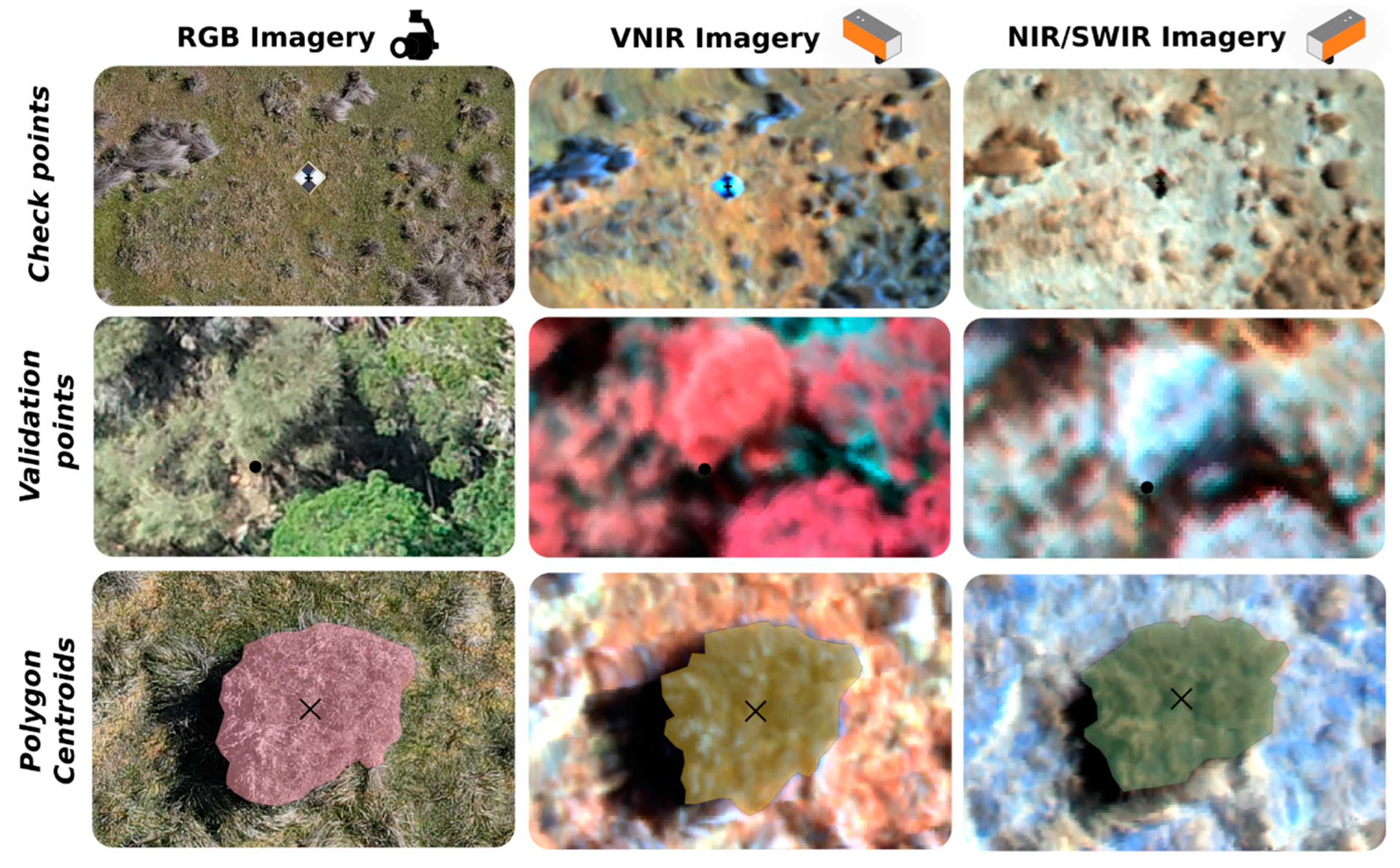

2.5. Accuracy Assessment

i. Check points and validation points

ii. Polygon centroids

Performance Metrics

i. Error quantification

ii. Intersection over union
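The accuracy metrics are not defined in this version of the text. The sketch below shows how the two metrics reported in the results tables could be computed in NumPy. It assumes, for illustration, that:

- each check point's error is the planimetric (2D Euclidean) offset between its position in the co-registered image and in the reference;
- IoU is computed between rasterised polygon masks on a common grid.

The function names and example values are hypothetical. Because RMSE squares each offset before averaging, it is always at least as large as MAE and grows faster when a few points are badly misaligned.

```python
import numpy as np


def point_errors(test_xy, ref_xy):
    """RMSE and MAE of 2D Euclidean offsets between matched points (same units as input)."""
    offsets = np.asarray(test_xy, float) - np.asarray(ref_xy, float)
    dist = np.linalg.norm(offsets, axis=1)  # one planimetric error per point
    rmse = float(np.sqrt(np.mean(dist ** 2)))
    mae = float(np.mean(dist))
    return rmse, mae


def mask_iou(mask_a, mask_b):
    """Intersection over union of two boolean masks on the same pixel grid."""
    a = np.asarray(mask_a, bool)
    b = np.asarray(mask_b, bool)
    union = np.logical_or(a, b).sum()
    if union == 0:
        return 0.0  # both masks empty: IoU defined as 0 here (an assumption)
    return float(np.logical_and(a, b).sum() / union)


# Hypothetical example: three check points (metres) and two overlapping square "polygons".
registered = [[0.3, 0.4], [0.0, 0.0], [1.0, 0.0]]
reference = [[0.0, 0.0], [0.0, 0.0], [0.0, 0.0]]
rmse, mae = point_errors(registered, reference)

a = np.zeros((10, 10), bool)
a[2:6, 2:6] = True
b = np.zeros((10, 10), bool)
b[4:8, 4:8] = True
iou = mask_iou(a, b)
print(f"RMSE={rmse:.3f} m, MAE={mae:.3f} m, IoU={iou:.3f}")
```

Polygon centroids could be passed to `point_errors` in the same way as check points, giving one offset per matched polygon.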

3. Results

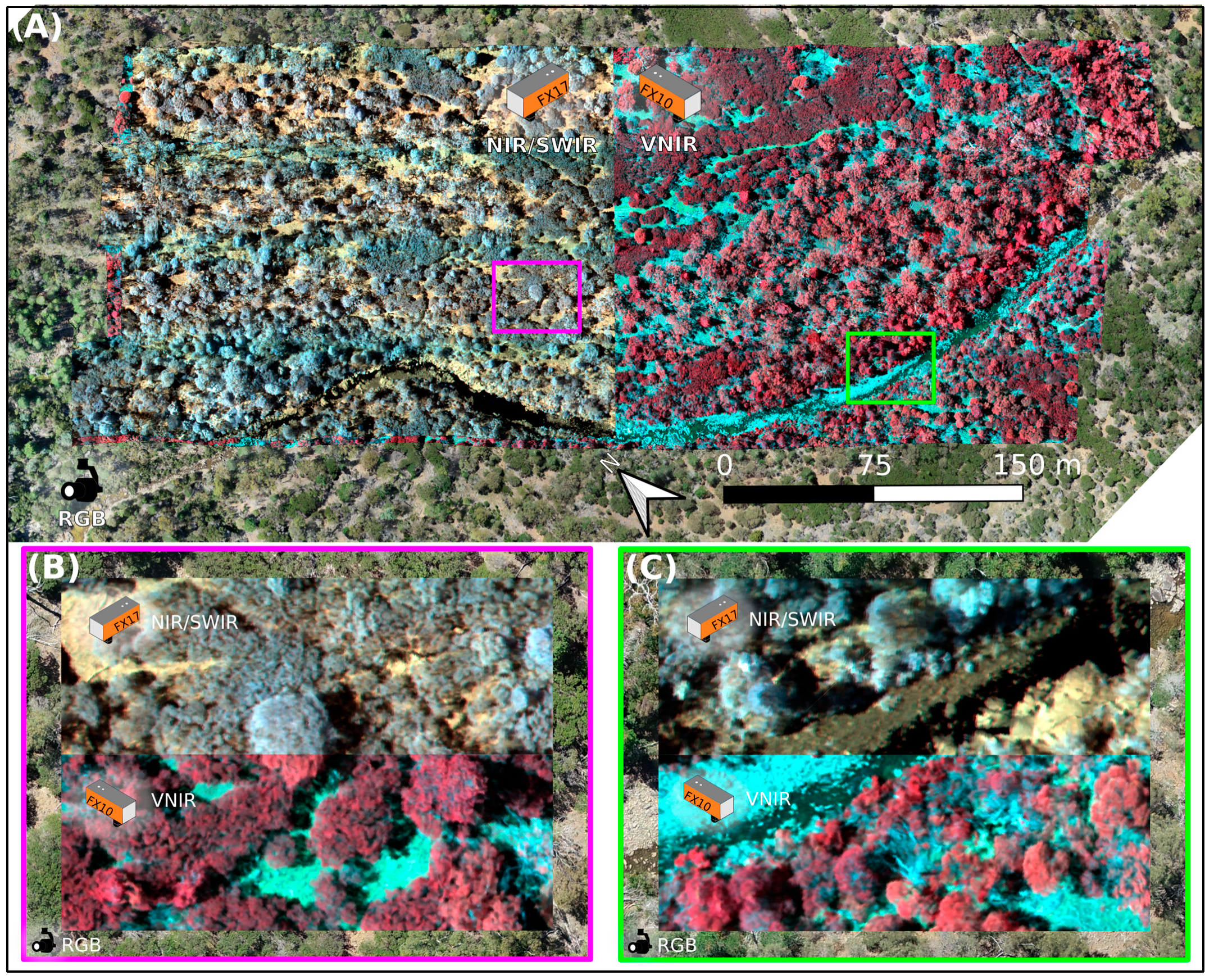

3.1. Optical Flow Results

3.1.1. Cockatoo Hills

3.1.2. Swansea

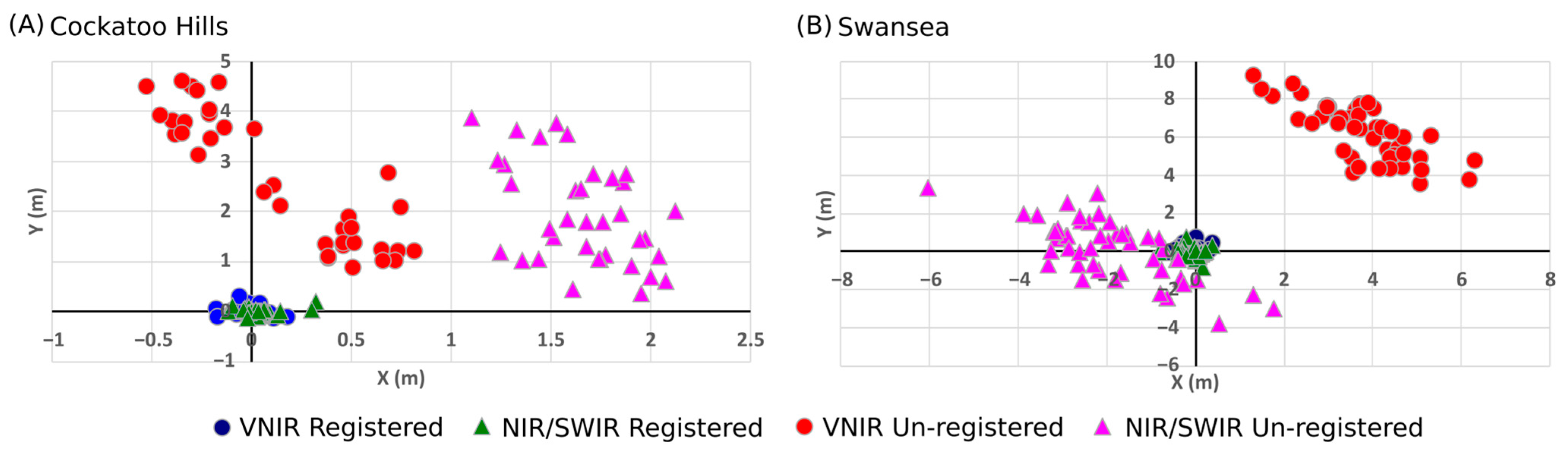

3.2. Accuracy Assessment Results

3.2.1. Error Quantification

3.2.2. Intersection over Union

4. Discussion

4.1. Performance in Different Ecosystems

4.2. Initial Misalignment Errors and Data Quality

4.3. eFOLKI Parametrisation

4.4. Comparison with Other Techniques

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References


| Site | Imagery | Reference | Total Points | Un-Registered RMSE (m) | Un-Registered MAE (m) | Registered RMSE (m) | Registered MAE (m) |
|---|---|---|---|---|---|---|---|
| Cockatoo Hills | VNIR | RGB | 36 | 2.931 | 2.660 | 0.103 | 0.080 |
|  | NIR/SWIR | RGB | 36 | 2.749 | 2.660 | 0.110 | 0.083 |
|  | NIR/SWIR | VNIR | 36 | 2.238 | 2.043 | 0.129 | 0.092 |
| Swansea | VNIR | RGB | 48 | 7.534 | 7.481 | 0.243 | 0.186 |
|  | NIR/SWIR | RGB | 48 | 2.797 | 2.563 | 0.321 | 0.246 |
|  | NIR/SWIR | VNIR | 48 | 8.730 | 8.656 | 0.221 | 0.168 |

| Site | Imagery | Reference | Mean IoU | Median IoU |
|---|---|---|---|---|
| Cockatoo Hills | VNIR | RGB | 0.849 | 0.844 |
|  | NIR/SWIR | RGB | 0.840 | 0.869 |
|  | NIR/SWIR | VNIR | 0.827 | 0.816 |
| Swansea | VNIR | RGB | 0.858 | 0.870 |
|  | NIR/SWIR | RGB | 0.830 | 0.840 |
|  | NIR/SWIR | VNIR | 0.870 | 0.872 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Haynes, R.S.; Lucieer, A.; Turner, D.; Cimoli, E. Co-Registration of Multi-Modal UAS Pushbroom Imaging Spectroscopy and RGB Imagery Using Optical Flow. Drones 2025, 9, 132. https://doi.org/10.3390/drones9020132

Haynes RS, Lucieer A, Turner D, Cimoli E. Co-Registration of Multi-Modal UAS Pushbroom Imaging Spectroscopy and RGB Imagery Using Optical Flow. Drones. 2025; 9(2):132. https://doi.org/10.3390/drones9020132

Chicago/Turabian Style: Haynes, Ryan S., Arko Lucieer, Darren Turner, and Emiliano Cimoli. 2025. "Co-Registration of Multi-Modal UAS Pushbroom Imaging Spectroscopy and RGB Imagery Using Optical Flow" Drones 9, no. 2: 132. https://doi.org/10.3390/drones9020132

APA Style: Haynes, R. S., Lucieer, A., Turner, D., & Cimoli, E. (2025). Co-Registration of Multi-Modal UAS Pushbroom Imaging Spectroscopy and RGB Imagery Using Optical Flow. Drones, 9(2), 132. https://doi.org/10.3390/drones9020132