Abstract

Obstacle avoidance is crucial for the successful completion of UAV missions. Static and dynamic obstacles, such as trees, buildings, flying birds, or other UAVs, can threaten these missions. As a result, safe path planning is essential, particularly for missions involving multiple UAVs. Collision-free paths can be designed in either 2D or 3D environments, depending on the scenario. This study provides an overview of recent advancements in obstacle avoidance and path planning for UAVs. These methods are compared based on various criteria, including avoidance techniques, obstacle types, the environment explored, sensor equipment, map types, and path statuses. Additionally, this paper includes a process addressing obstacle detection and avoidance and reviews the evolution of obstacle detection and avoidance (ODA) techniques in UAVs over the past decade.

1. Introduction

In recent years, the use of unmanned aerial vehicles (UAVs), commonly known as drones, has grown significantly [1]. UAVs are increasingly deployed across various domains to perform diverse missions, including terrain monitoring [2], intelligent agriculture [3], healthcare delivery [4], photogrammetry [5], area coverage [6], in-flight charging of other aerial robots [7], cinematography [8], operating in disaster-prone environments [9], intelligent policing [10], and wildfire detection [11]. Their versatility and ability to operate in complex and dynamic environments have made UAVs a valuable tool across multiple disciplines.

A critical research area for UAVs is path planning [12], which focuses on guiding the UAV from a starting point to a target location within an area of interest (AoI) while fulfilling mission objectives [13,14]. An effective path is typically evaluated through key performance indicators (KPIs) such as path length, the number of turns, and main factors like energy consumption and mission completion time [15]. It is not only important to reduce these KPIs in order to improve efficiency, but also to ensure that the UAV’s safety remains paramount.

Path planning becomes even more challenging when accounting for dynamic environments with obstacles and non-flying zones (NFZs) [16,17,18,19,20,21,22]. NFZs refer to restricted airspace where UAVs are not permitted to fly [23]. Several studies [23,24,25,26,27] model NFZs as static obstacles in two-dimensional environments, simplifying the complexity of planning in real-world conditions. However, real-world scenarios often involve dynamic obstacles and variable NFZs, which require adaptive planning strategies [28]. A primary challenge lies in developing optimal paths that comprehensively cover an AoI while avoiding obstacles and NFZs and minimizing energy costs or operational delays [6].

Different surveys have explored the UAV obstacle detection and avoidance (ODA) problem from diverse perspectives. Some categorize research based on avoidance algorithms and compare their performance [29,30,31,32,33], while others focus on the challenges faced during UAV navigation in various application domains [32,34]. Specialized surveys, such as those by [35,36], investigate ODA techniques tailored to sprayer UAVs, which differ in their operational requirements from other UAV types. However, these works often overlook the integration of NFZ handling within the broader ODA framework.

This survey aims to provide a comprehensive overview of UAV obstacle detection and avoidance techniques, with a particular focus on integrating NFZ considerations, a gap left by previous surveys. Our contributions can be summarized as follows:

- Comprehensive Classification of ODA Methods: We categorize and review state-of-the-art obstacle detection and avoidance approaches, including those addressing static and dynamic obstacles and NFZ avoidance strategies.

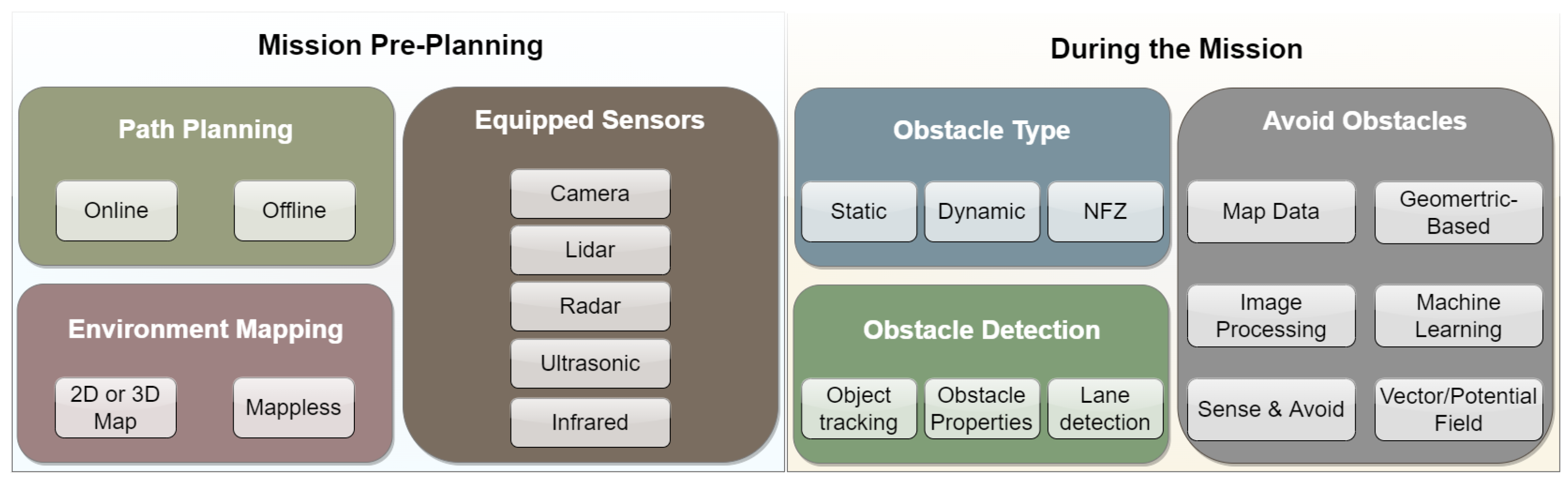

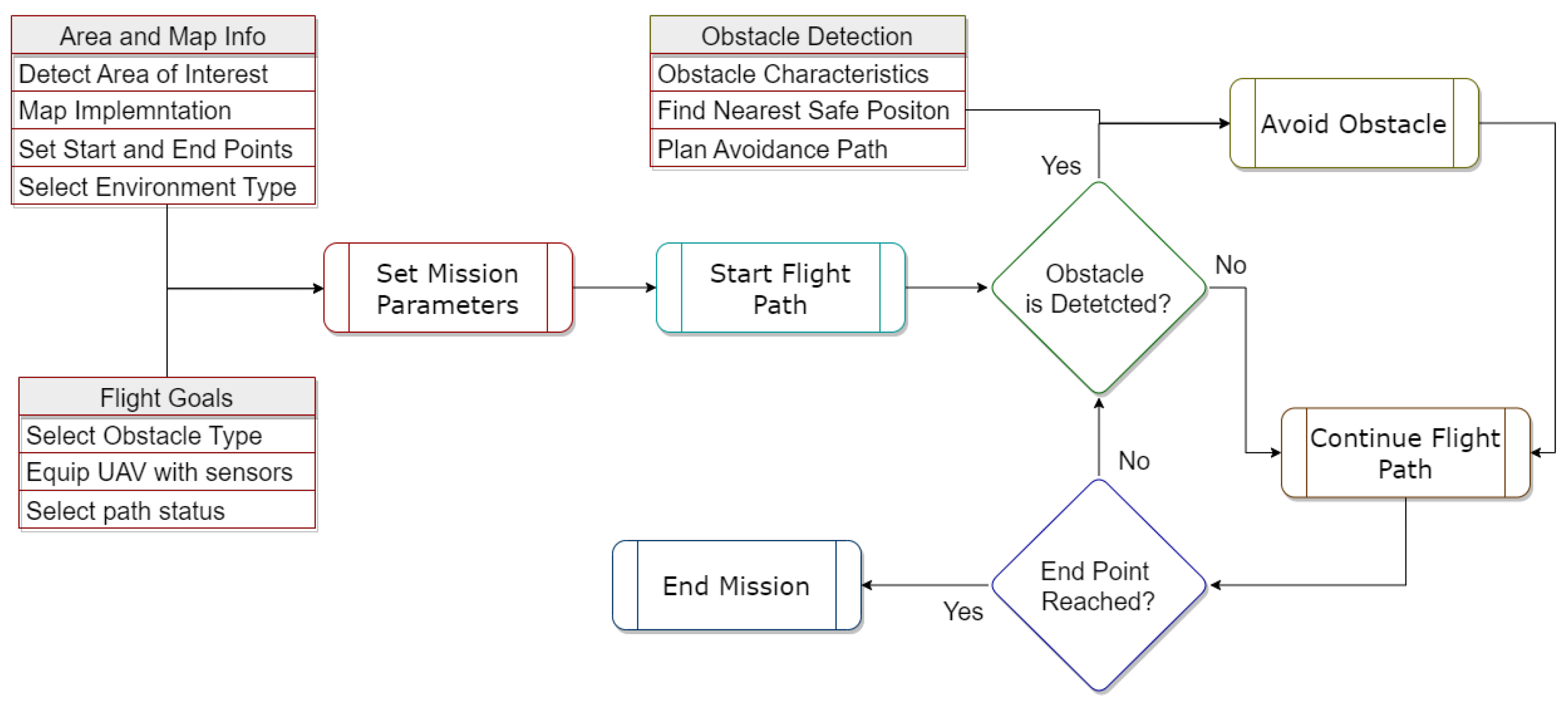

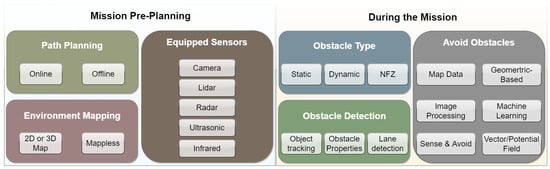

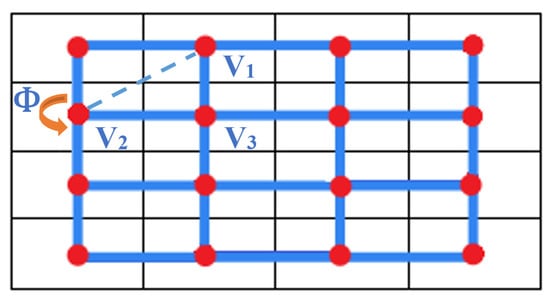

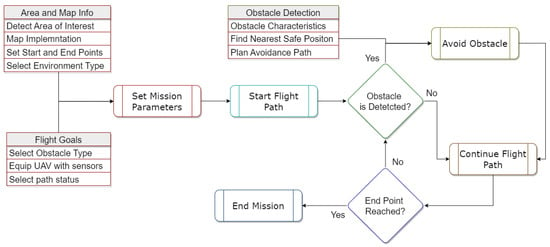

- ODA Process: We present an overview of the components of the UAV ODA mission, as depicted in Figure 1, highlighting the integration of path planning, environmental mapping, and obstacle detection.

Figure 1. ODA mission components overview.

Figure 1. ODA mission components overview. - Comparison of ODA Techniques: We compare various ODA methods based on performance metrics, such as efficiency, energy consumption, and adaptability to dynamic environments.

- Analysis of NFZ Handling: We analyze how NFZs are modeled and incorporated into planning algorithms, highlighting effective approaches and identifying research gaps.

- Evolution of ODA Methods: We trace the progression of ODA methodologies over the past decade, outlining trends and emerging challenges in UAV navigation.

The remainder of this paper is structured as follows: In Section 2, we summarize the methodology employed in this study. Section 3 presents an overview of the path planning and ODA problem. Section 4 provides a description of environmental mapping techniques, while Section 5 presents a review of sensors used for obstacle detection. Section 6 and Section 7 review various approaches related to obstacle and NFZ avoidance for UAVs, respectively. Key performance indicators (KPIs) are presented in Section 8. In Section 9, we compare the ODA and NFZ approaches and outline the steps involved in the ODA process with an eye on the evolution of ODA methods in recent decades. Finally, concluding remarks are given in Section 10.

2. Method

2.1. Study Design

The purpose of this study is to review research related to obstacle detection and avoidance (ODA) and non-flying zones (NFZ) in UAV path planning missions. This study emphasizes algorithms designed to ensure collision-free navigation, the handling of both static and dynamic obstacles, and the identification and avoidance of restricted areas. We selected articles from multiple scientific databases, including Scopus, Web of Science, PubMed, IEEE Xplore, MDPI, Springer, Google Scholar, and others. Studies that focus on static and dynamic obstacle avoidance, NFZ avoidance, collision-free paths, and both 2D and 3D path planning were included. We utilized specific keywords for the search, including “UAV obstacle detection”, “UAV obstacle avoidance”, “Non flying zone detection”, “path planning”, and “collision avoidance”.

2.2. Search and Study Selection

The selection process comprised two stages. First, titles and abstracts were screened from the search results of each database after excluding duplicates. In the second stage, we categorized the relevant studies into three groups based on their primary focus:

- Group 1: Surveys and review articles related to ODA and NFZ in UAV path planning.

- Group 2: Case studies addressing ODA in UAV path planning missions.

- Group 3: Case studies for detecting and avoiding NFZs in UAV path planning.

Each article in the three groups was thoroughly examined and categorized based on its contribution to the research areas. Table 1, Table 2 and Table 3 outline the search terms and the number of selected studies in each group.

Table 1.

Selection of surveys on ODA and NFZ avoidance for UAV and path planning missions (Group 1).

Table 2.

Selection of case studies for ODA for UAVs (Group 2).

Table 3.

Selection of methodologies for NFZ detection and avoidance (Group 3).

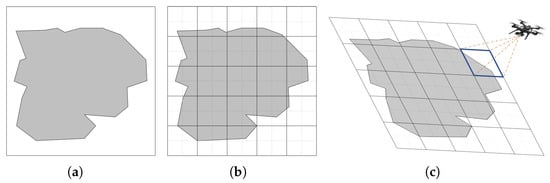

3. Path Planning and Collision Avoidance Problem

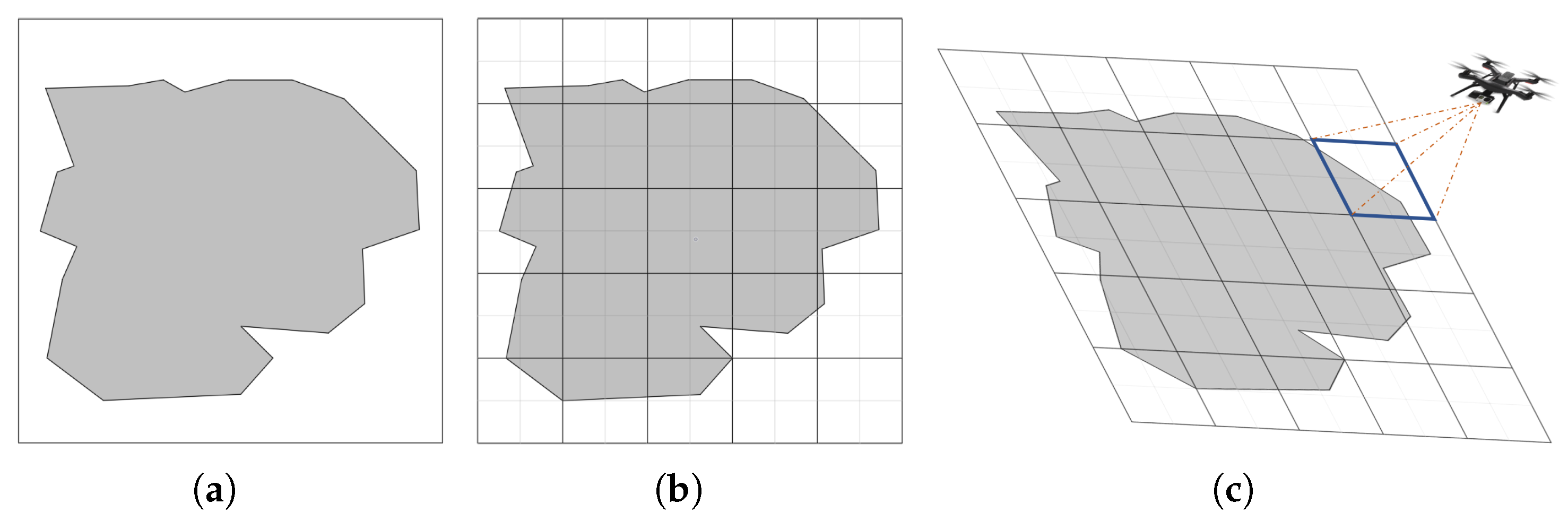

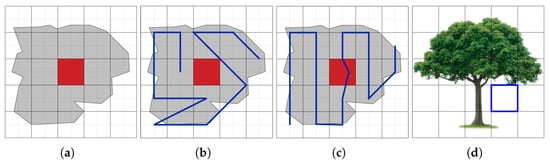

A UAV path can be planned before the mission (offline) or during the mission (online), or both could be combined [37]. The area, in most cases, is divided into a grid (Figure 2b), and each cell is fitted to the UAV footprint (Figure 2c). To plan an offline path, planners scan the area from a top view, as pictured in Figure 2a. For online path planning, UAV vision and flight control require specific equipment to offer real-time visual data in order to generate updated mapping (2D or 3D) and to overcome mission challenges. Challenges addressed in recent works include power consumption, mission cost, time, path length, number of turns, wind, and quality of coverage. These challenges vary according to the mission type and path status.

Figure 2.

AOI (grey area) top view and grid division. (a) AOI top view; (b) grid map; (c) UAV footprint.

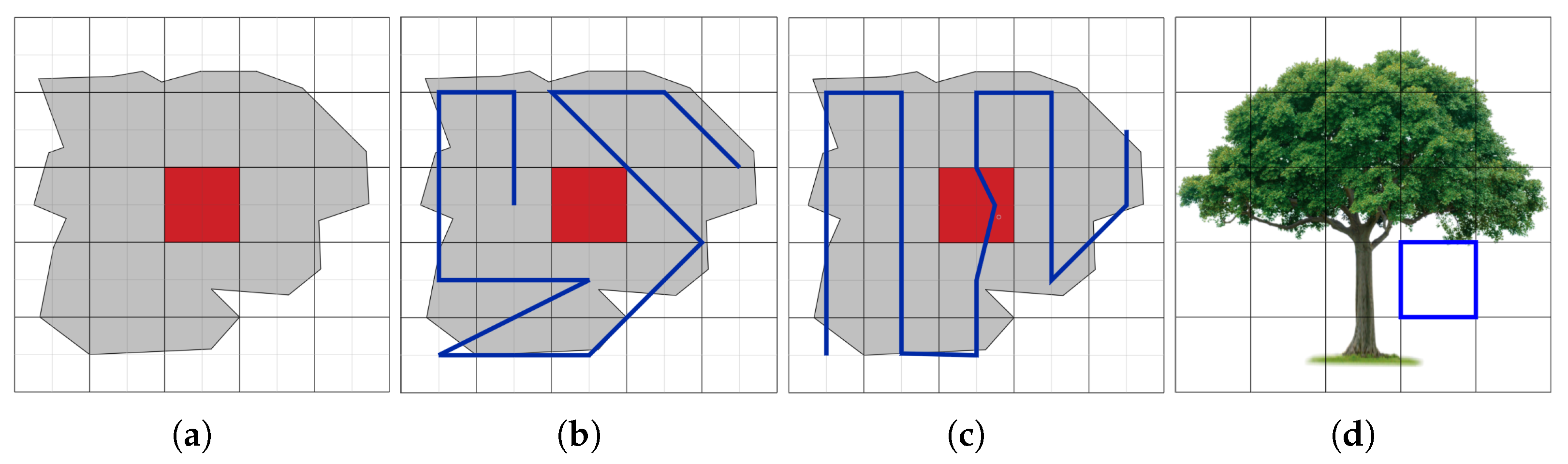

During the mission, an obstacle may block the UAV’s path and prevent it from completing its flight. It may also damage the UAV while it is hovering in a tracking mission. The obstacle could be static or dynamic. Static obstacles include trees and signboards. They can be located in the area grid map from the top view (see the red cell in Figure 3a).

Figure 3.

Static obstacle detection and avoidance path (blue line) examples. (a) Static obstacle marked by a red cell; (b) omit obstacle cell (red cell); (c) passing through red cell; (d) UAV position after scanning the frontal view (blue box safe zone).

The grid cell where the obstacle is located could be avoided either partially or completely by the UAV. In some works [27], the whole cell is completely omitted during the mission, as shown in Figure 3b. However, in other works [38], frontal obstacles are avoided by scanning the frontal view before reaching the red cell (Figure 3c), in order for the UAV to decide about its new position (Figure 3d). The obstacle could be detected from different perspectives and angles using different sensors outlined in the following sections.

Dynamic obstacles are flying birds, another UAV, or any other moving object that could intercept the UAV path [39,40]. They could be avoided in a 2D or 3D environment, but the 3D ones are more realistic. Dealing with dynamic obstacles is typically more challenging than static ones due to several factors, such as the obstacle’s speed and motion. A UAV may also be intercepted by one or more obstacles from different directions.

To ensure safety, UAVs are equipped with sensors to monitor the environment, such as laser scanners, sonar, radar, LiDAR, and cameras (stereo, monocular, RGB-D FPV, etc.) [41]. The sensor can accomplish many tasks by generating 2D or 3D maps that help in detecting the obstacle’s characteristics, for instance, lane boundary shape, vehicles, people, distance estimation from the UAV to obstacles, and so on [42,43]. Different types of maps are used in the case studies mentioned in this survey; the map types are illustrated in the next section.

4. Environment Mapping

Environment mapping is crucial for UAV path planning, providing visual data to ensure safe navigation [44]. Mapping frameworks can be either 2D or 3D, depending on the mission requirements. These maps are either pre-generated before the mission or created in real-time using UAV sensors. Table 4 summarizes the key mapping techniques, including their advantages and limitations. By selecting the appropriate mapping technique, UAV systems can enhance their ability to navigate complex environments while maintaining safe and efficient paths.

Table 4.

Comparison of mapping techniques for UAV path planning.

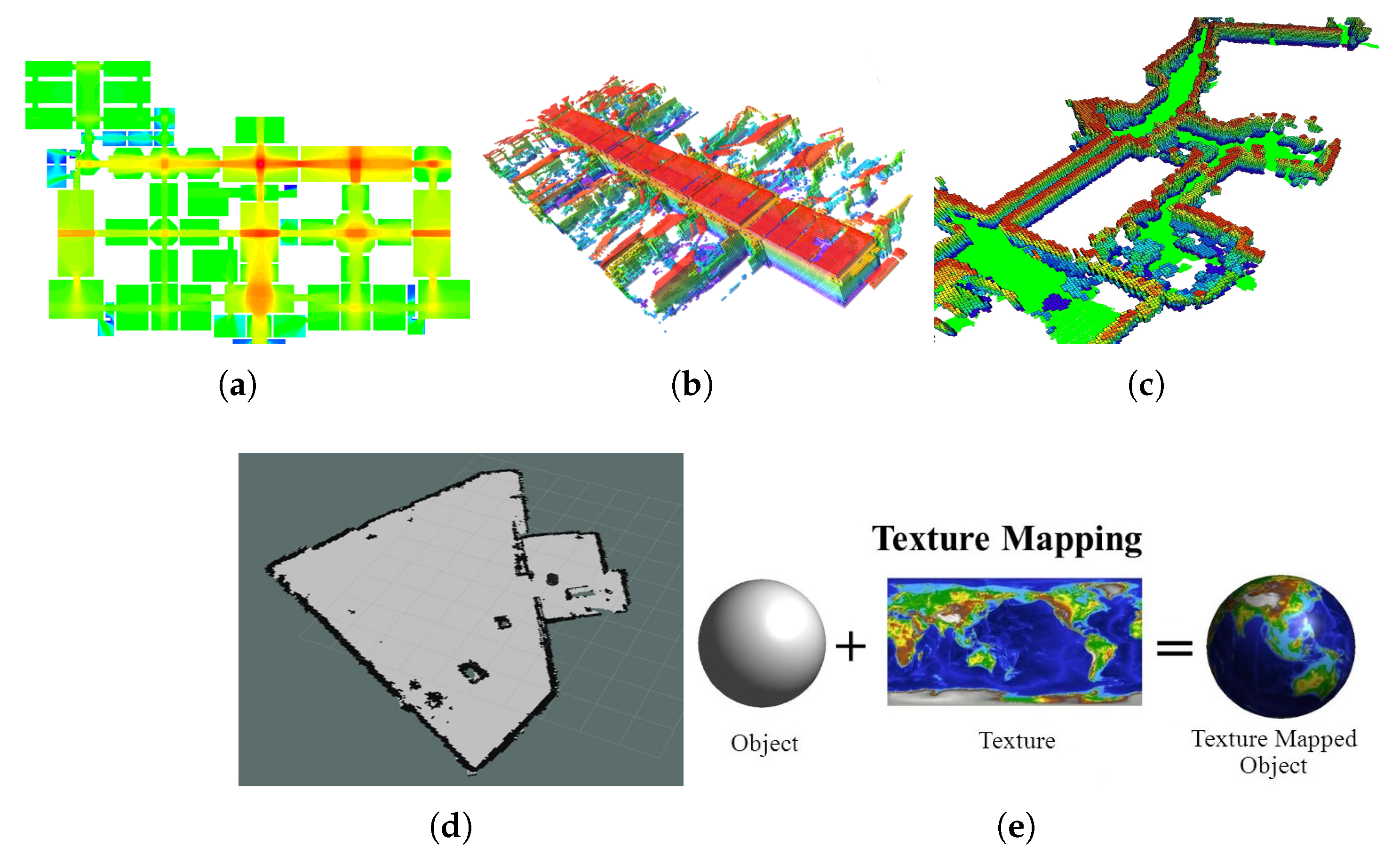

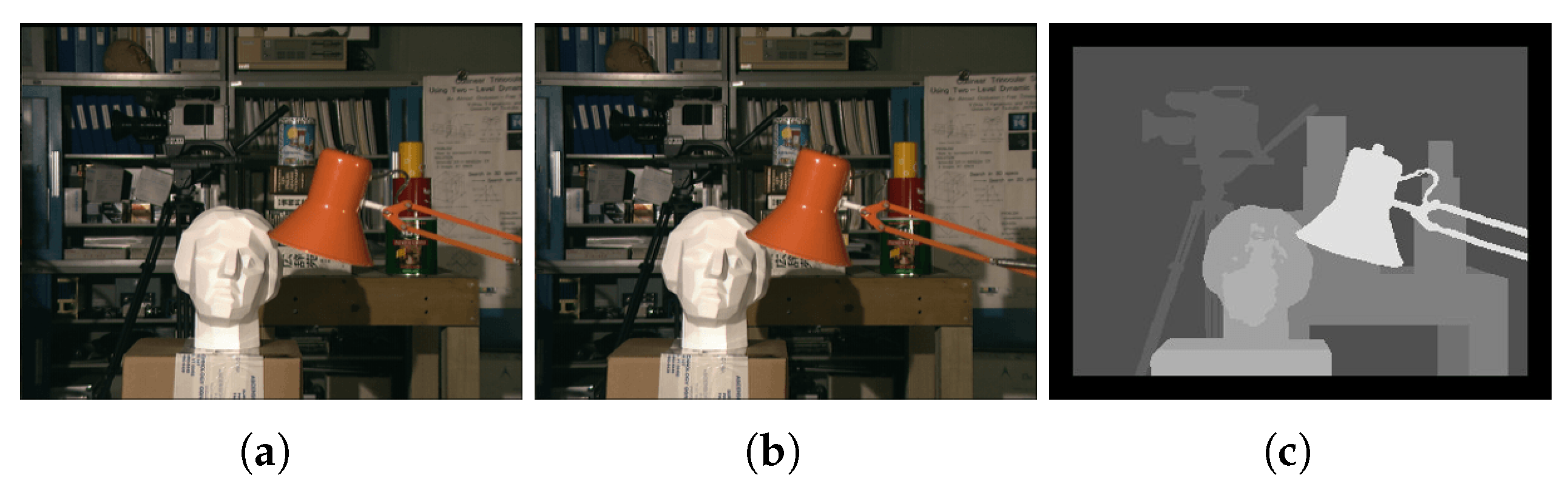

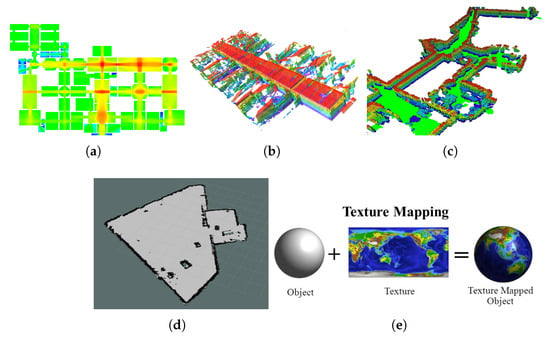

Depth maps encode object distances as image intensities, providing crucial data for obstacle detection and localization. This information is essential for UAVs to understand their surroundings and avoid collisions, as illustrated in Figure 4a [45]. Complementing depth maps, OctoMaps utilize a 3D occupancy grid to represent large-scale environments, enabling efficient volumetric mapping and path planning. This approach is particularly useful for UAVs operating in complex, unstructured spaces (see Figure 4b) [46,47,56].

Figure 4.

Examples of mapping techniques for UAV path planning. (a) Depth map [45]; (b) OctoMap [56]; (c) RTAB-Map [48]; (d) occupancy grid map [56]; (e) UV map [55].

In real-time navigation tasks, RTAB-Map combines visual and LiDAR data for 3D simultaneous localization and mapping (SLAM). This integration allows for loop closure detection, which mitigates cumulative drift errors and enhances positional accuracy, as depicted in Figure 4c [48,49,50]. For simpler environments, occupancy grid maps offer a more straightforward representation by categorizing areas as occupied, free, or unknown on a 2D grid. This method is advantageous for basic path planning tasks (see Figure 4d) [51,52,53].

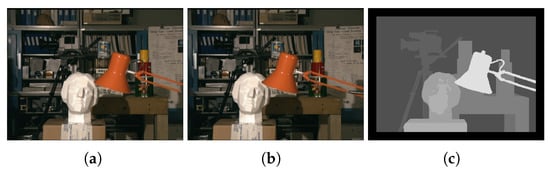

While UV maps primarily apply 2D textures to 3D models to improve visualization, they do not directly aid UAV navigation (shown in Figure 4e) [55]. In contrast, disparity maps play a crucial role in depth estimation by measuring pixel displacement between stereo image pairs. This technique allows UAVs to distinguish object proximity, with closer objects appearing brighter and distant ones darker, as illustrated in Figure 5 [54].

Figure 5.

Disparity map creation from stereo images [54]. (a) Left Camera; (b) right camera; (c) disparity image.

5. Sensors Used for Obstacle Detection

UAVs rely on a variety of sensors for obstacle detection, mapping, and navigation. These sensors differ in their capabilities, costs, and limitations. To improve accuracy and reliability, UAVs often integrate multiple sensors, a technique known as sensor fusion.

Among the most commonly used sensors are camera-based systems, including stereo, monocular, RGB-D, and first-person view (FPV) cameras. Stereo cameras provide 3D depth perception by analyzing two images, but their performance deteriorates in low-light conditions [57,58,59,60,61,62,63,64,65,66]. Monocular cameras, though cost-effective, capture only 2D images and rely on image processing for depth estimation [38,46,67,68,69,70,71,72]. RGB-D cameras combine color and depth information, offering a balance between cost and perception capability [49,50,73,74]. FPV cameras provide real-time video feeds and are lightweight, making them suitable for navigation but susceptible to visibility issues in poor lighting [53,75,76,77,78,79].

Beyond cameras, other sensors enhance UAV performance under various environmental conditions. LiDAR utilizes laser beams to generate highly accurate 3D maps, making it one of the most precise but also the most expensive solutions [62,63]. MIMO radar operates using radio waves, making it unaffected by lighting conditions while also enabling direct velocity measurement through the Doppler effect [68,76,80]. Ultrasonic sensors, which rely on sound waves, are influenced more by environmental factors than by lighting conditions [66,81,82,83,84]. Infrared sensors detect heat radiation, but their effectiveness can be impacted by ambient infrared interference [83,84].

When selecting sensors, it is crucial to consider their limitations. For instance, stereo cameras struggle with long-range depth estimation, monocular cameras lack inherent depth perception, and some camera types may fail to detect transparent or reflective obstacles [68]. LiDAR, while highly effective, comes with a significant cost. MIMO radar is robust against environmental interference but may require complex processing. To address individual weaknesses, UAVs often employ sensor fusion techniques, combining multiple sensors to enhance reliability across different conditions [85].

An important factor influencing sensor performance is ambient light. Cameras, particularly stereo, monocular, RGB-D, and FPV cameras, suffer from degraded performance in low-light scenarios [86,87,88,89]. However, LiDAR and MIMO radar remain unaffected, making them preferable for night-time or low-visibility operations. Ultrasonic and infrared sensors react differently, with ultrasonic performance varying based on environmental factors and infrared sensors being sensitive to external heat sources.

Velocity estimation is another critical aspect of UAV navigation. Stereo cameras can estimate velocity by analyzing depth variations across frames, whereas monocular cameras only provide relative velocity estimates [90,91]. LiDAR enables highly accurate velocity measurements based on distance calculations, while MIMO radar directly measures velocity using the Doppler effect. Ultrasonic and infrared sensors are generally ineffective for precise velocity estimation.

Table 5 summarizes how different sensors perform under varying ambient light conditions and their velocity estimation capabilities.

Table 5.

Sensor performance under ambient light and velocity estimation capabilities.

6. Static and Dynamic Obstacle Detection and Avoidance Methods

In path planning or monitoring missions, obstacles could pop up or interrupt the UAV’s path. As mentioned previously, obstacles could be static or dynamic and could be detected using different types of sensors. After detecting these obstacles, various methods and techniques could be designed to plan safe paths for UAVs to prevent mission failure and assure UAV safety.

The avoidance techniques and methods are categorized as follows: avoidance using map data, geometric-based, using image processing, machine learning, sensor data, vector field, or potential field. Approaches could make use of one method or combine two or more methods depending on the mission components.

6.1. Map Data

Mapping is fundamental for UAV navigation, whether pre-generated or built dynamically during flight. The integration of 2D and 3D map data significantly improves path planning and obstacle avoidance. Various studies explore different mapping techniques, each with distinct advantages and limitations.

Grid-based methods provide structured path planning. The energy-aware obstacle avoidance (EAOA) algorithm [38] initially generates a 2D path but refines it using a 3D frontal view when obstacles are detected. Similarly, Ref. [72] integrates A* for smoother, energy-efficient UAV paths. However, offline approaches like [92] rely on predefined obstacle modeling and lack adaptability to dynamic environments.

OctoMap-based techniques enable 3D spatial representation for obstacle avoidance. A bio-inspired plant growth algorithm [59] optimizes UAV movement within an OctoMap, while [62] combines stereo cameras and LiDAR to construct probabilistic 3D maps. The 3VFH+ algorithm [47] extends 2D VFH+ to 3D, extracting obstacle features for optimal avoidance paths. Despite their accuracy, these methods can be computationally demanding.

Vision-based approaches, such as [57], use stereo cameras to generate disparity maps, while [93] employs a fisheye camera and IMU for visual–inertial navigation (VINS). RGB-D cameras in [94] enable real-time obstacle tracking via Kalman filtering. These methods provide quick response but can struggle under poor lighting conditions.

SLAM-based approaches excel in GPS-denied environments. RTAB-Map with a ZED camera [50] processes sensory data to build an adaptive 3D map, allowing UAVs to navigate static obstacles. Multi-sensor fusion in [49] enhances indoor UAV navigation in rescue missions. A feature-based planner [95] uses point clouds to classify obstacles and optimize real-time trajectory tracking.

Each method has strengths and limitations, as summarized in Table 6:

Table 6.

Comparison of UAV mapping and obstacle avoidance methods.

6.2. Geometric-Based

Geometric-based approaches use spatial representations and mathematical models to ensure UAV obstacle avoidance and collision-free navigation. These methods vary from simple safety zones to advanced velocity and collision modeling techniques.

The 3D-SWAP algorithm [63] ensures safe UAV movement in swarms by modeling obstacles and UAVs as cylinders. Similarly, safety-zone approaches [75,96] define circular safe areas around obstacles to prevent collisions. These methods are computationally efficient but struggle with dynamic threats.

Velocity obstacle methods [97,98] predict potential collisions using obstacle velocity and position, selecting safe paths heuristically; while effective, they struggle with multiple moving obstacles without additional data. Collision cone methods [99,100] generalize this by computing a range of heading angles to ensure collision-free navigation at constant speed, offering more adaptability.

Avoidance maps [101,102] partition UAV control inputs into safe and collision-prone areas, efficiently guiding maneuvers. Meanwhile, binocular vision [61,103] enhances obstacle detection by constructing disparity maps for depth perception, though it is sensitive to environmental conditions.

A hierarchical task-based approach [104] integrates graph theory to enable UAV formations while avoiding obstacles, balancing coordination and obstacle avoidance but requiring high computational resources.

Each method has strengths and limitations, as summarized in Table 7:

Table 7.

Comparison of geometric-based UAV avoidance methods.

6.3. Image Processing

Image processing techniques enhance UAV obstacle avoidance by analyzing visual data to detect, track, and navigate around obstacles. These methods range from monocular vision and depth estimation to radar-based detection, each addressing specific challenges in obstacle identification.

Monocular vision-based techniques allow UAVs to detect obstacles using feature-matching and template-based analysis. For example, the method in [69] utilizes speeded-up robust features (SURF [105]) and template matching to compare obstacle sizes across different frames, distinguishing near and distant objects even in complex backgrounds. However, monocular approaches often struggle with depth estimation in low-texture environments, necessitating additional strategies.

To improve upon this limitation, a size expansion-based approach [67] mimics human perception by analyzing obstacle size changes as the UAV moves closer. This enables real-time collision probability estimation, allowing the UAV to adjust its trajectory dynamically; while effective within a range of 90–120 cm, this approach is constrained by distance limitations and may require supplementary sensors for broader coverage.

For improved accuracy, radar-based image processing [76] incorporates video frame analysis, capturing multiple sequential images to detect obstacles. By applying background subtraction and edge detection, this method accurately estimates object size and clearance distance, ensuring a well-defined avoidance path. Although effective, it relies on multiple frames, which may introduce delays in fast-moving scenarios.

To address real-time responsiveness, stereo vision methods [106] leverage depth images to construct a precise 3D obstacle map. Unlike monocular approaches, stereo cameras provide direct depth perception, enhancing obstacle detection accuracy. This information is processed and transmitted to the UAV’s flight controller, dynamically adjusting its global path. However, stereo vision systems are susceptible to variations in lighting conditions, which can affect depth estimation reliability.

The integration of these methods can create a more robust obstacle avoidance system, while monocular vision offers a lightweight solution, combining it with depth-based or radar-assisted techniques improves reliability and accuracy. Future UAVs may benefit from hybrid approaches, optimizing real-time decision-making under diverse environmental conditions. Each method has strengths and limitations, as summarized in Table 8:

Table 8.

Comparison of image processing-based UAV avoidance methods.

6.4. Machine Learning Methods

Machine learning (ML) enhances UAV obstacle avoidance by enabling adaptive decision-making in complex environments. Various ML techniques, including neural networks, deep reinforcement learning, and object detection models, have been integrated to improve UAV navigation and collision avoidance.

Neural networks play a key role in learning-based avoidance strategies. In [70], a UAV learns obstacle avoidance by imitating human behavior using deep neural networks trained in a simulated environment using Gazebo [107]. However, such approaches rely heavily on extensive training data and may not generalize well to unseen scenarios. To address this, transformer-based learning [108] processes observation sequences to improve UAV decision-making in dynamic environments, effectively balancing tracking and avoidance.

Convolutional neural networks (CNNs) facilitate real-time object detection. Faster R-CNN [71] enables UAVs to detect and navigate around tree trunks using a single monocular camera, estimating obstacle-free paths based on detected tree spacing. Meanwhile, YOLO (you only look once) is a real-time object detection algorithm that uses CNN to predict bounding box positions and class probabilities from input images [109]. YOLO-based approaches [110,111] improve detection speed and accuracy, extending UAV perception to thermal infrared imagery for enhanced obstacle identification.

Reinforcement learning techniques enable UAVs to autonomously adapt to dynamic obstacles. Deep Q-learning (DQL) [64] allows UAVs to learn obstacle avoidance policies in 3D environments by maximizing flight efficiency while minimizing collisions. An extension [112] incorporates priority sampling and exponential weighting to stabilize learning and improve decision-making under uncertainty. Additionally, a Q-learning-based framework [113] integrates a shortest-distance prioritization policy to optimize both local avoidance and global path efficiency.

Sensor fusion further enhances learning-based navigation. RealSense-based reinforcement learning [58] utilizes depth images to compute avoidance maneuvers, while integrated sensing and communication (ISAC) [114] improves UAV path-following using a virtual leader approach. Furthermore, a CNN-based end-to-end learning system [115] combines multiple convolutional techniques to predict collision probabilities and adjust UAV movement in real-time.

These methods collectively contribute to more robust UAV obstacle avoidance. Table 9 compares key learning-based techniques in terms of strengths, weaknesses, and applications.

Table 9.

Comparison of machine learning-based UAV avoidance methods.

6.5. Sense and Avoid

Sense-and-avoid techniques combine various sensing modalities to improve UAV obstacle detection and avoidance. By integrating vision, radar, and ultrasonic sensors, these approaches enhance UAV navigation in dynamic environments.

Vision-based sensing provides critical spatial awareness. A system [68] fuses monocular camera data with millimeter-wave radar to extract 3D obstacle coordinates using an extended Kalman filter (EKF), enabling Bi-RRT* path planning. To enhance UAV swarm navigation, an active sensing framework [53] uses Gaussian mixture models (GMM) and distributed particle swarm optimization (DPSO) to generate a global consensus map, optimizing flight formations while ensuring collision avoidance.

Sensor fusion methods further improve obstacle tracking. An approach [73] integrates IMU and RGB-D camera data, using an information filter to predict obstacle motion and define escape points for collision-free movement. Similarly, a model predictive control (MPC)-based algorithm [116] employs a bin-occupancy filter to track and predict obstacles beyond the UAV’s immediate field of view, refining navigation in cluttered indoor spaces.

Radar-based detection provides robust obstacle awareness. A 3D-sensing MIMO radar [80] alerts UAVs to moving obstacles within their flight path, triggering evasive maneuvers. Ultrasonic sensors [82] complement these techniques by fusing multiple sensor readings to detect objects in multiple directions, addressing limitations of single-sensor approaches. A low-cost system [83] combines ultrasonic and infrared sensors to refine 3D obstacle detection, ensuring efficient collision avoidance in resource-constrained UAVs.

For enhanced precision, stereo vision is paired with ultrasonic sensors [66], enabling UAVs to characterize their surroundings more accurately. By detecting obstacles and applying a rapidly exploring random tree (RRT) algorithm, this method improves UAV path planning in complex environments.

These methods collectively contribute to more robust UAV obstacle avoidance. Table 10 compares sense and avoid based techniques in terms of strengths, weaknesses, and applications.

Table 10.

Comparison of sense-and-avoid UAV methods.

6.6. Vector/Potential Field Methods

Vector and artificial potential field (APF) methods are widely used in UAV obstacle avoidance and path planning. These approaches generate repulsive forces around obstacles and attractive forces towards goals, ensuring real-time adaptability in dynamic environments.

Traditional APF-based methods struggle with local minima, requiring enhancements to improve performance. A leader–follower APF strategy [117] increases formation flexibility, while an improved APF [118] incorporates goal not reachable near obstacle (GNRON) modifications to handle multiple obstacles. Further optimization [119] introduces spatial and temporal constraints, enhancing avoidance speed and efficiency.

To overcome APF’s limitations in dynamic environments, fuzzy logic-based improvements [120] integrate velocity repulsion fields, breaking local minima constraints and ensuring smoother avoidance paths. Similarly, a height-factor-enhanced APF [121] prevents UAV entrapment and maintains stability in fault conditions, while event-triggered APF [122] mitigates cyber-attack risks by treating compromised UAVs as obstacles.

Hybrid approaches combine APF with graph-based methods for improved navigation. A localized vector field and A*-based obstacle avoidance algorithm [52] plans paths dynamically by merging waypoints using the split and merge (SaM) algorithm. Similarly, an ellipsoid-based avoidance method [81] extracts obstacle 3D coordinates from camera images and generates motion vectors for cost-based avoidance path planning.

Vector field methods enhance real-time maneuverability. A depth-camera-based system [60] utilizes OctoMap for perception, computing tangent velocity vectors to guide UAVs through unknown environments. For swarm navigation, a vector field histogram (VFH)-based method [123] integrates Apollonius circles for maintaining safe UAV spacing, improving cooperative flight in complex environments. A fusion of VFH+ and dynamic window approaches (DWA) [124] further refines cooperative multi-UAV obstacle avoidance by optimizing azimuth corrections and rotation cost evaluations.

To address high-speed dynamic obstacle avoidance, a chance-constrained approach [125] predicts obstacle velocities, integrating velocity uncertainty into UAV path planning. These enhancements ensure UAVs can react efficiently to both static and moving obstacles in unpredictable environments.

These methods collectively contribute to more robust UAV obstacle avoidance. Table 11 compares vector/potential field based techniques in terms of strengths, weaknesses, and applications.

Table 11.

Comparison of vector/potential field UAV methods.

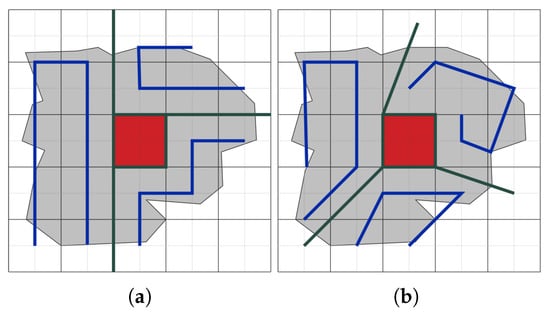

7. Avoiding Non-Flying Zones

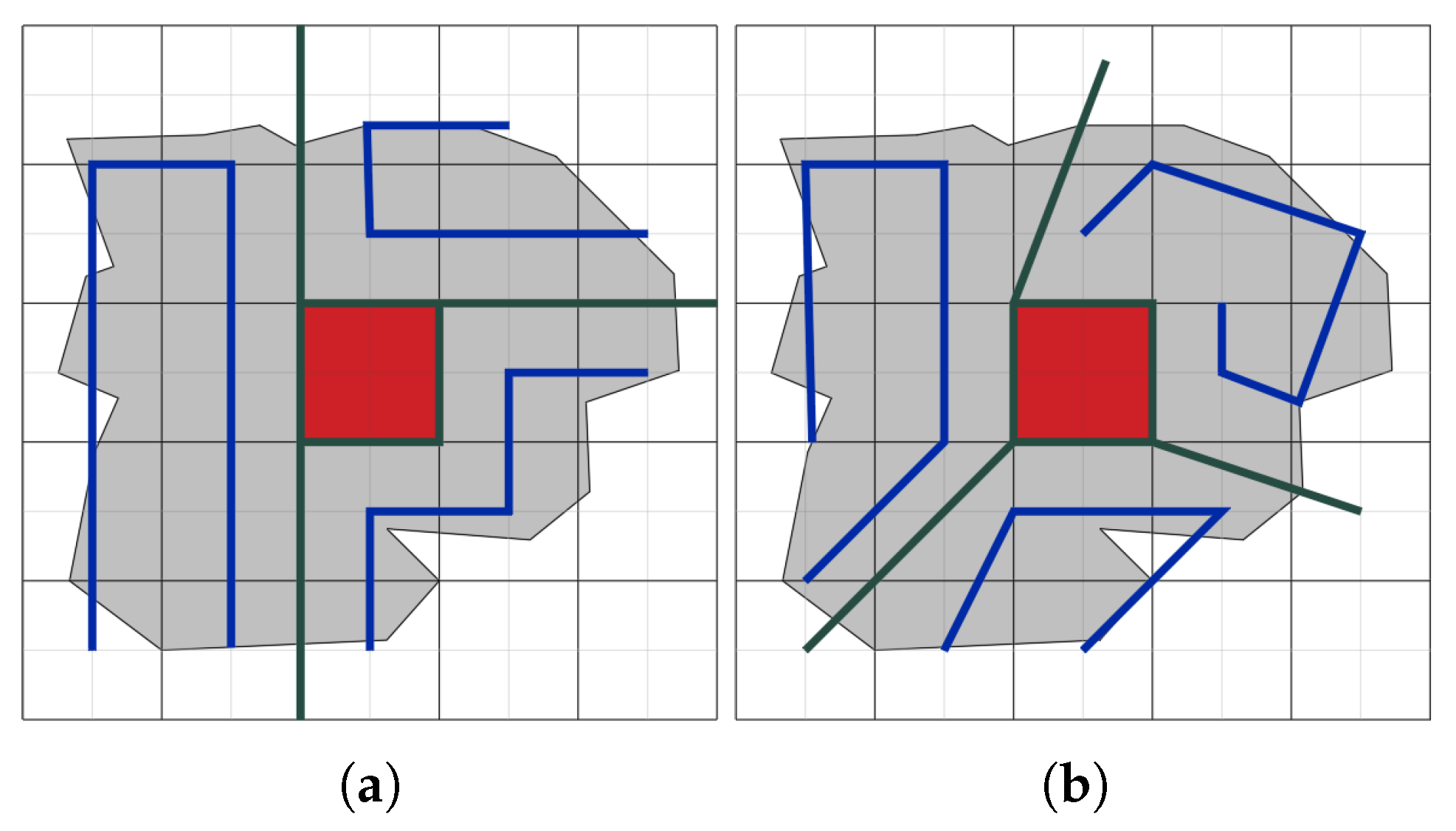

While planning paths for multiple or single UAVs, avoiding NFZs is one of the major challenges. As mentioned previously, NFZs are areas that UAVs must not cover or pass over. NFZs are considered 2D obstacles and are avoided using different methods, such as area partitioning. Figure 6 shows an example of area partitioning, where the partitioning borders are shown in dark green color, NFZs are represented by red cells, and the UAV planned paths are shown in blue.

Figure 6.

An example on area partitioning (green lines) and NFZ (red cells) avoidance path (blue lines). (a) Area partitioning in [23]; (b) area partitioning in [24,25].

7.1. Grid-Based Area Partitioning and Path Planning

A path-planning algorithm for single and multiple UAVs in different area shapes and decomposition (exact and cellular) in the presence of NFZ is proposed in [23]. To avoid NFZ, a new area partitioning method is presented. Using this method, the entire area is divided into equal subareas. Partition borders are set on grid cell borders to avoid omitting area cells.

Figure 6a shows the partitioning area without omitting any grid cells, whereas in Figure 6b, the partitioning border passes through grid cells which are subsequently omitted or ignored. The coverage quality will decrease when the boundary cells are omitted, as in [24,25]. In each partitioned area, a grid is formed, and a graph is then generated using the selected vertices. A sub-graph is created by weighing all edges in the graph. The path is optimized by removing insignificant edges and nodes using a filtering technique. The goal is to avoid NFZs and enable UAVs to plan paths with the minimum energy consumption, number of turns, and completion time while increasing the quality of coverage.

An area allocation algorithm based on a clustering method and a graph method is proposed by [26]. The partitioning algorithm allows the UAVs to plan paths that deal with obstacles and NFZs within the area of interest. This algorithm provides collision-free subareas without the need to perform visibility tests. The planned paths within each subarea are evaluated by their energy consumption and time to completion.

In [27], the top view of the area is divided into grids and the paths are planned using the energy-aware grid-based coverage path planning (EG-CPP) algorithm. This algorithm helps in planning coverage paths over irregularly shaped areas while minimizing energy consumption. This algorithm plans collision-free paths that avoid NFZ and 2D obstacles in an area for a single UAV.

7.2. Optimization Algorithms

The parallel self-adaptive ant colony optimization algorithm (PSAACO) for coverage path planning is presented in [126]. PSAACO aims to minimize energy consumption and completion time while considering various factors and employs grid mapping, inversion, and insertion operators, self-adaptive parameter tuning, and parallel computing to achieve this goal. The authors also propose the dynamic Floyd algorithm (DFA) to efficiently avoid non-flying zones (NFZs) in coverage path planning (CPP) problems. They demonstrate the effectiveness of PSAACO and DFA through experiments.

A simultaneously transmitting and reflecting reconfigurable intelligent surface (STAR-RIS) is explored in [127] as a way to enhance UAV-enabled wireless communication networks (WPCNs) to avoid NFZs in an area of interest. Moreover, an alternating optimization (AO) algorithm, combining the penalty method and successive convex approximation (SCA) techniques, is proposed to optimize the UAV trajectory, resource allocation, and beamforming vectors for the maximization of system performance.

The study in [128] addresses dynamic factors such as NFZs and reappearing targets, using a preprocessing and group-crossover non-dominated sort genetic algorithm II (PGC-NSGAII) for optimized UAV deployment, demonstrating superior performance.

An obstacle avoidance-enabled consensus (OAEC) algorithm that integrates NFZ avoidance into UAV path planning is presented in [129]. This algorithm extends the standard consensus approach by adding a mechanism to consider NFZs. When UAVs are near NFZs, temporary target points are generated within safe zones to guide them around the NFZs. This ensures that UAVs maintain a safe distance from restricted areas while achieving their mission objectives. The multi-step particle swarm optimization (MPSO) algorithm is used to determine waypoints that avoid NFZs, ensuring that the UAV formation paths are both efficient and safe.

An approximation algorithm iteratively constructs flying tours for each UAV by solving an orienteering problem, ensuring that the total reward collected is maximized within the UAV’s energy capacity in [130]. This involves defining a reward function for each point of interest (PoI) and optimizing the UAVs’ paths to maximize the sum of these rewards while respecting energy constraints. It focuses on optimizing UAV paths to maximize monitoring rewards while adhering to energy constraints, which involves consideration of obstacle and NFZ avoidance to ensure feasible and efficient paths.

7.3. ML and AI Methods

A multi-agent reinforcement learning approach, which involves training UAVs to recognize NFZs as areas to avoid during their trajectory optimization process, is presented in [131]. NFZs are incorporated into the simulated environment as obstacles or areas with negative rewards. The UAVs learn to avoid these zones as part of their path optimization strategy. By continuously interacting with the environment, UAVs adapt their paths dynamically to find the most efficient routes that circumvent NFZs.

Markov decision process (MDP) is employed in [132] to model the decision-making process for UAVs, and NFZs are integrated into the model as high-cost areas and UAVs are effectively guided to avoid them. NFZs are associated with high penalty costs in the cost function, encouraging UAVs to choose alternate paths that maintain formation while steering clear of these restricted areas. UAVs use predicted state vectors to assess future positions and avoid NFZs by selecting paths with the lowest associated penalties.

The authors in [133] focus on designing robust trajectories and resource allocation strategies for UAV-enabled wireless networks to maximize spectral efficiency while addressing practical challenges like UAV positioning errors and NFZs. This deep learning framework effectively models UAV trajectory and resource allocation, ensuring reliable NFZ avoidance.

7.4. Search and Heuristic Algorithms

To integrate UAV navigation safely and efficiently into smart cities, a modified A* search algorithm is presented in [134] to enhance using the Haversine and Vincenty formulas to avoid NFZs and calculate distances on Earth’s surface, accounting for its elliptical shape.

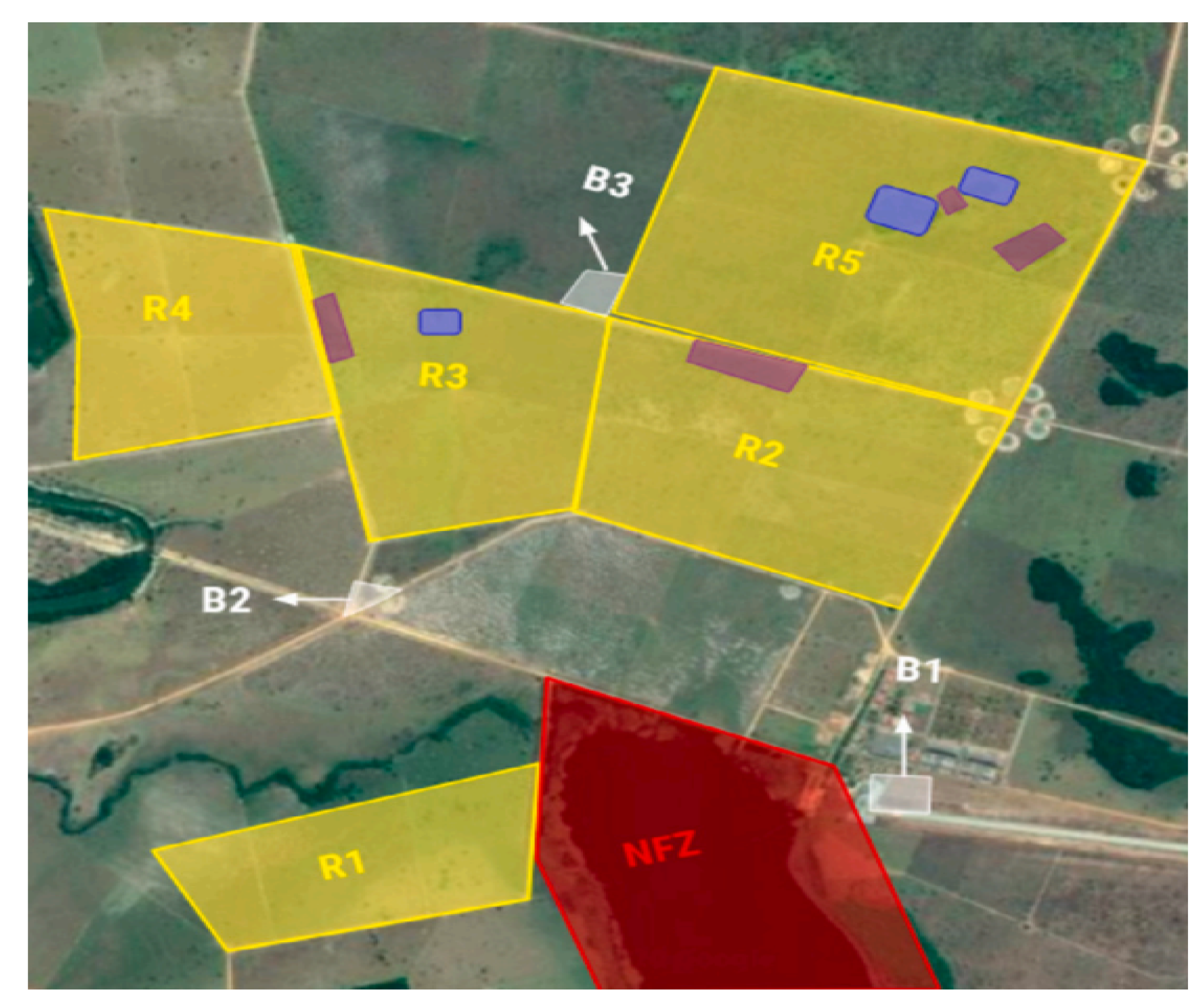

The RRT algorithm is employed in [135] to ensure that waypoints are validated against NFZ boundaries. By casting rays, the algorithm checks for intersections with NFZs and adjusts paths accordingly. Probabilistic constraints are implemented to handle uncertainties in the environment, ensuring a higher probability that UAVs avoid NFZs (Figure 7). This involves adjusting the potential fields based on the likelihood of encountering NFZs.

Figure 7.

Farm scenarios for planning tasks: regions of interest, non-flying zones, and support bases in [135].

7.5. Novel and Hybrid Approaches

Dynamic geo-fences are used in [136] to manage UAV paths in real-time, particularly when unexpected NFZs arise. This system enables UAVs to autonomously adjust their paths in response to new geo-fences.

In [137], a zoning system with government- and user-controlled authorization zones regulates drone fly-overs, using attribute-based access control (ABAC) and a variation of the A* algorithm for dynamic path planning.

The study in [138] investigates the design of UAV trajectories and user assignments for downlink data transmission networks while strictly adhering to multiple NFZs.

Similarly, a collision avoidance algorithm for UAVs is presented in [139] that ensures minimum separation between UAVs and obstacles, including multiple NFZs, using differential geometry to calculate the necessary heading angle changes. This method efficiently navigates complex environments by avoiding NFZs.

A navigation and collision avoidance framework using an improved deep Q-network (DQN) and faster R-CNN model allows UAVs to autonomously avoid obstacles in [140], demonstrating superior performance in simulated 3D environments.

The challenge faced by existing mathematical approaches in avoiding NFZs throughout their UAV paths is addressed in [141]. The conventional method only ensures that UAVs avoid NFZs at discrete points. To overcome this limitation, the authors introduce a new constraint for expanded NFZs that ensures complete avoidance.

Different works on ODA and NFZ avoidance evaluated the performance of their algorithms or methods using different KPIs. The energy, time, and quality of coverage were known as the main KPIs and, along with the factors of path length and turning angle, are all presented in the following section.

8. Key Performance Indicators

Key performance indicators (KPIs) are essential for evaluating the effectiveness of a UAV path-planning approach. These indicators include path length, number of turns, turning angles, rotational rate, mission completion time, UAV energy consumption, mission cost, and coverage quality. Several models for energy consumption and mission completion time have been proposed in the literature [6,23,38,142]. Additionally, alternative models for completion time are available in [143,144], providing further evaluation methods for route efficiency.

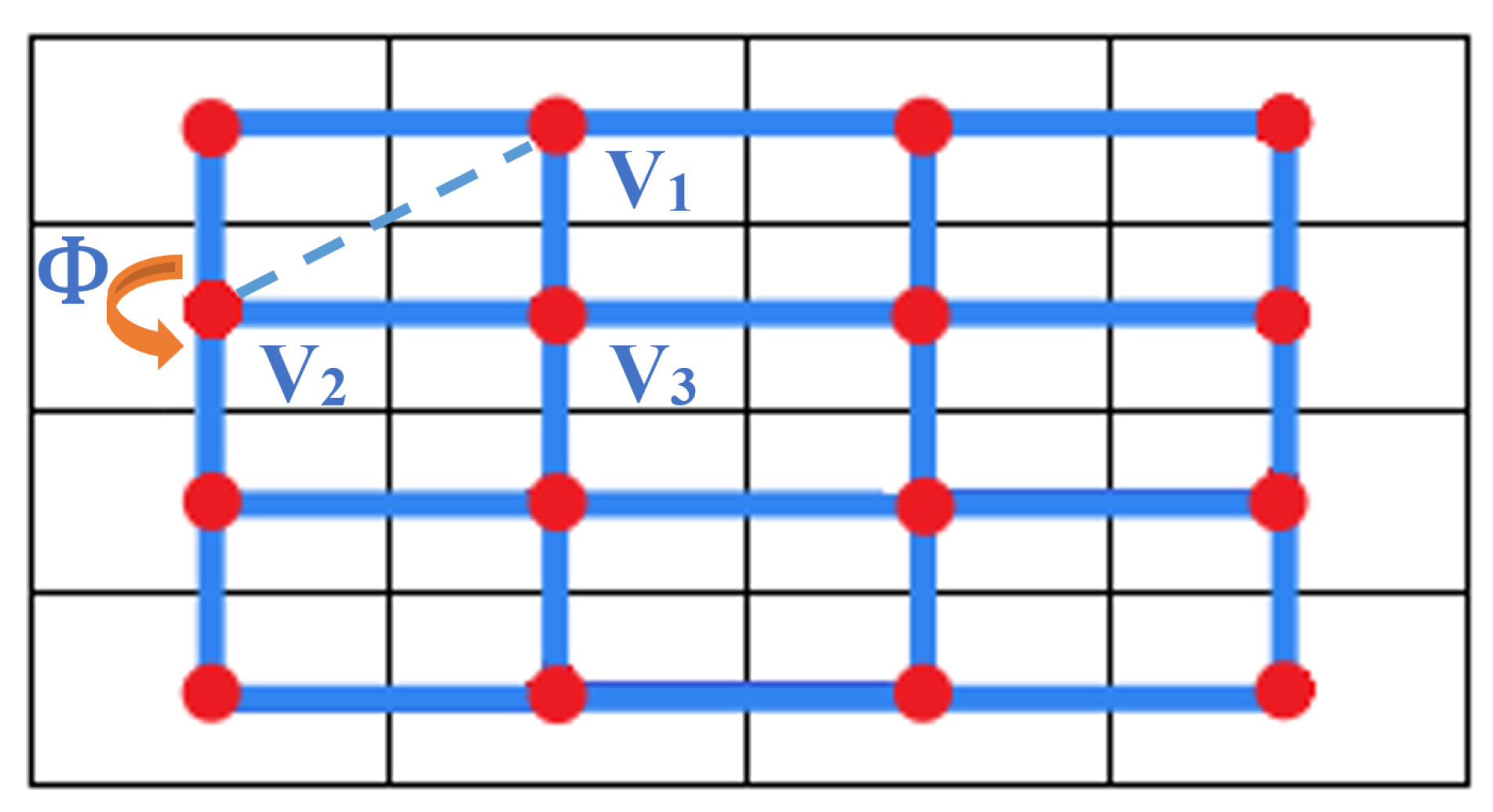

In a grid-based representation, the environment is modeled as a graph , where V denotes the set of vertices and E represents the set of edges. Each vertex corresponds to the center of a grid cell and is defined by its coordinates in a Cartesian plane, as illustrated in Figure 8.

Figure 8.

Grid and graph representation: vertices (red points) and edges (blue lines) [4].

The power consumption of a UAV is influenced by the rate at which it turns [23]. Minimizing the number of turns throughout the mission is therefore an important consideration. If a UAV moves from vertex to and turns before proceeding to (see Figure 8), then the turning angle can be calculated as follows:

where is determined using the law of cosines in . The angle can also be calculated using the Euclidean distances between vertices:

where represents the Euclidean distance between vertices and .

To compute the Euclidean distance between two nodes u and v, the following formula is used:

where and are the coordinates of nodes u and v, respectively.

In UAV path planning, optimizing trajectory length and mission completion time is a critical aspect. The mission completion time accounts for both flight and hovering durations. A commonly used formula for mission completion time [6] is given by

where ⊤ represents the path length, is the UAV speed, ℧ is the number of turns, is the angle of the th turn, and denotes the UAV rotation rate.

The energy consumption model follows the approach in [6]. The energy cost for traversing an edge between nodes u and v is

where denotes the energy consumption per unit distance, and is the Euclidean distance between the nodes. The energy required for making a turn is given by

where represents the energy consumption per degree of turning angle. The values of these parameters should be selected based on the specific application and validated against values reported in the literature for comparison.

The total energy consumption E is expressed as the sum of distance-based and turn-based energy components:

Another critical KPI is the quality of coverage (QoC), which evaluates the percentage of the area covered by the planned path, especially in NFZ avoidance scenarios. The QoC is defined as [23,24,25]

where is the number of covered non-zero grid cells, and is the total number of non-zero cells (excluding NFZ cells).

9. Review and Analysis

A successful ODA strategy consists of several key stages, as illustrated in Figure 9. UAVs are often deployed for missions involving scanning, coverage, or navigation through a designated area. To define the flight path, the mission plan is typically designed using a 2D or 3D map, which provides spatial awareness of the environment.

Figure 9.

ODA Process Steps.

UAVs must detect and avoid static and dynamic obstacles. Pre-known obstacles are handled via offline planning, while unexpected ones require real-time avoidance. Upon detection, the UAV adjusts its trajectory and resumes its path. Obstacle detection relies on sensors like IR, LiDAR, ultrasonic, RGB-D, or stereo cameras, each varying in cost, weight, power, range, and 3D imaging capability [67].

Avoidance is influenced by energy consumption, flight duration, path efficiency, turning angles, and environmental factors like wind. These challenges intensify in complex 3D environments with dynamic obstacles, requiring adaptive, real-time decision-making.

9.1. ODA Approaches Review and Analysis

In this section, we compare and analyze the ODA approaches reviewed in this survey based on different criteria. Table 12 presents this comparison and includes information on the types of obstacles the approach is designed to detect and avoid (static, dynamic, or both), the environment in which the approach is intended to operate (2D or 3D), the key performance metrics that are considered, whether experiments or testing were conducted in real-life or on a simulator, and if paths are planned in offline or online mode. Table 13 illustrates a timeline of ODA methods used in UAVs over the last decade based on recent advancements.

Table 12.

Comparison between approaches related to ODA for UAVs.

Table 13.

Timeline for the evolution of the ODA approaches in the last decade based on the recent advances in UAVs.

The present survey reveals that the majority of the works (66%) detected and avoided obstacles in a 3D environment. On the other hand, 28% relied on a 2D environment, while 6% used a combination of 2D and 3D, which is more realistic.

In addition, there are few energy-aware approaches (7.35%) that require more attention to be paid to this key performance indicator by researchers, as it plays a key role in every mission. The static obstacle type is covered by 29.4% of the approaches, with dynamic obstacles being detected and avoided in 23.5% of the works; however, both dynamic and static obstacles are taken into consideration in 47% of the approaches. The majority of approaches (78%) equipped UAVs with various types of sensors, while 22% of works did not use sensors or assumed that obstacle data were already known. Maps were used in 71.5% of approaches to detect and avoid obstacles.

As for the path planning mode, 47% of the work was conducted online and 7.35% offline, 13.35% of the work combined online and offline planning, and 32.35% did not provide any information. Out of all these paths, 21.7% were tested in real life, 50% in simulators, and 28.3% in both.

It is important to note that using simulators alone does not guarantee that the methodologies will perform effectively and accurately in real-life scenarios. Out of all the works, 42.65% underwent testing in real-life scenarios or both in simulation and real life. This had an impact on their maturity level, which is determined by the method’s success in real life.

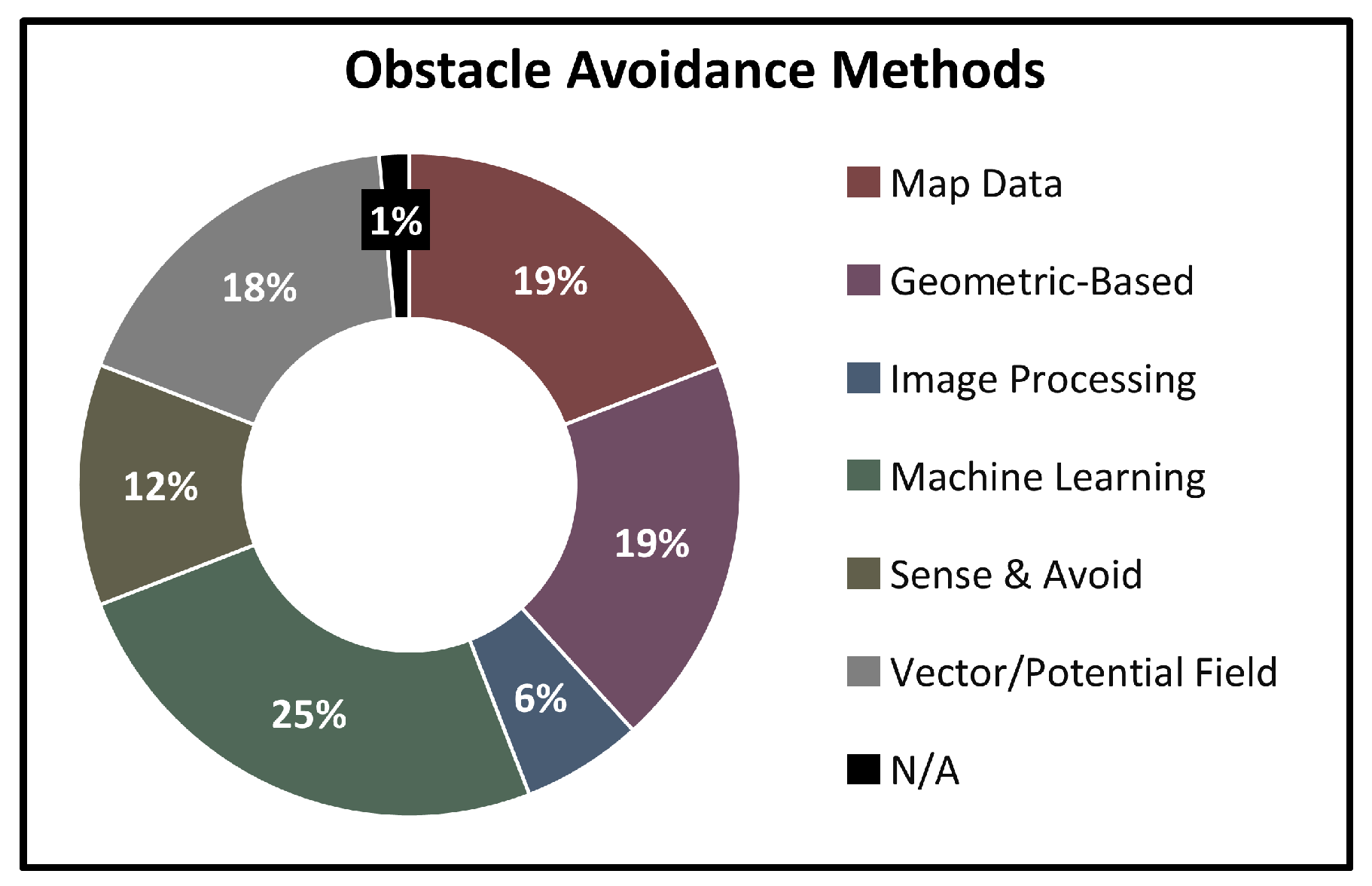

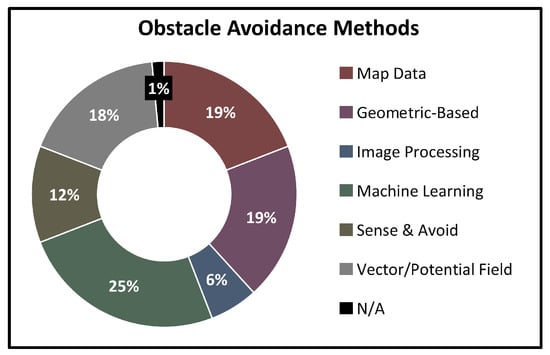

From the data presented in Figure 10, we see that a significant portion of the works reviewed in this study proposed methods for avoiding obstacles during autonomous navigation. Specifically, 25% of the works relied on machine learning techniques and 19% on map data to avoid obstacles. Furthermore, 19% of the works utilized geometrical-based methods, which may involve the use of geometric shapes or mathematical calculations to determine the location and path of obstacles. In 19% of the works, the use of vector or potential fields was involved in guiding the movement of the autonomous system around obstacles.

Figure 10.

Distribution of obstacle avoidance methods.

On the other hand, 12% of the approaches employed sense and avoid methods, in which the autonomous system is equipped with sensors that allow it to detect and avoid obstacles. In addition, 6% of the works used image processing techniques to identify and avoid obstacles, while 1% of the works did not specify any details about the method they used. Table 13 presents a timeline of ODA methods used for UAVs from 2015 to 2024. The ODA methods are classified into categories such as machine learning and AI, sensor fusion, SLAM, reactive control, bio-inspired, collaborative UAV systems, single-sensor navigation, energy-aware, perception, and computer vision and VR.

Notable progress includes the emergence of machine learning and ai studies between 2019 and 2024; perception algorithms from 2015 to 2024; sensor fusion advancements in 2015, 2017, 2018, 2020, 2021, and 2024; and reactive control studies in 2017, 2018, and 2020–2024. Additionally, SLAM-based works present in the years 2015, 2018, 2019, 2023, and 2024. Single-sensor navigation works appeared in the years between 2017 and 2019. The table provides a comprehensive overview of the evolution of ODA techniques in UAVs over the past 10 years.

9.2. NFZ Avoidance Approaches Review and Analyses

Based on various criteria, we compare and analyze the approaches reviewed in this survey for avoiding NFZs. Table 14 compares these approaches based on the type of area decomposition used, the number of NFZs avoided, the method used to partition the area, the number of UAVs involved, and the KPIs used to evaluate the approaches.

Table 14.

Comparison between approaches related to NFZ avoidance for UAVs.

Around 28.6% of the works used approximate cellular decomposition and grid division. Of these approaches, 9.5% used exact and approximate area decomposition, with the application of grid sub-division techniques in 4.8% of all works. In 47.6% of the works, grid-based techniques are applied, and 19% used other techniques.

However, 66.66% and 23.8% of all the works did not include any information about area cellular decomposition and decomposition technique, respectively. Out of all the approaches considered, 76.2% avoided one NFZ within the area, while 28.6% only avoided NFZs around the area. However, 71.4% of the approaches were able to avoid more than one NFZ within the area.

Additionally, 43% of the reviewed works did not provide or use an existing partitioning algorithm for the area of interest to avoid NFZs. Among the works, only 19% mentioned that the area partitioning algorithm did not exclude cells that lie under the partitioning border.

Only 4.8% of the approaches provided an NFZ avoidance algorithm that could be used for a single as well as multiple UAVs. It should be noted that only 38.1% of the approaches were energy aware, and 85.7% of the works considered the completion time in their approach. Moreover, 28.6% of the works took the quality of coverage as a KPI. Additionally, 14.3% of the studies did not consider these three main KPIs.

10. Conclusions and Future Scope

ODA is a fundamental aspect of UAV operations, ensuring safe and efficient navigation in complex environments. This survey has provided a detailed analysis of ODA techniques, categorizing methods based on obstacle type, environmental dimensionality, sensor integration, and path planning strategies. Our findings indicate that while most studies (66%) focus on 3D environments, fewer than half (47%) comprehensively address static and dynamic obstacles. Furthermore, energy-aware solutions remain a significant research gap, with only 7.35% of ODA studies considering energy efficiency to be a critical factor in UAV mission endurance and sustainability. In addition, NFZ avoidance is less studied than ODA, with a limited focus on energy efficiency (38.1%), coverage quality (28.6%), and multi-UAV handling (4. 8%), highlighting a notable gap in tackling the NFZ avoidance topic.

Given these findings, future research should focus on developing energy-efficient ODA methods that reduce energy consumption while ensuring safe and effective navigation. NFZ avoidance, with an emphasis on energy efficiency, coverage quality, and multi-UAV coordination, would help address the existing gaps and contribute to more robust and sustainable UAV mission strategies.

The insights presented in this survey provide a foundation for future research, fostering the development of more advanced and efficient UAV navigation systems.

Author Contributions

Conceptualization, A.M. and A.G.; validation, A.M., H.M., A.G. and D.R.; formal analysis, A.M. and A.G.; data curation, A.M.; writing—original draft preparation, A.M.; writing—review and editing, A.M., H.M., A.G. and D.R.; visualization, A.M.; supervision, H.M. and A.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Chaurasia, R.; Mohindru, V. Unmanned aerial vehicle (UAV): A comprehensive survey. In Unmanned Aerial Vehicles for Internet of Things (IoT) Concepts, Techniques, and Applications; Wiley: Hoboken, NJ, USA, 2021; pp. 1–27. [Google Scholar]

- Collins, L.; Ghassemi, P.; Esfahani, E.T.; Doermann, D.; Dantu, K.; Chowdhury, S. Scalable coverage path planning of multi-robot teams for monitoring non-convex areas. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 7393–7399. [Google Scholar]

- Veramendi, W.N.C.; Cruvinel, P.E. Algorithm for the Countering Maize Plants Based on UAV, Digital Image Processing and Semantic Modeling. In Proceedings of the IEEE 15th International Conference on Semantic Computing (ICSC), Laguna Hills, CA, USA, 27–29 January 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 393–397. [Google Scholar]

- Merei, A.; Mcheick, H.; Ghaddar, A. Survey on Path Planning for UAVs in Healthcare Missions. J. Med. Syst. 2023, 47, 79. [Google Scholar] [CrossRef] [PubMed]

- Wang, H.; Zhang, S.; Zhang, X.; Zhang, X.; Liu, J. Near-Optimal 3-D Visual Coverage for Quadrotor Unmanned Aerial Vehicles Under Photogrammetric Constraints. IEEE Trans. Ind. Electron. 2021, 69, 1694–1704. [Google Scholar] [CrossRef]

- Ghaddar, A.; Merei, A. Energy-Aware Grid Based Coverage Path Planning for UAVs. In Proceedings of the Thirteenth International Conference on Sensor Technologies and Applications SENSORCOMM, Nice, France, 27–31 October 2019; pp. 34–45. [Google Scholar]

- Hassan, J.; Bokani, A.; Kanhere, S.S. Recharging of flying base stations using airborne rf energy sources. In Proceedings of the IEEE Wireless Communications and Networking Conference Workshop (WCNCW), Marrakech, Morocco, 15–19 April 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–6. [Google Scholar]

- Alcántara, A.; Capitán, J.; Cunha, R.; Ollero, A. Optimal trajectory planning for cinematography with multiple unmanned aerial vehicles. Robot. Auton. Syst. 2021, 140, 103778. [Google Scholar] [CrossRef]

- Munawar, H.S.; Hammad, A.W.; Waller, S.T.; Thaheem, M.J.; Shrestha, A. An integrated approach for post-disaster flood management via the use of cutting-edge technologies and UAVs: A review. Sustainability 2021, 13, 7925. [Google Scholar] [CrossRef]

- Wenguang, L.; Zhiming, Z. Intelligent surveillance and reconnaissance mode of police UAV based on grid. In Proceedings of the 7th International Symposium on Mechatronics and Industrial Informatics (ISMII), Zhuhai, China, 22–24 January 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 292–295. [Google Scholar]

- Shobeiry, P.; Xin, M.; Hu, X.; Chao, H. UAV path planning for wildfire tracking using partially observable Markov decision process. In Proceedings of the AIAA Scitech 2021 Forum, Virtual, 11–21 January 2021; p. 1677. [Google Scholar]

- Cabreira, T.M.; Brisolara, L.B.; Paulo R, F.J. Survey on coverage path planning with unmanned aerial vehicles. Drones 2019, 3, 4. [Google Scholar] [CrossRef]

- Zear, A.; Ranga, V. Path planning of unmanned aerial vehicles: Current state and future challenges. In Proceedings of the First International Conference on Sustainable Technologies for Computational Intelligence, Las Vegas, NV, USA, 16–18 December 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 409–419. [Google Scholar]

- Israr, A.; Ali, Z.A.; Alkhammash, E.H.; Jussila, J.J. Optimization Methods Applied to Motion Planning of Unmanned Aerial Vehicles: A Review. Drones 2022, 6, 126. [Google Scholar] [CrossRef]

- Huang, H.; Savkin, A.V. Towards the internet of flying robots: A survey. Sensors 2018, 18, 4038. [Google Scholar] [CrossRef]

- Yasin, J.N.; Mohamed, S.A.; Haghbayan, M.H.; Heikkonen, J.; Tenhunen, H.; Plosila, J. Unmanned aerial vehicles (uavs): Collision avoidance systems and approaches. IEEE Access 2020, 8, 105139–105155. [Google Scholar] [CrossRef]

- Pham, H.; Smolka, S.A.; Stoller, S.D.; Phan, D.; Yang, J. A survey on unmanned aerial vehicle collision avoidance systems. arXiv 2015, arXiv:1508.07723. [Google Scholar]

- Tang, J.; Lao, S.; Wan, Y. Systematic review of collision-avoidance approaches for unmanned aerial vehicles. IEEE Syst. J. 2021, 16, 4356–4367. [Google Scholar] [CrossRef]

- Dushime, K.; Nkenyereye, L.; Yoo, S.K.; Song, J. A Review on Collision Avoidance Systems for Unmanned Aerial Vehicles. In Proceedings of the International Conference on Information and Communication Technology Convergence (ICTC), Jeju Island, Republic of Korea, 20–22 October 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1150–1155. [Google Scholar]

- Do, H.T.; Hua, H.T.; Nguyen, M.T.; Nguyen, C.V.; Nguyen, H.T.; Nguyen, H.T.; Nguyen, N.T. Formation control algorithms for multiple-uavs: A comprehensive survey. EAI Endorsed Trans. Ind. Netw. Intell. Syst. 2021, 8, e3. [Google Scholar] [CrossRef]

- Sharma, A.; Shoval, S.; Sharma, A.; Pandey, J.K. Path planning for multiple targets interception by the swarm of UAVs based on swarm intelligence algorithms: A review. IETE Tech. Rev. 2022, 39, 675–697. [Google Scholar] [CrossRef]

- Kewang, Z.; Tenghuan, D. Research on Obstacle Avoidance Control Method of Multi-UAV Based on Model Predictive Control. In Proceedings of the International Conference on Electronics, Circuits and Information Engineering (ECIE), Zhengzhou, China, 22–24 January 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 357–362. [Google Scholar]

- Ghaddar, A.; Merei, A.; Natalizio, E. PPS: Energy-Aware Grid-Based Coverage Path Planning for UAVs Using Area Partitioning in the Presence of NFZs. Sensors 2020, 20, 3742. [Google Scholar] [CrossRef] [PubMed]

- Valente, J.; Del Cerro, J.; Barrientos, A.; Sanz, D. Aerial coverage optimization in precision agriculture management: A musical harmony inspired approach. Comput. Electron. Agric. 2013, 99, 153–159. [Google Scholar] [CrossRef]

- Barrientos, A.; Colorado, J.; Cerro, J.d.; Martinez, A.; Rossi, C.; Sanz, D.; Valente, J. Aerial remote sensing in agriculture: A practical approach to area coverage and path planning for fleets of mini aerial robots. J. Field Robot. 2011, 28, 667–689. [Google Scholar] [CrossRef]

- Ann, S.; Kim, Y.; Ahn, J. Area allocation algorithm for multiple UAVs area coverage based on clustering and graph method. IFAC-PapersOnLine 2015, 48, 204–209. [Google Scholar] [CrossRef]

- Cabreira, T.M.; Ferreira, P.R.; Di Franco, C.; Buttazzo, G.C. Grid-based coverage path planning with minimum energy over irregular-shaped areas with UAVs. In Proceedings of the International Conference on Unmanned Aircraft Systems (ICUAS), Atlanta, GA, USA, 11–14 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 758–767. [Google Scholar]

- Wu, Y.; Gou, J.; Hu, X.; Huang, Y. A new consensus theory-based method for formation control and obstacle avoidance of UAVs. Aerosp. Sci. Technol. 2020, 107, 106332. [Google Scholar] [CrossRef]

- Dawnee, S.; Kumar, M.M.S.; Jayanth, S.; Singh, V.K. Experimental performance evaluation of various path planning algorithms for obstacle avoidance in UAVs. In Proceedings of the 3rd International Conference on Electronics, Communication and Aerospace Technology (ICECA), Coimbatore, India, 12–14 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1029–1034. [Google Scholar]

- Radmanesh, M.; Kumar, M.; Guentert, P.H.; Sarim, M. Overview of path-planning and obstacle avoidance algorithms for UAVs: A comparative study. Unmanned Syst. 2018, 6, 95–118. [Google Scholar] [CrossRef]

- Madridano, Á.; Al-Kaff, A.; Flores, P.; Martín, D.; de la Escalera, A. Software Architecture for Autonomous and Coordinated Navigation of UAV Swarms in Forest and Urban Firefighting. Appl. Sci. 2021, 11, 1258. [Google Scholar] [CrossRef]

- Khan, M.T.R.; Muhammad Saad, M.; Ru, Y.; Seo, J.; Kim, D. Aspects of unmanned aerial vehicles path planning: Overview and applications. Int. J. Commun. Syst. 2021, 34, e4827. [Google Scholar] [CrossRef]

- Lu, Y.; Xue, Z.; Xia, G.S.; Zhang, L. A survey on vision-based UAV navigation. Geo-Spat. Inf. Sci. 2018, 21, 21–32. [Google Scholar] [CrossRef]

- Norland, K.; Marcellin, M.W. Dynamic obstacle characterization and avoidance for unmanned aerial systems. In Proceedings of the International Telemetering Conference, Glendale, AZ, USA, 5–8 November 2018. [Google Scholar]

- Ahmed, S.; Qiu, B.; Ahmad, F.; Kong, C.W.; Xin, H. A State-of-the-Art Analysis of Obstacle Avoidance Methods from the Perspective of an Agricultural Sprayer UAV’s Operation Scenario. Agronomy 2021, 11, 1069. [Google Scholar] [CrossRef]

- Wang, L.; Lan, Y.; Zhang, Y.; Zhang, H.; Tahir, M.N.; Ou, S.; Liu, X.; Chen, P. Applications and prospects of agricultural unmanned aerial vehicle obstacle avoidance technology in China. Sensors 2019, 19, 642. [Google Scholar] [CrossRef] [PubMed]

- Fevgas, G.; Lagkas, T.; Argyriou, V.; Sarigiannidis, P. Coverage path planning methods focusing on energy efficient and cooperative strategies for unmanned aerial vehicles. Sensors 2022, 22, 1235. [Google Scholar] [CrossRef]

- Ghaddar, A.; Merei, A. EAOA: Energy-Aware Grid-Based 3D-Obstacle Avoidance in Coverage Path Planning for UAVs. Future Internet 2020, 12, 29. [Google Scholar] [CrossRef]

- Yaghmaee, F.; Koohi, H.R. Dynamic obstacle avoidance by distributed algorithm based on reinforcement learning. Int. J. Eng. (IJE) Trans. B Appl. 2015, 28, 198–205. [Google Scholar]

- Zhao, R.; Sidobre, D. Dynamic Obstacle avoidance using online trajectory time-scaling and local replanning. In Proceedings of the 2015 12th International Conference on Informatics in Control, Automation and Robotics (ICINCO), Colmar, France, 21–23 July 2015; IEEE: Piscataway, NJ, USA, 2015; Volume 2, pp. 414–421. [Google Scholar]

- Zhao, L. 3D Obstacle Avoidance for Unmanned Autonomous System (UAS). Master’s Thesis, University of Nevada, Las Vegas, NV, USA, 2015. [Google Scholar]

- Liu, C.; Wang, H.; Yao, P. UAV Autonomous Collision Avoidance Method Based on Three-dimensional Dynamic Collision Region Model and Interfered Fluid Dynamical System’. In Proceedings of the International Conference on Control And Automation, Bandung, Indonesia, 29–31 August 2016; pp. 263–269. [Google Scholar]

- Huang, S.; Teo, R.S.H.; Tan, K.K. Collision avoidance of multi unmanned aerial vehicles: A review. Annu. Rev. Control 2019, 48, 147–164. [Google Scholar] [CrossRef]

- Delamer, J.A.; Watanabe, Y.; Chanel, C.P. Safe path planning for UAV urban operation under GNSS signal occlusion risk. Robot. Auton. Syst. 2021, 142, 103800. [Google Scholar] [CrossRef]

- UCL. DepthmapX: Visual and Spatial Network Analysis Software; The Bartlett School of Architecture: London, UK, 2020; Available online: https://www.ucl.ac.uk/bartlett/architecture/research/space-syntax/depthmapx (accessed on 3 March 2025).

- Singla, A.; Padakandla, S.; Bhatnagar, S. Memory-based deep reinforcement learning for obstacle avoidance in UAV with limited environment knowledge. IEEE Trans. Intell. Transp. Syst. 2019, 22, 107–118. [Google Scholar] [CrossRef]

- Vanneste, S.; Bellekens, B.; Weyn, M. 3DVFH+: Real-time three-dimensional obstacle avoidance using an Octomap. In Proceedings of the MORSE Model-Driven Robot Software Engineering: 1st International Workshop on Model-Driven Robot Software Engineering Co-Located with International Conference on Software Technologies: Applications and Foundations (STAF 2014), York, UK, 21 July 2014; Assmann, U., Ed.; 2014. Number 1319. pp. 91–102. [Google Scholar]

- Labbé, M.; Michaud, F. RTAB-Map as an open-source lidar and visual simultaneous localization and mapping library for large-scale and long-term online operation. J. Field Robot. 2019, 36, 416–446. [Google Scholar] [CrossRef]

- Wu, W.; Chen, B.; Liu, D. Simulation of UAV-Based Indoor Mapping for Multi-Story Building Using a RGB-D SLAM Solution. In Proceedings of the 2024 IEEE International Instrumentation and Measurement Technology Conference (I2MTC), Glasgow, UK, 20–23 May 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–6. [Google Scholar]

- Plascencia, A.C.; Beran, V.; Sedlmajer, K. Drone Sensory Data Processing for Advanced Drone Control for Augmented Reality. 2019. Available online: https://www.fit.vut.cz/research/publication/12409 (accessed on 3 March 2025).

- Elfes, A. Occupancy grids: A stochastic spatial representation for active robot perception. arXiv 2013, arXiv:1304.1098. [Google Scholar]

- Tai, J.J.; Phang, S.K.; Wong, F.Y.M. COAA*—An Optimized Obstacle Avoidance and Navigational Algorithm for UAVs Operating in Partially Observable 2D Environments. Unmanned Syst. 2022, 10, 159–174. [Google Scholar] [CrossRef]

- Peng, P.; Dong, W.; Chen, G.; Zhu, X. Obstacle Avoidance of Resilient UAV Swarm Formation with Active Sensing System in the Dense Environment. arXiv 2022, arXiv:2202.13381. [Google Scholar]

- Baeldung. Disparity map in Stereo Vision. Baeldung on Computer Science. 2022. Available online: https://www.baeldung.com/cs/disparity-map-stereo-vision (accessed on 3 March 2025).

- Gullotti, A. 3D Computer Graphics for Digital Humanities: Experiencing 1500–1600 Pavia’s Cultural Heritage Through the Digital Eye. Master’s Thesis, The University of Pavia, Pavia, Italy, 2020. [Google Scholar]

- Hornung, A.; Wurm, K.M.; Bennewitz, M.; Stachniss, C.; Burgard, W. OctoMap: An Efficient Probabilistic 3D Mapping Framework Based on Octrees. Auton. Robot. 2013, 34, 189–206. [Google Scholar] [CrossRef]

- Ruf, B.; Monka, S.; Kollmann, M.; Grinberg, M. Real-time on-board obstacle avoidance for UAVs based on embedded stereo vision. arXiv 2018, arXiv:1807.06271. [Google Scholar] [CrossRef]

- Han, D.; Yang, Q.; Wang, R. Three-dimensional obstacle avoidance for UAV based on reinforcement learning and RealSense. J. Eng. 2020, 2020, 540–544. [Google Scholar] [CrossRef]

- Yaoming, Z.; Yu, S.; Anhuan, X.; Lingyu, K. A newly bio-inspired path planning algorithm for autonomous obstacle avoidance of UAV. Chin. J. Aeronaut. 2021, 34, 199–209. [Google Scholar]

- Iacono, M.; Sgorbissa, A. Path following and obstacle avoidance for an autonomous UAV using a depth camera. Robot. Auton. Syst. 2018, 106, 38–46. [Google Scholar] [CrossRef]

- Chen, Z.; Luo, X.; Dai, B. Design of obstacle avoidance system for micro-uav based on binocular vision. In Proceedings of the International Conference on Industrial Informatics-Computing Technology, Intelligent Technology, Industrial Information Integration (ICIICII), Wuhan, China, 2–3 December 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 67–70. [Google Scholar]

- Stefansson, T. 3D Obstacle Avoidance for Drones Using a Realistic Sensor Setup; KTH: Stockholm, Sweden, 2018. [Google Scholar]

- Ferrera, E.; Alcántara, A.; Capitán, J.; Castaño, A.R.; Marrón, P.J.; Ollero, A. Decentralized 3d collision avoidance for multiple uavs in outdoor environments. Sensors 2018, 18, 4101. [Google Scholar] [CrossRef]

- Roghair, J.; Niaraki, A.; Ko, K.; Jannesari, A. A vision based deep reinforcement learning algorithm for uav obstacle avoidance. In Proceedings of the SAI Intelligent Systems Conference, Virtual, 2–3 September 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 115–128. [Google Scholar]

- Xue, Z.; Gonsalves, T. Vision Based Drone Obstacle Avoidance by Deep Reinforcement Learning. AI 2021, 2, 366–380. [Google Scholar] [CrossRef]

- Yu, Y.; Tingting, W.; Long, C.; Weiwei, Z. Stereo vision based obstacle avoidance strategy for quadcopter UAV. In Proceedings of the Chinese Control Furthermore, Decision Conference (CCDC), Shenyang, China, 9–11 June 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 490–494. [Google Scholar]

- Al-Kaff, A.; García, F.; Martín, D.; De La Escalera, A.; Armingol, J.M. Obstacle detection and avoidance system based on monocular camera and size expansion algorithm for UAVs. Sensors 2017, 17, 1061. [Google Scholar] [CrossRef] [PubMed]

- Yu, H.; Zhang, F.; Huang, P.; Wang, C.; Yuanhao, L. Autonomous obstacle avoidance for uav based on fusion of radar and monocular camera. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 25–29 October 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 5954–5961. [Google Scholar]

- Mori, T.; Scherer, S. First results in detecting and avoiding frontal obstacles from a monocular camera for micro unmanned aerial vehicles. In Proceedings of the IEEE International Conference on Robotics and Automation, Karlsruhe, Germany, 6–10 May 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 1750–1757. [Google Scholar]

- Park, B.; Oh, H. Vision-based obstacle avoidance for UAVs via imitation learning with sequential neural networks. Int. J. Aeronaut. Space Sci. 2020, 21, 768–779. [Google Scholar] [CrossRef]

- Lee, H.; Ho, H.W.; Zhou, Y. Deep learning-based monocular obstacle avoidance for unmanned aerial vehicle navigation in tree plantations. J. Intell. Robot. Syst. 2021, 101, 5. [Google Scholar] [CrossRef]

- Chen, H.; Lu, P.; Xiao, C. Dynamic obstacle avoidance for UAVs using a fast trajectory planning approach. In Proceedings of the IEEE International Conference on Robotics and Biomimetics (ROBIO), Dali, China, 6–8 December 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1459–1464. [Google Scholar]

- Santos, M.C.; Santana, L.V.; Brandao, A.S.; Sarcinelli-Filho, M. UAV obstacle avoidance using RGB-D system. In Proceedings of the 2015 International Conference on Unmanned Aircraft Systems (ICUAS), Denver, CO, USA, 9–12 June 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 312–319. [Google Scholar]

- Wang, D.; Li, W.; Liu, X.; Li, N.; Zhang, C. UAV environmental perception and autonomous obstacle avoidance: A deep learning and depth camera combined solution. Comput. Electron. Agric. 2020, 175, 105523. [Google Scholar] [CrossRef]

- Kuncha, P.; Sastry, A.; Chaitanya, M. Dynamic Route Planning and Obstacle Avoidance Model for Unmanned Aerial Vehicles. Int. J. Pure Appl. Math. 2017, 116, 315–329. [Google Scholar]

- Parappat, P.B.; Kumar, A.; Mittal, R.; Khan, S.A. Obstacle avoidance by unmanned aerial vehicles using image recognition techniques. In Proceedings of the International Conference on Circuits, Systems, Communications and Computers, San Francisco, CA, USA, 22–24 October 2014; Volume 1, pp. 378–381. [Google Scholar]

- Shin, S.Y.; Kang, Y.W.; Kim, Y.G. Obstacle avoidance drone by deep reinforcement learning and its racing with human pilot. Appl. Sci. 2019, 9, 5571. [Google Scholar] [CrossRef]

- Kim, D.H.; Go, Y.G.; Choi, S.M. First-person-view drone flying in mixed reality. In SIGGRAPH Asia 2018 Posters, Proceedings of the SA ’18: SIGGRAPH Asia 2018, Tokyo, Japan, 4–7 December 2018; Association for Computing Machinery: New York, NY, USA, 2018; pp. 1–2. [Google Scholar]

- Smolyanskiy, N.; Gonzalez-Franco, M. Stereoscopic first person view system for drone navigation. Front. Robot. AI 2017, 4, 11. [Google Scholar] [CrossRef]

- Tierney, B.B.; Rodenbeck, C.T. 3D-Sensing MIMO Radar for UAV Formation Flight and Obstacle Avoidance. In Proceedings of the IEEE Radio and Wireless Symposium (RWS), Orlando, FL, USA, 20–23 January 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–3. [Google Scholar]

- Potena, C.; Nardi, D.; Pretto, A. Joint vision-based navigation, control and obstacle avoidance for UAVs in dynamic environments. In Proceedings of the European Conference on Mobile Robots (ECMR), Prague, Czech Republic, 4–6 September 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–7. [Google Scholar]

- Xu, Y.; Zhu, M.; Xu, Y.; Liu, M. Design and implementation of UAV obstacle avoidance System. In Proceedings of the 2nd International Conference on Safety Produce Informatization (IICSPI), Chongqing, China, 28–30 November 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 275–278. [Google Scholar]

- Gageik, N.; Benz, P.; Montenegro, S. Obstacle detection and collision avoidance for a UAV with complementary low-cost sensors. IEEE Access 2015, 3, 599–609. [Google Scholar] [CrossRef]

- Gao, K.; Xu, K. Research on Autonomous Obstacle Avoidance Flight Path Planning of Rotating Wing UAV. In Proceedings of the International Conference on Computer Technology, Electronics and Communication (ICCTEC), Dalian, China, 19–21 December 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1200–1203. [Google Scholar]

- Kenny, T. CHAPTER 14—Optical and Radiation Sensors. In Sensor Technology Handbook; Wilson, J.S., Ed.; Burlington: Newnes, Australia, 2005; pp. 307–320. [Google Scholar] [CrossRef]

- McGrath, M.J.; Scanaill, C.N. Key Sensor Technology Components: Hardware and Software Overview. In Sensor Technologies: Healthcare, Wellness, and Environmental Applications; Apress: Berkeley, CA, USA, 2013; pp. 51–77. [Google Scholar] [CrossRef]

- Wilson, J.S. (Ed.) Chapter 1—Sensor Fundamentals. In Sensor Technology Handbook; Burlington: Newnes, Australia, 2005; pp. 1–20. [Google Scholar] [CrossRef]

- Wilson, J.S. (Ed.) Chapter 3—Measurement Issues and Criteria. In Sensor Technology Handbook; Burlington: Newnes, Australia, 2005; pp. 29–30. [Google Scholar] [CrossRef]

- Kester, W. Chapter 4—Sensor Signal Conditioning. In Sensor Technology Handbook; Wilson, J.S., Ed.; Burlington: Newnes, Australia, 2005; pp. 31–136. [Google Scholar] [CrossRef]

- Aszkler, C. Chapter 5—Acceleration, Shock and Vibration Sensors. In Sensor Technology Handbook; Wilson, J.S., Ed.; Burlington: Newnes, Australia, 2005; pp. 137–159. [Google Scholar] [CrossRef]

- Wilson, J.S. (Ed.) Chapter 15—Position and Motion Sensors. In Sensor Technology Handbook; Burlington: Newnes, Australia, 2005; pp. 321–409. [Google Scholar] [CrossRef]

- Bashir, N.; Boudjit, S.; Dauphin, G.; Zeadally, S. An obstacle avoidance approach for UAV path planning. Simul. Model. Pract. Theory 2023, 129, 102815. [Google Scholar] [CrossRef]

- Lin, Y.; Gao, F.; Qin, T.; Gao, W.; Liu, T.; Wu, W.; Yang, Z.; Shen, S. Autonomous aerial navigation using monocular visual-inertial fusion. J. Field Robot. 2018, 35, 23–51. [Google Scholar] [CrossRef]

- Xu, Z.; Zhan, X.; Chen, B.; Xiu, Y.; Yang, C.; Shimada, K. A real-time dynamic obstacle tracking and mapping system for UAV navigation and collision avoidance with an RGB-D camera. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 10645–10651. [Google Scholar]

- Du, H.; Wang, Z.; Zhang, X. Ef-ttoa: Development of a uav path planner and obstacle avoidance control framework for static and moving obstacles. Drones 2023, 7, 359. [Google Scholar] [CrossRef]

- Geng, Q.; Shuai, H.; Hu, Q. Obstacle avoidance approaches for quadrotor UAV based on backstepping technique. In Proceedings of the 25th Chinese Control and Decision Conference (CCDC), Guiyang, China, 25–27 May 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 3613–3617. [Google Scholar]

- Choi, M.; Rubenecia, A.; Shon, T.; Choi, H.H. Velocity obstacle based 3D collision avoidance scheme for low-cost micro UAVs. Sustainability 2017, 9, 1174. [Google Scholar] [CrossRef]

- Zhang, Z.; Liu, X.; Feng, B. Research on obstacle avoidance path planning of UAV in complex environments based on improved Bézier curve. Sci. Rep. 2023, 13, 16453. [Google Scholar] [CrossRef] [PubMed]

- Chakravarthy, A.; Ghose, D. Obstacle avoidance in a dynamic environment: A collision cone approach. IEEE Trans. Syst. Man Cybern.-Part A Syst. Hum. 1998, 28, 562–574. [Google Scholar] [CrossRef]

- Chakravarthy, A.; Ghose, D. Generalization of the collision cone approach for motion safety in 3-D environments. Auton. Robot. 2012, 32, 243–266. [Google Scholar] [CrossRef]

- Agnel Tony, L.; Ghose, D.; Chakravarthy, A. Unmanned aerial vehicle mid-air collision detection and resolution using avoidance maps. J. Aerosp. Inf. Syst. 2021, 18, 506–529. [Google Scholar] [CrossRef]

- Tony, L.A.; Ghose, D.; Chakravarthy, A. Avoidance maps: A new concept in uav collision avoidance. In Proceedings of the 2017 International Conference on Unmanned Aircraft Systems (ICUAS), Miami, FL USA, 13–16 June 2017; pp. 1483–1492. [Google Scholar]

- Ma, Z.; Wang, Z.; Ma, A.; Liu, Y.; Niu, Y. A Low-Altitude Obstacle Avoidance Method for UAVs Based on Polyhedral Flight Corridor. Drones 2023, 7, 588. [Google Scholar] [CrossRef]

- Trujillo, M.A.; Becerra, H.M.; Gómez-Gutiérrez, D.; Ruiz-León, J.; Ramírez-Treviño, A. Hierarchical task-based formation control and collision avoidance of UAVs in finite time. Eur. J. Control 2021, 60, 48–64. [Google Scholar] [CrossRef]

- Bay, H.; Ess, A.; Tuytelaars, T.; Van Gool, L. Speeded-up robust features (SURF). Comput. Vis. Image Underst. 2008, 110, 346–359. [Google Scholar] [CrossRef]

- Hu, J.; Niu, Y.; Wang, Z. Obstacle avoidance methods for rotor UAVs using RealSense camera. In Proceedings of the 2017 Chinese Automation Congress (CAC), Jinan, China, 20–22 October 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 7151–7155. [Google Scholar]

- Koenig, N.; Howard, A. Design and use paradigms for gazebo, an open-source multi-robot simulator. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) (IEEE Cat. No. 04CH37566), Sendai, Japan, 28 September–2 October 2004; IEEE: Piscataway, NJ, USA, 2004; Volume 3, pp. 2149–2154. [Google Scholar]

- Jiang, W.; Cai, T.; Xu, G.; Wang, Y. Autonomous obstacle avoidance and target tracking of UAV: Transformer for observation sequence in reinforcement learning. Knowl.-Based Syst. 2024, 290, 111604. [Google Scholar] [CrossRef]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 779–788. [Google Scholar]

- Aswini, N.; Uma, S. Custom Based Obstacle Detection Using Yolo v3 for Low Flying Drones. In Proceedings of the 2021 International Conference on Circuits, Controls and Communications (CCUBE), Bangalore, India, 23–24 December 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–6. [Google Scholar]

- Jiang, C.; Ren, H.; Ye, X.; Zhu, J.; Zeng, H.; Nan, Y.; Sun, M.; Ren, X.; Huo, H. Object detection from UAV thermal infrared images and videos using YOLO models. Int. J. Appl. Earth Obs. Geoinf. 2022, 112, 102912. [Google Scholar] [CrossRef]

- Han, X.; Wang, J.; Xue, J.; Zhang, Q. Intelligent decision-making for 3-dimensional dynamic obstacle avoidance of UAV based on deep reinforcement learning. In Proceedings of the 11th International Conference on Wireless Communications and Signal Processing (WCSP), Xi’an, China, 23–25 October 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–6. [Google Scholar]