Estimation of Amorphophallus Konjac Above-Ground Biomass by Integrating Spectral and Texture Information from Unmanned Aerial Vehicle-Based RGB Images

Abstract

1. Introduction

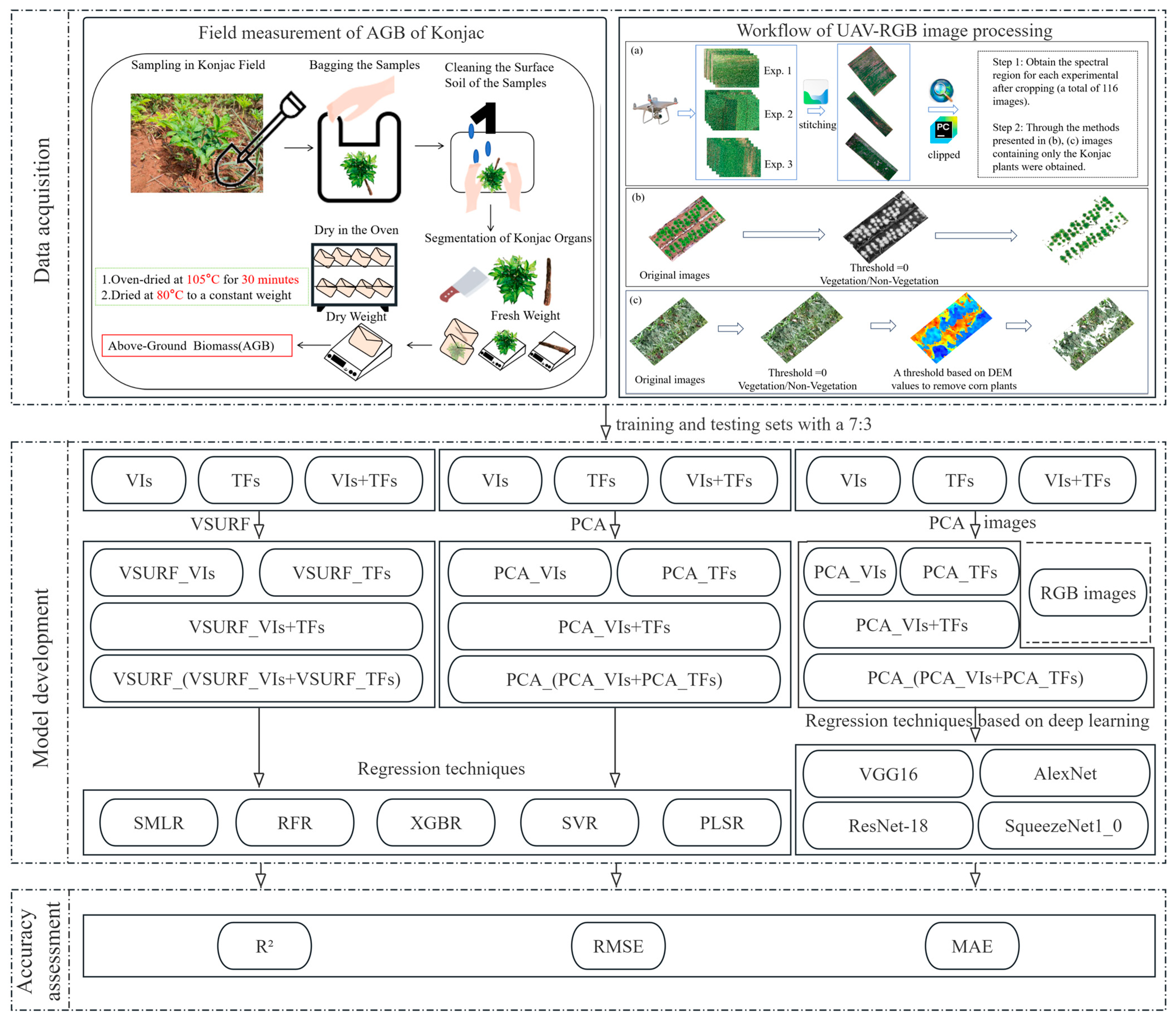

2. Materials and Methods

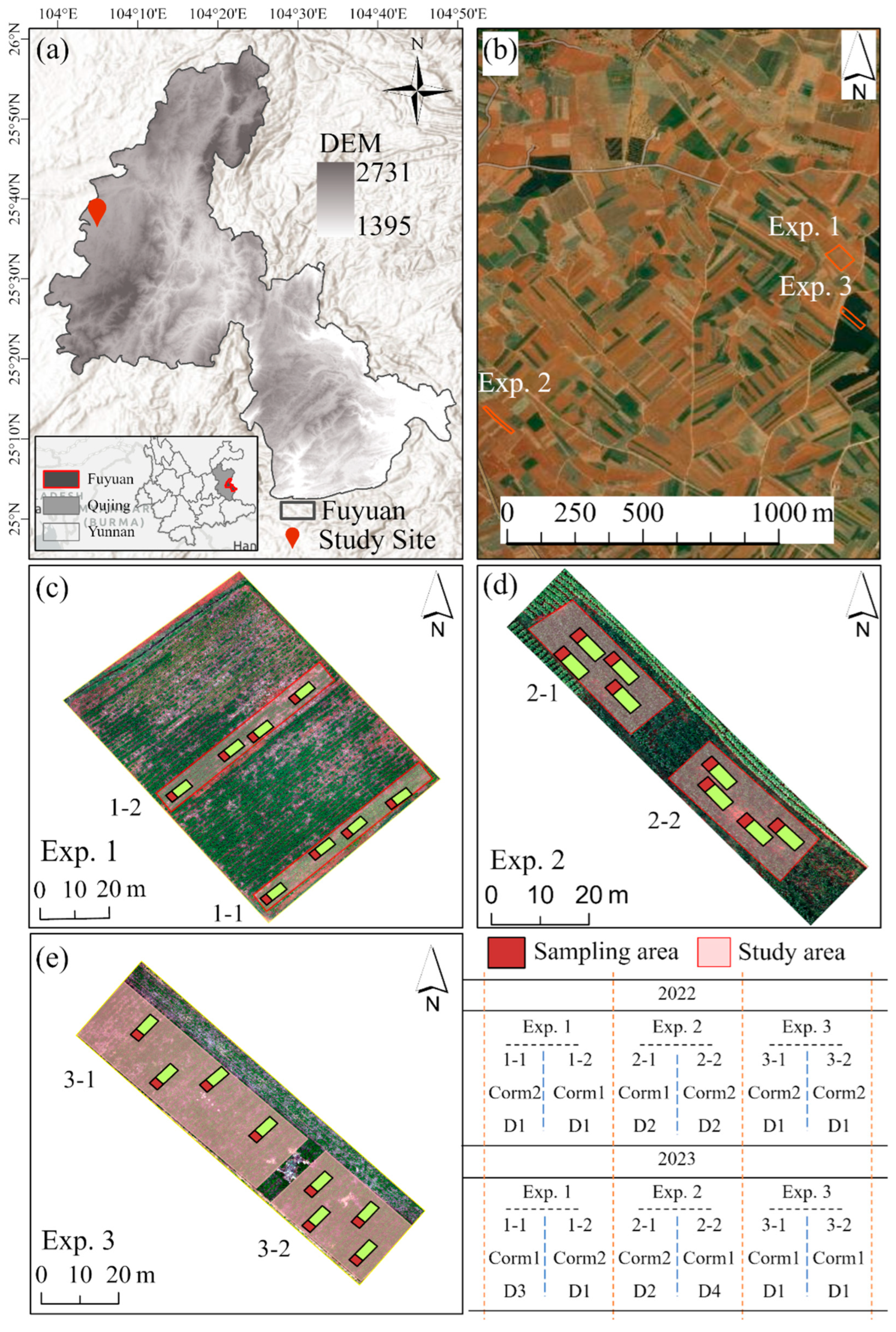

2.1. Experimental Design

2.2. Data Acquisition

2.2.1. Field Measurement of Konjac AGB

2.2.2. Image Collection

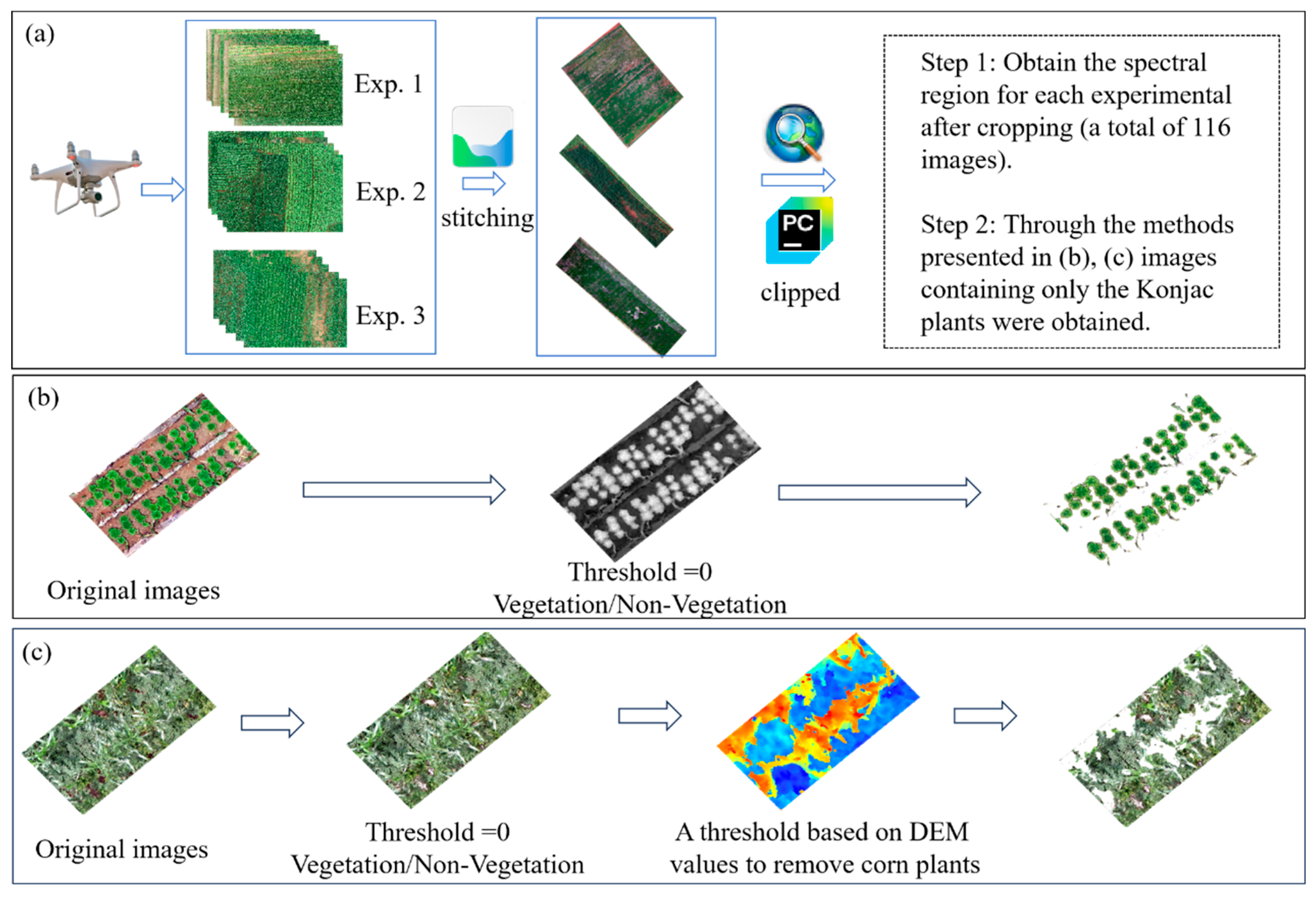

2.2.3. Image Processing

2.3. Feature Extraction

2.3.1. Spectral Vegetation Index Calculation

| VIs | Name | Formula | Reference |
|---|---|---|---|
| CIVE | Color Index of Vegetation | 0.441r − 0.811g + 0.385b + 18.78745 | [32] |
| EXB | Excess Blue Vegetation Index | 1.4b − g | [33] |
| EXG | Excess Green Vegetation Index | 2g − r − b | [33] |
| EXGR | Excess Green minus Excess Red Vegetation Index | 3g − 2.4r − b | [33] |
| EXR | Excess Red Vegetation Index | 1.4r − g | [34] |
| GLI | Green Leaf Index | (2g − r − b)/(2g + r + b) | [35] |
| GRRI | Green Red Ratio Index | r/g | [36] |
| MGRVI | Modified Green Red Vegetation Index | (g² − r²)/(g² + r²) | [37] |
| NDI | Normalized Difference Vegetation Index | (r − g)/(r + g + 0.01) | [38] |
| NGBDI | Normalized Green Blue Difference Index | (g − b)/(g + b) | [37] |
| NGRDI | Normalized Green Red Difference Index | (g − r)/(g + r) | [37] |
| RGBVI | Red Green Blue Vegetation Index | (g² − b·r)/(g² + b·r) | [37] |
| VARI | Visible Atmospherically Resistant Index | (g − r)/(g + r − b) | [39] |
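The formulas in the table can be evaluated directly once plot-level colour values are available. The sketch below assumes, because this section does not say so, that r, g and b are normalized chromatic coordinates (r = R/(R + G + B), and likewise for g and b) computed from plot-mean digital numbers. The helper names `normalized_rgb` and `vegetation_indices` are hypothetical.

```python
from __future__ import annotations


def normalized_rgb(R: float, G: float, B: float) -> tuple[float, float, float]:
    """Convert raw band values to chromatic coordinates (assumed definition)."""
    total = R + G + B
    if total == 0:
        raise ValueError("R + G + B must be non-zero")
    return R / total, G / total, B / total


def vegetation_indices(r: float, g: float, b: float) -> dict[str, float]:
    """Evaluate the 13 RGB indices exactly as written in the table."""
    return {
        "CIVE": 0.441 * r - 0.811 * g + 0.385 * b + 18.78745,
        "EXB": 1.4 * b - g,
        "EXG": 2 * g - r - b,
        "EXGR": 3 * g - 2.4 * r - b,  # equals EXG - EXR
        "EXR": 1.4 * r - g,
        "GLI": (2 * g - r - b) / (2 * g + r + b),
        "GRRI": r / g,
        "MGRVI": (g**2 - r**2) / (g**2 + r**2),
        "NDI": (r - g) / (r + g + 0.01),
        "NGBDI": (g - b) / (g + b),
        "NGRDI": (g - r) / (g + r),
        "RGBVI": (g**2 - b * r) / (g**2 + b * r),
        "VARI": (g - r) / (g + r - b),  # undefined when g + r == b
    }


r, g, b = normalized_rgb(90, 140, 70)
vi = vegetation_indices(r, g, b)
```

Ratio-type indices such as VARI and GRRI are undefined when their denominators are zero, so plots or pixels producing such values would need to be masked before modelling.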

2.3.2. Texture Feature Extraction
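The texture variable names used later (for example r.Cor, b.Con, g.Mea, g.Var and g.Sec) correspond to grey-level co-occurrence matrix (GLCM) statistics computed per colour band: correlation, contrast, mean, variance and second moment. The plain-Python sketch below builds a symmetric, normalized GLCM for one pixel offset and derives eight commonly used GLCM measures. The quantization to 8 grey levels, the single horizontal offset (dx = 1, dy = 0), the natural-log entropy and all function names are illustrative assumptions, not the parameters used in this study.

```python
from __future__ import annotations

import math


def quantize(band: list[list[int]], levels: int = 8, max_value: int = 255) -> list[list[int]]:
    """Map digital numbers in 0..max_value onto grey levels 0..levels-1."""
    return [[min(v * levels // (max_value + 1), levels - 1) for v in row] for row in band]


def glcm(img: list[list[int]], levels: int, dx: int = 1, dy: int = 0) -> list[list[float]]:
    """Symmetric, normalized grey-level co-occurrence matrix for one offset (dx, dy)."""
    counts = [[0.0] * levels for _ in range(levels)]
    rows, cols = len(img), len(img[0])
    for y in range(rows):
        for x in range(cols):
            y2, x2 = y + dy, x + dx
            if 0 <= y2 < rows and 0 <= x2 < cols:
                i, j = img[y][x], img[y2][x2]
                counts[i][j] += 1
                counts[j][i] += 1  # also count the reverse pair -> symmetric GLCM
    total = sum(map(sum, counts))
    if total == 0:
        raise ValueError("image too small for this offset")
    return [[c / total for c in row] for row in counts]


def glcm_features(p: list[list[float]]) -> dict[str, float]:
    """Eight GLCM statistics; keys mirror the Mea/Var/Con/Cor/Sec-style suffixes."""
    n = len(p)
    cells = [(i, j, p[i][j]) for i in range(n) for j in range(n) if p[i][j] > 0]
    mean = sum(i * v for i, _, v in cells)  # row mean == column mean (symmetric)
    var = sum(v * (i - mean) ** 2 for i, _, v in cells)
    cov = sum(v * (i - mean) * (j - mean) for i, j, v in cells)
    return {
        "Mea": mean,
        "Var": var,
        "Hom": sum(v / (1 + (i - j) ** 2) for i, j, v in cells),
        "Con": sum(v * (i - j) ** 2 for i, j, v in cells),
        "Dis": sum(v * abs(i - j) for i, j, v in cells),
        "Ent": -sum(v * math.log(v) for _, _, v in cells),
        "Sec": sum(v * v for _, _, v in cells),  # angular second moment
        "Cor": cov / var if var > 0 else 0.0,  # cov / (sigma_i * sigma_j)
    }
```

For a plot, one would quantize each band and call, for example, `glcm_features(glcm(quantize(red_band), levels=8))`, naming the outputs r.Mea, r.Con and so on (`red_band` is a hypothetical 2-D list of red digital numbers). Averaging over several offsets (0°, 45°, 90°, 135°) is a common way to reduce directional sensitivity.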

2.3.3. Feature Selection and Dimensionality Reduction
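The variable sets in the later tables are labelled VSURF, an R package that performs variable selection using random forests. VSURF itself is not reproduced here. As a simpler and clearly different stand-in, the sketch below ranks candidates by |Pearson r| with AGB and greedily drops any candidate that is highly correlated with one already kept. This shows how redundancy among VIs and TFs can be reduced before regression. The 0.9 threshold and the function names are hypothetical.

```python
from __future__ import annotations

import math


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient; returns 0.0 for a constant input."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / math.sqrt(sxx * syy)


def filter_features(features: dict[str, list[float]], agb: list[float],
                    max_inter_corr: float = 0.9) -> list[str]:
    """Greedy correlation filter (a simple stand-in, NOT VSURF).

    1. Rank candidates by |r| with AGB (strongest first).
    2. Keep a candidate only if its |r| with every already-kept
       feature is below `max_inter_corr`.
    """
    ranked = sorted(features, key=lambda k: abs(pearson(features[k], agb)), reverse=True)
    kept: list[str] = []
    for name in ranked:
        if all(abs(pearson(features[name], features[k])) < max_inter_corr for k in kept):
            kept.append(name)
    return kept
```

Unlike VSURF, this filter ignores non-linear and interaction effects, so it is best read as an illustration of redundancy removal rather than a replacement for the method used.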

2.4. Regression Techniques

2.4.1. Stepwise Multiple Linear Regression
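Stepwise multiple linear regression adds predictors (and, in bidirectional variants, also removes them) according to an information criterion. The sketch below is a forward-only simplification. It uses the Akaike information criterion, AIC = n ln(RSS/n) + 2k, where k counts the intercept, and solves ordinary least squares in plain Python. This section does not specify how the stepwise procedure was configured, so the criterion, the stopping rule and all function names are assumptions.

```python
from __future__ import annotations

import math


def solve(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[piv][col]) < 1e-12:
            raise ValueError("singular system (collinear predictors)")
        m[col], m[piv] = m[piv], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [u - f * w for u, w in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def fit_rss(cols: list[list[float]], y: list[float]) -> float:
    """OLS fit of y on an intercept plus `cols`; returns the residual sum of squares."""
    X = [[1.0] + [c[i] for c in cols] for i in range(len(y))]
    k = len(X[0])
    xtx = [[sum(row[p] * row[q] for row in X) for q in range(k)] for p in range(k)]
    xty = [sum(row[p] * yi for row, yi in zip(X, y)) for p in range(k)]
    beta = solve(xtx, xty)  # normal equations (X'X) beta = X'y
    return sum((yi - sum(bj * xj for bj, xj in zip(beta, row))) ** 2 for row, yi in zip(X, y))


def aic(rss: float, n: int, k: int) -> float:
    return n * math.log(max(rss, 1e-12) / n) + 2 * k


def forward_stepwise(features: dict[str, list[float]], y: list[float]) -> list[str]:
    """Add the predictor that lowers AIC most; stop when no candidate improves it."""
    n = len(y)
    selected: list[str] = []
    best_aic = aic(fit_rss([], y), n, 1)  # intercept-only model
    while True:
        scores: dict[str, float] = {}
        for f in (f for f in features if f not in selected):
            try:
                rss = fit_rss([features[s] for s in selected + [f]], y)
            except ValueError:  # collinear with the current model: skip
                continue
            scores[f] = aic(rss, n, len(selected) + 2)
        if not scores:
            break
        best = min(scores, key=lambda f: scores[f])
        if scores[best] >= best_aic:
            break
        selected.append(best)
        best_aic = scores[best]
    return selected
```

Solving the normal equations directly is fine for a handful of predictors. For larger or ill-conditioned designs, a QR-based least-squares solver is the more robust choice.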

2.4.2. ML Regression Techniques

2.4.3. DL-Based Regression Techniques

2.5. Accuracy Assessment
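The results tables report R², RMSE and MAE, with RMSE and MAE in t/hm². The sketch below computes them. R² is taken here as the coefficient of determination, 1 − SS_res/SS_tot, on the test set. Some studies instead report the squared Pearson correlation between observed and predicted values, so the definition used by the authors is an assumption.

```python
from __future__ import annotations

import math


def r2_score(obs: list[float], pred: list[float]) -> float:
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_obs = sum(obs) / len(obs)
    ss_res = sum((o - p) ** 2 for o, p in zip(obs, pred))
    ss_tot = sum((o - mean_obs) ** 2 for o in obs)
    return 1 - ss_res / ss_tot


def rmse(obs: list[float], pred: list[float]) -> float:
    """Root mean square error, in the units of AGB (e.g. t/hm^2)."""
    return math.sqrt(sum((o - p) ** 2 for o, p in zip(obs, pred)) / len(obs))


def mae(obs: list[float], pred: list[float]) -> float:
    """Mean absolute error, in the units of AGB."""
    return sum(abs(o - p) for o, p in zip(obs, pred)) / len(obs)
```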

3. Results

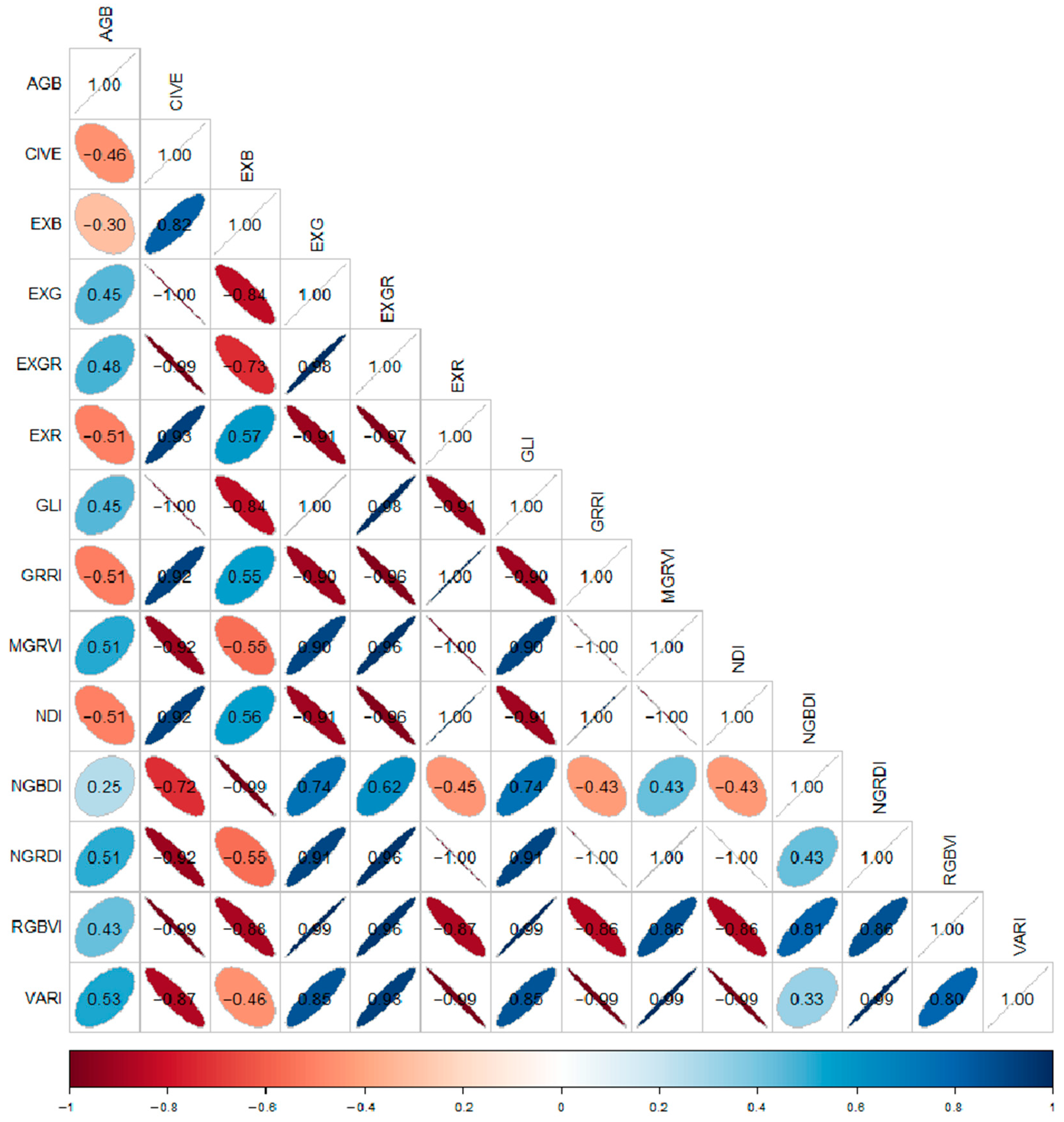

3.1. Correlation Analysis Between AGB and UAV-Derived Variables

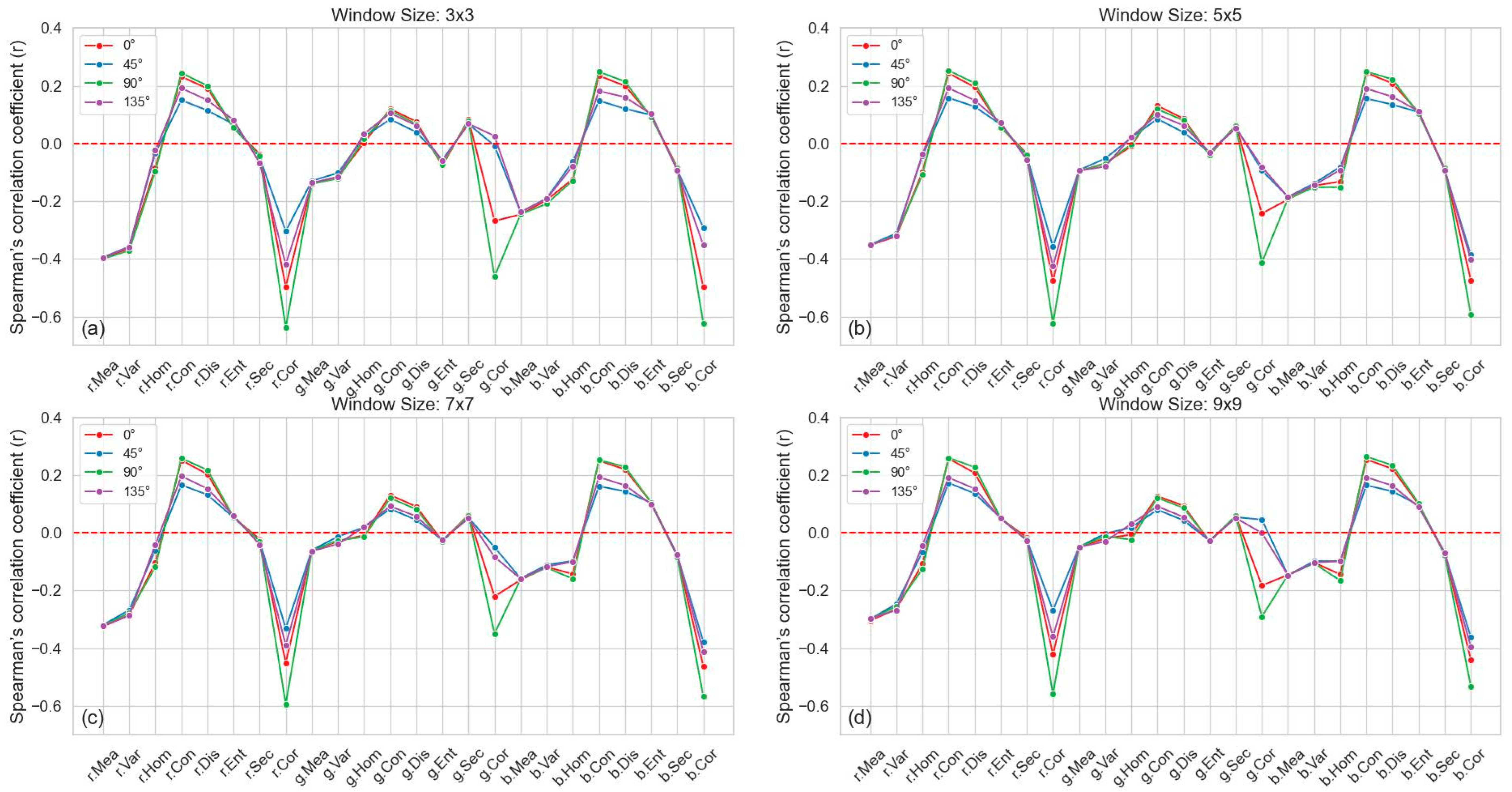

3.1.1. Correlation Analysis of AGB with VIs and TFs

3.1.2. Correlation Analysis Between AGB and Feature-Optimized Variables

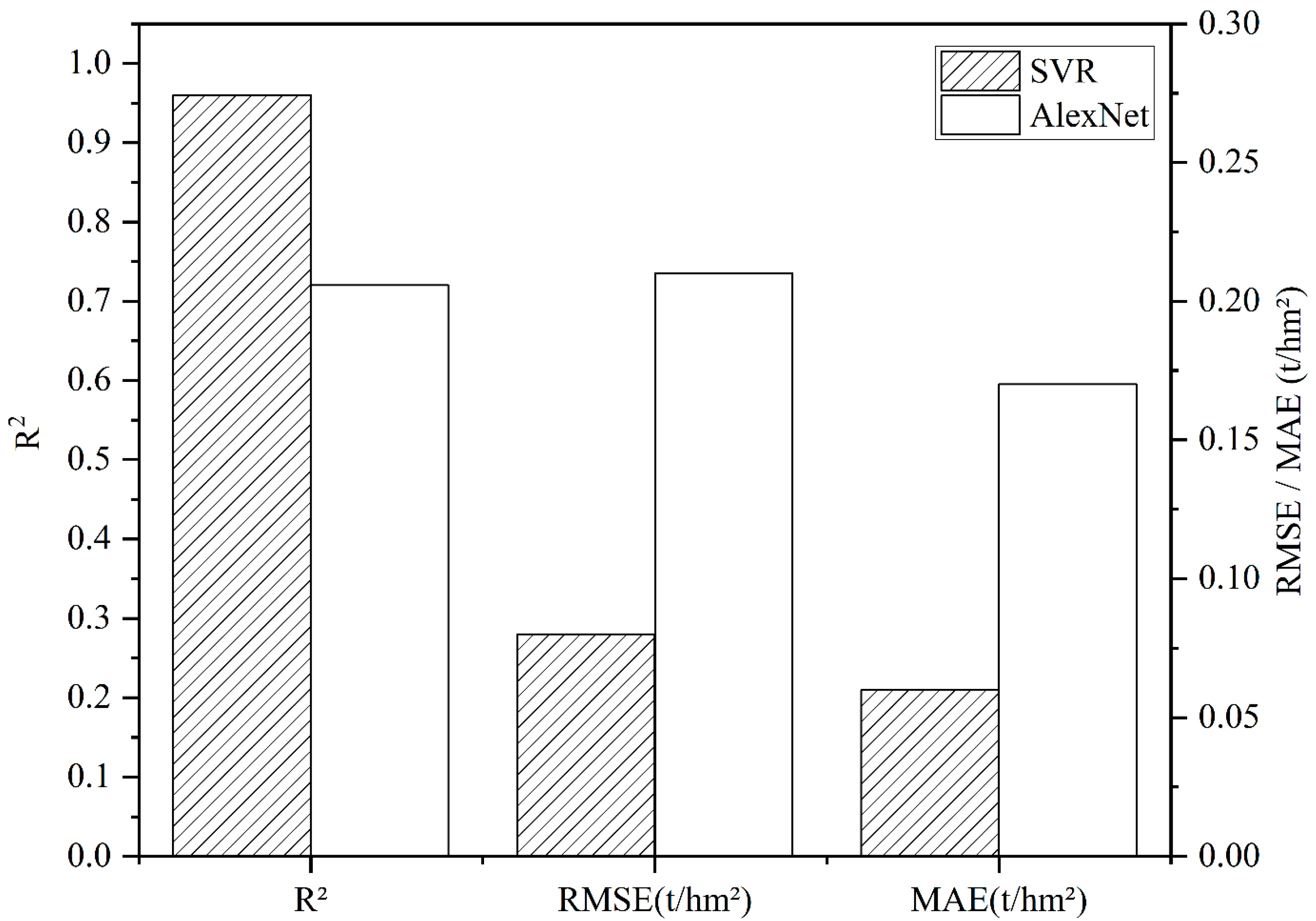

3.2. The Performance of SMLR and ML Regression Techniques Using Selected Variables

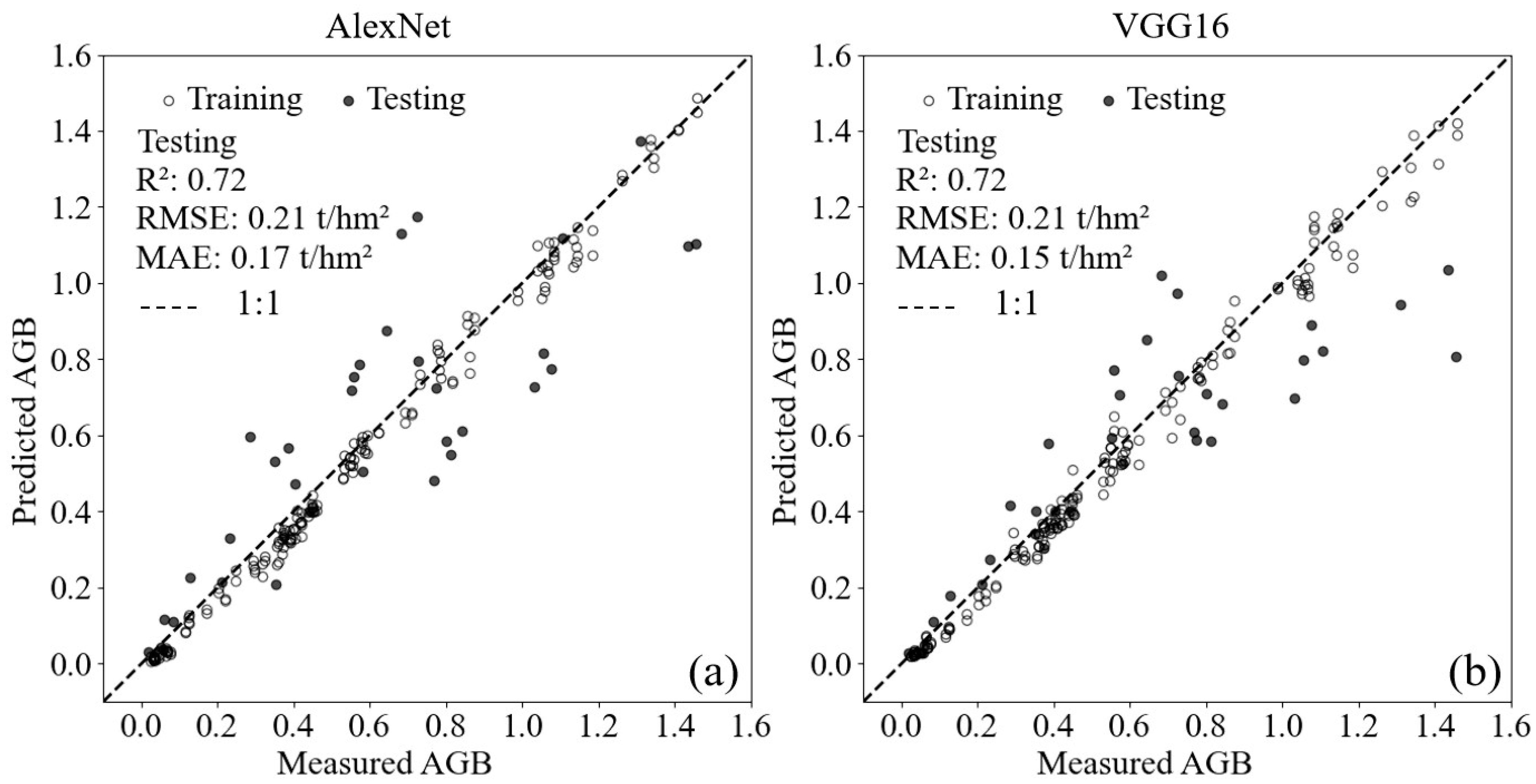

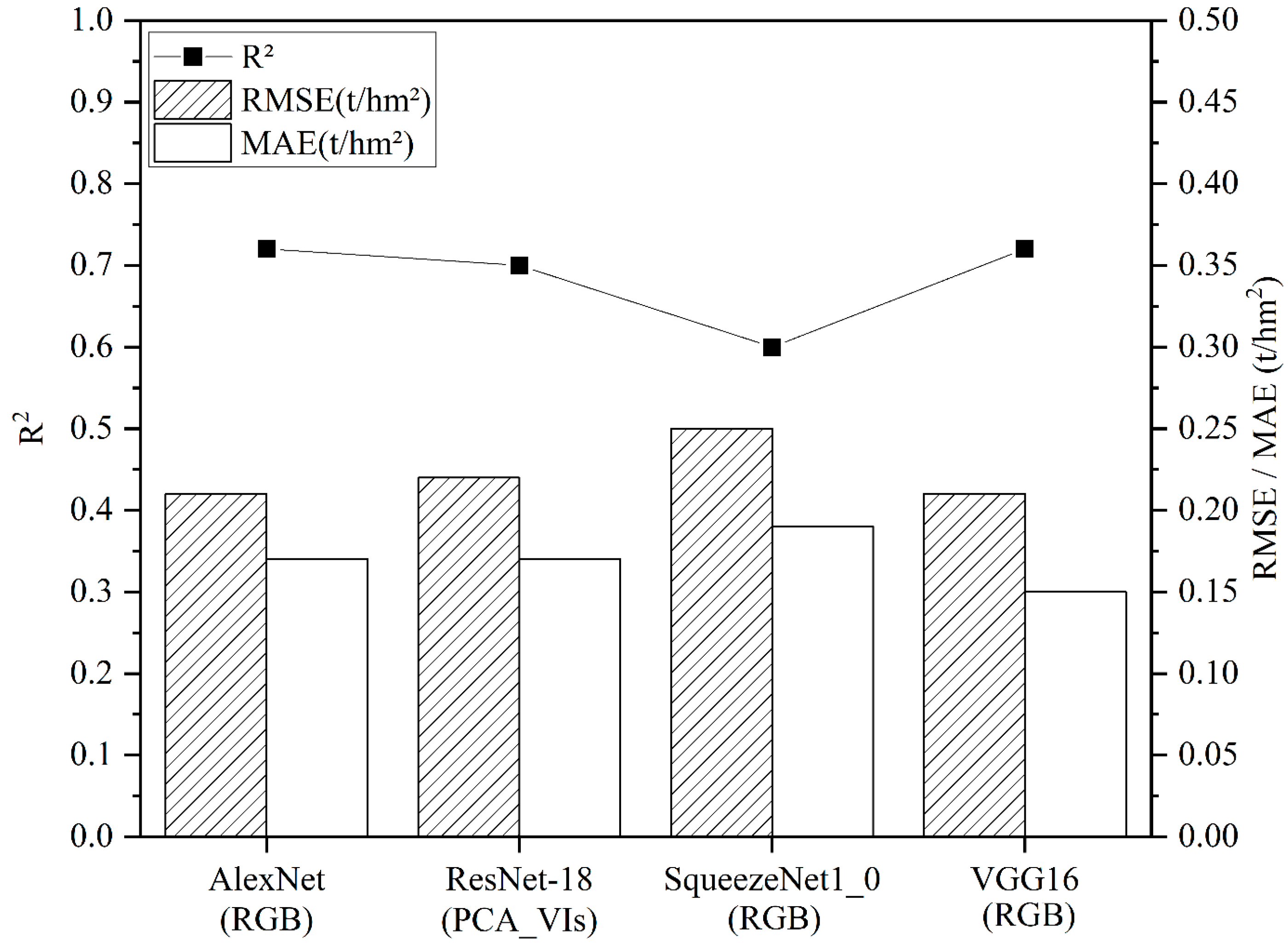

3.3. The Effectiveness of DL-Based Network Models Utilizing Selected Variables

4. Discussion

4.1. The Influence of GLCM Parameters on the Correlation with Konjac AGB

4.2. Advantages of ML Techniques

4.3. Advantages of DL Methods Combined with RGB Imagery for Konjac AGB Estimation Compared to PCA-Based Images

4.4. Potential Applications and Limitations

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Zhuo, W.; Huang, J.; Li, L.; Zhang, X.; Ma, H.; Gao, X.; Huang, H.; Xu, B.; Xiao, X. Assimilating soil moisture retrieved from Sentinel-1 and Sentinel-2 data into WOFOST model to improve winter wheat yield estimation. Remote Sens. 2019, 11, 1618.

2. Liu, Y.; Feng, H.; Yue, J.; Jin, X.; Li, Z.; Yang, G. Estimation of potato above-ground biomass based on unmanned aerial vehicle red-green-blue images with different texture features and crop height. Front. Plant Sci. 2022, 13, 938216.

3. Kumar, A.; Tewari, S.; Singh, H.; Kumar, P.; Kumar, N.; Bisht, S.; Kushwaha, S.; Tamta, N.; Kaushal, R. Biomass accumulation and carbon stocks in different agroforestry system prevalent in Himalayan foothills, India. Curr. Sci. 2021, 120, 1083–1088.

4. Fu, Y.; Tan, H.; Kou, W.; Xu, W.; Wang, H.; Lu, N. Estimation of Rubber Plantation Biomass Based on Variable Optimization from Sentinel-2 Remote Sensing Imagery. Forests 2024, 15, 900.

5. Wang, L.; Jia, M.; Yin, D.; Tian, J. A review of remote sensing for mangrove forests: 1956–2018. Remote Sens. Environ. 2019, 231, 111223.

6. Duan, B.; Fang, S.; Gong, Y.; Peng, Y.; Wu, X.; Zhu, R. Remote estimation of grain yield based on UAV data in different rice cultivars under contrasting climatic zone. Field Crops Res. 2021, 267, 108148.

7. Liu, Y.; Feng, H.; Yue, J.; Jin, X.; Fan, Y.; Chen, R.; Bian, M.; Ma, Y.; Li, J.; Xu, B. Improving potato AGB estimation to mitigate phenological stage impacts through depth features from hyperspectral data. Comput. Electron. Agric. 2024, 219, 108808.

8. Guo, Y.; He, J.; Zhang, H.; Shi, Z.; Wei, P.; Jing, Y.; Yang, X.; Zhang, Y.; Wang, L.; Zheng, G. Improvement of Winter Wheat Aboveground Biomass Estimation Using Digital Surface Model Information Extracted from Unmanned-Aerial-Vehicle-Based Multispectral Images. Agriculture 2024, 14, 378.

9. Liu, T.; Yang, T.; Zhu, S.; Mou, N.; Zhang, W.; Wu, W.; Zhao, Y.; Yao, Z.; Sun, J.; Chen, C. Estimation of wheat biomass based on phenological identification and spectral response. Comput. Electron. Agric. 2024, 222, 109076.

10. Yang, H.; Li, F.; Wang, W.; Yu, K. Estimating Above-Ground Biomass of Potato Using Random Forest and Optimized Hyperspectral Indices. Remote Sens. 2021, 13, 2339.

11. Wang, F.; Yang, M.; Ma, L.; Zhang, T.; Qin, W.; Li, W.; Zhang, Y.; Sun, Z.; Wang, Z.; Li, F. Estimation of above-ground biomass of winter wheat based on consumer-grade multi-spectral UAV. Remote Sens. 2022, 14, 1251.

12. Song, E.; Shao, G.; Zhu, X.; Zhang, W.; Dai, Y.; Lu, J. Estimation of Plant Height and Biomass of Rice Using Unmanned Aerial Vehicle. Agronomy 2024, 14, 145.

13. Lu, N.; Zhou, J.; Han, Z.; Li, D.; Cao, Q.; Yao, X.; Tian, Y.; Zhu, Y.; Cao, W.; Cheng, T. Improved estimation of aboveground biomass in wheat from RGB imagery and point cloud data acquired with a low-cost unmanned aerial vehicle system. Plant Methods 2019, 15, 17.

14. Chen, M.; Yin, C.; Lin, T.; Liu, H.; Wang, Z.; Jiang, P.; Ali, S.; Tang, Q.; Jin, X. Integration of Unmanned Aerial Vehicle Spectral and Textural Features for Accurate Above-Ground Biomass Estimation in Cotton. Agronomy 2024, 14, 1313.

15. Shi, L.; Westerhuis, J.A.; Rosén, J.; Landberg, R.; Brunius, C. Variable selection and validation in multivariate modelling. Bioinformatics 2019, 35, 972–980.

16. Maćkiewicz, A.; Ratajczak, W. Principal components analysis (PCA). Comput. Geosci. 1993, 19, 303–342.

17. Genuer, R.; Poggi, J.-M.; Tuleau-Malot, C. VSURF: An R package for variable selection using random forests. R J. 2015, 7, 19–33.

18. Wengert, M.; Piepho, H.-P.; Astor, T.; Graß, R.; Wijesingha, J.; Wachendorf, M. Assessing spatial variability of barley whole crop biomass yield and leaf area index in silvoarable agroforestry systems using UAV-borne remote sensing. Remote Sens. 2021, 13, 2751.

19. Zhai, W.; Li, C.; Cheng, Q.; Mao, B.; Li, Z.; Li, Y.; Ding, F.; Qin, S.; Fei, S.; Chen, Z. Enhancing wheat above-ground biomass estimation using UAV RGB images and machine learning: Multi-feature combinations, flight height, and algorithm implications. Remote Sens. 2023, 15, 3653.

20. Liu, Y.; Feng, H.; Yue, J.; Li, Z.; Yang, G.; Song, X.; Yang, X.; Zhao, Y. Remote-sensing estimation of potato above-ground biomass based on spectral and spatial features extracted from high-definition digital camera images. Comput. Electron. Agric. 2022, 198, 107089.

21. Liu, Y.; Feng, H.; Yue, J.; Fan, Y.; Bian, M.; Ma, Y.; Jin, X.; Song, X.; Yang, G. Estimating potato above-ground biomass by using integrated unmanned aerial system-based optical, structural, and textural canopy measurements. Comput. Electron. Agric. 2023, 213, 108229.

22. Liu, Y.; Yang, F.; Yue, J.; Zhu, W.; Fan, Y.; Fan, J.; Ma, Y.; Bian, M.; Chen, R.; Yang, G. Crop canopy volume weighted by color parameters from UAV-based RGB imagery to estimate above-ground biomass of potatoes. Comput. Electron. Agric. 2024, 227, 109678.

23. Huy, B.; Truong, N.Q.; Khiem, N.Q.; Poudel, K.P.; Temesgen, H. Deep learning models for improved reliability of tree aboveground biomass prediction in the tropical evergreen broadleaf forests. For. Ecol. Manag. 2022, 508, 120031.

24. Sapkota, B.B.; Popescu, S.; Rajan, N.; Leon, R.G.; Reberg-Horton, C.; Mirsky, S.; Bagavathiannan, M.V. Use of synthetic images for training a deep learning model for weed detection and biomass estimation in cotton. Sci. Rep. 2022, 12, 19580.

25. Vahidi, M.; Shafian, S.; Thomas, S.; Maguire, R. Pasture Biomass Estimation Using Ultra-High-Resolution RGB UAVs Images and Deep Learning. Remote Sens. 2023, 15, 5714.

26. Zhu, W.; Rezaei, E.E.; Nouri, H.; Sun, Z.; Li, J.; Yu, D.; Siebert, S. UAV flight height impacts on wheat biomass estimation via machine and deep learning. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 7471–7485.

27. Yu, D.; Zha, Y.; Sun, Z.; Li, J.; Jin, X.; Zhu, W.; Bian, J.; Ma, L.; Zeng, Y.; Su, Z. Deep convolutional neural networks for estimating maize above-ground biomass using multi-source UAV images: A comparison with traditional machine learning algorithms. Precis. Agric. 2023, 24, 92–113.

28. Patel, M.K.; Padarian, J.; Western, A.W.; Fitzgerald, G.J.; McBratney, A.B.; Perry, E.M.; Suter, H.; Ryu, D. Retrieving canopy nitrogen concentration and aboveground biomass with deep learning for ryegrass and barley: Comparing models and determining waveband contribution. Field Crops Res. 2023, 294, 108859.

29. Yang, Z.; Hu, K.; Kou, W.; Xu, W.; Wang, H.; Lu, N. Enhanced recognition and counting of high-coverage Amorphophallus konjac by integrating UAV RGB imagery and deep learning. Sci. Rep. 2025, 15, 6501.

30. Otsu, N. A threshold selection method from gray-level histograms. Automatica 1975, 11, 23–27.

31. Liu, Y.; Feng, H.; Yue, J.; Fan, Y.; Jin, X.; Song, X.; Yang, H.; Yang, G. Estimation of Potato Above-Ground Biomass Based on Vegetation Indices and Green-Edge Parameters Obtained from UAVs. Remote Sens. 2022, 14, 5323.

32. Cohen, J. Color Science: Concepts and Methods, Quantitative Data and Formulae. Am. J. Psychol. 1968, 81, 128–129.

33. Guijarro, M.; Pajares, G.; Riomoros, I.; Herrera, P.; Burgos-Artizzu, X.; Ribeiro, A. Automatic segmentation of relevant textures in agricultural images. Comput. Electron. Agric. 2011, 75, 75–83.

34. Meyer, G.E.; Neto, J.C. Verification of color vegetation indices for automated crop imaging applications. Comput. Electron. Agric. 2008, 63, 282–293.

35. Louhaichi, M.; Borman, M.M.; Johnson, D.E. Spatially Located Platform and Aerial Photography for Documentation of Grazing Impacts on Wheat. Geocarto Int. 2001, 16, 65–70.

36. Kawashima, S.; Nakatani, M. An algorithm for estimating chlorophyll content in leaves using a video camera. Ann. Bot. 1998, 81, 49–54.

37. Bendig, J.; Yu, K.; Aasen, H.; Bolten, A.; Bennertz, S.; Broscheit, J.; Gnyp, M.L.; Bareth, G. Combining UAV-based plant height from crop surface models, visible, and near infrared vegetation indices for biomass monitoring in barley. Int. J. Appl. Earth Obs. Geoinf. 2015, 39, 79–87.

38. Rouse Jr, J.W.; Haas, R.H.; Deering, D.; Schell, J.; Harlan, J.C. Monitoring the Vernal Advancement and Retrogradation (Green Wave Effect) of Natural Vegetation; ScienceOpen: Berlin, Germany, 1974.

39. Gitelson, A.A.; Kaufman, Y.J.; Stark, R.; Rundquist, D. Novel algorithms for remote estimation of vegetation fraction. Remote Sens. Environ. 2002, 80, 76–87.

40. Xu, L.; Zhou, L.; Meng, R.; Zhao, F.; Lv, Z.; Xu, B.; Zeng, L.; Yu, X.; Peng, S. An improved approach to estimate ratoon rice aboveground biomass by integrating UAV-based spectral, textural and structural features. Precis. Agric. 2022, 23, 1276–1301.

41. The R Project for Statistical Computing. Available online: https://www.r-project.org/ (accessed on 28 February 2025).

42. Silhavy, R.; Silhavy, P.; Prokopova, Z. Analysis and selection of a regression model for the use case points method using a stepwise approach. J. Syst. Softw. 2017, 125, 1–14.

43. Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

44. Chen, T.; Guestrin, C. XGBoost: A scalable tree boosting system. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 785–794.

45. Zhang, Y.; Xia, C.; Zhang, X.; Cheng, X.; Feng, G.; Wang, Y.; Gao, Q. Estimating the maize biomass by crop height and narrowband vegetation indices derived from UAV-based hyperspectral images. Ecol. Indic. 2021, 129, 107985.

46. Wold, S.; Ruhe, A.; Wold, H.; Dunn, I.W.J. The collinearity problem in linear regression. The partial least squares (PLS) approach to generalized inverses. SIAM J. Sci. Stat. Comput. 1984, 5, 735–743.

47. Yue, J.; Feng, H.; Yang, G.; Li, Z. A Comparison of Regression Techniques for Estimation of Above-Ground Winter Wheat Biomass Using Near-Surface Spectroscopy. Remote Sens. 2018, 10, 66.

48. Cortes, C.; Vapnik, V. Support-Vector Networks. Mach. Learn. 1995, 20, 273–297.

49. Liang, Y.; Kou, W.; Lai, H.; Wang, J.; Wang, Q.; Xu, W.; Wang, H.; Lu, N. Improved estimation of aboveground biomass in rubber plantations by fusing spectral and textural information from UAV-based RGB imagery. Ecol. Indic. 2022, 142, 109286.

50. Weerts, H.; Mueller, A.C.; Vanschoren, J. Importance of tuning hyperparameters of machine learning algorithms. arXiv 2020, arXiv:2007.07588.

51. Jia, Z.; Zhang, X.; Yang, H.; Lu, Y.; Liu, J.; Yu, X.; Feng, D.; Gao, K.; Xue, J.; Ming, B. Comparison and Optimal Method of Detecting the Number of Maize Seedlings Based on Deep Learning. Drones 2024, 8, 175.

52. Models and Pre-Trained Weights. Available online: https://pytorch.org/vision/stable/models.html (accessed on 28 February 2025).

53. Castro, W.; Marcato Junior, J.; Polidoro, C.; Osco, L.P.; Goncalves, W.; Rodrigues, L.; Santos, M.; Jank, L.; Barrios, S.; Valle, C.; et al. Deep Learning Applied to Phenotyping of Biomass in Forages with UAV-Based RGB Imagery. Sensors 2020, 20, 4802.

54. Arumai Shiney, S.S.; Geetha, R.; Seetharaman, R.; Shanmugam, M. Leveraging Deep Learning Models for Targeted Aboveground Biomass Estimation in Specific Regions of Interest. Sustainability 2024, 16, 4864.

55. Zheng, H.; Ma, J.; Zhou, M.; Li, D.; Yao, X.; Cao, W.; Zhu, Y.; Cheng, T. Enhancing the Nitrogen Signals of Rice Canopies across Critical Growth Stages through the Integration of Textural and Spectral Information from Unmanned Aerial Vehicle (UAV) Multispectral Imagery. Remote Sens. 2020, 12, 957.

56. Niu, Y.; Song, X.; Zhang, L.; Xu, L.; Wang, A.; Zhu, Q. Enhancing Model Accuracy of UAV-based Biomass Estimation by Evaluating Effects of Image Resolution and Texture Feature Extraction Strategy. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 18, 878–891.

57. Tan, H.; Kou, W.; Xu, W.; Wang, L.; Wang, H.; Lu, N. Improved Estimation of Aboveground Biomass in Rubber Plantations Using Deep Learning on UAV Multispectral Imagery. Drones 2025, 9, 32.

58. Kurek, J.; Niedbała, G.; Wojciechowski, T.; Świderski, B.; Antoniuk, I.; Piekutowska, M.; Kruk, M.; Bobran, K. Prediction of Potato (Solanum tuberosum L.) Yield Based on Machine Learning Methods. Agriculture 2023, 13, 2259.

59. Zheng, C.; Abd-Elrahman, A.; Whitaker, V.M.; Dalid, C. Deep learning for strawberry canopy delineation and biomass prediction from high-resolution images. Plant Phenomics 2022, 2022, 9850486.

60. Tamiminia, H.; Salehi, B.; Mahdianpari, M.; Beier, C.M.; Klimkowski, D.J.; Volk, T.A. Comparison of machine and deep learning methods to estimate shrub willow biomass from UAS imagery. Can. J. Remote Sens. 2021, 47, 209–227.

| Name | Description |
|---|---|
| Exp. | The experiment was conducted in three plots; each experimental plot is prefixed with “Exp.” |
| Exp. 1 | Exp. 1 denotes the first plot. |
| Exp. 2 | Exp. 2 denotes the second plot. |
| Exp. 3 | Exp. 3 denotes the third plot. |
| D | The planting density is categorized into four levels, represented by “D” followed by a number indicating the density level. |
| D1 | D1 represents a Konjac planting density of 7 plants/m². |
| D2 | D2 represents a Konjac planting density of 10 plants/m². |
| D3 | D3 represents a Konjac planting density of 9 plants/m². |
| D4 | D4 represents a Konjac planting density of 9 plants/m². |
| 1-1 | Each plot was further divided into two subplots; 1-1 represents the first subplot in Exp. 1. |
| 1-2 | 1-2 represents the second subplot in Exp. 1. |
| 2-1 | 2-1 represents the first subplot in Exp. 2. |
| 2-2 | 2-2 represents the second subplot in Exp. 2. |
| 3-1 | 3-1 represents the first subplot in Exp. 3. |
| 3-2 | 3-2 represents the second subplot in Exp. 3. |

Date/Growth Period

| Year | Seedling Stage (P1) | Tuber Initiation Stage (P2) | Tuber Enlargement Stage (P3) |
|---|---|---|---|
| 2022 | June 28 | August 4 | September 24 |
| 2023 | July 28 | August 17 | - |

| Regression Techniques | Parameters |
|---|---|
| RFR | ntree = 500, nodesize = 1 |
| XGBR | max_depth = 6, eta = 0.01 |
| PLSR | - |
| SVR | kernel = “radial” |
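The hyperparameter names in the table follow R conventions: ntree and nodesize as in the randomForest package, eta and max_depth as in XGBoost, and kernel = "radial" as in e1071. For readers reproducing the setup in Python, the mapping below translates them to scikit-learn and xgboost keyword arguments. It is a hedged translation, not the authors' configuration. `min_samples_leaf` is only the closest analogue of nodesize, and defaults the table leaves unstated are not carried over. For example, scikit-learn's `PLSRegression` uses `n_components=2` by default, which may differ from the authors' choice. The dictionary name is hypothetical.

```python
from __future__ import annotations

# Hypothetical translation of the R-style settings in the table into keyword
# arguments for Python estimators. Only the listed values are carried over.
PY_HYPERPARAMETERS: dict[str, dict[str, object]] = {
    # randomForest: ntree = 500, nodesize = 1 -> sklearn RandomForestRegressor
    "RFR": {"n_estimators": 500, "min_samples_leaf": 1},
    # XGBoost: max_depth = 6, eta = 0.01 -> xgboost.XGBRegressor (learning_rate aliases eta)
    "XGBR": {"max_depth": 6, "learning_rate": 0.01},
    # No settings listed for PLSR -> sklearn PLSRegression defaults
    "PLSR": {},
    # e1071 kernel = "radial" is the Gaussian RBF kernel -> sklearn SVR
    "SVR": {"kernel": "rbf"},
}
```

Usage would look like `RandomForestRegressor(**PY_HYPERPARAMETERS["RFR"])`. Keeping the settings in one mapping makes it easy to compare them against the table.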

| Dataset | Min | Mean | Max | Standard Deviation | Coefficient of Variation (%) |
|---|---|---|---|---|---|
| Training | 0.03 | 0.56 | 1.46 | 0.39 | 68.63 |
| Testing | 0.02 | 0.60 | 1.46 | 0.40 | 66.93 |
| All | 0.02 | 0.57 | 1.46 | 0.39 | 67.86 |
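The coefficient of variation in the table is the standard deviation divided by the mean, expressed as a percentage. A minimal sketch using Python's `statistics` module follows. It assumes the sample (n − 1) standard deviation, and the function name is hypothetical. Because the table was presumably computed from unrounded values, recomputing CV from the rounded SD and mean gives only approximate agreement: 0.39/0.57 × 100 ≈ 68.4% versus 67.86% for the full dataset.

```python
from __future__ import annotations

import statistics


def describe(values: list[float]) -> dict[str, float]:
    """Min, mean, max, sample SD and coefficient of variation (%)."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # sample standard deviation (n - 1)
    return {
        "min": min(values),
        "mean": mean,
        "max": max(values),
        "sd": sd,
        "cv_percent": sd / mean * 100,
    }
```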

| Variable Name | Selected Variables |
|---|---|
| VSURF_VIs | VARI, GRRI, RGBVI |
| VSURF_TFs | r.Cor, b.Cor, r.Con, r.Mea, b.Con, g.Sec, b.Sec |
| VSURF_(VIs+TFs) | VARI, r.Cor, GRRI, MGRVI, b.Cor, RGBVI, CIVE, r.Con, g.Var, g.Mea |
| VSURF_(VSURF_VIs+VSURF_TFs) | VARI, GRRI, r.Cor, b.Cor, RGBVI, r.Con |

| Method | Assessment Metrics | VSURF_VIs | VSURF_TFs | VSURF_(VIs+TFs) | VSURF_(VSURF_VIs+VSURF_TFs) |
|---|---|---|---|---|---|
| SMLR | R² | 0.22 | 0.41 | 0.60 | 0.44 |
| | RMSE (t/hm²) | 0.35 | 0.30 | 0.25 | 0.30 |
| | MAE (t/hm²) | 0.27 | 0.25 | 0.20 | 0.23 |
| RFR | R² | 0.50 | 0.46 | 0.64 | 0.62 |
| | RMSE (t/hm²) | 0.28 | 0.29 | 0.24 | 0.24 |
| | MAE (t/hm²) | 0.20 | 0.20 | 0.18 | 0.18 |
| XGBR | R² | 0.45 | 0.42 | 0.51 | 0.52 |
| | RMSE (t/hm²) | 0.29 | 0.30 | 0.27 | 0.27 |
| | MAE (t/hm²) | 0.22 | 0.22 | 0.22 | 0.21 |
| PLSR | R² | 0.24 | 0.40 | 0.60 | 0.44 |
| | RMSE (t/hm²) | 0.34 | 0.31 | 0.25 | 0.29 |
| | MAE (t/hm²) | 0.26 | 0.24 | 0.20 | 0.23 |
| SVR | R² | 0.42 | 0.31 | 0.57 | 0.53 |
| | RMSE (t/hm²) | 0.30 | 0.33 | 0.26 | 0.27 |
| | MAE (t/hm²) | 0.21 | 0.22 | 0.20 | 0.19 |

| DL Model | Input Images | R² | RMSE (t/hm²) | MAE (t/hm²) | Training Time |
|---|---|---|---|---|---|
| AlexNet | RGB | 0.72 | 0.21 | 0.17 | 2 min |
| ResNet-18 | PCA_VIs | 0.70 | 0.22 | 0.17 | 2 min |
| SqueezeNet1_0 | RGB | 0.60 | 0.25 | 0.19 | 4 min |
| VGG16 | RGB | 0.72 | 0.21 | 0.15 | 120 min |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yang, Z.; Qi, H.; Hu, K.; Kou, W.; Xu, W.; Wang, H.; Lu, N. Estimation of Amorphophallus Konjac Above-Ground Biomass by Integrating Spectral and Texture Information from Unmanned Aerial Vehicle-Based RGB Images. Drones 2025, 9, 220. https://doi.org/10.3390/drones9030220
