Artificial Intelligence in Predicting Mechanical Properties of Composite Materials

Abstract

1. Introduction

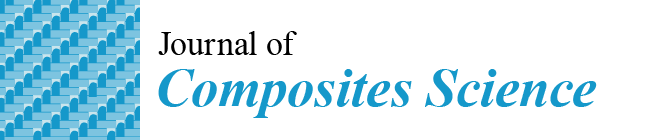

2. Overview of Artificial Intelligence in the Prediction of Material Properties

3. Traditional Machine Learning Methods for Predicting the Mechanical Properties of Composites

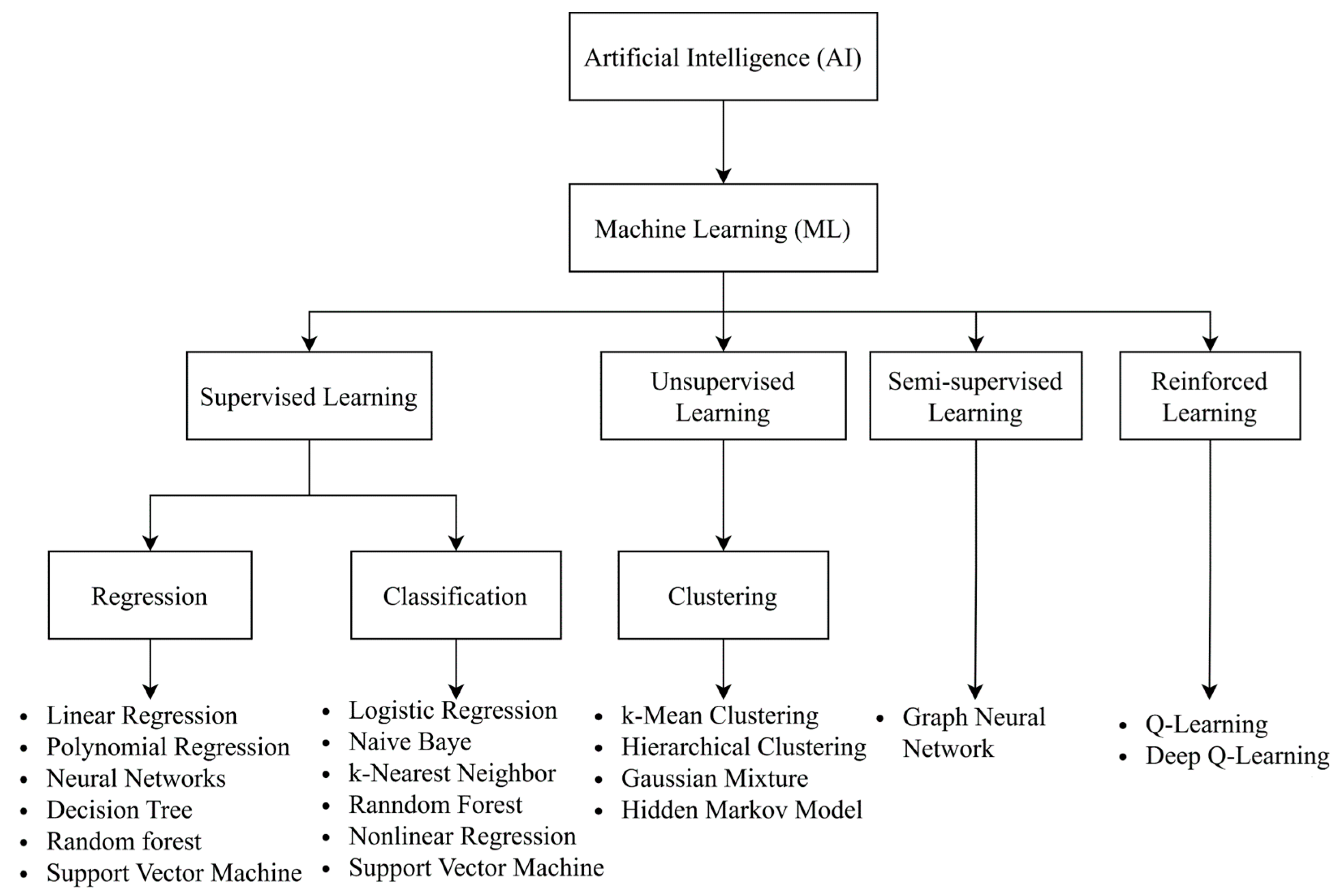

3.1. Support Vector Machine (SVM)

3.2. k-Nearest Neighbor (k-NN)

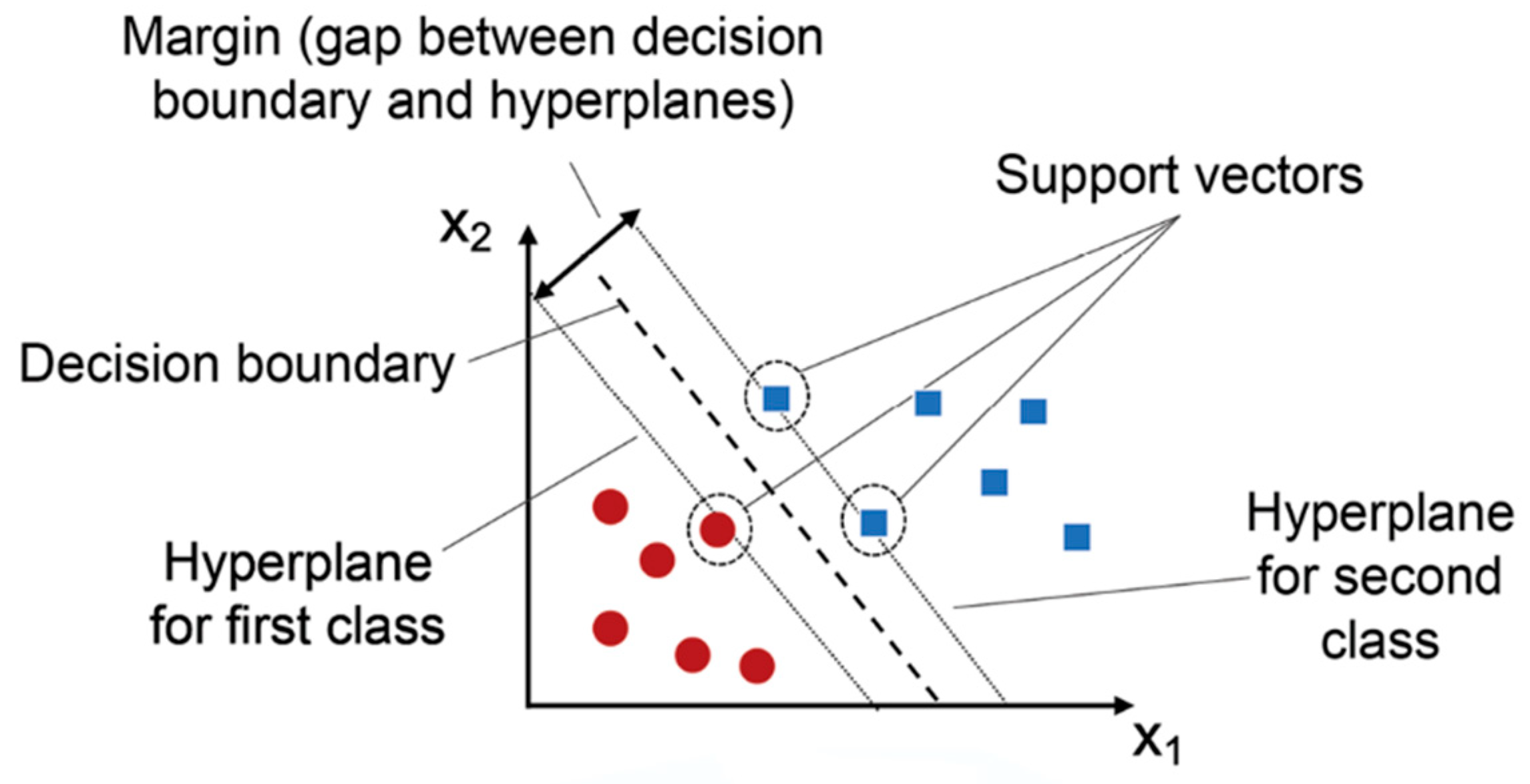

3.3. Decision Tree

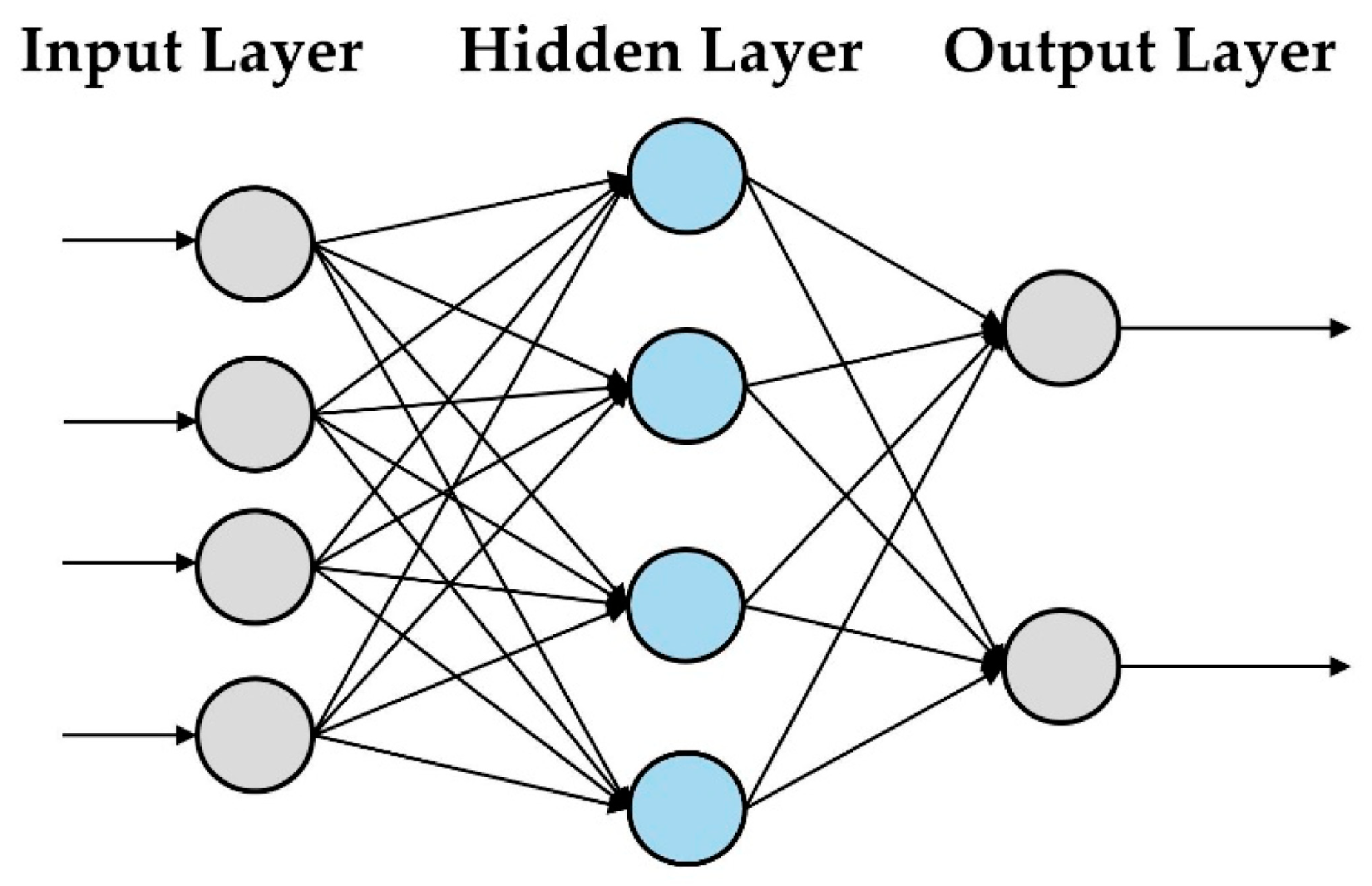

3.4. Artificial Neural Networks (ANNs)

3.5. Other Machine Learning Methods

4. Deep Learning Methods for Predicting Mechanical Properties of Composites

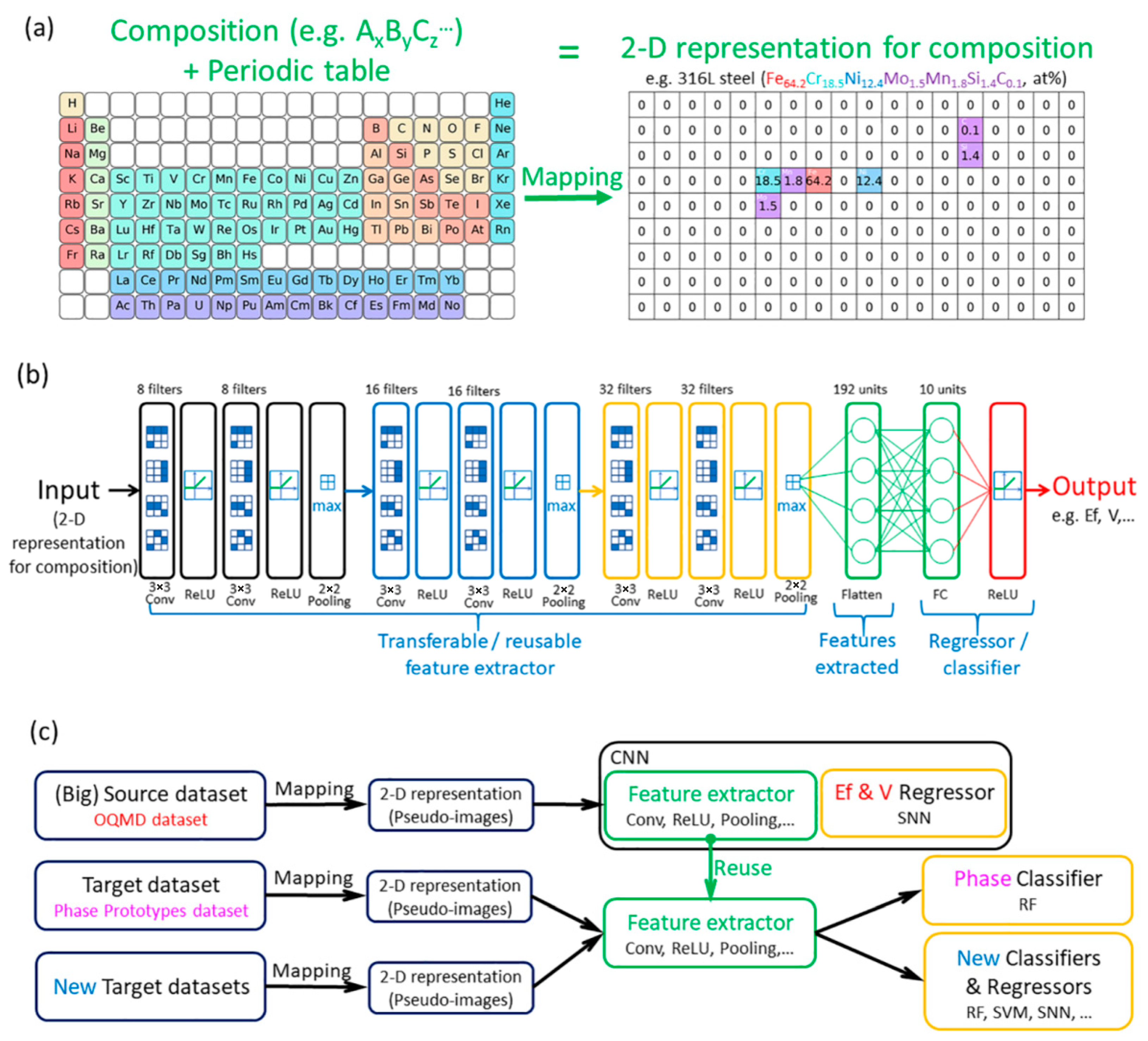

4.1. Convolutional Neural Networks (CNNs)

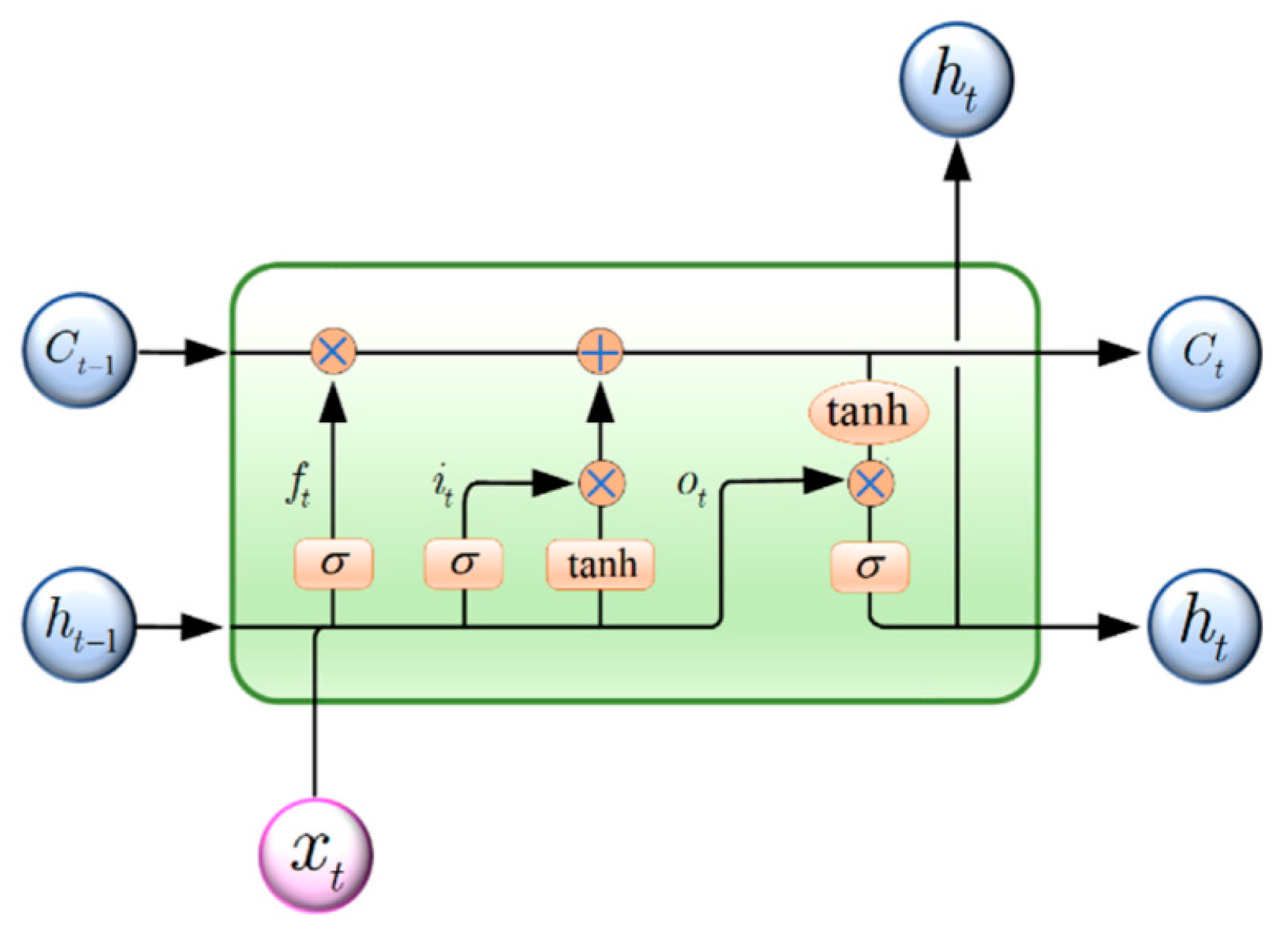

4.2. Recurrent Neural Networks (RNNs)

4.3. Auto-Encoders (AEs)

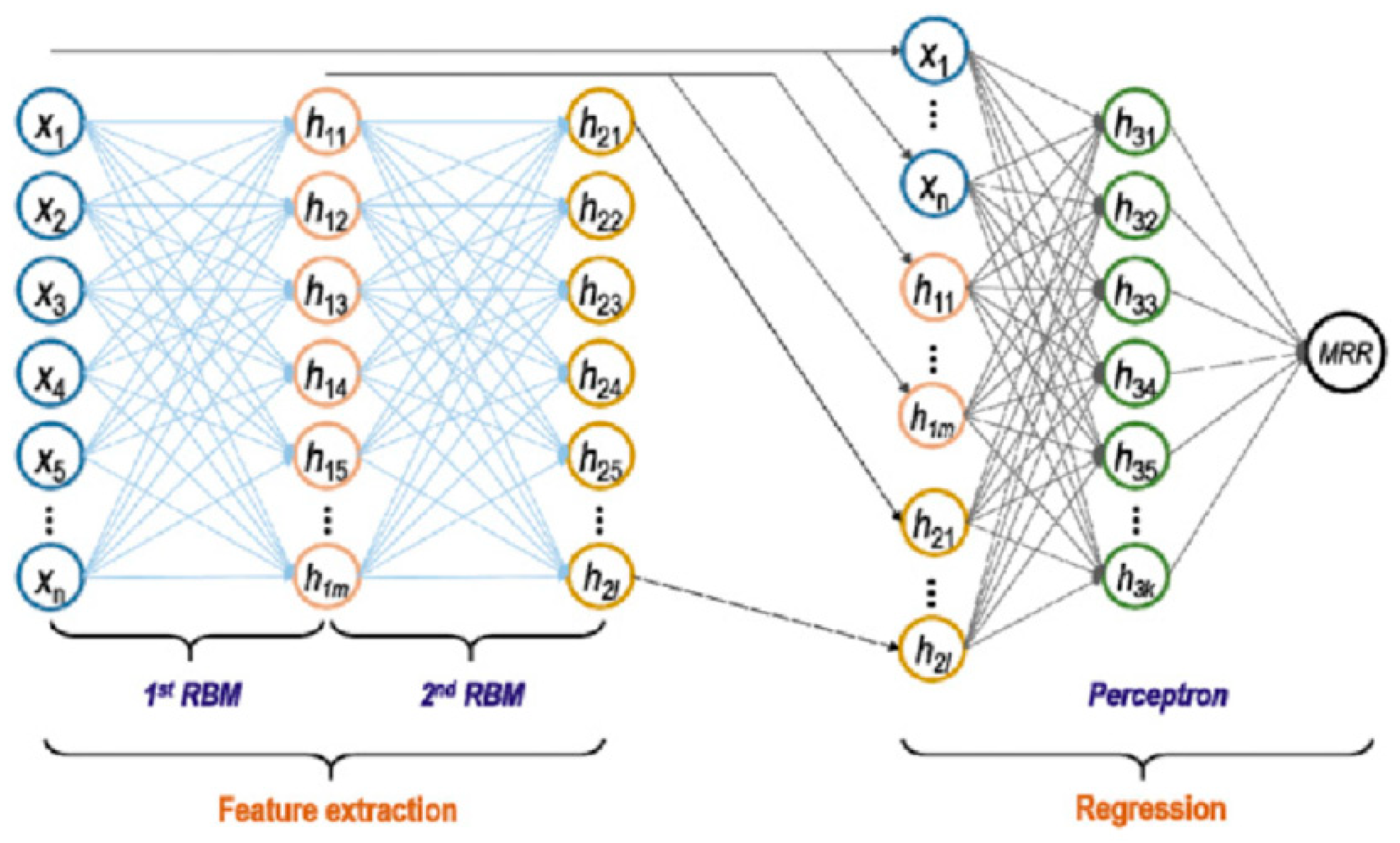

4.4. Deep Belief Networks (DBNs)

4.5. Generative Adversarial Networks (GANs)

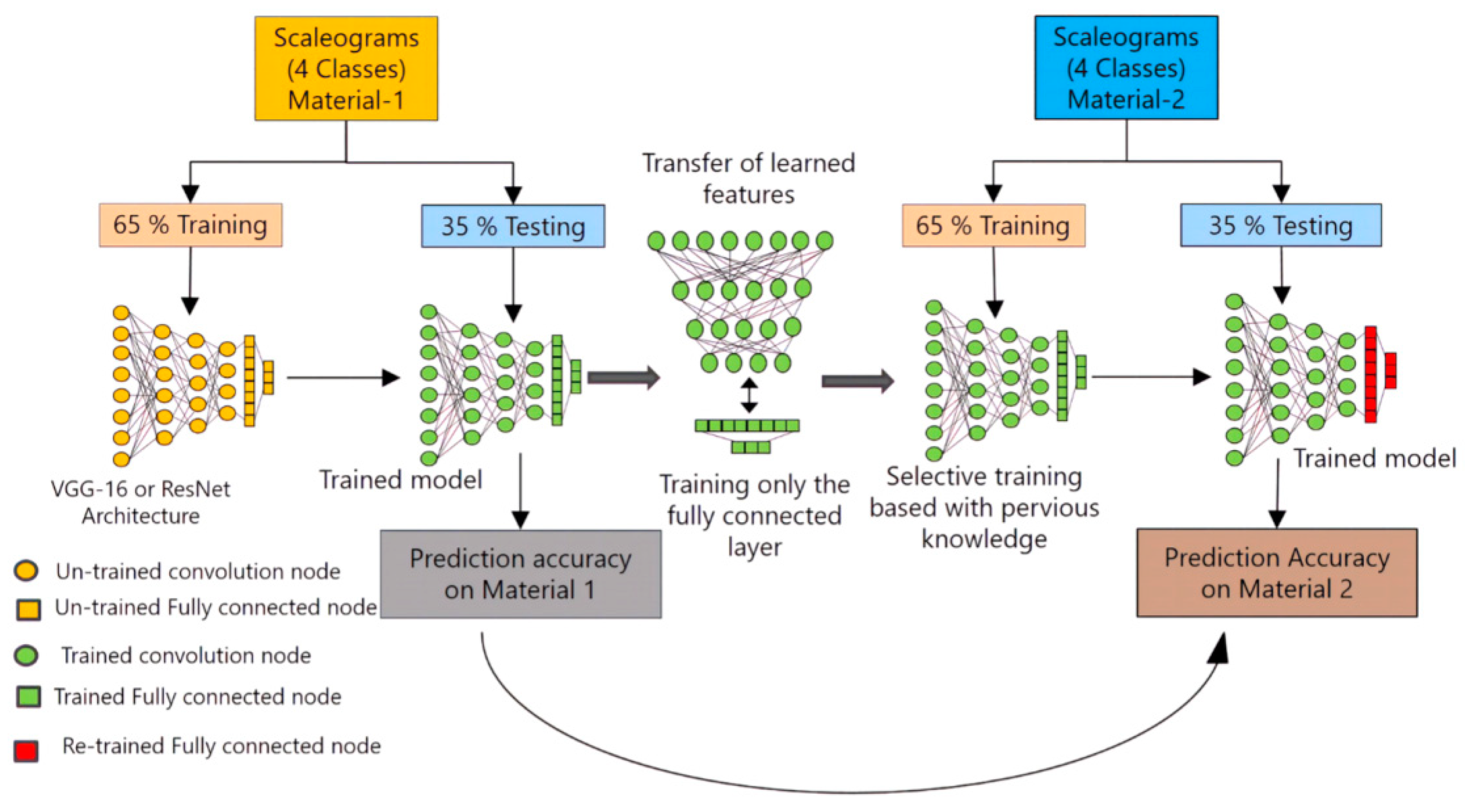

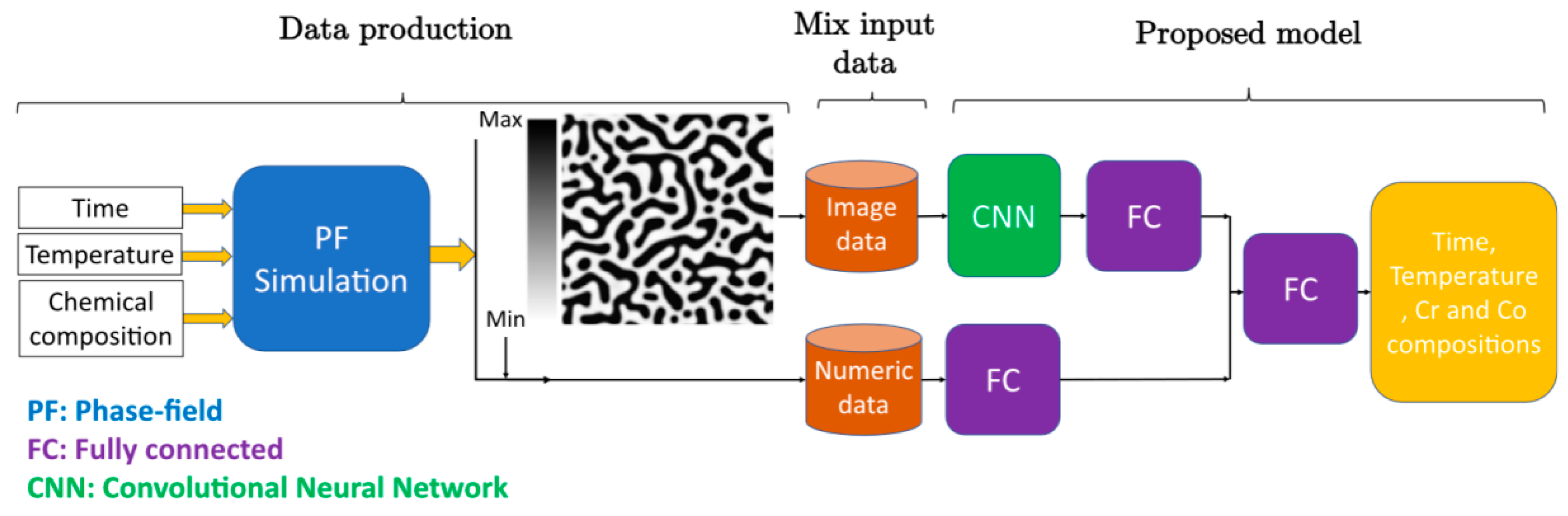

4.6. Deep Transfer Learning

5. Observation, Challenges, and Future Research Directions

- The effectiveness of artificial intelligence methods, particularly deep learning, in predicting material properties depends heavily on the availability of high-quality, comprehensive datasets. Accurate models require large and diverse datasets that cover a wide range of composite materials, manufacturing processes, and mechanical properties. However, such datasets may be limited or difficult to obtain because of proprietary restrictions, cost, and time constraints. Limited data can hinder the training and validation of artificial intelligence models, reducing their performance and generalizability. Consequently, there is substantial demand for novel approaches that remain reliable when only small datasets are available.

- The quality and consistency of the available data can also pose challenges. Composite materials span a wide range of compositions, structures, and manufacturing techniques, which leads to variations in data quality and format. Inconsistencies in experimental methodologies, measurement techniques, and reporting standards can introduce noise and bias into the datasets. Without standardized data collection procedures, it is difficult to compare and integrate different datasets, which can reduce the accuracy and reliability of AI predictions.

- Compared with traditional machine learning models, deep learning models remain challenging to design. They have numerous hyperparameters (for example, the number of layers, the number of neurons per layer, the learning rate, and the batch size), and the values selected can significantly affect prediction accuracy and generalization ability. The absence of standardized rules for hyperparameter selection is therefore a challenge when applying deep learning to material property prediction. Automated methods or guidelines for more efficient and effective hyperparameter tuning would contribute greatly to addressing this challenge.

- Although deep learning models are powerful predictors, they often lack interpretability. Their black-box nature makes it difficult to understand the features and mechanisms that drive their predictions, which can limit trust in, and acceptance of, AI-based predictions of material properties. Developing interpretable artificial intelligence models that reveal the relationship between input features and predicted mechanical properties remains an ongoing research challenge.

- Artificial intelligence models trained on specific datasets might struggle to generalize to unseen data or different composite material systems. The transferability of artificial intelligence models across different material compositions, fabrication techniques, and environmental conditions remains a challenge. Ensuring robust and reliable predictions across a wide range of composite materials requires careful consideration of model architecture, feature representation, and transfer learning techniques.

- Although artificial intelligence models can provide rapid predictions, their accuracy and reliability must be verified experimentally. This reliance on experimental testing adds time, cost, and resource requirements. Demonstrating close agreement between predicted and measured mechanical properties is crucial for establishing the trustworthiness and practical utility of artificial intelligence models.

- Furthermore, many existing studies predict only one or two mechanical properties rather than the overall mechanical behavior of composite materials. While some studies have explored the prediction of multiple mechanical properties [210], a significant research gap remains in this area. A crucial direction for future research is therefore to design effective models that accurately predict multiple mechanical properties simultaneously. Such models would provide a more comprehensive understanding of material behavior and enable engineers and researchers to make informed decisions across a wide range of mechanical properties. This research direction holds great potential for advancing material property prediction and its practical applications in various industries.

- Because large datasets for composite materials are scarce, research could explore data augmentation techniques tailored to composite materials. This could involve generating synthetic data with physics-based simulations or generative models such as generative adversarial networks (GANs), or incorporating domain knowledge. Data augmentation can increase the size and diversity of training datasets, improving the generalization and performance of artificial intelligence models.

- Combining artificial intelligence techniques with physics-based models is a promising research direction. Hybrid modeling approaches can leverage the strengths of both data-driven artificial intelligence models and mechanistic models to improve accuracy and interpretability, and can provide a better understanding of the mechanisms governing the mechanical behavior of composite materials.

- Composite materials exhibit complex hierarchical structures, and their mechanical properties depend on interactions at multiple length scales. Future research can focus on developing artificial intelligence models that can capture and predict mechanical properties at different scales, from micro to macro levels. Multi-scale modeling approaches, such as coupling artificial intelligence models with finite element analysis or molecular dynamics simulations, can facilitate accurate predictions across different length scales.

- Enhancing the interpretability of artificial intelligence models for predicting the mechanical properties of composites is an important research direction. Developing techniques to explain the underlying factors influencing predictions, such as feature importance analysis or attention mechanisms, can increase the trust and adoption of artificial intelligence models. Explainable artificial intelligence (XAI) can provide valuable insights into the structure–property relationships of composite materials and facilitate knowledge discovery.

- Collaborative efforts between artificial intelligence researchers and experimentalists are essential to validate and refine artificial intelligence predictions. Integrating artificial intelligence predictions with experimental validation can help assess the accuracy and reliability of the models. Researchers can collaborate with experimentalists to design validation experiments, compare the predicted mechanical properties with actual measurements, and iteratively refine the artificial intelligence models.

- Composite materials encompass a wide range of material systems, such as fiber-reinforced composites, polymer matrix composites, and ceramic matrix composites. Future research can focus on developing domain-specific artificial intelligence models tailored to the unique characteristics and challenges of each material system. This can involve designing specialized architectures, feature representations, and training strategies that are specific to the properties and behaviors of different composite materials.
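The hyperparameter-selection challenge raised above can be made concrete with a small example. The sketch below, written in plain Python under our own assumptions, chooses the number of neighbours k for a k-nearest-neighbour (k-NN) regressor by k-fold cross-validation over a small grid; the same search loop applies in principle to the layer counts or learning rates of a deep network, only at far higher cost. The dataset is a synthetic stand-in (an inverse rule-of-mixtures transverse modulus with added noise, using assumed fibre and matrix moduli), and the names `knn_regress`, `cross_val_mse`, and `grid_search_k` are hypothetical.

```python
import math
import random


def knn_regress(train_x, train_y, query, k):
    """Predict a scalar property as the mean target of the k nearest training points."""
    nearest = sorted(zip(train_x, train_y), key=lambda pair: math.dist(pair[0], query))[:k]
    return sum(y for _, y in nearest) / len(nearest)


def cross_val_mse(xs, ys, k, n_folds=5, seed=0):
    """Mean squared error of k-NN regression, estimated by shuffled n-fold cross-validation."""
    indices = list(range(len(xs)))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::n_folds] for i in range(n_folds)]
    squared_errors = []
    for fold in folds:
        held_out = set(fold)
        train_x = [xs[i] for i in indices if i not in held_out]
        train_y = [ys[i] for i in indices if i not in held_out]
        for i in fold:
            prediction = knn_regress(train_x, train_y, xs[i], k)
            squared_errors.append((prediction - ys[i]) ** 2)
    return sum(squared_errors) / len(squared_errors)


def grid_search_k(xs, ys, candidates=(1, 3, 5, 7, 9), n_folds=5):
    """Return the candidate k with the lowest cross-validated MSE, plus all scores."""
    scores = {k: cross_val_mse(xs, ys, k, n_folds) for k in candidates}
    best_k = min(scores, key=scores.get)
    return best_k, scores


# Hypothetical data: transverse modulus (GPa) vs fibre volume fraction, from the inverse
# rule of mixtures with assumed Ef = 230 GPa and Em = 3.5 GPa plus 3 % relative noise.
EF, EM = 230.0, 3.5
rng = random.Random(42)
xs = [(rng.uniform(0.3, 0.7),) for _ in range(40)]
ys = [1.0 / (vf / EF + (1.0 - vf) / EM) * (1.0 + rng.gauss(0.0, 0.03)) for (vf,) in xs]

best_k, scores = grid_search_k(xs, ys)
print(best_k, {k: round(s, 3) for k, s in scores.items()})
```

In practice, input features would be scaled before computing distances, and a final check on a test set that took no part in the search helps guard against optimistic error estimates.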
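For the multi-property research direction discussed above, one of the simplest multi-output models is k-NN regression, which averages the full property vectors of the nearest training samples and therefore predicts all properties in a single pass. The sketch below is illustrative only: the single input feature, the (E1, E2) targets generated from Voigt and Reuss rule-of-mixtures estimates, the assumed constituent moduli, and the function name `knn_multi_output` are our assumptions, not the method of any cited study.

```python
import math


def knn_multi_output(train_x, train_y, query, k=3):
    """Predict every output at once as the component-wise mean of the k nearest target vectors."""
    nearest = sorted(zip(train_x, train_y), key=lambda pair: math.dist(pair[0], query))[:k]
    n_outputs = len(train_y[0])
    return [sum(y[j] for _, y in nearest) / len(nearest) for j in range(n_outputs)]


# Hypothetical training set: input = (fibre volume fraction,), outputs = (E1, E2) in GPa,
# computed with the Voigt (E1) and Reuss (E2) estimates for assumed Ef = 230 GPa, Em = 3.5 GPa.
EF, EM = 230.0, 3.5
train_x = [(vf,) for vf in (0.3, 0.4, 0.5, 0.6, 0.7)]
train_y = [(vf * EF + (1.0 - vf) * EM, 1.0 / (vf / EF + (1.0 - vf) / EM)) for (vf,) in train_x]

e1, e2 = knn_multi_output(train_x, train_y, query=(0.45,), k=2)
print(f"E1 ~ {e1:.2f} GPa, E2 ~ {e2:.2f} GPa")
```

Sharing one neighbourhood across all outputs keeps the predicted properties mutually consistent, which is one argument for joint rather than separate single-property models; a neural network with several output neurons follows the same idea with a learned mapping.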
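As a minimal illustration of physics-based data augmentation, the sketch below generates synthetic samples from two classical micromechanics estimates for a unidirectional lamina: the rule of mixtures (Voigt) for the longitudinal modulus, E1 = Vf*Ef + (1 - Vf)*Em, and the inverse rule of mixtures (Reuss) for the transverse modulus, 1/E2 = Vf/Ef + (1 - Vf)/Em. The fibre and matrix moduli, the volume-fraction range, the relative noise level, and the function names are assumptions chosen for illustration; a real study would more likely draw on validated simulations (for example, finite element models of representative volume elements) than on these closed-form estimates.

```python
import random


def voigt_longitudinal_modulus(vf, ef, em):
    """Rule of mixtures (Voigt, iso-strain) estimate of the longitudinal modulus E1."""
    return vf * ef + (1.0 - vf) * em


def reuss_transverse_modulus(vf, ef, em):
    """Inverse rule of mixtures (Reuss, iso-stress) estimate of the transverse modulus E2."""
    return 1.0 / (vf / ef + (1.0 - vf) / em)


def augment_with_micromechanics(n_samples, ef, em, vf_range=(0.3, 0.7), noise=0.02, seed=0):
    """Draw synthetic (Vf, E1, E2) samples; `noise` is a relative Gaussian perturbation
    that crudely mimics experimental scatter."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n_samples):
        vf = rng.uniform(*vf_range)
        e1 = voigt_longitudinal_modulus(vf, ef, em) * (1.0 + rng.gauss(0.0, noise))
        e2 = reuss_transverse_modulus(vf, ef, em) * (1.0 + rng.gauss(0.0, noise))
        samples.append((vf, e1, e2))
    return samples


# Assumed carbon-fibre/epoxy-like constituent moduli in GPa (illustrative values only).
synthetic = augment_with_micromechanics(200, ef=230.0, em=3.5)
print(synthetic[:3])
```

Synthetic samples like these can pad a small experimental set, but they inherit the simplifications of the generating model, so validation should still be performed against measured data only.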
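One common way to realize the hybrid physics/data-driven idea described above is residual (discrepancy) learning: a mechanistic model supplies a baseline prediction and a data-driven model learns only the correction. The sketch below assumes the inverse rule of mixtures as the physics baseline for the transverse modulus and a straight-line fit in fibre volume fraction as the data-driven correction. Because no measurements are available here, the Halpin–Tsai estimate (with an assumed shape factor xi = 2) stands in for reference data; the moduli, `HybridModulusModel`, and the other names are hypothetical.

```python
def reuss_transverse_modulus(vf, ef, em):
    """Physics baseline: inverse rule of mixtures (a lower-bound estimate of E2)."""
    return 1.0 / (vf / ef + (1.0 - vf) / em)


def halpin_tsai_transverse_modulus(vf, ef, em, xi=2.0):
    """Halpin-Tsai estimate of E2, used here only as a stand-in for reference data."""
    eta = (ef / em - 1.0) / (ef / em + xi)
    return em * (1.0 + xi * eta * vf) / (1.0 - eta * vf)


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


class HybridModulusModel:
    """Physics baseline plus a learned linear correction of its residual."""

    def __init__(self, ef, em):
        self.ef, self.em = ef, em
        self.slope, self.intercept = 0.0, 0.0

    def physics(self, vf):
        return reuss_transverse_modulus(vf, self.ef, self.em)

    def fit(self, vfs, targets):
        # Learn only what the physics model misses.
        residuals = [t - self.physics(vf) for vf, t in zip(vfs, targets)]
        self.slope, self.intercept = fit_line(vfs, residuals)
        return self

    def predict(self, vf):
        return self.physics(vf) + self.slope * vf + self.intercept


EF, EM = 230.0, 3.5  # assumed fibre and matrix moduli, GPa
vfs = [0.30, 0.40, 0.50, 0.60, 0.70]
targets = [halpin_tsai_transverse_modulus(vf, EF, EM) for vf in vfs]

model = HybridModulusModel(EF, EM).fit(vfs, targets)
physics_mse = sum((t - model.physics(v)) ** 2 for v, t in zip(vfs, targets)) / len(vfs)
hybrid_mse = sum((t - model.predict(v)) ** 2 for v, t in zip(vfs, targets)) / len(vfs)
print(f"physics-only MSE: {physics_mse:.3f}, hybrid MSE: {hybrid_mse:.3f}")
```

Keeping the physics term explicit means the learned correction stays small and can be inspected directly, which is one reason hybrid models are often argued to be more interpretable; with real data, the correction would be fitted to measurements and checked on held-out specimens.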
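Feature-importance analysis, mentioned above as one route to explainable AI, can be illustrated with permutation importance: shuffle one input column, re-evaluate the model, and record how much the error grows. The sketch below is model-agnostic and accepts any trained predictor as a callable. For self-containment, a rule-of-mixtures function stands in for a trained model, and the second input (a hypothetical "batch code") is deliberately ignored by it, so its importance should come out as zero; all names, moduli, and data are illustrative assumptions.

```python
import random


def mean_squared_error(model, X, y):
    """MSE of a callable model over rows X against targets y."""
    return sum((model(x) - t) ** 2 for x, t in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats=5, seed=0):
    """Average increase in MSE when each feature column is randomly shuffled."""
    rng = random.Random(seed)
    baseline = mean_squared_error(model, X, y)
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Rebuild each row with feature j replaced by its shuffled value.
            X_perm = [row[:j] + (value,) + row[j + 1:] for row, value in zip(X, column)]
            increases.append(mean_squared_error(model, X_perm, y) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


EF, EM = 230.0, 3.5  # assumed fibre and matrix moduli, GPa


def surrogate_model(x):
    """Stand-in for a trained model: predicts E1 from Vf and ignores the batch code."""
    vf, _batch_code = x
    return vf * EF + (1.0 - vf) * EM


data_rng = random.Random(1)
X = [(data_rng.uniform(0.3, 0.7), data_rng.uniform(0.0, 1.0)) for _ in range(20)]
y = [surrogate_model(x) + data_rng.gauss(0.0, 1.0) for x in X]

print(permutation_importance(surrogate_model, X, y))
```

Computing the importances on held-out data rather than on the training set gives a more trustworthy ranking, and correlated inputs can share or mask importance, so the results are best read alongside domain knowledge.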

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Song, L.; Wang, D.; Liu, X.; Yin, A.; Long, Z. Prediction of mechanical properties of composite materials using multimodal fusion learning. Sens. Actuators A Phys. 2023, 358, 114433.

- Yu, Z.; Ye, S.; Sun, Y.; Zhao, H.; Feng, X.Q. Deep learning method for predicting the mechanical properties of aluminum alloys with small data sets. Mater. Today Commun. 2021, 28, 102570.

- Ghetiya, N.D.; Patel, K.M. Prediction of Tensile Strength in Friction Stir Welded Aluminium Alloy Using Artificial Neural Network. Procedia Technol. 2014, 14, 274–281.

- Mishra, S.K.; Brahma, A.; Dutta, K. Prediction of mechanical properties of Al-Si-Mg alloy using artificial neural network. Sadhana-Acad. Proc. Eng. Sci. 2021, 46, 139.

- Tran, H.D.; Kim, C.; Chen, L.; Chandrasekaran, A.; Batra, R.; Venkatram, S.; Kamal, D.; Lightstone, J.P.; Gurnani, R.; Shetty, P.; et al. Machine-learning predictions of polymer properties with Polymer Genome. J. Appl. Phys. 2020, 128, 171104.

- Han, T.; Huang, J.; Sant, G.; Neithalath, N.; Kumar, A. Predicting mechanical properties of ultrahigh temperature ceramics using machine learning. J. Am. Ceram. Soc. 2022, 105, 6851–6863.

- Liu, J.; Zhang, Y.; Zhang, Y.; Kitipornchai, S.; Yang, J. Machine learning assisted prediction of mechanical properties of graphene/aluminium nanocomposite based on molecular dynamics simulation. Mater. Des. 2022, 213, 110334.

- Lee, J.A.; Almond, D.P.; Harris, B. Use of neural networks for the prediction of fatigue lives of composite materials. Compos. Part A Appl. Sci. Manuf. 1999, 30, 1159–1169.

- Altinkok, N.; Koker, R. Neural network approach to prediction of bending strength and hardening behaviour of particulate reinforced (Al-Si-Mg)-aluminium matrix composites. Mater. Des. 2004, 25, 595–602.

- Koker, R.; Altinkok, N.; Demir, A. Neural network based prediction of mechanical properties of particulate reinforced metal matrix composites using various training algorithms. Mater. Des. 2007, 28, 616–627.

- Vinoth, A.; Datta, S. Design of the ultrahigh molecular weight polyethylene composites with multiple nanoparticles: An artificial intelligence approach. J. Compos. Mater. 2020, 54, 179–192.

- Daghigh, V.; Lacy, T.E.; Daghigh, H.; Gu, G.; Baghaei, K.T.; Horstemeyer, M.F.; Pittman, C.U. Machine learning predictions on fracture toughness of multiscale bio-nano-composites. J. Reinf. Plast. Compos. 2020, 39, 587–598.

- Shah, V.; Zadourian, S.; Yang, C.; Zhang, Z.; Gu, G.X. Data-driven approach for the prediction of mechanical properties of carbon fiber reinforced composites. Mater. Adv. 2022, 3, 7319–7327.

- Barbosa, A.; Upadhyaya, P.; Iype, E. Neural network for mechanical property estimation of multilayered laminate composite. Mater. Today Proc. 2020, 28, 982–985.

- Al Hassan, M.; Derradji, M.; Ali, M.M.M.; Rawashdeh, A.; Wang, J.; Pan, Z.; Liu, W. Artificial neural network prediction of thermal and mechanical properties for Bi2O3-polybenzoxazine nanocomposites. J. Appl. Polym. Sci. 2022, 139, e52774.

- Béji, H.; Kanit, T.; Messager, T. Prediction of Effective Elastic and Thermal Properties of Heterogeneous Materials Using Convolutional Neural Networks. Appl. Mech. 2023, 4, 287–303.

- Balasundaram, R.; Devi, S.S.; Balan, G.S. Machine learning approaches for prediction of properties of natural fiber composites: Apriori algorithm. Aust. J. Mech. Eng. 2022, 20, 30091.

- Gu, G.X.; Chen, C.T.; Buehler, M.J. De novo composite design based on machine learning algorithm. Extreme Mech. Lett. 2018, 18, 19–28.

- Stel’makh, S.A.; Shcherban’, E.M.; Beskopylny, A.N.; Mailyan, L.R.; Meskhi, B.; Razveeva, I.; Kozhakin, A.; Beskopylny, N. Prediction of Mechanical Properties of Highly Functional Lightweight Fiber-Reinforced Concrete Based on Deep Neural Network and Ensemble Regression Trees Methods. Materials 2022, 15, 6740.

- Turing, A.M. Computing Machinery and Intelligence. Mind 1950, 59, 433–460.

- Helal, S. The Expanding Frontier of Artificial Intelligence. Computer 2018, 51, 14–17.

- Ramprasad, R.; Batra, R.; Pilania, G.; Mannodi-Kanakkithodi, A.; Kim, C. Machine learning in materials informatics: Recent applications and prospects. NPJ Comput. Mater. 2017, 3, 54.

- Jordan, M.I.; Mitchell, T.M. Machine learning: Trends, perspectives, and prospects. Science 2015, 349, 255–260.

- Zhu, J.; Jia, Y.; Lei, J.; Liu, Z. Deep learning approach to mechanical property prediction of single-network hydrogel. Mathematics 2021, 9, 2804.

- Chibani, S.; Coudert, F.X. Machine learning approaches for the prediction of materials properties. APL Mater. 2020, 8, 080701.

- Chan, C.H.; Sun, M.; Huang, B. Application of machine learning for advanced material prediction and design. EcoMat 2022, 4, e12194.

- Guo, K.; Yang, Z.; Yu, C.H.; Buehler, M.J. Artificial intelligence and machine learning in design of mechanical materials. Mater. Horizons 2021, 8, 1153–1172.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Alzubaidi, L.; Zhang, J.; Humaidi, A.J.; Al-Dujaili, A.; Duan, Y.; Al-Shamma, O.; Santamaría, J.; Fadhel, M.A.; Al-Amidie, M.; Farhan, L. Review of deep learning: Concepts, CNN architectures, challenges, applications, future directions. J. Big Data 2021, 8, 1–74.

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

- Ampazis, N.; Alexopoulos, N.D. Prediction of aircraft aluminum alloys tensile mechanical properties degradation using Support Vector Machines. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2010; Volume 6040, pp. 9–18.

- Tang, J.L.; Cai, Q.R.; Liu, Y.J. Prediction of material mechanical properties with Support Vector Machine. In Proceedings of the 2010 International Conference on Machine Vision and Human-Machine Interface, MVHI, Kaifeng, China, 24–25 April 2010; pp. 592–595.

- Bonifácio, A.L.; Mendes, J.C.; Farage, M.C.R.; Barbosa, F.S.; Barbosa, C.B.; Beaucour, A.L. Application of support vector machine and finite element method to predict the mechanical properties of concrete. Lat. Am. J. Solids Struct. 2019, 16, e205.

- Hasanzadeh, A.; Vatin, N.I.; Hematibahar, M.; Kharun, M.; Shooshpasha, I. Prediction of the Mechanical Properties of Basalt Fiber Reinforced High-Performance Concrete Using Machine Learning Techniques. Materials 2022, 15, 7165.

- Cheng, W.D.; Cai, C.Z.; Luo, Y.; Li, Y.H.; Zhao, C.J. Mechanical properties prediction for carbon nanotubes/epoxy composites by using support vector regression. Mod. Phys. Lett. B 2015, 29, 1550016.

- Bhattacharya, S.; Kalita, K.; Čep, R.; Chakraborty, S. A comparative analysis on prediction performance of regression models during machining of composite materials. Materials 2021, 14, 6689.

- Lyu, F.; Fan, X.; Ding, F.; Chen, Z. Prediction of the axial compressive strength of circular concrete-filled steel tube columns using sine cosine algorithm-support vector regression. Compos. Struct. 2021, 273, 114282.

- Mahajan, A.; Bajoliya, S.; Khandelwal, S.; Guntewar, R.; Ruchitha, A.; Singh, I.; Arora, N. Comparison of ML algorithms for prediction of tensile strength of polymer matrix composites. Mater. Today Proc. 2022, 12, 105.

- Sharma, A.; Madhushri, P.; Kushvaha, V.; Kumar, A. Prediction of the Fracture Toughness of Silicafilled Epoxy Composites using K-Nearest Neighbor (KNN) Method. In Proceedings of the 2020 International Conference on Computational Performance Evaluation, ComPE 2020, Shillong, India, 2–4 July 2020; pp. 194–198.

- Li, M.; Zhang, H.; Li, S.; Zhu, W.; Ke, Y. Machine learning and materials informatics approaches for predicting transverse mechanical properties of unidirectional CFRP composites with microvoids. Mater. Des. 2022, 224, 111340.

- Thirumoorthy, A.; Arjunan, T.V.; Kumar, K.L.S. Experimental investigation on mechanical properties of reinforced Al6061 composites and its prediction using KNN-ALO algorithms. Int. J. Rapid Manuf. 2019, 8, 161.

- Sarker, I.H. Machine Learning: Algorithms, Real-World Applications and Research Directions. SN Comput. Sci. 2021, 2, 160.

- Qi, Z.; Zhang, N.; Liu, Y.; Chen, W. Prediction of mechanical properties of carbon fiber based on cross-scale FEM and machine learning. Compos. Struct. 2019, 212, 199–206.

- Kosicka, E.; Krzyzak, A.; Dorobek, M.; Borowiec, M. Prediction of Selected Mechanical Properties of Polymer Composites with Alumina Modifiers. Materials 2022, 15, 882.

- Hegde, A.L.; Shetty, R.; Chiniwar, D.S.; Naik, N.; Nayak, M. Optimization and Prediction of Mechanical Characteristics on Vacuum Sintered Ti-6Al-4V-SiCp Composites Using Taguchi’s Design of Experiments, Response Surface Methodology and Random Forest Regression. J. Compos. Sci. 2022, 6, 339.

- Zhang, C.; Li, Y.; Jiang, B.; Wang, R.; Liu, Y.; Jia, L. Mechanical properties prediction of composite laminate with FEA and machine learning coupled method. Compos. Struct. 2022, 299, 116086.

- Almohammed, F.; Soni, J. Using Random Forest and Random Tree model to Predict the splitting tensile strength for the concrete with basalt fiber reinforced concrete. IOP Conf. Ser. Earth Environ. Sci. 2023, 1110, 012072.

- Karamov, R.; Akhatov, I.; Sergeichev, I.V. Prediction of Fracture Toughness of Pultruded Composites Based on Supervised Machine Learning. Polymers 2022, 14, 3619.

- Pathan, M.V.; Ponnusami, S.A.; Pathan, J.; Pitisongsawat, R.; Erice, B.; Petrinic, N.; Tagarielli, V.L. Predictions of the mechanical properties of unidirectional fibre composites by supervised machine learning. Sci. Rep. 2019, 9, 13964.

- Shang, M.; Li, H.; Ahmad, A.; Ahmad, W.; Ostrowski, K.A.; Aslam, F.; Joyklad, P.; Majka, T.M. Predicting the Mechanical Properties of RCA-Based Concrete Using Supervised Machine Learning Algorithms. Materials 2022, 15, 647.

- Guo, P.; Meng, W.; Xu, M.; Li, V.C.; Bao, Y. Predicting mechanical properties of high-performance fiber-reinforced cementitious composites by integrating micromechanics and machine learning. Materials 2021, 14, 3143.

- Krishnan, K.A.; Anjana, R.; George, K.E. Effect of alkali-resistant glass fiber on polypropylene/polystyrene blends: Modeling and characterization. Polym. Compos. 2016, 37, 398–406.

- Kabbani, M.S.; El Kadi, H.A. Predicting the effect of cooling rate on the mechanical properties of glass fiber–polypropylene composites using artificial neural networks. J. Thermoplast. Compos. Mater. 2018, 32, 1268–1281.

- Wang, J.; Lin, C.; Feng, G.; Li, B.; Wu, L.; Wei, C.; Lv, Y.; Cheng, J. Fracture prediction of CFRP laminates subjected to CW laser heating and pre-tensile loads based on ANN. AIP Adv. 2022, 12, 015010.

- Sharan; Mitra, M. Prediction of static strength properties of carbon fiber-reinforced composite using artificial neural network. Model. Simul. Mater. Sci. Eng. 2022, 30, 075001.

- Devadiga, U.; Poojary, R.K.R.; Fernandes, P. Artificial neural network technique to predict the properties of multiwall carbon nanotube-fly ash reinforced aluminium composite. J. Mater. Res. Technol. 2019, 8, 3970–3977.

- Wang, W.; Wang, H.; Zhou, J.; Fan, H.; Liu, X. Machine learning prediction of mechanical properties of braided-textile reinforced tubular structures. Mater. Des. 2021, 212, 110181.

- Rajkumar, A.G.; Hemath, M.; Nagaraja, B.K.; Neerakallu, S.; Thiagamani, S.M.K.; Asrofi, M. An artificial neural network prediction on physical, mechanical, and thermal characteristics of giant reed fiber reinforced polyethylene terephthalate composite. J. Ind. Text. 2022, 51, 769S–803S.

- Kumar, C.S.; Arumugam, V.; Sengottuvelusamy, R.; Srinivasan, S.; Dhakal, H.N. Failure strength prediction of glass/epoxy composite laminates from acoustic emission parameters using artificial neural network. Appl. Acoust. 2017, 115, 32–41.

- Khademi, F.; Akbari, M.; Jamal, S.M.; Nikoo, M. Multiple linear regression, artificial neural network, and fuzzy logic prediction of 28 days compressive strength of concrete. Front. Struct. Civ. Eng. 2017, 11, 90–99.

- Shabley, A.; Nikolskaia, K.; Varkentin, V.; Peshkov, R.; Petrova, L. Predicting the Destruction of Composite Materials Using Machine Learning Methods. Transp. Res. Procedia 2023, 68, 191–196.

- Tanyildizi, H. Fuzzy logic model for prediction of mechanical properties of lightweight concrete exposed to high temperature. Mater. Des. 2009, 30, 2205–2210.

- Tarasov, V.; Tan, H.; Jarfors, A.E.W.; Seifeddine, S. Fuzzy logic-based modelling of yield strength of as-cast A356 alloy. Neural Comput. Appl. 2020, 32, 5833–5844.

- Nawafleh, N.; Al-Oqla, F.M. Evaluation of mechanical properties of fiber-reinforced syntactic foam thermoset composites: A robust artificial intelligence modeling approach for improved accuracy with little datasets. J. Mech. Behav. Mater. 2023, 32, 0285.

- Zhang, J.; Xu, J.; Liu, C.; Zheng, J. Prediction of Rubber Fiber Concrete Strength Using Extreme Learning Machine. Front. Mater. 2021, 7, 465.

- Li, J.; Salim, R.D.; Aldlemy, M.S.; Abdullah, J.M.; Yaseen, Z.M. Fiberglass-Reinforced Polyester Composites Fatigue Prediction Using Novel Data-Intelligence Model. Arab. J. Sci. Eng. 2019, 44, 3343–3356.

- Hestroffer, J.M.; Charpagne, M.A.; Latypov, M.I.; Beyerlein, I.J. Graph neural networks for efficient learning of mechanical properties of polycrystals. Comput. Mater. Sci. 2023, 217, 111894.

- Lu, W.; Yang, Z.; Buehler, M.J. Rapid mechanical property prediction and de novo design of three-dimensional spider webs through graph and GraphPerceiver neural networks. J. Appl. Phys. 2022, 132, 074703.

- Maurizi, M.; Gao, C.; Berto, F. Predicting stress, strain and deformation fields in materials and structures with graph neural networks. Sci. Rep. 2022, 12, 21834.

- Kibrete, F.; Woldemichael, D.E. Applications of Artificial Intelligence for Fault Diagnosis of Rotating Machines: A Review. In Lecture Notes of the Institute for Computer Sciences, Social-Informatics and Telecommunications Engineering, LNICST; Springer: Berlin/Heidelberg, Germany, 2023; Volume 455, pp. 41–62.

- Holden, A.V. Competition and cooperation in neural nets. Phys. D Nonlinear Phenom. 1983, 8, 284–285.

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Lo, C.C.; Lee, C.H.; Huang, W.C. Prognosis of bearing and gear wears using convolutional neural network with hybrid loss function. Sensors 2020, 20, 3539.

- Wu, C.; Jiang, P.; Ding, C.; Feng, F.; Chen, T. Intelligent fault diagnosis of rotating machinery based on one-dimensional convolutional neural network. Comput. Ind. 2019, 108, 53–61.

- Yang, C.; Kim, Y.; Ryu, S.; Gu, G.X. Prediction of composite microstructure stress-strain curves using convolutional neural networks. Mater. Des. 2020, 189, 108509.

- Yang, C.; Kim, Y.; Ryu, S.; Gu, G.X. Using convolutional neural networks to predict composite properties beyond the elastic limit. MRS Commun. 2019, 9, 609–617.

- Abueidda, D.W.; Almasri, M.; Ammourah, R.; Ravaioli, U.; Jasiuk, I.M.; Sobh, N.A. Prediction and optimization of mechanical properties of composites using convolutional neural networks. Compos. Struct. 2019, 227, 111264.

- Li, X.; Liu, Z.; Cui, S.; Luo, C.; Li, C.; Zhuang, Z. Predicting the effective mechanical property of heterogeneous materials by image based modeling and deep learning. Comput. Methods Appl. Mech. Eng. 2019, 347, 735–753.

- Ye, S.; Li, B.; Li, Q.; Zhao, H.P.; Feng, X.Q. Deep neural network method for predicting the mechanical properties of composites. Appl. Phys. Lett. 2019, 115, 161901.

- Pakzad, S.S.; Roshan, N.; Ghalehnovi, M. Comparison of various machine learning algorithms used for compressive strength prediction of steel fiber-reinforced concrete. Sci. Rep. 2023, 13, 3646.

- Ramkumar, G.; Sahoo, S.; Anitha, G.; Ramesh, S.; Nirmala, P.; Tamilselvi, M.; Subbiah, R.; Rajkumar, S. An Unconventional Approach for Analyzing the Mechanical Properties of Natural Fiber Composite Using Convolutional Neural Network. Adv. Mater. Sci. Eng. 2021, 2021, 5450935.

- Kim, D.-W.; Lim, J.H.; Lee, S. Prediction and validation of the transverse mechanical behavior of unidirectional composites considering interfacial debonding through convolutional neural networks. Compos. Part B Eng. 2021, 225, 109314.

- Valishin, A.; Beriachvili, N. Applying neural networks to analyse the properties and structure of composite materials. E3S Web Conf. 2023, 376, 01041.

- Rao, C.; Liu, Y. Three-dimensional convolutional neural network (3D-CNN) for heterogeneous material homogenization. Comput. Mater. Sci. 2020, 184, 109850.

- Yang, Z.; Yabansu, Y.C.; Al-Bahrani, R.; Liao, W.-K.; Choudhary, A.N.; Kalidindi, S.R.; Agrawal, A. Deep learning approaches for mining structure-property linkages in high contrast composites from simulation datasets. Comput. Mater. Sci. 2018, 151, 278–287.

- Hanakata, P.Z.; Cubuk, E.D.; Campbell, D.K.; Park, H.S. Accelerated Search and Design of Stretchable Graphene Kirigami Using Machine Learning. Phys. Rev. Lett. 2018, 121, 255304.

- Gu, G.X.; Chen, C.T.; Richmond, D.J.; Buehler, M.J. Bioinspired hierarchical composite design using machine learning: Simulation, additive manufacturing, and experiment. Mater. Horizons 2018, 5, 939–945.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Cho, K.; van Merriënboer, B.; Gulcehre, C.; Bahdanau, D.; Bougares, F.; Schwenk, H.; Bengio, Y. Learning phrase representations using RNN encoder-decoder for statistical machine translation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP 2014), Doha, Qatar, 25–29 October 2014; pp. 1724–1734.

- Chen, G. Recurrent neural networks (RNNs) learn the constitutive law of viscoelasticity. Comput. Mech. 2021, 67, 1009–1019.

- Gorji, M.B.; Mozaffar, M.; Heidenreich, J.N.; Cao, J.; Mohr, D. On the potential of recurrent neural networks for modeling path dependent plasticity. J. Mech. Phys. Solids 2020, 143, 103972.

- Mozaffar, M.; Bostanabad, R.; Chen, W.; Ehmann, K.; Cao, J.; Bessa, M.A. Deep learning predicts path-dependent plasticity. Proc. Natl. Acad. Sci. USA 2019, 116, 26414–26420.

- Trzepieciński, T.; Ryzińska, G.; Biglar, M.; Gromada, M. Modelling of multilayer actuator layers by homogenisation technique using Digimat software. Ceram. Int. 2017, 43, 3259–3266.

- Frankel, A.L.; Jones, R.E.; Alleman, C.; Templeton, J.A. Predicting the mechanical response of oligocrystals with deep learning. Comput. Mater. Sci. 2019, 169, 109099.

- Wang, K.; Sun, W.C. A multiscale multi-permeability poroplasticity model linked by recursive homogenizations and deep learning. Comput. Methods Appl. Mech. Eng. 2018, 334, 337–380.

- Li, X.; Hu, Y.; Zio, E.; Kang, R. A Bayesian Optimal Design for Accelerated Degradation Testing Based on the Inverse Gaussian Process. IEEE Access 2017, 5, 5690–5701.

- Qin, L.; Huang, W.; Du, Y.; Zheng, L.; Jawed, M.K. Genetic algorithm-based inverse design of elastic gridshells. Struct. Multidiscip. Optim. 2020, 62, 2691–2707.

- Bureerat, S.; Pholdee, N. Inverse problem based differential evolution for efficient structural health monitoring of trusses. Appl. Soft Comput. 2018, 66, 462–472.

- Khadilkar, M.R.; Paradiso, S.; Delaney, K.T.; Fredrickson, G.H. Inverse Design of Bulk Morphologies in Multiblock Polymers Using Particle Swarm Optimization. Macromolecules 2017, 50, 6702–6709.

- Sun, G.; Sun, Y.; Wang, S. Artificial neural network based inverse design: Airfoils and wings. Aerosp. Sci. Technol. 2015, 42, 415–428.

- Wirkert, S.J.; Kenngott, H.; Mayer, B.; Mietkowski, P.; Wagner, M.; Sauer, P.; Clancy, N.T.; Elson, D.S.; Maier-Hein, L. Robust near real-time estimation of physiological parameters from megapixel multispectral images with inverse Monte Carlo and random forest regression. Int. J. Comput. Assist. Radiol. Surg. 2016, 11, 909–917.

- Wu, Z.; Ding, C.; Li, G.; Han, X.; Li, J. Learning solutions to the source inverse problem of wave equations using LS-SVM. J. Inverse Ill-Posed Probl. 2019, 27, 657–669.

- Rahnama, A.; Zepon, G.; Sridhar, S. Machine learning based prediction of metal hydrides for hydrogen storage, part II: Prediction of material class. Int. J. Hydrogen Energy 2019, 44, 7345–7353.

- Zhang, Y.; Xu, X. Machine learning the magnetocaloric effect in manganites from lattice parameters. Appl. Phys. A Mater. Sci. Process. 2020, 126, 341.

- Sun, Y.T.; Bai, H.Y.; Li, M.Z.; Wang, W.H. Machine Learning Approach for Prediction and Understanding of Glass-Forming Ability. J. Phys. Chem. Lett. 2017, 8, 3434–3439.

- Xiong, J.; Shi, S.Q.; Zhang, T.Y. A machine-learning approach to predicting and understanding the properties of amorphous metallic alloys. Mater. Des. 2020, 187, 108378.

- Ward, L.; O’Keeffe, S.C.; Stevick, J.; Jelbert, G.R.; Aykol, M.; Wolverton, C. A machine learning approach for engineering bulk metallic glass alloys. Acta Mater. 2018, 159, 102–111.

- Zhang, Y.; Wen, C.; Wang, C.; Antonov, S.; Xue, D.; Bai, Y.; Su, Y. Phase prediction in high entropy alloys with a rational selection of materials descriptors and machine learning models. Acta Mater. 2020, 185, 528–539.

- Prashun, G.; Vladan, S.; Eric, S.T. Computationally guided discovery of thermoelectric materials. Nat. Rev. Mater. 2017, 2, 17053. [Google Scholar] [CrossRef]

- Ratnayake, R.M.C.; Antosz, K. Risk-Based Maintenance Assessment in the Manufacturing Industry: Minimisation of Suboptimal Prioritisation. Manag. Prod. Eng. Rev. 2017, 8, 38–45. [Google Scholar] [CrossRef]

- Kozłowski, E.; Antosz, K.; Mazurkiewicz, D.; Sęp, J.; Żabiński, T. Integrating advanced measurement and signal processing for reliability decision-making. Eksploat. i Niezawodn.-Maint. Reliab. 2021, 23, 777–787. [Google Scholar] [CrossRef]

- Chen, Q.; Jia, R.; Pang, S. Deep long short-term memory neural network for accelerated elastoplastic analysis of heterogeneous materials: An integrated data-driven surrogate approach. Compos. Struct. 2021, 264, 113688. [Google Scholar] [CrossRef]

- Wu, L.; Nguyen, V.D.; Kilingar, N.G.; Noels, L. A recurrent neural network-accelerated multi-scale model for elasto-plastic heterogeneous materials subjected to random cyclic and non-proportional loading paths. Comput. Methods Appl. Mech. Eng. 2020, 369, 113234. [Google Scholar] [CrossRef]

- Ghavamian, F.; Simone, A. Accelerating multiscale finite element simulations of history-dependent materials using a recurrent neural network. Comput. Methods Appl. Mech. Eng. 2019, 357, 112594. [Google Scholar] [CrossRef]

- Zhu, J.H.; Zaman, M.M.; Anderson, S.A. Modeling of soil behavior with a recurrent neural network. Can. Geotech. J. 1998, 35, 858–872. [Google Scholar] [CrossRef]

- Graf, W.; Freitag, S.; Kaliske, M.; Sickert, J.U. Recurrent Neural Networks for Uncertain Time-Dependent Structural Behavior. Comput. Civ. Infrastruct. Eng. 2010, 25, 322–323. [Google Scholar] [CrossRef]

- Logarzo, H.J.; Capuano, G.; Rimoli, J.J. Smart constitutive laws: Inelastic homogenization through machine learning. Comput. Methods Appl. Mech. Eng. 2021, 373, 113482. [Google Scholar] [CrossRef]

- Hearley, B.; Park, B.; Stuckner, J.; Pineda, E.; Murman, S. Predicting Unreinforced Fabric Mechanical Behavior with Recurrent Neural Networks. 2022. Available online: https://ntrs.nasa.gov/citations/20210023708 (accessed on 10 June 2023).

- Farizhandi, A.A.K.; Mamivand, M. Spatiotemporal prediction of microstructure evolution with predictive recurrent neural network. Comput. Mater. Sci. 2023, 223, 112110. [Google Scholar] [CrossRef]

- Zhang, J.; Wang, P.; Gao, R.X. Deep learning-based tensile strength prediction in fused deposition modeling. Comput. Ind. 2019, 107, 11–21. [Google Scholar] [CrossRef]

- Freitag, S.; Graf, W.; Kaliske, M. A material description based on recurrent neural networks for fuzzy data and its application within the finite element method. Comput. Struct. 2013, 124, 29–37. [Google Scholar] [CrossRef]

- Graf, W.; Freitag, S.; Sickert, J.U.; Kaliske, M. Structural Analysis with Fuzzy Data and Neural Network Based Material Description. Comput. Civ. Infrastruct. Eng. 2012, 27, 640–654. [Google Scholar] [CrossRef]

- Oeser, M.; Freitag, S. Modeling of materials with fading memory using neural networks. Int. J. Numer. Methods Eng. 2009, 78, 843–862. [Google Scholar] [CrossRef]

- Koeppe, A.; Bamer, F.; Selzer, M.; Nestler, B.; Markert, B. Explainable Artificial Intelligence for Mechanics: Physics-Explaining Neural Networks for Constitutive Models. Front. Mater. 2022, 8, 824958. [Google Scholar] [CrossRef]

- Flaschel, M.; Kumar, S.; De Lorenzis, L. Unsupervised discovery of interpretable hyperelastic constitutive laws. Comput. Methods Appl. Mech. Eng. 2021, 381, 113852. [Google Scholar] [CrossRef]

- Nascimento, R.G.; Viana, F.A.C. Cumulative damage modeling with recurrent neural networks. AIAA J. 2020, 58, 5459–5471. [Google Scholar] [CrossRef]

- Yang, K.; Cao, Y.; Zhang, Y.; Fan, S.; Tang, M.; Aberg, D.; Sadigh, B.; Zhou, F. Self-supervised learning and prediction of microstructure evolution with convolutional recurrent neural networks. Patterns 2021, 2, 100243. [Google Scholar] [CrossRef] [PubMed]

- Jung, J.; Yoon, J.I.; Park, H.K.; Jo, H.; Kim, H.S. Microstructure design using machine learning generated low dimensional and continuous design space. Materialia 2020, 11, 100690. [Google Scholar] [CrossRef]

- Iraki, T.; Morand, L.; Dornheim, J.; Link, N.; Helm, D. A multi-task learning-based optimization approach for finding diverse sets of material microstructures with desired properties and its application to texture optimization. J. Intell. Manuf. 2023, 23, 1–17. [Google Scholar] [CrossRef]

- Zhao, Y.; Altschuh, P.; Santoki, J.; Griem, L.; Tosato, G.; Selzer, M.; Koeppe, A.; Nestler, B. Characterization of porous membranes using artificial neural networks. Acta Mater. 2023, 253, 118922. [Google Scholar] [CrossRef]

- Stein, H.S.; Guevarra, D.; Newhouse, P.F.; Soedarmadji, E.; Gregoire, J.M. Machine learning of optical properties of materials-predicting spectra from images and images from spectra. Chem. Sci. 2019, 10, 47–55. [Google Scholar] [CrossRef]

- Arumugam, D.; Kiran, R. Compact representation and identification of important regions of metal microstructures using complex-step convolutional autoencoders. Mater. Des. 2022, 223, 111236. [Google Scholar] [CrossRef]

- Lee, S.M.; Park, S.Y.; Choi, B.H. Application of domain-adaptive convolutional variational autoencoder for stress-state prediction. Knowl.-Based Syst. 2022, 248, 108827. [Google Scholar] [CrossRef]

- Kim, Y.; Park, H.K.; Jung, J.; Asghari-Rad, P.; Lee, S.; Kim, J.Y.; Jung, H.G.; Kim, H.S. Exploration of optimal microstructure and mechanical properties in continuous microstructure space using a variational autoencoder. Mater. Des. 2021, 202, 109544. [Google Scholar] [CrossRef]

- Frącz, W.; Janowski, G. Influence of homogenization methods in prediction of strength properties for wpc composites. Appl. Comput. Sci. 2017, 13, 77–89. [Google Scholar] [CrossRef]

- Morand, L.; Helm, D. A mixture of experts approach to handle ambiguities in parameter identification problems in material modeling. Comput. Mater. Sci. 2019, 167, 85–91. [Google Scholar] [CrossRef]

- Morand, L.; Link, N.; Iraki, T.; Dornheim, J.; Helm, D. Efficient Exploration of Microstructure-Property Spaces via Active Learning. Front. Mater. 2022, 8, 824441. [Google Scholar] [CrossRef]

- Chen, S.; Xu, N. Detecting Microstructural Criticality/Degeneracy through Hybrid Learning Strategies Trained by Molecular Dynamics Simulations. ACS Appl. Mater. Interfaces 2022, 15, 10193–10202. [Google Scholar] [CrossRef] [PubMed]

- Sardeshmukh, A.; Reddy, S.; GauthamB, P.; Bhattacharyya, P. TextureVAE: Learning interpretable representations of material microstructures using variational autoencoders. In CEUR Workshop Proceedings; RWTH Aachen University: Aachen, Germany, 2021; Volume 2964, ISSN 1613-0073. [Google Scholar]

- Oommen, V.; Shukla, K.; Goswami, S.; Dingreville, R.; Karniadakis, G.E. Learning two-phase microstructure evolution using neural operators and autoencoder architectures. npj Comput. Mater. 2022, 8, 190. [Google Scholar] [CrossRef]

- Pitz, E.; Pochiraju, K. A Neural Network Transformer Model for Composite Microstructure Homogenization. arXiv 2023, arXiv:2304.07877v1. [Google Scholar]

- Cang, R.; Xu, Y.; Chen, S.; Liu, Y.; Jiao, Y.; Ren, M.Y. Microstructure Representation and Reconstruction of Heterogeneous Materials Via Deep Belief Network for Computational Material Design. J. Mech. Des. 2017, 139, 071404. [Google Scholar] [CrossRef]

- Wei, H.; Zhao, S.; Rong, Q.; Bao, H. Predicting the effective thermal conductivities of composite materials and porous media by machine learning methods. Int. J. Heat Mass Transf. 2018, 127, 908–916. [Google Scholar] [CrossRef]

- Cecen, A.; Dai, H.; Yabansu, Y.C.; Kalidindi, S.R.; Song, L. Material structure-property linkages using three-dimensional convolutional neural networks. Acta Mater. 2018, 146, 76–84. [Google Scholar] [CrossRef]

- Yang, Z.; Yabansu, Y.C.; Jha, D.; Liao, W.-K.; Choudhary, A.N.; Kalidindi, S.R.; Agrawal, A. Establishing structure-property localization linkages for elastic deformation of three-dimensional high contrast composites using deep learning approaches. Acta Mater. 2018, 166, 335–345. [Google Scholar] [CrossRef]

- Chalapathy, R.; Chawla, S. Deep Learning for Anomaly Detection: A Survey. arXiv 2019, arXiv:1901.03407v2. [Google Scholar]

- Ruff, L.; Kauffmann, J.R.; Vandermeulen, R.A.; Montavon, G.; Samek, W.; Kloft, M.; Dietterich, T.G.; Muller, K.-R. A Unifying Review of Deep and Shallow Anomaly Detection. Proc. IEEE 2021, 109, 756–795. [Google Scholar] [CrossRef]

- Bostanabad, R.; Zhang, Y.; Li, X.; Kearney, T.; Brinson, L.C.; Apley, D.W.; Liu, W.K.; Chen, W. Computational microstructure characterization and reconstruction: Review of the state-of-the-art techniques. Prog. Mater. Sci. 2018, 95, 1–41. [Google Scholar] [CrossRef]

- Ma, W.; Kautz, E.J.; Baskaran, A.; Chowdhury, A.; Joshi, V.; Yener, B.; Lewis, D.J. Image-driven discriminative and generative machine learning algorithms for establishing microstructure-processing relationships. J. Appl. Phys. 2020, 128, 134901. [Google Scholar] [CrossRef]

- Kautz, E.; Ma, W.; Jana, S.; Devaraj, A.; Joshi, V.; Yener, B.; Lewis, D. An image-driven machine learning approach to kinetic modeling of a discontinuous precipitation reaction. Mater. Charact. 2020, 166, 110379. [Google Scholar] [CrossRef]

- Bostanabad, R. Reconstruction of 3D Microstructures from 2D Images via Transfer Learning. CAD Comput.-Aided Des. 2020, 128, 102906. [Google Scholar] [CrossRef]

- Li, W.; Li, W.; Qin, Z.; Tan, L.; Huang, L.; Liu, F.; Xiao, C. Deep Transfer Learning for Ni-Based Superalloys Microstructure Recognition on γ′ Phase. Materials 2022, 15, 4251. [Google Scholar] [CrossRef]

- Chowdhury, A.; Kautz, E.; Yener, B.; Lewis, D. Image driven machine learning methods for microstructure recognition. Comput. Mater. Sci. 2016, 123, 176–187. [Google Scholar] [CrossRef]

- Luo, Q.; Holm, E.A.; Wang, C. A transfer learning approach for improved classification of carbon nanomaterials from TEM images. Nanoscale Adv. 2021, 3, 206–213. [Google Scholar] [CrossRef]

- Hinton, G.E.; Salakhutdinov, R.R. Reducing the dimensionality of data with neural networks. Science 2006, 313, 504–507. [Google Scholar] [CrossRef]

- Bengio, Y.; Lamblin, P.; Popovici, D.; Larochelle, H. Greedy layer-wise training of deep networks. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2007; Volume 19, pp. 153–160. [Google Scholar] [CrossRef]

- Deutsch, J.; He, M.; He, D. Remaining useful life prediction of hybrid ceramic bearings using an integrated deep learning and particle filter approach. Appl. Sci. 2017, 7, 649. [Google Scholar] [CrossRef]

- Fu, Y.; Zhang, Y.; Qiao, H.; Li, D.; Zhou, H.; Leopold, J. Analysis of feature extracting ability for cutting state monitoring using deep belief networks. Procedia CIRP 2015, 31, 29–34. [Google Scholar] [CrossRef]

- Wang, P.; Gao, R.X.; Yan, R. A deep learning-based approach to material removal rate prediction in polishing. CIRP Ann. 2017, 66, 429–432. [Google Scholar] [CrossRef]

- Ye, D.; Fuh, J.Y.H.; Zhang, Y.; Hong, G.S.; Zhu, K. In situ monitoring of selective laser melting using plume and spatter signatures by deep belief networks. ISA Trans. 2018, 81, 96–104. [Google Scholar] [CrossRef] [PubMed]

- Ye, D.; Hong, G.S.; Zhang, Y.; Zhu, K.; Fuh, J.Y.H. Defect detection in selective laser melting technology by acoustic signals with deep belief networks. Int. J. Adv. Manuf. Technol. 2018, 96, 2791–2801. [Google Scholar] [CrossRef]

- Iyer, A.; Dey, B.; Dasgupta, A.; Chen, W.; Chakraborty, A. A Conditional Generative Model for Predicting Material Microstructures from Processing Methods. arXiv 2019, arXiv:1910.02133v1. [Google Scholar]

- Cang, R.; Li, H.; Yao, H.; Jiao, Y.; Ren, Y. Improving direct physical properties prediction of heterogeneous materials from imaging data via convolutional neural network and a morphology-aware generative model. Comput. Mater. Sci. 2018, 150, 212–221. [Google Scholar] [CrossRef]

- Singh, R.; Shah, V.; Pokuri, B.; Sarkar, S.; Ganapathysubramanian, B.; Hegde, C. Physics-aware Deep Generative Models for Creating Synthetic Microstructures. arXiv 2018, arXiv:1811.09669v1. [Google Scholar]

- Yang, Z.; Li, X.; Brinson, L.C.; Choudhary, A.N.; Chen, W.; Agrawal, A. Microstructural materials design via deep adversarial learning methodology. J. Mech. Des. 2018, 140, 4041371. [Google Scholar] [CrossRef]

- Buehler, M.J. Prediction of atomic stress fields using cycle-consistent adversarial neural networks based on unpaired and unmatched sparse datasets. Mater. Adv. 2022, 3, 6280–6290. [Google Scholar] [CrossRef]

- Chun, S.; Roy, S.; Nguyen, Y.T.; Choi, J.B.; Udaykumar, H.S.; Deep, S.S.B. learning for synthetic microstructure generation in a materials-by-design framework for heterogeneous energetic materials. Sci. Rep. 2020, 10, 13307. [Google Scholar] [CrossRef]

- Mosser, L.; Dubrule, O.; Blunt, M.J. Stochastic Reconstruction of an Oolitic Limestone by Generative Adversarial Networks. Transp. Porous Media 2018, 125, 81–103. [Google Scholar] [CrossRef]

- Fokina, D.; Muravleva, E.; Ovchinnikov, G.; Oseledets, I. Microstructure synthesis using style-based generative adversarial networks. Phys. Rev. E 2020, 101, 043308. [Google Scholar] [CrossRef] [PubMed]

- Tang, J. Deep Learning-Guided Prediction of Material’s Microstructures and Applications to Advanced Manufacturing. 2021. Available online: https://tigerprints.clemson.edu/all_dissertations/2936 (accessed on 10 June 2023).

- Pütz, F.; Henrich, M.; Fehlemann, N.; Roth, A.; Münstermann, S. Generating input data for microstructure modelling: A deep learning approach using generative adversarial networks. Materials 2020, 13, 4236. [Google Scholar] [CrossRef]

- Hsu, T.; Epting, W.K.; Kim, H.; Abernathy, H.W.; Hackett, G.A.; Rollett, A.D.; Salvador, P.A.; Holm, E.A. Microstructure Generation via Generative Adversarial Network for Heterogeneous, Topologically Complex 3D Materials. JOM 2021, 73, 90–102. [Google Scholar] [CrossRef]

- Gowtham, N.H.; Jegadeesan, J.T.; Bhattacharya, C.; Basu, B. A Deep Adversarial Approach for the Generation of Synthetic Titanium Alloy Microstructures with Limited Training Data. SSRN Electron. J. 2022, 4148217. [Google Scholar] [CrossRef]

- Mao, Y.; Yang, Z.; Jha, D.; Paul, A.; Liao, W.-K.; Choudhary, A.; Agrawal, A. Generative Adversarial Networks and Mixture Density Networks-Based Inverse Modeling for Microstructural Materials Design. Integr. Mater. Manuf. Innov. 2022, 11, 637–647. [Google Scholar] [CrossRef]

- Thakre, S.; Karan, V.; Kanjarla, A.K. Quantification of similarity and physical awareness of microstructures generated via generative models. Comput. Mater. Sci. 2023, 221, 112074. [Google Scholar] [CrossRef]

- Henkes, A.; Wessels, H. Three-dimensional microstructure generation using generative adversarial neural networks in the context of continuum micromechanics. Comput. Methods Appl. Mech. Eng. 2022, 400, 115497. [Google Scholar] [CrossRef]

- Lee, J.W.; Goo, N.H.; Park, W.B.; Pyo, M.; Sohn, K.S. Virtual microstructure design for steels using generative adversarial networks. Eng. Rep. 2021, 3, e12274. [Google Scholar] [CrossRef]

- Tang, J.; Geng, X.; Li, D.; Shi, Y.; Tong, J.; Xiao, H.; Peng, F. Machine learning-based microstructure prediction during laser sintering of alumina. Sci. Rep. 2021, 11, 10724. [Google Scholar] [CrossRef]

- Agrawal, A.; Choudhary, A. Deep materials informatics: Applications of deep learning in materials science. MRS Commun. 2019, 9, 779–792. [Google Scholar] [CrossRef]

- Suhartono, D.; Purwandari, K.; Jeremy, N.H.; Philip, S.; Arisaputra, P.; Parmonangan, I.H. Deep neural networks and weighted word embeddings for sentiment analysis of drug product reviews. Procedia Comput. Sci. 2023, 216, 664–671. [Google Scholar] [CrossRef]

- Chan, K.Y.; Abu-Salih, B.; Qaddoura, R.; Al-Zoubi, A.M.; Palade, V.; Pham, D.-S.; Del Ser, J.; Muhammad, K. Deep neural networks in the cloud: Review, applications, challenges and research directions. Neurocomputing 2023, 545, 126327. [Google Scholar] [CrossRef]

- Oda, H.; Kiyohara, S.; Tsuda, K.; Mizoguchi, T. Transfer learning to accelerate interface structure searches. J. Phys. Soc. Jpn. 2017, 86, 123601. [Google Scholar] [CrossRef]

- Kailkhura, B.; Gallagher, B.; Kim, S.; Hiszpanski, A.; Han, T.Y.J. Reliable and explainable machine-learning methods for accelerated material discovery. NPJ Comput. Mater. 2019, 5, 108. [Google Scholar] [CrossRef]

- Lee, J.; Transfer, R.A. learning for materials informatics using crystal graph convolutional neural network. Comput. Mater. Sci. 2021, 190, 110314. [Google Scholar] [CrossRef]

- McClure, Z.D.; Strachan, A. Expanding Materials Selection Via Transfer Learning for High-Temperature Oxide Selection. JOM 2021, 73, 103–115. [Google Scholar] [CrossRef]

- Dong, R.; Dan, Y.; Li, X.; Hu, J. Inverse design of composite metal oxide optical materials based on deep transfer learning and global optimization. Comput. Mater. Sci. 2021, 18, 110166. [Google Scholar] [CrossRef]

- Ward, L.; Agrawal, A.; Choudhary, A.; Wolverton, C. A general-purpose machine learning framework for predicting properties of inorganic materials. NPJ Comput. Mater. 2016, 2, 16028. [Google Scholar] [CrossRef]

- Jia, K.; Li, W.; Wang, Z.; Qin, Z. Accelerating Microstructure Recognition of Nickel-Based Superalloy Data by UNet++. In Lecture Notes on Data Engineering and Communications Technologies; Springer Science and Business Media Deutschland GmbH: Berlin, Germany, 2022; Volume 80, pp. 863–870. [Google Scholar] [CrossRef]

- Kondo, R.; Yamakawa, S.; Masuoka, Y.; Tajima, S.; Asahi, R. Microstructure recognition using convolutional neural networks for prediction of ionic conductivity in ceramics. Acta Mater. 2017, 141, 29–38. [Google Scholar] [CrossRef]

- Gupta, V.; Choudhary, K.; Tavazza, F.; Campbell, C.; Liao, W.-K.; Choudhary, A.; Agrawal, A. Cross-property deep transfer learning framework for enhanced predictive analytics on small materials data. Nat. Commun. 2021, 12, 6595. [Google Scholar] [CrossRef]

- Choudhary, K.; Garrity, K.F.; Reid, A.C.E.; DeCost, B.; Biacchi, A.J.; Walker, A.R.H.; Trautt, Z.; Hattrick-Simpers, J.; Kusne, A.G.; Centrone, A.; et al. The joint automated repository for various integrated simulations (JARVIS) for data-driven materials design. NPJ Comput. Mater. 2020, 6, 173. [Google Scholar] [CrossRef]

- Li, X.; Zhang, Y.; Zhao, H.; Burkhart, C.; Brinson, L.C.; Chen, W. A Transfer Learning Approach for Microstructure Reconstruction and Structure-property Predictions. Sci. Rep. 2018, 8, 13461. [Google Scholar] [CrossRef] [PubMed]

- DeCost, B.L.; Francis, T.; Holm, E.A. Exploring the microstructure manifold: Image texture representations applied to ultrahigh carbon steel microstructures. Acta Mater. 2017, 133, 30–40. [Google Scholar] [CrossRef]

- Feng, S.; Zhou, H.; Dong, H. Application of deep transfer learning to predicting crystal structures of inorganic substances. Comput. Mater. Sci. 2021, 195, 110476. [Google Scholar] [CrossRef]

- Pandiyan, V.; Drissi-Daoudi, R.; Shevchik, S.; Masinelli, G.; Le-Quang, T.; Logé, R.; Wasmer, K. Deep transfer learning of additive manufacturing mechanisms across materials in metal-based laser powder bed fusion process. J. Mater. Process. Technol. 2022, 303, 117531. [Google Scholar] [CrossRef]

- Pandiyan, V.; Drissi-Daoudi, R.; Shevchik, S.; Masinelli, G.; Logé, R.; Wasmer, K. Analysis of time, frequency and time-frequency domain features from acoustic emissions during Laser Powder-Bed fusion process. Procedia CIRP 2020, 94, 392–397. [Google Scholar] [CrossRef]

- Scime, L.; Beuth, J. Anomaly detection and classification in a laser powder bed additive manufacturing process using a trained computer vision algorithm. Addit. Manuf. 2018, 19, 114–126. [Google Scholar] [CrossRef]

- Caggiano, A.; Zhang, J.; Alfieri, V.; Caiazzo, F.; Gao, R.; Teti, R. Machine learning-based image processing for on-line defect recognition in additive manufacturing. CIRP Ann. 2019, 68, 451–454. [Google Scholar] [CrossRef]

- Yamada, H.; Liu, C.; Wu, S.; Koyama, Y.; Ju, S.; Shiomi, J.; Morikawa, J.; Yoshida, R. Predicting Materials Properties with Little Data Using Shotgun Transfer Learning. ACS Central Sci. 2019, 5, 1717–1730. [Google Scholar] [CrossRef]

- Farizhandi, A.A.K.; Mamivand, M. Processing time, temperature, and initial chemical composition prediction from materials microstructure by deep network for multiple inputs and fused data. Mater. Des. 2022, 219, 110799. [Google Scholar] [CrossRef]

- Yang, L.; Dai, W.; Rao, Y.; Chyu, M.K. Optimization of the hole distribution of an effusively cooled surface facing non-uniform incoming temperature using deep learning approaches. Int. J. Heat Mass Transf. 2019, 145, 118749. [Google Scholar] [CrossRef]

- Mendizabal, A.; Márquez-Neila, P.; Cotin, S. Simulation of hyperelastic materials in real-time using deep learning. Med. Image Anal. 2019, 59, 10156. [Google Scholar] [CrossRef] [PubMed]

- Altarazi, S.; Ammouri, M.; Hijazi, A. Artificial neural network modeling to evaluate polyvinylchloride composites’ properties. Comput. Mater. Sci. 2018, 153, 1–9. [Google Scholar] [CrossRef]

- Rong, Q.; Wei, H.; Huang, X.; Bao, H. Predicting the effective thermal conductivity of composites from cross sections images using deep learning methods. Compos. Sci. Technol. 2019, 184, 107861. [Google Scholar] [CrossRef]

- You, K.W.; Arumugasamy, S.K. Deep learning techniques for polycaprolactone molecular weight prediction via enzymatic polymerization process. J. Taiwan Inst. Chem. Eng. 2020, 116, 238–255. [Google Scholar] [CrossRef]

- Tong, Z.; Wang, Z.; Wang, X.; Ma, Y.; Guo, H.; Liu, C. Characterization of hydration and dry shrinkage behavior of cement emulsified asphalt composites using deep learning. Constr. Build. Mater. 2021, 274, 121898. [Google Scholar] [CrossRef]

- Tong, Z.; Gao, J.; Wang, Z.; Wei, Y.; Dou, H. A new method for CF morphology distribution evaluation and CFRC property prediction using cascade deep learning. Constr. Build. Mater. 2019, 222, 829–838. [Google Scholar] [CrossRef]

- Choudhary, K.; DeCost, B.; Chen, C.; Jain, A.; Tavazza, F.; Cohn, R.; Park, C.W.; Choudhary, A.; Agrawal, A.; Billinge, S.J.L.; et al. Recent advances and applications of deep learning methods in materials science. NPJ Comput. Mater. 2022, 8, 59. [Google Scholar] [CrossRef]

- Wang, Y.; Soutis, C.; Ando, D.; Sutou, Y.; Narita, F. Application of deep neural network learning in composites design. Eur. J. Mater. 2022, 2, 117–170. [Google Scholar] [CrossRef]

- Kong, S.; Guevarra, D.; Gomes, C.P.; Gregoire, J.M. Materials representation and transfer learning for multi-property prediction. Appl. Phys. Rev. 2021, 8, 021409. [Google Scholar] [CrossRef]

| Traditional ML Methods | Strengths | Weaknesses |
|---|---|---|
| Support Vector Machine (SVM) | High prediction speed and accuracy for small datasets; ability to handle high-dimensional data; relatively memory efficient | Inefficient for large datasets; not suitable for noisy data |
| k-Nearest Neighbor (k-NN) | Simple structure and easy implementation; robust to noise; mature theory | Slow performance with large-volume datasets; computationally expensive; poor performance with high-dimensional data; requires significant storage space; performance influenced by the choice of k |
| Decision Tree | Easy to understand and interpret; good visualization of results | Prone to overfitting; longer training time; requires additional domain knowledge |
| Random Forest | Easy to understand and interpret; low computational cost; good performance with high-dimensional data | Prone to overfitting |
| Artificial Neural Network (ANN) | Parallel information processing capability; high prediction accuracy and speed; effective approximation of complex nonlinear functions; suitable for relatively large datasets | Computationally expensive; prone to overfitting with small datasets; lack of transparency due to the “black box” nature of training procedures |
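The k-NN row notes that performance is influenced by the choice of k. The minimal sketch below illustrates this with one-dimensional k-NN regression in plain Python. It is not taken from any of the reviewed studies. The training data are hypothetical longitudinal moduli generated from the Voigt rule of mixtures, `E_c = V_f*E_f + (1 - V_f)*E_m`, using assumed values `E_f = 230` GPa (carbon fibre) and `E_m = 3.5` GPa (epoxy). The names `rule_of_mixtures` and `knn_predict` are illustrative only.

```python
def rule_of_mixtures(v_f, e_fiber, e_matrix):
    """Voigt (iso-strain) estimate of the longitudinal modulus: V_f*E_f + (1 - V_f)*E_m."""
    return v_f * e_fiber + (1.0 - v_f) * e_matrix


def knn_predict(train_x, train_y, query, k):
    """Average the targets of the k training points closest to `query` (1-D inputs)."""
    if not 1 <= k <= len(train_x):
        raise ValueError("k must be between 1 and the number of training samples")
    # Rank training indices by absolute distance to the query point.
    order = sorted(range(len(train_x)), key=lambda i: abs(train_x[i] - query))
    return sum(train_y[i] for i in order[:k]) / k


# Hypothetical training set: fibre volume fraction -> modulus in GPa (assumed constituents).
E_F, E_M = 230.0, 3.5
train_vf = [0.10, 0.20, 0.30, 0.40, 0.50, 0.60, 0.70]
train_e = [rule_of_mixtures(v, E_F, E_M) for v in train_vf]

query_vf = 0.43
exact = rule_of_mixtures(query_vf, E_F, E_M)
for k in (1, 2, 4, 7):
    pred = knn_predict(train_vf, train_e, query_vf, k)
    print(f"k={k}: predicted {pred:.2f} GPa, exact {exact:.2f} GPa")
```

On this grid, k = 1 and k = 7 both return 94.1 GPa and k = 2 and k = 4 both return about 105.4 GPa, while the exact value is about 100.9 GPa. No single k is obviously correct, which is why k is usually tuned, for example by cross-validation.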

| DL Methods | Strengths | Weaknesses |
|---|---|---|
| Convolutional Neural Network (CNN) | Well suited to multi-dimensional data, particularly images; effective at extracting relevant features; excellent performance in local feature extraction | Complex architecture requiring longer training times; requires a sufficient amount of training data; prone to overfitting |
| Recurrent Neural Network (RNN) | Suitable for sequential data analysis; captures temporal changes and patterns effectively; well suited to time-series data | Difficult to train and implement due to complex architectures |
| Auto-Encoder (AE) | Easy to implement; computationally efficient; can learn enriched representations | Requires a large amount of training data; ineffective when relevant information is overshadowed by noise; performance can degrade if errors occur in the initial layers |
| Deep Belief Network (DBN) | Well suited to one-dimensional data; extracts high-level features from input data; performs well with complex data without extensive data preparation; the unsupervised pre-training stage does not require labeled data | Training can be slow due to complex initialization and computational expense; inference and learning with multiple stochastic hidden layers can be challenging |
| Generative Adversarial Network (GAN) | Efficient at generating synthetic data from limited training data | Difficult to train and optimize; limited data generation ability when training data are extremely limited |
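The CNN row credits convolution layers with strong local feature extraction. The minimal sketch below shows the underlying operation: a "valid" 2-D cross-correlation (stride 1, no padding), which is what most deep learning libraries compute under the name convolution, followed by a ReLU activation. It assumes a hypothetical binary two-phase micrograph (1 = fibre, 0 = matrix) and a hand-set 2 × 2 edge kernel. A trained CNN would learn its kernel weights from data. The function names `conv2d_valid` and `relu` are illustrative.

```python
def conv2d_valid(image, kernel):
    """'Valid' 2-D cross-correlation (stride 1, no padding), as applied by a CNN conv layer."""
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for r in range(ih - kh + 1):
        row = []
        for c in range(iw - kw + 1):
            # Weighted sum of the local patch currently under the kernel window.
            row.append(sum(kernel[i][j] * image[r + i][c + j]
                           for i in range(kh) for j in range(kw)))
        out.append(row)
    return out


def relu(feature_map):
    """Element-wise ReLU: keep positive responses, set the rest to zero."""
    return [[v if v > 0 else 0 for v in row] for row in feature_map]


# Hypothetical two-phase microstructure: a vertical fibre band (1) in a matrix (0).
micro = [
    [0, 0, 1, 1, 0, 0],
    [0, 0, 1, 1, 0, 0],
    [0, 0, 1, 1, 0, 0],
    [0, 0, 1, 1, 0, 0],
]
# Hand-set kernel that responds where matrix (left) meets fibre (right).
edge_kernel = [
    [-1, 1],
    [-1, 1],
]
feature = relu(conv2d_valid(micro, edge_kernel))
for row in feature:
    print(row)
```

Each output row is `[0, 2, 0, 0, 0]`. The kernel fires only at the matrix-to-fibre boundary, and the same four weights are reused at every position. This weight sharing is what lets convolution layers capture local features with few parameters.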

| AI Methods | Strengths | Weaknesses |
|---|---|---|
| Traditional machine learning | Accurate for small datasets; requires less training time; efficient CPU utilization | Lower accuracy on high-dimensional data; requires careful and accurate preprocessing |
| Deep learning | Accurate for big data; automatically extracts relevant features; requires little manual preprocessing | Requires big data for optimal performance; computationally expensive and typically requires GPU acceleration; highly complex network architecture; not easily interpretable |
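The traditional machine learning row states that careful preprocessing is required. One reason is that distance-based methods such as k-NN compare raw feature values. A feature with a large numeric range, here a cure temperature in °C, can swamp one with a small range, such as fibre volume fraction. The minimal sketch below uses four hypothetical samples and z-score standardization. Z-score scaling is one common, assumed choice and not the method of any specific reviewed study. The helper names are illustrative.

```python
import math


def standardize(rows):
    """Z-score each column, (x - mean) / std, using the population standard deviation."""
    n, n_cols = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(n_cols)]
    stds = [math.sqrt(sum((r[j] - means[j]) ** 2 for r in rows) / n) for j in range(n_cols)]
    return [[(r[j] - means[j]) / stds[j] for j in range(n_cols)] for r in rows]


def nearest_to_first(rows):
    """Index of the row closest (Euclidean distance) to rows[0], excluding rows[0] itself."""
    return min(range(1, len(rows)), key=lambda i: math.dist(rows[0], rows[i]))


# Hypothetical samples: [fibre volume fraction, cure temperature in deg C]
samples = [
    [0.30, 150.0],  # A: reference sample
    [0.60, 152.0],  # B: very different fibre fraction, similar temperature
    [0.31, 165.0],  # C: similar fibre fraction, different temperature
    [0.59, 200.0],  # D
]
scaled = standardize(samples)
raw_nearest = nearest_to_first(samples)
scaled_nearest = nearest_to_first(scaled)
print("nearest to A, raw units:   ", "ABCD"[raw_nearest])
print("nearest to A, standardized:", "ABCD"[scaled_nearest])
```

In raw units, A's nearest neighbour is B, because B's temperature is only 2 °C away even though its fibre fraction differs by 0.30. After standardization, A's nearest neighbour becomes C, which has almost the same fibre fraction. Which result is physically meaningful depends on which feature actually drives the target property. Preprocessing choices such as scaling therefore encode domain judgment.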

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kibrete, F.; Trzepieciński, T.; Gebremedhen, H.S.; Woldemichael, D.E. Artificial Intelligence in Predicting Mechanical Properties of Composite Materials. J. Compos. Sci. 2023, 7, 364. https://doi.org/10.3390/jcs7090364
