Enhancing Domain-Specific Supervised Natural Language Intent Classification with a Top-Down Selective Ensemble Model

Abstract

1. Introduction

2. Related Work and Motivation

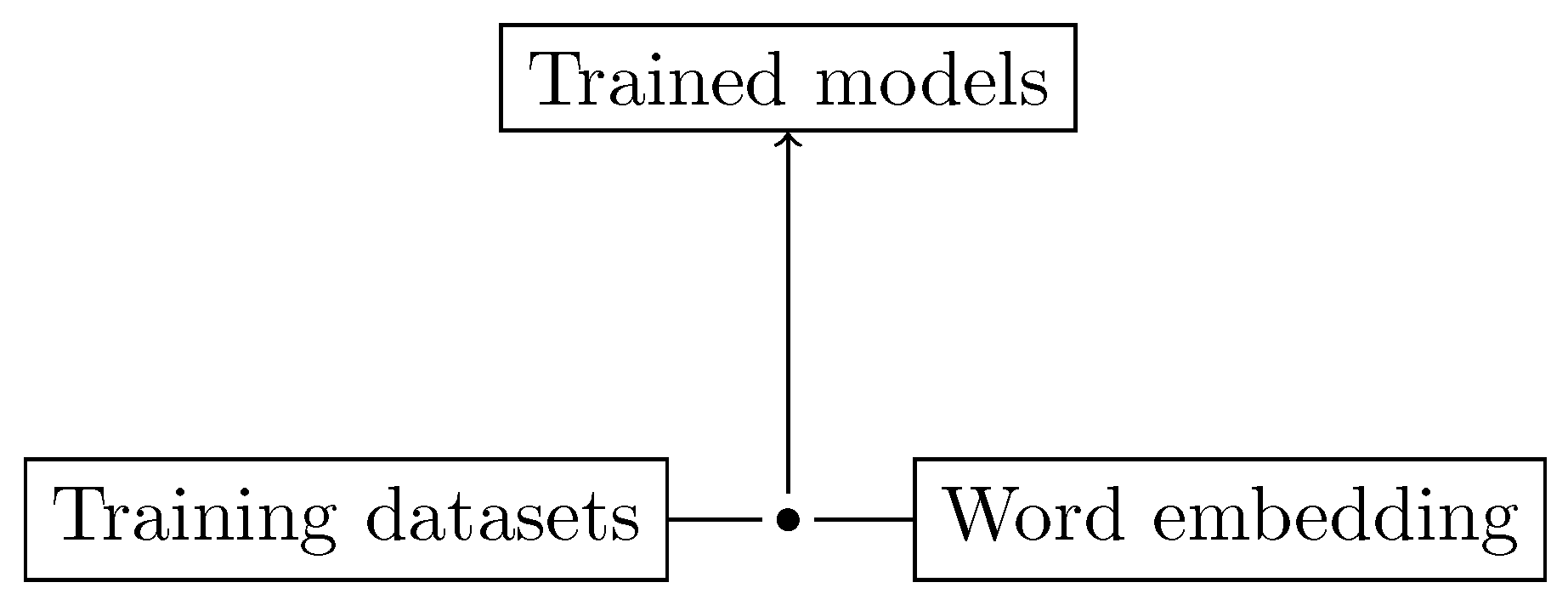

- Train a supervised classifier.

- Train a word embedding.

3. Experiments

3.1. The Models

3.2. The Baseline

3.3. Retrofitting

3.3.1. Retrofitting with Out-of-the-Box Resources

3.3.2. Retrofitting with Bespoke Domain Ontologies

- A collection of words (tokens),

- that are typical or indicative of an intent label,

- grouped on the basis of intent labels.
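These three properties are enough to express such an ontology as a mapping from each intent label to its indicative tokens. That mapping can then serve as the lexicon for retrofitting. The sketch below is a minimal illustration under stated assumptions, not the authors' implementation. It hypothetically treats any two tokens listed under the same intent label as neighbours, and it applies the iterative retrofitting update of Faruqui et al. (2015) with α = 1 and β_ij = 1/degree(i). The function names `ontology_to_lexicon` and `retrofit` and the parameters `n_iters` and `alpha` are illustrative choices.

```python
"""Minimal sketch: retrofitting word vectors to a bespoke domain ontology.

Assumptions (hypothetical, not taken from the paper): the ontology is a dict
mapping each intent label to a list of indicative tokens, and any two tokens
listed under the same intent label are treated as neighbours in the lexicon.
"""
from itertools import combinations

import numpy as np


def ontology_to_lexicon(ontology):
    """Turn {intent_label: [tokens]} into an undirected {token: {neighbours}} map."""
    lexicon = {}
    for tokens in ontology.values():
        for a, b in combinations(set(tokens), 2):
            lexicon.setdefault(a, set()).add(b)
            lexicon.setdefault(b, set()).add(a)
    return lexicon


def retrofit(vectors, lexicon, n_iters=10, alpha=1.0):
    """Iterative retrofitting update in the style of Faruqui et al. (2015).

    vectors: dict token -> np.ndarray, the original embedding (left unmodified)
    lexicon: dict token -> set of neighbour tokens
    Update: q_i = (alpha * q_hat_i + sum_j beta_ij * q_j) / (alpha + sum_j beta_ij),
    with beta_ij = 1 / degree(i), so the beta weights sum to 1.
    """
    new_vectors = {w: v.copy() for w, v in vectors.items()}
    # Only tokens present in both the embedding and the lexicon are updated.
    vocab = [w for w in lexicon if w in vectors]
    for _ in range(n_iters):
        for word in vocab:
            neighbours = [n for n in lexicon[word] if n in new_vectors]
            if not neighbours:
                continue
            beta = 1.0 / len(neighbours)
            total = alpha * vectors[word] + beta * sum(new_vectors[n] for n in neighbours)
            new_vectors[word] = total / (alpha + beta * len(neighbours))
    return new_vectors


if __name__ == "__main__":
    # Hypothetical tokens for two intent labels from the examples table.
    ontology = {
        "what_is_isa": ["isa", "allowance"],
        "open_saving_account": ["open", "account"],
    }
    rng = np.random.default_rng(0)
    vectors = {w: rng.normal(size=4) for w in ["isa", "allowance", "open", "account", "hi"]}
    retrofitted = retrofit(vectors, ontology_to_lexicon(ontology))
    print(np.linalg.norm(vectors["isa"] - vectors["allowance"]),
          np.linalg.norm(retrofitted["isa"] - retrofitted["allowance"]))
```

Each update mixes a token's original vector with the mean of its neighbours' current vectors. Tokens indicative of the same intent are therefore pulled towards each other, while `alpha` limits how far they drift from the original embedding. Tokens outside the ontology (such as `hi` above) are returned unchanged.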

3.4. Ensemble Approach

4. Results and Discussion

5. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest


| Input | Intent |
|---|---|
| Hi | greeting |
| Tell me about ISAs | what_is_isa |
| Can you help me open an account? | open_saving_account |
| How much do I need to save for my retirement? | calculate_ideal_pension_contribution |

| System | Accuracy | Avg. Macro F1 |
|---|---|---|
| Baseline | 0.929 | 0.922 |
| Retrofitted with FrameNet | 0.929 | 0.918 |
| Retrofitted with WordNet | 0.922 | 0.914 |
| Retrofitted with empirical lexicon | 0.929 | 0.922 |
| Retrofitted with manually edited synonyms | 0.926 | 0.915 |
| Multiple classifier system (MCS) without grouping | 0.910 | 0.904 |
| Multiple classifier system (MCS) with grouping | 0.971 | 0.949 |
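The results table reports accuracy alongside macro-averaged F1. Under the standard definition, macro F1 is the unweighted mean of the per-intent F1 scores, so a rare intent weighs as much as a frequent one. This is why macro F1 can sit below accuracy for the same system. The sketch below computes both metrics in plain Python under that standard definition. The extracted text does not say what "Avg." refers to (for example, averaging over runs or folds), so that step is not shown. The intent labels come from the examples table, and the predictions are invented for illustration.

```python
from collections import Counter


def accuracy(gold, pred):
    """Fraction of inputs whose predicted intent matches the gold intent."""
    return sum(g == p for g, p in zip(gold, pred)) / len(gold)


def macro_f1(gold, pred):
    """Unweighted mean of per-label F1 over every label seen in gold or pred."""
    labels = set(gold) | set(pred)
    true_pos = Counter(g for g, p in zip(gold, pred) if g == p)
    pred_counts = Counter(pred)
    gold_counts = Counter(gold)
    scores = []
    for label in labels:
        # Undefined precision/recall (no predictions or no gold items) count as 0.
        precision = true_pos[label] / pred_counts[label] if pred_counts[label] else 0.0
        recall = true_pos[label] / gold_counts[label] if gold_counts[label] else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        scores.append(f1)
    return sum(scores) / len(scores)


if __name__ == "__main__":
    # Hypothetical gold labels and predictions for four inputs.
    gold = ["greeting", "greeting", "what_is_isa", "open_saving_account"]
    pred = ["greeting", "what_is_isa", "what_is_isa", "open_saving_account"]
    print(accuracy(gold, pred))            # 0.75
    print(round(macro_f1(gold, pred), 3))  # 0.778 (that is, 7/9)
```

In this toy example, three of four predictions are correct, giving an accuracy of 0.75. The two intents involved in the single error each drop to an F1 of 2/3, which pulls the macro average down to 7/9.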

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Jenset, G.B.; McGillivray, B. Enhancing Domain-Specific Supervised Natural Language Intent Classification with a Top-Down Selective Ensemble Model. Mach. Learn. Knowl. Extr. 2019, 1, 630-640. https://doi.org/10.3390/make1020037