1. Introduction

The use of AI in financial services is becoming increasingly attractive to financial companies because of potential cost savings and/or quality improvements [1]. It introduces benefits and risks for all participants using AI-based services [2]. Furthermore, increasing data volumes and processing capacities provide the prerequisites for more automation using AI. However, AI-enabled services and products may create financial and non-financial risks and raise consumer and investor protection considerations [3]. Concurrently, regulators oversee the finance industry to ensure market safety, consumer protection, and market integrity, including protection against inequitable discrimination by automated services [2,4]. To remain up to date and gain wider public acceptance, regulatory principles are gradually renewed and adapted to changes in market structure and technology, so that they provide safety while retaining the necessary adaptability. However, given the basic characteristics of these AI-based services, their development, deployment, and maintenance are challenging due to the increased complexity and the required coordination with regulators [1]. Moreover, any conceptual or structural change to such services is usually unique, complex, and costly [1,5,6]. As a result of this increased complexity and the rapid technological developments in AI, a deep understanding of the regulatory principles is required to identify and fulfill obligations and to derive further measures [1].

Furthermore, AI-based services cannot be expected to deliver proper results indefinitely; rather, they require periodic testing and validation, continuous monitoring, and adjustments. For example, because of financial and cross-sectoral differences, it may not be appropriate to treat datasets from before and after the COVID-19 pandemic as interchangeable [7]. The financial situation of a company in the healthcare sector and of a company in the travel sector, for instance, differs significantly from their situation before the pandemic. Regulatory and supervisory authorities anticipate such situations and require financial companies to prepare emergency measures and monitoring structures for their AI-based services [7,8,9]. Given the still immature regulatory environment, it is necessary to identify, analyze, and structure the key regulatory aspects and priorities so that related concepts can be addressed in research and practice. Moreover, because of the complexity of the desired AI-based services, financial companies must deal with multiple regulatory expectations, including technological, organizational, and communicational cornerstones, to ensure the robustness, security, quality, compliance, and functionality of AI-based services.

For practitioners, the immature regulatory environment makes the development of compliant AI services more complex. The development process of AI-based services, which consists of several phases as foreseen by the regulators, must therefore be addressed in a more organized manner to derive and define compliant and practical approaches [8,9]. Likewise, researchers and financial firms need to identify the key regulatory aspects and priorities in the regulatory principles to derive appropriate measures. The lack of a clear definition of regulatory expectations makes research on and development of specific compliant AI-based services difficult. Therefore, an overall regulatory picture of the finance industry can help determine and address research gaps, which can in turn contribute to the consolidation of regulations. Motivated by the goal of structuring regulatory principles to promote successful and close interaction between practice and regulatory authorities, we define the following research question:

RQ: How can European regulatory principles be classified into useful dimensions and characteristics for the development of AI applications in financial services?

In the following, we first introduce the theoretical background of the European regulatory environment for AI-based services. Subsequently, we present and adapt the methodological approach for taxonomy development proposed by Nickerson et al. (2013) before we show the research process in detail. We then describe the development process that leads to the final taxonomy. Afterward, we discuss our results, mention limitations, and state a conclusion with an outlook on future research.

2. Theoretical Background of AI Regulations in the Finance Industry

Due to the global nature of the finance industry, it is necessary to consider the perspective of the Organization for Economic Co-operation and Development (OECD), which has also proposed AI principles to ensure the innovative and trustworthy use of AI. Regulatory authorities collaborate to establish and monitor the cross-industry use of AI at the global, European, and national levels. Moreover, regulators propose to create benchmarks for AI that are practical and flexible enough to stand the test of time [5,10]. Given the rapid pace of technological change and the increasing need and motivation to benefit from intelligent, automated, and more efficient systems in the financial industry, it is a priority of European regulators to establish a fundamental and forward-looking legal basis for the use of AI [6]. As a result, the European Commission announced, as part of its Digital Finance Strategy, the establishment of a standard regulatory framework by 2024 [11].

The first-ever legal framework proposed for AI aims to provide developers, deployers, and users with clear requirements and obligations regarding specific uses of AI [11]. Because of the increasing complexity and possible lack of explainability of AI algorithms, the European Commission regarded addressing the risks posed specifically by AI applications as an essential prerequisite and proposed a risk-based approach with four levels of risk: unacceptable, high, limited, and minimal or no risk [11]. Following this approach, the European Commission published the Proposal for an Artificial Intelligence Act in 2021 [12]. However, a detailed look at the existing regulatory principles shows that their structure is still based on the first drafts, the Ethics Guidelines for Trustworthy AI published in 2019 by the High-Level Expert Group on AI (AI HLEG) [13]. Consequently, the European objective of establishing a forward-looking legal basis is still pending, and the current principles cannot yet provide developers and financial companies with a suitable structure and clear requirements.
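The four-level structure can be pictured as an ordered scale. The following Python snippet is a minimal sketch under stated assumptions: the names `RiskLevel` and `may_be_placed_on_market` are hypothetical, and the only rule it encodes is that practices with unacceptable risk are prohibited under the proposal; the obligations attached to the high, limited, and minimal levels are deliberately not modelled.

```python
from enum import IntEnum


class RiskLevel(IntEnum):
    """Hypothetical encoding of the four risk levels, ordered from lowest to highest."""
    MINIMAL = 0       # minimal or no risk
    LIMITED = 1       # limited risk
    HIGH = 2          # high risk
    UNACCEPTABLE = 3  # unacceptable risk


def may_be_placed_on_market(level: RiskLevel) -> bool:
    """Return False only for unacceptable risk, which the proposal prohibits.

    High- and limited-risk systems may be offered but carry obligations
    (e.g., conformity assessment, transparency) that this sketch does not model.
    """
    return level < RiskLevel.UNACCEPTABLE


if __name__ == "__main__":
    # Print the levels from strictest to least strict with their market status.
    for level in sorted(RiskLevel, reverse=True):
        print(level.name, may_be_placed_on_market(level))
```

Using an `IntEnum` keeps the levels comparable, so a stricter level always compares as greater than a less strict one.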

One of the primary objectives of regulatory authorities is to keep the field secure, innovative, and forward-looking. For innovative AI-based services, the concerns and basic principles were addressed by the OECD in 2019 [14]. However, missing regulatory frameworks and incompatibilities with existing regulations have remained under discussion for years, and this ongoing debate about final regulations creates uncertainty regarding the design and organization of AI-based services in the market. At the same time, driven by rapid technological developments, financial companies are increasingly interested in improving the efficiency and quality of financial services and products [1,7]. Furthermore, the increasing risks must be reconciled with legal requirements to promote responsible AI development and deployment and to ensure the safety of customers in practice [15]. According to recent research, there is a consensus on the primary objectives of regulations for the use of AI across industries, which can be summarized in four points: fairness, sustainability, accuracy, and explainability [15,16,17,18,19,20].

Nevertheless, financial companies must carefully evaluate the financial and organizational costs of offering these services [21]. Because of the immature regulatory basis, the coordination and approval processes between financial companies and regulators can take a long time. In particular, a broad assessment of the costs of AI-based services and their maintenance relative to the improvement achieved is necessary to determine whether the return on investment is sufficient for financial companies [5,22].

3. Research Method

The development of a taxonomy for structuring regulatory principles from financial authorities for AI-based services follows the taxonomy development methodology of Nickerson et al. [23]. This methodology helps us structure the taxonomy development process. A taxonomy (T) has a set of dimensions (D), each of which consists of a set of mutually exclusive and collectively exhaustive characteristics (C), as defined in the following formula [23]:

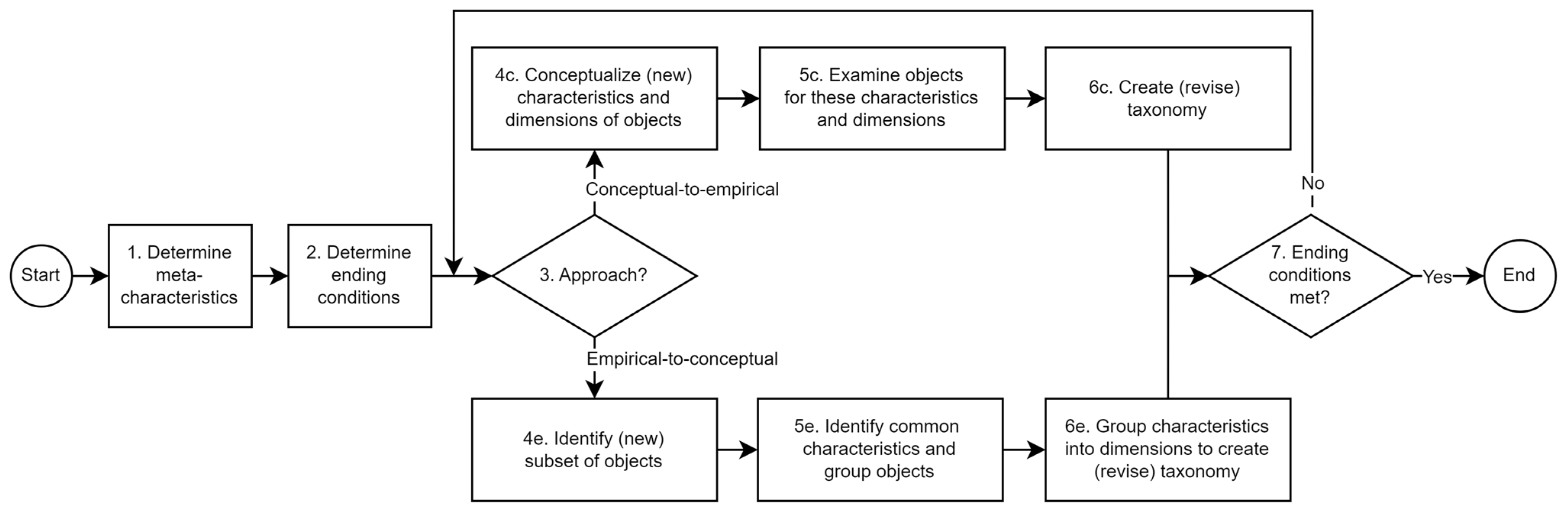
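Because the formula above is only available as an image, it is restated here in LaTeX, assuming the image reproduces the definition of Nickerson et al. [23]: a taxonomy T consists of n dimensions D_i, each comprising k_i ≥ 2 characteristics C_ij, such that each object has exactly one characteristic C_ij in every dimension D_i.

```latex
T = \left\{ D_i,\; i = 1, \ldots, n \;\middle|\; D_i = \left\{ C_{ij},\; j = 1, \ldots, k_i;\; k_i \ge 2 \right\} \right\}
```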

The purpose of this taxonomy is to structure European regulatory principles to highlight key regulatory aspects and priorities, including existing interests and concerns, for further research. Moreover, individual European countries also have specifications for the use of AI that must be considered to give an overall picture. The objects to be classified are the European and country-specific regulatory principles for AI-based services in financial services. In the first step, it is necessary to define the meta-characteristic of the taxonomy as the basis for selecting the characteristics. Each selected characteristic should then be a logical consequence of the meta-characteristic and reflect the purpose of the taxonomy [23]. Against this background, we define the meta-characteristic of this taxonomy as the key regulatory aspects and priorities of financial regulatory principles for AI-based services. In the taxonomy development process, either an empirical-to-conceptual (inductive) or a conceptual-to-empirical (deductive) approach can be chosen in each iteration to evolve the taxonomy. The conceptual-to-empirical approach (Step 4c) can be used to conceptualize (new) characteristics and dimensions of objects, whereas the empirical-to-conceptual approach (Step 4e) can be used to identify a (new) subset of objects for the taxonomy (Figure 1).
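The definition can be checked mechanically once dimensions, characteristics, and objects are written down. The Python sketch below assumes that a taxonomy is a mapping from dimension names to characteristic lists and that an object (here, a regulatory principle) is recorded as the set of characteristics it exhibits per dimension; the function names and the toy dimensions in the usage example are hypothetical and are not part of the taxonomy developed in this paper.

```python
from typing import Dict, List, Set

Taxonomy = Dict[str, List[str]]    # dimension D_i -> characteristics C_i1..C_ik_i
Observation = Dict[str, Set[str]]  # dimension -> characteristics an object exhibits


def definition_violations(taxonomy: Taxonomy) -> List[str]:
    """Check the structural part of the definition: k_i >= 2 and no repeated characteristic."""
    problems = []
    for dim, chars in taxonomy.items():
        if len(chars) < 2:
            problems.append(f"dimension '{dim}' has fewer than two characteristics")
        if len(set(chars)) != len(chars):
            problems.append(f"dimension '{dim}' repeats a characteristic")
    return problems


def classify(taxonomy: Taxonomy, observed: Observation) -> Dict[str, str]:
    """Return the single characteristic per dimension, or raise if the object shows
    no characteristic (not collectively exhaustive) or several (not mutually exclusive)."""
    result = {}
    for dim, chars in taxonomy.items():
        matches = [c for c in chars if c in observed.get(dim, set())]
        if not matches:
            raise ValueError(f"no characteristic of '{dim}' applies")
        if len(matches) > 1:
            raise ValueError(f"several characteristics of '{dim}' apply: {matches}")
        result[dim] = matches[0]
    return result


# Usage with hypothetical toy dimensions (not the paper's taxonomy):
toy = {"level": ["European", "national"], "form": ["binding", "guidance"]}
print(definition_violations(toy))
print(classify(toy, {"level": {"national"}, "form": {"guidance"}}))
```

The sketch encodes only the formal definition; it does not reproduce how the authors assigned individual regulatory principles.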

The methodology can be regarded as a search process for a useful taxonomy [24]. Therefore, Nickerson et al. [23] define objective and subjective ending conditions (Step 2) as crucial criteria for evaluating the usefulness of the developed taxonomy (see Table 1). We use a subset of the objective ending conditions, which relate to the correctness of the taxonomy and the process, together with the subjective conditions, which ensure that the taxonomy is meaningful and practical [23,25].
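Some objective ending conditions of Nickerson et al. can be evaluated mechanically by comparing the taxonomy before and after an iteration. The Python sketch below covers only three of them (no dimension or characteristic added in the last iteration, none merged or split, and every characteristic unique within its dimension); the subset this study actually uses is listed in Table 1, and the merge/split check is an approximation that flags any characteristic that disappeared. The subjective conditions (concise, robust, comprehensive, extendible, explanatory) require human judgment and are not modelled. Function names and example labels are hypothetical.

```python
from typing import Dict, List, Set, Tuple

Taxonomy = Dict[str, List[str]]  # dimension -> characteristics


def _pairs(taxonomy: Taxonomy) -> Set[Tuple[str, str]]:
    """Flatten a taxonomy into (dimension, characteristic) pairs."""
    return {(dim, c) for dim, chars in taxonomy.items() for c in chars}


def objective_conditions(previous: Taxonomy, current: Taxonomy) -> Dict[str, bool]:
    """Evaluate a subset of objective ending conditions for one iteration."""
    prev, curr = _pairs(previous), _pairs(current)
    return {
        # no new dimensions or characteristics were added in the last iteration
        "nothing_added": not (curr - prev) and not (set(current) - set(previous)),
        # approximation: a merge, split, or rename makes an old characteristic disappear
        "nothing_merged_or_split": not (prev - curr),
        # every characteristic is unique within its dimension
        # (dimension uniqueness already holds because dict keys are unique)
        "characteristics_unique": all(len(c) == len(set(c)) for c in current.values()),
    }


def ending_conditions_met(previous: Taxonomy, current: Taxonomy) -> bool:
    """True only if every checked condition holds."""
    return all(objective_conditions(previous, current).values())


v1 = {"goals": ["accuracy", "fairness"], "data": ["governance", "collection"]}
v2 = {"goals": ["accuracy", "fairness"], "data": ["governance", "collection", "preparation"]}
print(ending_conditions_met(v1, v2))  # False: "preparation" was added
print(ending_conditions_met(v2, v2))  # True: the iteration changed nothing
```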

4. Research Process

We start by giving an overview of the identified European regulatory authorities and the conducted literature review. Afterward, we determine the approach used in each iteration (Step 3). As mentioned above, European regulators are leading the way in the development of regulatory principles. Therefore, we start with an empirical-to-conceptual approach (first iteration) to derive the fundamental structure of the taxonomy from European regulatory principles. We then extend the taxonomy with country-specific regulatory principles, including identified use cases and reports, again following an empirical-to-conceptual approach (second iteration). Finally, to rethink and finalize the taxonomy, we follow a conceptual-to-empirical approach (third iteration).

4.1. Review of European Regulatory Authorities and the Literature

We first identified the European supervisory authorities for the finance industry and analyzed their regulatory principles for AI usage (Table 2). Because the regulatory principles were analyzed iteratively, we also included the authorities’ publications from different years, including regulatory background documents. To obtain a representative and useful taxonomy, we consider the leading European countries in the finance industry, ranked by GDP, to confirm and, if necessary, restructure the taxonomy [26]. We found that the publications of the identified countries contain exemplary AI approaches for financial companies rather than a distinct country-specific regulatory perspective. Therefore, we decided not to extend the list of country-specific regulations, since these countries share the same regulatory perspective based on the overarching principles of the European Commission.

Additionally, we conducted a structured literature review based on the methodological guidelines of Cooper [48] and vom Brocke et al. [49] to capture the current state of research. The search was conducted in established scientific databases, such as ACM Digital Library, AIS Electronic Library, EBSCOhost, Emerald Insight, JSTOR, ScienceDirect, and SpringerLink. The following search terms were used to identify existing regulatory requirements and essential components: “Regulatory principles”, “Financial Services”, “Artificial Intelligence”, and “Machine Learning”. Relevant articles are those that consider regulatory requirements for AI-based services. The search yielded 374 accessible publications; after analyzing their titles and abstracts, we identified 38 as relevant to the regulatory principles for AI-based services in the finance industry. Backward and forward searches yielded 16 additional relevant publications.

4.2. First Iteration E2C

Because of their overarching role, we start with the European regulatory principles for the use of AI shown in Table 2 and follow an empirical-to-conceptual approach (E2C) in the first iteration [27]. We assume that examining these principles can help reveal the underlying objectives of supervisory authorities: ensuring market safety, consumer protection, and market integrity (Step 4e). First, we distinguish the regulatory principles by their underlying goals for the development of AI-based services, which helps summarize the perspective of European regulatory authorities. However, these goals differ depending on the use case, so companies must take an individual, case-based approach depending on the task. Against this background, different goals arise from the regulatory principles, and companies must verify whether each of them has been achieved. Because the underlying goals of regulatory principles provide an overview of the existing priorities and concerns of regulators, we added “goals” as our first dimension [12]. In line with the consensus on the main objectives outlined in Section 2, we observed the following characteristics in the regulatory principles that can be adopted in the taxonomy: “explainability and accountability”, “fairness, privacy, and human rights”, and “accuracy” [15,17,18]. Moreover, all processes (front or back office) that have access to these AI-based services must be equipped with appropriate measures to ensure their “sustainability and robustness” (Step 5e) [9,12,28].

Second, we assume that regulatory principles consider the underlying “approach” at each stage of the value chain, which forms our second dimension. The principles address complexity because it may hinder the adoption of innovative AI-based services, given the need for effective human oversight and skilled management [27]. The principles can be characterized by the product development stages “design”, “development”, “training”, “testing”, and “validation” up to the “deployment” of the proposed AI-based services [12]. Moreover, “cooperation with authorities” is considered an important part of proposed services that must be well designed, so we added it as the seventh characteristic under “approach” to cover the respective regulatory principles (Step 5e) [29].

Risk-free operation and thoughtful management are recognized as crucial for AI-based services, so we added “risk management” as the third dimension [12,27]. According to the regulatory principles of the European Commission, financial companies must “estimate and evaluate the risks” that may arise when an AI-based service is operated as intended and under conditions of reasonably foreseeable misuse. Moreover, the regulatory principles emphasize “human oversight” to minimize risks that may arise from algorithmic decisions [29]. Human oversight is an important part of design and development, ensuring that a natural person can oversee the functioning of these AI-based services. As a further characteristic, we identified principles requiring “control measures” to regulate and manage risks that cannot be eliminated automatically. These “control measures”, together with “human oversight”, are critical components in managing potential attacks or even system failures and in protecting ongoing operations with predefined procedures and capabilities. In addition, we identified regulatory principles that address “conformity assessment” to prevent or minimize the risks that such systems pose to fundamental rights and to ensure that adequate capacity and resources are available at designated bodies. Moreover, “mitigation measures” [12] and “maintenance” [9] must be well defined and planned to reduce the risks before these systems are placed on the market (Step 5e).

As a fourth dimension, we identified the “monitoring” of these services, which includes both organizational and technical components in regulatory principles. “(Technical) documentation” plays a crucial role in understanding the underlying regulatory and technical dimensions of AI-based services. This documentation is required so that regulatory authorities can assess the system’s compliance, and it enables the traceability of the underlying technical and organizational systems that operate with AI. Moreover, we identified “logging” and “post-market” (monitoring) as characteristics that regulatory principles regard as crucial parts of monitoring for ongoing validation, overall evaluation, and the implementation of necessary adjustments. Further, we identified the continuous monitoring of “functionality (of the model)” as another characteristic necessary for managing and reducing risks, e.g., via defined control measures. In the respective principles, monitoring functionality also serves to determine the performance limits of AI-based services (Step 5e).

As the last dimension, we include the regulatory principles addressing “data”, which set out the characteristics “governance”, “relevance and representativeness”, “collection”, and “preparation” [9,12,27]. The regulatory principles for data are particularly detailed and define criteria for preventing systematic discrimination, so we decided to treat “data” as a separate dimension. Figure 2 shows the taxonomy after the first iteration (Step 6e).
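For reference, the dimensions and characteristics named in this subsection can be collected in a single data structure. The Python sketch below is reconstructed from the prose only (Figure 2 is not reproduced, so order and exact labels may differ); the variable name `FIRST_ITERATION` and the helper `characteristic_counts` are hypothetical.

```python
from typing import Dict, List

# Taxonomy after the first iteration, reconstructed from the text of this subsection.
FIRST_ITERATION: Dict[str, List[str]] = {
    "goals": [
        "explainability and accountability",
        "fairness, privacy, and human rights",
        "accuracy",
        "sustainability and robustness",
    ],
    "approach": [
        "design", "development", "training", "testing",
        "validation", "deployment", "cooperation with authorities",
    ],
    "risk management": [
        "estimation and evaluation of risks", "human oversight", "control measures",
        "conformity assessment", "mitigation measures", "maintenance",
    ],
    "monitoring": [
        "(technical) documentation", "logging", "post-market",
        "functionality (of the model)",
    ],
    "data": [
        "governance", "relevance and representativeness", "collection", "preparation",
    ],
}


def characteristic_counts(taxonomy: Dict[str, List[str]]) -> Dict[str, int]:
    """Number of characteristics per dimension (k_i in the formal definition)."""
    return {dim: len(chars) for dim, chars in taxonomy.items()}


print(characteristic_counts(FIRST_ITERATION))
```

Counting characteristics per dimension makes it easy to verify, for example, that “approach” has the seven characteristics described above and that every dimension satisfies k_i ≥ 2.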

The current taxonomy is concise and robust, since the derived dimensions characterize the regulatory principles of European supervisory authorities. However, country-specific regulatory principles in Europe must also be considered to ensure the taxonomy’s usefulness and fulfill its purpose. The second iteration can help expand and confirm the identified characteristics. Moreover, the objective ending conditions are not met after this iteration, so a second iteration must be conducted (Step 7).

4.3. Second Iteration E2C

In the second iteration, we follow an empirical-to-conceptual approach based on the review of country-specific regulatory principles in Table 2 and examine use cases and reports to find further dimensions and characteristics in the taxonomy. We assume that country-specific regulatory principles can help confirm or (re-)structure dimensions and characteristics by incorporating national guidelines and interpretations for a useful and robust taxonomy.

In the second iteration, we identified regulatory principles that consider the trade-off between explainability and accuracy an important issue for AI-based services [8,32,34,38,41], because a higher level of explainability can limit the performance of the AI algorithm [50]. Therefore, the identified regulatory principles regard the “accuracy” of AI models as a challenge compared to traditional rule-based financial models with explicitly fixed parameterization [3,32,34,38]. Furthermore, we confirm that the characteristics “sustainability and robustness” [3,34,38], “explainability and accountability” [3,34,47], and “fairness, privacy, and human rights” [8,34,41] are considered goals in the regulatory principles of European countries. The regulatory principles regard securing data and IT infrastructure for better “sustainability and robustness” as a continuous investment that is required to ensure the resilience of AI-based services [34,47,51].

The underlying “approach” was considered in more detail because country-specific regulatory principles and priorities are described on a use-case basis. Since (re-)training can change a model’s behavior overnight, financial companies are required to justify their “training” approach [32]. The same applies to the “testing” [32,47] and “validation” [8,32,34,38] processes carried out by financial companies. Because an impact assessment on customers and employees is required, the regulatory principles address the “deployment” of AI-based services as an important step before final placement on the market [34,38]. Since each change must be reviewed by the respective supervisory authorities, financial companies require “cooperation with authorities” to develop an appropriate review process [8,32,34].

Country-specific regulatory principles recognize the “estimation and evaluation” of risks as an important part of defining the limits and identifying possible risks of AI-based services [32,34,41]. Likewise, we found that “human oversight” is considered essential, depending on the chosen level of automation, to avoid algorithmic risks [3,8,34]. Building on both of these characteristics, “control measures” are required to limit any damage that can arise in the case of failure [38,47]. Moreover, the “maintenance” of AI-based services is seen as part of managing model changes to ensure that such systems can adapt to updated or changed datasets [8,32]. Furthermore, we can confirm that country-specific regulations consider “mitigation measures” an appropriate characteristic for limiting damage to a minimum in the case of failure [3,8,32].

All characteristics of “monitoring” were confirmed in the country-specific regulatory principles, which require the observation of functionality and long-term assessments [8,38]. The country-specific regulatory principles also consider “(technical) documentation” an important part of AI-based services that ensures clarity for both internal and external parties [3,8,38]. Moreover, “logging” is required as part of monitoring and for understanding the operation of the system [38,47]. Likewise, country-specific regulations recognize “post-market” monitoring for ongoing validation, overall evaluation, and appropriate adjustments [32,38].

Furthermore, we confirm that the “governance” [34,41], “relevance and representativeness” [3,34,47], “collection”, and “preparation” [3,32] of data are considered in country-specific regulatory principles to ensure a high level of data quality. The analysis also showed that the country-specific regulatory principles address concerns about data quality and privacy in more detail [34,40,41]. The second iteration thus confirmed the dimensions and characteristics described above, and no structural changes to the taxonomy (Figure 2) are necessary (Step 6e).

As a result of the second iteration, we can confirm that the current state of the taxonomy is sufficiently concise and robust, since the derived dimensions characterize the European regulatory principles, including the regulatory principles of the top six European countries ranked by GDP. Moreover, the identified characteristics reflect the country-specific regulatory principles, as no additional dimensions or characteristics were identified. We consider the current taxonomy comprehensive, extendible, and explanatory, and its dimensions and characteristics are sufficient to classify the objects. However, not all objective ending conditions are met after this iteration, so a third iteration must be conducted (Step 7).

4.4. Third Iteration—C2E—Final Taxonomy

To rethink the current state of the taxonomy of regulatory principles, we followed a conceptual-to-empirical approach (Step 4c). We found that the dimension “approach” can be restructured based on the country-specific regulatory principles in Table 2: the characteristics “development”, “training”, “testing”, “deployment”, and “validation” represent the fundamental steps for designing an AI-based service, so they can be grouped under “design”. Regarding the “relevance and representativeness” of data, we assume that this characteristic mainly describes the quality of the underlying dataset, so we renamed it “quality” (Step 5c).
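The two changes of the third iteration can be expressed as a transformation of the previous taxonomy. The Python sketch below rests on assumptions: it represents “design” as a nested group holding the five grouped steps and keeps “cooperation with authorities” as a separate characteristic, but Figure 3 is not reproduced here, so the final layout may differ; the function name `apply_third_iteration` is hypothetical.

```python
import copy
from typing import Dict, List, Union

# A characteristic is a plain label or a named group of sub-characteristics.
Characteristic = Union[str, Dict[str, List[str]]]
Taxonomy = Dict[str, List[Characteristic]]

DESIGN_STEPS = ["development", "training", "testing", "deployment", "validation"]


def apply_third_iteration(taxonomy: Taxonomy) -> Taxonomy:
    """Return a restructured copy; the input taxonomy is left unchanged."""
    result = copy.deepcopy(taxonomy)
    # 1. Group the fundamental development steps under "design" in the "approach" dimension.
    remaining = [c for c in result["approach"] if c != "design" and c not in DESIGN_STEPS]
    result["approach"] = [{"design": list(DESIGN_STEPS)}] + remaining
    # 2. Rename the data characteristic that mainly describes dataset quality.
    result["data"] = [
        "quality" if c == "relevance and representativeness" else c for c in result["data"]
    ]
    return result


# Only the two affected dimensions are shown here.
second_iteration = {
    "approach": ["design", "development", "training", "testing",
                 "validation", "deployment", "cooperation with authorities"],
    "data": ["governance", "relevance and representativeness", "collection", "preparation"],
}
print(apply_third_iteration(second_iteration))
```

Working on a deep copy keeps the second-iteration taxonomy intact, so both versions can be compared, for example against the ending conditions.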

We define the final taxonomy of the regulatory principles after the third iteration (Step 6c) in Figure 3. We conclude that the current taxonomy is comprehensive, extendible, and explanatory, so no further iterations are needed. Both the subjective and the objective ending conditions are met after this iteration (Step 7).

5. Limitations and Discussion

Our limitations can guide future research on regulatory principles and the use of AI-based services in the finance industry. First, we identified the regulatory authorities in Europe and the leading European countries ranked by GDP. The regulatory principles, including the authorities’ published articles on AI usage in financial services, were examined to understand and consider the background of the regulatory principles. The primary limitation of this study was the heterogeneous and unclear structure of the regulatory principles, which made it difficult to understand expectations and goals. It is therefore challenging to address regulatory concerns and to (further) develop appropriate measures for innovative AI-based services. We also observed that country-specific regulatory principles already regard the European Commission’s proposal as a fundamentally aligned strategy for the use of AI in the financial industry. However, these country-specific regulatory principles contain use-case-based and exemplary procedures rather than fixed rules and clear guidelines [52]. This was a limitation when considering and restructuring our taxonomy. Nevertheless, the second iteration confirmed that the taxonomy already meets the countries’ expectations and represents country-specific regulations. We therefore conclude that our taxonomy is useful and reflects the regulators’ priorities, providing a better understanding for practitioners and researchers. However, a limitation remains due to the subjective interpretation and exploratory approach, so the taxonomy must be tested over time.

The final taxonomy organizes the key regulatory aspects and priorities that financial companies must consider into five major dimensions. The identified objectives show that regulatory authorities expect clear solutions for these areas from researchers and financial companies. The goals must also be considered along the identified approaches to satisfy the regulators’ expectations. In addition, financial companies must consider, demonstrate, and ensure the robustness of AI-based services, which is considered another important prerequisite for a risk-free system that ensures consumer safety and operational well-being [53]. With the proposed taxonomy, we identified regulatory principles that address different approaches. For each of these approaches, further research is necessary to outline a clear framework with precise guidelines for the use of AI in financial services. Moreover, the final taxonomy indicates that risk management must be considered together with the monitoring structures that are essential for identifying, managing, and preventing potential risks. Regulators require financial companies to make continuous improvements and adjustments to secure and increase the quality of AI-based services.

The taxonomy provides insight into the regulatory priorities and the focus of supervisory authorities. However, criteria for compliant services in the form of use cases are not yet available to the financial sector or to research; such criteria can be investigated in more detail using this taxonomy. Due to the confusing and repetitive structure of regulatory principles, it is difficult to understand the overlapping paragraphs and to conduct and structure further research. As the European Commission’s AI Act comes into force in 2024, the mechanisms for compliant AI-based services still need to be examined, and the taxonomy can accelerate and promote this process by presenting regulatory priorities in a structured way. In addition, the taxonomy summarizes the objectives of regulatory principles for further research, which is necessary to iteratively define the compliance criteria.

One of the main interests is the explainability not only of the AI models created but also of the underlying process from creation to deployment. It is therefore necessary to examine specific measures and relevant components on a case-by-case basis, as defined by the dimensions in the taxonomy, e.g., for monitoring and risk management. Because each AI-powered service is unique, the taxonomy can be used to determine the characteristics of compliant services for each corresponding set of use cases [15,20]. Against this background, further research should structure and evaluate the thematic references, e.g., for credit scoring, transaction monitoring, and insolvency forecasting. For example, a compliant assessment and evaluation of risks for AI-based credit scoring can then be clearly defined. In this way, the taxonomy can provide a structured overview of compliant mechanisms assigned to the respective dimensions and characteristics based on various use cases in the financial industry. In addition, experience with AI-based systems from other sectors can be evaluated and structurally adapted to the financial sector. The taxonomy can encourage and support cooperation between the financial industry and supervisory authorities by clearly defining expectations and associated measures. The interaction, e.g., in the compliance approval process, can be structured using the dimensions of the taxonomy and extended by further characteristics depending on the use case.

Moreover, the goals are of particular interest to regulatory authorities. We identified a lack of satisfactory criteria and clear guidelines, e.g., for explainability, accountability, and accuracy, so companies must take a case-by-case approach and consult with authorities [54]. The proposed taxonomy indicates that the underlying approach followed by financial services companies, including its organizational and technical phases, plays a crucial role for regulators. As an approach becomes more complex, the process for coordinating with regulatory authorities must be better structured [21]. In this context, complexity is an obstacle to the use of AI-based financial services because of the required human control and management training [27].

AI can have a further profound impact on the financial industry, with AI-powered applications being used for a variety of tasks, including fraud detection, risk assessment, customer service, and algorithmic trading. The AI Act, the Network and Information Security Directive (NIS/NIS2), the Digital Operational Resilience Act (DORA), the General Data Protection Regulation (GDPR), and the Cyber Resilience Act (CRA) are all pieces of legislation that affect the use of AI in the financial industry. Together, these regulations promote the responsible development and use of AI and protect consumers and financial stability. However, they could also make it more difficult for financial institutions to use AI in financial services. Financial institutions will therefore need to carefully assess the risks and opportunities associated with these regulations to develop a responsible and effective AI strategy.

Furthermore, to ensure the continuous functionality of AI-based services, it is necessary to design and integrate mechanisms for monitoring their processes. These mechanisms not only protect customers but also improve the services offered over time by identifying existing deficits. They also help to understand and check the functionality of a service. The regulatory principles related to monitoring are further intended to increase the transparency of the service and thus facilitate any technical and operational review carried out by regulators. We also see that, comparatively, most regulatory principles relate to data, as an appropriate dataset is a prerequisite and the fundamental regulatory groundwork is already in place with the GDPR. However, the existing risks and concerns of regulators go beyond data and involve the entire process, including all components required for a secure service. We believe that our taxonomy can serve as a structured representation of regulatory principles on which further studies can build to address the potential risks and harms associated with AI development, testing, and deployment in the finance industry [55].
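As an illustration of the kind of monitoring mechanism referred to in the preceding paragraph, the following is a minimal sketch that is not part of the taxonomy or of any regulatory principle. It assumes that a service's model scores are grouped into bins and compared against a reference period using the population stability index (PSI), one common drift metric. The function names and the 0.25 alert threshold (a frequently cited rule of thumb, not a regulatory value) are hypothetical choices made for this example.

```python
import math
from typing import Sequence

# Hypothetical helpers: PSI is one common drift metric, not a measure
# prescribed by the regulatory principles discussed in this paper.


def to_proportions(counts: Sequence[int]) -> list[float]:
    """Convert per-bin counts into per-bin proportions."""
    total = sum(counts)
    if total <= 0:
        raise ValueError("counts must sum to a positive number")
    return [c / total for c in counts]


def population_stability_index(
    reference_counts: Sequence[int],
    current_counts: Sequence[int],
    eps: float = 1e-6,
) -> float:
    """PSI = sum over bins of (a - e) * ln(a / e).

    e is the reference proportion and a the current proportion of a bin;
    eps replaces empty bins, where the logarithm would be undefined.
    """
    if len(reference_counts) != len(current_counts):
        raise ValueError("both distributions need the same bins")
    expected = to_proportions(reference_counts)
    actual = to_proportions(current_counts)
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi


def drift_alert(
    reference_counts: Sequence[int],
    current_counts: Sequence[int],
    threshold: float = 0.25,  # assumed rule-of-thumb threshold
) -> bool:
    """Flag the service for human review when PSI exceeds the threshold."""
    return population_stability_index(reference_counts, current_counts) > threshold


if __name__ == "__main__":
    # Hypothetical score-band counts before and after a structural shock.
    before = [90, 10]
    after = [50, 50]
    print(round(population_stability_index(before, after), 3))  # 0.879
    print(drift_alert(before, after))  # True
```

Which metric, binning, and threshold are appropriate would depend on the use case and on coordination with the supervisory authority; the sketch only indicates where an automated check could trigger a review within a monitoring process.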

6. Conclusions and Future Work

This paper develops a taxonomy of existing European regulatory principles that helps researchers and financial companies identify and address key regulatory aspects and priorities, promotes a better understanding of these regulations, and guides how compliant AI-based services are built. Against this background, we considered the regulatory principles of leading European countries to confirm and restructure the taxonomy. As a result, we created a hierarchical taxonomy consisting of six dimensions, following the iterative method for taxonomy development by Nickerson et al. [23] with two empirical-to-conceptual iterations and one conceptual-to-empirical iteration. We contribute to the existing literature on the use of AI in financial services from the perspective of regulatory authorities. We conducted a structured literature review and a market survey to identify the European regulatory authorities and their regulatory principles for the use of AI in financial services. However, we observed that the measures for existing risks are unstructured and unsatisfactory. Our discussion has shown that the taxonomy can provide researchers and practitioners with an overview of existing risks in order to identify and address them. We believe that highlighting regulatory priorities and characteristics with our taxonomy can help derive further measures that satisfy regulatory principles.

We also found that country-specific regulatory principles are not as sophisticated as those at the European level, as they mostly contain use cases and exemplary approaches [10]. These use-case-based exploratory descriptions include exemplary measures for avoiding severe sanctions, which companies in the finance industry need to consider when building similar AI-based services. To this end, our study provides an overall view of European regulatory principles so that further studies can derive potential measures and promote further innovative AI-based services in the finance industry. Since we have discussed the lack of clear legal requirements and the resulting concerns, future research can focus not only on establishing a clear legal basis but also on providing compliant technical measures based on appropriate approaches. This can help shape the future of AI in the finance industry by providing technical and organizational guidelines [22]. Likewise, the objectives of the regulatory principles identified in our taxonomy, represented as goals, indicate the main concerns that further studies need to explore for compliant and innovative services. Moreover, due to the dynamic nature of AI-based services, the required coordination with regulators poses another important issue [56]. In this respect, it remains to be examined whether the competencies of regulators and responsible managers are sufficient for this task and how they can be improved [13]. Finally, it is necessary to examine the entire process, including deployment and post-market analysis, to provide an efficient control and approval process.