1. Introduction

Temporary and periodic exhibitions seem to be the oldest form of showing works of art. Before the establishment of annual exhibitions of art, regular exhibitions were a monopoly of the guilds [1]. In this paper, we study them in conjunction with virtual museums (VMs), a term established to denote the provision of digital means of access to collections and heritage sites [2]. Such museums are typically considered duplicates of real museums or online collections of museum items, delivered through multiple technologies [3,4]. In the same context, on-site interactive installations have appeared as VM services intended to complement a typical museum visit [5]. A common characteristic of modern VMs is the use of reconstruction technologies to provide digital representations of monuments, heritage sites, sites of natural heritage, etc., thereby defining the best practices for virtual archaeology [6,7].

Despite the technical evolution and theoretical benefit stemming from VMs that bring together content from all over the world, in practice, there were and still are considerable limitations [8]. From a technical perspective, a major limitation of VMs is that, in most cases, they are implemented ad hoc in terms of the digital content, curated information, and interaction metaphors. In this research work, we address this limitation by providing a systematic methodology and by proposing the technical tools to support the implementation of VMs that can preserve and present temporal cultural heritage (CH) exhibitions. In this way, we aspire to address the volatility of the information and content of temporal exhibitions while also accelerating the authoring of VMs by supporting curators at all stages of VM preparation. In addition, to enhance the realism of the created VMs and their content-provision capabilities, we integrate the means for importing digital reconstructions of objects and sites, and for creating semantic narrations on CH exhibits to support their improved presentation.

The proposed methodology and technical aids are demonstrated through a use-case VM exhibition that was created to preserve an actual physical exhibition hosted by the Municipality of Heraklion between 15 May and 27 June 2021 in the Basilica of Saint Mark.

3. Method

3.1. Overview

A generic methodology is provided that could support the preservation of temporal exhibitions in digital form, and that can be applied to various forms of physically installed exhibitions. The overall methodology is presented in Figure 1.

Following the proposed methodology, the first step of the process is to collect rich documentation of the exhibition to be preserved. This documentation includes already available digital assets, such as the photographic documentation of exhibits, exhibition catalogs, etc. The existing information is complemented with further on-site documentation, mainly to capture the undocumented parts of the exhibition, and to perform 3D reconstructions of physical artifacts. In the latter case, a mixture of 3D-reconstruction technologies, such as photogrammetry and LiDAR-based 3D scanning, can be employed in a nonintrusive way. The outcome is a collection of digital assets to be digitally curated later on in the process.

At this stage, a decision must be made regarding whether the virtual exhibition (VE) will be based on the physical exhibition or on a new architectural setting. In the first case, the original exhibition should be digitized while it is still running by using a mixture of 3D digitization technologies, with the major modality being architectural laser scanning, complemented with photographic documentation and photogrammetry.

The results of the digitization undergo several processing steps, including the synthesis of the different scans into a single synthetic model of the exhibition, and the optimization of the 3D model so that it is appropriate for real-time rendering on the Web and in VR. In the second case, the design of the digital exhibition is performed online by using the exhibition designer of the Invisible Museum platform. The outcome of both variations of the methodology is the shell of the exhibition in digital form as a 3D model.

In the third step of the provided methodology, the rich digital documentation acquired during the first step of the process is curated. This process covers the integration of digital assets into the authoring platform and the data curation of exhibits. Curated exhibits contain social and historic information, together with alternative digital representations of their documentation, as well as information on their size and on the physical constraints of their integration into a virtual space. The outcome of this process is the catalog of the exhibition, digitally curated online. At this stage, translations of the information can also be provided to complement the digital presentation of the exhibition in different languages.

In the fourth step of the methodology, the exhibition is curated again in a digital form. This regards the selection and placement of digital exhibits in their locations in the digital exhibition. For this process, in this research work, we employ the exhibition designer provided by the Invisible Museum platform [27].

The final step of the methodology is making the exhibition available online in alternative forms. This is performed by publishing the exhibition and selecting among the supported rendering modalities (3D, VR 3D, online browsing).
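The five steps above, together with the decision point in the second step, can be sketched as a small data structure. This is only an illustrative sketch: the names `ExhibitionProject`, `ShellSource`, and `STEPS` are hypothetical and are not part of the Invisible Museum platform.

```python
from dataclasses import dataclass, field
from enum import Enum

# The five steps of the methodology, in the order given in the overview.
STEPS = (
    "collect documentation",
    "create exhibition shell",
    "curate data",
    "curate virtual exhibition",
    "publish",
)


class ShellSource(Enum):
    """Decision point in Step 2: where the 3D shell of the exhibition comes from."""
    DIGITIZED_SPACE = "digitized physical space"
    AUTHORED_SPACE = "space authored in the exhibition designer"


@dataclass
class ExhibitionProject:
    """Hypothetical tracker for the progress of one preserved exhibition."""
    name: str
    shell_source: ShellSource
    completed: list = field(default_factory=list)

    def next_step(self):
        """Return the next pending step, or None when all steps are done."""
        if len(self.completed) == len(STEPS):
            return None
        return STEPS[len(self.completed)]

    def complete_next(self):
        """Mark the next pending step as done and return its name."""
        step = self.next_step()
        if step is None:
            raise ValueError("all steps are already complete")
        self.completed.append(step)
        return step


project = ExhibitionProject("Battle of Crete", ShellSource.DIGITIZED_SPACE)
project.complete_next()      # documentation collected
print(project.next_step())   # the step that follows documentation
```

Keeping the shell source as an explicit field makes the choice between digitizing the physical space and authoring a new one visible in the project record, since that choice determines how much effort Step 2 requires.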

3.2. Critical Decision Points on the Proposed Method

Because the proposed methodology makes assumptions about the optimum technology to be used in several steps, in this section, we provide clarifications on the rationales behind the key decision points.

One of the main overheads of the method is in Step 2, when the task is to produce a digital replica of the actual physical installation. Here, we propose the usage of laser scanning instead of the cheaper and more widely available technologies of photogrammetry and panoramic images. Furthermore, one could argue that building the 3D model from a modeling tool would be an easier and more intuitive solution.

Regarding the latter, in the methodology, we integrated an online authoring environment for the modeling of simple interior spaces that could host the exhibition, so that a simple model can easily be created by inexperienced users. Regarding the former, the rationale for proposing laser scanning instead of photogrammetry is threefold: first, in internal spaces, there are great variations in light; second, pictures do not have a wide field of view; third, most internal spaces have white walls. As a result, photogrammetry algorithms cannot detect a sufficient number of features to produce a decent textured and meshed model. A complementary constraint is the difficulty of registering different photogrammetric scans of different locations or exhibition rooms. Furthermore, when a low-poly model with rich texture information is obtained from photogrammetry, significant postprocessing is always needed because errors in the photogrammetric reconstruction have severe implications for the rendering of geometric structures.
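The effect of white walls on feature detection can be illustrated with a toy example. The sketch below is a deliberately simplified proxy, assuming that a "feature" is a pixel with a strong intensity change in both the horizontal and vertical directions; it is not the detector used by any particular photogrammetry package, and the function name and threshold are hypothetical.

```python
def count_corner_like_pixels(image, threshold=30):
    """Count interior pixels whose horizontal AND vertical intensity
    differences both exceed the threshold (a crude corner proxy)."""
    count = 0
    for y in range(1, len(image) - 1):
        for x in range(1, len(image[0]) - 1):
            dx = abs(image[y][x + 1] - image[y][x - 1])
            dy = abs(image[y + 1][x] - image[y - 1][x])
            if dx > threshold and dy > threshold:
                count += 1
    return count


# A nearly uniform white wall with very gentle shading (grayscale 0-255).
white_wall = [[250 - x // 4 for x in range(16)] for _ in range(16)]

# A strongly textured surface: a checkerboard with 4-pixel squares.
textured = [
    [255 if (x // 4 + y // 4) % 2 == 0 else 0 for x in range(16)]
    for y in range(16)
]

print("white wall:", count_corner_like_pixels(white_wall))
print("textured:  ", count_corner_like_pixels(textured))
```

The wall yields no candidate features while the textured surface yields many, which is the situation that leaves photogrammetry without enough correspondences to reconstruct plain interior walls.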

Regarding panoramic images, these are sufficient for a static 3D virtual museum, in which the visitor accesses predefined locations. In this paper, we are focusing on providing a richer experience and a sense of being there. We argue that the loss of the experiential part is significant in panoramic images due to the limited navigation and supported interaction. Furthermore, as presented in Step 5 of the methodology, by following the presented approach, the result is a digital environment that can be rendered on the Web, as a 3D desktop app, and as a VR experience, which thus greatly extends the possibilities of supporting different presentation media.

4. The Invisible Museum Platform

As presented in the previous section, the Invisible Museum platform was employed for authoring the digital version of the exhibition [27]. The platform streamlines the online authoring of the curatorial rationale by supporting many variations of the digital representations of exhibits, linked to sociohistoric information encoded with the help of knowledge representation standards. In more detail, the content represented using the Invisible Museum platform adheres to domain standards, such as the Conceptual Reference Model of the International Committee for Documentation of the International Council of Museums (CIDOC-CRM [43]), and the Europeana Data Model (EDM) [44]. Furthermore, the platform employs natural language processing to assist curators by generating ontology bindings for textual data. The platform enables the formulation and semantic representation of narratives that guide storytelling experiences and bind the presented artifacts to their sociohistoric contexts.
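To give a sense of what a CIDOC-CRM-style description of an exhibit looks like, the following sketch builds a handful of subject-predicate-object triples. It is an assumption-laden illustration: the `ex:` identifiers and the function `exhibit_triples` are hypothetical, the class and property labels follow CIDOC-CRM 7.x naming, and the actual encoding used by the platform may differ.

```python
def exhibit_triples(exhibit_id, title, place_id):
    """Return (subject, predicate, object) triples describing one exhibit.

    Identifiers under the 'ex:' prefix are placeholders, not real URIs.
    """
    subject = f"ex:{exhibit_id}"
    title_node = f"ex:{exhibit_id}/title"
    place = f"ex:{place_id}"
    return [
        (subject, "rdf:type", "crm:E22_Human-Made_Object"),
        (subject, "crm:P102_has_title", title_node),
        (title_node, "rdf:type", "crm:E35_Title"),
        (title_node, "rdfs:label", f'"{title}"'),
        (subject, "crm:P55_has_current_location", place),
        (place, "rdf:type", "crm:E53_Place"),
    ]


# Hypothetical exhibit and place identifiers, for illustration only.
triples = exhibit_triples("exhibit-001", "Uniform", "basilica-of-saint-mark")
for s, p, o in triples:
    print(s, p, o, ".")
```

Expressing exhibits this way is what allows narratives and other records to refer to the same object and place nodes, rather than to free-text descriptions.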

The main features of this platform that are exploited by this research work are related to: (a) the authoring of user-designed dynamic VEs, (b) the implementation of exhibition tours, and (c) visualization in Web-based 3D/VR technologies.

5. Use Case

To validate the proposed approach, a use case was implemented based on an actual physical exhibition hosted by the Municipality of Heraklion between 15 May and 27 June 2021 in the Basilica of Saint Mark.

5.1. The Exhibition

On the occasion of the 80th anniversary of the Battle of Crete, the Municipality of Heraklion, in co-organization with the Region of Crete and the Historical Museum of Crete, held an exhibition of documents and memorabilia from the Battle of Crete. The exhibition focused on the historical events related to the Battle of Heraklion, up to and including the day the city was occupied by the Germans (1 June 1941). The exhibition included historical relics, such as rare uniforms, accouterments, weaponry, and personal items of the soldiers of the warring forces, the Greek and Allied forces who had undertaken the defense of the island, and the Germans who attacked on 20 May 1941 (see Figure 2).

5.2. Collection of Sociohistoric Information on the Exhibition

The exhibitions discussed in this paper are curated temporal exhibitions that are hosted in physical locations and digitized by this research work to be preserved for future reference and digital visits. This fact simplifies the process of collecting sociohistoric information because such information is already present as part of the exhibition setup. It is interesting though that, in this step, there is the possibility of actively collaborating with the exhibition curators to enhance the depicted and presented information, as a new medium is to be supported. This can be performed by extending the information elements with more digital information than that presented in the physical exhibition, and with sociohistoric narratives that relate to the virtual exhibits.

In this use case, close collaboration with the curator of the exhibition allowed us to enrich its digital form with extra information and sources that extend the information preserved.

5.3. St. Marcos Basilica Model Implementation

A key aspect of this work, and our most important requirement, was to capture the original topology of the Basilica of Saint Mark using laser scanning and photographic documentation.

5.3.1. Laser Scanning

The laser scanning of the Basilica of Saint Mark proved to be one of the main challenges of this research work because it was not possible to scan the monument while hosting the exhibition, but only after its closing. The main reason for this was the occlusions due to the structures installed for hosting the exhibits. Thus, it was decided to scan the clean monument after the removal of all the structures. This minimized the potential scanning problems, leaving us with the decision as to the appropriate scanning strategy. The main challenges were that the Basilica contains ten large columns that produce partial occlusions in all the possible scanning areas, and the huge chandeliers on the roof pose challenges both for the reconstruction of the roof and for their reconstruction as structures of the Basilica.

For the formulation of our strategy, we followed the approach formulated by the Mingei protocol for craft representation [45], as analyzed in The Mingei Handbook regarding the best practices on the technology usage for digitization [46]. Based on these guidelines, we decided to use laser scanning instead of photogrammetry, and we carefully designed the scanning experiment to minimize the number of scanner positions. This contributed to a reduction in the scanning time while still allowing us to scan the entire structure, as presented in Figure 3. This topology shows the optimal scan locations for acquiring partially overlapping scans that can, in turn, be registered to produce the synthetic model of the Basilica.

The scans were acquired in a single full-day session. Each scan took approximately 20 min, and 18 scans were acquired.
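The figures above imply the following pure scanning time. The short calculation below uses only the two numbers stated in the text; it does not account for moving and setting up the scanner between positions, which is not reported.

```python
SCAN_COUNT = 18          # number of scans acquired
MINUTES_PER_SCAN = 20    # approximate duration of one scan

total_minutes = SCAN_COUNT * MINUTES_PER_SCAN
total_hours = total_minutes / 60

print(f"{total_minutes} min = {total_hours:.0f} h of pure scanning time")
# prints "360 min = 6 h of pure scanning time"
```

About six hours of scanning is consistent with completing the acquisition in a single full-day session.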

3D Point-Cloud Synthesis

Overall, 18 scans of the monument were acquired and registered to create a point cloud that combined the information from all the scans. In Figure 4, different views of the synthesized point cloud are presented. In each view, the position of the laser scanner can be seen in the form of a grey-colored cube.
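Conceptually, registration brings every scan from its scanner-local coordinates into one common frame before the points are combined. The sketch below shows only that final merging step under a strong simplifying assumption: the pose of each scanner (a rotation about the vertical axis plus a translation) is already known. Real registration software estimates these poses from the overlap between scans; the function names and the example poses here are hypothetical and do not describe the software used in this work.

```python
import math


def to_global(points, yaw_deg, translation):
    """Map scanner-local (x, y, z) points into the common frame:
    rotate about the z axis by yaw_deg, then translate."""
    yaw = math.radians(yaw_deg)
    c, s = math.cos(yaw), math.sin(yaw)
    tx, ty, tz = translation
    return [
        (c * x - s * y + tx, s * x + c * y + ty, z + tz)
        for x, y, z in points
    ]


def merge_scans(scans):
    """Combine scans given as (points, yaw_deg, translation) tuples."""
    merged = []
    for points, yaw_deg, translation in scans:
        merged.extend(to_global(points, yaw_deg, translation))
    return merged


# Two scanner positions observing the same physical point (e.g., a column base).
scan_a = ([(2.0, 0.0, 0.0)], 0.0, (0.0, 0.0, 0.0))     # scanner at the origin
scan_b = ([(2.0, 0.0, 0.0)], 180.0, (4.0, 0.0, 0.0))   # scanner facing back
cloud = merge_scans([scan_a, scan_b])
print(cloud)  # both points land (up to rounding) on the same global location
```

When the poses are accurate, points seen from different positions coincide in the merged cloud; small pose errors are one source of the duplicated or misaligned geometry that must be cleaned up later.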

3D Model Creation and Optimization

For the implementation of the 3D model of the Basilica, the eighteen Faro LiDAR scans that were acquired were used. These scans capture accurate distances and a high-quality geometric representation of the real shapes and colors of the structures. However, blind spots remain: areas that were occluded by obstacles during scanning and are not covered by any overlapping scan, which means that even the densest point-cloud mesh suffers from empty patches of geometry. Small geometric artifacts that arise in each scan multiply when the scans are merged automatically by the underlying point-cloud software, introducing errors in both the accuracy and the aesthetic appearance. Leaving the overlapping geometries of the individual scanned meshes unmerged makes for an unpleasant appearance, and the resulting models are often unusable other than as a reference, as even the best systems cannot handle such geometry in real-time VR applications. Polygon decimation is of limited help, and it introduces extra issues, such as invisible, duplicated, and overlapping polygons, and it usually expands any blank areas. Correcting these errors is a tedious process (see Figure 5).

At this point, artist-driven retopology was used to produce the final geometric mesh, trading some geometric accuracy for optimization and for geometric fixes that alleviate structural errors and omissions. Retopology has the benefit of a reduced vertex count, while the underlying geometric details can be recovered through normal-map extraction. The model can be simplified enough that even a complicated structure can be rendered in a Web browser, making it accessible to the public (see Figure 6). For documentation and feature-preservation purposes, a uniform texture distribution is required, but for VR and AR reproduction, a higher-fidelity distribution near the parts that a user is most likely to experience is preferable.

The 3D application Blender [47] was used for modeling and retexturing the final structure. A total of eighteen scans were registered, consisting of 325,000 polygons and 4K textures each (6.8 million triangles and 352.3 megapixel textures). The retopology process involves projecting individual faces and edges onto the dense LiDAR polygonal structure to create an approximate structural mesh with an 8K texture (67.1 megapixels). For uniform texture unwrapping, SmartUV at 30 degrees with a 0.00006 island margin is appropriate for 8K texturing, and any overlapping UVs can easily be corrected. For the texture extraction, the bake output margin is set to 3 px to minimize color bleeding while compensating for any acute-angled triangles in the UVs, and the ray distance is set to 4 cm for albedo and 2 cm for normal. All the individual scan extractions have alpha transparency, which indicates the overlapping geometries between the retopology mesh and the LiDAR mesh, and which is used to mask the overlapping textures. Extra artist-driven masks are used to blend the various textures into one unified synthesis, a process that is also used for normal mapping. The final texture composite can give the impression of a higher-fidelity mesh on one simplified mesh. The scans were taken at different times of the day, which created unevenly lit surfaces with major differences in the white balance (temperature); to compensate, the best-lit scan was used as a guide for the color calibration of the others using RGB curves. To fill the blank surfaces, the boundary edges are extracted into a secondary mesh, which is artistically filled as appropriate. New UVs are created and downscaled to be equal to or smaller than those of the target mesh, and they are merged into the empty UV areas. Cloning is used to fill in the reimagined parts in both albedo and normal mapping. The final calibrated colored textures are presented in Figure 7. Overall, one working week was needed to produce the final model.
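The idea of calibrating the color of each scan against the best-lit one can be illustrated with a much simpler stand-in for RGB curves: a single linear gain per channel that matches the mean color of a scan to that of the reference. This is a sketch under that stated simplification, not the Blender workflow itself; the function names and the sample pixel values are hypothetical.

```python
def channel_means(pixels):
    """Mean value of each RGB channel over a list of (r, g, b) pixels."""
    n = len(pixels)
    return tuple(sum(p[i] for p in pixels) / n for i in range(3))


def match_white_balance(pixels, reference_pixels):
    """Scale each channel so that its mean matches the reference mean.

    A linear per-channel gain is used here as a simplified substitute
    for hand-tuned RGB curves.
    """
    src = channel_means(pixels)
    ref = channel_means(reference_pixels)
    gains = tuple(r / s if s else 1.0 for r, s in zip(ref, src))
    return [
        tuple(min(255, round(p[i] * gains[i])) for i in range(3))
        for p in pixels
    ]


# Illustrative values: a neutral reference and a "warm" scan of the same surface.
reference_scan = [(200, 200, 200), (100, 100, 100)]
warm_scan = [(220, 200, 160), (110, 100, 80)]

print(match_white_balance(warm_scan, reference_scan))
```

A per-channel gain removes a global color cast; curves additionally allow different corrections in shadows and highlights, which is why they are the better tool when lighting differs strongly between scans.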

5.4. Digital Curation

5.4.1. Data Curation

Data curation, in the context of this research work, is conceived of as the process of transforming the physical exhibits of the original exhibition into digital ones, as well as the authoring of the appropriate semantic information that represents the curatorial rationale of each exhibit. To do so, the data collected during the first step of the proposed methodology were employed. The authoring process was conducted using the Invisible Museum platform, where several digital exhibits were created. Each of these exhibits was authored individually using the form presented in Figure 8. In the form, the details of the exhibit are provided, including its physical dimensions and digital representation (image, video, 3D model, etc.). Furthermore, the option to translate textual information into more languages is provided (see Figure 8).

To further enhance the presentation of an exhibit, there is an option for creating a narration, which, in turn, enriches the information presented for the exhibit within the digital exhibition (see Figure 9). The narration is conceptualized with the help of the platform as a series of steps, each of which is assigned a narration segment and a selection of media files. This is different from the physical exhibition, and it allows the curator to take a storytelling approach to the presentation of exhibits, or to exploit narratives to provide typical museum guide facilities.

After the creation of the exhibits and the narrations about the exhibits, the full list can be accessed on the corresponding page of the exhibition, as shown in Figure 10.

5.4.2. Curating the Virtual Exhibition

The curation of the VE takes place via a Web-based authoring system, as shown in Figure 11. The main page of the exhibition allows for the authoring of basic textual information regarding the VE, and the setting of language options for its availability. For the exhibition to obtain a virtual form, the design process should be initiated by loading the exhibition designer.

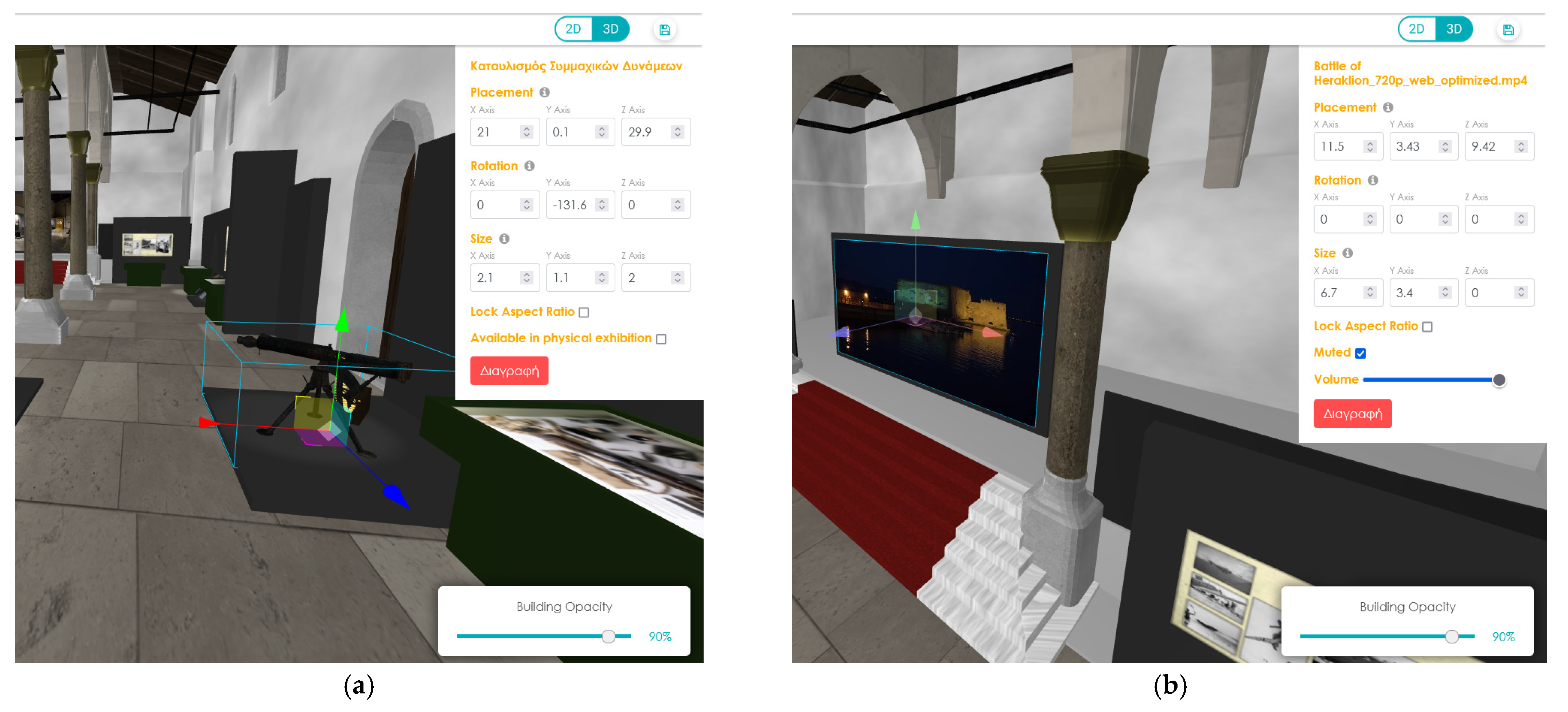

The exhibition designer is a Web3D interface in which the authoring of the virtual space takes place. In this virtual space, the 3D model of the exhibition created in the second step of the proposed method can be loaded. At this stage, the 3D model only presents the space of the exhibition, with no exhibits attached. The next task is to assign exhibits to specific locations in the exhibition. Exhibits are added from the pool of curated virtual exhibits presented in the previous section. A snapshot of the authoring of the VE is presented in Figure 12, while examples of placing a 3D model and a video object are presented in Figure 13.

Alternatively, although not followed in this use case, there is the option to use the exhibition designer to create a new virtual space, and to thus reposition the exhibits to create an alternative to the original experience. This feature is very handy in cases where the physical space of the exhibition is not of cultural significance, or in cases in which the space constraints inevitably affect the curatorial rationale and result in a nonoptimal solution.

5.5. Publish for Public Access

6. Conclusions, Lessons Learned, and Future Work

In this paper, we present a methodology for the preservation and presentation of temporal exhibitions by employing advanced digitization technologies and an authoring platform for the implementation of 3D VMs with VR support. For the validation of the proposed methodology, we created a VE to preserve a temporal exhibition that was organized as part of the 80th anniversary of the Battle of Crete by the Municipality of Heraklion, in co-organization with the Region of Crete and the Historical Museum of Crete, between 15 May and 27 June 2021 in the Basilica of Saint Mark. During the application of the proposed methodology, we acquired precise laser scans of the Basilica, which were merged and postprocessed to create the 3D model of the exhibition. In collaboration with the curators of the exhibition, we formulated the sociohistoric information, encoded in the form of textual information, audiovisual content, and narratives, that was used to data-curate the online digital exhibits. Then, using the exhibition designer provided by the Invisible Museum platform, we created the digital version of the exhibition, which was made accessible to the public through the website of the Municipal Art Gallery.

The implementation of the use case allowed us to first validate our proposed methodology, and then to draw valuable conclusions to drive optimizations both of the methodology and technology.

First of all, we are pleased that the proposed methodology, as formulated and demonstrated, proved sufficient to cover a very challenging use case that combines a historical building with a temporal exhibition comprising artifacts of several types, dimensions, and forms (e.g., small dioramas), including digital information presented on site, such as video testimonies and documentaries. Second, we realized that a significant part of the time spent implementing the use case was spent on the task of digitizing the very complex structure of the Basilica, which was meant to host the VE. We learned from this process that, in the context of applying the proposed methodology, a very important consideration is to digitize only the parts of the exhibition that are needed from a curatorial perspective. For example, we could have saved time by creating a simplified synthetic model of the Basilica rather than digitizing it. In our case, we chose full digitization because the municipality uses the Basilica to host temporal exhibitions, and thus, scanning once will allow us to reuse the model in the digital exhibitions to follow. Third, we understood, by working with the curators, that the digital version of the exhibition can be enriched with much more information and content that was not selected to be hosted, mainly due to the lack of space and resources. The digital version can allow curators to work more freely by removing the space and resource constraints that applied when designing the physical exhibition. Fourth, we realized that, through the digital exhibition, we are enhancing the value of the temporal exhibition by giving it a permanent dimension, thus contributing to the preservation of the curatorial rationale as a temporal “reading” of our CH.

Fifth, we understand that the proposed methodology requires significant expertise and technical resources, especially during the second step of the method, where the task is to produce a 3D digitization of the building. To reduce this burden, we integrated an online authoring environment that allows inexperienced users to author simple interior structures to host their exhibitions, which radically reduces the effort and expertise needed. This could be further enhanced by using images from the actual exhibition to produce more realistic textures. We currently cannot support the integration of panoramic images because the entire methodology is built on the prerequisite that a 3D virtual environment will be available during the authoring of the virtual exhibition. Finally, on a more philosophical level, the preservation of multiple curations over time could provide insights into how the evolution of societies affects our appreciation and understanding of CH.

Regarding future work, an issue that should be taken into consideration is the long-term preservation of the digital assets that contribute to the implementation of a VM exhibition. To this end, because the semantic interoperability of knowledge is granted through the CIDOC-CRM, we are planning to store digital assets in open-linked data repositories that are supported by the European Commission, such as Zenodo [48]. This has many advantages: the data are safeguarded for long-term preservation, while we are relieved of the burden and responsibility of preserving them ourselves, which also saves institutional resources. At the same time, through Zenodo, the data can be linked back to our VM online. In terms of the technical validation of this research work, we intend to continue validating the proposed methodology in various circumstances in the future, investing time and effort in the implementation of use cases that cover the entire range of possibilities that stem from the theoretical approach. We expect that this process will require us to revisit the methodology and tools to make the needed improvements, and we are confident that the proposed methodology will be further developed as a result.