AUTOGRAF—AUTomated Orthorectification of GRAFfiti Photos

Abstract

1. Introduction

2. Materials and Methods

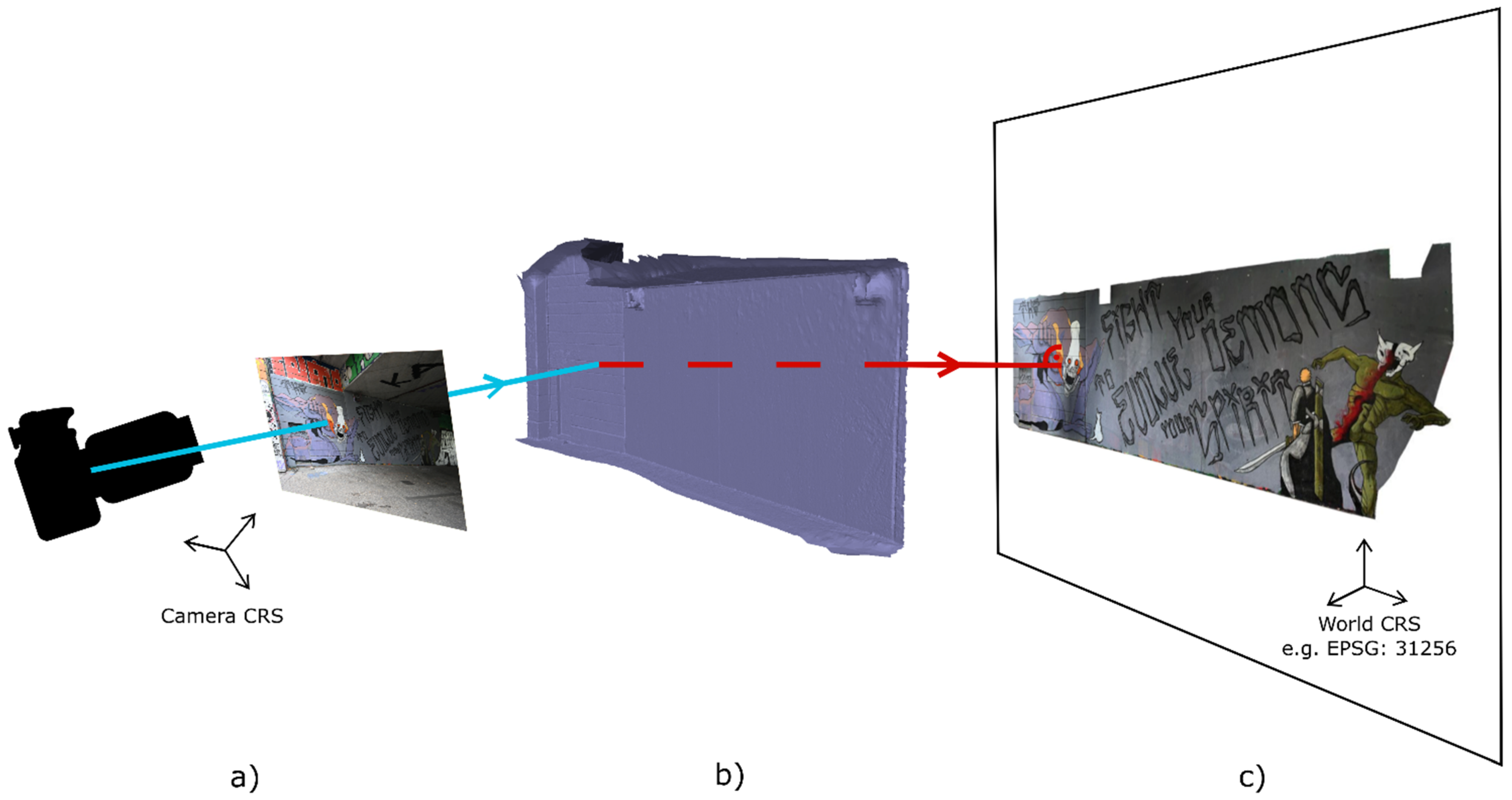

2.1. Photogrammetric Orthophoto Pipeline

- (a) The cameras’ interior and exterior orientation parameters. The exterior orientation describes the camera’s absolute position and rotation at the moment of image acquisition. The interior orientation describes the camera’s internal geometry, including lens distortion parameters;

- (b) A digital, hole-free, continuous 3D model of the surface the graffito was created on (e.g., wall, bridge pillar or staircase);

- (c) A projection plane onto which the texture information from the photo(s) is orthogonally projected, after the image rays have first been intersected with the 3D surface model.
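The following Python sketch makes the interplay of (a)–(c) concrete by mapping a single orthophoto pixel back to a photo. A line through the pixel, orthogonal to the projection plane, is intersected with the triangle mesh using the Möller–Trumbore test. The resulting surface point is then projected into the image with the collinearity equations.

It is a minimal illustration under stated assumptions, not the AUTOGRAF implementation:
- The camera follows the computer-vision convention: world-to-camera rotation `R`, projection centre `C`, viewing along +z.
- Lens distortion is reduced to two radial terms `k1` and `k2`.
- The plane normal `n` is assumed to point towards the viewer.
- Occlusion checks between surface and camera are omitted.
- All function names and the toy wall are hypothetical.

```python
import numpy as np


def ray_triangle(origin, direction, v0, v1, v2, eps=1e-12):
    """Moller-Trumbore test: signed distance t along the ray, or None if no hit."""
    e1, e2 = v1 - v0, v2 - v0
    p = np.cross(direction, e2)
    det = np.dot(e1, p)
    if abs(det) < eps:  # ray (almost) parallel to the triangle
        return None
    inv_det = 1.0 / det
    s = origin - v0
    u = np.dot(s, p) * inv_det
    if u < 0.0 or u > 1.0:
        return None
    q = np.cross(s, e1)
    v = np.dot(direction, q) * inv_det
    if v < 0.0 or u + v > 1.0:
        return None
    return np.dot(e2, q) * inv_det  # may be negative: surface behind the plane


def project(X, R, C, f, cx, cy, k1=0.0, k2=0.0):
    """Collinearity equations: 3D point X -> pixel (x, y).

    Exterior orientation: rotation R (world -> camera) and projection centre C.
    Interior orientation: focal length f and principal point (cx, cy) in pixels,
    plus radial distortion coefficients k1, k2 (simplified Brown model).
    """
    Xc = R @ (np.asarray(X, dtype=float) - C)  # point in the camera frame
    xn, yn = Xc[0] / Xc[2], Xc[1] / Xc[2]      # normalized image coordinates
    r2 = xn * xn + yn * yn
    d = 1.0 + k1 * r2 + k2 * r2 * r2           # radial distortion factor
    return np.array([cx + f * d * xn, cy + f * d * yn])


def ortho_pixel_to_image(origin, e_u, e_v, n, uv, triangles, cam):
    """Map orthophoto coordinates uv (metres on the plane) to a photo position."""
    P = origin + uv[0] * e_u + uv[1] * e_v  # point on the projection plane
    hits = [t for tri in triangles
            if (t := ray_triangle(P, n, *tri)) is not None]
    if not hits:
        return None                 # no surface: orthophoto pixel stays empty
    X = P + max(hits) * n           # visible surface point (n points to viewer)
    return project(X, **cam)


# Usage: a hypothetical 2 m x 2 m wall, 0.1 m in front of the plane z = 0,
# photographed from 5 m; R turns the camera so that it looks along -z.
corners = [np.array(c, dtype=float)
           for c in [(-1, -1, 0.1), (1, -1, 0.1), (1, 1, 0.1), (-1, 1, 0.1)]]
mesh = [(corners[0], corners[1], corners[2]), (corners[0], corners[2], corners[3])]
cam = dict(R=np.diag([1.0, -1.0, -1.0]), C=np.array([0.0, 0.0, 5.0]),
           f=1000.0, cx=500.0, cy=400.0)
origin, e_u, e_v, n = np.zeros(3), np.eye(3)[0], np.eye(3)[1], np.eye(3)[2]
xy = ortho_pixel_to_image(origin, e_u, e_v, n, (0.5, 0.25), mesh, cam)
```

A real orthophoto would repeat this for every pixel of the plane's raster and resample the photo's colours at the returned positions. Pixels for which no surface is found stay empty, which is why (b) asks for a hole-free model.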

- (1) Initial quality and consistency checks of the graffito images;

- (2) Estimation of the camera’s interior and exterior orientation;

- (3) Derivation of a digital 3D model of the graffiti-covered surface, computation of the (ortho-)projection plane (also referred to as reference plane) and creation of the final orthophoto.
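Step (3) requires a projection plane. How AUTOGRAF derives this plane is not reproduced here. One common option, sketched below in Python as an assumption, is a least-squares plane through the mesh vertices obtained from a singular value decomposition (SVD).

Two optional parameters orient the plane (both hypothetical, as are the function names):
- `up` turns the plane's second axis towards the vertical, so that a graffito on a wall appears upright in the orthophoto.
- `towards` (e.g., a camera position) fixes the otherwise arbitrary sign of the normal.

```python
import numpy as np


def fit_projection_plane(vertices, up=(0.0, 0.0, 1.0), towards=None):
    """Least-squares plane through mesh vertices (N x 3).

    Returns the centroid, in-plane unit axes e_u and e_v, and the unit normal n,
    with (e_u, e_v, n) forming a right-handed frame.
    """
    V = np.asarray(vertices, dtype=float)
    centroid = V.mean(axis=0)
    # The right singular vector with the smallest singular value of the
    # centred points is the normal of the best-fitting plane.
    _, _, Vt = np.linalg.svd(V - centroid, full_matrices=False)
    n = Vt[2]
    if towards is not None and np.dot(np.asarray(towards, float) - centroid, n) < 0:
        n = -n                        # let the normal face the viewer/cameras
    e_u = np.cross(np.asarray(up, dtype=float), n)
    if np.linalg.norm(e_u) < 1e-6:    # (near-)horizontal surface: 'up' undefined
        e_u = Vt[0]                   # fall back to the main in-plane direction
    e_u = e_u / np.linalg.norm(e_u)
    e_v = np.cross(n, e_u)            # in-plane direction closest to 'up'
    return centroid, e_u, e_v, n


def to_plane_coords(points, centroid, e_u, e_v, n):
    """Orthogonal projection: in-plane coordinates (u, v) and signed distance d."""
    D = np.asarray(points, dtype=float) - centroid
    return D @ e_u, D @ e_v, D @ n


# Usage: a hypothetical wall at y = 2 m with 5 mm surface roughness,
# photographed from the -y side.
rng = np.random.default_rng(0)
pts = np.column_stack([rng.uniform(0, 4, 500),
                       2.0 + rng.normal(0, 0.005, 500),
                       rng.uniform(0, 3, 500)])
c, e_u, e_v, n = fit_projection_plane(pts, towards=(2.0, -5.0, 1.5))
u, v, d = to_plane_coords(pts, c, e_u, e_v, n)
```

The signed distances `d` show how far the surface departs from the plane; large values flag relief that a simple planar rectification would distort. For meshes with outliers or several distinct surfaces, a robust estimator such as RANSAC could wrap the same fit.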

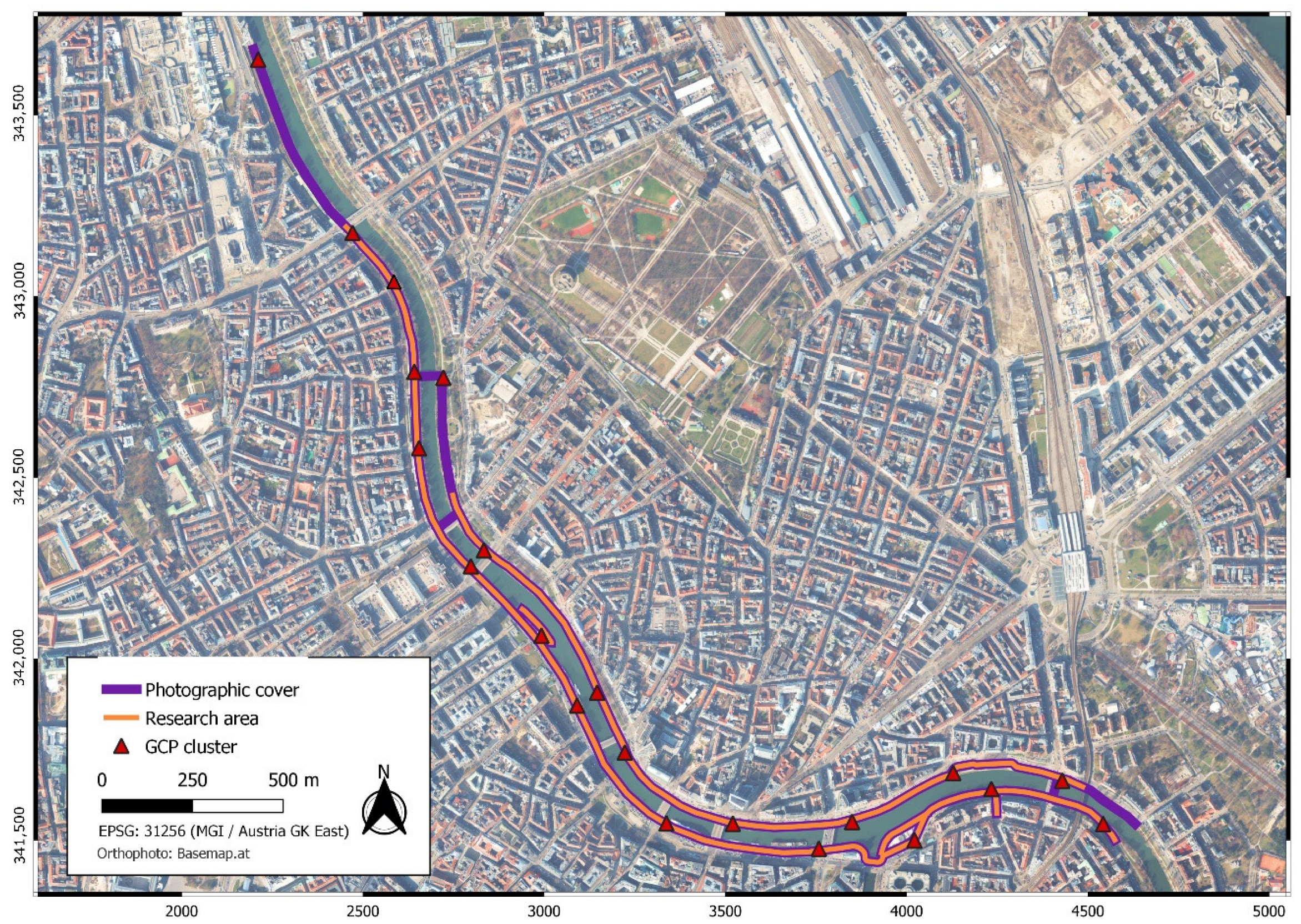

2.1.1. Total Coverage Network

- Camera 1: Nikon D750 (24.2 MP)/Lens 1: Nikon AF-S NIKKOR 85 mm f/1.8 G @ f/5.6 (4609 photos)

- Camera 2: Nikon Z 7II (45.4 MP)/Lens 2: Nikon NIKKOR Z 20 mm f/1.8 S @ f/5.6 (22,097 photos)
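The two camera/lens combinations differ markedly in object-space resolution. As a rough check, the ground sampling distance (GSD) of a photo taken perpendicular to a flat wall is pixel pitch × object distance / focal length.

The Python sketch below rests on two assumptions, neither stated in the text:
- The manufacturers' nominal sensor and image widths: 35.9 mm and 6016 px for the D750, 35.9 mm and 8256 px for the Z 7II.
- A hypothetical object distance of 3 m.

```python
def gsd_mm(sensor_width_mm, image_width_px, focal_length_mm, distance_m):
    """GSD in millimetres for a fronto-parallel surface (pinhole approximation)."""
    pixel_pitch_mm = sensor_width_mm / image_width_px
    return pixel_pitch_mm * distance_m * 1000.0 / focal_length_mm


# ASSUMED nominal sensor specifications (not stated in the text)
setups = {
    "Nikon D750 + 85 mm": (35.9, 6016, 85.0),
    "Nikon Z 7II + 20 mm": (35.9, 8256, 20.0),
}
DISTANCE_M = 3.0  # hypothetical camera-to-wall distance

for name, (width_mm, width_px, f_mm) in setups.items():
    print(f"{name}: {gsd_mm(width_mm, width_px, f_mm, DISTANCE_M):.2f} mm/pixel")
```

At 3 m this gives about 0.21 mm per pixel for the 85 mm lens and about 0.65 mm per pixel for the 20 mm lens. The GSD scales linearly with distance and worsens for oblique views.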

- (1) It documents the graffiti status quo, thus establishing a starting point for monitoring and recording new graffiti;

- (2) It facilitates the generation of a digital, continuous 3D surface model of the whole research area in the form of a triangle-based polymesh. Since INDIGO aims to create an online platform that offers visitors virtual walks along the Donaukanal, this surface model is called the 3D geometric backbone;

- (3) It establishes a dense photo network that can be used for incremental SfM.
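Point (3) relies on new graffito photos being oriented against the existing network. At its geometric core, adding one image is a spatial resection: the camera's projection is estimated from correspondences between its image points and 3D tie points already known from the network.

The Python sketch below uses the linear Direct Linear Transformation (DLT) as a stand-in. It illustrates the idea only and is not the procedure of the SfM software used in the project. Such software additionally relies on robust matching, outlier rejection (e.g., RANSAC) and bundle adjustment, and for noisy data the coordinates should be normalised before the SVD. Function names and the synthetic camera are hypothetical.

```python
import numpy as np


def dlt_resection(X, x):
    """Linear resection (DLT): 3x4 projection matrix P, up to scale, from
    n >= 6 non-coplanar 3D points X (n x 3) and their image points x (n x 2)."""
    X = np.asarray(X, dtype=float)
    x = np.asarray(x, dtype=float)
    rows = []
    for Xw, (u, v) in zip(X, x):
        Xh = np.append(Xw, 1.0)  # homogeneous 3D point
        zero = np.zeros(4)
        # p1 . Xh - u * (p3 . Xh) = 0 and p2 . Xh - v * (p3 . Xh) = 0
        rows.append(np.concatenate([Xh, zero, -u * Xh]))
        rows.append(np.concatenate([zero, Xh, -v * Xh]))
    _, _, Vt = np.linalg.svd(np.array(rows))
    return Vt[-1].reshape(3, 4)  # null-space vector = rows p1, p2, p3 of P


def reproject(P, X):
    """Project 3D points X (n x 3) with P and dehomogenise to pixels (n x 2)."""
    Xh = np.hstack([np.asarray(X, dtype=float), np.ones((len(X), 1))])
    xh = Xh @ P.T
    return xh[:, :2] / xh[:, 2:3]


# Usage with synthetic data: tie points of an existing network seen by a new camera
rng = np.random.default_rng(1)
tie_points = rng.uniform(-1.0, 1.0, size=(12, 3))
K = np.array([[1000.0, 0.0, 500.0], [0.0, 1000.0, 400.0], [0.0, 0.0, 1.0]])
P_true = K @ np.hstack([np.eye(3), [[0.0], [0.0], [5.0]]])
observed = reproject(P_true, tie_points)
P_est = dlt_resection(tie_points, observed)
residuals = reproject(P_est, tie_points) - observed  # ~0 for noise-free data
```

A dense network helps here. The more well-distributed tie points a new photo shares with the existing network, the better conditioned this resection and the subsequent adjustment become.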

2.1.2. Photo Acquisition and Data Management

2.1.3. Initial SfM and Quality Checks

2.1.4. Incremental SfM Approach

2.1.5. Generation of the 3D Model and Computation of a Custom Projection Plane

2.1.6. Orthophoto Creation and Boundary Selection

2.2. Orthorectification Experiment

2.2.1. Experimental Setup

2.2.2. Experiment Evaluation

3. Results

3.1. Initial Local SfM and Incremental SfM

3.2. Derivation of the 3D Surface Model and the Projection Planes

3.3. Quantity and Quality of the Derived Orthophotos

3.4. Feasibility of the Workflow

4. Discussion and Outlook

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Class | Short Explanation | Long Explanation |
|---|---|---|
| 0 | No orthophoto/Orthophoto with significant flaws | A graffito for which no orthophoto could be generated or the orthophoto’s quality is so poor that it cannot be used for a detailed analysis. |
| 1a | Orthophoto with minor flaws (input data-related) | An orthophoto is generated, and the quality is sufficient for an overall inspection. However, small parts of the graffito are cut off, occluded, distorted, underexposed or blurry. The cause is input data-related and cannot be fully resolved by manual intervention during the orthophoto generation process. |
| 1b | Orthophoto with minor flaws (AUTOGRAF-related) | Same as 1a, but the flaws are AUTOGRAF-related. They can thus be largely or entirely remedied by editing the 3D model, manually selecting the input images or other manual interventions. |
| 2 | Orthophoto with no or marginal flaws | The orthophoto exhibits no flaws or only marginal ones that do not hinder the graffito analysis. Manual intervention would not improve the result. |

| Class | Share [%] |
|---|---|
| 0 | 5 (3/2) |
| 1a | 5 |
| 1b | 10 |
| 2 | 80 |

| Setup | CPU | GPU | Storage | RAM |
|---|---|---|---|---|
| A | 2 × AMD EPYC 7302, 3.0 GHz, 16-core | NVIDIA GeForce GTX 1650, 4 GB GDDR5 VRAM, 896 CUDA cores | Seagate Exos E 7E8 8 TB HDD, 6000 MB/s (read/write) | 512 GB DDR4, 2667 MHz |
| B | Intel Core i9-12900KF, 3.2 GHz, 16-core | NVIDIA GeForce RTX 3060, 12 GB GDDR6 VRAM, 3584 CUDA cores | Seagate FireCuda 530 2 TB M.2 SSD, 7300 MB/s read, 6900 MB/s write | 64 GB DDR4, 2200 MHz |

| Task | Setup A: Duration [h:m] | Setup A: Avg. per graffito [m:s] | Setup B: Duration [h:m] | Setup B: Avg. per graffito [m:s] |
|---|---|---|---|---|
| Initial SfM | 1:29 | 0:53 | 0:23 | 0:14 |
| Initial quality checks | 0:01 | 0:01 | 0:01 | 0:01 |
| Incremental SfM | 5:41 | 3:25 | 1:28 | 0:53 |
| Data preparation | 1:54 | 1:08 | 0:42 | 0:25 |
| Orthophoto creation | 12:35 | 7:33 | 6:49 | 4:05 |
| Time without manual intervention | 21:40 | 13:00 | 9:23 | 5:38 |
| Manual preparatory tasks | 1:10 | 0:42 | 1:10 | 0:42 |
| Total | 22:50 | 13:42 | 10:33 | 6:20 |
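The totals in the timing table can be reproduced from its individual rows. The Python sketch below does three things:
- sums the automated task durations per setup;
- adds the identical manual share;
- derives the speed-up of Setup B.

It assumes N = 100 processed graffiti. This number is inferred rather than stated: it is the value that reproduces every per-graffito average in the table (e.g., 21:40 h / 100 = 13:00 min). The helper names are hypothetical.

```python
# Durations [h:m] per task from the table: (Setup A, Setup B)
AUTOMATED = {
    "Initial SfM": ("1:29", "0:23"),
    "Initial quality checks": ("0:01", "0:01"),
    "Incremental SfM": ("5:41", "1:28"),
    "Data preparation": ("1:54", "0:42"),
    "Orthophoto creation": ("12:35", "6:49"),
}
MANUAL = "1:10"   # manual preparatory tasks, identical for both setups
N_GRAFFITI = 100  # ASSUMPTION: inferred from the per-graffito column, not stated


def minutes(hm):
    """'h:m' -> minutes."""
    h, m = hm.split(":")
    return int(h) * 60 + int(m)


def fmt_hm(total_min):
    """Minutes -> 'h:mm'."""
    return f"{total_min // 60}:{total_min % 60:02d}"


def per_graffito(total_min, n=N_GRAFFITI):
    """Average per graffito, rounded to whole seconds -> 'm:ss'."""
    s = round(total_min * 60 / n)
    return f"{s // 60}:{s % 60:02d}"


def summarise(col):
    """Sum the automated tasks of one setup (0 = A, 1 = B) and add manual work."""
    auto = sum(minutes(row[col]) for row in AUTOMATED.values())
    return auto, auto + minutes(MANUAL)


(auto_a, total_a), (auto_b, total_b) = summarise(0), summarise(1)
print(fmt_hm(auto_a), per_graffito(auto_a), fmt_hm(total_a), per_graffito(total_a))
print(fmt_hm(auto_b), per_graffito(auto_b), fmt_hm(total_b), per_graffito(total_b))
print(f"Speed-up of Setup B: {total_a / total_b:.2f} (total), "
      f"{auto_a / auto_b:.2f} (automated)")
```

The sums match the table: 21:40 h and 22:50 h for Setup A, and 9:23 h and 10:33 h for Setup B. Setup B is therefore about 2.16 times faster overall and about 2.31 times faster for the automated part alone, because the fixed manual share does not benefit from the faster hardware.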

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wild, B.; Verhoeven, G.J.; Wieser, M.; Ressl, C.; Schlegel, J.; Wogrin, S.; Otepka-Schremmer, J.; Pfeifer, N. AUTOGRAF—AUTomated Orthorectification of GRAFfiti Photos. Heritage 2022, 5, 2987-3009. https://doi.org/10.3390/heritage5040155
