Super-Resolution Techniques in Photogrammetric 3D Reconstruction from Close-Range UAV Imagery

Abstract

1. Introduction

2. Literature Review of 3D Reconstruction with the Aid of Super-Resolution

3. Materials and Methods

3.1. Low-Altitude Image Data

3.2. Deep-Learning Based Super-Resolution Techniques

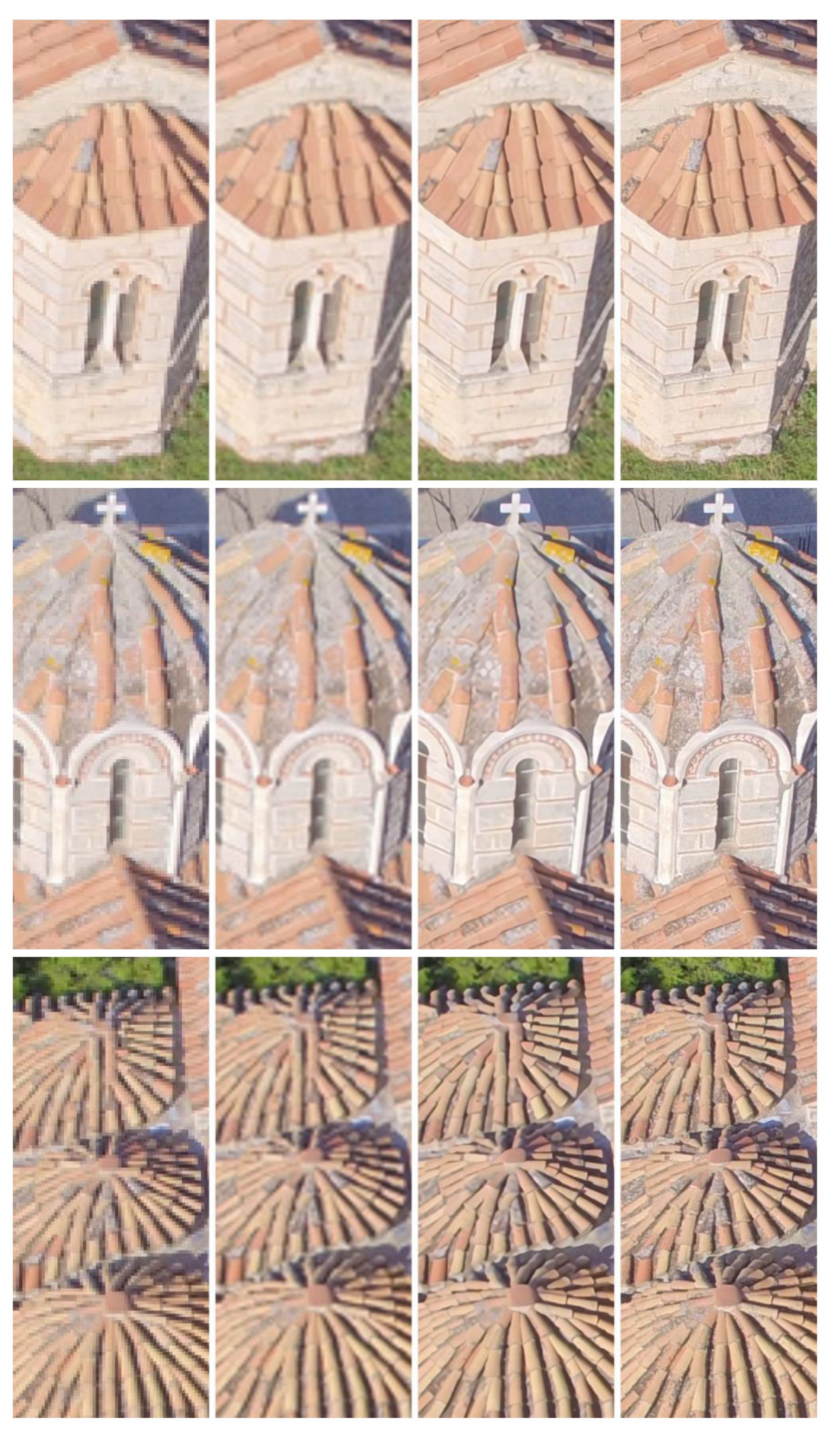

- RankSRGAN: This SR technique [23] was employed to enhance image resolution by a factor of 4. The RankSRGAN framework has three stages. In stage 1, several SR techniques super-resolve the images of public SR datasets; the resulting images are then ranked pair-wise according to the score of a chosen perceptual metric, and the corresponding ranking labels are retained. In stage 2, a Ranker is trained. The learned Ranker has a Siamese architecture and should be able to rank images according to their perceptual scores. In stage 3, the trained Ranker defines a rank-content loss with which a typical SRGAN is trained to generate visually “pleasing” images.

- Densely Residual Laplacian Super-Resolution (DRLN): This SR technique [19] was also used in this study for SR by a factor of 4. It relies on a modular convolutional neural network that employs several performance-boosting components. The cascading residual-on-the-residual architecture facilitates the flow of low-frequency information so that the network can learn high- and mid-level information. Densely linked residual blocks reuse the previously computed features, which results in “deep supervision” and learning from high-level complex features. Another significant characteristic of DRLN is Laplacian attention, which models the crucial features on multiple scales and captures the inter- and intra-level dependencies between the feature maps.

- Hybrid Attention Transformer Super-Resolution (HAT): The images were again super-resolved by a factor of 4 with this SR technique [24]. HAT is motivated by the observation that transformer networks benefit greatly from the self-attention mechanism and can exploit long-range information. Shallow and deep feature extraction precede the SR reconstruction stage. HAT combines channel attention and self-attention schemes with an overlapping cross-attention module, aiming to activate many more pixels for the reconstruction of HR images.
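To make the ranking idea behind RankSRGAN more concrete, the following is a minimal sketch of a generic pairwise margin ranking loss of the kind commonly used to train Siamese rankers, together with a sigmoid-based rank-content term. Both function names, the `margin` value and the convention that a lower Ranker score means better perceptual quality are assumptions made for illustration; the exact formulation used in [23] is not given in this text.

```python
import math


def margin_ranking_loss(score_a, score_b, a_ranks_higher, margin=0.5):
    """Hinge-style pairwise loss for one image pair (hypothetical helper).

    The loss is zero once the score of the image that should rank higher
    exceeds the other score by at least `margin`; otherwise it grows
    linearly with the size of the violation.
    """
    sign = 1.0 if a_ranks_higher else -1.0
    return max(0.0, margin - sign * (score_a - score_b))


def rank_content_loss(ranker_score):
    """Sigmoid of the Ranker output for a generated image (assumed form).

    Under the assumed convention that lower Ranker scores indicate better
    perceptual quality, minimizing this value pushes the generator
    towards images that the Ranker rates as better.
    """
    return 1.0 / (1.0 + math.exp(-ranker_score))


# A correctly ordered pair with a sufficient gap incurs no loss,
# while a wrongly ordered pair is penalized.
print(margin_ranking_loss(2.0, 1.0, a_ranks_higher=True))
print(margin_ranking_loss(1.0, 2.0, a_ranks_higher=True))
```

In a real training loop these scalar functions would be replaced by their batched, differentiable counterparts in a deep-learning framework; the sketch only shows the arithmetic.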

3.3. SfM/MVS Tool

3.4. Methodology for 3D Model Reconstruction

- (a) one model from the original 3000 × 4000 high-resolution images (HR)
- (b) one model from the downsampled 750 × 1000 low-resolution images (LR)
- (c) one model from the bicubically upsampled 3000 × 4000 low-resolution images (BU)
- (d) three models from the super-resolved 3000 × 4000 low-resolution images (SR).
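The image sizes listed above follow from a single scale factor of 4. The short sketch below reproduces that bookkeeping; the function name `variant_sizes` and the dictionary layout are hypothetical, and the order of the two dimensions simply mirrors the "3000 × 4000" notation of the text.

```python
def variant_sizes(hr_size, factor=4):
    """Return the image size of each dataset variant (hypothetical helper).

    hr_size is the size of the original images, written in the same order
    as in the text; factor is the down/upsampling factor.
    """
    first, second = hr_size
    if first % factor or second % factor:
        raise ValueError("HR size must be divisible by the scale factor")
    lr_size = (first // factor, second // factor)
    return {
        "HR": hr_size,   # original images
        "LR": lr_size,   # downsampled images
        "BU": hr_size,   # bicubically upsampled LR images
        "SR": hr_size,   # super-resolved LR images
    }


sizes = variant_sizes((3000, 4000))
# Downsampling by 4 in each dimension leaves 1/16 of the pixels.
pixel_ratio = (sizes["HR"][0] * sizes["HR"][1]) // (sizes["LR"][0] * sizes["LR"][1])
# One HR, one LR and one BU model plus three SR models.
number_of_models = 1 + 1 + 1 + 3
print(sizes["LR"], pixel_ratio, number_of_models)
```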

3.5. Criteria for the Comparison of Point Clouds

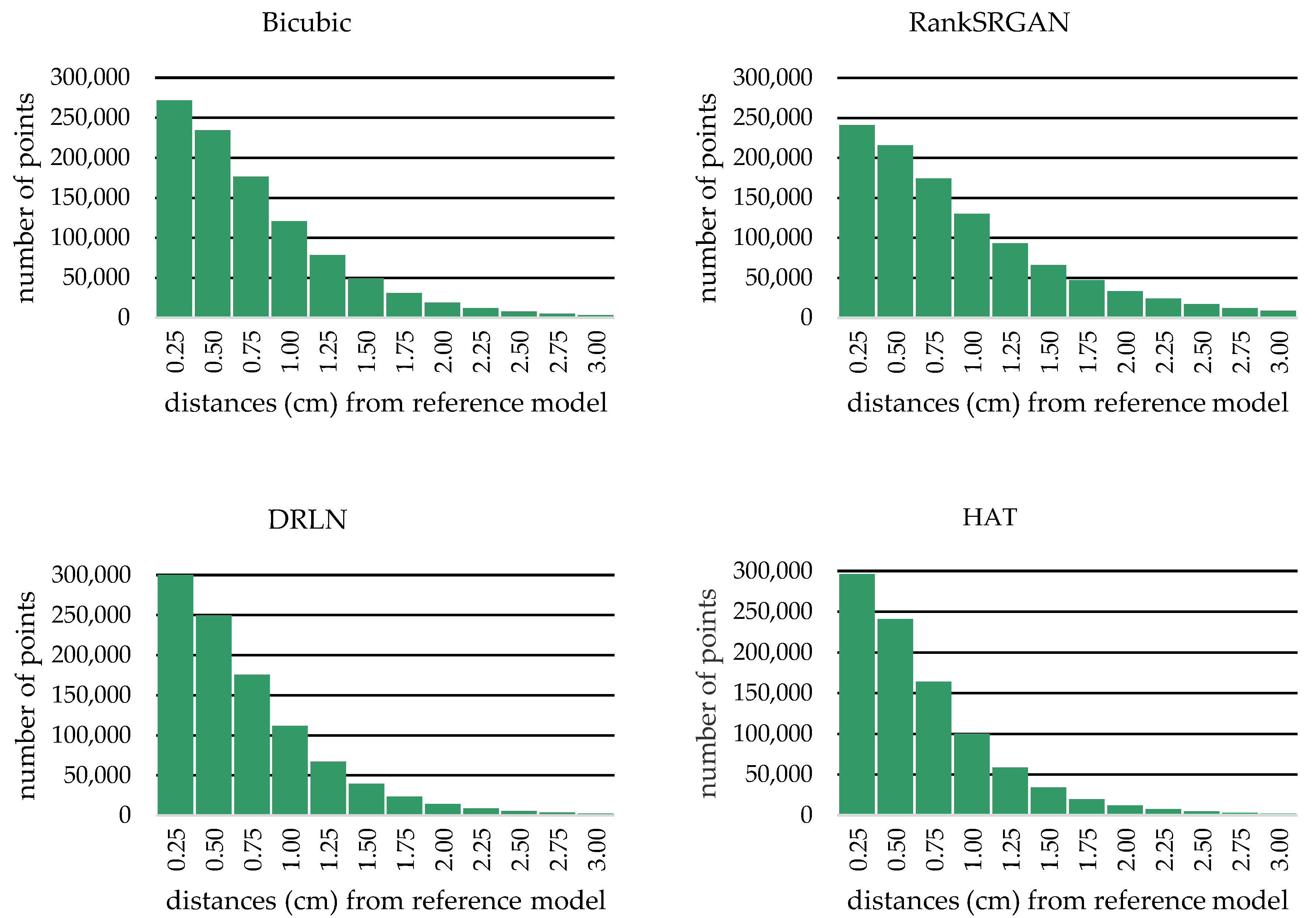

3.6. Comparison of Point Clouds

4. Results

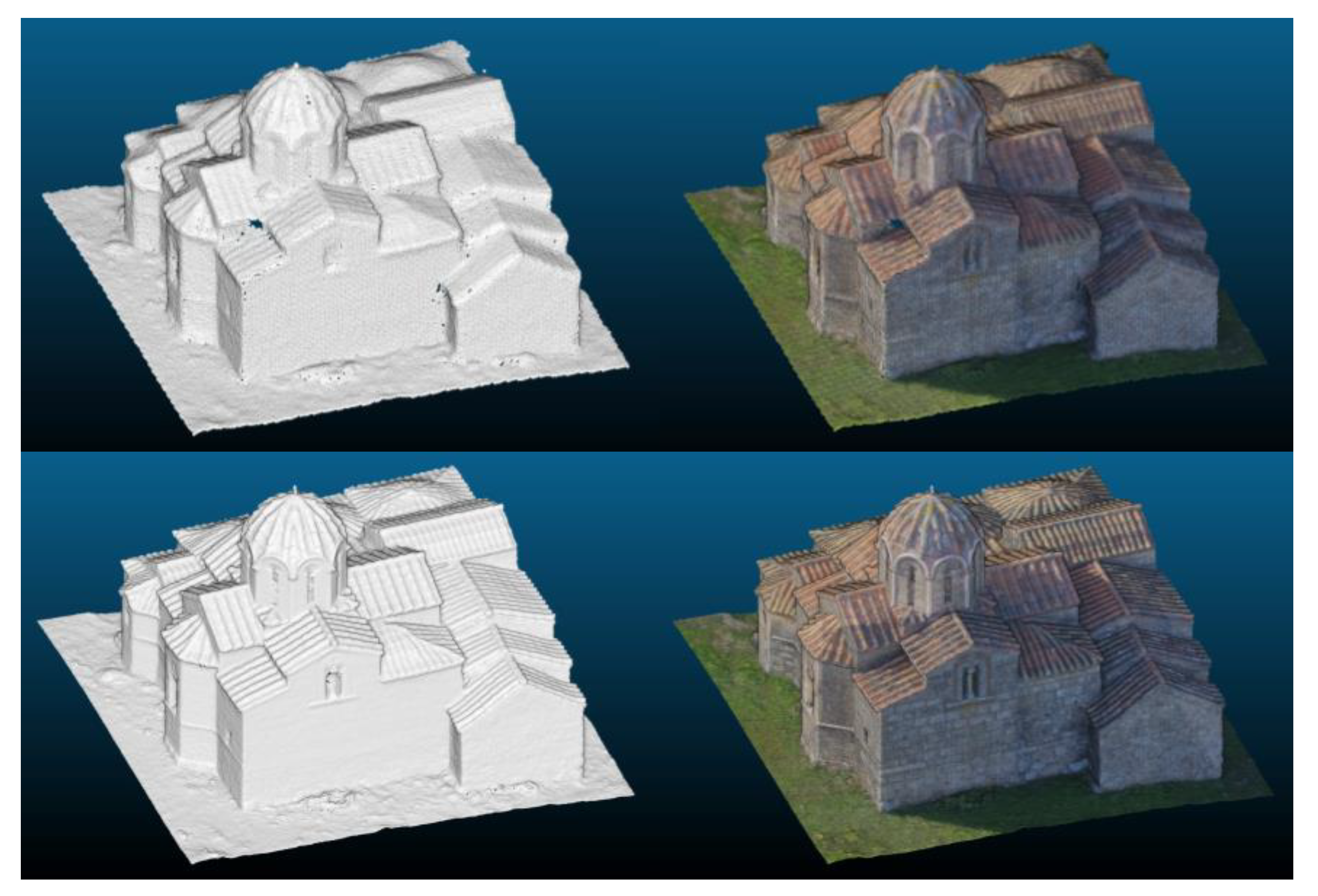

4.1. Full 3D Model

4.2. Model Segments

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Szeliski, R. Computer Vision: Algorithms and Applications, 2nd ed.; University of Washington: Seattle, WA, USA, 2022; Available online: https://szeliski.org/Book (accessed on 26 September 2022).

- Luhmann, T.; Robson, S.; Kyle, S.; Harley, I. Close Range Photogrammetry. Principles, Techniques and Applications; Whittles Publishing: Dunbeath, UK, 2011.

- James, M.R.; Chandler, J.H.; Eltner, A.; Fraser, C.; Miller, P.E.; Mills, J.P.; Noble, T.; Robson, S.; Lane, S.N. Guidelines on the Use of Structure-from-Motion Photogrammetry in Geomorphic Research. Earth Surf. Process. Landf. 2019, 44, 2081–2084.

- Gerke, M. Developments in UAV-Photogrammetry. J. Digit. Landsc. Archit. 2018, 3, 262–272.

- Berra, E.F.; Peppa, M.V. Advances and Challenges of UAV SfM MVS Photogrammetry and Remote Sensing: Short Review. In The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Proceedings of the IEEE Latin American GRSS & ISPRS Remote Sensing Conference (LAGIRS 2020), Santiago, Chile, 22–26 March 2020; IEEE: Piscataway, NJ, USA, 2020; Volume XLII-3/W12, pp. 267–272.

- Campana, S. Drones in Archaeology. State-of-the-art and Future Perspectives. Archaeol. Prospec. 2017, 24, 275–296.

- Pepe, M.; Alfio, V.S.; Costantino, D. UAV Platforms and the SfM-MVS Approach in the 3D Surveys and Modelling: A Review in the Cultural Heritage Field. Appl. Sci. 2022, 12, 12886.

- Ran, Q.; Xu, X.; Zhao, S.; Li, W.; Du, Q. Remote sensing images super-resolution with deep convolution networks. Multimed. Tools Appl. 2020, 79, 8985–9001.

- Shermeyer, J.; Van Etten, A. The effects of super-resolution on object detection performance in satellite imagery. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–17 June 2019.

- Panagiotopoulou, A.; Bratsolis, E.; Grammatikopoulos, L.; Petsa, E.; Charou, E.; Poirazidis, K.; Martinis, A.; Madamopoulos, N. Sentinel-2 images at 2.5 m spatial resolution via deep learning: A case study in Zakynthos. In Proceedings of the 2022 IEEE 14th Image, Video, and Multidimensional Signal Processing Workshop (IVMSP 2022), Nafplio, Greece, 26–29 June 2022.

- Lomurno, E.; Romanoni, A.; Matteucci, M. Improving multi-view stereo via super-resolution. In Image Analysis and Processing—Proceedings of the 21st International Conference, Lecce, Italy, 23–27 May 2022; Sclaroff, S., Distante, C., Leo, M., Farinella, G.M., Tombari, F., Eds.; Lecture Notes in Computer Science 13232; Springer: Cham, Switzerland, 2022.

- Burdziakowski, P. Increasing the geometrical and interpretation quality of unmanned aerial vehicle photogrammetry products using super-resolution algorithms. Remote Sens. 2020, 12, 810.

- Pashaei, M.; Starek, M.J.; Kamangir, H.; Berryhill, J. Deep learning-based single image super-resolution: An investigation for dense scene reconstruction with UAS photogrammetry. Remote Sens. 2020, 12, 1757.

- Zhang, Y.; Zheng, Z.; Luo, Y.; Zhang, Y.; Wu, J.; Peng, Z. A CNN-based subpixel level DSM generation approach via single image super-resolution. Photogramm. Eng. Remote Sens. 2019, 85, 765–775.

- Zhu, X.X.; Tuia, D.; Mou, L.; Xia, G.-S.; Zhang, L.; Xu, F. Deep learning in remote sensing: A comprehensive review and list of resources. IEEE Trans. Geosci. Remote Sens. 2017, 5, 8–36.

- Wenming, Y.; Zhang, X.; Tian, Y.; Wang, W.; Xue, J.-H.; Liao, Q. Deep learning for single image SR: A brief overview. IEEE Trans. Multimed. 2019, 99, 3106–3121.

- Shamsolmoali, P.; Emre Celebi, M.; Wang, R. Deep learning approaches for real-time image super-resolution. Neural Comput. Appl. 2020, 32, 14519–14520.

- Panagiotopoulou, A.; Grammatikopoulos, L.; Kalousi, G.; Charou, E. Sentinel-2 and SPOT-7 images in machine learning frameworks for super-resolution. In Pattern Recognition, ICPR International Workshops and Challenges, Virtual Event, 10–15 January 2021; Lecture Notes in Computer Science 12667; Del Bimbo, A., Cucchiara, R., Sclaroff, S., Farinella, G.M., Mei, T., Bertini, M., Escalante, H.J., Vezzani, R., Eds.; Springer: Cham, Switzerland, 2021.

- Anwar, S.; Barnes, N. Densely residual Laplacian super-resolution. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 1192–1204.

- Dong, R.; Zhang, L.; Fu, H. RRSGAN: Reference-based super-resolution for remote sensing image. IEEE Trans. Geosci. Remote. Sens. 2022, 60, 5601117.

- Islam, M.J.; SakibEnan, S.; Luo, P.; Sattar, J. Underwater image super-resolution using deep residual multipliers. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 15 September 2020.

- Niu, B.; Wen, W.; Ren, W.; Zhang, X.; Yang, L.; Wang, S.; Zhang, K.; Cao, X.; Shen, H. Single image super-resolution via a holistic attention network. In Computer Vision—16th European Conference (ECCV 2020), Glasgow, UK, 23–28 August 2020; Lecture Notes in Computer Science 12357; Vedaldi, A., Bischof, H., Brox, T., Frahm, J.M., Eds.; Springer: Cham, Switzerland, 2020.

- Zhang, W.; Liu, Y.; Dong, C.; Qiao, Y. RankSRGAN: Generative adversarial networks with ranker for image super-resolution. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 February 2020.

- Chen, X.; Wang, X.; Zhou, J.; Dong, C. Activating more pixels in image super-resolution transformer. arXiv 2022, arXiv:2205.04437v2.

- Imperatore, N.; Dumas, L. Contribution of super resolution to 3D reconstruction from pairs of satellite images. In ISPRS Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences: Proceedings of the XXIV ISPRS Congress (2022 edition), Nice, France, 6–11 June 2022; International Society for Photogrammetry and Remote Sensing (ISPRS): Hannover, Germany, 2022; Volume V-2-2022, pp. 61–68.

- Agisoft Metashape. Available online: https://www.agisoft.com (accessed on 20 November 2022).

- Inzerillo, L.; Acuto, F.; Di Mino, G.; Uddin, M.Z. Super-resolution images methodology applied to UAV datasets to road pavement monitoring. Drones 2022, 6, 171.

- Inzerillo, L. Super-resolution images on mobile smartphone aimed at 3D modeling. In The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences: Proceedings of the 9th International Workshop 3D-ARCH “3D Virtual Reconstruction and Visualization of Complex Architectures”, Mantua, Italy, 2–4 March 2022; Politecnico di Milano: Milan, Italy, 2022; Volume XLVI-2/W1-2022, pp. 259–266.

- Zhang, Y.; Tian, Y.; Kong, Y.; Zhong, B.; Fu, Y. Residual dense network for image super-resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2018), Salt Lake City, UT, USA, 18–23 June 2018; pp. 2472–2481.

- Wang, X.; Yu, K.; Wu, S.; Gu, J.; Liu, Y.; Dong, C.; Qiao, Y.; Change Loy, C. Esrgan: Enhanced super-resolution generative adversarial networks. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 63–79.

- Ledig, C.; Theis, L.; Huszár, F.; Caballero, J.; Cunningham, A.; Acosta, A.; Aitken, A.; Tejani, A.; Totz, J.; Wang, Z.; et al. Photo-realistic single image super-resolution using a generative adversarial network. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2017), Honolulu, HI, USA, 21–26 July 2017; pp. 4681–4690.

- Schönberger, J.L.; Zheng, E.; Frahm, J.-M.; Pollefeys, M. Pixel-wise view selection for unstructured multi-view stereo. In European Conference on Computer Vision (ECCV 2016), Amsterdam, The Netherlands, 11–14 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 501–518.

- Yao, Y.; Luo, Z.; Li, S.; Fang, T.; Quan, L. MVSNet: Depth inference for unstructured multi-view stereo. In Proceedings of the European Conference on Computer Vision (ECCV 2018), Munich, Germany, 8–14 September 2018; Lecture Notes in Computer Science 11212; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Springer: Cham, Switzerland, 2018.

- Gu, X.; Fan, Z.; Zhu, S.; Dai, Z.; Tan, F.; Tan, P. Cascade cost volume for high-resolution multi-view stereo and stereo matching. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Online/Seattle, WA, USA, 14–19 June 2020.

- Haris, M.; Shakhnarovich, G.; Ukita, N. Deep back-projection networks for super resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.

- Li, J.; Gao, W.; Wu, Y. High-quality 3D reconstruction with depth super-resolution and completion. IEEE Access 2019, 7, 19370–19381.

- Li, J.; Gao, W.; Wu, Y.; Liu, Y.; Shen, Y. High-quality indoor scene 3D reconstruction with RGB-D cameras: A brief review. Comput. Vis. Media 2022, 8, 369–393.

- Kaggle: Your Machine Learning and Data Science Community. Available online: https://www.kaggle.com/ (accessed on 20 November 2022).

- Schonberger, J.L.; Frahm, J.M. Structure-from-Motion Revisited. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 4104–4113.

- Griwodz, C.; Gasparini, S.; Calvet, L.; Gurdjos, P.; Castan, F.; Maujean, B.; De Lillo, G.; Lanthony, Y. AliceVision Meshroom: An Open-Source 3D Reconstruction Pipeline. In Proceedings of the 12th ACM Multimedia Systems Conference, Istanbul, Turkey, 28 September–1 October 2021; pp. 241–247.

- Bentley: ContextCapture. Available online: https://www.bentley.com/software/contextcapture/ (accessed on 19 February 2023).

- CapturingReality: RealityCapture. Available online: https://www.capturingreality.com/ (accessed on 19 February 2023).

- Pix4D: Pix4DMapper. Available online: https://www.pix4d.com/ (accessed on 19 February 2023).

- Cheng, L.; Chen, S.; Liu, X.; Xu, H.; Wu, Y.; Li, M.; Chen, Y. Registration of Laser Scanning Point Clouds: A Review. Sensors 2018, 18, 1641.

- Li, L.; Wang, R.; Zhang, X. A Tutorial Review on Point Cloud Registrations: Principle, Classification, Comparison, and Technology Challenges. Hindawi Math. Probl. Eng. 2021, 2021, 9953910.

- Huang, X.; Mei, G.; Zhang, J.; Abbas, R. A Comprehensive Survey on Point Cloud Registration. arXiv 2021, arXiv:2103.02690v2.

- Si, H.; Qiu, J.; Li, Y. A Review of Point Cloud Registration Algorithms for Laser Scanners: Applications in Large-Scale Aircraft Measurement. Appl. Sci. 2022, 12, 10247.

- Brightman, N.; Fan, L.; Zhao, Y. Point Cloud Registration: A Mini-Review of Current State, Challenging Issues and Future Directions. AIMS Geosci. 2023, 9, 68–85.

- Xu, N.; Qin, R.; Song, S. Point Cloud Registration for LiDAR and Photogrammetric Data: A Critical Synthesis and Performance Analysis on Classic and Deep Learning Algorithms. ISPRS Open J. Photogramm. Remote Sens. 2023, 8, 100032.

- Girardeau-Montaut, D.; Roux, M.; Marc, R.; Thibault, G. Change detection on points cloud data acquired with a ground laser scanner. In Proceedings of the ISPRS Workshop, Laser Scanning 2005, Enschede, The Netherlands, 12–14 September 2005.

- Cignoni, P.; Rocchini, C.; Scopigno, R. Metro: Measuring error on simplified surfaces. Comput. Graph. Forum 1998, 17, 167–174.

- Ahmad, F.N.; Yusoff, A.R.; Ismail, Z.; Majid, Z. Comparing the Performance of Point Cloud Registration Methods for Landslide Monitoring Using Mobile Laser Scanning Data. In The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences: International Conference on Geomatics and Geospatial Technology (GGT 2018), Kuala Lumpur, Malaysia, 3–5 September 2018; International Society for Photogrammetry and Remote Sensing (ISPRS): Hannover, Germany, 2018; Volume XLII-4/W9, pp. 11–21.

- Fretes, H.; Gomez-Redondo, M.; Paiva, E.; Rodas, J.; Gregor, R. A Review of Existing Evaluation Methods for Point Clouds Quality. In Proceedings of the International Workshop on Research, Education and Development on Unmanned Aerial Systems (RED-UAS 2019), Cranfield, UK, 25–27 November 2019; pp. 247–252.

- Helmholz, P.; Belton, D.; Oliver, N.; Hollick, J.; Woods, A. The Influence of the Point Cloud Comparison Methods on the Verification of Point Clouds Using the Batavia Reconstruction as a Case Study. In Proceedings of the 6th International Congress for Underwater Archaeology, Fremantle, WA, Australia, 28 November–2 December 2020; pp. 370–381.

- CloudCompare: 3D Point Cloud and Mesh Processing Software. Available online: https://www.cloudcompare.org/ (accessed on 20 November 2022).

- Knapitsch, A.; Park, J.; Zhou, Q.-Y.; Koltun, V. Tanks and temples: Benchmarking large-scale scene reconstruction. ACM Trans. Graph. (ToG) 2017, 36, 78.

- Schöps, T.; Schönberger, J.L.; Galliani, S.; Sattler, T.; Schindler, K.; Pollefeys, M.; Geiger, A. A multi-view stereo benchmark with high-resolution images and multi-camera videos. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2538–2547.

| 3D Model | Number of Vertices |
|---|---|
| HR (reference) | 907,917 |
| LR | 87,844 |
| BU | 1,020,456 |
| SR RankSRGAN | 1,091,278 |
| SR DRLN | 1,012,209 |
| SR HAT | 951,992 |

| Point Cloud | p (t = 0.5 cm) | r (t = 0.5 cm) | F (t = 0.5 cm) | p (t = 1.0 cm) | r (t = 1.0 cm) | F (t = 1.0 cm) | p (t = 2.0 cm) | r (t = 2.0 cm) | F (t = 2.0 cm) | p (t = 3.0 cm) | r (t = 3.0 cm) | F (t = 3.0 cm) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| LR from Agisoft | 15.52 | 13.79 | 14.61 | 30.40 | 27.13 | 28.67 | 57.06 | 51.49 | 54.13 | 77.76 | 72.09 | 74.81 |
| BU | 49.62 | 46.62 | 48.08 | 78.74 | 75.28 | 76.97 | 96.17 | 94.89 | 95.52 | 98.99 | 98.56 | 98.77 |
| RankSRGAN | 41.84 | 40.08 | 40.94 | 69.71 | 67.26 | 68.46 | 91.71 | 90.24 | 90.97 | 97.47 | 96.87 | 97.17 |
| DRLN | 54.38 | 53.04 | 53.70 | 82.80 | 81.35 | 82.07 | 97.12 | 96.85 | 96.98 | 99.19 | 99.18 | 99.18 |
| HAT | 56.16 | 55.10 | 55.62 | 84.25 | 82.94 | 83.59 | 97.35 | 97.17 | 97.26 | 99.22 | 99.27 | 99.24 |

| Point Cloud | p (t = 0.5 cm) | r (t = 0.5 cm) | F (t = 0.5 cm) | p (t = 1 cm) | r (t = 1 cm) | F (t = 1 cm) | p (t = 2 cm) | r (t = 2 cm) | F (t = 2 cm) | p (t = 3 cm) | r (t = 3 cm) | F (t = 3 cm) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| BU | 44.88 | 41.92 | 43.35 | 75.25 | 71.03 | 73.08 | 95.72 | 94.03 | 94.87 | 98.95 | 98.40 | 98.67 |
| RankSRGAN | 39.72 | 36.82 | 38.21 | 67.74 | 63.79 | 65.71 | 91.04 | 88.92 | 89.97 | 97.33 | 96.62 | 96.97 |
| DRLN | 54.43 | 52.56 | 53.48 | 83.16 | 80.98 | 82.05 | 97.23 | 96.77 | 97.00 | 99.23 | 99.16 | 99.19 |
| HAT | 55.89 | 54.68 | 55.28 | 84.56 | 82.71 | 83.62 | 97.59 | 97.18 | 97.38 | 99.28 | 99.28 | 99.28 |

| Point Cloud | p (t = 0.5 cm) | r (t = 0.5 cm) | F (t = 0.5 cm) | p (t = 1 cm) | r (t = 1 cm) | F (t = 1 cm) | p (t = 2 cm) | r (t = 2 cm) | F (t = 2 cm) | p (t = 3 cm) | r (t = 3 cm) | F (t = 3 cm) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| BU | 50.83 | 47.37 | 49.04 | 80.38 | 75.93 | 78.09 | 96.32 | 95.09 | 95.70 | 99.05 | 98.78 | 98.91 |
| RankSRGAN | 40.11 | 38.10 | 39.08 | 68.15 | 65.36 | 66.73 | 90.91 | 89.29 | 90.09 | 97.17 | 96.74 | 96.95 |
| DRLN | 52.85 | 44.88 | 48.54 | 82.14 | 75.76 | 78.82 | 97.04 | 96.08 | 96.56 | 99.21 | 99.11 | 99.16 |
| HAT | 54.09 | 52.63 | 53.35 | 83.09 | 81.50 | 82.29 | 97.14 | 96.96 | 97.05 | 99.22 | 99.28 | 99.25 |

| Point Cloud | p (t = 0.5 cm) | r (t = 0.5 cm) | F (t = 0.5 cm) | p (t = 1 cm) | r (t = 1 cm) | F (t = 1 cm) | p (t = 2 cm) | r (t = 2 cm) | F (t = 2 cm) | p (t = 3 cm) | r (t = 3 cm) | F (t = 3 cm) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| BU | 43.19 | 47.62 | 45.30 | 74.27 | 77.70 | 75.95 | 95.77 | 96.36 | 96.06 | 98.97 | 99.18 | 99.07 |
| RankSRGAN | 38.62 | 40.63 | 39.60 | 66.90 | 68.91 | 67.89 | 91.46 | 91.85 | 91.65 | 97.57 | 97.75 | 97.66 |
| DRLN | 42.27 | 53.96 | 47.40 | 72.30 | 82.94 | 77.25 | 95.12 | 97.51 | 96.30 | 98.89 | 99.45 | 99.17 |
| HAT | 48.40 | 54.94 | 51.46 | 78.68 | 84.15 | 81.32 | 96.62 | 97.78 | 97.20 | 99.13 | 99.44 | 99.28 |
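The p, r and F columns in the tables above appear to be precision, recall and F-score at a distance threshold t, in the spirit of point-cloud benchmarks such as Tanks and Temples; this reading, and the assumption that the values are percentages, are not stated explicitly in the surviving text. Under that assumption, a minimal brute-force sketch of the three measures could look as follows. The function names are hypothetical, and a real evaluation of clouds with about a million points would need a spatial index (for example a k-d tree) instead of the exhaustive search used here.

```python
import math


def nearest_distance(point, cloud):
    """Distance from `point` to its closest neighbour in `cloud`."""
    return min(math.dist(point, other) for other in cloud)


def precision_recall_f(reconstructed, reference, t):
    """Precision, recall and F-score (in percent) at threshold t.

    precision: share of reconstructed points lying within t of the reference
    recall:    share of reference points lying within t of the reconstruction
    F-score:   harmonic mean of precision and recall
    """
    p = 100.0 * sum(nearest_distance(x, reference) <= t
                    for x in reconstructed) / len(reconstructed)
    r = 100.0 * sum(nearest_distance(y, reconstructed) <= t
                    for y in reference) / len(reference)
    f = 2.0 * p * r / (p + r) if (p + r) > 0 else 0.0
    return p, r, f


# Toy clouds (arbitrary units): one of two reconstructed points and
# one of three reference points have a neighbour within t = 0.01.
reference = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
reconstructed = [(0.0, 0.0, 0.004), (1.0, 0.0, 0.02)]
p, r, f = precision_recall_f(reconstructed, reference, t=0.01)
print(round(p, 2), round(r, 2), round(f, 2))
```

The harmonic-mean relation is consistent with the tabulated values up to rounding: for example, p = 52.85 and r = 44.88 give F ≈ 48.54.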

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Panagiotopoulou, A.; Grammatikopoulos, L.; El Saer, A.; Petsa, E.; Charou, E.; Ragia, L.; Karras, G. Super-Resolution Techniques in Photogrammetric 3D Reconstruction from Close-Range UAV Imagery. Heritage 2023, 6, 2701-2715. https://doi.org/10.3390/heritage6030143
