Generative Artificial Intelligence, Human Agency and the Future of Cultural Heritage

Abstract

1. Introduction

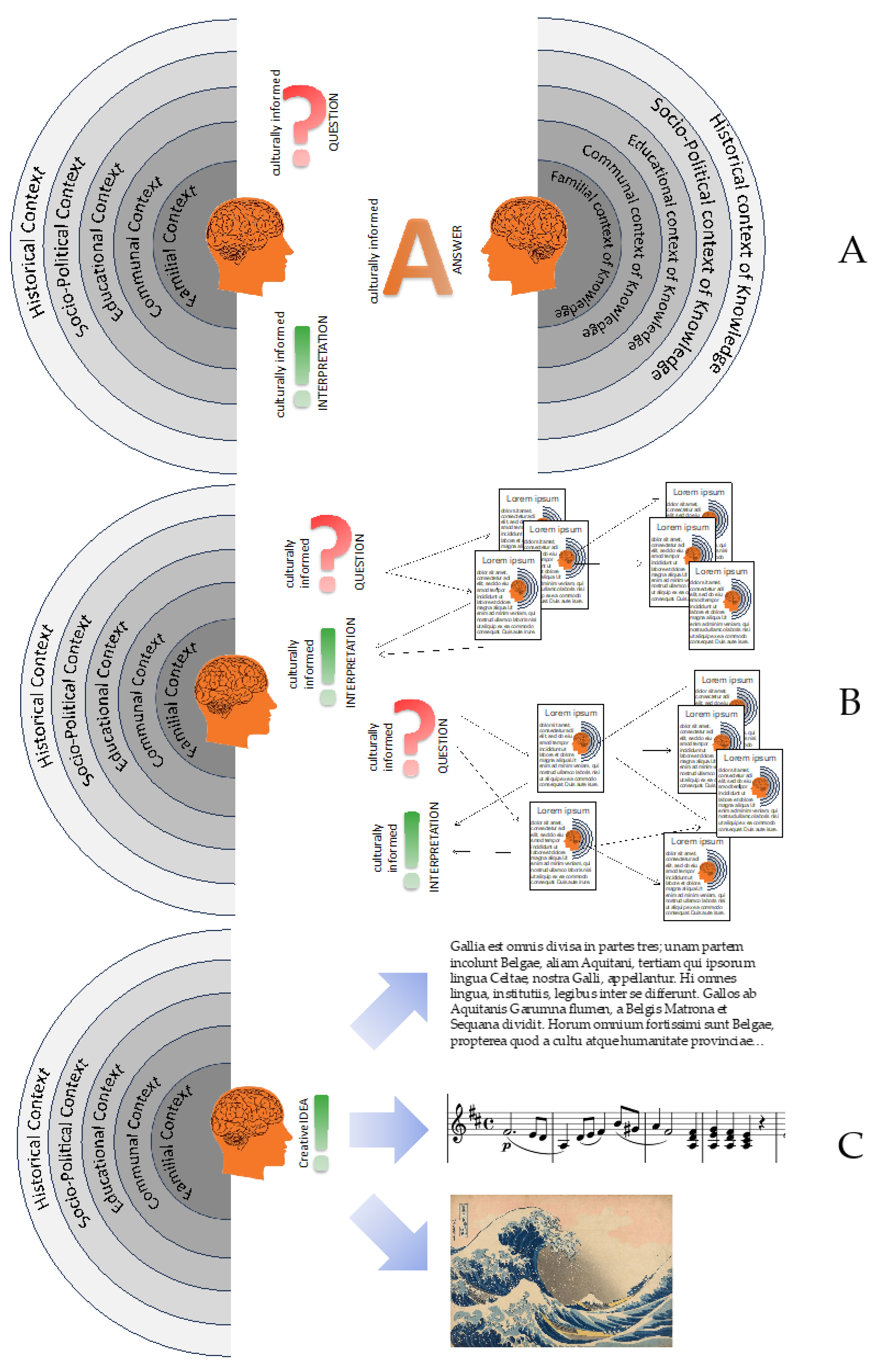

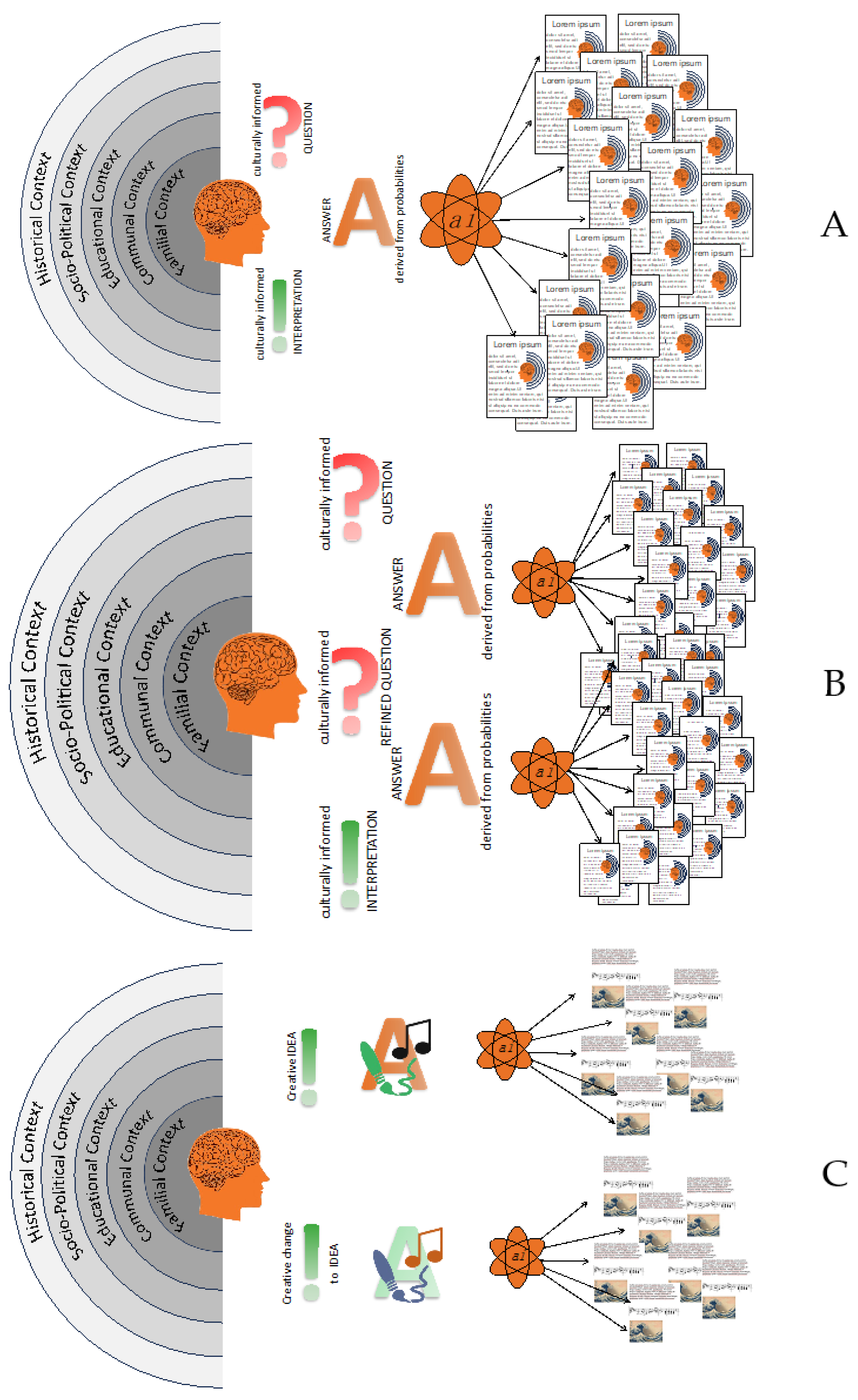

2. Background to Generative Artificial Intelligence Tools

3. GenAI and Human Agency

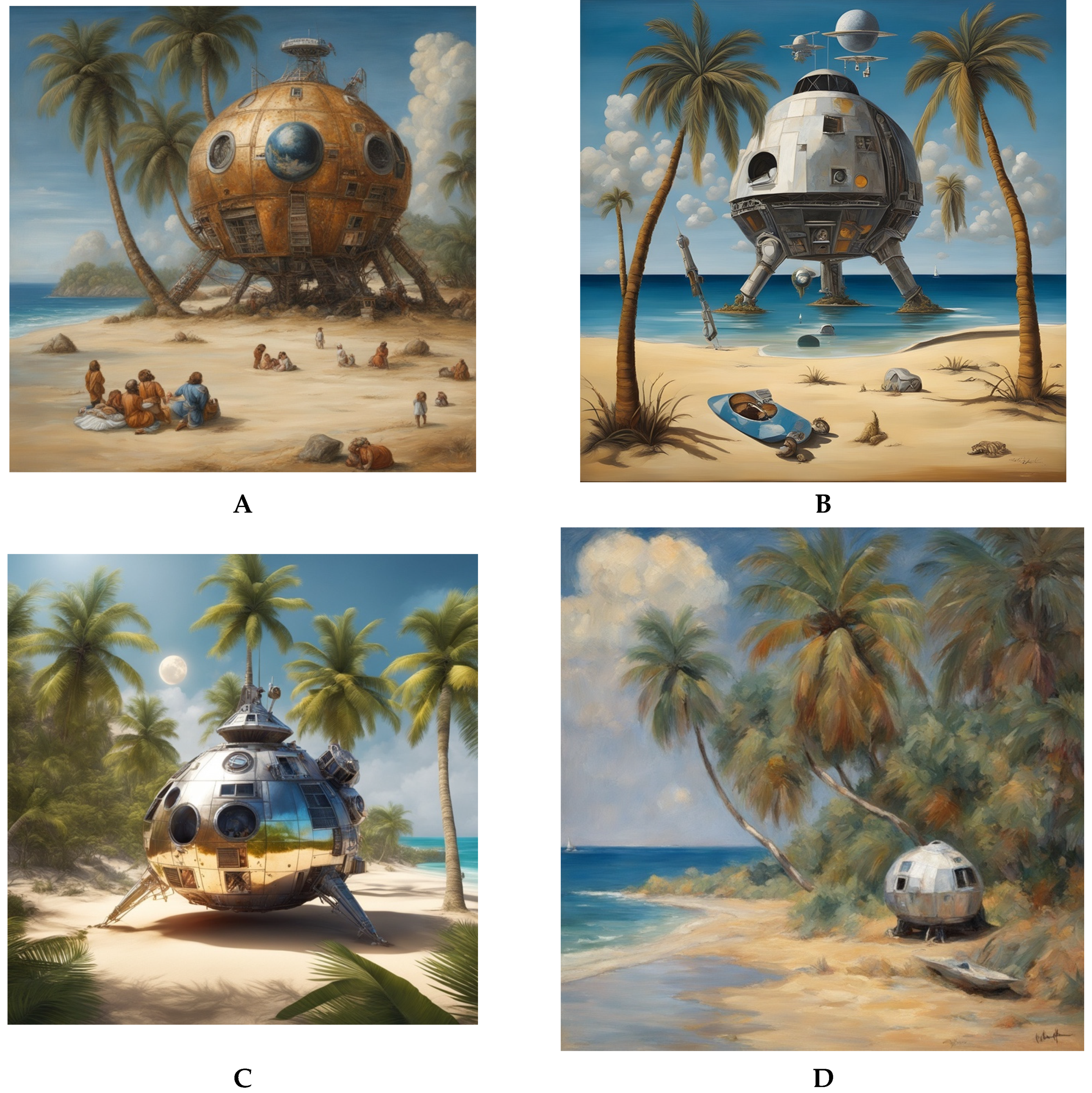

4. GenAI and Authorship

5. GenAI and Cultural Heritage

6. Culture, Heritage and the Future of Cultural Heritage in the Age of GenAI

- The individual must be sentient, i.e., possess the ability to consciously experience sensations, emotions, and feelings; and possess consciousness, i.e., have awareness of internal (self) and external existence;

- The individual demonstrates traces of culture at least at a basic level, i.e., intergenerationally transmitted behavior or skills learned from ‘conspecifics’ that cannot be explained either environmentally or genetically (or in the case of artificial intelligence are not due to human-designed algorithms);

- As heritage is about the relevance of past cultural manifestations to the present, the individual must not only have an understanding of time, including the concepts of past and future, but also an understanding of “being” in the present;

- The individual must have a basic sense of foresight, i.e., that any action or inaction may have consequences in the immediate to near future;

- Beyond basic self-awareness, the individual must be aware of their identity as an individual who is enculturated in familial, communal, educational, socio-political, and historical contexts;

- The individual must be able to understand the concept of ‘values’ either implicitly by experiencing feelings of nostalgia or solastalgia, or conceptually by recognizing values as non-static, relative, and conditional constructs that are projected on behaviors and actions, or on tangible elements of the natural or created environment;

- Finally, ideally (but not as a conditio sine qua non), individuals should also be capable of initiation and conceptualization of creative or research ideas.

Funding

Data Availability Statement

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Spennemann, D.H.R. Generative Artificial Intelligence, Human Agency and the Future of Cultural Heritage. Heritage 2024, 7, 3597-3609. https://doi.org/10.3390/heritage7070170
