Prediction Models of Collaborative Behaviors in Dyadic Interactions: An Application for Inclusive Teamwork Training in Virtual Environments

Abstract

1. Introduction

2. Materials and Methods

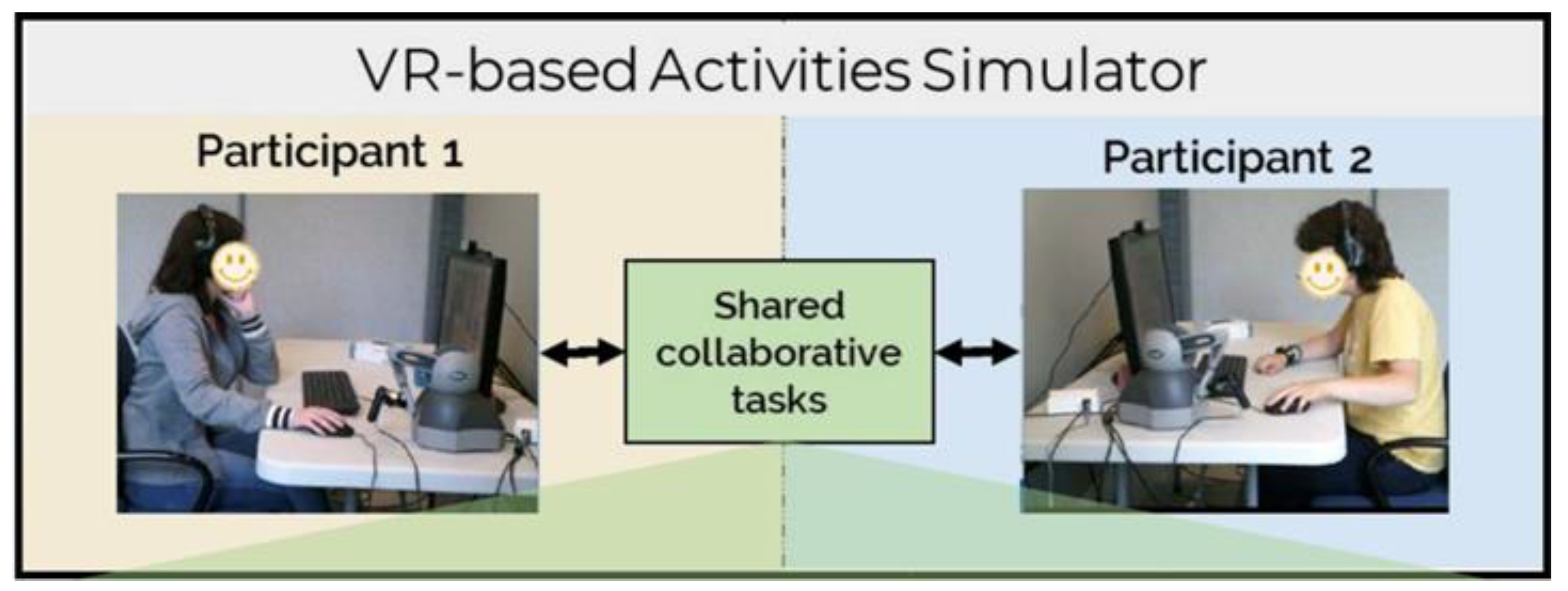

2.1. Experimental Design

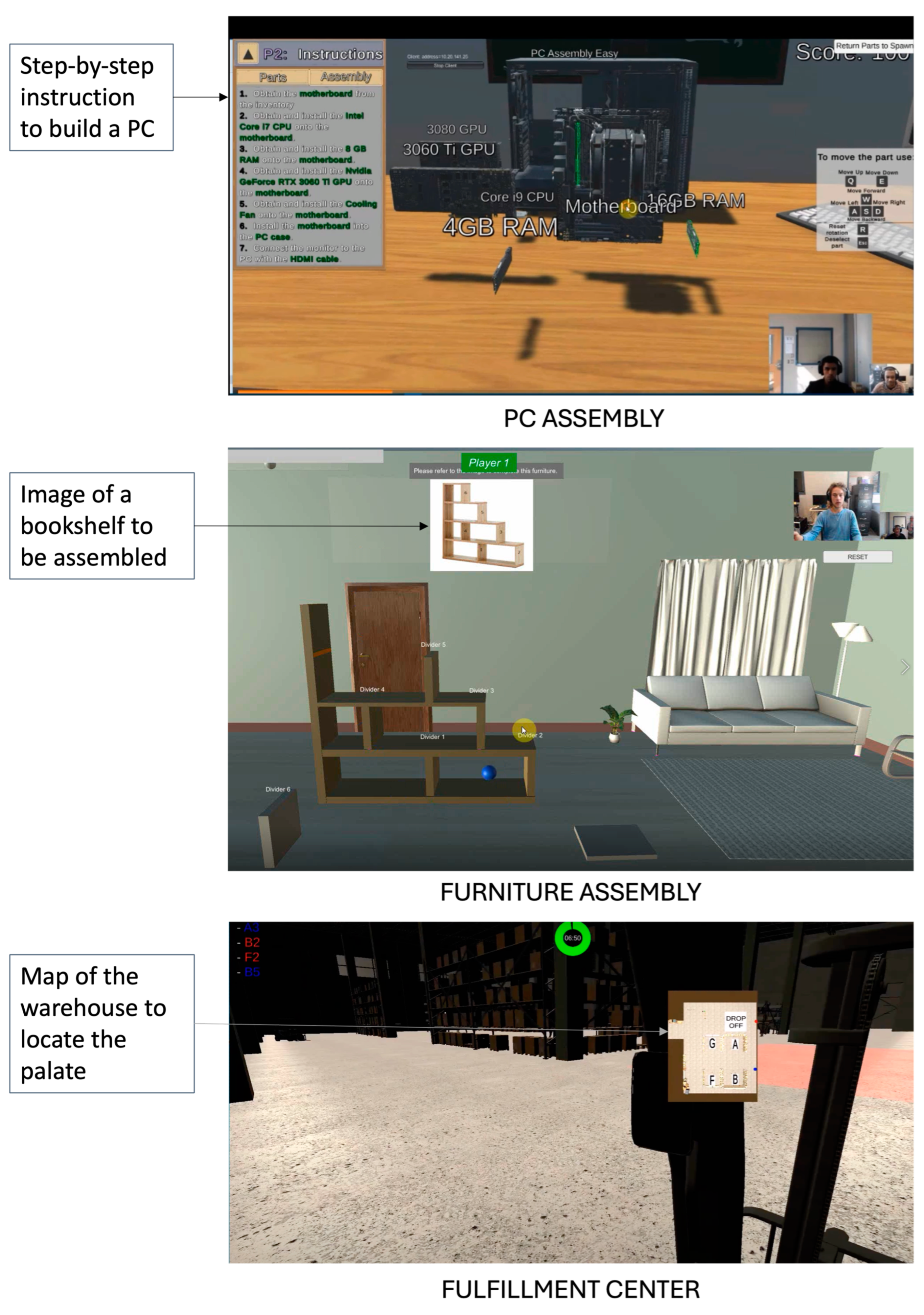

2.1.1. Collaborative Tasks Description

2.1.2. Participants and Protocol

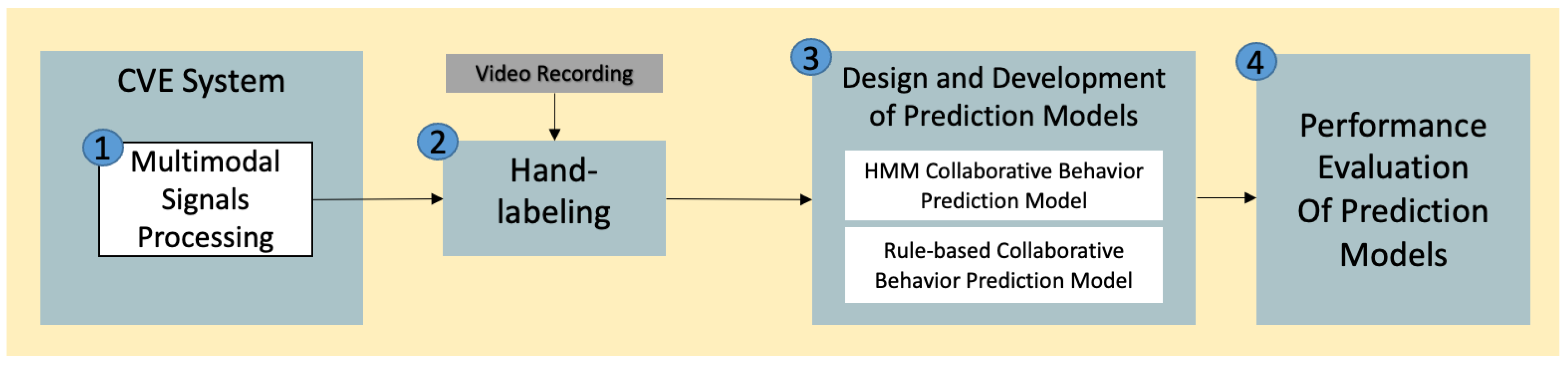

2.2. Prediction Models Workflow

2.2.1. Multimodal Signal Processing

| Device | Binary Feature | Feature Description |
|---|---|---|
| Microphone headset | Speech Presence | Feature is set to “1” when participant is speaking and “0” otherwise. |
| Tobii EyeX eye tracker | Gaze Presence | Feature is set to “1” when participant’s gaze detected on screen and “0” otherwise. |
| | Gaze On Object | Feature is set to “1” when gaze is on a virtual object or within the defined “focus area” as depicted in Figure 5. |
| Task-dependent controller (keyboard, haptic, or game controller) | Controller Presence | Feature is set to “1” when an input is detected from the controller (keyboard button, mouse clicks, haptic presses) and “0” otherwise. |
| | Controller Manipulation | Feature is set to “1” when controller is actively moving an object, and “0” otherwise. |
| | * Object Move Closer | Feature is set to “1” when the distance of the object from the target location is decreasing, and “0” otherwise. |
| | * Object Move Away | Feature is set to “1” when the distance of the object from the target location is increasing, and “0” otherwise. |
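
As a minimal sketch of how the two distance-based features in the table above could be derived, the following assumes that the object-to-target distance is available as one value per sample. The function name `object_move_features` and the tolerance `eps` are hypothetical and are not taken from the original system.

```python
from typing import List, Tuple


def object_move_features(
    distances: List[float], eps: float = 1e-6
) -> Tuple[List[int], List[int]]:
    """Derive Object Move Closer / Object Move Away flags from a distance series.

    distances[i] is the object-to-target distance at sample i (assumed input).
    The first sample has no predecessor, so both flags are 0 there.
    eps is a hypothetical tolerance that treats tiny changes as "no movement".
    """
    if not distances:
        return [], []
    closer = [0]
    away = [0]
    for prev, curr in zip(distances, distances[1:]):
        delta = curr - prev
        closer.append(1 if delta < -eps else 0)  # distance decreasing
        away.append(1 if delta > eps else 0)     # distance increasing
    return closer, away


closer, away = object_move_features([5.0, 4.0, 4.0, 4.5, 3.0])
print(closer)  # [0, 1, 0, 0, 1]
print(away)    # [0, 0, 0, 1, 0]
```

By construction the two flags are never both “1” for the same sample, and both are “0” when the object is stationary.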

2.2.2. Collaborative Behavior Coding Scheme

2.2.3. Hand Labelling to Establish Ground Truth

2.2.4. Rule-Based Prediction Model Design

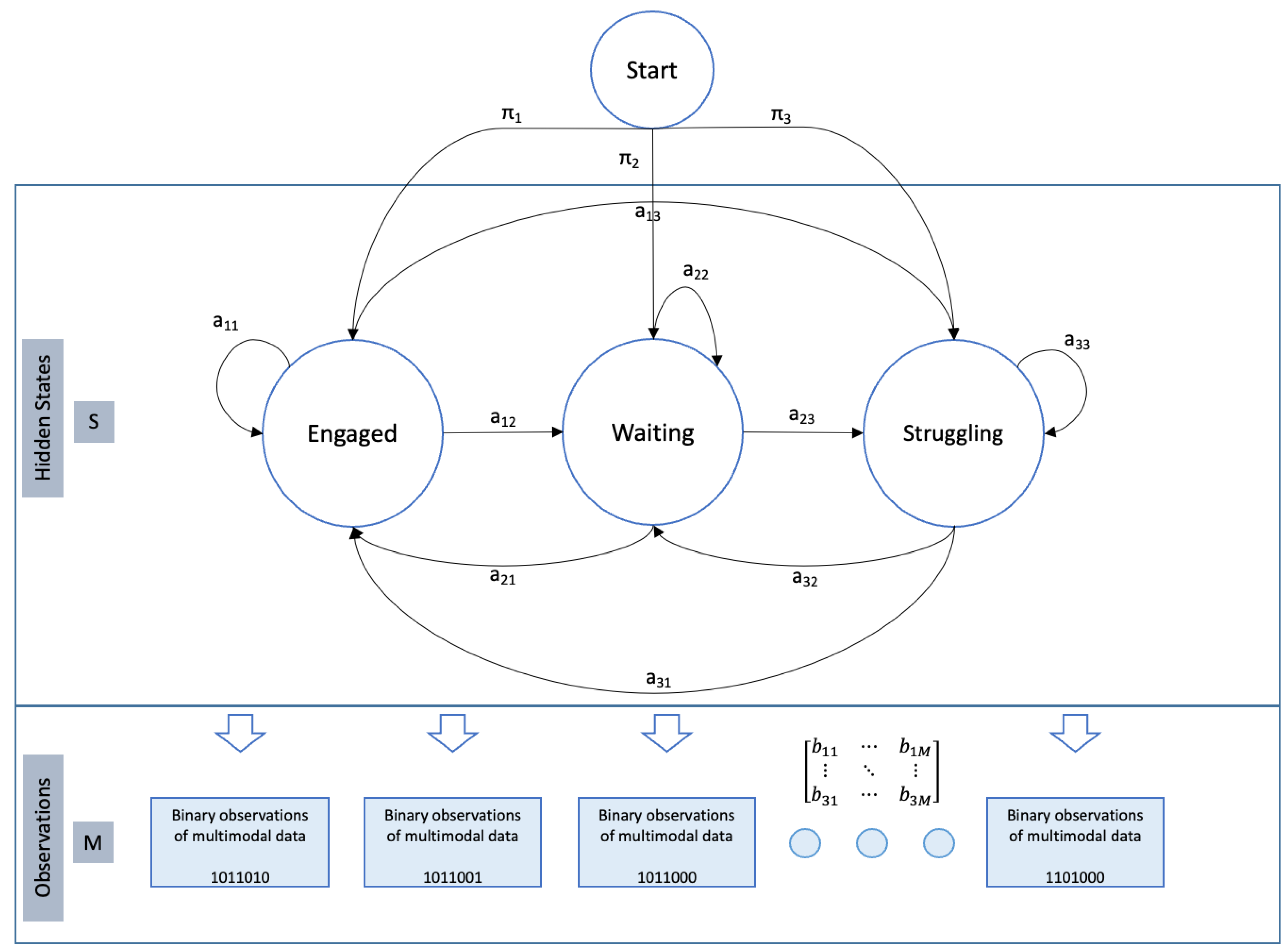

2.2.5. HMM Design and Training

2.2.6. Evaluating Prediction Models Performance

3. Results and Discussion

3.1. HMM Training and Validation Results

3.2. Prediction Models Evaluation Results

4. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Participants | ASD (N = 6) | NT (N = 6) |
|---|---|---|
| | Mean (SD) | Mean (SD) |
| Age | 20.5 (2.8) | 22.8 (3.6) |
| Gender (% male-female) | 50%-50% | 50%-50% |
| Race (% White, % African American) | 100% | 83%, 0% |
| Ethnicity (% Hispanic) | 0% | 17% |

| # | Collaborative Behavior | Definition | Condition |
|---|---|---|---|
| 1 | Engaged | The participant is focused on the task, communicating, and progressing well. | Participant could be talking to their partner. Participant is using the controller and virtual object is moving closer to the target. Engaged = Speech Presence ∪ (Controller Manipulation ∩ Object Move Closer) |
| 2 | Struggling | The participant is not progressing with the task due to difficulty performing the task, not communicating with their partner, distracted, or disinterested in the task. | Participant is not talking to their partner while: i. manipulating the controller but virtual object moving away from the target, or ii. not manipulating the controller and not looking at the screen (virtual objects, focused area). Struggling = ¬Speech Presence ∩ ((Controller Manipulation ∩ Object Move Away) ∪ (¬Controller Manipulation ∩ ¬Gaze)) |
| 3 | Waiting | The participant is on standby for their partner in a turn-taking task, not moving. | Participant is not talking to their partner, not using the controller, and not moving virtual objects, but is looking at an object or focus area. Waiting = ¬Speech Presence ∩ ¬Controller Manipulation ∩ ¬Object Move Away ∩ ¬Object Move Closer ∩ Gaze |
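
The three set expressions in the table above translate directly into Boolean logic. The sketch below is one possible reading, not the authors' implementation: the names `Features` and `classify` are hypothetical, a single `gaze` flag stands in for the unqualified “Gaze” term in the rules, the order in which the rules are checked is an assumption, and returning `None` for feature combinations that no rule covers is likewise an assumption.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Features:
    """One time step of binary features (hypothetical container)."""
    speech: bool                   # Speech Presence
    gaze: bool                     # "Gaze" as used in the rules (assumed single flag)
    controller_manipulation: bool  # Controller Manipulation
    object_move_closer: bool       # Object Move Closer
    object_move_away: bool         # Object Move Away


def classify(f: Features) -> Optional[str]:
    """Apply the three rules from the coding scheme table, in table order."""
    manip = f.controller_manipulation
    quiet = not f.speech

    # Engaged = Speech ∪ (Manipulation ∩ Move Closer)
    if f.speech or (manip and f.object_move_closer):
        return "Engaged"
    # Struggling = ¬Speech ∩ ((Manipulation ∩ Move Away) ∪ (¬Manipulation ∩ ¬Gaze))
    if quiet and ((manip and f.object_move_away) or (not manip and not f.gaze)):
        return "Struggling"
    # Waiting = ¬Speech ∩ ¬Manipulation ∩ ¬Move Away ∩ ¬Move Closer ∩ Gaze
    if (quiet and not manip and not f.object_move_away
            and not f.object_move_closer and f.gaze):
        return "Waiting"
    return None  # combination not covered by any rule (assumed fallback)


print(classify(Features(True, False, False, False, False)))  # Engaged
print(classify(Features(False, True, True, False, True)))    # Struggling
print(classify(Features(False, True, False, False, False)))  # Waiting
```

Some combinations, such as silent manipulation with a stationary object, match none of the three expressions, which is why the sketch needs an explicit fallback.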

| Symbol | Definition | Values |
|---|---|---|
| N | Number of hidden states in the model. | |
| M | Number of distinct observations. | We use a 7-digit binary vector based on the features extracted from the multimodal data. Example values: 1101010, 0010100 |
| A | State transition probability distribution: the probability matrix of transitioning from one state to another. | Matrix size is N × N, in our case 3 × 3. The values of the matrix are generated from training the model. |
| B | Emission probability distribution: the probability matrix of observing a particular observation in the current state. | Matrix size is N × M. The values of the matrix are generated from training the model. |
| π | Initial state probability distribution. | Initial state probability matrix, usually equally distributed. |
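
To make the symbols in the table above concrete, the sketch below encodes a 7-digit binary observation as an integer symbol and decodes a short sequence with the Viterbi algorithm in NumPy. It assumes N = 3 hidden states that match the three coded behaviors. The bit order, the state order, and every probability value are illustrative placeholders, not trained parameters from the study.

```python
import numpy as np

STATES = ["Engaged", "Struggling", "Waiting"]  # assumed hidden states (N = 3)
NUM_FEATURES = 7
M = 2 ** NUM_FEATURES  # 128 possible 7-digit binary observations


def encode(bits: str) -> int:
    """Map a 7-digit binary string such as '1101010' to a symbol index."""
    if len(bits) != NUM_FEATURES or set(bits) - {"0", "1"}:
        raise ValueError("expected a 7-digit binary string")
    return int(bits, 2)


def viterbi(obs, A, B, pi):
    """Return the most likely hidden-state index sequence (log domain)."""
    obs = np.asarray(obs, dtype=int)
    if obs.size == 0:
        return []
    with np.errstate(divide="ignore"):  # tolerate log(0) = -inf
        log_A, log_B, log_pi = np.log(A), np.log(B), np.log(pi)
    T, N = obs.size, A.shape[0]
    delta = np.empty((T, N))            # best log-probability ending in each state
    psi = np.zeros((T, N), dtype=int)   # back-pointers
    delta[0] = log_pi + log_B[:, obs[0]]
    for t in range(1, T):
        scores = delta[t - 1][:, None] + log_A  # scores[i, j]: from i to j
        psi[t] = scores.argmax(axis=0)
        delta[t] = scores.max(axis=0) + log_B[:, obs[t]]
    path = np.empty(T, dtype=int)
    path[-1] = delta[-1].argmax()
    for t in range(T - 2, -1, -1):
        path[t] = psi[t + 1, path[t + 1]]
    return path.tolist()


# Placeholder parameters (NOT trained values).
A = np.full((3, 3), 0.1) + np.eye(3) * 0.7   # rows sum to 1, states are "sticky"
pi = np.full(3, 1.0 / 3.0)                   # equally distributed initial state
B = np.ones((3, M))
typical = {0: "1111110", 1: "0001101", 2: "0110000"}  # hypothetical typical symbols
for state, bits in typical.items():
    B[state, encode(bits)] = 50.0
B /= B.sum(axis=1, keepdims=True)            # normalize each row to sum to 1

sequence = ["1111110", "1111110", "0110000", "0110000", "0001101"]
decoded = [STATES[i] for i in viterbi([encode(b) for b in sequence], A, B, pi)]
print(encode("1101010"))  # 106
print(decoded)  # ['Engaged', 'Engaged', 'Waiting', 'Waiting', 'Struggling']
```

In practice A and B would come from training, for example with the Baum-Welch procedure, rather than from hand-set placeholders like these.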

| | Rule-Based (%) | HMM (%) |
|---|---|---|
| Accuracy | 76.53 | 90.58 |
| Precision | 71.81 | 89.55 |
| Recall | 68.93 | 87.94 |
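
For readers who want to reproduce metrics of this kind, the sketch below computes accuracy, precision, and recall from hand-labelled and predicted behavior sequences. Macro-averaging across the behavior classes is an assumption, since the averaging scheme is not stated here, and the function name `evaluate` and the toy labels are hypothetical.

```python
from typing import Dict, Sequence


def evaluate(y_true: Sequence[str], y_pred: Sequence[str]) -> Dict[str, float]:
    """Accuracy plus macro-averaged precision and recall (assumed averaging)."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("label sequences must be non-empty and of equal length")
    pairs = list(zip(y_true, y_pred))
    labels = sorted(set(y_true) | set(y_pred))
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precisions, recalls = [], []
    for label in labels:
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = sum(t == label and p != label for t, p in pairs)
        # A class with no predictions (or no true samples) contributes 0.0.
        precisions.append(tp / (tp + fp) if tp + fp else 0.0)
        recalls.append(tp / (tp + fn) if tp + fn else 0.0)
    return {
        "accuracy": accuracy,
        "precision": sum(precisions) / len(labels),
        "recall": sum(recalls) / len(labels),
    }


# Toy example with made-up labels, not study data.
truth = ["Engaged", "Engaged", "Struggling", "Waiting"]
preds = ["Engaged", "Struggling", "Struggling", "Waiting"]
print(evaluate(truth, preds)["accuracy"])  # 0.75
```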

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Amat, A.Z.; Plunk, A.; Adiani, D.; Wilkes, D.M.; Sarkar, N. Prediction Models of Collaborative Behaviors in Dyadic Interactions: An Application for Inclusive Teamwork Training in Virtual Environments. Signals 2024, 5, 382-401. https://doi.org/10.3390/signals5020019
