Trustworthiness of Situational Awareness: Significance and Quantification

Abstract

1. Introduction

- We discuss the significance of security and trust in SA systems from a military and an air force perspective;

- We elaborate on the significance of quantifying the trustworthiness of SA systems for improving trust in SA systems;

- We propose a model for quantifying the trustworthiness of an SA system;

- We present numerical examples that demonstrate the quantification of the trustworthiness of an SA system using our proposed model.

2. Related Work

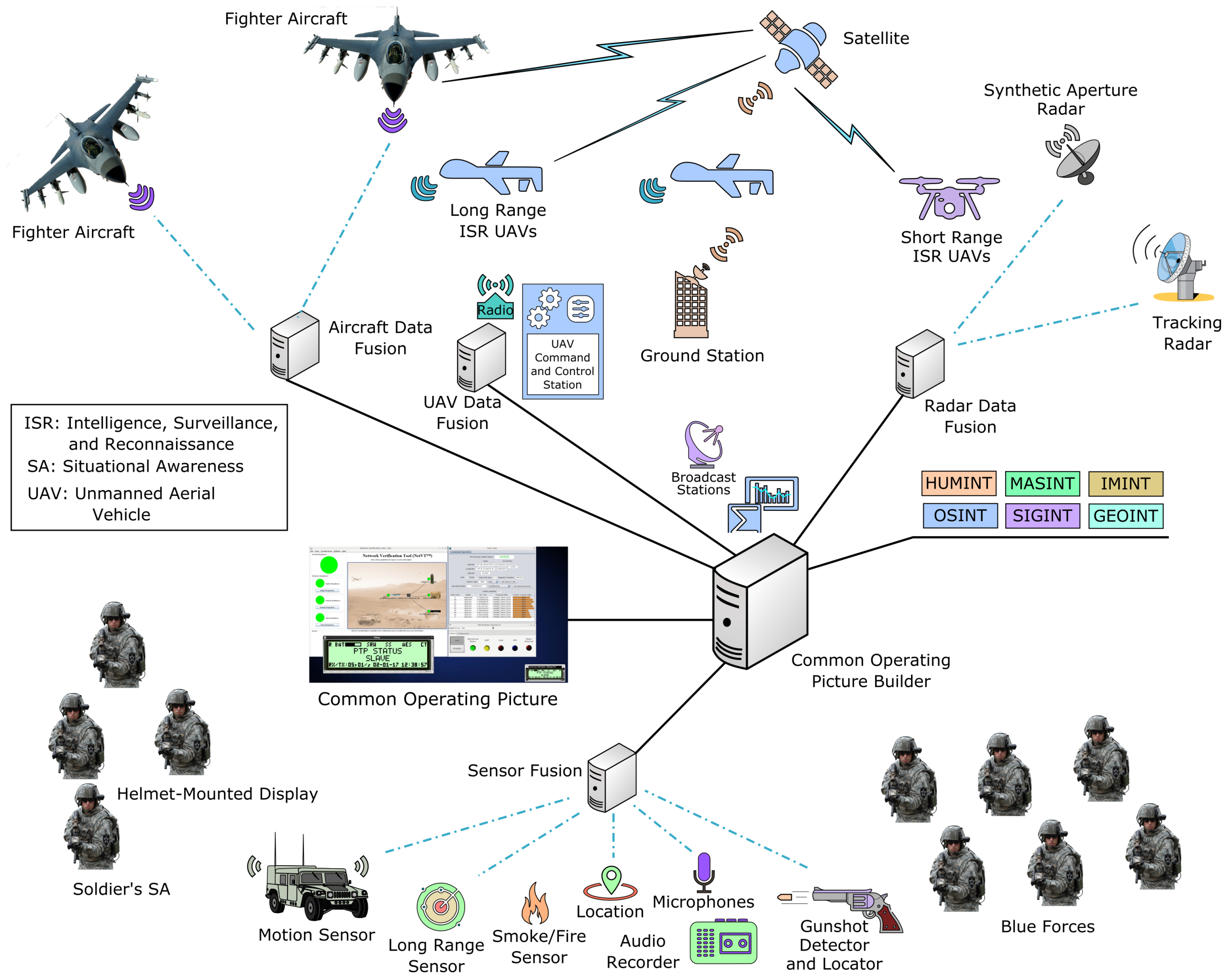

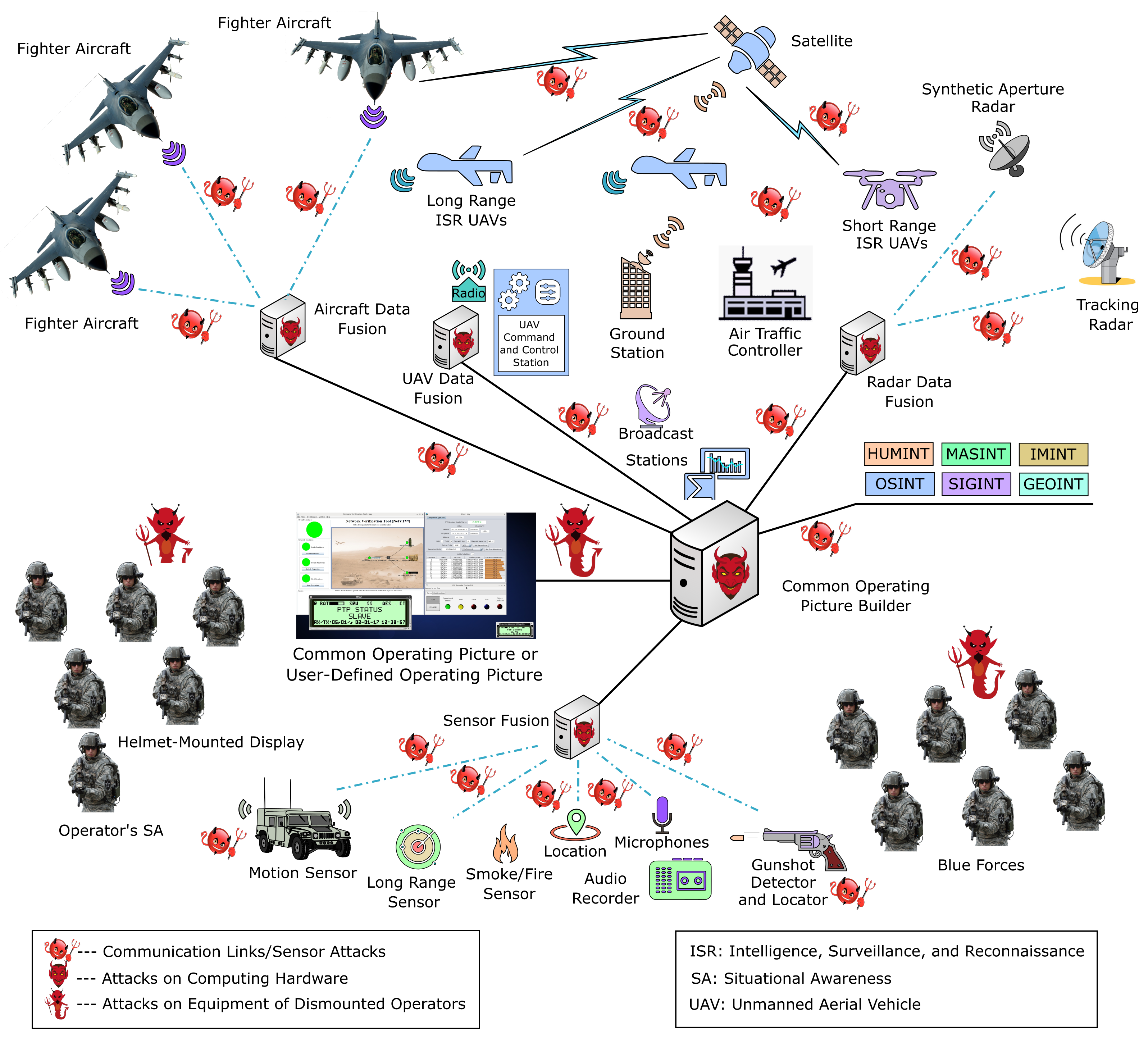

3. Security and Trust of Situational Awareness

3.1. Security of Common Operating Picture (COP)

3.2. SA Components Susceptible to Security Attacks

- Attacks on communication links;

- Attacks on sensors;

- Attacks on the computing hardware of SA infrastructure; and

- Attacks on the equipment of dismounted operators and pilots.

3.3. Impacts of Security Attacks on COP

- If the COP continues to display the last available aircraft positions and flight paths, it will no longer be accurate. If the commander is able to notice the failure of the communication link, he/she will realize that there are aircraft whose positions and paths are not current in the COP but that can still impact the operation. If the commander is not able to discern that the link is down (i.e., not available), he/she will keep trusting the displayed COP as real-time information;

- If the COP erases the last available aircraft positions and flight paths, it will likewise no longer be accurate. In this case, the commander will not be certain whether there are actually no aircraft in the region of interest or whether no aircraft are shown in the COP because the link is down.

- Is the link down because of sabotage, attack, interference, or benign failure?

- In cases where the link was sabotaged, what is the purpose behind that and which enemy force is responsible?

- Can the data be trusted if the link becomes live again?

- Will he/she be notified that the link is down?
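One way to reason about the two display options and the notification question above is to have the COP retain the last-known tracks but label each with its age, so that stale data is neither shown as current nor silently erased. The following is a minimal Python sketch of that idea, not a mechanism described in the source; the `Track` type, the `annotate_tracks` helper, and the 10-second threshold are hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Hypothetical threshold: how old a track may be before the COP flags it.
STALE_AFTER_S = 10.0


@dataclass
class Track:
    track_id: str
    position: Tuple[float, float]  # (latitude, longitude) in degrees
    last_update_s: float           # time of the last report, in seconds


def annotate_tracks(tracks: List[Track], now_s: float,
                    stale_after_s: float = STALE_AFTER_S) -> List[Dict]:
    """Keep every last-known track, but label each as current or stale."""
    annotated = []
    for track in tracks:
        age_s = now_s - track.last_update_s
        annotated.append({
            "track_id": track.track_id,
            "position": track.position,
            "age_s": age_s,
            "stale": age_s > stale_after_s,
        })
    return annotated


# Illustrative data only: two aircraft, one of which stopped reporting.
tracks = [
    Track("AC-1", (39.78, -84.05), last_update_s=100.0),
    Track("AC-2", (39.90, -84.20), last_update_s=62.0),
]
for entry in annotate_tracks(tracks, now_s=105.0):
    status = "STALE" if entry["stale"] else "current"
    print(entry["track_id"], status, f"age={entry['age_s']:.0f}s")
```

A display built on such annotations could render stale tracks differently (for example, greyed out with their age), which addresses the notification question but not the questions about the cause of the outage or whether the data can be trusted once the link recovers.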

4. Quantifying Trustworthiness of SA

4.1. Model for Quantifying the Trustworthiness of COP

4.2. Required Trustworthiness Measure (RTM)

5. Numerical Results

5.1. Case 1: High-Trust Information Source

- ISR UAV (SA Source): We calculate the trustworthiness of the ISR UAV based on Equation (1) of our proposed model (Section 4.1). To obtain the trustworthiness of a source, we first need to obtain the scores of the various metrics (e.g., confidentiality, integrity, and authenticity) stipulated in Equation (1) that affect the trustworthiness of the source. In the following, we calculate the scores of these metrics for this case.

- Confidentiality: For this case, the assumption is that the information gathered and analyzed by the UAV is stored in secure memory and is encrypted using the Advanced Encryption Standard (AES) or another encryption algorithm with a comparable security level. Consequently, the UAV can be assigned a confidentiality score of 1. As a guideline, one can assign the highest score (1 in this case) for security metrics such as confidentiality, integrity, and authenticity if the source employs algorithms and protocols standardized by standardization bodies such as the National Institute of Standards and Technology (NIST) [17].

- Integrity: For this case, the UAV employs error correction code (ECC) memory to detect and correct n-bit errors in memory, and also calculates the hash-based message authentication code (HMAC) of the message or information to be transmitted to the GCS. Hence, the UAV for this case can be assigned an integrity score of 1.

- Authenticity: For this case, the UAV can be successfully authenticated with an authentication protocol (such as the protocol in [18]), and can be assigned an authenticity score of 1.

- Availability: For this case, the UAV is responsive to the authentication and communication protocols’ messages, and is available; hence, it can be assigned an availability score of 1.

- Non-repudiability: Since the UAV encrypts the message with its secret key, which no other source possesses, the message can be associated with the UAV. In this case, public key infrastructure (PKI) is employed by the UAV; the UAV can also sign the message with its private key, which can be verified by the CBN using the public key of the UAV, thus unambiguously binding the message to the UAV. Since the UAV in this case employs these security mechanisms that ensure that the message originated from the UAV, it can be assigned a non-repudiability score of 1.

- Precision: Since the UAV in this case performs on-board analytics on the sensed information, the precision can be assigned based on the average precision of the AI algorithm employed for tasks such as classification and object detection. The precision score therefore depends on the particular model or algorithm employed by the UAV. For this numerical example, we assign a precision score of 0.96. We note that different precision scores can be assigned for the source depending on the AI model.

- Quality: The UAV obtains high-resolution images of objects of interest, and thus the quality score can be assigned as 1.

- Usability: The UAV is mobile and can gather the information about different targets as desired; thus, the UAV can be assigned a usability score of 1.

- Ground Control Station: Here, we calculate the trustworthiness of the GCS based on Equation (3) of our proposed model (Section 4.1). To obtain the trustworthiness of a node, we first need to obtain the scores of various metrics (i.e., confidentiality, integrity, authenticity, availability, non-repudiation) stipulated in Equation (3) that affect the trustworthiness of a node. In the following, we calculate the score of these metrics for this case.

- Confidentiality: In this case, the encrypted information from the UAV is relayed by the GCS to the GN, and thus the GCS can be assigned a confidentiality score of 1.

- Integrity: The GCS also relays the HMAC of the message sent by the UAV, and so an integrity score of 1 can be ascribed to the GCS.

- Authenticity: For this case, the GCS can be successfully authenticated with an authentication protocol, and thus an authenticity score of 1 can be attributed to the GCS.

- Availability: Assuming that the GCS is responsive to the authentication and other protocols’ messages, and is available, its availability score can be assigned as 1.

- Gateway Node: The trustworthiness of the GN can be calculated similarly to that of the GCS, based on Equation (3).

- Router Node: The trustworthiness of the RN can be calculated similarly to that of the GCS, based on Equation (3).

- Data Fusion Node: The trustworthiness of the DFN can be calculated similarly to that of the GCS, based on Equation (3).

- Link between the ISR UAV and the GCS: Here, we calculate the trustworthiness of the radio link between the ISR UAV and the GCS based on Equation (4) of our proposed model (Section 4.1). To obtain the trustworthiness of a link, we first need to obtain the scores of the various metrics (i.e., confidentiality, integrity, availability, and quality) stipulated in Equation (4) that affect the trustworthiness of a link. In the following, we calculate the scores of these metrics for this case.

- Confidentiality: Since the UAV encrypts the information to be sent, and this encrypted information traverses the radio link between the UAV and the GCS, the confidentiality score for this link can be assigned as 1.

- Integrity: Since the HMAC of the message is transmitted along with the message in this case, this link can be ascribed an integrity score of 1.

- Availability: Assuming that the radio link can carry the messages and is neither overloaded with data traffic nor affected by any denial-of-service attack, an availability score of 1 can be attributed to this link.

- Quality: Here, the quality of a link is derived from its bit error rate. Assuming a bit error rate of 0.005 [19], the quality score for this link can be calculated as 0.995 (i.e., 1 − 0.005).

- Link between the GCS and the GN: Here, we calculate the trustworthiness of the wired link between the GCS and the GN, which connects the GCS to the core network, based on Equation (4). In the following, we calculate the scores of the metrics that affect the trustworthiness of the link for this case.

- Confidentiality: Since the encrypted information traverses the wired link between the GCS and the GN, this link can be assigned a confidentiality score of 1.

- Integrity: Since the HMAC of the message is transmitted along with the message on the link in this case, the integrity score for this link can be ascribed as 1.

- Availability: Assuming that the link can carry the messages and is neither overloaded with data traffic nor affected by any denial-of-service attack, an availability score of 1 can be attributed to this link.

- Quality: The quality score for this link is calculated as one minus the bit error rate, with the bit error rate taken from [20].

- Link between the GN and the RN: The trustworthiness of the wired link between the GN and the RN in the core network can be calculated in the same way as for the wired link between the GCS and the GN.

- Link between the RN and the DFN: The trustworthiness of the wired link between the RN and the DFN can be calculated in the same way as for the wired link between the GCS and the GN.

- Link between the DFN and the CBN: The trustworthiness of the wired link between the DFN and the CBN can be calculated in the same way as for the wired link between the GCS and the GN.
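The node and link scores assigned above for Case 1 can be combined numerically. The sketch below assumes that Equations (3) and (4) are weighted sums of the metric scores and, as a further assumption, that every metric carries an equal weight; neither equation is reproduced in this section, so the weights and the helper names `weighted_score` and `link_quality` are illustrative rather than the model's definitive form. The bit error rate of 0.005 is the value used for the radio link above.

```python
from typing import Dict, Optional


def weighted_score(scores: Dict[str, float],
                   weights: Optional[Dict[str, float]] = None) -> float:
    """Weighted sum of metric scores; equal weights if none are given."""
    if weights is None:
        # Assumption: every metric is equally important.
        weights = {name: 1.0 / len(scores) for name in scores}
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return sum(weights[name] * scores[name] for name in scores)


def link_quality(bit_error_rate: float) -> float:
    """Quality score of a link, taken as 1 minus its bit error rate."""
    return 1.0 - bit_error_rate


# Case 1 scores as assigned in the text for the GCS and the UAV-GCS radio link.
gcs_node = {"confidentiality": 1.0, "integrity": 1.0,
            "authenticity": 1.0, "availability": 1.0}
radio_link = {"confidentiality": 1.0, "integrity": 1.0,
              "availability": 1.0, "quality": link_quality(0.005)}

print(f"GCS node (equal weights):   {weighted_score(gcs_node):.5f}")
print(f"Radio link (equal weights): {weighted_score(radio_link):.5f}")
```

Passing an explicit `weights` dictionary lets a COP designer emphasize some metrics over others, in line with the text's remark that weightage is a design choice.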

5.2. Case 2: Low-Trust Information Source

- Motion Sensor (SA Source): Here, we calculate the trustworthiness of the MS based on Equation (1) of our proposed model (Section 4.1). We first need to obtain the scores of various metrics stipulated in Equation (1) that affect the trustworthiness of a source. In the following, we calculate the score of these metrics for this case.

- Confidentiality: In this case, we assume that the information gathered is not encrypted but is stored in private memory, which is not accessible to unauthorized parties. Thus, the MS for this case can be assigned a confidentiality score of 0.3. For less trustworthy sources not employing standardized protocols, the score for the security metrics can be assigned based on the overall security assessment of the source. Encryption, for example, is a primary means for ensuring confidentiality, and constitutes about 70% of the confidentiality score. For our considered example, the assumption is that the information is not encrypted, which results in a 70% penalty on the confidentiality score, while the employment of private memory and storage control permits 30% of the confidentiality score, which results in an overall confidentiality score of 0.3 for the source. We note that this weightage assignment is not standard, but just a guideline, and COP designers can choose different weightage for different aspects of the confidentiality metric.

- Integrity: In this case, the MS employs error correction code (ECC) memory to detect and correct n-bit errors in memory, but does not calculate the HMAC of the message to be transmitted to the CHN. Hence, the MS can be assigned an integrity score of 0.2 for this case.

- Authenticity: Since the MS in this case does not incorporate security primitives, the MS cannot be authenticated with an authentication protocol (such as the protocol in [18]), and can be assigned an authenticity score of 0.

- Availability: Since the CBN receives data from the MS periodically, an availability score of 1 can be attributed to the MS.

- Non-repudiability: Since the MS in this case does not sign the message with its private key nor does it encrypt the messages with its secret key, it can be ascribed a non-repudiability score of 0.

- Precision: With the assumption that the MS can detect nearby motion with 100% precision [21], we assign it a precision score of 1.

- Quality: The MS can obtain good quality signals regarding nearby motion, and thus its quality score can be assigned as 1.

- Usability: The motion sensors can be installed at different places, in particular places with restricted entry, to detect nearby motion; hence, a usability score of 1 can be attributed to the MS.

- Cluster Head Node: Here, we calculate the trustworthiness of the CHN based on Equation (3). In the following, we calculate the score of various metrics that affect the trustworthiness of a node.

- Confidentiality: In this case, the plaintext information from the MS is relayed by the CHN to the GN. The message itself is stored in private memory in the CHN and is not accessible to unauthorized parties; thus, a confidentiality score of 0.3 can be assigned to the CHN.

- Integrity: The messages obtained from the MS are stored in ECC memory in the CHN, but no HMAC is calculated at the CHN, and thus an integrity score of 0.2 can be ascribed to the CHN.

- Authenticity: The CHN can be successfully authenticated (with an authentication protocol), and thus an authenticity score of 1 can be attributed to the CHN.

- Availability: With the assumption that the CHN is responsive to the authentication messages and is available, its availability score can be assigned as 1.

- Gateway Node: The trustworthiness of the GN can be calculated similarly to that of the CHN, based on Equation (3).

- Router Node: The trustworthiness of the RN can be calculated similarly to that of the CHN, based on Equation (3).

- Data Fusion Node: The trustworthiness of the DFN can be calculated similarly to that of the CHN, based on Equation (3).

- Link between the MS and the CHN: Here, we calculate the trustworthiness of the wireless link between the MS and the CHN based on Equation (4) of our proposed model (Section 4.1). In the following, we calculate the scores of the various metrics (i.e., confidentiality, integrity, availability, and quality) stipulated in Equation (4) that affect the trustworthiness of a link.

- Confidentiality: Since the MS sends the information in plaintext (i.e., without encryption) to the CHN over the wireless link, the confidentiality score for this link can be assigned as 0.

- Integrity: Since no hash (or HMAC) of the message is transmitted from the MS to the CHN in this case, the integrity score for this link can be ascribed as 0.

- Availability: Assuming that the wireless link between the MS and the CHN can carry the messages and is neither overloaded with data traffic nor affected by any denial-of-service attack, an availability score of 1 can be attributed to this link.

- Quality: Here, the quality of a link is derived from its bit error rate. The quality score for this link is calculated as one minus the bit error rate, with the bit error rate taken from [19].

- Link between the CHN and the GN: Here, we calculate the trustworthiness of the wired link between the CHN and the GN, which connects the CHN to the core network, based on Equation (4). In the following, we calculate the scores of the metrics that affect the trustworthiness of the link for this case.

- Confidentiality: Since the plaintext information (without encryption) sent from the MS traverses the wired link between the CHN and the GN, the confidentiality score for this link can be assigned as 0.

- Integrity: Since no HMAC of the message is transmitted along with the message on the link in this case, the integrity score for this link can be ascribed as 0.

- Availability: Assuming that the link can carry the messages and is neither overloaded with data traffic nor affected by any denial-of-service attack, an availability score of 1 can be attributed to this link.

- Quality: The quality score for this link is calculated as one minus the bit error rate, with the bit error rate taken from [20].

- Link between the GN and the RN: The trustworthiness of the wired link between the GN and the RN in the core network can be calculated in the same way as for the wired link between the CHN and the GN.

- Link between the RN and the DFN: The trustworthiness of the wired link between the RN and the DFN can be calculated in the same way as for the wired link between the CHN and the GN.

- Link between the DFN and the CBN: The trustworthiness of the wired link between the DFN and the CBN can be calculated in the same way as for the wired link between the CHN and the GN.
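The confidentiality guideline for the motion sensor (about 70% of the score for encryption, 30% for private memory and storage control) and the remaining Case 2 source scores can be worked through in a short Python sketch. The helper `composite_score` is a hypothetical name, and the equal weight of 0.125 per metric is an assumption carried over from the Case 1 score table rather than a value stated for Case 2, so the resulting figure is illustrative and not a result reported in the source.

```python
from typing import Dict

# Sub-weights quoted in the confidentiality discussion for the motion sensor:
# encryption about 70%, private memory and storage control 30%.
CONFIDENTIALITY_PARTS = {"encryption": 0.7, "private_memory": 0.3}


def composite_score(parts: Dict[str, float], present: Dict[str, bool]) -> float:
    """Sum the sub-weights of the mechanisms that the source actually employs."""
    return sum(w for name, w in parts.items() if present.get(name, False))


# The MS does not encrypt its data but does keep it in private memory.
ms_confidentiality = composite_score(
    CONFIDENTIALITY_PARTS, {"encryption": False, "private_memory": True})

# Motion sensor scores from the text; equal weights are an assumption.
ms_scores = {
    "confidentiality": ms_confidentiality,
    "integrity": 0.2,
    "authenticity": 0.0,
    "availability": 1.0,
    "non_repudiability": 0.0,
    "precision": 1.0,
    "quality": 1.0,
    "usability": 1.0,
}
WEIGHT = 1.0 / len(ms_scores)  # 0.125 for eight metrics
ms_trust = sum(WEIGHT * score for score in ms_scores.values())

print(f"MS confidentiality: {ms_confidentiality:.2f}")
print(f"MS trustworthiness (equal weights): {ms_trust:.4f}")
```

Under these assumptions the motion sensor scores well below the ISR UAV of Case 1, driven by the zero scores for authenticity and non-repudiability and the low scores for confidentiality and integrity.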

6. Conclusions and Future Research Directions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Disclaimer

Abbreviations

| Abbreviation | Definition |
|---|---|
| SA | Situational awareness |
| DOD | Department of Defense |
| OODA | Observe–orient–decide–act |
| USAF | United States Air Force |
| CC | Command and control |
| UAV | Unmanned aerial vehicle |
| ISR | Intelligence, surveillance, and reconnaissance |
| HUD | Heads-up display |
| HMD | Helmet-mounted display |
| HUMINT | Human intelligence |
| MASINT | Measurement and signature intelligence |
| IMINT | Imagery intelligence |
| OSINT | Open source intelligence |
| SIGINT | Signals intelligence |
| GEOINT | Geospatial intelligence |
| COP | Common operating picture |
| UDOP | User-defined operating picture |
| AI | Artificial intelligence |
| ICT | Information and communications technology |
| RTM | Required trustworthiness measure |
| CFAR | Constant false alarm rate |
| GCS | Ground control station |
| GN | Gateway node |
| RN | Router node |
| DFN | Data fusion node |
| CBN | COP builder node |
| AES | Advanced encryption standard |
| ECC | Error correction code |
| HMAC | Hash-based message authentication code |
| PKI | Public key infrastructure |
| MS | Motion sensor |
| WSN | Wireless sensor network |
| CHN | Cluster head node |

References

- Munir, A.; Aved, A.; Blasch, E. Situational Awareness: Techniques, Challenges, and Prospects. AI 2022, 3, 55–77.

- Spick, M. The Ace Factor: Air Combat and the Role of Situational Awareness; Naval Institute Press: Annapolis, MD, USA, 1988.

- Munir, A.; Blasch, E.; Aved, A.; Ratazzi, E.P.; Kong, J. Security Issues in Situational Awareness: Adversarial Threats and Mitigation Techniques. IEEE Secur. Priv. 2022, 20, 51–60.

- McKay, B.; McKay, K. The Tao of Boyd: How to Master the OODA Loop. 2019. Available online: https://www.artofmanliness.com/articles/ooda-loop/ (accessed on 14 August 2019).

- Endsley, M.R. Toward a Theory of Situation Awareness in Dynamic Systems. Hum. Factors J. Hum. Factors Ergon. Soc. 1995, 37, 32–64.

- Endsley, M.R. Situation Awareness Global Assessment Technique (SAGAT). In Proceedings of the IEEE National Aerospace and Electronics Conference, Dayton, OH, USA, 23–27 May 1988.

- Nguyen, T.; Lim, C.P.; Nguyen, N.D.; Gordon-Brown, L.; Nahavandi, S. A Review of Situation Awareness Assessment Approaches in Aviation Environments. IEEE Syst. J. 2019, 13, 3590–3603.

- Hart, S.G.; Staveland, L.E. Development of NASA-TLX (Task Load Index): Results of Empirical and Theoretical Research. Adv. Psychol. 1988, 52, 139–183.

- Taylor, R. Situational Awareness Rating Technique (SART): The Development of a Tool for Aircrew Systems Design. In Proceedings of the AGARD AMP Symposium on Situational Awareness in Aerospace Operations (AGARD-CP-478), Copenhagen, Denmark, 2–6 October 1989.

- O’Hare, D.; Wiggins, M.; Williams, A.; Wong, W. Cognitive Task Analyses for Decision Centred Design and Training. Ergonomics 1998, 41, 1698–1718.

- Blasch, E.P.; Salerno, J.J.; Tadda, G.P. Measuring the Worthiness of Situation Assessment. In High-Level Information Fusion Management and Systems Design; Blasch, E., Bossé, E., Lambert, D.A., Eds.; Artech House: Norwood, MA, USA, 2012; pp. 315–329.

- Endsley, M.R. The Divergence of Objective and Subjective Situation Awareness: A Meta-Analysis. J. Cogn. Eng. Decis. Mak. 2020, 14, 34–53.

- Zhang, T.; Yang, J.; Liang, N.; Pitts, B.J.; Prakah-Asante, K.O.; Curry, R.; Duerstock, B.S.; Wachs, J.P.; Yu, D. Physiological Measurements of Situation Awareness: A Systematic Review. Hum. Factors 2020, 65, 737–758.

- Robertson, J. Integrity of a Common Operating Picture in Military Situational Awareness. In Proceedings of the Information Security for South Africa (ISSA), Johannesburg, South Africa, 13–14 August 2014.

- Roberts, P. Researchers Warn of Physics-Based Attacks on Sensors. 2018. Available online: https://securityledger.com/2018/01/researchers-warn-physics-based-attacks-sensors/ (accessed on 23 June 2020).

- Paar, C.; Pelzl, J. Understanding Cryptography; Springer: Berlin/Heidelberg, Germany, 2010.

- NIST. National Institute of Standards and Technology. 2024. Available online: https://www.nist.gov/ (accessed on 9 February 2024).

- Giri, N.K.; Munir, A.; Kong, J. An Integrated Safe and Secure Approach for Authentication and Secret Key Establishment in Automotive Cyber-Physical Systems. In Proceedings of the Computing Conference, Virtual, 16–17 July 2020.

- Li, B.; Jiang, Y.; Sun, J.; Cai, L.; Wen, C.Y. Development and Testing of a Two-UAV Communication Relay System. Sensors 2016, 16, 1696.

- Okpeki, U.; Egwaile, J.; Edeko, F. Performance and Comparative Analysis of Wired and Wireless Communication Systems using Local Area Network Based on IEEE 802.3 And IEEE 802.11. J. Appl. Sci. Environ. Manag. 2018, 22, 1727–1731.

- Liu, H.; Wang, Y.; Wang, K.; Lin, H. Turning a Pyroelectric Infrared Motion Sensor into a High-Accuracy Presence Detector by Using a Narrow Semi-Transparent Chopper. Appl. Phys. Lett. 2017, 111, 243901.

- Lee, J.D.; See, K.A. Trust in Automation: Designing for Appropriate Reliance. Hum. Factors 2004, 46, 50–80.

| Metric | Score | Weightage |
|---|---|---|
| Confidentiality | 1.0 | 0.125 |
| Integrity | 1.0 | 0.125 |
| Authenticity | 1.0 | 0.125 |
| Availability | 1.0 | 0.125 |
| Non-repudiability | 1.0 | 0.125 |
| Precision | 0.96 | 0.125 |
| Quality | 1.0 | 0.125 |
| Usability | 1.0 | 0.125 |
| Trustworthiness (weighted sum) | 0.995 | – |
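The final row of this table is consistent with a weighted sum of the eight listed scores, which match the ISR UAV scores of Case 1 (precision 0.96, all others 1). As a worked check, writing $s_i$ and $w_i$ for the score and weightage in row $i$ (symbols chosen here for illustration, since Equation (1) is not reproduced in this section):

```latex
\begin{align*}
\sum_{i=1}^{8} w_i \, s_i
  &= 0.125 \times (1 + 1 + 1 + 1 + 1 + 0.96 + 1 + 1) \\
  &= 0.125 \times 7.96 \\
  &= 0.995
\end{align*}
```

Because all weightages are equal, the result is simply the mean of the eight scores; unequal weightages would shift the result toward the metrics a COP designer considers most important.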

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Munir, A.; Aved, A.; Pham, K.; Kong, J. Trustworthiness of Situational Awareness: Significance and Quantification. J. Cybersecur. Priv. 2024, 4, 223-240. https://doi.org/10.3390/jcp4020011
