Development of a Smart Material Resource Planning System in the Context of Warehouse 4.0

Abstract

1. Introduction

- Common problems that arise in inventory management when using ERP systems;

- Inaccurate data tracking, poor integration with other software, and inefficient processes;

- Bottlenecks in material accounting at certain workstations, which can lead to stock discrepancies across the entire warehouse;

- Problems with updating stock balances between warehouses, which delay order fulfilment and increase operating costs, ultimately reducing the overall efficiency of the business;

- Inventory management problems associated with manual processes;

- Lack of real-time visibility and, as a result, poor production planning.

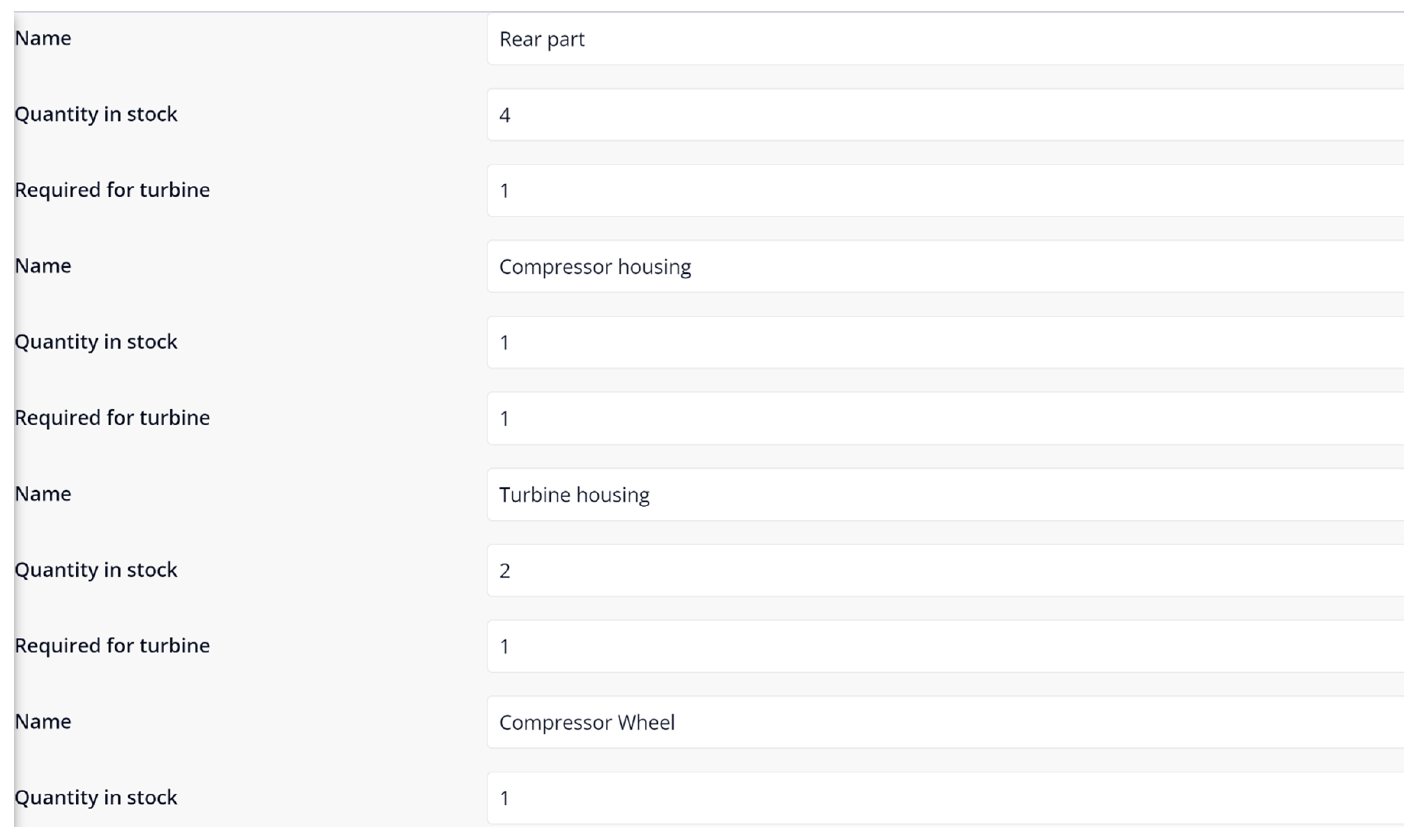

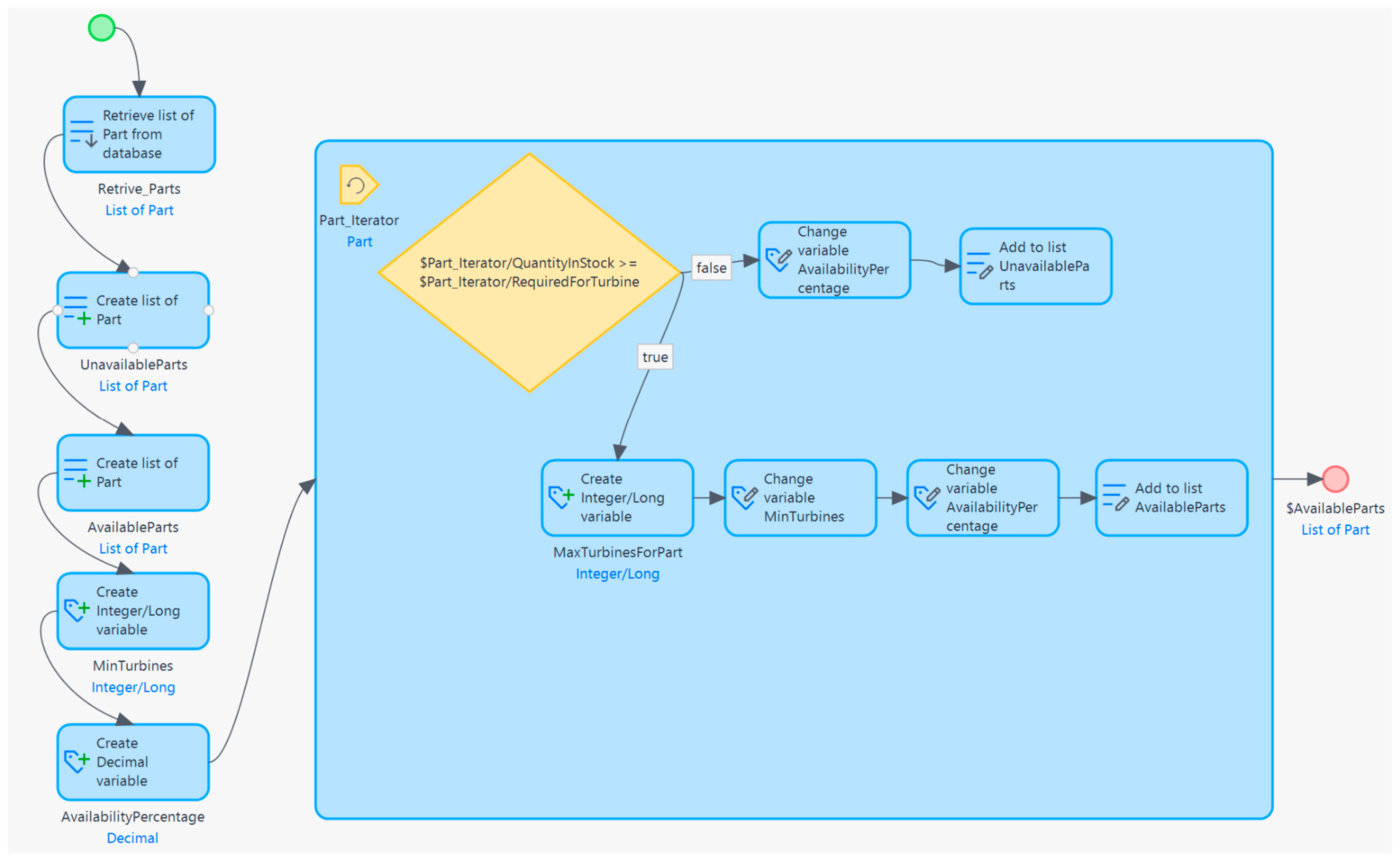

2. Materials and Methods

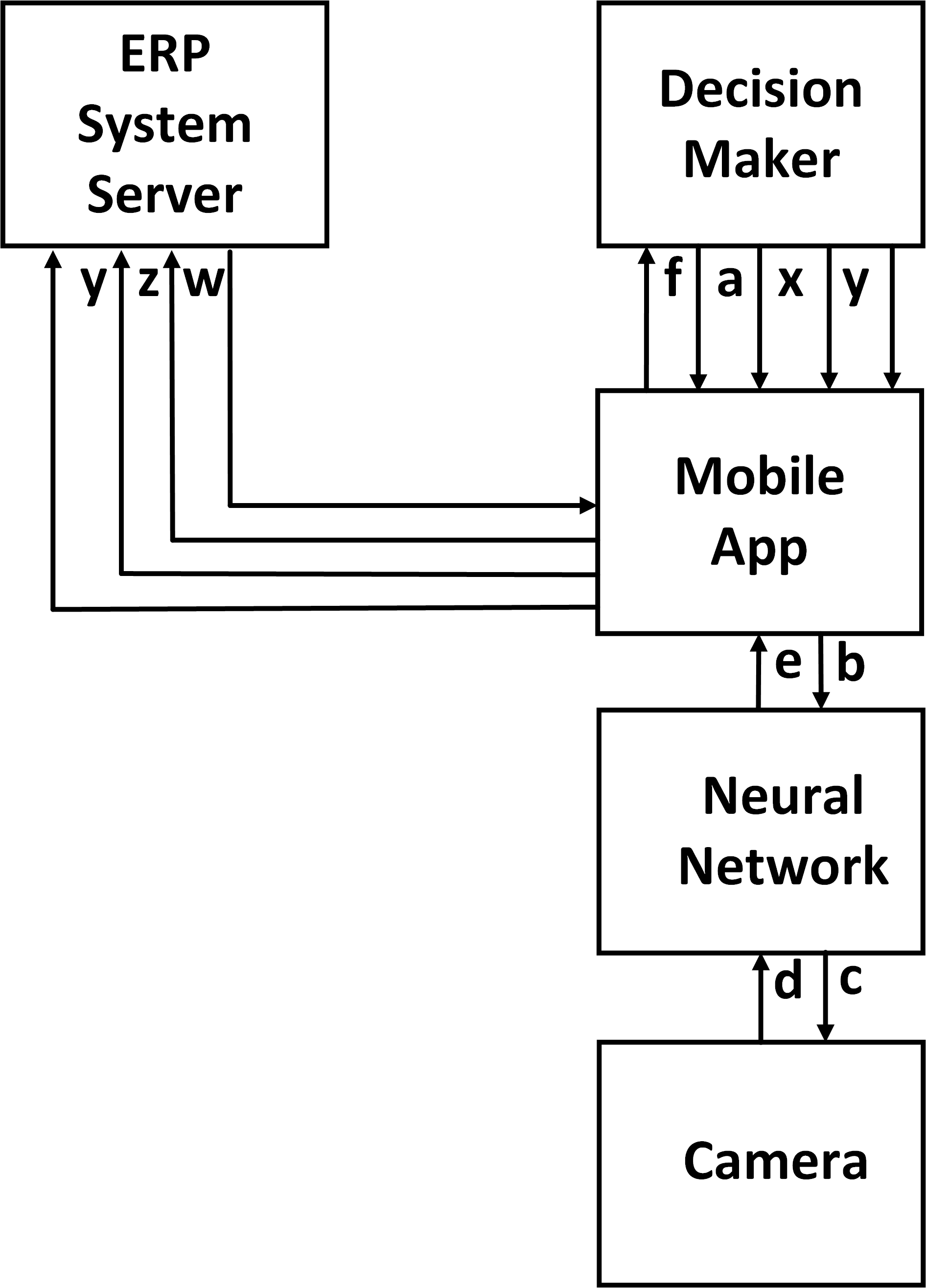

- Data flow (a) is the user’s request to retrieve data from their profile;

- Data flow (b) is a request to the neural network to send a signal to the video camera for further processing;

- Data flow (c) is a request to the neural network to receive a video stream;

- Data flow (d) is the returned, unprocessed video stream;

- Data flow (e) is the transformed video stream with data on the detected objects;

- Data flow (f) is the number of detected parts, together with their data, shown in the mobile application;

- Data flow (x) is a request to check the completeness of the turbine;

- Data flow (y) is a request to re-order missing components for a production work order. This request is sent directly to the ERP system and the logistics department;

- Data flow (z) is the creation of the production work order; this signal is sent through the application directly to the ERP system.
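
The branch that follows the completeness check (x), issuing either a re-order request (y) or a production work order (z), can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the bill of materials `TURBINE_BOM`, its part names and quantities, and the dictionary-shaped requests are hypothetical, and the detections are assumed to arrive from flow (f) as a plain list of class labels.

```python
from collections import Counter

# Hypothetical bill of materials for one turbine: part name -> required quantity.
TURBINE_BOM = {"compressor_housing": 1, "bearing": 2, "shaft": 1}


def check_completeness(bom, detected):
    """Flow (x): compare detected part counts with the bill of materials.

    Returns a dict mapping each missing part to the quantity still needed.
    """
    counts = Counter(detected)  # a Counter returns 0 for unseen parts
    return {part: need - counts[part]
            for part, need in bom.items() if counts[part] < need}


def route_request(bom, detected):
    """Choose the request emitted after the completeness check.

    Missing parts -> flow (y): re-order request to the ERP system and logistics.
    Complete set  -> flow (z): production work order sent to the ERP system.
    """
    missing = check_completeness(bom, detected)
    if missing:
        return {"flow": "y", "targets": ["erp", "logistics"], "missing": missing}
    return {"flow": "z", "targets": ["erp"], "work_order": dict(bom)}


if __name__ == "__main__":
    # One bearing short: a re-order request (y) is produced.
    print(route_request(TURBINE_BOM, ["bearing", "shaft", "compressor_housing"]))
    # Complete set: a work order (z) is produced.
    print(route_request(TURBINE_BOM, ["bearing", "bearing", "shaft", "compressor_housing"]))
```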

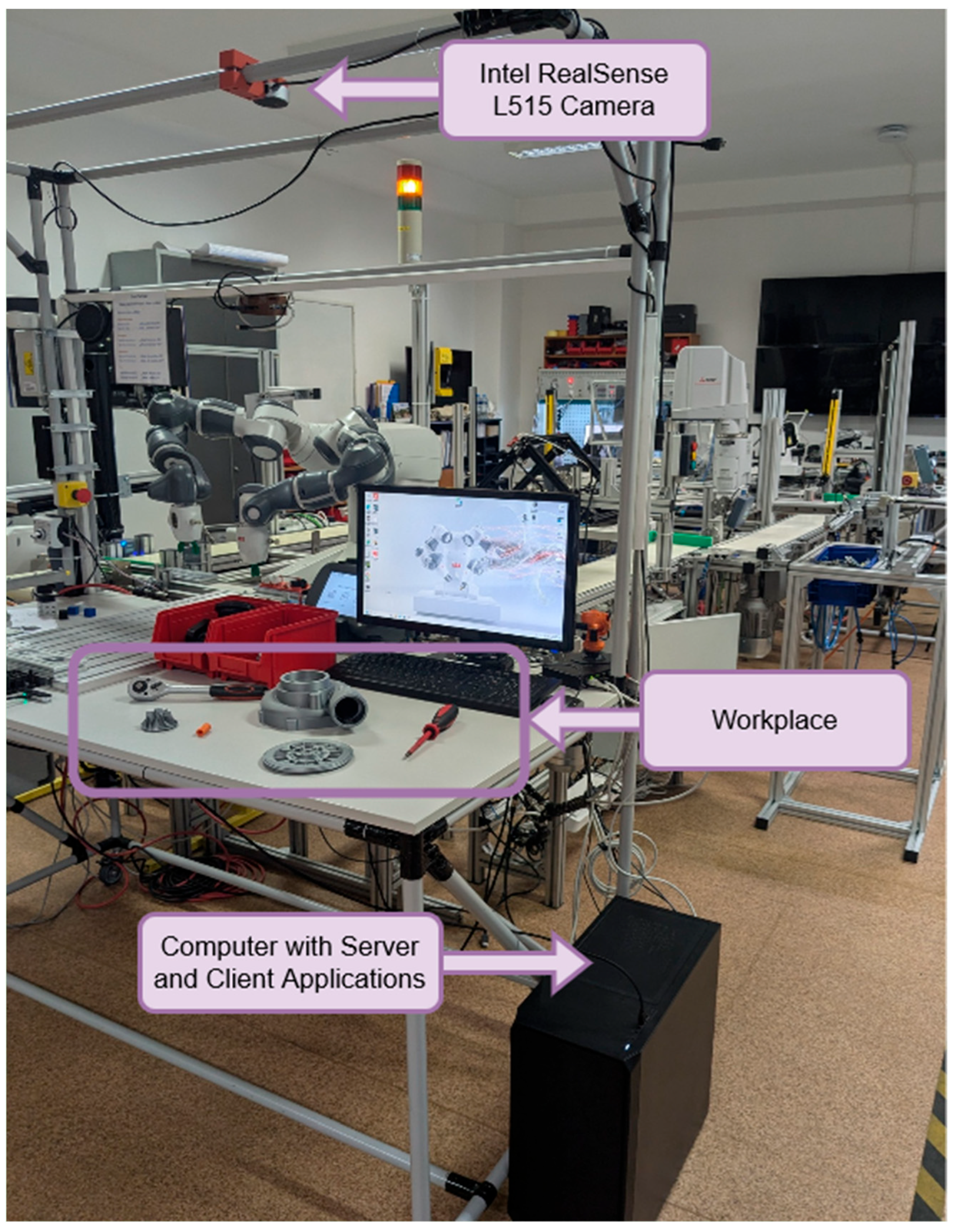

2.1. Workplace Configuration

2.2. Server Configuration

- POST request from the storekeeper to add parts to the warehouse (/add);

- POST request from the storekeeper to remove parts from the warehouse (/remove);

- POST request from the assembler’s workplace to send a photo of the current state of disassembly (/workplace);

- GET request from the manager for the current warehouse status (/warehouse_status);

- GET request from the manager for the current state of disassembly (/workplace_status).
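
To make the split between the five endpoints concrete, the Python sketch below routes each (method, path) pair to a handler over an in-memory stock table. It is a minimal illustration, not the authors' server: the JSON field names (`part`, `qty`, `photo`), the status codes, the rule that `/remove` rejects requests exceeding the available stock, and the in-memory storage are all assumptions.

```python
import json

# Hypothetical in-memory state; a deployed system would use a database instead.
warehouse = {}         # part name -> quantity in stock
workplace_photos = []  # photos received from the assembler's workplace


def handle(method, path, body=None):
    """Dispatch one request by (method, path); return (status, JSON text).

    The JSON field names "part", "qty" and "photo" are assumptions.
    """
    data = json.loads(body) if body else {}
    route = (method, path)
    if route == ("POST", "/add"):
        part = data["part"]
        warehouse[part] = warehouse.get(part, 0) + data["qty"]
        return 200, json.dumps({"part": part, "qty": warehouse[part]})
    if route == ("POST", "/remove"):
        part = data["part"]
        available = warehouse.get(part, 0)
        if available < data["qty"]:
            # Refuse to drive the recorded stock negative.
            return 409, json.dumps({"error": "insufficient stock", "available": available})
        warehouse[part] = available - data["qty"]
        return 200, json.dumps({"part": part, "qty": warehouse[part]})
    if route == ("POST", "/workplace"):
        workplace_photos.append(data["photo"])
        return 202, json.dumps({"received": len(workplace_photos)})
    if route == ("GET", "/warehouse_status"):
        return 200, json.dumps(warehouse)
    if route == ("GET", "/workplace_status"):
        latest = workplace_photos[-1] if workplace_photos else None
        return 200, json.dumps({"latest_photo": latest})
    return 404, json.dumps({"error": "not found"})
```

For example, `handle("POST", "/add", json.dumps({"part": "bearing", "qty": 4}))` returns status 200 with the updated quantity. Keeping the handlers as a pure dispatch function lets the routing logic be tested without a running HTTP server; in a real deployment the same dispatch would sit behind the server and the dictionaries would be replaced by database queries.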

2.3. Client Part Configuration

2.4. YOLOv8 Convolutional Neural Network Architecture

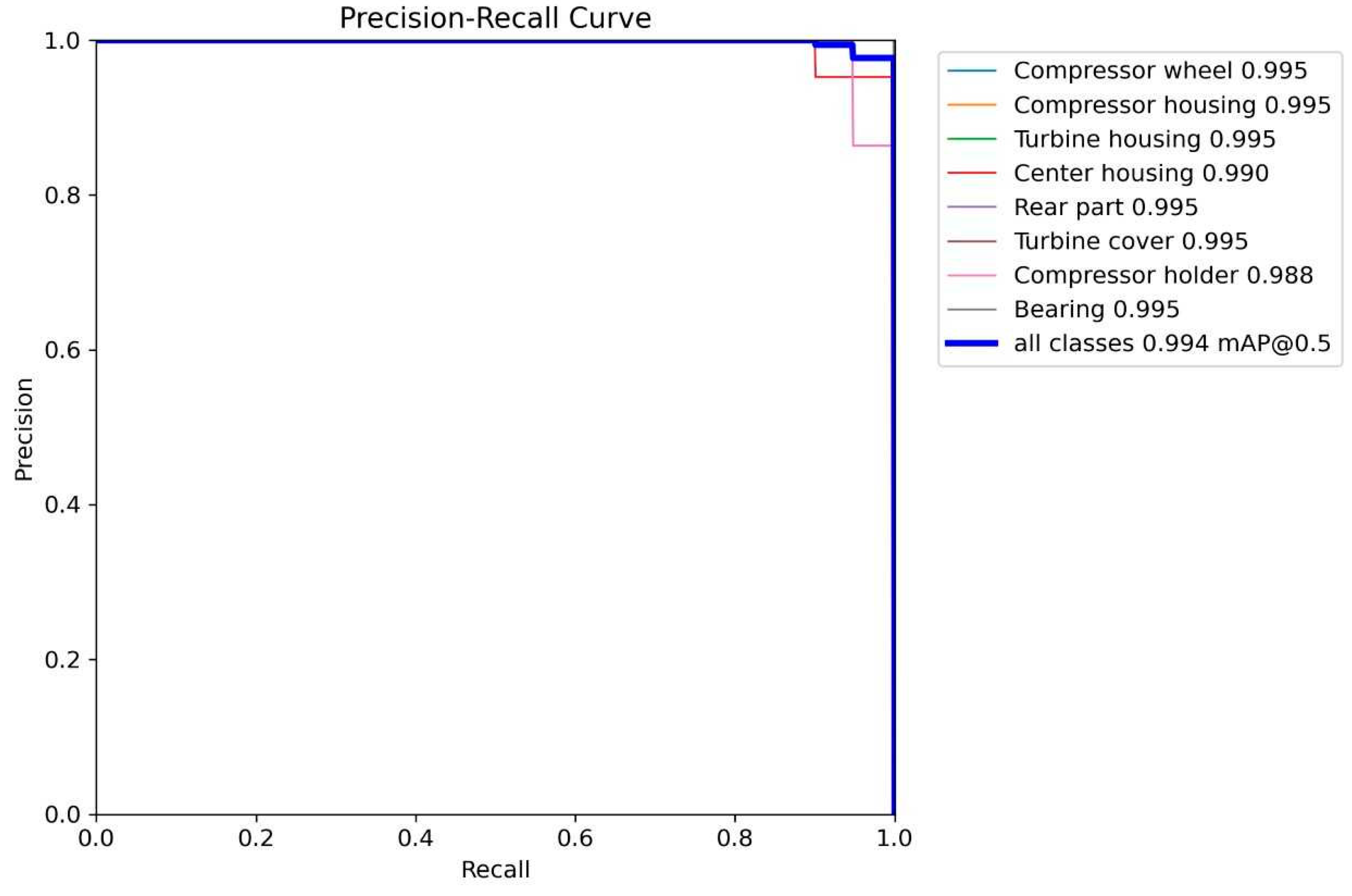

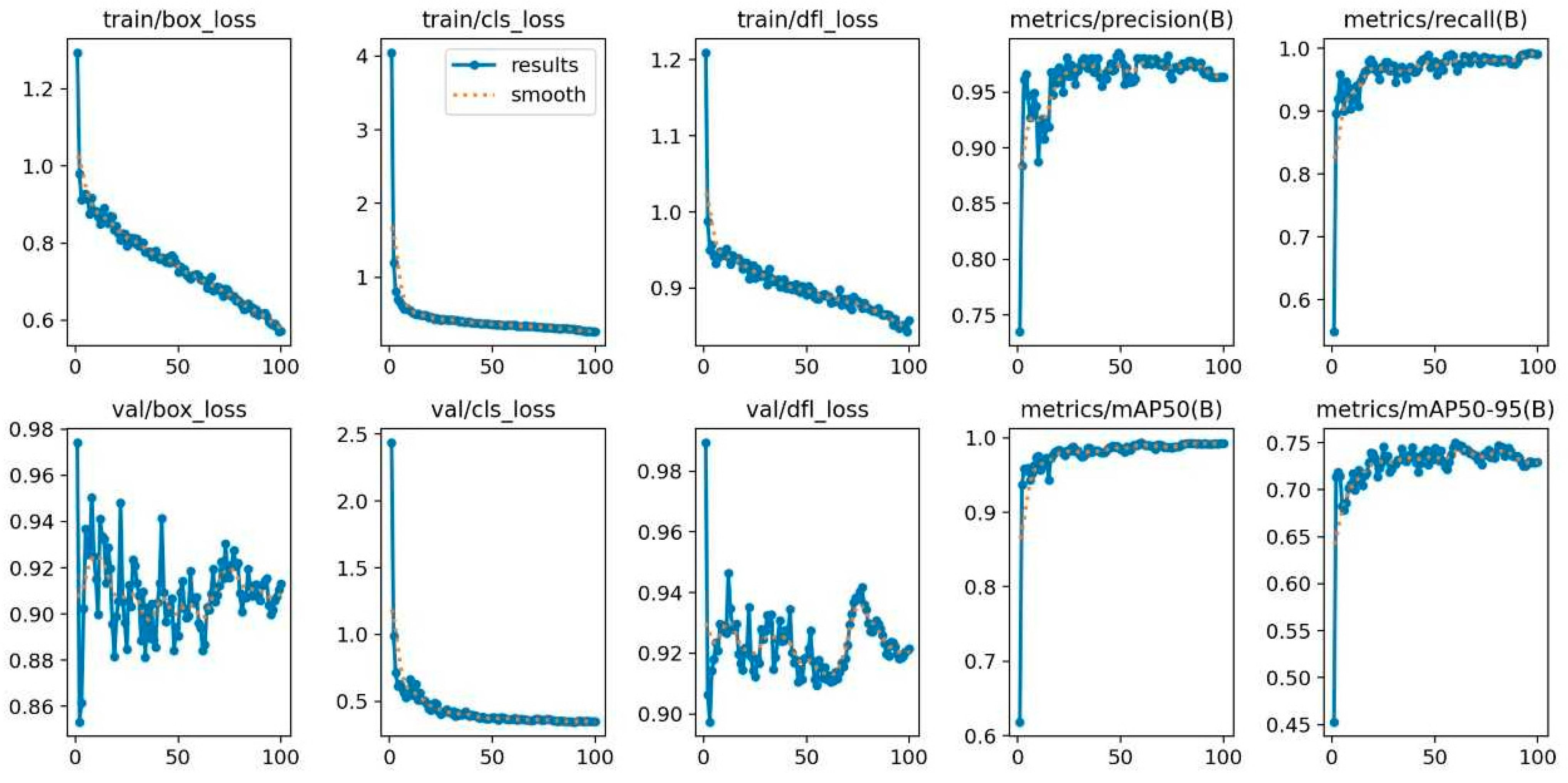

2.5. Training and Validation of YOLOv8

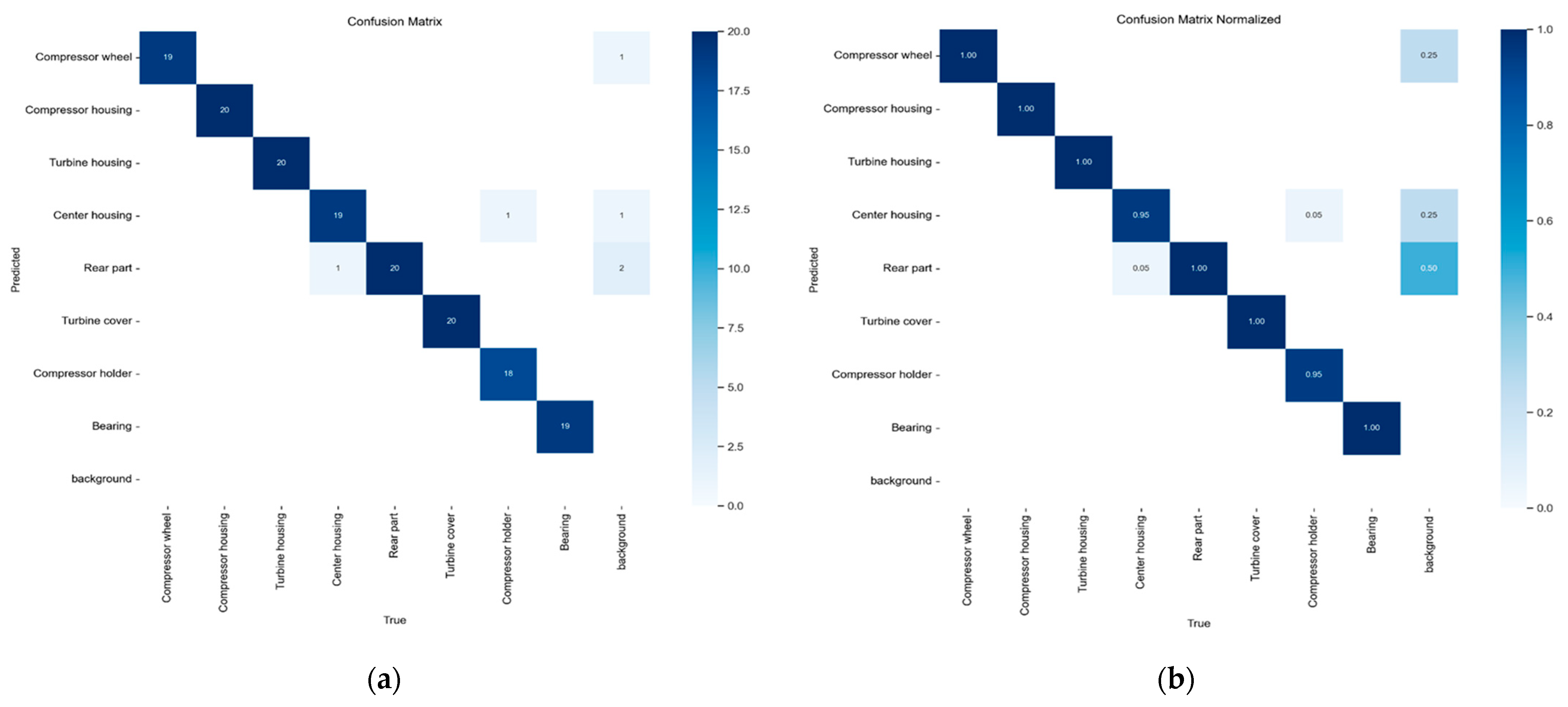

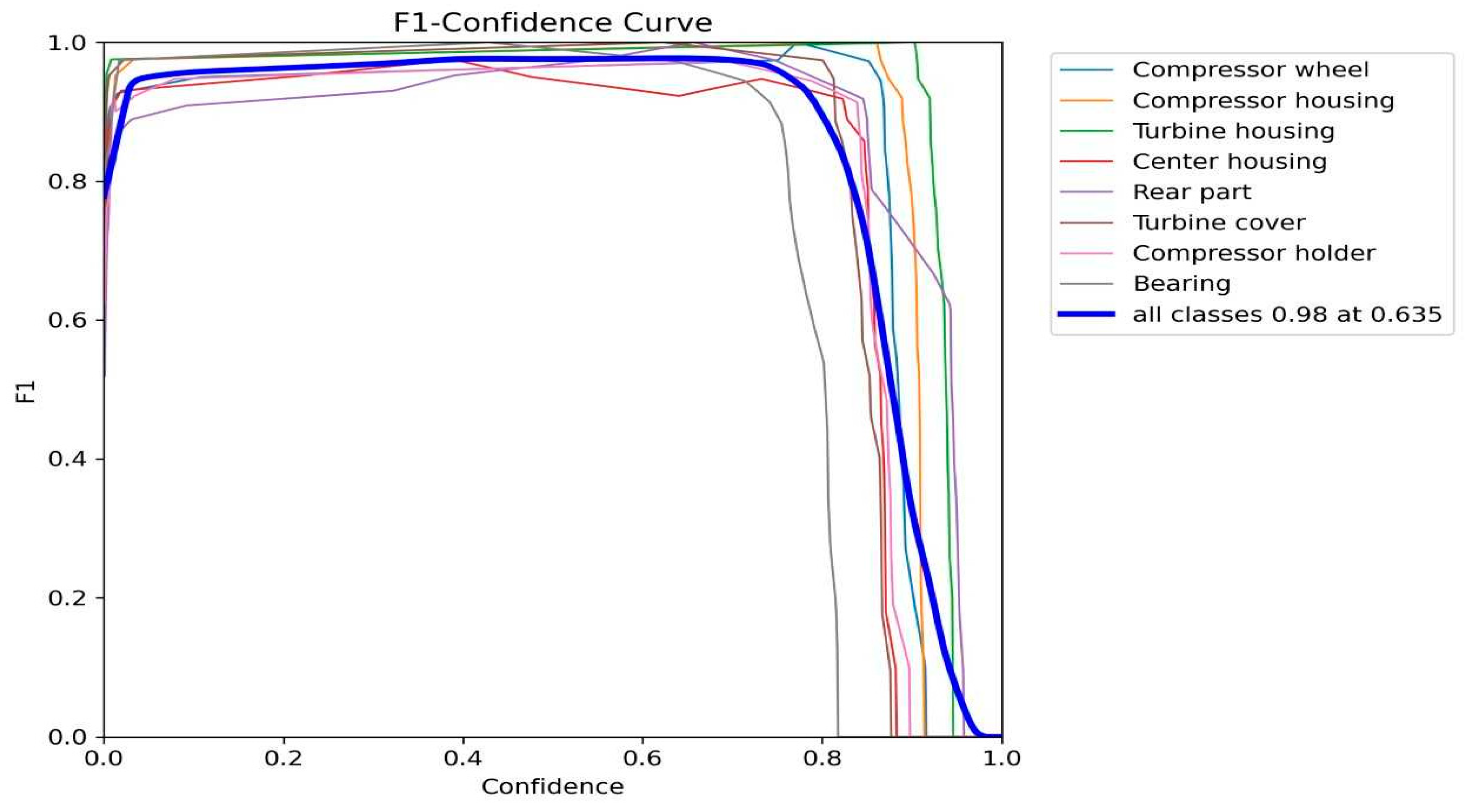

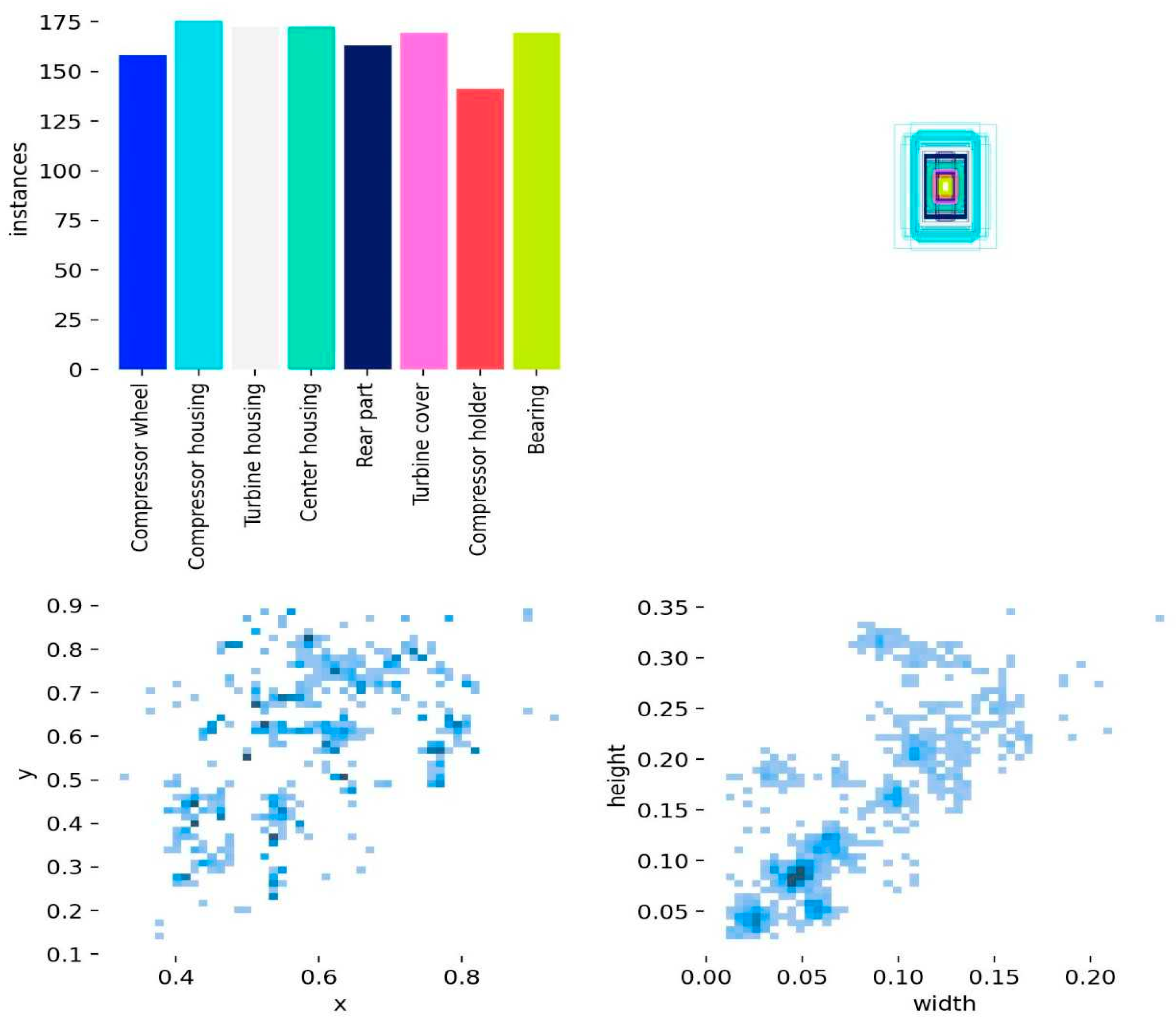

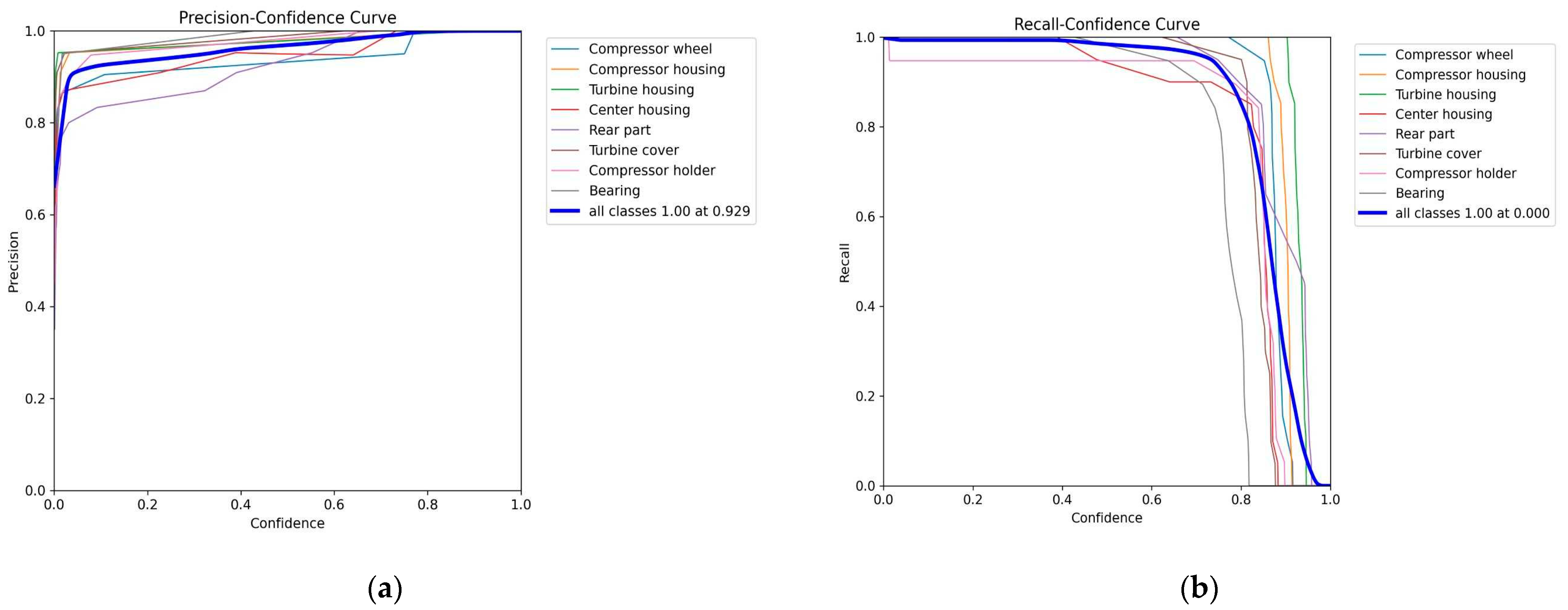

3. Results and Discussion

4. Conclusions

5. Discussion

- Expanding the range of components;

- Training the system to detect external defects, which it cannot yet do;

- Identifying factors that can degrade camera performance in real enterprise conditions;

- Integrating the proposed neural network into warehouse facilities to reduce the costs of holding stale material and to offer several decision-making options for this category of material in line with the principles of the circular economy and Warehouse 4.0;

- Expanding the use of the neural network through mobile applications, since the studied workplaces are important for operational planning and quality control. This points to further areas of application for the proposed system: personnel management, quality control, logistics, material supply for production, and the creation of a cost chain.

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Component | Parameter | Values |
|---|---|---|
| Focus Layer | Input image size | (3, 640, 640) |
| | Output size after convolution | (32, 320, 320) |
| | Convolution kernel size | 3 × 3 |
| | Stride | 2 |
| Backbone (CSPDarknet53) | Number of CSP Bottleneck Blocks | 5 |
| | Input size for CSP1 | (32, 320, 320) |
| | Output size for CSP1 | (64, 160, 160) |
| | Number of filters | 64, 128, 256, 512, 1024 |
| | Convolution kernel sizes | 3 × 3 |
| | Stride | 2 |
| SPP Block (Spatial Pyramid Pooling) | Input size | (1024, 10, 10) |
| | Pooling sizes | 5 × 5, 9 × 9, 13 × 13 |
| | Output size | (1024, 10, 10) |
| Neck (PANet) | FPN Path input size | (1024, 10, 10) |
| | Output size after FPN Path | (256, 40, 40), (128, 80, 80) |
| | PANet Path Blocks (C3 Blocks) input size | (512, 20, 20), (256, 40, 40), (128, 80, 80) |
| | Output size after PANet Path Blocks | (256, 40, 40), (128, 80, 80), (64, 160, 160) |
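
The spatial sizes in the architecture table follow from the standard convolution output formula, out = floor((n + 2p - k) / s) + 1. The sketch below assumes a padding of p = 1 for the 3 × 3, stride-2 convolutions (the table does not state the padding) and applies the focus layer followed by the five CSP stages in turn. It also checks that stride-1 pooling with padding k // 2, which is an assumption about the SPP block, leaves the 10 × 10 map unchanged, as the table's SPP input and output sizes indicate.

```python
def conv_out(size, kernel=3, stride=2, padding=1):
    """Spatial output size of a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1


def downsampling_chain(input_size=640, stride2_layers=6):
    """Sizes after the focus layer and each of the five CSP stages (padding assumed 1)."""
    sizes = []
    size = input_size
    for _ in range(stride2_layers):
        size = conv_out(size)
        sizes.append(size)
    return sizes


print(downsampling_chain())  # [320, 160, 80, 40, 20, 10]

# Assumed SPP pooling: stride 1, padding k // 2, so the 10 x 10 map is preserved.
for k in (5, 9, 13):
    print(k, conv_out(10, kernel=k, stride=1, padding=k // 2))
```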

| Hyperparameter | Value | Description |
|---|---|---|
| epochs | 100 | The number of times the entire dataset is passed through the model during training |
| batch | 16 | The number of images processed in one training iteration |
| iou | 0.7 | The Intersection over Union (IoU) threshold used in non-maximum suppression: overlapping bounding boxes above this value are suppressed |
| max_det | 300 | The maximum number of objects the model can predict in one image |
| lr0 | 0.01 | The initial learning rate, i.e., the rate at which the model’s weights are updated at the start of training |
| lrf | 0.01 | The final learning rate as a fraction of lr0 (final rate = lr0 × lrf = 0.0001) |
| momentum | 0.937 | Controls how much past updates influence the current weight update |
| weight_decay | 0.0005 | A regularization parameter that helps prevent the model from overfitting by penalizing large weights |
| warmup_epochs | 3.0 | The number of epochs during which the learning rate gradually increases from a very low value to the initial learning rate |
| warmup_momentum | 0.8 | The starting momentum during the warmup phase, which gradually increases to the set momentum |
| warmup_bias_lr | 0.1 | The initial learning rate for bias parameters during the warmup phase, helping them converge faster in the early epochs |
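
How lr0, lrf, and the warmup parameters interact can be sketched as a schedule over (possibly fractional) epochs. This assumes a linear decay from lr0 to lr0 × lrf, which appears to correspond to the Ultralytics default linear schedule (cosine scheduling disabled), and simplifies warmup to a linear ramp over epochs. Ultralytics itself interpolates per iteration and starts the bias parameters at warmup_bias_lr; that bias handling is omitted here.

```python
def lr_at_epoch(epoch, epochs=100, lr0=0.01, lrf=0.01, warmup_epochs=3.0):
    """Learning rate for the weight parameters at a (possibly fractional) epoch.

    Linear decay from lr0 at epoch 0 to lr0 * lrf at the final epoch,
    preceded by a linear warmup from 0 during the first warmup_epochs.
    """
    decay = max(1.0 - epoch / epochs, 0.0) * (1.0 - lrf) + lrf
    target = lr0 * decay
    if epoch < warmup_epochs:
        # Warmup: ramp linearly from 0 towards the scheduled value.
        return target * epoch / warmup_epochs
    return target


def momentum_at_epoch(epoch, momentum=0.937, warmup_momentum=0.8, warmup_epochs=3.0):
    """SGD momentum: ramps linearly from warmup_momentum to momentum during warmup."""
    if epoch >= warmup_epochs:
        return momentum
    return warmup_momentum + (momentum - warmup_momentum) * epoch / warmup_epochs


for e in (0, 1.5, 3, 50, 99, 100):
    print(f"epoch {e:>5}: lr = {lr_at_epoch(e):.6f}, momentum = {momentum_at_epoch(e):.4f}")
```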

| Parameter | Value | Description |
|---|---|---|
| Confidence Threshold | 0.25 | The minimum probability at which the model considers that a detected region contains an object |
| Score Threshold | 0.45 | The minimum score, which takes into account both the model’s confidence in the presence of the object and its classification |
| Non-Maximum Suppression (NMS) Threshold | 0.50 | The overlap (IoU) threshold between predicted bounding boxes above which the lower-scoring box is suppressed |
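
The three post-processing thresholds can be applied in sequence as in the sketch below. It assumes that each raw detection carries an objectness confidence and a class probability, that the score is their product, and that NMS is greedy and applied per class. These are common conventions for YOLO-style post-processing, not details confirmed by the table.

```python
def iou(a, b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def filter_detections(detections, conf_thres=0.25, score_thres=0.45, nms_thres=0.50):
    """Filter raw detections given as (box, confidence, class_prob, label) tuples.

    1. keep boxes whose confidence >= conf_thres;
    2. score = confidence * class_prob (assumed); keep score >= score_thres;
    3. greedy per-class NMS: drop a box whose IoU with an already kept box
       of the same label exceeds nms_thres.
    Returns a list of (box, score, label), highest score first.
    """
    scored = [(box, conf * prob, label)
              for box, conf, prob, label in detections
              if conf >= conf_thres and conf * prob >= score_thres]
    scored.sort(key=lambda d: d[1], reverse=True)
    kept = []
    for box, score, label in scored:
        if all(label != k[2] or iou(box, k[0]) <= nms_thres for k in kept):
            kept.append((box, score, label))
    return kept
```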

| Image Resolution | Model | Training Time | Processing Speed, max / min / avg (s) | Note |
|---|---|---|---|---|
| 416 × 416 | YOLOv8s (11.2 M parameters) | 4 m 2 s | 0.185 / 0.007 / 0.008 | Detected object (bearing) showed false positives near edges |
| 416 × 416 | YOLOv8m (25.9 M parameters) | 9 m 30 s | 0.225 / 0.009 / 0.013 | Bearing detection had false positives near edges |
| 416 × 416 | YOLOv8l (43.7 M parameters) | 16 m 1 s | 0.248 / 0.014 / 0.016 | |
| 640 × 640 | YOLOv8s (11.2 M parameters) | 5 m 23 s | 0.200 / 0.011 / 0.013 | Compressor housing not always detected; some false positives |
| 640 × 640 | YOLOv8m (25.9 M parameters) | 13 m 37 s | 0.235 / 0.016 / 0.018 | |
| 640 × 640 | YOLOv8l (43.7 M parameters) | n/a | n/a | Out of memory |
| 768 × 768 | YOLOv8s (11.2 M parameters) | 9 m 14 s | 0.225 / 0.017 / 0.019 | Bearing false positives still present |
| 768 × 768 | YOLOv8m (25.9 M parameters) | n/a | n/a | Out of memory |
| 768 × 768 | YOLOv8l (43.7 M parameters) | n/a | n/a | Out of memory |
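
Average per-frame processing times are easier to compare with a camera's frame rate when converted to frames per second (fps = 1 / t). The sketch below does this for the configurations that fit in memory, using the average times from the results table; the 30 fps camera budget it checks against is an assumed figure, not one reported in the study.

```python
# Average per-frame processing times in seconds, taken from the results table.
AVG_LATENCY_S = {
    ("416 x 416", "YOLOv8s"): 0.008,
    ("416 x 416", "YOLOv8m"): 0.013,
    ("416 x 416", "YOLOv8l"): 0.016,
    ("640 x 640", "YOLOv8s"): 0.013,
    ("640 x 640", "YOLOv8m"): 0.018,
    ("768 x 768", "YOLOv8s"): 0.019,
}

ASSUMED_CAMERA_FPS = 30  # hypothetical camera frame rate, not from the study


def fps(latency_s):
    """Frames per second sustainable at a given average per-frame latency."""
    return 1.0 / latency_s


for (resolution, model), t in AVG_LATENCY_S.items():
    within_budget = t <= 1.0 / ASSUMED_CAMERA_FPS
    print(f"{resolution} {model}: {fps(t):.1f} fps, "
          f"within {ASSUMED_CAMERA_FPS} fps budget: {within_budget}")
```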

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Sokolov, O.; Iakovets, A.; Andrusyshyn, V.; Trojanowska, J. Development of a Smart Material Resource Planning System in the Context of Warehouse 4.0. Eng 2024, 5, 2588-2609. https://doi.org/10.3390/eng5040136

Sokolov O, Iakovets A, Andrusyshyn V, Trojanowska J. Development of a Smart Material Resource Planning System in the Context of Warehouse 4.0. Eng. 2024; 5(4):2588-2609. https://doi.org/10.3390/eng5040136

Chicago/Turabian Style: Sokolov, Oleksandr, Angelina Iakovets, Vladyslav Andrusyshyn, and Justyna Trojanowska. 2024. "Development of a Smart Material Resource Planning System in the Context of Warehouse 4.0" Eng 5, no. 4: 2588-2609. https://doi.org/10.3390/eng5040136

APA Style: Sokolov, O., Iakovets, A., Andrusyshyn, V., & Trojanowska, J. (2024). Development of a Smart Material Resource Planning System in the Context of Warehouse 4.0. Eng, 5(4), 2588-2609. https://doi.org/10.3390/eng5040136